How to Use Edge AI for Latency-Critical Control Decisions

JUL 2, 2025

In recent years, Edge AI has become a key technology for applications that require rapid decision-making. It means running artificial intelligence models on edge devices, such as sensors, smartphones, and embedded controllers, instead of relying solely on cloud-based systems. Because data is analyzed where it is produced, decisions can be made in real time, which is crucial for latency-critical control applications.

The main advantage of Edge AI is lower latency. In a traditional cloud model, data is sent to a centralized server for processing and the result is sent back, so every decision pays for a network round trip whose duration grows with distance and varies with network load. Edge AI processes data close to its source, removing that round trip from the control loop and enabling faster, more predictable responses.
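
To make the latency argument concrete, the sketch below adds up the stages of one sense-decide-act cycle and checks the total against a control deadline. The stage names, the 20 ms deadline, and every figure in it are illustrative placeholders rather than measurements; substitute values profiled on your own hardware and network.

```python
from dataclasses import dataclass

@dataclass
class LatencyBudget:
    """Per-stage latencies in milliseconds for one sense-decide-act cycle."""
    sensing_ms: float
    network_round_trip_ms: float  # 0 when inference runs on the device itself
    inference_ms: float
    actuation_ms: float

    def total_ms(self) -> float:
        return (self.sensing_ms + self.network_round_trip_ms
                + self.inference_ms + self.actuation_ms)

def meets_deadline(budget: LatencyBudget, deadline_ms: float) -> bool:
    """True if the whole cycle completes within the control deadline."""
    return budget.total_ms() <= deadline_ms

# Illustrative placeholder figures, not measurements.
edge = LatencyBudget(sensing_ms=2, network_round_trip_ms=0, inference_ms=8, actuation_ms=2)
cloud = LatencyBudget(sensing_ms=2, network_round_trip_ms=60, inference_ms=3, actuation_ms=2)

DEADLINE_MS = 20  # hypothetical control-loop deadline
print(edge.total_ms(), meets_deadline(edge, DEADLINE_MS))    # 12 True
print(cloud.total_ms(), meets_deadline(cloud, DEADLINE_MS))  # 67 False
```

With these placeholder numbers, the cloud's faster inference cannot compensate for the network round trip. A real budget should use worst-case (tail) latencies rather than averages, since a control loop fails on its slowest cycle.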

Key Components of Edge AI Systems

To use Edge AI effectively for latency-critical control decisions, it helps to understand the key components of these systems. At the heart of Edge AI are machine learning models that can run within the limited compute and memory of edge devices. These models must be efficient in both memory footprint and processing time, so that they execute quickly without exhausting the device's memory, battery, or thermal budget.
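
A quick way to check whether a model can fit on a device is to estimate its weight storage from the parameter count and numeric precision. The sketch below assumes a hypothetical 5-million-parameter model and a hypothetical 8 MB memory budget, and counts weights only; activations, the inference runtime, and the rest of the application need memory too.

```python
# Bytes needed to store one weight at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

def weight_footprint_mb(num_params: int, dtype: str) -> float:
    """Approximate weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1_000_000

def fits_budget(num_params: int, dtype: str, budget_mb: float) -> bool:
    """True if the weights alone fit within the given memory budget."""
    return weight_footprint_mb(num_params, dtype) <= budget_mb

NUM_PARAMS = 5_000_000  # hypothetical model size
BUDGET_MB = 8.0         # hypothetical memory reserved for model weights

for dtype in ("float32", "float16", "int8"):
    size = weight_footprint_mb(NUM_PARAMS, dtype)
    print(f"{dtype}: {size:.1f} MB, fits={fits_budget(NUM_PARAMS, dtype, BUDGET_MB)}")
```

Under these assumptions only the int8 version fits (20.0 MB, 10.0 MB, and 5.0 MB respectively), which is one reason the quantization techniques mentioned under the challenges section matter on small devices.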

Integration with IoT (Internet of Things) devices is also crucial, because these devices are often the data sources for edge applications. Collecting, processing, and analyzing IoT data on or near the device enables more informed and timely decisions. Finally, a reliable communication protocol is needed to preserve data integrity and to ensure that messages between devices arrive intact and on time.

Applications of Edge AI in Latency-Critical Control

One of the most prominent applications of Edge AI in latency-critical scenarios is autonomous vehicles. Here, quick decision-making is paramount: a vehicle must respond in real time to changing conditions such as obstacles, traffic signals, and pedestrians crossing. By processing sensor data locally with Edge AI, the vehicle can make split-second decisions without waiting on a network connection, improving both safety and efficiency.
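
The cost of latency at speed is simple arithmetic: the distance covered before a decision takes effect equals speed times delay. The sketch below compares two hypothetical end-to-end latencies for a vehicle travelling at 30 m/s (108 km/h); the latency values are illustrative, not benchmarks.

```python
def distance_during_latency_m(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance travelled between sensing an event and the decision taking effect."""
    return speed_m_per_s * latency_ms / 1000.0

SPEED_M_PER_S = 30.0  # about 108 km/h

# Both latencies are hypothetical placeholders.
for label, latency_ms in (("cloud round trip", 100), ("on-board edge", 20)):
    d = distance_during_latency_m(SPEED_M_PER_S, latency_ms)
    print(f"{label}: {latency_ms} ms -> {d:.1f} m")
```

Under these assumptions, cutting 80 ms from the loop means the vehicle starts reacting about 2.4 m sooner (3.0 m versus 0.6 m), before any braking distance is counted.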

Another notable application is industrial automation, where Edge AI can monitor machinery and production lines, detect anomalies, and act on them with minimal delay. This improves operational efficiency and reduces downtime by flagging maintenance needs before failures occur.
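
A lightweight way to flag anomalies on the device itself is a rolling z-score: compare each new sensor reading with the mean and standard deviation of a recent window. This is a simple statistical stand-in for the learned models an Edge AI system would typically run, chosen because it fits in a few lines; the window size, the 4-standard-deviation threshold, and the `control_action` mapping are all hypothetical.

```python
import statistics
from collections import deque

class RollingZScoreDetector:
    """Flags readings that deviate strongly from a sliding window of recent values."""

    def __init__(self, window: int = 50, threshold: float = 4.0):
        self.values = deque(maxlen=window)  # oldest readings drop off automatically
        self.threshold = threshold

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.values) >= 2:
            mean = statistics.fmean(self.values)
            stdev = statistics.stdev(self.values)
            # A perfectly constant window has stdev 0; skip scoring rather than divide by zero.
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            self.values.append(value)  # keep anomalies out of the baseline
        return is_anomaly

def control_action(is_anomaly: bool) -> str:
    """Hypothetical mapping from detector output to a machine command."""
    return "STOP" if is_anomaly else "RUN"

detector = RollingZScoreDetector(window=20, threshold=4.0)
for reading in [1.0, 1.1, 0.9, 1.05, 0.95] * 4:  # normal vibration levels
    detector.update(reading)
print(control_action(detector.update(5.0)))   # STOP (spike)
print(control_action(detector.update(1.02)))  # RUN (back to normal)
```

Keeping flagged readings out of the window stops a fault from quickly becoming the new baseline; the trade-off is that a legitimate, permanent change in operating point keeps being flagged until the window is reset.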

Healthcare is another field benefiting from Edge AI, particularly in patient monitoring and diagnostics. Devices equipped with Edge AI can analyze patient data in real time and alert medical professionals immediately, which is especially critical in emergencies.

Challenges and Solutions for Implementing Edge AI

Despite its advantages, implementing Edge AI is not without challenges. One significant challenge is the limited computational power of edge devices, which restricts how complex a model can be. Developers can address this with model compression techniques such as quantization, which stores weights at lower numeric precision (for example, 8-bit integers instead of 32-bit floats), and pruning, which removes weights that contribute little to the output. Both reduce model size and can speed up inference.
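
Both compression techniques can be sketched in plain Python on a flat list of weights: magnitude pruning zeroes the smallest-magnitude weights, and symmetric int8 quantization stores each weight as an 8-bit integer multiplied by one shared scale factor. Real toolchains apply these per layer or per channel and usually fine-tune afterwards; this toy version, with made-up weights, only shows the arithmetic.

```python
from typing import List, Tuple

def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest absolute values."""
    k = int(len(weights) * sparsity)
    smallest_first = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in smallest_first[:k]:
        pruned[i] = 0.0
    return pruned

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: each weight is approximated as q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to [-127, 127] so every value fits in a signed 8-bit integer.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]

weights = [0.5, -0.02, 0.3, 0.01, -0.8, 0.05]  # made-up example weights
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(pruned)
print(pruned)  # [0.5, 0.0, 0.3, 0.0, -0.8, 0.0]
print(q, scale)
print(dequantize(q, scale))
```

Stored as int8, `q` takes a quarter of the space of float32 weights, and each weight is reconstructed to within half a quantization step (`scale / 2`). The zeros created by pruning only save memory and compute if the runtime uses a sparse format or hardware that skips them.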

Another challenge is ensuring data privacy and security, because edge devices often operate in diverse, physically accessible, and potentially unsecured environments. Encrypting data and using secure communication protocols that authenticate every message can mitigate these risks and protect sensitive information.
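
Encryption protects confidentiality, but a controller also needs to know that a command really came from a trusted peer and was not altered. The sketch below covers only that second part, message authentication with HMAC-SHA256 from Python's standard `hmac` and `hashlib` modules; it does not encrypt anything. For confidentiality, an established protocol such as TLS or DTLS, or an authenticated cipher like AES-GCM from a vetted library, is the safer choice than hand-rolled code. The shared key and message format are hypothetical.

```python
import hashlib
import hmac

TAG_LEN = hashlib.sha256().digest_size  # 32 bytes

def sign(key: bytes, message: bytes) -> bytes:
    """Append an HMAC-SHA256 tag so the receiver can check origin and integrity."""
    return message + hmac.new(key, message, hashlib.sha256).digest()

def verify(key: bytes, signed: bytes):
    """Return the original message if the tag is valid, otherwise None."""
    if len(signed) < TAG_LEN:
        return None
    message, tag = signed[:-TAG_LEN], signed[-TAG_LEN:]
    expected = hmac.new(key, message, hashlib.sha256).digest()
    # compare_digest is designed to resist timing attacks on the comparison.
    return message if hmac.compare_digest(tag, expected) else None

KEY = b"hypothetical-shared-key-provisioned-at-install"
packet = sign(KEY, b"valve_3:close")
print(verify(KEY, packet))                                 # b'valve_3:close'
print(verify(KEY, b"valve_3:open" + packet[-TAG_LEN:]))   # None (message altered)
```

HMAC alone does not stop an attacker from replaying a captured valid packet; including a sequence number or timestamp inside the authenticated message addresses that.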

Additionally, managing the deployment and updating of AI models across many edge devices can be complex. Containerization and orchestration tools can simplify this by packaging each model with its runtime and rolling updates out in a controlled way, so that every device runs the intended, up-to-date model version.
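
Container and orchestration tools handle the rollout itself, but each device still needs a safe way to accept a new model. One common pattern, sketched below under the assumption of a hypothetical update manifest that publishes each model's SHA-256 digest, is to verify the download against that digest and then swap it in with an atomic rename, so the controller never loads a half-written or corrupted file. The `install_model` helper is illustrative, not part of any particular tool.

```python
import hashlib
import os
import tempfile

def install_model(model_bytes: bytes, expected_sha256: str, target_path: str) -> bool:
    """Replace the model at target_path only if the new bytes match the expected digest."""
    if hashlib.sha256(model_bytes).hexdigest() != expected_sha256:
        return False  # reject corrupted or tampered download; keep the current model
    directory = os.path.dirname(os.path.abspath(target_path))
    # Write to a temporary file in the same directory so the rename stays on one filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(model_bytes)
            f.flush()
            os.fsync(f.fileno())  # make sure the bytes reach storage before the swap
        os.replace(tmp_path, target_path)  # atomic rename on POSIX filesystems
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
    return True

with tempfile.TemporaryDirectory() as workdir:
    path = os.path.join(workdir, "model.bin")
    install_model(b"model-v1", hashlib.sha256(b"model-v1").hexdigest(), path)
    ok = install_model(b"model-v2", "0" * 64, path)  # digest does not match
    with open(path, "rb") as f:
        print(ok, f.read())  # False b'model-v1'
```

If verification fails, the device keeps running its current model. A fully durable version would also fsync the containing directory after the rename.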

Future Trends in Edge AI

Looking ahead, ongoing advances in hardware and software are likely to extend Edge AI's capabilities further. Specialized AI chips designed for edge computing will deliver more processing power and better energy efficiency, enabling more complex models to run locally.

As 5G networks continue to expand, their higher bandwidth and lower latency will also benefit Edge AI applications, speeding up data exchange and making connectivity between devices more reliable.

In conclusion, Edge AI is a key enabler of latency-critical control decisions across many industries. By understanding its components, applications, and challenges, businesses and developers can use it to build faster, more efficient, and more responsive real-time systems.

Ready to Reinvent How You Work on Control Systems?

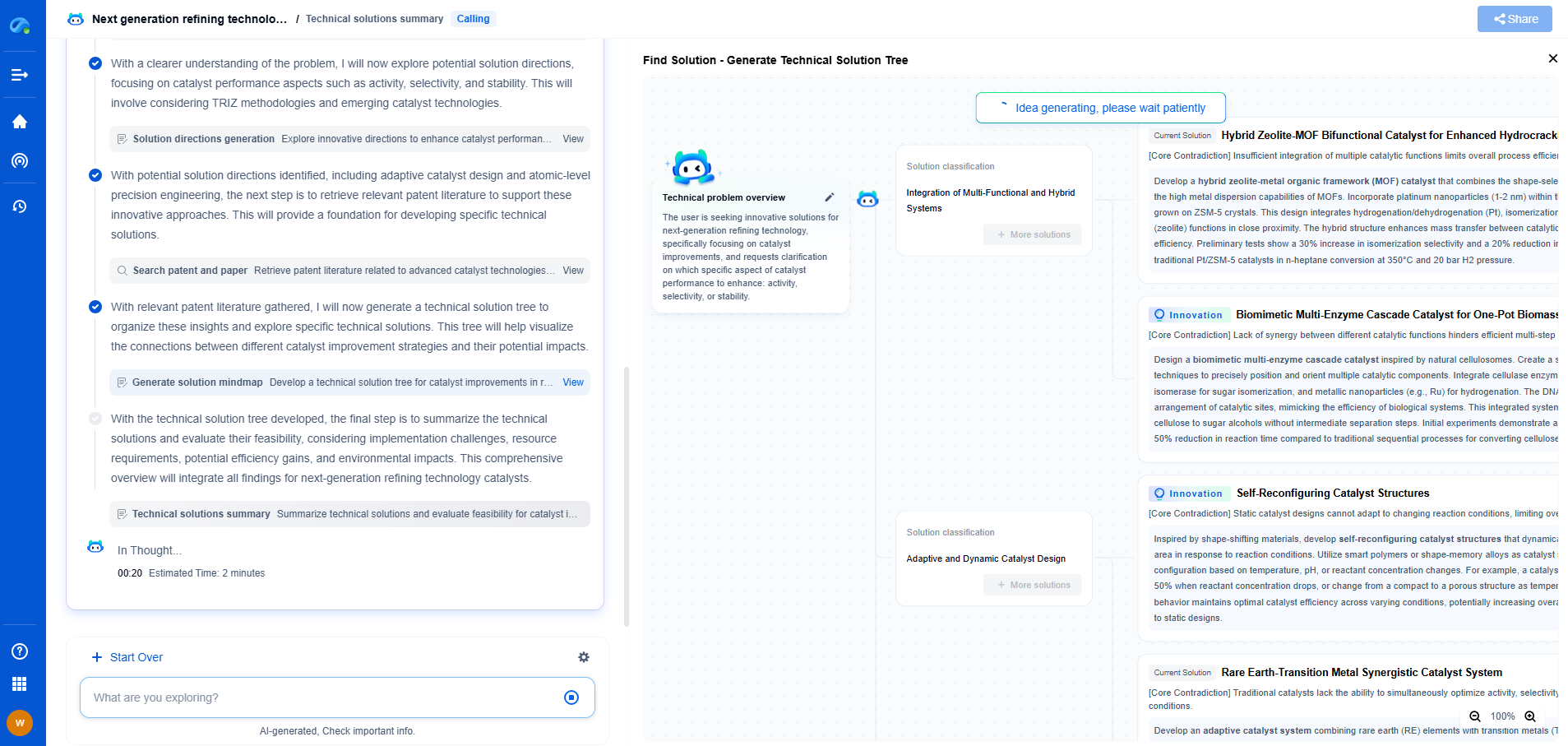

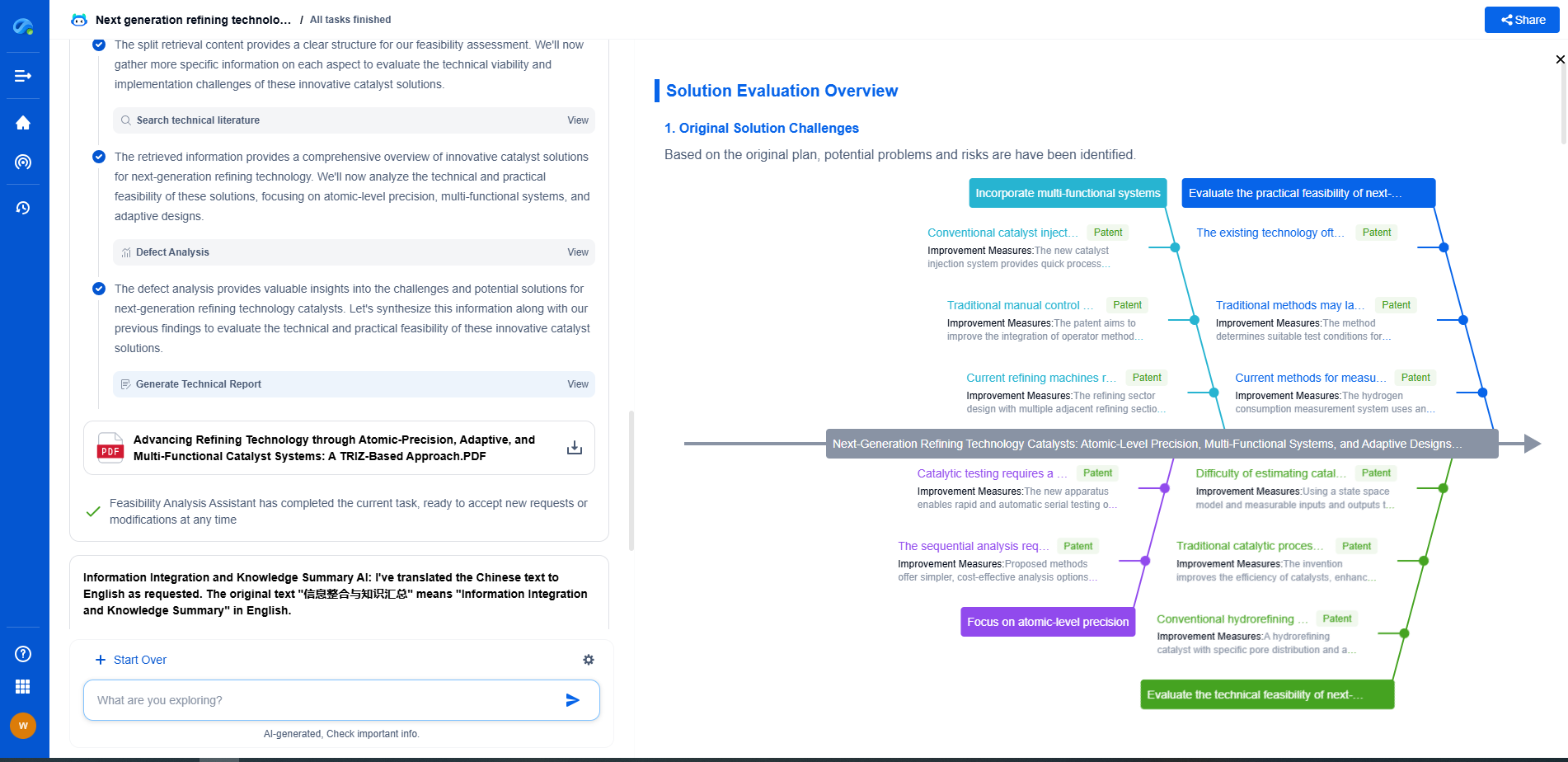

Designing, analyzing, and optimizing control systems involves complex decision-making, from selecting the right sensor configurations to ensuring robust fault tolerance and interoperability. If you’re spending countless hours digging through documentation, standards, patents, or simulation results — it's time for a smarter way to work.

Patsnap Eureka is your intelligent AI Agent, purpose-built for R&D and IP professionals in high-tech industries. Whether you're developing next-gen motion controllers, debugging signal integrity issues, or navigating complex regulatory and patent landscapes in industrial automation, Eureka helps you cut through technical noise and surface the insights that matter—faster.

👉 Experience Patsnap Eureka today — Power up your Control Systems innovation with AI intelligence built for engineers and IP minds.

© 2025 PatSnap. All rights reserved.