ACC-DRL Background and Objectives

Adaptive Cruise Control (ACC) has transformed vehicle safety and convenience since its debut in the late 1990s. This system automatically adjusts a vehicle’s speed to maintain a safe following distance, reducing driver fatigue and enhancing highway safety.

However, traditional ACC systems struggle in complex traffic and fail to optimize fuel usage effectively. They follow predefined rules, which limits adaptability in dynamic environments. As traffic systems grow more complex, smarter solutions become essential.
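To make "predefined rules" concrete, here is a minimal sketch of a constant time-gap controller, one common form of rule-based ACC. The gains, the 1.8 s time gap, and the acceleration limits are illustrative assumptions, not values from any production system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccParams:
    # All values below are illustrative assumptions.
    time_gap_s: float = 1.8    # desired headway to the lead vehicle
    standstill_m: float = 5.0  # desired gap when stopped
    k_gap: float = 0.2         # gain on gap error
    k_speed: float = 0.7       # gain on relative speed
    a_min: float = -3.0        # strongest allowed braking, m/s^2
    a_max: float = 2.0         # strongest allowed acceleration, m/s^2


def rule_based_acc(ego_speed, gap, lead_speed, p=AccParams()):
    """Return a commanded acceleration (m/s^2) from a fixed linear rule."""
    desired_gap = p.standstill_m + p.time_gap_s * ego_speed
    gap_error = gap - desired_gap        # positive: too far back
    rel_speed = lead_speed - ego_speed   # positive: lead is pulling away
    accel = p.k_gap * gap_error + p.k_speed * rel_speed
    return max(p.a_min, min(p.a_max, accel))
```

The rule is the same in every situation: the gains and the time gap never change with traffic density, road grade, or fuel cost, which is the adaptability limit described above.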

Enter Deep Reinforcement Learning (DRL), a powerful subset of machine learning. DRL combines deep neural networks with reinforcement learning to train systems through trial and error. It helps machines learn optimal decisions by interacting with real-time data from their environment.
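The trial-and-error loop can be illustrated with a tabular Q-learning update. This is a deliberate simplification: deep reinforcement learning replaces the lookup table with a neural network, and the three-action set and state labels here are hypothetical.

```python
from collections import defaultdict
import random

# Hypothetical discrete actions: brake, hold, accelerate (m/s^2).
ACTIONS = (-1.0, 0.0, 1.0)


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[(state, a)])


def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.95):
    """One Q-learning step: move the estimate toward the TD target."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])


# Usage: one interaction in which braking while "too_close" earned reward 1.
q = defaultdict(float)
rng = random.Random(0)
q_update(q, "too_close", -1.0, 1.0, "safe_gap")
greedy = choose_action(q, "too_close", 0.0, rng)
```

After this single update, the greedy choice in the `too_close` state is to brake, because that is the only action with a positive value estimate.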

Integrating DRL into ACC aims to build a more intelligent and responsive cruise control system. These systems will adapt in real time, learning continuously to improve performance across a wide range of driving conditions.

Unlike rule-based systems, DRL-enhanced ACC can evaluate multiple factors at once. It can balance safety, comfort, traffic flow, and fuel economy while adjusting to unpredictable driving situations.
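One way such a balance is often expressed is a weighted reward function. The sketch below is an assumption for illustration: the term definitions, the weights, and the use of squared acceleration as a crude fuel proxy are not taken from any specific system.

```python
def acc_reward(gap_m, ego_speed, set_speed, accel, prev_accel, dt=0.1,
               target_headway_s=1.8, min_gap_m=2.0,
               w_safety=1.0, w_comfort=0.01, w_flow=0.5, w_fuel=0.05):
    """Scalar reward combining safety, comfort, traffic flow, and fuel terms.

    All weights and thresholds are illustrative assumptions.
    """
    if gap_m <= min_gap_m:
        return -100.0  # large penalty for a (near-)collision

    headway_s = gap_m / max(ego_speed, 0.1)
    safety = -abs(headway_s - target_headway_s)   # stay near target headway
    jerk = (accel - prev_accel) / dt
    comfort = -(jerk ** 2)                        # penalize abrupt changes
    flow = -abs(set_speed - ego_speed) / max(set_speed, 0.1)  # keep up
    fuel = -(accel ** 2)                          # crude proxy for energy use

    return (w_safety * safety + w_comfort * comfort
            + w_flow * flow + w_fuel * fuel)
```

Changing the weights changes what the trained agent prioritizes, which is why reward design is usually treated as part of the safety case rather than a tuning detail.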

This innovation supports the broader development of advanced driver assistance systems (ADAS) and pushes the automotive industry closer to fully autonomous driving.

The benefits of DRL-powered ACC include smoother speed adjustments and better anticipation of traffic changes. Drivers will experience improved ride comfort and better fuel efficiency over long distances.

However, this evolution brings challenges. Developers must ensure that DRL-based systems perform reliably in all conditions, including poor weather or unusual traffic events. They must also maintain safety during unexpected situations.

Moreover, engineers must integrate DRL algorithms with existing vehicle platforms without disrupting performance or safety standards. Regulatory approval, ethical concerns, and data privacy also play critical roles in shaping the future of these systems.

As demand for ADAS and autonomous vehicles grows, DRL-enhanced ACC emerges as a key innovation. This technology aligns with global efforts to reduce accidents, ease traffic congestion, and lower emissions.

In the sections ahead, we’ll explore the current capabilities of ACC, examine the fundamentals of DRL, and show how their combination could reshape the future of smart driving systems.

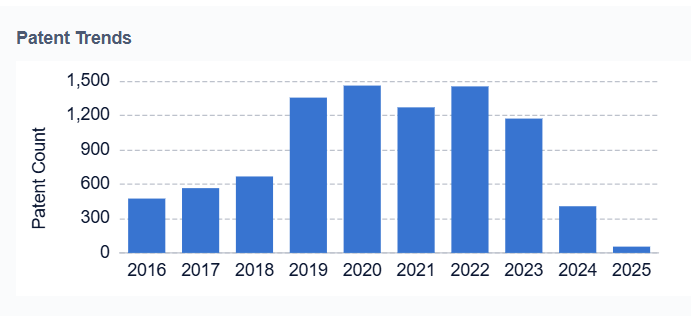

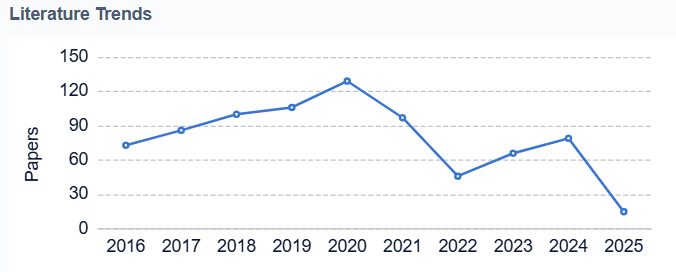

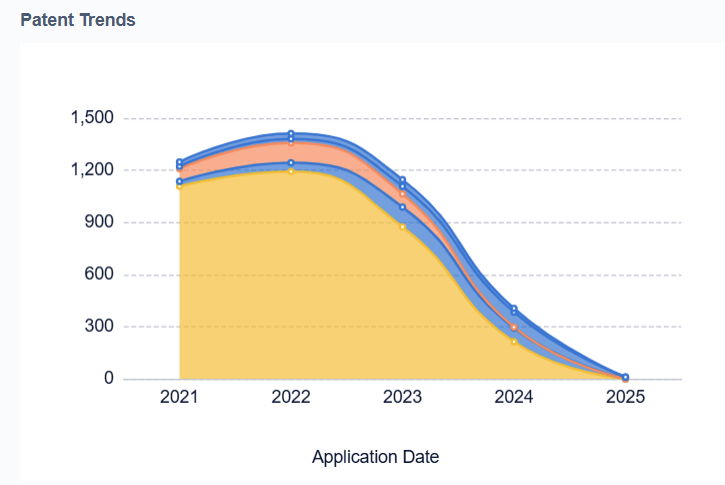

Market Analysis for Intelligent ACC Systems

The market for Adaptive Cruise Control (ACC) systems is experiencing significant growth, driven by increasing demand for advanced driver assistance systems (ADAS) and the push towards autonomous vehicles. The global ACC market is projected to reach $XX billion by 2025, with a compound annual growth rate (CAGR) of XX% from 2020 to 2025. This growth is primarily fueled by the rising consumer preference for safety features, stringent government regulations on vehicle safety, and advancements in sensor technologies.

Intelligent ACC systems, particularly those leveraging deep reinforcement learning, represent a cutting-edge segment within this market. These systems offer enhanced capabilities such as predictive speed adjustment, improved traffic flow management, and better fuel efficiency. The demand for such advanced ACC systems is expected to grow at an even higher rate than the overall ACC market, with a projected CAGR of XX% from 2020 to 2025.

Key market drivers include the increasing integration of artificial intelligence and machine learning in automotive technologies, growing consumer awareness of vehicle safety features, and the rapid development of connected car ecosystems. Additionally, the push towards higher levels of vehicle autonomy is creating a strong pull for more sophisticated ACC systems that can handle complex driving scenarios.

However, the market also faces several challenges. These include high initial costs associated with advanced ACC systems, concerns about data privacy and cybersecurity, and the need for regulatory frameworks to keep pace with technological advancements. Moreover, there is a significant gap between consumer expectations and the current capabilities of ACC systems, which manufacturers need to address.

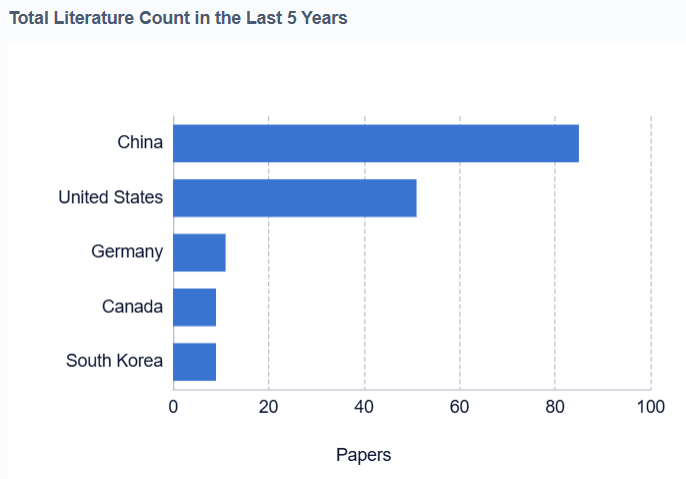

Geographically, North America and Europe are currently the largest markets for intelligent ACC systems, owing to their advanced automotive industries and higher consumer adoption rates. However, the Asia-Pacific region, particularly China and Japan, is expected to witness the fastest growth in the coming years, driven by rapid technological advancements and increasing vehicle production.

In terms of vehicle segments, premium and luxury vehicles currently dominate the market for intelligent ACC systems. However, there is a growing trend towards the integration of these systems in mid-range and even entry-level vehicles, as automakers seek to differentiate their products and meet consumer demand for advanced safety features.

Looking ahead, the market for intelligent ACC systems is poised for substantial growth and innovation. Key trends to watch include the integration of ACC with other ADAS features, the development of more robust and adaptable algorithms, and the increasing use of cloud computing and edge processing to enhance system performance. As the technology matures and costs decrease, we can expect to see wider adoption across various vehicle segments and markets, further driving the growth of this dynamic industry.

Current ACC-DRL Challenges

Adaptive Cruise Control (ACC) using Deep Reinforcement Learning (DRL) faces several significant challenges that hinder its widespread adoption and optimal performance. One of the primary obstacles is the complexity of real-world driving scenarios, which often involve unpredictable human behaviors, diverse road conditions, and varying weather patterns. These factors make it difficult for DRL models to generalize effectively across all possible situations, potentially leading to suboptimal or unsafe decisions in edge cases. Additionally, the high-dimensional state space of driving environments poses a considerable challenge for DRL algorithms, requiring sophisticated feature extraction and representation learning techniques to capture relevant information efficiently.

Another critical challenge is the need for extensive and diverse training data. While simulations can provide a foundation for training, they often fail to capture the full complexity of real-world driving scenarios. Collecting and annotating real-world driving data is time-consuming, expensive, and may raise privacy concerns. Furthermore, ensuring the safety and reliability of ACC-DRL systems during the training and deployment phases remains a significant hurdle. The exploration-exploitation trade-off inherent in reinforcement learning algorithms can lead to potentially dangerous actions during the learning process, necessitating careful design of safety constraints and fail-safe mechanisms.
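A safety constraint of the kind mentioned above can be sketched as a filter that sits between the learning agent and the actuators. The stopping-distance model, reaction time, and limits below are simplifying assumptions; a real system would need a validated model.

```python
def safe_gap(ego_speed, lead_speed, max_brake=6.0, reaction_s=0.5,
             margin_m=2.0):
    """Gap needed so the ego car can stop behind a hard-braking lead car.

    Assumes both vehicles can brake at max_brake (m/s^2); illustrative only.
    """
    ego_stop = ego_speed * reaction_s + ego_speed ** 2 / (2 * max_brake)
    lead_stop = lead_speed ** 2 / (2 * max_brake)
    return max(margin_m, margin_m + ego_stop - lead_stop)


def shield_action(proposed_accel, gap, ego_speed, lead_speed,
                  a_min=-6.0, a_max=2.0):
    """Override the agent's action when the current gap is unsafe."""
    if gap < safe_gap(ego_speed, lead_speed):
        return a_min  # fail-safe: brake as hard as allowed
    return max(a_min, min(a_max, proposed_accel))
```

With this filter in place, an exploratory action is only passed through when the gap is already judged safe, so exploration cannot by itself command acceleration into a dangerously short gap.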

The interpretability and explainability of DRL models in ACC systems also present a challenge. As these models become more complex, understanding and validating their decision-making processes become increasingly difficult. This lack of transparency can hinder regulatory approval and public trust in autonomous driving technologies. Moreover, the computational requirements for real-time inference in ACC-DRL systems can be substantial, potentially limiting their deployment in resource-constrained vehicles or requiring expensive hardware upgrades.

Ethical considerations and liability issues surrounding autonomous decision-making in critical situations add another layer of complexity to ACC-DRL development. Determining how these systems should prioritize different factors in potential accident scenarios and establishing clear guidelines for responsibility in case of failures are ongoing challenges that require careful consideration and potentially new legal frameworks.

Lastly, the integration of ACC-DRL systems with existing vehicle architectures and the need for seamless human-machine interaction pose significant engineering challenges. Ensuring smooth transitions between manual and autonomous control, as well as designing intuitive interfaces that keep drivers informed and engaged, are crucial for the successful implementation of these systems. Overcoming these multifaceted challenges will require continued research, interdisciplinary collaboration, and innovative approaches to push ACC-DRL technology towards widespread adoption and enhanced safety in autonomous driving.

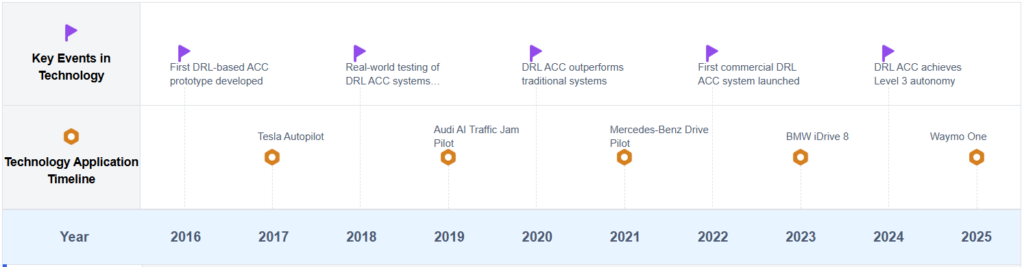

ACC Technology Evolution Timeline

Key Players in ACC-DRL Development

The market for adaptive cruise control based on deep reinforcement learning is in a growth phase, with adoption increasing across the automotive industry. The market is expanding as more vehicle manufacturers integrate the technology into their advanced driver assistance systems. The technology itself is still evolving, with companies such as Toyota Motor Corp., GM Global Technology Operations LLC, and Ford Global Technologies LLC leading research and development. Tech companies such as Google LLC and NVIDIA Corp. are also making significant contributions, drawing on their expertise in AI and machine learning. Maturity varies: some companies, such as Robert Bosch GmbH and Delphi Technology, Inc., offer more advanced solutions, while others are still in the early stages of development and testing.

Toyota Motor Corp.

Technical Solution

Toyota has developed an Adaptive Cruise Control (ACC) system using Deep Reinforcement Learning (DRL). Their approach combines traditional ACC with DRL to create a more intelligent and adaptive system. The DRL agent is trained in a simulated environment that mimics real-world driving scenarios, learning to make decisions based on various inputs such as vehicle speed, distance to the vehicle ahead, and road conditions. The system uses a deep neural network to process these inputs and determine the optimal acceleration or deceleration actions. Toyota’s implementation also includes a safety layer that ensures the DRL agent’s decisions are within acceptable safety parameters.

Strengths: Highly adaptive to various driving conditions; continuous learning capability; improved fuel efficiency.

Weaknesses: Requires extensive training data; potential for unpredictable behavior in rare scenarios; high computational requirements.

Robert Bosch GmbH

Technical Solution

Bosch has developed an advanced Adaptive Cruise Control system that incorporates Deep Reinforcement Learning techniques. Their approach uses a multi-agent reinforcement learning framework, where multiple DRL agents collaborate to control different aspects of the vehicle’s behavior. The system is designed to handle complex traffic scenarios, including merging, lane changes, and varying road conditions. Bosch’s implementation uses a combination of on-board sensors and V2X (Vehicle-to-Everything) communication to gather comprehensive environmental data. The DRL agents are trained using a combination of simulated environments and real-world driving data, ensuring robust performance in diverse situations.

Strengths: Comprehensive environmental awareness; collaborative multi-agent approach; integration with existing Bosch automotive systems.

Weaknesses: Complexity in implementation; potential issues with V2X reliability; high hardware requirements for real-time processing.

TuSimple, Inc.

Technical Solution

TuSimple has developed an advanced Adaptive Cruise Control system specifically designed for long-haul trucks, utilizing Deep Reinforcement Learning. Their approach focuses on optimizing fuel efficiency and safety for large commercial vehicles. The system uses a combination of cameras, radar, and LiDAR to create a 360-degree view of the truck’s surroundings. TuSimple’s DRL model is trained on millions of miles of real-world driving data, allowing it to handle complex scenarios such as steep grades, varying load weights, and changing weather conditions. The system also incorporates predictive modeling to anticipate traffic flow and road conditions, enabling smoother and more efficient driving.

Strengths: Specialized for commercial trucking; extensive real-world training data; focus on fuel efficiency.

Weaknesses: Limited applicability to non-commercial vehicles; high implementation cost; potential regulatory challenges in some regions.

NVIDIA Corp.

Technical Solution

NVIDIA has developed a cutting-edge Adaptive Cruise Control system that leverages their expertise in GPU-accelerated computing and Deep Reinforcement Learning. Their approach utilizes the NVIDIA DRIVE platform, which combines powerful hardware with sophisticated AI algorithms. The system employs a deep neural network trained using reinforcement learning techniques on vast amounts of driving data. NVIDIA’s implementation can process inputs from multiple sensors in real-time, including high-resolution cameras, radar, and LiDAR. The DRL model is designed to handle complex driving scenarios and can be continuously updated over-the-air to improve performance. Additionally, NVIDIA’s system includes a safety co-pilot that monitors the DRL agent’s decisions and can intervene if necessary.

Strengths: High-performance hardware integration; real-time processing of multiple sensor inputs; continuous improvement through over-the-air updates.

Weaknesses: High cost due to specialized hardware requirements; potential overreliance on GPU performance; complexity in explaining AI decision-making to regulators and users.

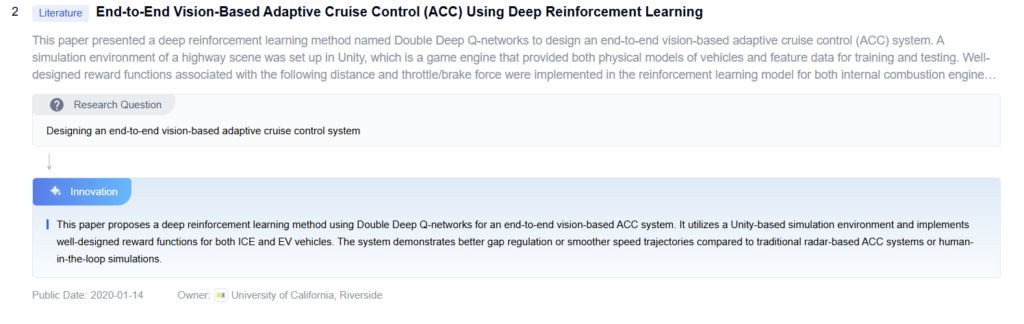

State-of-the-Art ACC-DRL Solutions

Adaptive cruise control system optimization

Optimization techniques for adaptive cruise control systems focus on improving performance, safety, and efficiency. These methods may include advanced algorithms for speed and distance control, integration with other vehicle systems, and real-time adjustments based on road conditions and traffic patterns.

- Adaptive cruise control system optimization: Optimization techniques for adaptive cruise control systems focus on improving control performance, enhancing safety, and increasing efficiency. These methods may include advanced algorithms for vehicle speed and distance control, integration with other vehicle systems, and real-time adjustments based on road conditions and traffic patterns.

- Sensor fusion and data processing for ACC: Advanced sensor fusion techniques and data processing algorithms are employed to enhance the accuracy and reliability of adaptive cruise control systems. These methods combine data from multiple sensors, such as radar, lidar, and cameras, to provide a comprehensive understanding of the vehicle’s surroundings and improve decision-making capabilities.

- Human-machine interface for ACC systems: Developing intuitive and user-friendly interfaces for adaptive cruise control systems is crucial for improving driver interaction and system effectiveness. These interfaces may include customizable displays, haptic feedback, and voice commands to enhance the overall user experience and system performance.

- Integration with vehicle-to-vehicle (V2V) communication: Incorporating vehicle-to-vehicle communication capabilities into adaptive cruise control systems can significantly enhance their performance and safety features. This integration allows for improved coordination between vehicles, better anticipation of traffic flow changes, and more efficient overall traffic management.

Sensor fusion and data processing

Improved sensor fusion and data processing enhance adaptive cruise control performance. Combining data from multiple sensors such as radar, lidar, and cameras creates a more accurate representation of the vehicle’s environment and enables better decision-making.
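As a small illustration of combining sensors, the sketch below fuses independent range measurements with inverse-variance weighting, so that more precise sensors count for more. The variances in the usage lines are made-up example values, and production systems typically use a tracking filter (for example a Kalman filter) rather than this single-shot average.

```python
def fuse_ranges(measurements):
    """Fuse (range_m, variance_m2) pairs by inverse-variance weighting.

    Returns (fused_range_m, fused_variance_m2). Assumes the measurement
    errors are independent and unbiased.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(var <= 0 for _, var in measurements):
        raise ValueError("variances must be positive")

    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    fused = sum(w * r for w, (r, _) in zip(weights, measurements)) / total
    return fused, 1.0 / total


# Usage with hypothetical radar and camera readings of the lead-vehicle range.
radar = (50.0, 0.25)   # precise
camera = (52.0, 1.0)   # noisier
fused_range, fused_var = fuse_ranges([radar, camera])
```

The fused estimate lands closer to the radar reading, and its variance is lower than that of either sensor alone.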

Machine learning and AI integration

Incorporating machine learning and artificial intelligence algorithms into adaptive cruise control systems enhances their predictive capabilities and adaptability. These technologies enable the system to learn from past experiences and improve its performance over time.

Human-machine interface optimization

Enhanced human-machine interfaces improve the interaction between the driver and the adaptive cruise control system. This includes developing intuitive controls, clear feedback mechanisms, and customizable settings to ensure a seamless and user-friendly experience.

Integration with vehicle-to-everything (V2X) communication

Integrating adaptive cruise control with vehicle-to-everything (V2X) communication lets the vehicle receive and process information from other vehicles, infrastructure, and pedestrians, enabling more informed decision-making and improved overall performance.

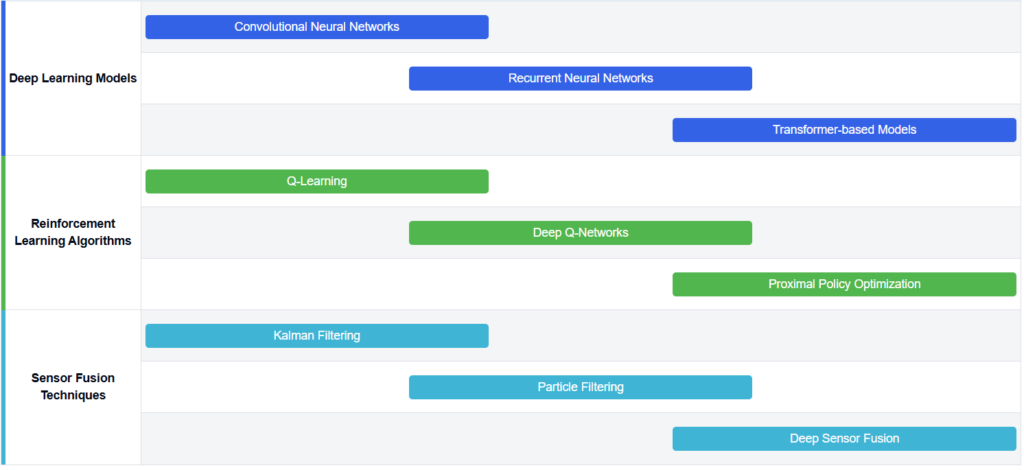

Core DRL Algorithms for ACC

Future ACC-DRL Research Directions

Multi-Agent Adaptive Cruise Control

Multi-Agent Adaptive Cruise Control (MAACC) marks a major step forward in autonomous driving and intelligent traffic systems. It builds on traditional Adaptive Cruise Control (ACC) by allowing multiple vehicles to work together like a team. Each car acts as an agent, using Deep Reinforcement Learning (DRL) to respond to road conditions and nearby vehicles.

MAACC-equipped vehicles collect data using radar, LiDAR, and onboard cameras. The system feeds this information into a deep neural network trained to adjust speed, maintain safe gaps, and suggest optimal lane changes.

Unlike traditional systems, MAACC uses graph neural networks (GNNs) to model interactions between multiple cars. These GNNs capture the complex relationships and movement patterns between vehicles on the road. As a result, the system anticipates both immediate and future driving behaviors more accurately.
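The core operation of a graph neural network is message passing: each vehicle updates its own features using those of its neighbors. The sketch below shows one round with plain mean aggregation. It is an assumption-laden simplification, since a trained GNN would apply learned weight matrices and nonlinearities, and the feature layout and graph here are hypothetical.

```python
def message_passing_step(features, edges, self_weight=0.5):
    """One round of mean-aggregation message passing over a vehicle graph.

    features: dict vehicle_id -> list of floats (e.g. [speed])
    edges:    dict vehicle_id -> list of neighboring vehicle ids
    Vehicles with no neighbors keep their features unchanged.
    """
    updated = {}
    for vid, feat in features.items():
        neighbors = edges.get(vid, [])
        if not neighbors:
            updated[vid] = list(feat)
            continue
        # Average each feature dimension over the neighbors.
        mean = [sum(features[n][i] for n in neighbors) / len(neighbors)
                for i in range(len(feat))]
        # Blend the vehicle's own features with the neighborhood average.
        updated[vid] = [self_weight * f + (1 - self_weight) * m
                        for f, m in zip(feat, mean)]
    return updated


# Usage: three vehicles with speed as the only feature (m/s).
speeds = {"a": [20.0], "b": [30.0], "c": [10.0]}
graph = {"a": ["b", "c"], "b": ["a"], "c": []}
new_speeds = message_passing_step(speeds, graph)
```

Stacking several such rounds lets information from vehicles further away reach each agent, which is how the model can represent interactions beyond the immediate neighbor.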

To train MAACC, developers use realistic traffic simulations. These include varying weather, terrain, and traffic density to ensure robust performance. Engineers also apply transfer learning to adapt the system to regional driving norms and different vehicle types.

MAACC uses hierarchical reinforcement learning to make split-second decisions. A high-level planner focuses on long-term traffic flow, while a low-level controller handles real-time driving tasks. This dual-layer structure improves both efficiency and safety.
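The two-layer split can be sketched as two functions running at different rates. In hierarchical reinforcement learning both layers would be learned policies; the hand-written rules, density thresholds, and gains below are stand-in assumptions that only illustrate the interface between the layers.

```python
def high_level_planner(traffic_density, speed_limit):
    """Slow loop: pick a target speed (m/s) and time gap (s).

    traffic_density is in vehicles per km; thresholds are illustrative.
    """
    if traffic_density > 40:
        return 0.6 * speed_limit, 2.2
    if traffic_density > 20:
        return 0.85 * speed_limit, 1.8
    return speed_limit, 1.5


def low_level_controller(ego_speed, gap, lead_speed, target_speed,
                         time_gap_s, standstill_m=5.0,
                         a_min=-3.0, a_max=2.0):
    """Fast loop: track the planner's goals, return acceleration (m/s^2)."""
    cruise = 0.5 * (target_speed - ego_speed)
    desired_gap = standstill_m + time_gap_s * ego_speed
    follow = 0.2 * (gap - desired_gap) + 0.7 * (lead_speed - ego_speed)
    # Take the more cautious of the two demands, then apply limits.
    return max(a_min, min(a_max, min(cruise, follow)))


# Usage: the planner runs occasionally, the controller every control step.
target, time_gap = high_level_planner(traffic_density=50.0, speed_limit=30.0)
accel = low_level_controller(30.0, 10.0, 20.0, target, time_gap)
```

Separating the layers this way means the fast loop never has to reason about long-horizon traffic flow; it only tracks the goals the slow loop hands down.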

Safety remains a top priority. MAACC constantly monitors its own confidence levels. If uncertainty rises too high, it shifts control to the driver or a backup system to prevent errors.
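The text does not say how confidence is measured. One common approach, assumed here purely for illustration, is to run an ensemble of policies and treat disagreement between them as uncertainty. The threshold and the fallback action are hypothetical.

```python
from statistics import mean, pstdev


def select_action(ensemble_accels, fallback_accel, max_std=0.5):
    """Use the ensemble mean unless its members disagree too much.

    ensemble_accels: accelerations (m/s^2) proposed by each ensemble member
    fallback_accel:  action from a backup controller
    Returns (acceleration, source), where source is "drl" or "fallback".
    """
    spread = pstdev(ensemble_accels)
    if spread > max_std:
        return fallback_accel, "fallback"
    return mean(ensemble_accels), "drl"


# Usage: members agree in the first case and disagree in the second.
confident = select_action([0.5, 0.6, 0.4], fallback_accel=-1.0)
uncertain = select_action([2.0, -2.0, 0.0], fallback_accel=-1.0)
```

In a vehicle, the `"fallback"` branch would also be the point at which a takeover request to the driver is raised.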

As more vehicles adopt MAACC, traffic could become safer and smoother. By coordinating vehicle actions, the system reduces stop-and-go patterns and cuts down on accidents.

Safety and Regulatory Considerations

Developers must prioritize safety and regulatory compliance when implementing Adaptive Cruise Control (ACC) systems powered by Deep Reinforcement Learning (DRL). As ACC evolves, teams must address emerging risks and meet growing legal and ethical expectations.

One major concern involves how reliably DRL algorithms perform in real-world traffic. Traffic conditions remain unpredictable, and rare events challenge the robustness of learned behaviors. Regulators such as NHTSA and safety assessment programs such as Euro NCAP continue developing safety standards and test protocols for autonomous driving technologies, including DRL-enhanced ACC systems.

To meet these standards, developers must conduct thorough testing across diverse driving conditions. This includes high-fidelity simulations, closed-course evaluations, and extended real-world trials. Additionally, regulators may demand transparency in AI decision-making, especially following accidents or near misses.

Therefore, teams must build tools that interpret and explain DRL behavior. These tools help regulators understand how systems make split-second decisions in dynamic environments. At the same time, fail-safe mechanisms must protect drivers when the system encounters unexpected inputs or fails to perform.

Engineers must design ACC systems with built-in redundancies and seamless transitions to human control during emergencies. Furthermore, increasing connectivity between vehicles raises cybersecurity concerns. Developers must secure ACC systems against hacking to protect passenger safety and prevent system-wide disruption.

From a legal perspective, DRL-based ACC requires updates to traffic laws and insurance policies. Regulators must define liability when autonomous systems are involved in accidents. As a result, governments may create new licensing or training requirements for drivers using advanced ACC features.

Ethical issues also demand careful attention. Developers must define how DRL systems prioritize safety—whether to protect passengers, pedestrians, or other road users. These decisions must align with public expectations and legal norms.

Ultimately, collaboration remains essential. Automakers, regulators, legal experts, and ethicists must work together to develop trustworthy, safe, and future-ready regulatory frameworks. Only through continued cooperation can the industry build public confidence in DRL-based ACC systems and autonomous vehicles.

Human-AI Interaction in ACC Systems

Human-AI interaction in Adaptive Cruise Control (ACC) systems powered by Deep Reinforcement Learning (DRL) marks a major leap in automation. These systems blend AI-driven decisions with human oversight to improve safety, efficiency, and comfort.

The DRL-based ACC continuously learns from real-time traffic conditions and adjusts vehicle behavior to optimize driving performance. However, the human driver still plays a key role in supervising and intervening when needed.

This interaction is dynamic and multilayered. The AI processes large volumes of sensor data in real time to make quick, accurate driving decisions. It often reacts faster than human drivers, especially to sudden traffic changes. Meanwhile, the driver monitors system behavior and takes control when necessary.

User interface design plays a crucial role in building trust between humans and AI. Visual displays show speed, following distance, and nearby vehicles in real time. Audio alerts notify drivers of urgent events or upcoming changes. Haptic feedback from the steering wheel or pedals provides tactile cues during transitions.

Smooth control handovers between the AI and driver remain critical. The system must recognize its limitations and alert the driver before transferring control. Predictive algorithms assess when intervention is needed and ensure drivers are alert and ready.

As DRL systems learn over time, they gradually personalize driving behavior. They adapt to local traffic patterns and individual driver preferences to enhance comfort and familiarity. However, this evolving behavior raises concerns about consistency and predictability, both essential for driver trust and safety.

Ethical design also plays a vital role in human-AI interaction. The AI must make decisions aligned with human values, especially in emergency scenarios. Designers must consider trade-offs between passenger safety, pedestrian protection, and overall traffic efficiency.

To ensure ethical outcomes, developers must program AI models to follow clear moral guidelines and explain their decisions transparently. Drivers should always understand why the system behaves a certain way.

In conclusion, human-AI interaction in DRL-powered ACC systems requires a delicate balance of intelligence, trust, and usability. By designing intuitive interfaces, smooth handovers, and ethically responsible behaviors, automakers can foster collaboration between humans and intelligent vehicles.
