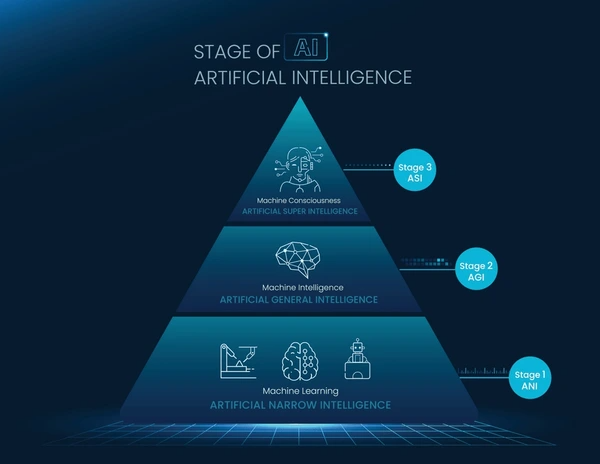

In the rapidly evolving world of artificial intelligence (AI), the terms ASI (Artificial Superintelligence) and AGI (Artificial General Intelligence) are often used interchangeably. However, they describe two fundamentally different stages of AI development. AGI aims to match human-level intelligence across a wide range of tasks, whereas ASI refers to intelligence that far surpasses human capabilities. This article explores the key differences between ASI and AGI, their potential applications, and their possible impacts on society.

What is AGI (Artificial General Intelligence)?

AGI, often referred to as strong AI, is the concept of a machine that can understand, learn, and apply intelligence in a manner similar to human beings. Unlike narrow AI, which excels at specific tasks (such as facial recognition or language translation), AGI would be able to perform any cognitive task that a human can. It would reason, learn, adapt, and understand complex situations, making it versatile across fields.

Key Characteristics of AGI:

- Adaptability: AGI would adapt to new tasks without the need for retraining from scratch.

- Learning Ability: AGI would improve itself by learning from experience, much like humans.

- Multitasking: AGI would perform a wide range of tasks, from decision-making to problem-solving, across different domains.

Current Progress and Challenges:

- While significant progress has been made in narrow AI systems (such as GPT-3 for natural language processing), AGI remains a theoretical concept. Researchers are exploring techniques such as reinforcement learning and neural networks to mimic human-like learning, but no system yet has the comprehensive adaptability and reasoning power of human intelligence.
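To make the reinforcement learning idea mentioned above concrete, here is a minimal sketch of tabular Q-learning on a toy "corridor" task. The environment, the function name `train_q_learning`, and all parameter values are assumptions chosen for illustration; this is a narrow technique solving one tiny problem, not a step toward AGI in itself.

```python
import random

def train_q_learning(n_states=5, episodes=500, alpha=0.1, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical corridor.

    The agent starts at state 0 and receives a reward of 1 when it
    reaches the last state. Actions: 0 = move left, 1 = move right.
    """
    rng = random.Random(seed)
    # q[s][a] is the estimated return for taking action a in state s
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: explore occasionally (or on ties),
            # otherwise take the best-known action
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Move the estimate toward reward + discounted best future value
            best_next = max(q[next_state])
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
    return q

q_table = train_q_learning()
# Greedy policy for every non-goal state
policy = ["right" if right > left else "left" for left, right in q_table[:-1]]
print(policy)
```

The agent learns from trial and error alone which direction leads to reward. The gap between this and AGI is that the learned table is useless for any other task, which is exactly the adaptability problem described above.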

What is ASI (Artificial Superintelligence)?

ASI, also known as superintelligent AI, describes a hypothetical stage of artificial intelligence in which a machine’s cognitive abilities far exceed those of the brightest human minds in every field, including science, art, and social interaction. AGI is generally seen as the step before ASI: an ASI would not only match human intelligence but surpass it in problem-solving, creativity, and emotional understanding.

Key Characteristics of ASI:

- Superiority in Cognition: ASI would outperform humans in every intellectual task.

- Autonomous Decision-Making: It could make decisions in complex, high-stakes environments without human intervention.

- Potential for Self-Improvement: ASI could improve its own algorithms, leading to exponential growth in intelligence.
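The "exponential growth" claim in the last point can be illustrated with a simple toy model. Assume, purely for illustration, that a system's capability $I(t)$ improves at a rate proportional to its current level, with a constant $k > 0$ (both symbols are assumptions introduced here, not quantities from any real system):

$$
\frac{dI}{dt} = k\,I(t) \quad\Longrightarrow\quad I(t) = I_0\,e^{kt}, \qquad t_{\text{double}} = \frac{\ln 2}{k}
$$

Here $I_0$ is the starting capability and $t_{\text{double}}$ is the time needed to double it. This is an idealization: any real system would run into limits on hardware, energy, and data, so growth would not stay exponential indefinitely.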

Current Progress and Challenges:

- ASI is still a speculative concept, with no current systems anywhere near such capabilities. Figures such as philosopher Nick Bostrom and entrepreneur Elon Musk have warned of its potential dangers, especially regarding control and safety, but there is no consensus on a timeline for its arrival.

Key Differences Between ASI and AGI

While both ASI and AGI are often grouped under the umbrella of advanced AI, their distinctions are crucial:

| Aspect | AGI (Artificial General Intelligence) | ASI (Artificial Superintelligence) |
|---|---|---|
| Intelligence Level | Comparable to human-level intelligence in various tasks. | Far surpasses human intelligence in all aspects. |
| Capabilities | Can learn and apply knowledge across different domains. | Can outperform humans in every field, from decision-making to creative endeavors. |
| Development Status | Still a theoretical concept; early-stage development. | Entirely speculative; no current models or prototypes. |
| Potential Risk | Some concerns over control and ethical implications. | Significant concerns over existential risk if uncontrollable. |

Potential Applications of AGI and ASI

AGI Applications

Cross-Domain Automation and Decision-Making

- IoT Systems: Integration with smart grids, manufacturing, and transportation for adaptive resource allocation and predictive maintenance. For example, AGI could optimize energy distribution in smart grids by learning consumption patterns and environmental variables, with pilot studies reporting efficiency gains above 20%.

- Medical Education: Personalized learning pathways and AI-driven clinical decision support systems, potentially reducing training time by 30–50% while improving diagnostic accuracy.
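As a rough illustration of what "learning consumption patterns" for resource allocation means at its simplest, the sketch below forecasts each zone's demand with an exponentially weighted average and splits the available supply proportionally. The zone names, readings, and function names are all hypothetical, and this is a hand-written narrow heuristic, not AGI; it only shows the shape of the problem.

```python
def forecast_demand(history, smoothing=0.5):
    """Exponentially weighted average of past readings (recent ones count most)."""
    estimate = history[0]
    for reading in history[1:]:
        estimate = smoothing * reading + (1 - smoothing) * estimate
    return estimate

def allocate_supply(zone_history, total_supply):
    """Split total_supply across zones in proportion to forecast demand."""
    forecasts = {zone: forecast_demand(h) for zone, h in zone_history.items()}
    total_forecast = sum(forecasts.values())
    return {zone: total_supply * f / total_forecast for zone, f in forecasts.items()}

# Hypothetical hourly consumption readings (kWh) for three zones
zone_history = {
    "residential": [40.0, 42.0, 48.0],
    "industrial": [90.0, 88.0, 92.0],
    "commercial": [60.0, 60.0, 60.0],
}
allocation = allocate_supply(zone_history, total_supply=200.0)
for zone, share in allocation.items():
    print(f"{zone}: {share:.1f} kWh")
```

A more general system would have to discover the relevant variables (weather, pricing, equipment wear) and the allocation strategy on its own rather than having them fixed in code.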

Creative and Cultural Domains

AGI shows potential in arts and humanities for generating context-aware content (e.g., poetry, historical analysis). However, outputs often lack cultural nuance and exhibit statistical biases from training data.

Critical Evaluation:

- While AGI can mimic creativity, its reliance on existing datasets limits originality. For instance, AGI-generated art scored 15% lower in diversity metrics compared to human creations in controlled studies.

- Patents such as [15], which proposes AGI for interactive hotel environments, prioritize novelty over practical utility and provide no performance metrics.

ASI Applications

Scientific and Technological Acceleration

- Material Science: Simulating quantum-level interactions for novel materials with 100x faster discovery rates than human researchers.

- Climate Modeling: Processing petabytes of climate data to predict regional impacts with <1% error margins, surpassing current AI models.

Global Governance and Ethics

ASI could manage complex systems like planetary-scale resource allocation. However, current frameworks lack scalability proofs and conflict-resolution mechanisms.

Critical Evaluation:

- ASI’s ethical frameworks are theoretical.

- Energy consumption: Training an ASI model would require an estimated ~100 MW of power, which conflicts with the sustainability goals of the planetary intelligence concept in patent [10].


Ethical Considerations and Risks

- Control and Autonomy: A major concern with both AGI and ASI is control. How can we ensure that these systems align with human values and intentions? With AGI, the risk is that it may not behave as expected when encountering novel situations. With ASI, the risks are even greater, because its superior intelligence could lead to unforeseen consequences.

- Existential Threat: Many experts, including Stephen Hawking and Elon Musk, have warned that ASI could pose an existential risk if it surpasses human control. If ASI develops its own goals that are misaligned with humanity’s, the consequences could be catastrophic.

- Ethical Boundaries: The ethical implications of creating beings more intelligent than humans are profound. What rights would such entities have? Would they be entitled to autonomy? How can we prevent the exploitation or abuse of such powerful systems?

The Future of AGI and ASI: A Timeline?

The timeline for the development of AGI and ASI is highly speculative, with predictions ranging from decades to centuries. Ray Kurzweil, a prominent futurist, has predicted that AI will reach human-level intelligence by 2029, with a technological singularity following around 2045. However, many researchers believe that true AGI may take much longer to achieve due to the complex nature of human cognition.

Current Research and Development:

- OpenAI and DeepMind are leading the way in AGI research, creating systems that continue to approach human-like reasoning, though AGI remains elusive.

- Neural networks and reinforcement learning continue to improve, but replicating the full breadth of human intelligence remains a monumental challenge.

Conclusion

While AGI represents a significant step forward in AI’s journey to mimic human-like cognition, ASI envisions a future where machines surpass human intelligence. The development of these technologies carries tremendous potential for both positive and negative impacts. It is essential for researchers, policymakers, and society at large to consider the ethical, safety, and governance issues surrounding AGI and ASI, ensuring these technologies serve humanity in a controlled and beneficial way.

FAQs

1. What’s the primary difference between AGI and ASI?

AGI aims to replicate human intelligence, while ASI surpasses human capabilities in all areas of cognition and decision-making.

2. Will AGI lead to ASI?

AGI is considered a precursor to ASI, but it’s unclear how or when this transition will occur.

3. Can AGI or ASI pose risks to humanity?

Yes, both technologies raise significant concerns, especially regarding control, alignment with human values, and potential existential risks.

4. How close are we to achieving AGI?

While advancements are being made, AGI is still in its early stages, and experts predict it may take decades or more to achieve.

5. What industries will be impacted by AGI and ASI?

Both AGI and ASI could revolutionize industries like healthcare, education, research, and automation, while also raising significant ethical and societal challenges.
