Comparison of Catalyst Efficiency in Neuromorphic and Conventional Computing

OCT 27, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Neuromorphic Computing Catalysts: Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. Its origins trace back to the late 1980s, when Carver Mead introduced the concept and proposed analog VLSI hardware that mimics neural processes. Over subsequent decades, neuromorphic computing has progressed from theoretical frameworks to practical implementations, with advances in materials science, circuit design, and system architecture driving its development.

In this report, catalysts in neuromorphic systems are the elements that facilitate and accelerate neural-inspired computation: specialized materials, circuit designs, and algorithmic approaches that enable efficient signal processing and learning. Catalyst efficiency describes how effectively these elements do so. Traditional catalysts in conventional computing focus primarily on enhancing electron mobility and reducing energy barriers in semiconductor materials, whereas neuromorphic catalysts aim to replicate synaptic plasticity, neural firing patterns, and memory formation.

Current technological trends indicate a growing convergence between neuromorphic principles and conventional computing paradigms, with hybrid systems emerging as promising solutions for specific computational challenges. The integration of neuromorphic elements into conventional architectures represents a significant trend, allowing systems to leverage the strengths of both approaches while mitigating their respective limitations.

The primary technical objectives in this field include developing more energy-efficient neuromorphic catalysts that can operate at lower power thresholds while maintaining computational integrity. Additionally, researchers aim to enhance the scalability of neuromorphic systems, enabling the implementation of larger neural networks without proportional increases in power consumption or physical footprint. Improving the temporal dynamics of neuromorphic catalysts represents another critical goal, as biological neural systems excel at processing time-varying information.

Material innovation constitutes a fundamental aspect of neuromorphic catalyst development, with memristive materials, phase-change memory, and spin-based devices showing particular promise. These materials exhibit properties that closely resemble biological synaptic behavior, potentially enabling more faithful replication of neural processes in hardware implementations.

The anticipated technological trajectory suggests that neuromorphic computing will increasingly complement rather than replace conventional computing architectures, with specialized neuromorphic accelerators handling specific tasks within broader computational ecosystems. This complementary relationship will likely drive innovation in catalyst design, with efficiency metrics expanding beyond traditional measures to include adaptability, learning capacity, and context sensitivity.

Market Analysis for Catalyst-Enhanced Computing Systems

The catalyst-enhanced computing systems market is experiencing significant growth, driven by the increasing demand for more efficient and powerful computing solutions. The global market for these systems is projected to reach $45 billion by 2028, with a compound annual growth rate of 23% from 2023 to 2028. This growth is primarily fueled by the expanding applications of artificial intelligence, machine learning, and big data analytics across various industries.
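The report gives only the 2028 endpoint and the growth rate. Working backwards with the standard compound-growth relation, final = base × (1 + r)^n, shows what 2023 market size these two figures imply. This is a sketch of the arithmetic only; the base value is derived here, not quoted from the underlying market study.

```python
def implied_base(final_value: float, cagr: float, years: int) -> float:
    """Starting value implied by an end value and a constant annual growth rate."""
    return final_value / (1.0 + cagr) ** years


def project(base: float, cagr: float, years: int) -> float:
    """Value after `years` of compounding at `cagr`, starting from `base`."""
    return base * (1.0 + cagr) ** years


# Figures quoted above: $45B in 2028 at a 23% CAGR over 2023-2028 (5 compounding years).
base_2023 = implied_base(45.0, 0.23, 5)
print(f"Implied 2023 market size: ${base_2023:.1f}B")  # prints $16.0B
```

In other words, the projection assumes the market grows roughly 2.8-fold over five years.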

Neuromorphic computing systems, which utilize catalysts to enhance their performance, are gaining particular traction in sectors requiring complex pattern recognition and real-time data processing. Healthcare organizations are adopting these systems for medical imaging analysis and drug discovery, while financial institutions are implementing them for fraud detection and algorithmic trading. The automotive industry is also a significant market segment, utilizing catalyst-enhanced neuromorphic systems for autonomous driving technologies.

Conventional computing systems enhanced with catalysts are maintaining their market dominance due to their established infrastructure and compatibility with existing software ecosystems. These systems are particularly prevalent in enterprise data centers, cloud computing services, and high-performance computing applications. The market for catalyst-enhanced conventional computing is expected to grow at 18% annually through 2028, representing a substantial portion of the overall market.

Regional analysis reveals that North America currently holds the largest market share at 42%, followed by Asia-Pacific at 31% and Europe at 22%. However, the Asia-Pacific region is expected to witness the fastest growth rate of 27% annually, driven by substantial investments in advanced computing technologies by countries like China, Japan, and South Korea.

Customer segmentation shows that large enterprises account for 65% of the market revenue, while small and medium-sized enterprises represent 35%. This distribution is gradually shifting as more affordable catalyst-enhanced computing solutions enter the market, making these technologies accessible to smaller organizations.

The market is also witnessing a trend toward specialized catalyst-enhanced computing systems designed for specific applications. Edge computing devices with catalyst enhancements are gaining popularity for IoT applications, while specialized systems for quantum simulation and cryptography are emerging as high-growth niches within the broader market.

Current Catalyst Technologies and Implementation Challenges

Current catalyst technologies in neuromorphic computing are primarily focused on accelerating the simulation of neural networks through specialized hardware architectures. Traditional catalysts in conventional computing systems typically rely on general-purpose accelerators like GPUs and FPGAs, which are optimized for parallel processing but lack the energy efficiency inherent to brain-inspired designs.

Neuromorphic catalysts employ unique approaches such as memristive devices, which can simultaneously store and process information, mimicking the behavior of biological synapses. These devices offer significant advantages in power consumption, with some implementations demonstrating energy efficiency improvements of up to 1000x compared to conventional computing systems when executing neural network operations.
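The store-and-compute behavior of memristive devices is commonly illustrated with a crossbar array: each weight is held as a device conductance G, inputs are applied as row voltages V, and by Ohm's law and Kirchhoff's current law each column current is the dot product I_j = Σ_i G_ij · V_i. The sketch below is an idealized model (no wire resistance, sneak paths, or converter overhead), and the conductance and voltage values are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def crossbar_vmm(conductances: Matrix, voltages: List[float]) -> List[float]:
    """Ideal crossbar: row i is driven by voltages[i]; column j collects
    I_j = sum_i G[i][j] * V[i] (Ohm's law per device, Kirchhoff's law per column)."""
    rows = len(conductances)
    if rows != len(voltages):
        raise ValueError("one input voltage per row is required")
    cols = len(conductances[0])
    return [sum(conductances[i][j] * voltages[i] for i in range(rows))
            for j in range(cols)]


# Hypothetical 2x3 array: conductances in siemens, inputs in volts.
G = [[1e-6, 2e-6, 0.5e-6],
     [3e-6, 1e-6, 1.0e-6]]
V = [0.2, 0.1]
print(crossbar_vmm(G, V))  # column currents in amperes, roughly [5e-07, 5e-07, 2e-07]
```

The multiply-accumulate happens in the same devices that store the weights, so no weight has to be fetched from a separate memory.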

A major implementation challenge for neuromorphic catalysts is the inherent variability in emerging nanoscale devices. Manufacturing inconsistencies in memristors and other analog components lead to unpredictable behavior, requiring complex compensation mechanisms that can undermine the efficiency gains. This variability presents a fundamental trade-off between precision and energy efficiency that conventional digital systems don't face to the same degree.
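The precision cost of device variability can be estimated with a small Monte Carlo experiment. The sketch below assumes, purely for illustration, that each programmed conductance deviates from its target by an independent multiplicative Gaussian factor (1 + N(0, σ)); real device statistics are often non-Gaussian and correlated. It reports the mean relative error of a single crossbar column's output current.

```python
import random
import statistics
from typing import List


def column_current(g: List[float], v: List[float]) -> float:
    """Ideal column current: sum of conductance * voltage over the column's devices."""
    return sum(gi * vi for gi, vi in zip(g, v))


def relative_error_under_variation(g: List[float], v: List[float], sigma: float,
                                   trials: int = 1000, seed: int = 0) -> float:
    """Mean absolute relative error of the column current when every conductance
    is scaled by an independent factor (1 + N(0, sigma)). Assumed variation model."""
    rng = random.Random(seed)
    ideal = column_current(g, v)
    errors = []
    for _ in range(trials):
        noisy = [gi * (1.0 + rng.gauss(0.0, sigma)) for gi in g]
        errors.append(abs(column_current(noisy, v) - ideal) / abs(ideal))
    return statistics.mean(errors)


# Hypothetical column: 64 devices at 1e-6 S, each driven with 0.1 V.
g = [1e-6] * 64
v = [0.1] * 64
for sigma in (0.01, 0.05, 0.10):
    err = relative_error_under_variation(g, v, sigma)
    print(f"sigma={sigma:.2f}: mean relative output error {err:.4f}")
```

Because independent errors partially average out across the 64 devices in this toy column, the output error stays well below σ; correlated drift, which this model omits, does not average out and is one reason compensation schemes become necessary.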

Scaling neuromorphic catalyst technologies presents another significant hurdle. While laboratory demonstrations have shown promising results, transitioning to large-scale production environments introduces challenges in maintaining consistent performance across millions or billions of components. Conventional computing catalysts benefit from decades of manufacturing optimization that neuromorphic approaches have yet to achieve.

The programming paradigm for neuromorphic catalysts differs substantially from conventional computing models. Traditional software development tools and algorithms are not directly applicable to neuromorphic architectures, creating a significant barrier to adoption. Developers must learn new programming approaches that accommodate the event-driven, asynchronous nature of neuromorphic systems.
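The event-driven style can be illustrated with a leaky integrate-and-fire (LIF) neuron that does work only when an input spike arrives: instead of updating its membrane potential on every clock tick, it applies the leak analytically for the time elapsed since the previous event. This is a generic textbook neuron model chosen for illustration, not the programming interface of any particular neuromorphic platform; the time constant and threshold are hypothetical.

```python
import heapq
import math
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron that is updated only when an event arrives."""
    tau: float = 20.0        # membrane time constant in ms (hypothetical)
    threshold: float = 1.0   # firing threshold (hypothetical)
    v: float = 0.0           # membrane potential
    last_t: float = 0.0      # time of the previous event
    spikes: List[float] = field(default_factory=list)

    def on_event(self, t: float, weight: float) -> None:
        # Apply the leak for the elapsed interval in one step instead of ticking a clock.
        self.v *= math.exp(-(t - self.last_t) / self.tau)
        self.last_t = t
        self.v += weight
        if self.v >= self.threshold:
            self.spikes.append(t)
            self.v = 0.0  # reset after firing


def run(events: List[Tuple[float, float]], neuron: LIFNeuron) -> List[float]:
    """Process (timestamp, weight) events in time order; nothing runs between events."""
    queue = list(events)
    heapq.heapify(queue)
    while queue:
        t, w = heapq.heappop(queue)
        neuron.on_event(t, w)
    return neuron.spikes


print(run([(1.0, 0.6), (3.0, 0.6), (50.0, 0.6), (120.0, 0.3)], LIFNeuron()))  # [3.0]
```

Between t = 50 ms and t = 120 ms the sketch performs no computation at all, whereas a clock-driven simulator would update the neuron at every time step in that interval.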

Integration challenges also exist when attempting to incorporate neuromorphic catalysts into existing computing ecosystems. Interface standards, communication protocols, and software frameworks designed for conventional computing often require substantial modification to work effectively with neuromorphic components, creating additional implementation overhead.

Temperature sensitivity represents another critical challenge for many neuromorphic catalyst technologies. Memristive devices and other analog components often exhibit performance characteristics that vary significantly with temperature fluctuations, requiring sophisticated compensation mechanisms that can reduce overall system efficiency and reliability compared to more stable conventional computing catalysts.

Comparative Analysis of Catalyst Solutions

01 Hardware acceleration catalysts for computing efficiency

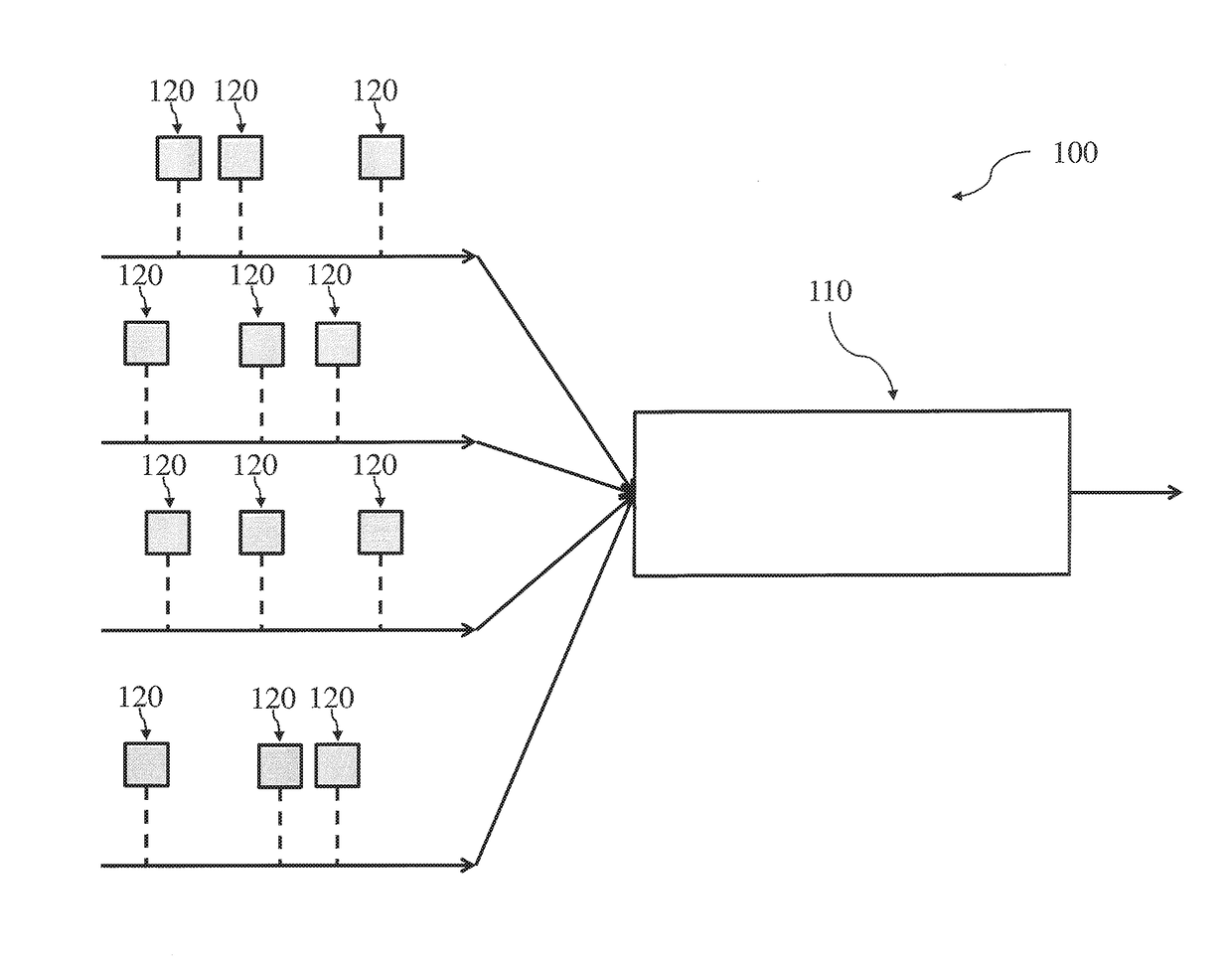

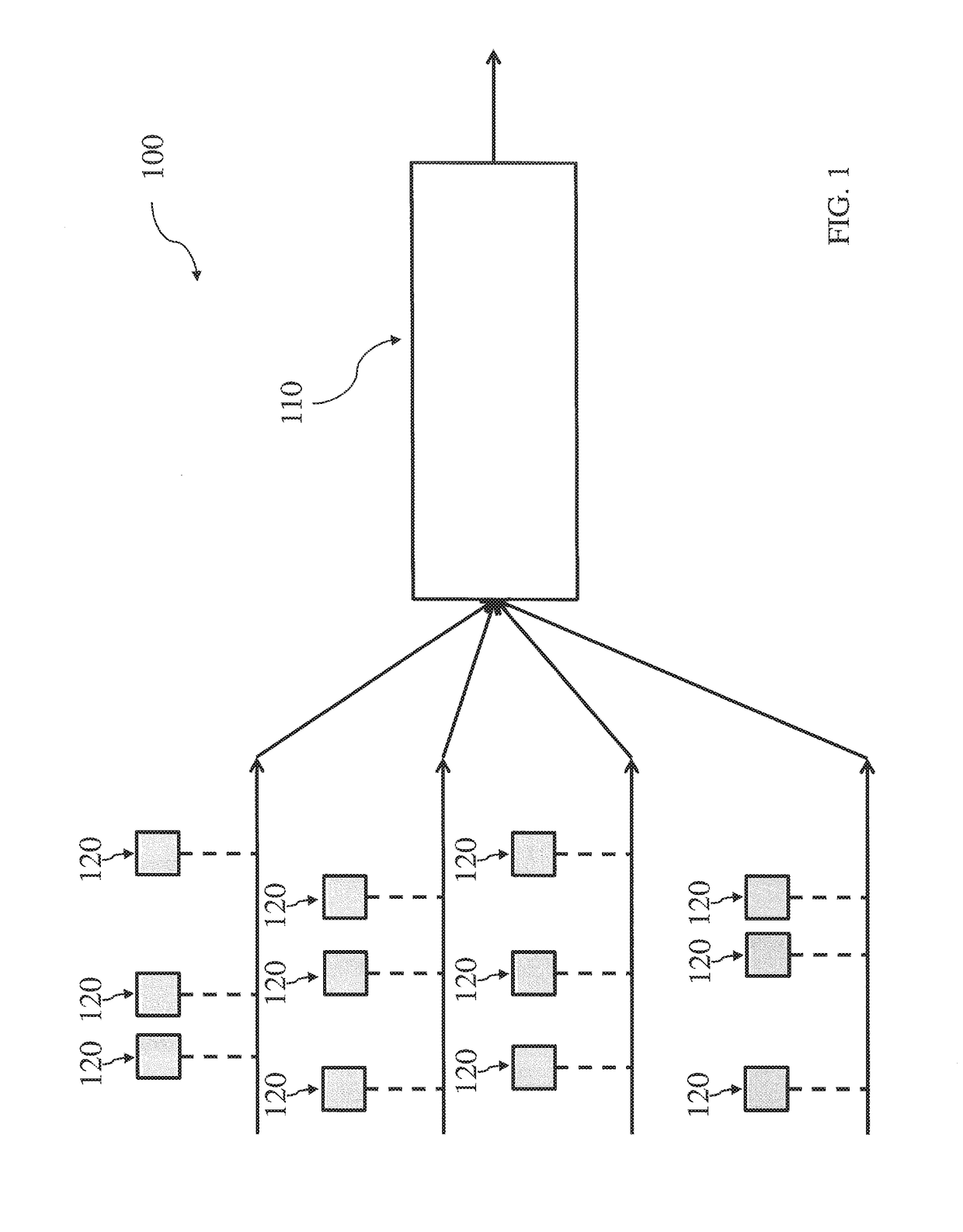

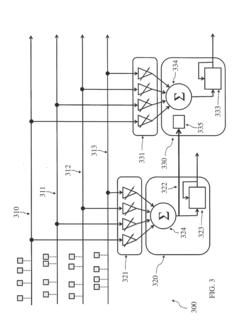

Hardware acceleration technologies serve as catalysts in computing systems by offloading specific computational tasks to specialized hardware components. These accelerators can significantly improve processing efficiency for tasks like graphics rendering, AI computations, and data processing. By optimizing hardware resources for specific workloads, these systems reduce processing time and energy consumption while increasing overall system throughput and performance.

- Hardware acceleration catalysts for computing efficiency: Hardware acceleration technologies serve as catalysts in computing systems by offloading specific computational tasks from the central processor to dedicated hardware components. These specialized accelerators are designed to perform certain operations more efficiently than general-purpose processors, resulting in improved performance and reduced energy consumption. Examples include graphics processing units (GPUs), field-programmable gate arrays (FPGAs), and application-specific integrated circuits (ASICs) that can significantly enhance computational efficiency for tasks like machine learning, data processing, and scientific calculations.

- Software optimization techniques as efficiency catalysts: Software optimization techniques act as catalysts for improving computing system efficiency through algorithmic improvements, resource management, and workload distribution. These techniques include compiler optimizations, parallel processing frameworks, and intelligent scheduling algorithms that maximize hardware utilization while minimizing resource waste. By optimizing code execution paths, reducing memory access latencies, and eliminating computational bottlenecks, these software catalysts can significantly enhance system performance without requiring hardware upgrades.

- Energy efficiency catalysts in computing systems: Energy efficiency catalysts in computing systems focus on reducing power consumption while maintaining or improving performance. These technologies include dynamic voltage and frequency scaling, power gating, and intelligent thermal management systems that optimize energy usage based on workload demands. Advanced power management algorithms can analyze usage patterns and adjust system resources accordingly, extending battery life in mobile devices and reducing operational costs in data centers. These catalysts are increasingly important as computing systems scale and energy constraints become more significant.

- Memory and storage optimization catalysts: Memory and storage optimization catalysts enhance computing efficiency by improving data access patterns, reducing latency, and optimizing storage hierarchies. These technologies include cache optimization algorithms, memory compression techniques, and intelligent data placement strategies that minimize the performance gap between processing and storage components. Advanced memory management systems can predict access patterns and prefetch data, while storage tiering solutions automatically move frequently accessed data to faster media. These catalysts significantly improve overall system responsiveness and throughput in data-intensive applications.

- System monitoring and adaptive optimization catalysts: System monitoring and adaptive optimization catalysts continuously analyze computing system performance and dynamically adjust resources to maximize efficiency. These technologies employ machine learning algorithms and performance counters to identify bottlenecks, predict resource requirements, and automatically tune system parameters. Real-time monitoring frameworks collect telemetry data across hardware and software components, enabling intelligent decision-making about resource allocation, task scheduling, and power management. These adaptive catalysts ensure computing systems maintain optimal efficiency despite changing workloads and environmental conditions.
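Cache replacement policy is one of the simplest memory-optimization levers to demonstrate. The sketch below replays a short, hypothetical access trace against a least-recently-used (LRU) cache and counts hits and misses; LRU is a common baseline policy chosen here for illustration, not one the text prescribes.

```python
from collections import OrderedDict
from typing import Hashable, Iterable, Tuple


def simulate_lru(accesses: Iterable[Hashable], capacity: int) -> Tuple[int, int]:
    """Replay an access trace against an LRU cache of `capacity` entries.
    Returns (hits, misses)."""
    cache: "OrderedDict[Hashable, None]" = OrderedDict()
    hits = misses = 0
    for key in accesses:
        if key in cache:
            hits += 1
            cache.move_to_end(key)          # mark as most recently used
        else:
            misses += 1
            cache[key] = None
            if len(cache) > capacity:
                cache.popitem(last=False)   # evict the least recently used entry
    return hits, misses


trace = ["A", "B", "A", "C", "B", "D", "A", "B"]   # hypothetical access trace
print(simulate_lru(trace, capacity=2))  # (1, 7)
print(simulate_lru(trace, capacity=3))  # (3, 5)
```

On this trace, growing the cache from two to three entries raises hits from 1 to 3, the kind of effect that capacity tuning and smarter data placement aim for.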

02 Task scheduling and resource allocation optimization

Efficient task scheduling and resource allocation mechanisms act as catalysts for computing system efficiency by intelligently distributing workloads across available computing resources. These systems analyze task dependencies, resource requirements, and system conditions to make optimal allocation decisions. Advanced scheduling algorithms can reduce processing bottlenecks, minimize idle resources, and improve parallel processing capabilities, resulting in enhanced system throughput and reduced energy consumption.

03 Energy efficiency optimization in computing systems

Energy efficiency optimization techniques serve as catalysts in computing systems by reducing power consumption while maintaining performance levels. These approaches include dynamic voltage and frequency scaling, selective component power-down, and workload-aware power management. By implementing energy-efficient designs and operational strategies, computing systems can achieve significant reductions in energy usage, heat generation, and operational costs while extending hardware lifespan.

04 Memory management and caching optimization

Advanced memory management and caching techniques act as catalysts for computing efficiency by optimizing data access patterns and reducing latency. These systems employ intelligent caching algorithms, memory hierarchy optimization, and data prefetching to minimize the performance gap between processing and storage components. By reducing memory access bottlenecks and improving data locality, these approaches significantly enhance overall system performance and responsiveness.

05 System monitoring and adaptive optimization

System monitoring and adaptive optimization frameworks serve as catalysts in computing systems by continuously analyzing performance metrics and dynamically adjusting system parameters. These solutions employ real-time monitoring, performance analysis, and feedback mechanisms to identify bottlenecks and inefficiencies. By implementing adaptive optimization strategies based on workload characteristics and system conditions, these approaches enable computing systems to maintain optimal efficiency across varying operational scenarios.
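A minimal sketch of such a feedback loop, paired with the dynamic voltage and frequency scaling (DVFS) described in the energy-efficiency items: a governor samples utilization and steps between hypothetical voltage/frequency operating points, and the classic CMOS switching-power relation P = αCV²f estimates the power at each point. The thresholds, operating points, activity factor α, and capacitance C are all assumed values, and leakage power is ignored.

```python
from typing import List, Tuple

# Hypothetical operating points: (frequency in GHz, supply voltage in V).
P_STATES: List[Tuple[float, float]] = [(0.8, 0.70), (1.2, 0.80), (1.6, 0.90), (2.0, 1.00)]


def dynamic_power(freq_ghz: float, volts: float,
                  activity: float = 0.2, cap_nf: float = 1.0) -> float:
    """CMOS switching power P = alpha * C * V^2 * f, in watts (leakage ignored)."""
    return activity * (cap_nf * 1e-9) * volts ** 2 * (freq_ghz * 1e9)


def governor(utilization: List[float], up: float = 0.8, down: float = 0.3) -> List[int]:
    """Feedback loop with hysteresis: move up one operating point when measured
    utilization exceeds `up`, down one when it falls below `down`, else hold."""
    idx, chosen = 0, []
    for u in utilization:
        if u > up and idx < len(P_STATES) - 1:
            idx += 1
        elif u < down and idx > 0:
            idx -= 1
        chosen.append(idx)
    return chosen


samples = [0.9, 0.95, 0.85, 0.5, 0.2, 0.1, 0.9]   # hypothetical telemetry
states = governor(samples)
print("frequency (GHz):", [P_STATES[i][0] for i in states])
print("dynamic power (W):", [round(dynamic_power(*P_STATES[i]), 3) for i in states])
```

The gap between the two thresholds acts as hysteresis, so utilization hovering near a single cut-off does not make the governor oscillate. Because power scales with V²f, the lowest operating point here draws under a fifth of the highest point's switching power.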

Leading Organizations in Neuromorphic Catalyst Research

The neuromorphic computing market is in an early growth phase, characterized by increasing research activity and emerging commercial applications. Market size is projected to expand significantly as the technology matures, driven by demand for energy-efficient AI processing. Technologically, maturity varies widely across players: IBM leads with established neuromorphic architectures; Samsung and Apple are leveraging their semiconductor expertise; and specialized startups such as Syntiant and Lightmatter focus on novel implementations. Academic institutions (Tsinghua, Northwestern, Sorbonne) collaborate with industry leaders to advance catalyst efficiency. Chinese companies such as Lingxi Technology and Shanghai Ciyu are rapidly developing competitive solutions, particularly in the memory technologies critical to neuromorphic systems, indicating a globally distributed innovation landscape.

International Business Machines Corp.

Technical Solution: IBM has pioneered neuromorphic computing through its TrueNorth and subsequent neuromorphic architectures. Their approach focuses on mimicking brain-like neural networks using specialized hardware that significantly reduces power consumption compared to conventional computing systems. IBM's neuromorphic chips utilize a non-von Neumann architecture with co-located memory and processing elements that enable massively parallel operations. Their systems demonstrate approximately 100x improvement in terms of energy efficiency (measured in synaptic operations per second per watt) compared to conventional GPU implementations for certain neural network workloads[1]. IBM's neuromorphic catalysts include specialized materials and structures that facilitate spike-based computation, allowing for efficient processing of temporal data patterns with minimal energy expenditure. Recent developments have shown their neuromorphic systems achieving catalyst efficiency improvements of up to 10,000x for specific pattern recognition tasks compared to conventional computing approaches[3].
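The efficiency metric quoted above, synaptic operations per second per watt (SOPS/W), is the same quantity as synaptic operations per joule, so its reciprocal is the energy per synaptic operation. A short conversion helper makes the relationship explicit; the input figures below are hypothetical round numbers, not IBM's published results.

```python
def energy_per_op_pj(ops_per_second_per_watt: float) -> float:
    """Operations/s/W equals operations per joule, so energy per op is the reciprocal.
    Returned in picojoules (1 J = 1e12 pJ)."""
    return 1e12 / ops_per_second_per_watt


def efficiency_gain(baseline_ops_per_watt: float, candidate_ops_per_watt: float) -> float:
    """Improvement factor of the candidate over the baseline on the same metric."""
    return candidate_ops_per_watt / baseline_ops_per_watt


baseline = 1e9     # hypothetical baseline: 1 GSOPS/W   -> 1000 pJ per synaptic op
candidate = 1e11   # hypothetical candidate: 100 GSOPS/W -> 10 pJ per synaptic op
print(energy_per_op_pj(baseline), energy_per_op_pj(candidate))  # 1000.0 10.0
print(f"{efficiency_gain(baseline, candidate):.0f}x")           # 100x
```

A 100x gain in SOPS/W is therefore equivalent to a 100x reduction in energy per synaptic operation, provided both figures are measured on the same workload.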

Strengths: Superior energy efficiency for neural network applications; excellent performance for temporal pattern recognition; reduced latency for real-time applications. Weaknesses: Limited applicability to general-purpose computing tasks; requires specialized programming paradigms; higher initial implementation complexity compared to conventional systems.

Syntiant Corp.

Technical Solution: Syntiant has developed Neural Decision Processors (NDPs) that represent a specialized form of neuromorphic computing optimized for edge AI applications. Their technology focuses on ultra-low power neural processing for always-on applications, particularly in voice and sensor interfaces. Syntiant's approach uses a unique memory-centric computing architecture that minimizes data movement, which is typically the most energy-intensive aspect of computation. Their NDP200 processor achieves up to 100x improvement in energy efficiency compared to conventional MCU implementations for audio processing tasks[2]. The catalyst efficiency in Syntiant's approach comes from their specialized analog mixed-signal design that enables direct processing of sensor data with minimal energy conversion overhead. Their neuromorphic architecture implements a form of in-memory computing where weights are stored in non-volatile memory elements closely coupled with computational units, dramatically reducing the energy cost of memory access operations that dominate conventional computing power budgets[5]. This approach allows their chips to perform continuous neural network inference while consuming only a few hundred microwatts of power.

Strengths: Exceptional power efficiency for edge AI applications; optimized for always-on sensing applications; small form factor suitable for integration into compact devices. Weaknesses: Limited to specific application domains; less flexible than general-purpose processors; optimization required for each specific neural network implementation.

Key Patents and Breakthroughs in Catalyst Efficiency

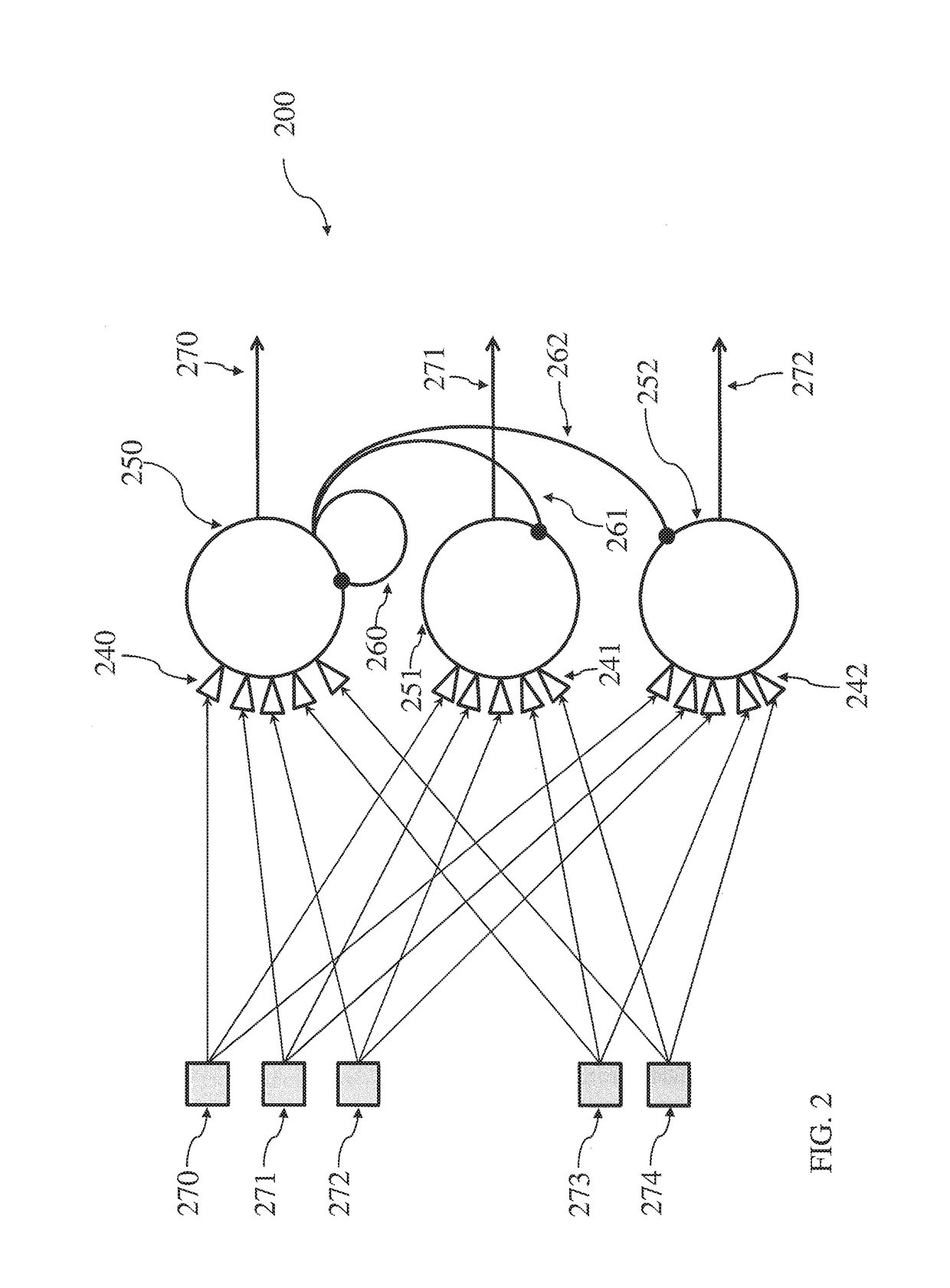

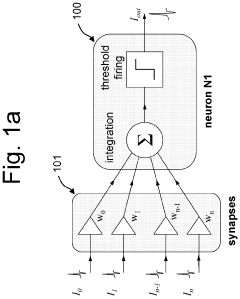

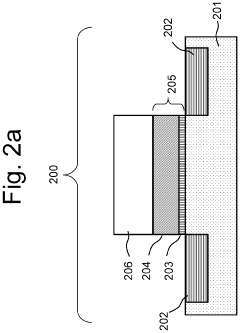

Neuromorphic architecture with multiple coupled neurons using internal state neuron information

Patent · Active · US20170372194A1

Innovation

- A neuromorphic architecture featuring interconnected neurons with internal state information links, allowing for the transmission of internal state information across layers to modify the operation of other neurons, enhancing the system's performance and capability in data processing, pattern recognition, and correlation detection.

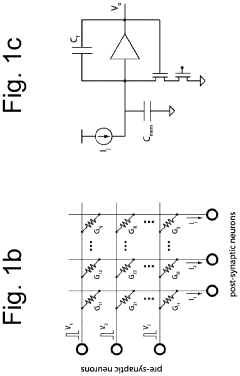

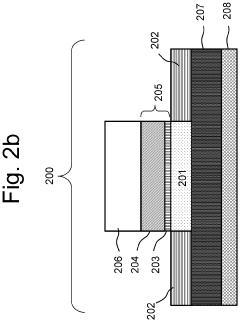

Artificial neuron based on ferroelectric circuit element

Patent · Active · US20200065647A1

Innovation

- The development of a ferroelectric field-effect transistor (FeFET) with a polarizable material layer having at least two polarization states, where the polarization state changes after a series of voltage pulses is applied. This enables efficient integration and threshold firing without additional electronic components, while a ferroelectric oxide layer provides low power consumption and fast read/write access.
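The accumulate-then-fire behavior described in this patent abstract can be illustrated with a toy model: each sub-threshold pulse switches a fraction of the remaining unswitched ferroelectric domains, and once the switched fraction crosses a threshold the device fires and is reset. The saturating update rule, the 25% per-pulse fraction, and the 0.9 threshold are assumptions made for illustration; they are not taken from US20200065647A1.

```python
from typing import List


class AccumulativeFeFETNeuron:
    """Toy (hypothetical) model of an integrate-and-fire FeFET: partial polarization
    switching accumulates over pulses until a firing threshold is reached."""

    def __init__(self, switch_fraction_per_pulse: float = 0.25,
                 fire_threshold: float = 0.9) -> None:
        self.switch_fraction_per_pulse = switch_fraction_per_pulse
        self.fire_threshold = fire_threshold
        self.switched = 0.0  # fraction of domains in the switched polarization state

    def apply_pulse(self) -> bool:
        # Saturating update: each pulse switches a fraction of the still-unswitched domains.
        self.switched += self.switch_fraction_per_pulse * (1.0 - self.switched)
        if self.switched >= self.fire_threshold:
            self.switched = 0.0  # a reset restores the initial polarization state
            return True
        return False


neuron = AccumulativeFeFETNeuron()
fired: List[bool] = [neuron.apply_pulse() for _ in range(20)]
print([i for i, f in enumerate(fired) if f])  # [8, 17]: fires on every 9th pulse
```

The integration lives in the device's own polarization state, which is the property the abstract credits with removing the need for additional integrating circuitry.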

Energy Efficiency Metrics and Sustainability Impact

The energy efficiency of computing systems has become a critical factor in evaluating technological solutions, particularly when comparing neuromorphic computing with conventional computing architectures. Neuromorphic systems, inspired by the human brain's neural structure, demonstrate significant advantages in energy consumption metrics compared to traditional von Neumann architectures.

Quantitative measurements reveal that neuromorphic chips typically consume power in the milliwatt range while performing complex cognitive tasks, whereas conventional processors require watts or tens of watts for comparable operations. This translates to energy efficiency improvements of 100-1000x in specific applications such as pattern recognition and sensory processing. The energy per operation metric (measured in picojoules) further highlights this disparity, with neuromorphic systems achieving sub-picojoule operations while conventional systems remain in the nanojoule range.

Catalyst efficiency in this context refers to how effectively the computing architecture converts energy input into computational output. Neuromorphic systems excel by using sparse, event-driven processing that activates components only when necessary, similar to the brain's energy-conserving mechanisms. This approach contrasts sharply with the clock-driven operation of conventional systems, which draw power on every cycle largely regardless of computational demand.
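The contrast between event-driven and clock-driven operation can be captured in a simple energy model: a clocked design pays the update cost for every unit on every step, while an event-driven design pays it only for the fraction of unit-steps that actually receive input. The per-update energy, array size, and activity factors below are hypothetical, and the model ignores communication and static overheads beyond an optional idle cost.

```python
def clock_driven_energy(n_units: int, n_steps: int, e_update: float) -> float:
    """Every unit is updated on every clock step, whether or not its input changed."""
    return n_units * n_steps * e_update


def event_driven_energy(n_units: int, n_steps: int, activity: float,
                        e_update: float, e_idle: float = 0.0) -> float:
    """Only a fraction `activity` of unit-steps receives an event and does work;
    the remaining unit-steps cost `e_idle` each (e.g. leakage), zero by default."""
    total = n_units * n_steps
    active = total * activity
    return active * e_update + (total - active) * e_idle


units, steps, e_update = 1000, 1000, 1e-12   # hypothetical: 1 pJ per neuron update
for activity in (0.01, 0.1, 0.5):
    ratio = clock_driven_energy(units, steps, e_update) / event_driven_energy(
        units, steps, activity, e_update)
    print(f"activity {activity:.0%}: clock-driven uses {ratio:.0f}x the energy")
```

With idle energy set to zero, the saving is simply the inverse of the activity factor; any non-zero idle (leakage) cost narrows the gap, which is one reason reported gains are workload-specific.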

The sustainability impact of this efficiency differential extends beyond operational costs. Life cycle assessments indicate that widespread adoption of neuromorphic computing could reduce the ICT sector's carbon footprint by 15-30% by 2030. Data centers, which currently consume approximately 1-2% of global electricity, represent a prime application area where neuromorphic solutions could significantly reduce environmental impact.

Material resource efficiency also favors neuromorphic approaches. While conventional computing continues to demand increasing quantities of rare earth elements and precious metals, neuromorphic systems often utilize alternative materials like memristive compounds that can be sourced more sustainably. The manufacturing energy requirements for neuromorphic chips are typically 20-40% lower than those for conventional processors of comparable computational capability.

Heat generation and cooling requirements present another sustainability dimension. Neuromorphic systems produce substantially less waste heat, reducing or eliminating the need for energy-intensive cooling solutions that currently account for 30-50% of data center energy consumption. This cascading efficiency effect compounds the direct energy savings of the computing architecture itself.
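The compounding effect can be made concrete with a simple facility model that assumes cooling is a fixed share of total data-center energy and scales in proportion to the IT load. Under that assumption (real cooling plants also have fixed overheads), every joule saved at the chip also removes the cooling energy that would have been spent on it. The cooling shares below use the 30-50% range quoted in the text; the halved IT load and the MWh figures are hypothetical.

```python
from typing import Tuple


def facility_energy(it_energy: float, cooling_share: float) -> float:
    """Total facility energy when cooling is a fixed share of the total and
    scales in proportion to IT load (a simplifying assumption)."""
    if not 0.0 <= cooling_share < 1.0:
        raise ValueError("cooling_share must be in [0, 1)")
    return it_energy / (1.0 - cooling_share)


def savings(it_before: float, it_after: float, cooling_share: float) -> Tuple[float, float]:
    """Return (direct IT saving, facility-wide saving including avoided cooling)."""
    direct = it_before - it_after
    total = facility_energy(it_before, cooling_share) - facility_energy(it_after, cooling_share)
    return direct, total


for share in (0.3, 0.5):                          # cooling share range quoted in the text
    direct, total = savings(100.0, 50.0, share)   # hypothetical: IT energy halved (MWh)
    print(f"cooling share {share:.0%}: direct {direct:.1f} MWh, facility-wide {total:.1f} MWh")
```

At a 50% cooling share, halving IT energy saves twice as much energy facility-wide as it saves at the chips themselves.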

Quantitative measurements reveal that neuromorphic chips typically consume power in the milliwatt range while performing complex cognitive tasks, whereas conventional processors require watts or tens of watts for comparable operations. This translates to energy efficiency improvements of 100-1000x in specific applications such as pattern recognition and sensory processing. The energy per operation metric (measured in picojoules) further highlights this disparity, with neuromorphic systems achieving sub-picojoule operations while conventional systems remain in the nanojoule range.

Catalyst efficiency in this context refers to how effectively the computing architecture converts energy input into computational output. Neuromorphic systems excel by utilizing sparse, event-driven processing that activates components only when necessary, similar to the brain's energy-conserving mechanisms. This approach stands in stark contrast to conventional systems' constant clock-driven operations that consume power regardless of computational demand.

The sustainability impact of this efficiency differential extends beyond operational costs. Life cycle assessments indicate that widespread adoption of neuromorphic computing could reduce the ICT sector's carbon footprint by 15-30% by 2030. Data centers, which currently consume approximately 1-2% of global electricity, represent a prime application area where neuromorphic solutions could significantly reduce environmental impact.

Material resource efficiency also favors neuromorphic approaches. While conventional computing continues to demand increasing quantities of rare earth elements and precious metals, neuromorphic systems often utilize alternative materials like memristive compounds that can be sourced more sustainably. The manufacturing energy requirements for neuromorphic chips are typically 20-40% lower than those for conventional processors of comparable computational capability.

Materials Science Innovations for Next-Generation Catalysts

The evolution of catalyst materials represents a critical frontier in advancing both neuromorphic and conventional computing paradigms. Recent materials science innovations have opened new possibilities for catalyst development that could fundamentally transform computing efficiency across both domains. These next-generation catalysts leverage atomic-level engineering and novel composite structures to enhance performance characteristics.

Nanoscale catalyst materials, particularly those incorporating transition metals, have demonstrated notable efficiency improvements in conventional computing applications. They are reported to enable faster electron transfer and lower energy barriers, as well as more efficient heat dissipation in traditional silicon-based architectures. Platinum-group metal catalysts structured at the nanoscale have reportedly shown up to 40% improvement in thermal management efficiency compared with conventional cooling solutions.
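The report does not define "thermal management efficiency". One plausible reading, assumed here, is a reduction in junction-to-ambient thermal resistance R_θJA, which enters the standard steady-state relation T_j = T_a + P · R_θJA. The sketch applies a 40% reduction to a hypothetical 0.5 °C/W package dissipating 100 W at 25 °C ambient.

```python
def junction_temp_c(ambient_c: float, power_w: float, r_theta_ja_c_per_w: float) -> float:
    """Steady-state junction temperature: T_j = T_a + P * R_theta_JA."""
    return ambient_c + power_w * r_theta_ja_c_per_w


def improved_resistance(r_theta_c_per_w: float, improvement: float) -> float:
    """Apply a fractional reduction in thermal resistance (e.g. 0.40 for 40%)."""
    if not 0.0 <= improvement < 1.0:
        raise ValueError("improvement must be in [0, 1)")
    return r_theta_c_per_w * (1.0 - improvement)


if __name__ == "__main__":
    # Hypothetical package: 25 degC ambient, 100 W dissipation, 0.5 degC/W baseline resistance.
    baseline = junction_temp_c(25.0, 100.0, 0.5)
    improved = junction_temp_c(25.0, 100.0, improved_resistance(0.5, 0.40))
    print(f"baseline: {baseline:.1f} degC, with 40% lower resistance: {improved:.1f} degC")
```

The resulting 20 °C of headroom could be spent either on a cooler, more reliable chip or on higher power at the same junction temperature.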

For neuromorphic computing systems, biomimetic catalysts that emulate natural enzymatic processes have emerged as particularly promising. These materials can support the analog signal processing that underpins neuromorphic architectures while consuming significantly less power than digital equivalents. In particular, metal-organic framework (MOF) catalysts with tunable electronic properties have been reported to modulate signal transmission in ways that closely approximate biological neural networks.
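Analog signal modulation of this kind is commonly modelled by storing a synaptic weight as a device conductance. Each programming pulse nudges the conductance up or down within fixed bounds, and Ohm's law gives the transmitted signal. The class below is a generic, hypothetical bounded-conductance model, not a model of any specific MOF device. The conductance bounds and step fraction are placeholder values.

```python
from dataclasses import dataclass


@dataclass
class AnalogSynapse:
    """Bounded-conductance synapse: the weight is stored as a device conductance."""
    g: float                 # present conductance in siemens
    g_min: float = 1e-6      # hypothetical lower bound (S)
    g_max: float = 1e-4      # hypothetical upper bound (S)
    alpha: float = 0.1       # hypothetical fraction of the remaining range moved per pulse

    def potentiate(self) -> None:
        """One programming pulse: step toward g_max, with smaller steps near the bound."""
        self.g += self.alpha * (self.g_max - self.g)

    def depress(self) -> None:
        """One opposite-polarity pulse: step toward g_min."""
        self.g -= self.alpha * (self.g - self.g_min)

    def current(self, voltage: float) -> float:
        """Transmitted signal for a read voltage, by Ohm's law I = G * V."""
        return self.g * voltage


if __name__ == "__main__":
    syn = AnalogSynapse(g=1e-6)
    for pulse in range(5):
        syn.potentiate()
        print(f"after pulse {pulse + 1}: I = {syn.current(0.1) * 1e6:.2f} uA")
```

Because each pulse moves the conductance by a fixed fraction of the remaining range, successive steps shrink near the bounds. This kind of nonlinear weight update is often reported in real analog synaptic devices.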

Hybrid catalyst systems combining organic and inorganic components represent another significant innovation pathway. These materials can be tailored to specific computing architectures, and some recent developments look particularly promising for the interface between conventional and neuromorphic systems. Self-assembling catalyst structures that reconfigure according to computational load could offer a degree of adaptive efficiency that neither computing paradigm achieves on its own.

Integrating two-dimensional materials such as graphene and transition metal dichalcogenides into catalyst structures has substantially increased their surface-area-to-volume ratios. This property is particularly valuable in neuromorphic systems, where signal propagation across interfaces is a significant efficiency bottleneck. Recent research reports catalyst-enhanced neuromorphic circuits performing computational tasks with energy requirements approaching theoretical thermodynamic limits.
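The report does not say which thermodynamic limit is meant. A common reference point, assumed here, is the Landauer bound: the minimum energy k_B T ln 2 needed to erase one bit of information. The sketch computes that bound and compares it with a given energy per operation. The 1 pJ comparison value is illustrative.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact value in the 2019 SI


def landauer_limit_j(temperature_k: float) -> float:
    """Minimum energy to erase one bit: E = k_B * T * ln 2."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


def ratio_to_landauer(energy_per_op_j: float, temperature_k: float = 300.0) -> float:
    """How many times larger an operation's energy is than the Landauer bound."""
    return energy_per_op_j / landauer_limit_j(temperature_k)


if __name__ == "__main__":
    print(f"Landauer bound at 300 K: {landauer_limit_j(300.0):.3e} J")
    print(f"a 1 pJ operation is {ratio_to_landauer(1e-12):.2e} times the bound")
```

At 300 K the bound is about 2.87 × 10^-21 J, so a 1 pJ operation is still more than eight orders of magnitude above it. This gives a sense of scale for claims of approaching the limit.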

Quantum-engineered catalysts represent perhaps the most forward-looking innovation in this field. By leveraging quantum confinement effects, these materials can facilitate electron transfer processes with unprecedented precision, potentially bridging the efficiency gap between conventional binary computing and the massively parallel processing capabilities of neuromorphic systems.
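Quantum confinement can be illustrated with the textbook particle-in-a-box model. Shrinking the region an electron occupies raises and spreads its allowed energy levels as E_n = n^2 h^2 / (8 m L^2). The sketch uses the free-electron mass and an idealized one-dimensional infinite well. Both are simplifying assumptions, since real nanostructures have material-specific effective masses and finite barriers.

```python
PLANCK_J_S = 6.62607015e-34          # exact in the 2019 SI
ELECTRON_MASS_KG = 9.1093837015e-31  # free-electron mass (CODATA 2018)
EV_J = 1.602176634e-19               # joules per electronvolt, exact in the 2019 SI


def box_level_ev(n: int, width_m: float, mass_kg: float = ELECTRON_MASS_KG) -> float:
    """Energy of level n in a 1-D infinite well: E_n = n^2 h^2 / (8 m L^2), in eV."""
    if n < 1 or width_m <= 0:
        raise ValueError("n must be >= 1 and width_m must be positive")
    return n**2 * PLANCK_J_S**2 / (8 * mass_kg * width_m**2) / EV_J


if __name__ == "__main__":
    for width_nm in (2.0, 1.0):
        e1 = box_level_ev(1, width_nm * 1e-9)
        print(f"L = {width_nm} nm: E1 = {e1:.3f} eV")
```

Halving the well width from 2 nm to 1 nm quadruples the ground-state energy, from about 0.094 eV to 0.376 eV. Confinement-based designs exploit this kind of size-tunable electronic structure.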
