HBM4 Power Consumption And Design Constraints In 2.5D Integration

SEP 12, 2025 | 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

HBM4 Evolution and Performance Targets

High Bandwidth Memory (HBM) has evolved significantly since its introduction, with each generation bringing substantial improvements in bandwidth, capacity, and energy efficiency. HBM4, the latest iteration in this evolution, represents a significant leap forward in addressing the growing memory demands of data-intensive applications such as artificial intelligence, high-performance computing, and graphics processing.

The evolution of HBM technology has followed a clear trajectory of increasing performance while managing power constraints. HBM1, introduced in 2013, offered 128GB/s bandwidth per stack with 4-high configurations. HBM2 doubled this to 256GB/s in 2016, while HBM2E further increased bandwidth to 460GB/s by 2020. HBM3, released in 2022, pushed boundaries with up to 819GB/s per stack and improved power efficiency.

HBM4 aims for unprecedented performance, with per-stack bandwidth projected to exceed 1.2TB/s, roughly a 50% improvement over HBM3's 819GB/s. This headroom is critical for next-generation AI training workloads, whose massively parallel compute units are frequently limited by how fast data can be streamed from memory.
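
Peak per-stack bandwidth is interface width times per-pin data rate. The sketch below assumes the commonly cited 1024-bit interface and per-pin rates of 1.0, 2.0, 3.6, and 6.4 Gb/s for HBM1, HBM2, HBM2E, and HBM3 (these rates are assumptions, not stated in the text). It reproduces the per-generation figures and shows that a 1.2 TB/s target sits about 46% above HBM3, consistent with "roughly 50%".

```python
# Peak per-stack bandwidth (GB/s) = interface width (bits) * per-pin rate (Gb/s) / 8.
# Assumed values: 1024-bit interface and commonly cited per-pin rates per generation.
GENERATIONS = {
    "HBM1": (1024, 1.0),
    "HBM2": (1024, 2.0),
    "HBM2E": (1024, 3.6),
    "HBM3": (1024, 6.4),
}


def stack_bandwidth_gb_s(width_bits: int, pin_rate_gbit_s: float) -> float:
    """Return peak per-stack bandwidth in GB/s (8 bits per byte)."""
    return width_bits * pin_rate_gbit_s / 8


def uplift_percent(new: float, old: float) -> float:
    """Percentage improvement of `new` over `old`."""
    return (new / old - 1) * 100


for name, (width, rate) in GENERATIONS.items():
    print(f"{name}: {stack_bandwidth_gb_s(width, rate):.1f} GB/s")

hbm3 = stack_bandwidth_gb_s(*GENERATIONS["HBM3"])
# 1200 GB/s is the 1.2 TB/s HBM4 projection quoted in the text.
print(f"HBM4 target over HBM3: {uplift_percent(1200.0, hbm3):.0f}%")
```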

Capacity targets for HBM4 are equally ambitious, with up to 36GB per stack expected from 12-high configurations, 50% more than HBM3's 24GB maximum (although HBM3E 12-high stacks already reach 36GB). This expanded capacity is essential for accommodating larger AI models and more complex computational workloads without adding stacks.
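
Stack capacity is die count times per-die density. Assuming 16 Gb DRAM dies in a 12-high HBM3 stack and 24 Gb dies in a 12-high HBM4 stack (die densities are assumptions, not given in the text), the arithmetic recovers the 24GB and 36GB figures:

```python
def stack_capacity_gb(dies: int, die_density_gbit: int) -> float:
    """Capacity in gigabytes: number of dies * per-die density (Gb) / 8 bits per byte."""
    return dies * die_density_gbit / 8


hbm3_max = stack_capacity_gb(dies=12, die_density_gbit=16)   # assumed 16 Gb dies
hbm4_12hi = stack_capacity_gb(dies=12, die_density_gbit=24)  # assumed 24 Gb dies
print(hbm3_max, hbm4_12hi, f"{hbm4_12hi / hbm3_max - 1:.0%} larger")
```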

Power efficiency remains a central focus in HBM4 development, with targets to reduce the energy per bit transferred by approximately 20-25% compared to HBM3. This efficiency gain is crucial in 2.5D integration, where thermal management is especially difficult because memory stacks are densely packed alongside processing units.
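
Because interface power is, to first order, bandwidth times energy per bit, a per-bit improvement only offsets part of a bandwidth increase. This sketch combines the 1.2 TB/s projection (about 1.46x HBM3's 819.2 GB/s) with the 20-25% per-bit target; it ignores static and refresh power, so it is an illustration of the scaling rather than a power estimate:

```python
def relative_power(bandwidth_ratio: float, energy_per_bit_reduction: float) -> float:
    """First-order power ratio: P = bandwidth * energy/bit, so the two ratios multiply."""
    return bandwidth_ratio * (1 - energy_per_bit_reduction)


bw_ratio = 1200.0 / 819.2  # HBM4 projection over HBM3 peak bandwidth
for reduction in (0.20, 0.25):
    ratio = relative_power(bw_ratio, reduction)
    print(f"{reduction:.0%} less energy/bit -> {ratio:.2f}x interface power")
```

Even at the optimistic end of the target, interface power still rises by roughly 10-17%, which is why the thermal side of 2.5D integration cannot rely on per-bit efficiency alone.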

Signal integrity improvements are another key performance target for HBM4, with enhanced I/O circuitry designed to maintain reliable data transmission at higher speeds. This includes advanced equalization techniques and improved channel designs to mitigate signal degradation across the interposer.

Thermal performance targets for HBM4 include maintaining junction temperatures below critical thresholds even at peak bandwidth operation. This requires innovations in materials science and thermal interface materials to efficiently dissipate heat from the densely packed memory dies.

The industry anticipates HBM4 to be commercially available by late 2024 to early 2025, with early adoption in high-end AI accelerators and supercomputing applications before broader market penetration. These performance targets position HBM4 as a critical enabling technology for the next generation of computing systems requiring extreme memory bandwidth within strict power envelopes.

Market Demand for High-Bandwidth Memory Solutions

The demand for High-Bandwidth Memory (HBM) solutions has experienced exponential growth in recent years, primarily driven by data-intensive applications that require massive memory bandwidth and capacity. The emergence of artificial intelligence, machine learning, high-performance computing, and advanced graphics processing has created unprecedented requirements for memory systems that can deliver exceptional bandwidth while maintaining reasonable power consumption profiles.

Market research indicates that the global HBM market is projected to grow at a compound annual growth rate of over 30% through 2028. This surge is particularly evident in data centers and cloud computing environments, where AI training and inference workloads demand increasingly higher memory bandwidth to process complex neural networks and large datasets efficiently.

Enterprise customers are specifically seeking memory solutions that address the memory wall: the growing disparity between processor and memory performance. HBM technology, with its 3D stacked architecture and wide interface, has emerged as the preferred solution for high-performance applications where memory bandwidth is a critical bottleneck.

The automotive and edge computing sectors are also showing increased interest in HBM solutions as advanced driver-assistance systems (ADAS) and autonomous driving technologies require real-time processing of sensor data with minimal latency. These applications demand memory systems that can deliver high bandwidth while operating within strict power and thermal constraints.

Power efficiency has become a paramount concern for data center operators, with memory subsystems accounting for approximately 25-40% of server power consumption. This has created strong market demand for next-generation HBM solutions like HBM4 that can deliver higher bandwidth while improving power efficiency metrics such as pJ/bit.

Semiconductor manufacturers and system integrators are increasingly adopting 2.5D integration technologies to overcome the limitations of traditional memory interfaces. The ability to place HBM stacks in close proximity to processing elements using silicon interposers or advanced packaging technologies has become a key differentiator in high-performance computing products.

Market analysis reveals that customers are willing to pay premium prices for memory solutions that can demonstrate tangible improvements in system-level performance and energy efficiency. This value proposition is particularly compelling for hyperscale cloud providers and AI research organizations where computational capabilities directly translate to competitive advantages and operational cost savings.

Power Consumption Challenges in HBM4 Technology

Power consumption is a critical challenge for HBM4 as memory bandwidth demands continue to escalate in high-performance computing applications. The latest generation of High Bandwidth Memory faces unprecedented power constraints because of its larger number of memory channels, higher operating frequencies, and denser integration within 2.5D packages. Current estimates indicate that an HBM4 stack may consume 12-15 W during peak operation, a 30-40% increase over HBM3E.
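
As a consistency check, the 12-15 W figure can be converted into an implied average energy per bit. This minimal sketch assumes the stack runs continuously at the 1.2 TB/s HBM4 projection and attributes all stack power to data transfer, so the result is an all-in average rather than a pure I/O figure:

```python
def energy_per_bit_pj(power_w: float, bandwidth_gb_s: float) -> float:
    """Average energy per transferred bit in picojoules: P / (bytes/s * 8 bits)."""
    bits_per_second = bandwidth_gb_s * 1e9 * 8
    return power_w / bits_per_second * 1e12


# Assumption: peak power is drawn while streaming at the 1.2 TB/s projection.
for watts in (12.0, 15.0):
    print(f"{watts:.0f} W at 1.2 TB/s -> {energy_per_bit_pj(watts, 1200.0):.2f} pJ/bit")
```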

The primary power consumption challenges stem from several interconnected factors. Signal integrity issues arise as data rates approach 8-10 Gbps per pin, requiring more aggressive equalization techniques that inherently consume additional power. The increased number of TSVs (Through-Silicon Vias) in HBM4 stacks also contributes to higher leakage currents and thermal density concerns, particularly at the interface between the memory dies and the silicon interposer.

Thermal management presents another significant challenge. The compact nature of 2.5D integration creates heat concentration zones that can lead to performance throttling and reliability concerns. With HBM4 stacks positioned in close proximity to high-performance processors or AI accelerators, the combined thermal envelope becomes increasingly difficult to manage using conventional cooling solutions. Thermal simulations suggest that junction temperatures could exceed 105°C under sustained workloads without advanced cooling strategies.
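
A first-order way to see why proximity to the processor matters is a superposition thermal-resistance model: junction temperature is ambient plus the stack's self-heating plus heat coupled in from the neighbouring die. The 45 °C ambient, 3.0 °C/W self resistance, 700 W accelerator, and 0.03 °C/W coupling resistance below are hypothetical values chosen only to illustrate the arithmetic; only the 15 W stack figure and the 105 °C threshold come from the text.

```python
def junction_temp_c(ambient_c: float, stack_power_w: float, theta_stack_c_per_w: float,
                    neighbor_power_w: float = 0.0, theta_coupling_c_per_w: float = 0.0) -> float:
    """Steady-state junction temperature: self-heating plus heat coupled from a
    neighbouring die through a mutual thermal resistance (linear superposition)."""
    return (ambient_c
            + stack_power_w * theta_stack_c_per_w
            + neighbor_power_w * theta_coupling_c_per_w)


# Hypothetical inputs, for illustration only.
alone = junction_temp_c(45.0, 15.0, 3.0)
beside_accelerator = junction_temp_c(45.0, 15.0, 3.0, 700.0, 0.03)
for label, tj in (("stack alone", alone), ("next to accelerator", beside_accelerator)):
    print(f"{label}: Tj ~ {tj:.1f} C, {'over' if tj > 105 else 'within'} 105 C")
```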

Power delivery network (PDN) design faces substantial constraints as well. The increased current requirements for HBM4 necessitate more sophisticated power distribution architectures within the interposer. Voltage droop and power integrity issues become more pronounced as switching activities increase, potentially leading to timing violations and data corruption if not properly addressed. Industry analysis indicates that PDN-related challenges could account for up to 25% of the overall power efficiency losses in HBM4 implementations.
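
A common starting point for sizing a PDN is the target-impedance rule: Z_target = (supply voltage * allowed ripple fraction) / transient current step, and the network must stay below this impedance across the frequency range of the load transients. The 1.1 V rail, 5% droop budget, and 8 A step below are hypothetical values used only to show the arithmetic:

```python
def target_impedance_mohm(supply_v: float, ripple_fraction: float, step_current_a: float) -> float:
    """Maximum PDN impedance (milliohms) that keeps droop within the ripple budget."""
    return supply_v * ripple_fraction / step_current_a * 1e3


# Hypothetical rail: 1.1 V supply, 5% allowed droop, 8 A transient current step.
print(f"Z_target = {target_impedance_mohm(1.1, 0.05, 8.0):.2f} mOhm")
# Doubling the current step halves the allowed impedance.
print(f"Z_target = {target_impedance_mohm(1.1, 0.05, 16.0):.2f} mOhm")
```

Because the allowed impedance is inversely proportional to the current step, the higher current draw of HBM4 directly tightens the interposer PDN budget.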

Dynamic power management capabilities in HBM4 face implementation hurdles due to the complex interplay between memory controllers, silicon interposers, and the stacked memory dies. While previous HBM generations incorporated basic power-saving states, HBM4 requires more granular control mechanisms that can rapidly transition between performance and power-saving modes without introducing latency penalties. The industry is exploring adaptive voltage scaling techniques and fine-grained power gating, though these approaches introduce additional design complexity.
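
The trade-off between power savings and latency penalties is often framed as a break-even time: entering a low-power state pays off only if the idle period exceeds the transition energy divided by the power saved, and only if the wake-up delay fits the latency budget. This is a minimal sketch of such a policy for a hypothetical controller; the state names follow generic DRAM terminology, and all power, energy, and latency figures are illustrative assumptions, not HBM4 specification values.

```python
from dataclasses import dataclass


@dataclass
class PowerState:
    name: str
    power_w: float              # power while resident in the state
    transition_energy_j: float  # energy spent entering and exiting the state
    exit_latency_ns: float      # wake-up delay seen by the next request


def break_even_s(active_idle_w: float, state: PowerState) -> float:
    """Idle time after which entering `state` saves net energy."""
    saved_w = active_idle_w - state.power_w
    return float("inf") if saved_w <= 0 else state.transition_energy_j / saved_w


def choose_state(predicted_idle_s, latency_budget_ns, active_idle_w, states):
    """Pick the lowest-power state that breaks even and meets the latency budget."""
    best = None
    for s in states:
        if s.exit_latency_ns > latency_budget_ns:
            continue
        if predicted_idle_s < break_even_s(active_idle_w, s):
            continue
        if best is None or s.power_w < best.power_w:
            best = s
    return best.name if best else "stay-active"


# Hypothetical numbers for illustration only.
STATES = [
    PowerState("power-down", 2.0, 2e-6, 20.0),
    PowerState("self-refresh", 0.5, 50e-6, 500.0),
]
print(choose_state(5e-6, 100.0, 4.0, STATES))   # -> power-down
print(choose_state(1e-3, 1000.0, 4.0, STATES))  # -> self-refresh
```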

The increased I/O power consumption is perhaps the most significant challenge, because it grows faster than linearly with data rate: switching power rises in proportion to the data rate, and higher rates also call for stronger equalization and sometimes larger signal swings, whose cost grows with the square of the voltage. Early prototypes suggest that I/O interfaces could account for up to 60% of the total HBM4 power budget, creating a substantial bottleneck for overall system efficiency. This has prompted exploration of alternative signaling methods, including reduced-swing differential signaling and advanced SerDes architectures optimized for the constraints of 2.5D integration.
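
A first-order model for a full-swing, capacitively loaded data line makes this scaling concrete: each rising transition draws C * V^2 from the supply, so P = a * C * V^2 * R, where a is the fraction of bit periods with a 0-to-1 transition (about 0.25 for random data) and R is the per-pin data rate. The 1 pF load, 0.4 V swing, and the idea that swing rises 10% alongside data rate are hypothetical; terminated and equalized links add terms this sketch omits.

```python
def io_power_w(rate_bps: float, cap_f: float, swing_v: float,
               rising_fraction: float = 0.25, lanes: int = 1) -> float:
    """Switching power of capacitive data lines: a * C * V^2 * R per lane."""
    return rising_fraction * cap_f * swing_v ** 2 * rate_bps * lanes


# Hypothetical per-lane load (1 pF) and swing (0.4 V), for illustration only.
base = io_power_w(4e9, 1e-12, 0.4)
faster = io_power_w(8e9, 1e-12, 0.4)          # double the rate, same swing
faster_hotter = io_power_w(8e9, 1e-12, 0.44)  # double the rate, 10% more swing
print(f"2x rate, same swing: {faster / base:.2f}x power")
print(f"2x rate, +10% swing: {faster_hotter / base:.2f}x power")
```

At fixed swing, power tracks data rate linearly; any accompanying increase in swing or equalization effort pushes it above linear.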

Current 2.5D Integration Solutions for HBM4

01 Power management techniques for HBM4 memory systems

Various power management techniques are implemented in HBM4 memory systems to optimize power consumption. These include dynamic voltage and frequency scaling, power gating unused components, and implementing low-power states during periods of inactivity. Advanced power controllers monitor system usage patterns and adjust power delivery accordingly, helping to balance performance requirements with energy efficiency goals in high-bandwidth memory applications.

- Thermal management solutions for HBM4: Thermal management is crucial for controlling power consumption in HBM4 systems. Solutions include integrated temperature sensors, adaptive cooling mechanisms, and thermal throttling algorithms that adjust performance based on temperature thresholds. These approaches help maintain optimal operating temperatures while preventing excessive power draw that occurs when memory components overheat.

- HBM4 architecture optimizations for power efficiency: The HBM4 architecture incorporates specific design optimizations to enhance power efficiency. These include improved stacking technologies, reduced interconnect distances, more efficient refresh mechanisms, and optimized memory controllers. The architecture also features power-aware data transfer protocols and intelligent power distribution across memory banks to minimize overall energy consumption.

- Power-aware memory access and data management: Power-aware memory access strategies are implemented in HBM4 systems to reduce energy consumption. These include data compression techniques, intelligent caching algorithms, and optimized memory access patterns. By minimizing unnecessary data transfers and prioritizing energy-efficient memory operations, these approaches significantly reduce the power requirements of HBM4 memory systems during operation.

- System-level integration and power optimization for HBM4: System-level approaches to HBM4 power optimization focus on the integration of memory with other system components. These include coordinated power management between processors and memory, adaptive workload distribution, and intelligent scheduling algorithms. Power budgeting techniques allocate energy resources dynamically across the system based on application requirements, ensuring optimal performance while maintaining power efficiency.
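
Dynamic voltage and frequency scaling, mentioned at the start of this section, can be sketched as picking the slowest operating point that still covers the bandwidth demand, with dynamic power scaling roughly as V^2 * f. The operating-point table and the 2048-bit interface width below are assumptions for illustration, not HBM4 specification values, and the sketch ignores static power and transition costs.

```python
# Hypothetical (per-pin data rate in Gb/s, supply voltage in V) operating points.
OPERATING_POINTS = [(4.0, 0.95), (6.0, 1.00), (8.0, 1.05)]
WIDTH_BITS = 2048  # assumed interface width, for illustration


def pick_point(demand_gb_s: float):
    """Return the slowest operating point whose peak bandwidth covers the demand."""
    for rate, volts in sorted(OPERATING_POINTS):
        if WIDTH_BITS * rate / 8 >= demand_gb_s:
            return rate, volts
    return max(OPERATING_POINTS)  # demand exceeds capability: run at the top point


def relative_dynamic_power(point, reference=max(OPERATING_POINTS)) -> float:
    """Dynamic power relative to the reference point, using P ~ V^2 * f."""
    (rate, volts), (ref_rate, ref_volts) = point, reference
    return (volts / ref_volts) ** 2 * (rate / ref_rate)


for demand in (900.0, 1400.0, 1900.0):
    point = pick_point(demand)
    print(f"{demand:.0f} GB/s -> {point}, {relative_dynamic_power(point):.2f}x dynamic power")
```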

02 Thermal management solutions for HBM4

Thermal management is critical for controlling power consumption in HBM4 memory systems. Solutions include advanced cooling systems, temperature sensors for real-time monitoring, and thermal throttling mechanisms that adjust performance to prevent overheating. These approaches help maintain optimal operating temperatures, which directly impacts power efficiency and prevents performance degradation due to thermal issues in high-density memory configurations.

03 Power-efficient HBM4 interface designs

Interface designs for HBM4 focus on reducing power consumption while maintaining high bandwidth. These include optimized signaling techniques, reduced voltage swing interfaces, and energy-efficient data transfer protocols. Advanced I/O circuits implement features like dynamic termination and adaptive equalization that adjust power usage based on transmission requirements, significantly reducing the energy cost per bit transferred across the memory interface.

04 System-level power optimization for HBM4 integration

System-level approaches to HBM4 power optimization include intelligent memory controllers, workload-aware scheduling algorithms, and memory access pattern optimization. These techniques minimize unnecessary memory operations, reduce data movement, and coordinate memory access with processing activities. Integration strategies also focus on optimizing the physical placement of HBM4 stacks relative to processors to minimize interconnect power losses and improve overall system energy efficiency.

05 Advanced power reduction techniques in HBM4 architecture

HBM4 architectures incorporate several advanced power reduction techniques including fine-grained refresh control, partial array self-refresh, and bank-group power management. These architectural innovations allow for more precise control over which portions of memory consume power at any given time. Additionally, intelligent prefetching and caching mechanisms reduce redundant memory accesses, while compression techniques minimize data movement, all contributing to significant power savings in high-performance computing applications.
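
Partial array self-refresh saves power by refreshing only the portion of the array whose contents must be retained. To first order, self-refresh power then scales with the retained fraction plus an overhead that does not shrink with it. The 200 mW full-array figure and 40 mW fixed overhead below are hypothetical:

```python
def self_refresh_power_mw(full_array_mw: float, retained_fraction: float,
                          fixed_overhead_mw: float) -> float:
    """First-order self-refresh power when only `retained_fraction` of the array is refreshed."""
    if not 0.0 <= retained_fraction <= 1.0:
        raise ValueError("retained_fraction must be between 0 and 1")
    proportional_mw = full_array_mw - fixed_overhead_mw
    return fixed_overhead_mw + proportional_mw * retained_fraction


# Hypothetical stack: 200 mW full-array self-refresh, 40 mW independent of array size.
for fraction in (1.0, 0.5, 0.25):
    print(f"retain {fraction:.0%}: {self_refresh_power_mw(200.0, fraction, 40.0):.0f} mW")
```

The fixed overhead is why savings flatten out as the retained fraction shrinks.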

Key Semiconductor Players in HBM4 Development

The market for HBM4 solutions that address power consumption and design constraints in 2.5D integration is in an early growth phase, with an estimated size of $3-5 billion and projected annual growth of 25-30%. The underlying technology is maturing but still faces significant power and thermal challenges. Leading memory manufacturers include SK hynix, Samsung, and Micron, which are developing HBM4 solutions with improved power efficiency, while Intel, IBM, and GLOBALFOUNDRIES are focusing on 2.5D integration platforms. Chinese entities such as SMIC and ChangXin Memory are rapidly advancing their capabilities, though they still trail established players. Research institutions including Imec and Georgia Tech are contributing innovations in thermal management and power optimization for next-generation HBM implementations.

Intel Corp.

Technical Solution: Intel's approach to HBM4 power consumption in 2.5D integration focuses on their EMIB (Embedded Multi-die Interconnect Bridge) technology, which provides shorter interconnects between HBM stacks and processing dies. Their latest HBM4 implementation incorporates advanced power management techniques including dynamic voltage and frequency scaling specifically optimized for memory operations. Intel has developed proprietary thermal solutions that address the concentrated heat generation in 2.5D packages with HBM4, utilizing a combination of integrated silicon cooling bridges and phase-change thermal interface materials. Their design also implements fine-grained power gating at the bank level, allowing unused portions of the HBM4 stack to be completely powered down when not in use, reducing static power consumption by approximately 30% compared to previous generations.

Strengths: Intel's EMIB technology offers lower manufacturing costs compared to silicon interposers while maintaining high bandwidth. Their thermal management solutions are particularly effective for high-performance computing applications. Weaknesses: The proprietary nature of their interconnect technology creates ecosystem limitations and potential vendor lock-in issues.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung's HBM4 power optimization strategy centers on their advanced process node implementation (likely 4nm or below) specifically tailored for memory applications. Their HBM4 design incorporates innovative low-power signaling techniques that reduce I/O power consumption by approximately 25% compared to HBM3. Samsung has developed a comprehensive thermal management approach for their 2.5D packages that includes specialized thermal interface materials with conductivity exceeding 20 W/m·K and optimized microbump designs that improve heat dissipation pathways. Their HBM4 architecture implements adaptive refresh rates based on temperature and usage patterns, significantly reducing refresh power which typically accounts for 15-20% of DRAM power consumption. Samsung has also pioneered advanced TSV (Through-Silicon Via) designs with reduced capacitance and resistance, lowering the power required for signal transmission between HBM4 die layers.
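
The payoff of temperature-adaptive refresh can be bounded with the 15-20% refresh share quoted above: total savings equal that share times the fractional reduction in refresh power. This sketch assumes, purely for illustration, a DDR-style convention in which the refresh interval is halved above 85 °C, plus a hypothetical relaxed interval below 45 °C; the 3.9 µs base interval and both thresholds are assumptions, not Samsung's disclosed scheme.

```python
BASE_INTERVAL_US = 3.9  # hypothetical nominal interval between refresh commands


def refresh_interval_us(temp_c: float) -> float:
    """Hypothetical temperature-adaptive policy: tighten refresh when hot, relax when cool."""
    if temp_c > 85.0:
        return BASE_INTERVAL_US / 2  # hot: cells leak faster, refresh twice as often
    if temp_c < 45.0:
        return BASE_INTERVAL_US * 2  # cool: assumed extra retention margin
    return BASE_INTERVAL_US


def total_saving(refresh_share: float, interval_us: float, baseline_interval_us: float) -> float:
    """Fraction of total DRAM power saved versus refreshing at the baseline interval.
    Refresh power is taken as inversely proportional to the refresh interval."""
    return refresh_share * (1 - baseline_interval_us / interval_us)


# Baseline: a fixed policy that always refreshes at the hot-case interval.
worst_case = refresh_interval_us(90.0)
for share in (0.15, 0.20):
    saving = total_saving(share, refresh_interval_us(60.0), worst_case)
    print(f"refresh share {share:.0%}: {saving:.1%} of DRAM power saved at 60 C")
```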

Strengths: Samsung's vertical integration as both a memory manufacturer and system integrator gives them unique control over the entire HBM4 supply chain and design optimization. Their process technology leadership enables power-efficient implementations. Weaknesses: Their solutions may require specialized cooling systems that increase overall system complexity and cost.

Critical Patents in HBM4 Power Efficiency

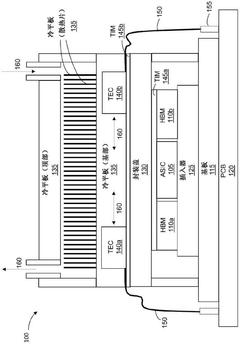

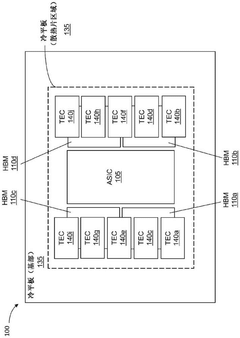

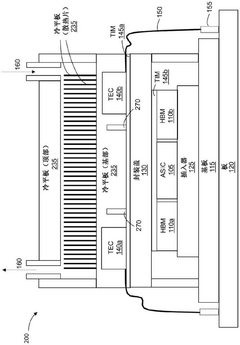

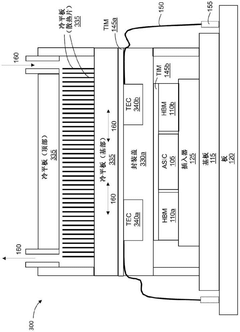

Thermoelectric Coolers for Spot Cooling of 2.5D/3D IC Packages

Patent: CN112670184B (Active)

Innovation

- Uses a hybrid cooling solution that combines a thermoelectric cooler (TEC) with a conventional heat sink or cold plate. TEC components are placed at fixed locations in the package over low-power, heat-sensitive components, providing supplementary cooling that lowers their temperature, while improving the efficiency of the thermoelectric coolers in areas of low thermal sensitivity and reducing the thermal performance required of the cold plate.
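
Why TECs are applied selectively follows from the standard thermoelectric energy balance: heat pumped from the cold side is Q_c = S*I*T_c - 0.5*I^2*R - K*dT, electrical input is P = S*I*dT + I^2*R, and the hot side must reject Q_c + P. The module parameters below (S = 0.05 V/K, R = 2 ohm, K = 0.5 W/K, 2 A, 330 K cold side) are hypothetical values chosen for illustration:

```python
def tec_performance(current_a, t_cold_k, delta_t_k,
                    seebeck_v_per_k, resistance_ohm, conductance_w_per_k):
    """Return (heat pumped at cold side W, electrical input W, coefficient of performance)."""
    q_cold = (seebeck_v_per_k * current_a * t_cold_k
              - 0.5 * current_a ** 2 * resistance_ohm
              - conductance_w_per_k * delta_t_k)
    p_in = seebeck_v_per_k * current_a * delta_t_k + current_a ** 2 * resistance_ohm
    return q_cold, p_in, q_cold / p_in


MODULE = dict(seebeck_v_per_k=0.05, resistance_ohm=2.0, conductance_w_per_k=0.5)  # hypothetical
for dt in (10.0, 30.0):
    q, p, cop = tec_performance(2.0, 330.0, dt, **MODULE)
    print(f"dT={dt:.0f} K: pumps {q:.1f} W for {p:.1f} W input, COP {cop:.2f}")
```

Efficiency falls steeply as the temperature difference grows, and every watt of TEC input becomes extra heat for the cold plate, which is why the patent confines TECs to targeted spots rather than the whole package.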

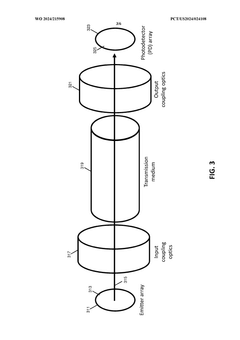

Optically interconnected high bandwidth memory architectures

Patent: WO2024215908A1

Innovation

- Implements optically interconnected high bandwidth memory architectures using multi-chip packages with optical interfaces and transmission media, such as optical fibers. The longer interconnects this enables between the System-on-Chip (SoC) and HBM allow increased memory capacity and reduce thermal stress by physically separating the HBM from the hot SoC.

Thermal Management Strategies for 2.5D HBM4 Integration

Thermal management has emerged as a critical challenge in 2.5D integration of HBM4 memory, primarily due to the increased power density and thermal constraints inherent in these advanced packaging technologies. As HBM4 pushes performance boundaries with higher bandwidth capabilities, the resulting thermal issues require sophisticated management strategies to maintain system reliability and performance.

Passive cooling techniques represent the first line of defense in thermal management for 2.5D HBM4 integration. Advanced thermal interface materials (TIMs) with enhanced thermal conductivity are being developed specifically for the unique requirements of 2.5D packages. These materials aim to optimize heat transfer between the HBM4 stacks, silicon interposer, and heat spreader. Additionally, innovative heat spreader designs incorporating vapor chambers or graphene-based materials show promising results in distributing heat more efficiently across the package.
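
The value of higher-conductivity TIMs follows from one-dimensional conduction through a uniform layer, R = t / (k * A). Here 20 W/m·K echoes the TIM conductivity cited earlier for Samsung's packages and 5 W/m·K stands in for a conventional material; the 50 µm bond line and 11 mm x 11 mm footprint are hypothetical, and 15 W is the upper per-stack figure from the text.

```python
def conduction_resistance_k_per_w(thickness_m: float, conductivity_w_mk: float,
                                  area_m2: float) -> float:
    """1-D conduction resistance of a uniform layer: R = t / (k * A)."""
    return thickness_m / (conductivity_w_mk * area_m2)


AREA_M2 = 11e-3 * 11e-3  # hypothetical ~11 mm x 11 mm stack footprint
THICKNESS_M = 50e-6      # hypothetical 50 um bond line
POWER_W = 15.0           # upper end of the per-stack figure quoted in the text

for k in (5.0, 20.0):
    r = conduction_resistance_k_per_w(THICKNESS_M, k, AREA_M2)
    print(f"k={k:.0f} W/m-K: R={r:.3f} K/W, temperature drop across TIM {r * POWER_W:.2f} K")
```

Quadrupling conductivity cuts the TIM's share of the thermal budget by the same factor; in a real package this layer sits in series with the die stack, interposer, and heat spreader.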

Active cooling solutions complement passive approaches by providing dynamic thermal management capabilities. Microfluidic cooling channels integrated directly into the interposer or substrate offer localized cooling precisely where heat generation is most concentrated. These systems can be particularly effective for HBM4 implementations where power density exceeds 150W/cm². Thermoelectric coolers (TECs) represent another active solution being explored, offering the ability to transfer heat against the natural temperature gradient when necessary.

System-level thermal management strategies address the holistic thermal ecosystem. Advanced thermal modeling and simulation tools now incorporate machine learning algorithms to predict hotspots and optimize cooling solutions during the design phase. These tools enable designers to evaluate various thermal management approaches before physical implementation, significantly reducing development cycles and costs.

Dynamic thermal management techniques at the system level include adaptive power management algorithms that intelligently throttle memory operations based on thermal conditions. These approaches often leverage embedded thermal sensors within the HBM4 stack to provide real-time temperature data, enabling precise control of memory operations to prevent thermal emergencies while maximizing performance.
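
A minimal sketch of sensor-driven throttling with hysteresis, so that the controller does not oscillate around a single threshold: bandwidth steps down when the reported temperature reaches an upper limit and steps back up only after it falls below a lower one. The thresholds, bandwidth levels, and the sequence of readings are hypothetical; a real controller would use the stack's own temperature reporting and vendor-specified limits.

```python
class ThermalThrottle:
    """Step memory bandwidth down when hot; step up only after cooling past a lower threshold."""

    def __init__(self, levels=(1.0, 0.75, 0.5), throttle_c=95.0, release_c=88.0):
        self.levels = levels          # fraction of peak bandwidth allowed at each step
        self.throttle_c = throttle_c  # step down at or above this temperature
        self.release_c = release_c    # step up only at or below this temperature
        self.step = 0

    def update(self, temp_c: float) -> float:
        """Consume one sensor reading and return the allowed bandwidth fraction."""
        if temp_c >= self.throttle_c and self.step < len(self.levels) - 1:
            self.step += 1
        elif temp_c <= self.release_c and self.step > 0:
            self.step -= 1
        return self.levels[self.step]


throttle = ThermalThrottle()
readings = [80, 96, 97, 92, 90, 87, 85]  # hypothetical sensor samples in C
print([throttle.update(t) for t in readings])  # -> [1.0, 0.75, 0.5, 0.5, 0.5, 0.75, 1.0]
```

Readings between the two thresholds leave the current level unchanged, which is the hysteresis band.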

Emerging research directions in thermal management for HBM4 integration include phase-change cooling materials that can absorb significant thermal energy during state transitions, and diamond-based heat spreaders that leverage diamond's exceptional thermal conductivity. Additionally, 3D-printed microstructures with optimized geometries for heat dissipation are showing promise in laboratory settings, potentially offering customized cooling solutions for specific HBM4 implementation scenarios.

The industry is increasingly adopting standardized thermal metrics and testing methodologies specifically designed for 2.5D integrated systems with HBM4. These standards facilitate meaningful comparisons between different thermal management approaches and help establish thermal design guidelines for next-generation memory subsystems.

Supply Chain Considerations for HBM4 Implementation

The implementation of HBM4 in 2.5D integration environments requires careful attention to supply chain dynamics. The HBM4 ecosystem involves multiple specialized vendors across semiconductor manufacturing, packaging, and testing, creating complex interdependencies that affect both how power consumption is managed and how closely design constraints can be met.

Memory stack production for HBM4 requires leading-edge process capabilities: advanced DRAM nodes for the stacked core dies and, increasingly, advanced foundry logic processes for the base die. Only a handful of manufacturers globally possess the necessary technology, and this limited supplier base creates potential bottlenecks, especially as demand from AI accelerator and high-performance computing applications increases. The specialized nature of Through-Silicon Via (TSV) technology further restricts manufacturing options, with only select facilities equipped for high-density interconnect production.

Interposer manufacturing represents another critical supply chain consideration, as silicon or organic interposers must accommodate the increased I/O density and power delivery requirements of HBM4. The precision required for 2.5D integration creates yield challenges that can significantly impact component availability and cost structures. Current manufacturing capacity limitations may lead to extended lead times of 20-30 weeks for custom HBM4 implementations.
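Why precision assembly strains availability and cost can be shown with compound yield: when every part or process step must succeed independently, the overall yield is the product of the individual yields. The sketch below is illustrative only. The die, bond, interposer, and attach yields, the 12-high stack, and the six-stack package are hypothetical assumptions, not vendor data. It also assumes stacks are fully tested before assembly (known-good stacks), so stack yield determines how many stacks must be built rather than package fallout.

```python
from math import prod


def compound_yield(*yields: float) -> float:
    """Probability that an assembly works when every independent part
    (or process step) must succeed: the product of the individual yields."""
    for y in yields:
        if not 0.0 <= y <= 1.0:
            raise ValueError(f"yield out of range: {y}")
    return prod(yields)


# Hypothetical inputs, not vendor data.
DIES_PER_STACK = 12        # 12-high stack; base die ignored for simplicity
CORE_DIE_YIELD = 0.99      # per stacked DRAM die
BOND_STEP_YIELD = 0.995    # per die-stacking bond step
STACKS_PER_PACKAGE = 6
INTERPOSER_YIELD = 0.95
STACK_ATTACH_YIELD = 0.99  # per stack attached to the interposer

stack_yield = compound_yield(*([CORE_DIE_YIELD] * DIES_PER_STACK),
                             *([BOND_STEP_YIELD] * DIES_PER_STACK))

# Known-good stacks assumed: package yield covers interposer + attach steps.
package_yield = compound_yield(INTERPOSER_YIELD,
                               *([STACK_ATTACH_YIELD] * STACKS_PER_PACKAGE))

print(f"stack yield:                 {stack_yield:.3f}")
print(f"stacks built per good stack: {1 / stack_yield:.2f}")
print(f"package yield:               {package_yield:.3f}")
```

Even with every individual step at 95% or better, the products fall noticeably, and each additional stack or bonding step multiplies in another factor.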

Testing infrastructure for HBM4 components presents additional supply chain complexities. The high-speed interfaces operating at 8+ Gbps require specialized testing equipment that may not be widely available across the semiconductor testing ecosystem. This testing bottleneck can impact quality assurance processes and potentially compromise power efficiency if thermal or electrical characteristics are not properly validated.

Substrate material availability for advanced packaging solutions represents a growing concern, particularly for materials engineered to manage the thermal challenges inherent in high-density memory integration. The specialized substrate materials needed for optimal thermal performance may face supply constraints as multiple industries compete for similar resources.

Geopolitical factors increasingly influence the HBM4 supply chain, with trade restrictions and technology export controls potentially limiting access to critical manufacturing technologies or components. Organizations implementing HBM4 must develop contingency plans addressing potential supply disruptions while maintaining design performance targets.

Vertical integration strategies are emerging among major technology companies seeking to secure HBM4 supply chains, with some firms investing in memory manufacturing capabilities or forming strategic partnerships with memory suppliers. These arrangements may provide competitive advantages in managing both supply availability and power optimization capabilities for 2.5D integrated systems.
