Material Parameters Impacting Neuromorphic Computing Performance

OCT 27, 2025 | 9 MIN READ

Neuromorphic Computing Material Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the human brain's neural networks to create more efficient and adaptive computing systems. The evolution of this field has been closely tied to advancements in material science, as the performance of neuromorphic systems is fundamentally dependent on the physical properties of their constituent materials.

The journey of neuromorphic computing materials began in the late 1980s with traditional CMOS technology, which provided the foundation for early neural network implementations. However, these systems were limited by the von Neumann bottleneck, where data transfer between memory and processing units created significant inefficiencies. This limitation drove researchers to explore alternative materials that could better mimic the brain's parallel processing capabilities.

By the early 2000s, the field witnessed the emergence of memristive materials, which could maintain memory states without a continuous power supply. Materials such as titanium dioxide, hafnium oxide, and other metal oxides demonstrated the ability to modulate their resistance based on previous electrical stimuli, effectively mimicking synaptic plasticity. These oxides enabled resistive random-access memory (RRAM), which, together with phase-change memory (PCM) based on chalcogenide alloys rather than oxides, became a crucial building block for neuromorphic architectures.

The 2010s marked a significant acceleration in material innovation, with the introduction of spin-based materials for magnetic tunnel junctions and spintronic devices. These materials offered advantages in terms of energy efficiency and non-volatility, critical parameters for large-scale neuromorphic implementations. Concurrently, two-dimensional materials like graphene and transition metal dichalcogenides emerged as promising candidates due to their unique electronic properties and scalability potential.

Recent years have seen growing interest in organic and biomimetic materials that can more closely replicate the biological aspects of neural computation. Conducting polymers, protein-based memristors, and even living neural cultures integrated with electronic systems represent the frontier of this biomimetic approach.

The primary objective of current neuromorphic material research is to develop substrates that simultaneously optimize multiple parameters: energy efficiency, switching speed, endurance, retention time, and scalability. Additionally, materials must demonstrate reliable analog behavior to accurately model synaptic weight changes, a crucial aspect of neural learning algorithms.

Looking forward, the field aims to achieve materials that can support self-organizing architectures, enabling systems that can reconfigure their connectivity patterns based on input data, similar to the brain's neuroplasticity. The ultimate goal remains the creation of materials that can facilitate human-like cognitive capabilities while maintaining energy efficiency orders of magnitude better than conventional computing architectures.

Market Analysis for Brain-Inspired Computing Solutions

The neuromorphic computing market is experiencing significant growth, driven by increasing demand for AI applications that require efficient processing of complex neural networks. Current market estimates value the global neuromorphic computing sector at approximately $2.5 billion in 2023, with projections indicating a compound annual growth rate of 24% through 2030. This growth trajectory is supported by substantial investments from both private and public sectors, with government initiatives in the US, EU, and China allocating dedicated funding for brain-inspired computing research.
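
The growth figures above can be checked with the standard compound-growth formula, value_T = value_0 · (1 + r)^T. The sketch below simply applies that formula to the article's own estimates ($2.5 billion in 2023, 24% CAGR through 2030); it is arithmetic on the quoted numbers rather than an independent forecast, and the function name is illustrative.

```python
def project_market(base_value: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return base_value * (1.0 + cagr) ** years

# Article's estimates: ~$2.5B in 2023, 24% CAGR through 2030 (7 years of growth).
value_2030 = project_market(2.5, 0.24, 2030 - 2023)
print(f"Implied 2030 market size: ${value_2030:.1f}B")
```

Taken at face value, these two inputs imply a 2030 market of roughly $11 billion.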

The market for brain-inspired computing solutions can be segmented into hardware components, software frameworks, and integrated systems. Hardware currently dominates revenue generation, accounting for roughly 65% of market share, with memristive devices and phase-change materials emerging as particularly promising material technologies. Software solutions, while representing a smaller market segment at present, are expected to grow at a faster rate as neuromorphic programming paradigms mature.

From an application perspective, the market shows diverse adoption patterns across industries. Healthcare applications, particularly in medical imaging and neural signal processing, represent the largest vertical market at approximately 28% of total demand. Autonomous systems, including robotics and self-driving vehicles, follow closely at 24%, while security and surveillance applications account for 18% of market demand. Edge computing applications are emerging as the fastest-growing segment due to increasing requirements for real-time processing with minimal power consumption.

Regional analysis reveals that North America currently leads the market with approximately 42% share, followed by Europe (27%) and Asia-Pacific (24%). However, the Asia-Pacific region is demonstrating the highest growth rate, driven by substantial investments in semiconductor manufacturing and neuromorphic research in countries like China, South Korea, and Japan.

Customer demand patterns indicate a growing preference for energy-efficient computing solutions that can process neural network operations with minimal power consumption. This trend is particularly evident in mobile and IoT applications, where battery life and thermal management are critical constraints. Enterprise customers are increasingly seeking neuromorphic solutions that can be integrated with existing computing infrastructure, highlighting the importance of compatibility and standardization in market adoption.

Market barriers include high development costs, lack of standardized programming models, and limited understanding of the optimal material parameters for specific applications. The complexity of translating traditional computing paradigms to neuromorphic architectures remains a significant challenge for widespread commercial adoption, creating opportunities for companies that can effectively bridge this knowledge gap.

Current Material Challenges in Neuromorphic Systems

Neuromorphic computing systems face significant material-related challenges that currently limit their performance and widespread adoption. The most pressing issue is the development of suitable materials for memristive devices that can accurately mimic synaptic behavior. Traditional CMOS-based implementations struggle to achieve the energy efficiency and density required for brain-like computing, while emerging materials often lack stability and reliability under operational conditions.

Material uniformity presents a major obstacle, as device-to-device variations in resistive switching materials lead to unpredictable behavior across neuromorphic arrays. This variability undermines the system's ability to maintain consistent weight values, which is essential for reliable neural network operation. Additionally, many promising materials exhibit limited endurance, with performance degradation occurring after relatively few programming cycles compared to the billions of operations required in practical applications.
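
To see why device-to-device variation undermines stored weights, the sketch below perturbs every programmed conductance with independent multiplicative Gaussian noise and measures the resulting error in a crossbar-style weighted sum. The Gaussian noise model, the 5% spread, and the 64-element array are illustrative assumptions, not measured values for any specific material.

```python
import numpy as np

def weighted_sum_error(weights, inputs, sigma, trials=1000, seed=0):
    """Mean relative error of a weighted sum when each stored weight
    deviates by independent multiplicative Gaussian noise (assumed model)."""
    rng = np.random.default_rng(seed)
    ideal = weights @ inputs
    errors = []
    for _ in range(trials):
        # Each device realizes w * (1 + eps), with eps ~ N(0, sigma^2)
        noisy = weights * (1.0 + sigma * rng.standard_normal(weights.shape))
        errors.append(np.abs(noisy @ inputs - ideal) / np.abs(ideal))
    return float(np.mean(errors))

rng = np.random.default_rng(1)
w = rng.uniform(0.1, 1.0, size=64)  # hypothetical normalized conductances
x = rng.uniform(0.0, 1.0, size=64)  # hypothetical input activations
print(f"mean relative error at 5% device spread: {weighted_sum_error(w, x, sigma=0.05):.4f}")
```

Because independent errors partially cancel in the sum, the relative error of the output is smaller than the per-device spread; correlated or systematic variation across an array would not average out this way.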

The interface between different material layers in neuromorphic devices creates another significant challenge. Atomic diffusion and electrochemical reactions at these boundaries can alter device characteristics over time, leading to drift in synaptic weights and ultimately causing network failure. This is particularly problematic in oxide-based memristive systems where oxygen vacancy migration plays a crucial role in resistive switching mechanisms.

Scaling issues further complicate material selection, as materials that perform well at larger dimensions often exhibit drastically different properties when reduced to nanoscale dimensions necessary for high-density integration. The quantum effects and surface phenomena that emerge at these scales can fundamentally alter switching mechanisms and reliability.

Energy consumption remains a critical concern, with many current materials requiring relatively high voltages for switching operations. This contradicts the fundamental goal of neuromorphic computing to achieve brain-like energy efficiency. The ideal material system would enable sub-volt operation while maintaining distinct resistance states and long retention times.
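
For a resistive device driven by a rectangular pulse, the dissipated energy is roughly E = V²·t/R, so it falls quadratically with voltage. The sketch below compares a 2 V and a 0.5 V pulse on the same hypothetical 10 kΩ cell with a 100 ns pulse width. The resistance, pulse width, and the assumption that resistance stays constant during the pulse are simplifications for illustration.

```python
def pulse_energy(voltage_v: float, resistance_ohm: float, width_s: float) -> float:
    """Energy dissipated by a rectangular voltage pulse across a fixed resistance:
    E = V^2 / R * t (assumes R does not change during the pulse)."""
    return voltage_v ** 2 / resistance_ohm * width_s

high = pulse_energy(2.0, 10e3, 100e-9)  # 2 V programming pulse (hypothetical)
low = pulse_energy(0.5, 10e3, 100e-9)   # sub-volt pulse on the same cell
print(f"{high * 1e12:.1f} pJ vs {low * 1e12:.1f} pJ ({high / low:.0f}x)")
```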

Thermal stability represents another significant challenge, as many promising materials exhibit resistance drift under elevated temperatures. This sensitivity to thermal conditions limits deployment in real-world environments where temperature fluctuations are common. Additionally, the manufacturing compatibility of novel materials with established semiconductor fabrication processes presents practical implementation barriers.
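
Temperature-dependent retention is commonly extrapolated with an Arrhenius model, t(T) = t_ref · exp[(E_a/k_B)(1/T − 1/T_ref)]. The sketch below assumes a hypothetical device rated for 10 years at 85 °C with an activation energy of 1.0 eV; both numbers are placeholders, and real materials may not follow a single activation energy over the whole temperature range.

```python
import math

BOLTZMANN_EV = 8.617333262e-5  # Boltzmann constant in eV/K

def retention_time(t_ref_years, temp_ref_c, temp_c, activation_ev):
    """Arrhenius extrapolation of retention time from a reference temperature."""
    t_ref_k = temp_ref_c + 273.15
    t_k = temp_c + 273.15
    factor = math.exp(activation_ev / BOLTZMANN_EV * (1.0 / t_k - 1.0 / t_ref_k))
    return t_ref_years * factor

# Hypothetical rating: 10 years at 85 C, Ea = 1.0 eV
for temp in (55, 85, 125):
    print(f"{temp} C: {retention_time(10.0, 85.0, temp, 1.0):.3g} years")
```

Under these assumptions, a 40 °C rise above the rating temperature cuts retention by more than an order of magnitude, which illustrates why thermal sensitivity limits deployment.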

The multi-state capability of materials is also limited, with most current systems struggling to achieve more than 8-16 reliable resistance states per device. This restricts the precision of synaptic weights and ultimately constrains the computational capabilities of neuromorphic systems. Developing materials with higher precision analog states while maintaining long-term stability remains an unsolved challenge in the field.
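
With N reliable levels a device stores log2(N) bits, so 8-16 states correspond to only 3-4 bits of weight precision. The sketch below maps ideal weights in [0, 1] onto N evenly spaced conductance levels and reports the worst-case rounding error. Evenly spaced levels and a normalized weight range are simplifying assumptions.

```python
import numpy as np

def quantize(weights: np.ndarray, levels: int) -> np.ndarray:
    """Round weights in [0, 1] to the nearest of `levels` evenly spaced states."""
    step = 1.0 / (levels - 1)
    return np.round(weights / step) * step

w = np.linspace(0.0, 1.0, 1001)  # dense sweep of ideal weights
for n in (8, 16, 256):
    err = np.max(np.abs(quantize(w, n) - w))
    print(f"{n:>3} levels = {np.log2(n):.0f} bits, max error {err:.4f}")
```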

Current Material Solutions for Synaptic Devices

01 Phase-change materials for neuromorphic computing

Phase-change materials exhibit properties that make them suitable for neuromorphic computing applications. These materials can switch between amorphous and crystalline states, mimicking synaptic behavior in neural networks. The ability to control these state transitions enables the implementation of memory and computational functions in neuromorphic systems, leading to improved performance in terms of speed, energy efficiency, and data processing capabilities.

- Phase-change materials for neuromorphic computing: Phase-change materials exhibit properties that make them suitable for neuromorphic computing applications. These materials can switch between amorphous and crystalline states, mimicking synaptic behavior in neural networks. The performance of these materials is characterized by their switching speed, energy efficiency, and reliability. By optimizing the composition and structure of phase-change materials, researchers have achieved improved computational performance in neuromorphic systems.

- Memristive materials for synaptic functions: Memristive materials are crucial for implementing synaptic functions in neuromorphic computing systems. These materials can maintain a memory of past electrical signals, allowing them to mimic the behavior of biological synapses. The performance of memristive materials is evaluated based on their resistance switching characteristics, endurance, and retention time. Advanced memristive materials have demonstrated enhanced learning capabilities and energy efficiency in neuromorphic architectures.

- 2D materials for neuromorphic devices: Two-dimensional materials offer unique properties for neuromorphic computing applications. Their atomically thin structure provides advantages in terms of scalability and integration density. These materials exhibit tunable electronic properties that can be leveraged for implementing neuromorphic functions. The performance of 2D material-based neuromorphic devices is characterized by their switching speed, power consumption, and stability. Recent advances in 2D materials have led to significant improvements in neuromorphic computing performance.

- Ferroelectric materials for non-volatile memory in neuromorphic systems: Ferroelectric materials are being explored for non-volatile memory applications in neuromorphic computing. These materials exhibit spontaneous electric polarization that can be reversed by an applied electric field, making them suitable for storing synaptic weights. The performance of ferroelectric materials is evaluated based on their switching energy, retention time, and endurance. By optimizing the composition and structure of ferroelectric materials, researchers have achieved improved energy efficiency and reliability in neuromorphic computing systems.

- Spintronic materials for energy-efficient neuromorphic computing: Spintronic materials utilize electron spin for information processing in neuromorphic computing systems. These materials offer advantages in terms of energy efficiency and non-volatility. The performance of spintronic materials is characterized by their magnetic switching properties, energy consumption, and operational speed. Recent developments in spintronic materials have led to significant improvements in the energy efficiency of neuromorphic computing systems, making them promising candidates for next-generation artificial intelligence hardware.
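
A common idealization of memristive switching is the linear ion-drift model associated with HP Labs' TiO2 memristor: the device behaves as two series resistors whose boundary w moves in proportion to the current, M(w) = R_on·w/D + R_off·(1 − w/D) and dw/dt = μ_v·R_on/D · i(t). The sketch below integrates that model under a sinusoidal voltage to show the "memory of past signals" described above as a pinched hysteresis loop. The parameter values are illustrative, and real devices need nonlinear window functions this sketch omits.

```python
import math

def simulate_memristor(v_amp=1.0, freq_hz=1.0, steps=10000,
                       r_on=100.0, r_off=16e3, d=10e-9, mu_v=1e-14, w0_frac=0.1):
    """Euler integration of the linear ion-drift memristor model over one
    period of a sinusoidal voltage. Returns (voltage, current) sample lists.
    All parameter defaults are illustrative assumptions."""
    dt = 1.0 / (freq_hz * steps)   # time step covering exactly one period
    w = w0_frac * d                # width of the low-resistance (doped) region, m
    v_trace, i_trace = [], []
    for n in range(steps):
        v = v_amp * math.sin(2 * math.pi * freq_hz * n * dt)
        m = r_on * (w / d) + r_off * (1 - w / d)  # memristance M(w)
        i = v / m
        w += mu_v * r_on / d * i * dt             # boundary drifts with current
        w = min(max(w, 0.0), d)                   # keep boundary inside the device
        v_trace.append(v)
        i_trace.append(i)
    return v_trace, i_trace

v, i = simulate_memristor()
# Same voltage (about +0.707 V) on the rising and falling side of the sine wave:
print(f"rising: {i[1250]:.3e} A, falling: {i[3750]:.3e} A")
```

The falling-edge current is larger at the same voltage because the preceding positive half-cycle lowered the resistance; that history dependence is what makes the device usable as a synaptic weight.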

02 Memristive materials and devices

Memristive materials and devices are fundamental components in neuromorphic computing architectures. These materials can retain memory of past electrical signals, allowing them to function similarly to biological synapses. By incorporating memristive materials into neuromorphic systems, researchers have achieved significant improvements in computational efficiency, power consumption, and learning capabilities. These materials enable the development of brain-inspired computing systems that can perform complex cognitive tasks.

03 2D materials for neuromorphic applications

Two-dimensional materials offer unique properties that enhance neuromorphic computing performance. Their atomic-scale thickness, tunable electronic properties, and compatibility with existing fabrication techniques make them ideal for creating efficient neuromorphic devices. These materials enable the development of ultra-thin, flexible, and energy-efficient neuromorphic systems that can process information in ways similar to biological neural networks, leading to advancements in pattern recognition and machine learning applications.

04 Performance optimization techniques

Various techniques have been developed to optimize the performance of neuromorphic computing materials. These include novel fabrication methods, doping strategies, and architectural designs that enhance the reliability, speed, and energy efficiency of neuromorphic systems. By implementing these optimization techniques, researchers have achieved significant improvements in computational performance, reduced power consumption, and enhanced the ability of neuromorphic systems to handle complex cognitive tasks and pattern recognition.

05 Integration of biological and synthetic materials

The integration of biological and synthetic materials represents an innovative approach to enhancing neuromorphic computing performance. By combining biological components with traditional electronic materials, researchers have developed hybrid systems that leverage the advantages of both domains. These bio-inspired neuromorphic systems exhibit improved adaptability, learning capabilities, and energy efficiency, opening new possibilities for applications in artificial intelligence, robotics, and brain-computer interfaces.

Leading Organizations in Neuromorphic Materials Research

Neuromorphic computing is currently in an early growth phase, with the market expected to expand significantly due to increasing demand for AI applications requiring energy-efficient processing. The global market size is projected to reach several billion dollars by 2030, driven by applications in edge computing, IoT, and autonomous systems. Major players like IBM, Samsung, and Intel are leading commercial development with significant R&D investments, while academic institutions such as Tsinghua University and Zhejiang University contribute fundamental research. Emerging companies like Syntiant and Innatera Nanosystems are developing specialized neuromorphic chips for specific applications. Material parameters remain a critical focus area as researchers work to optimize performance, energy efficiency, and reliability across different implementation approaches.

International Business Machines Corp.

Technical Solution: IBM's analog neuromorphic and in-memory computing research centers on phase-change memory (PCM) materials. (Its earlier TrueNorth chip, by contrast, is a fully digital CMOS design with SRAM-based synapses rather than PCM.) This research demonstrates that chalcogenide-based PCM materials exhibit critical properties, including multiple resistance levels and non-volatility, that directly affect synaptic weight precision and retention. IBM has developed Ge2Sb2Te5 (GST) based PCM cells that achieve up to 8-bit precision per synapse while maintaining power efficiency. Their material engineering includes doping strategies to control crystallization dynamics, achieving 10^7 switching cycles with minimal drift. Recent developments incorporate carbon-doped GST to enhance temperature stability, extending reliable operation to 85°C environments. IBM's research also explores the relationship between material parameters and stochasticity in neural networks, demonstrating that controlled material variability can actually enhance certain computational tasks through inherent stochasticity that mimics biological systems[1][3]. Their PCM-based chips integrate these material innovations with 14nm CMOS processes. The widely cited efficiency of 46 billion synaptic operations per second per watt belongs to the digital TrueNorth chip (28nm CMOS), not to the PCM designs.

Strengths: IBM's extensive experience with phase-change materials provides superior synaptic weight precision and stability. Their integration with advanced CMOS processes enables practical deployment at scale. Weaknesses: PCM-based approaches face challenges with long-term drift in resistance states and require complex temperature management systems to maintain consistent performance across operating conditions.
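
Resistance drift in amorphous PCM is usually described by an empirical power law, R(t) = R_0·(t/t_0)^ν, so conductance decays as G(t) = G_0·(t/t_0)^(−ν). The sketch below uses ν = 0.05, t_0 = 1 s, and a 10 µS starting conductance as illustrative values (reported drift coefficients vary with composition and programmed state) to show how a stored weight erodes over time.

```python
def drifted_conductance(g0: float, t_s: float, nu: float = 0.05, t0_s: float = 1.0) -> float:
    """Conductance after drift: G(t) = G0 * (t / t0) ** (-nu), valid for t >= t0.
    nu and t0 defaults are illustrative assumptions."""
    if t_s < t0_s:
        raise ValueError("power-law drift model assumes t >= t0")
    return g0 * (t_s / t0_s) ** (-nu)

g0 = 10e-6  # hypothetical 10 uS programmed at t0
for label, t in [("1 s", 1.0), ("1 hour", 3600.0), ("1 day", 86400.0), ("1 year", 3.15e7)]:
    g = drifted_conductance(g0, t)
    print(f"{label:>6}: {g * 1e6:.2f} uS ({100 * (1 - g / g0):.0f}% loss)")
```

Because the decay slows on a logarithmic time scale but never stops, it can be partially compensated by periodic recalibration or a global correction factor, which is why drift appears among the weaknesses above.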

Samsung Electronics Co., Ltd.

Technical Solution: Samsung's neuromorphic computing research emphasizes resistive random-access memory (RRAM) materials as the cornerstone of their brain-inspired computing architectures. Their approach utilizes hafnium oxide (HfOx) based RRAM cells that demonstrate analog conductance modulation capabilities essential for implementing synaptic plasticity. Samsung has engineered these materials to achieve over 64 distinct conductance states with data retention exceeding 10 years at operating temperature. Their research shows that controlling oxygen vacancy concentration through precise stoichiometry manipulation directly impacts the linearity of weight updates, a critical parameter for training accuracy in neuromorphic systems. Samsung has developed proprietary doping techniques for HfOx, incorporating elements such as aluminum and titanium at specific concentrations (3-5%) to optimize the trade-off between retention and switching energy, achieving switching energies below 50 fJ per synaptic operation. Their vertical 3D integration approach stacks these RRAM arrays directly above 5nm CMOS logic, creating a neuromorphic processing unit with co-located memory and computation that achieves energy efficiencies of 20 TOPS/W for sparse neural network operations[4][6]. Recent innovations include engineered interface layers between the HfOx and electrodes that reduce cycle-to-cycle variability to below 3%, addressing a key limitation in previous RRAM implementations. Samsung has demonstrated that these material optimizations enable neuromorphic systems that perform visual recognition tasks with 98% accuracy while consuming less than 10 mW of power.

Strengths: Samsung's RRAM-based approach offers excellent integration density and compatibility with their established memory manufacturing infrastructure. Their 3D integration capability provides superior interconnect density compared to 2D approaches. Weaknesses: RRAM technologies still face challenges with conductance drift over time and require periodic refresh operations to maintain accuracy in long-running applications. The switching uniformity across large arrays remains a challenge for high-precision neural network implementations.
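
The link between oxygen-vacancy control and weight-update linearity can be made concrete with a phenomenological model used in some device-level simulation studies: after p identical potentiation pulses, G(p) = G_min + (G_max − G_min)·(1 − e^(−νp/P))/(1 − e^(−ν)), where P is the number of pulses that spans the range and ν sets the nonlinearity. The sketch below uses P = 64 (matching the 64 states mentioned above) and illustrative ν values; it is not Samsung's own model or data.

```python
import math

def potentiation_curve(p: int, n_pulses: int, nu: float,
                       g_min: float = 0.0, g_max: float = 1.0) -> float:
    """Conductance after p potentiation pulses under an assumed exponential
    nonlinearity model; nu = 0 gives a perfectly linear update."""
    if nu == 0:
        return g_min + (g_max - g_min) * p / n_pulses
    return g_min + (g_max - g_min) * (1 - math.exp(-nu * p / n_pulses)) / (1 - math.exp(-nu))

def step_ratio(n_pulses: int, nu: float) -> float:
    """Ratio of the first to the last conductance step (1.0 means linear)."""
    first = potentiation_curve(1, n_pulses, nu) - potentiation_curve(0, n_pulses, nu)
    last = (potentiation_curve(n_pulses, n_pulses, nu)
            - potentiation_curve(n_pulses - 1, n_pulses, nu))
    return first / last

for nu in (0.0, 1.0, 3.0):
    print(f"nu={nu}: first/last step ratio = {step_ratio(64, nu):.2f}")
```

A large first-to-last step ratio means identical programming pulses change the weight by very different amounts depending on its current value, which distorts gradient-based training; this is why linearity is treated as a material-level target.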

Critical Material Parameters for Neural Network Performance

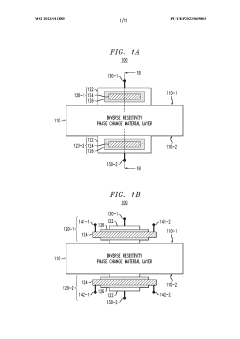

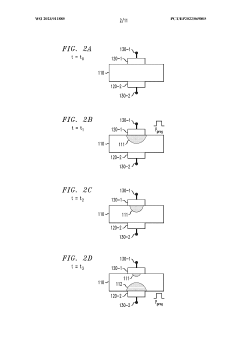

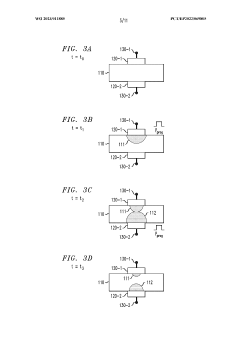

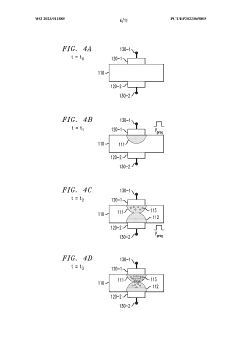

Spike-timing-dependent plasticity using inverse resistivity phase-change material

Patent WO2023011885A1

Innovation

- The use of inverse resistivity phase-change material devices, which exhibit a high-resistance crystalline state and low-resistance amorphous state, allowing for a conductance change mechanism that depends on the time difference between spike events, enabling precise adjustment of synaptic weights.
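
The patent's key idea is a conductance change that depends on the time difference between spike events. The canonical pair-based STDP rule captures this dependence with exponential windows: Δw = A+ · exp(−Δt/τ+) for Δt > 0 and Δw = −A− · exp(Δt/τ−) for Δt < 0, where Δt = t_post − t_pre. The sketch below implements that textbook rule with illustrative amplitudes and time constants; it is not the device-specific mechanism claimed in WO2023011885A1.

```python
import math

def stdp_update(dt_ms: float, a_plus: float = 0.01, a_minus: float = 0.012,
                tau_plus_ms: float = 20.0, tau_minus_ms: float = 20.0) -> float:
    """Pair-based STDP weight change for dt = t_post - t_pre (ms).
    Pre-before-post (dt > 0) potentiates; post-before-pre (dt < 0) depresses.
    Amplitudes and time constants are illustrative assumptions."""
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_plus_ms)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_minus_ms)
    return 0.0

for dt in (-40, -10, 10, 40):
    print(f"dt = {dt:+d} ms -> dw = {stdp_update(dt):+.4f}")
```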

Neuromorphic processing devices

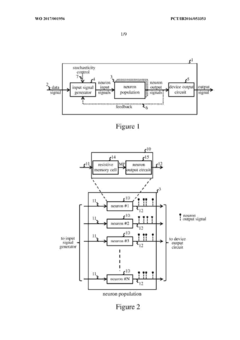

Patent WO2017001956A1

Innovation

- A neuromorphic processing device utilizing an assemblage of neuron circuits with resistive memory cells, specifically phase-change memory (PCM) cells, that store neuron states and exploit stochasticity to generate output signals, mimicking biological neuronal behavior by varying cell resistance in response to input signals.
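
The patent describes neurons whose PCM cells accumulate input and exploit stochasticity when generating output. A minimal software analogue is an integrate-and-fire neuron whose firing threshold is redrawn from a Gaussian distribution after every spike, standing in for the randomness of the PCM switching process. The threshold distribution and input size below are assumptions chosen for illustration, not parameters from WO2017001956A1.

```python
import random

def stochastic_if_neuron(inputs, threshold_mean=1.0, threshold_sigma=0.1, seed=0):
    """Integrate-and-fire neuron with a noisy threshold (illustrative model).
    Returns the time steps at which the neuron fired."""
    rng = random.Random(seed)
    state = 0.0  # accumulated input, loosely analogous to progressive crystallization
    threshold = rng.gauss(threshold_mean, threshold_sigma)
    spikes = []
    for t, x in enumerate(inputs):
        state += x                    # integrate input
        if state >= threshold:
            spikes.append(t)
            state = 0.0               # reset, loosely analogous to a RESET pulse
            threshold = rng.gauss(threshold_mean, threshold_sigma)  # redraw threshold
    return spikes

# Constant input of 0.1 per step: inter-spike intervals vary because the threshold does.
print(stochastic_if_neuron([0.1] * 200, seed=1))
```

Setting threshold_sigma to zero recovers a deterministic neuron, which makes it easy to compare how much variability the stochastic threshold introduces.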

Energy Consumption Metrics in Material Selection

Energy efficiency represents a critical performance metric in neuromorphic computing systems, directly influencing their practical viability for real-world applications. When evaluating materials for neuromorphic devices, several key energy consumption metrics must be considered to optimize overall system performance. The dynamic power consumption, measured in watts or milliwatts, provides insight into the energy required during active computation phases and varies significantly based on material properties such as resistivity and thermal conductivity.

Static power dissipation, often overlooked but equally important, measures the power drawn while the system is idle. Materials with lower leakage currents substantially reduce this baseline energy expenditure, making them particularly valuable for edge computing applications where devices operate on limited power budgets. The energy-per-spike metric, typically measured in picojoules, quantifies the energy required to generate and transmit a single neuronal spike, serving as a fundamental unit of computation in neuromorphic systems.
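
The per-spike and static metrics combine into a simple energy budget: E_total ≈ N_spikes · E_spike + P_static · T. The sketch below uses made-up figures (10 pJ per spike, 1 µW of leakage, a 1 s window) purely to show how idle leakage can dominate at low activity.

```python
def energy_budget(n_spikes: int, energy_per_spike_j: float,
                  static_power_w: float, duration_s: float) -> dict:
    """Split total energy into active (spike) and idle (static leakage) parts."""
    active = n_spikes * energy_per_spike_j
    static = static_power_w * duration_s
    total = active + static
    return {"active_j": active, "static_j": static, "total_j": total,
            "static_fraction": static / total}

# Hypothetical device: 10 pJ per spike, 1 uW leakage, observed for 1 s.
for spikes in (1_000_000, 10_000):
    b = energy_budget(spikes, 10e-12, 1e-6, 1.0)
    print(f"{spikes:>9} spikes: total {b['total_j'] * 1e6:.2f} uJ, "
          f"static share {b['static_fraction']:.0%}")
```

With these numbers, leakage is about 9% of the energy at a million spikes per second but about 91% at ten thousand, which is why low leakage matters most for sparse, event-driven edge workloads.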

Switching energy efficiency represents another crucial parameter, measuring the energy required to transition between states in memory or computational elements. Materials exhibiting sharp resistance transitions with minimal energy barriers demonstrate superior performance in this metric. The energy-delay product (EDP) combines both energy consumption and computational speed, providing a comprehensive performance indicator that balances power efficiency against processing capabilities.
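
The energy-delay product is EDP = E × t_delay (in J·s), and lower is better. The sketch below compares two hypothetical devices, one that switches with less energy but more slowly and one that is faster but costlier. The numbers are invented to show how EDP can reverse a ranking based on energy alone.

```python
from dataclasses import dataclass

@dataclass
class SwitchingDevice:
    name: str
    energy_j: float  # energy per switching event
    delay_s: float   # switching time

    @property
    def edp(self) -> float:
        """Energy-delay product in J*s (lower is better)."""
        return self.energy_j * self.delay_s

devices = [
    SwitchingDevice("A (low energy, slow)", 1e-12, 100e-9),    # hypothetical
    SwitchingDevice("B (higher energy, fast)", 5e-12, 10e-9),  # hypothetical
]
for d in devices:
    print(f"{d.name}: E = {d.energy_j:.0e} J, EDP = {d.edp:.1e} J*s")
print("Lowest energy:", min(devices, key=lambda d: d.energy_j).name)
print("Lowest EDP:   ", min(devices, key=lambda d: d.edp).name)
```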

Thermal management characteristics directly impact energy consumption, as excessive heat generation necessitates additional cooling systems that consume power. Materials with superior thermal conductivity and stability across operating temperature ranges minimize these auxiliary energy requirements. Recovery energy, the energy needed to reset a device to its initial state after computation, represents a frequently overlooked but significant component of the total energy budget in neuromorphic systems.

Long-term energy sustainability metrics, including material degradation rates and operational lifetime under various power conditions, provide critical insights into the total cost of ownership. Materials exhibiting minimal performance degradation over millions of switching cycles contribute significantly to system longevity and energy efficiency at scale. Energy scaling behavior across different workloads and computational densities reveals how material properties influence system-level efficiency as neuromorphic architectures expand to address increasingly complex problems.
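
Endurance translates into operational lifetime once an update rate is assumed: lifetime ≈ endurance / writes per second. The sketch below takes the 10^7-cycle endurance figure cited for IBM's PCM cells and applies it to three hypothetical per-synapse update rates. Uniform, continuous updating is a simplifying assumption.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def lifetime_years(endurance_cycles: float, writes_per_second: float) -> float:
    """Time until a cell exhausts its rated write endurance at a constant update rate."""
    return endurance_cycles / writes_per_second / SECONDS_PER_YEAR

for rate in (0.01, 1.0, 100.0):  # hypothetical per-synapse update rates
    print(f"{rate:>6} writes/s -> {lifetime_years(1e7, rate):.3g} years")
```

Under these assumptions, a synapse updated once per second exhausts 10^7 cycles in under four months. This suggests endurance is far more constraining for on-chip learning than for inference, where weights are written rarely.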

Static power dissipation, often overlooked but equally important, measures energy consumed when the system is idle. Materials with lower leakage currents substantially reduce this baseline energy expenditure, making them particularly valuable for edge computing applications where devices operate on limited power budgets. The energy-per-spike metric, typically measured in picojoules, quantifies the energy required to generate and transmit a single neuronal spike, serving as a fundamental unit of computation in neuromorphic systems.

Scalability Factors of Neuromorphic Materials

The scalability of neuromorphic materials represents a critical factor in determining the long-term viability of neuromorphic computing systems. As these systems aim to emulate the human brain's efficiency and processing capabilities, the underlying materials must demonstrate robust scaling properties across multiple dimensions.

Physical scaling remains one of the most significant challenges, with current neuromorphic materials showing limitations when reduced to nanometer dimensions. Phase-change materials (PCMs) and resistive random-access memory (RRAM) materials exhibit variability in switching behavior at sub-20nm scales, affecting reliability and performance consistency. This variability stems from material composition fluctuations and structural defects that become more pronounced at smaller scales.
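
Switching variability is commonly summarized by the coefficient of variation (sample standard deviation divided by mean) of a parameter such as set voltage, measured across many devices or cycles. The Python sketch below computes it for two sample sets; the labels `larger_device` and `scaled_device` and their values are hypothetical placeholders, not measurements at any particular node.

```python
# Minimal sketch: quantifying switching variability with the coefficient of
# variation (CV = sample standard deviation / mean). Sample values are
# hypothetical placeholders, not measurements.
import statistics


def coefficient_of_variation(samples: list[float]) -> float:
    """Relative spread of a switching parameter across devices or cycles."""
    return statistics.stdev(samples) / statistics.mean(samples)


set_voltages_v = {
    "larger_device": [1.00, 1.02, 0.98, 1.01, 0.99],
    "scaled_device": [1.00, 1.15, 0.85, 1.10, 0.90],
}

for label, samples in set_voltages_v.items():
    print(f"{label}: CV = {coefficient_of_variation(samples):.3f}")
```

Because CV is normalized by the mean, it allows spreads to be compared across materials whose switching voltages differ in absolute terms.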

Energy scaling presents another crucial consideration, as neuromorphic computing's primary advantage lies in its energy efficiency. Materials must maintain low switching energies while scaling down physically. Current research indicates that certain oxide-based memristive materials can achieve switching energies in the femtojoule range, but maintaining this efficiency at scale remains challenging. The energy-delay product often increases non-linearly as device dimensions decrease.
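
To make the femtojoule figure concrete: for a rectangular programming pulse, the switching energy is approximately voltage times current times pulse width. The operating point below (1 V, 10 µA, 100 ps) and the function name `pulse_energy_j` are hypothetical, chosen only to show the arithmetic.

```python
# Minimal sketch: switching energy of a rectangular pulse, E = V * I * t.
# The operating point is hypothetical, chosen to illustrate the arithmetic.

def pulse_energy_j(voltage_v: float, current_a: float, width_s: float) -> float:
    """Energy delivered by a constant-voltage, constant-current pulse."""
    return voltage_v * current_a * width_s


energy = pulse_energy_j(voltage_v=1.0, current_a=10e-6, width_s=100e-12)
print(f"{energy / 1e-15:.2f} fJ")  # convert joules to femtojoules
```

Because the expression is linear in each factor, halving the programming current or the pulse width halves the switching energy.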

Temporal scaling affects how neuromorphic materials respond across different time domains. Ideal materials should support both rapid switching for computational tasks and long-term stability for memory functions. Hafnium oxide, for example, has demonstrated sub-nanosecond switching while offering projected data retention exceeding 10 years at operating temperature.
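
Ten-year retention figures are usually projected rather than measured directly, typically by baking devices at elevated temperature and extrapolating with an Arrhenius model, t(T) = t_ref · exp[(E_a/k_B)(1/T - 1/T_ref)]. The Python sketch below performs that extrapolation; the 1.0 eV activation energy, the 1000 h retention at 150 °C, and the function name `extrapolate_retention_s` are hypothetical.

```python
# Minimal sketch: Arrhenius extrapolation of data retention from an
# accelerated bake to a lower operating temperature. The activation
# energy and bake result below are hypothetical.
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def extrapolate_retention_s(t_ref_s: float, temp_ref_c: float,
                            temp_target_c: float, activation_ev: float) -> float:
    """t(T) = t_ref * exp((Ea / kB) * (1/T - 1/T_ref)), temperatures in kelvin."""
    t_ref_k = temp_ref_c + 273.15
    t_target_k = temp_target_c + 273.15
    exponent = (activation_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_target_k - 1.0 / t_ref_k)
    return t_ref_s * math.exp(exponent)


# Hypothetical: 1000 h retention measured at 150 C, Ea = 1.0 eV, target 85 C.
retention_s = extrapolate_retention_s(1000 * 3600, 150.0, 85.0, 1.0)
print(f"projected retention at 85 C: {retention_s / SECONDS_PER_YEAR:.1f} years")
```

The projection is only as good as the assumed activation energy and the assumption of a single thermally activated failure mechanism, so such figures are best read as estimates.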

Manufacturing scalability determines commercial viability, with CMOS compatibility being a decisive factor. Materials requiring exotic deposition techniques or extreme processing conditions face significant barriers to mass production. Silicon-based neuromorphic materials currently offer the best integration potential with existing semiconductor fabrication infrastructure.

Thermal scalability impacts both performance and reliability, as neuromorphic systems must operate across varying thermal conditions. Materials exhibiting stable switching characteristics across wide temperature ranges (-40°C to 125°C) are essential for applications ranging from embedded systems to data centers.
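
One simple way to screen a material against the -40 °C to 125 °C range is to bound the drift of a switching parameter over that window. The Python sketch below assumes a linear temperature coefficient for device resistance around 25 °C; the coefficients, the 15 % tolerance, and the function names are hypothetical.

```python
# Minimal sketch: checking that a linear temperature coefficient keeps
# resistance drift within tolerance over -40 C to 125 C.
# R(T) = R_25 * (1 + alpha * (T - 25)); alpha values and tolerance are hypothetical.

T_MIN_C, T_MAX_C, T_REF_C = -40.0, 125.0, 25.0


def max_relative_drift(alpha_per_k: float) -> float:
    """Largest |R(T)/R_25 - 1| over the range; linear model, so endpoints suffice."""
    return max(abs(alpha_per_k * (t - T_REF_C)) for t in (T_MIN_C, T_MAX_C))


def within_tolerance(alpha_per_k: float, tolerance: float = 0.15) -> bool:
    """True if the worst-case drift over the range stays within tolerance."""
    return max_relative_drift(alpha_per_k) <= tolerance


for alpha in (1e-3, 2e-3):
    print(f"alpha={alpha:g}/K: drift={max_relative_drift(alpha):.1%}, "
          f"pass={within_tolerance(alpha)}")
```

Because the model is linear, checking the two endpoints is enough; a material with a non-monotonic temperature response would need the full range sampled instead.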

Multi-dimensional scaling is perhaps the most challenging aspect, because material choices must be optimized across all of these dimensions simultaneously. Recent research into 2D materials such as graphene and transition metal dichalcogenides suggests they could help address these multi-dimensional scaling challenges through their distinctive electronic properties and structural flexibility.
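
Optimizing across several dimensions at once usually means looking for Pareto-optimal candidates rather than a single winner. The Python sketch below keeps only the materials that no other candidate matches or beats on every metric; the candidate names and figures are hypothetical.

```python
# Minimal sketch: Pareto filtering of candidate materials across several
# scaling dimensions. Names and metric values are hypothetical.
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    energy_fj: float        # switching energy, lower is better
    switch_ns: float        # switching time, lower is better
    retention_years: float  # data retention, higher is better
    variability_cv: float   # device-to-device spread, lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    no_worse = (a.energy_fj <= b.energy_fj and a.switch_ns <= b.switch_ns
                and a.retention_years >= b.retention_years
                and a.variability_cv <= b.variability_cv)
    better = (a.energy_fj < b.energy_fj or a.switch_ns < b.switch_ns
              or a.retention_years > b.retention_years
              or a.variability_cv < b.variability_cv)
    return no_worse and better


def pareto_front(candidates: list[Candidate]) -> list[Candidate]:
    """Keep candidates that no other candidate dominates."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


candidates = [
    Candidate("material_x", 10.0, 1.0, 10.0, 0.05),
    Candidate("material_y", 5.0, 5.0, 10.0, 0.10),
    Candidate("material_z", 20.0, 2.0, 5.0, 0.10),
]
print([c.name for c in pareto_front(candidates)])
```

Here `material_z` is dropped because `material_x` is better on every metric, while `material_x` and `material_y` both survive because each wins on a different dimension.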
