Understanding the Role of Neural Networks in Neuromorphic Chips

OCT 9, 2025 · 9 MIN READ

Neural Networks in Neuromorphic Computing: Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of the human brain. This field has evolved significantly since the 1980s when Carver Mead first introduced the concept, aiming to overcome the limitations of traditional von Neumann architectures by mimicking neural processes. The evolution of neuromorphic systems has been characterized by increasing integration of neural network principles with specialized hardware designs, creating systems capable of parallel processing, energy efficiency, and adaptive learning.

Neural networks serve as the foundational model for neuromorphic chips, providing the theoretical framework for how information is processed, stored, and transmitted. The historical trajectory shows a progression from simple perceptron models to complex deep learning architectures, with each advancement bringing neuromorphic implementations closer to biological neural efficiency. Current research focuses on bridging the gap between artificial neural network algorithms and their physical implementation in specialized hardware that can truly capture the brain's computational advantages.

The primary technical objectives in this field include developing neuromorphic chips that significantly reduce power consumption while maintaining or improving computational capabilities for specific tasks. Energy efficiency remains a critical goal, with researchers aiming to achieve processing capabilities that require orders of magnitude less power than conventional computing systems. This is particularly important for edge computing applications where power constraints are significant.

Another key objective is creating systems capable of online learning and adaptation, similar to biological neural networks. This requires developing novel materials, circuit designs, and architectures that support spike-based computing and synaptic plasticity mechanisms. The field is increasingly moving toward heterogeneous integration of different technologies, combining digital, analog, and memristive elements to achieve optimal performance.

The convergence of advances in neural network algorithms, particularly spiking neural networks (SNNs), with innovations in semiconductor technology has accelerated development in recent years. This has been further catalyzed by the growing demands of artificial intelligence applications that require efficient processing of complex patterns and massive datasets. The limitations of traditional computing architectures in meeting these demands have highlighted the potential value of neuromorphic approaches.

Looking forward, the field is trending toward greater integration of biological principles, including neuromodulation, structural plasticity, and hierarchical organization. These developments aim to create neuromorphic systems that not only mimic basic neural functions but also capture the brain's remarkable ability to learn from limited examples, adapt to changing environments, and operate with extreme energy efficiency.

Market Analysis for Brain-Inspired Computing Solutions

The brain-inspired computing market is experiencing significant growth, driven by the increasing demand for efficient processing of complex AI workloads. Current market valuations place neuromorphic computing at approximately $3.1 billion in 2023, with projections indicating a compound annual growth rate of 24.7% through 2030. This rapid expansion reflects the growing recognition of neuromorphic solutions' potential to address limitations in traditional computing architectures.
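The growth figures above can be sanity-checked with compound-growth arithmetic. The Python sketch below uses only the numbers quoted in this paragraph ($3.1 billion in 2023, 24.7% CAGR) and assumes the rate applies uniformly every year, which no real forecast guarantees.

```python
def project_value(base: float, cagr: float, years: int) -> float:
    """Compound a base value forward: base * (1 + cagr) ** years."""
    return base * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Invert the projection: the constant annual rate that turns start into end."""
    return (end / start) ** (1.0 / years) - 1.0


# Figures quoted in the text: $3.1B in 2023, 24.7% CAGR through 2030.
BASE_2023_BUSD = 3.1
CAGR = 0.247

for year in (2028, 2030):
    value = project_value(BASE_2023_BUSD, CAGR, year - 2023)
    print(f"{year}: ~${value:.1f}B")
```

Under that assumption the projection prints about $9.3B for 2028 and $14.5B for 2030. The 2028 value lands inside the $8-10 billion range quoted for 2028 later in this report, even though that estimate is based on a lower CAGR (about 20%), so the two sources are roughly but not exactly consistent.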

Market segmentation reveals diverse application areas for neuromorphic chips. The largest current segment is image recognition and computer vision, accounting for roughly 35% of market share. This is followed by natural language processing (22%), autonomous systems (18%), and healthcare applications (15%), with the remaining 10% distributed across various emerging use cases. These applications span industries including automotive, consumer electronics, healthcare, aerospace, and defense.

Regional analysis shows North America leading with 42% market share, followed by Europe (28%), Asia-Pacific (25%), and other regions (5%). However, the Asia-Pacific region demonstrates the fastest growth trajectory, particularly in China, Japan, and South Korea, where substantial investments in neuromorphic research and manufacturing capabilities are underway.

Customer demand is primarily driven by three factors: energy efficiency requirements, real-time processing needs, and edge computing applications. Organizations seeking to deploy AI solutions in power-constrained environments show particular interest in neuromorphic solutions that offer 50-100x improvements in energy efficiency compared to traditional GPU-based systems.

Market challenges include high development costs, limited software ecosystems, and integration complexities with existing systems. The average R&D investment required to bring a neuromorphic solution to market exceeds $50 million, creating significant barriers to entry for smaller players.

Competitive dynamics reveal a market shaped by both established semiconductor companies and specialized startups. Intel's Loihi and IBM's TrueNorth are the best-known research platforms and BrainChip's Akida is among the leading commercial offerings, while university spin-offs such as SynSense (formerly aiCTX) are gaining traction with specialized solutions. Strategic partnerships between chip developers and application providers are becoming increasingly common, with over 40 such collaborations announced in the past two years.

Market forecasts suggest neuromorphic computing will increasingly disrupt traditional computing paradigms, particularly in edge AI applications where power constraints are significant. The technology adoption curve indicates we are approaching an inflection point, with broader commercial deployment expected within 3-5 years as software ecosystems mature and manufacturing costs decrease.

Current State and Technical Challenges in Neuromorphic Implementation

Neuromorphic computing has witnessed significant advancements in recent years, with major research institutions and technology companies investing heavily in this field. Currently, the implementation of neural networks in neuromorphic chips represents a convergence of neuroscience, computer engineering, and artificial intelligence. The state-of-the-art neuromorphic systems include IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida, each demonstrating unique approaches to brain-inspired computing architectures.

These systems have achieved remarkable milestones in energy efficiency, with some neuromorphic chips consuming merely milliwatts of power while performing complex pattern recognition tasks that would require orders of magnitude more energy on traditional computing platforms. The current implementations typically utilize spiking neural networks (SNNs) as their computational paradigm, which more closely mimics biological neural systems than conventional artificial neural networks.

Despite these advances, significant technical challenges persist in neuromorphic implementation. One fundamental challenge is the hardware-software co-design problem. Traditional programming paradigms are ill-suited for neuromorphic architectures, necessitating new programming models and tools that can effectively harness the parallel, event-driven nature of these systems. This gap between existing software frameworks and neuromorphic hardware creates substantial barriers to widespread adoption.

Material limitations present another critical challenge. Current CMOS technology, while adaptable for neuromorphic designs, cannot fully replicate the density and efficiency of biological neural systems. Emerging materials such as memristors, phase-change memory, and spintronic devices show promise but face issues with reliability, scalability, and manufacturing consistency that must be overcome before commercial viability.

The scaling of neuromorphic systems presents additional hurdles. While small-scale implementations have demonstrated impressive capabilities, scaling to billions of neurons with trillions of synapses—approaching the complexity of the human brain—introduces challenges in interconnect density, power distribution, and thermal management that current fabrication technologies struggle to address.

Learning and adaptation mechanisms represent perhaps the most difficult challenge. Biological neural systems excel at unsupervised and reinforcement learning in dynamic environments, capabilities that current neuromorphic implementations can only partially replicate. Developing on-chip learning algorithms that maintain efficiency while enabling continuous adaptation remains an active research frontier.
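One widely studied candidate for efficient on-chip learning is spike-timing-dependent plasticity (STDP), because each weight update depends only on the spike times seen at that synapse. The sketch below shows a pair-based STDP rule in Python; the amplitudes, time constants, and weight bounds are hypothetical illustration values, not parameters of any particular chip, and hardware implementations often track decaying per-synapse traces instead of storing spike lists.

```python
import math

# Hypothetical parameters chosen for illustration, not taken from any chip.
A_PLUS = 0.01     # potentiation amplitude
A_MINUS = 0.012   # depression amplitude (slightly larger, a common choice for stability)
TAU_PLUS = 20.0   # ms, potentiation time constant
TAU_MINUS = 20.0  # ms, depression time constant


def stdp_dw(t_pre: float, t_post: float) -> float:
    """Weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired before post: causal pairing -> strengthen
        return A_PLUS * math.exp(-dt / TAU_PLUS)
    if dt < 0:   # post fired before pre: anti-causal pairing -> weaken
        return -A_MINUS * math.exp(dt / TAU_MINUS)
    return 0.0   # simultaneous spikes: no change in this sketch


def apply_stdp(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """All-to-all pairing over two spike trains, then clip to the weight range."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_dw(t_pre, t_post)
    return min(max(w, w_min), w_max)


print(apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0]))  # potentiated
print(apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0]))  # depressed
```

The rule needs no global error signal, which is why it maps naturally onto distributed neuromorphic cores; the trade-off is that purely local rules are harder to steer toward a specific task objective than backpropagation.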

Geographically, neuromorphic research exhibits distinct regional characteristics. North America leads in commercial applications and venture funding, Europe emphasizes theoretical foundations and neuroscientific accuracy, while Asia demonstrates strength in manufacturing innovation and hardware integration. This global distribution of expertise creates both collaborative opportunities and competitive tensions in advancing the field.

Existing Neural Network Architectures for Neuromorphic Systems

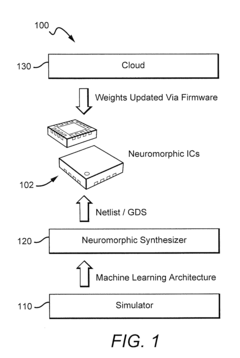

01 Neuromorphic Architecture Design

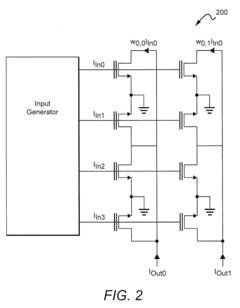

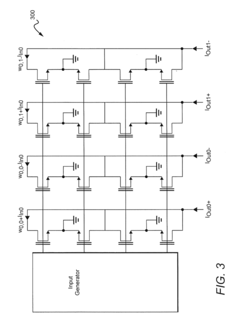

Neuromorphic chips are designed to mimic the structure and functionality of the human brain, incorporating neural networks that process information in parallel. These architectures feature specialized hardware components that enable efficient implementation of neural networks, including synaptic connections, neuron models, and memory structures. The design focuses on optimizing power consumption, processing speed, and scalability for artificial intelligence applications.

- Neuromorphic architecture design for neural networks: Neuromorphic chips are designed with architectures that mimic the structure and functionality of biological neural networks. These designs incorporate specialized hardware components that enable efficient implementation of neural network algorithms. The architecture typically includes interconnected processing elements that simulate neurons and synapses, allowing for parallel processing and reduced power consumption compared to traditional computing architectures.

- Spiking neural networks implementation in hardware: Spiking neural networks (SNNs) represent a biologically inspired approach to neural computation where information is transmitted through discrete spikes rather than continuous values. When implemented in neuromorphic chips, SNNs offer advantages in terms of energy efficiency and temporal information processing. These implementations typically use specialized circuits to model the dynamics of biological neurons, including membrane potentials, action potentials, and synaptic plasticity mechanisms.

- Energy-efficient neural network processing: Neuromorphic chips are designed to execute neural network computations with significantly lower power consumption compared to conventional processors. This energy efficiency is achieved through various techniques including analog computing elements, event-driven processing, and specialized memory architectures. These approaches reduce the energy required for neural network inference and training, making them suitable for edge computing and battery-powered devices.

- On-chip learning and adaptation mechanisms: Advanced neuromorphic chips incorporate mechanisms for on-chip learning and adaptation, allowing neural networks to be trained or fine-tuned directly on the hardware. These mechanisms implement various learning algorithms such as spike-timing-dependent plasticity (STDP), backpropagation, and reinforcement learning. On-chip learning reduces the need for external processing and enables continuous adaptation to changing environments or tasks.

- Integration of neural networks with conventional computing systems: Neuromorphic chips are designed to integrate with conventional computing systems, creating hybrid architectures that leverage the strengths of both approaches. These integrations include interfaces for data exchange, control mechanisms, and software frameworks that enable seamless operation. The hybrid systems can accelerate specific neural network operations while maintaining compatibility with existing software ecosystems and development tools.
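The membrane-potential dynamics described in the list above are often modeled in hardware with some variant of the leaky integrate-and-fire (LIF) neuron. The Python sketch below is a minimal LIF model using forward-Euler integration; the time constant, threshold, and reset values are hypothetical, and real chips differ in precision, leak implementation, and reset behavior.

```python
def simulate_lif(input_current, dt=1.0, tau_m=20.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0, r_m=1.0):
    """Leaky integrate-and-fire neuron, forward-Euler integration.

    input_current: sequence with one input value per time step (arbitrary units).
    Returns (membrane potential trace, list of time-step indices that spiked).
    """
    v = v_rest
    trace, spikes = [], []
    for t, i_in in enumerate(input_current):
        # Leak toward rest plus input drive: tau_m * dv/dt = -(v - v_rest) + r_m * I
        v += dt / tau_m * (-(v - v_rest) + r_m * i_in)
        if v >= v_thresh:    # threshold crossing emits a spike (the "action potential")
            spikes.append(t)
            v = v_reset      # hard reset after the spike
        trace.append(v)
    return trace, spikes


# A constant drive above threshold produces a regular spike train;
# a drive whose steady state (r_m * I) stays below threshold never fires.
_, strong = simulate_lif([1.5] * 100)
_, weak = simulate_lif([0.5] * 100)
print(strong)
print(weak)
```

Because the neuron only communicates when it crosses threshold, downstream synapses do work only on those spike events, which is the source of the event-driven efficiency discussed throughout this report.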

02 Spiking Neural Networks Implementation

Spiking Neural Networks (SNNs) represent a biologically inspired approach to neural computation in neuromorphic chips. Unlike traditional artificial neural networks, SNNs process information through discrete spikes or events, similar to biological neurons. This event-driven processing enables energy-efficient computation by activating only when necessary. Implementations include various neuron models, spike encoding schemes, and learning algorithms specifically designed for neuromorphic hardware.

03 Memory-Compute Integration

Neuromorphic chips integrate memory and computing elements to overcome the von Neumann bottleneck present in conventional computing architectures. This integration allows for in-memory computing where data processing occurs directly within memory units, significantly reducing energy consumption and latency. Various memory technologies, including resistive RAM, phase-change memory, and magnetic RAM, are utilized to implement synaptic weights and neural connections efficiently.

04 Learning and Adaptation Mechanisms

Neuromorphic chips incorporate on-chip learning and adaptation mechanisms that enable neural networks to modify their behavior based on input data. These mechanisms include various learning algorithms such as spike-timing-dependent plasticity (STDP), backpropagation, and reinforcement learning adapted for neuromorphic hardware. The ability to learn and adapt in real time allows these systems to continuously improve performance and respond to changing environments without requiring external training.

05 Application-Specific Neuromorphic Solutions

Neuromorphic chips are being developed for specific applications that benefit from neural network processing, including computer vision, natural language processing, autonomous systems, and edge computing. These application-specific designs optimize the neural network architecture, memory configuration, and processing elements for particular use cases. The specialization enables more efficient processing for targeted applications while maintaining low power consumption, making neuromorphic solutions ideal for deployment in resource-constrained environments.

Leading Organizations in Neuromorphic Chip Development

The neuromorphic chip market is currently in an early growth phase, characterized by significant research and development investments but limited commercial deployment. The global market size is estimated to reach $8-10 billion by 2028, growing at a CAGR of approximately 20%. From a technical maturity perspective, the field remains emergent with key players at different development stages. IBM leads with its TrueNorth and subsequent neuromorphic architectures, while Intel's Loihi chip represents another significant advancement. Companies like Syntiant and Cambricon are focusing on edge AI applications, while Samsung and SK Hynix are leveraging their semiconductor expertise to develop neuromorphic memory solutions. Academic-industry partnerships are prevalent, with institutions like Tsinghua University and Zhejiang University collaborating with commercial entities to bridge fundamental research and practical applications.

International Business Machines Corp.

Technical Solution: IBM's TrueNorth neuromorphic chip architecture represents one of the most significant advancements in neuromorphic computing. The chip contains one million digital neurons and 256 million synapses, organized into 4,096 neurosynaptic cores. Each core implements a network of 256 neurons and 65,536 synapses using an event-driven, asynchronous design that mimics biological neural systems. TrueNorth operates on an extremely low power budget (about 70 mW) while delivering 46 billion synaptic operations per second per watt, which IBM has reported as orders of magnitude more energy-efficient than running comparable networks on conventional computer architectures. IBM has also explored phase-change memory (PCM) devices as synapses that support on-chip learning through spike-timing-dependent plasticity (STDP), allowing neural networks to adapt and learn from data in real time, similar to biological systems[1][3]. IBM's neuromorphic architecture employs a non-von Neumann computing paradigm in which memory and processing are integrated, eliminating the traditional bottleneck between these components.

Strengths: Exceptional energy efficiency (70mW for 1 million neurons), scalable architecture, and real-time processing capabilities. The event-driven design eliminates power consumption when neurons are inactive. Weaknesses: Limited precision compared to traditional deep learning accelerators, programming complexity requiring specialized knowledge, and challenges in mapping conventional neural network algorithms to the neuromorphic architecture.
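The headline TrueNorth figures follow from the core organization, and the event-driven core behavior can be sketched in a few lines. The Python below first checks the arithmetic using only numbers quoted in the profile, then models one tick of a simplified core. The core model is an assumption for illustration: it uses binary crossbar connectivity with a single weight per neuron, whereas the real core selects among a small set of per-neuron weights and implements a richer neuron model.

```python
# Figures quoted in the IBM profile above.
CORES = 4096
NEURONS_PER_CORE = 256
AXONS_PER_CORE = 256      # input lines per core; each axon-to-neuron crosspoint is one synapse
POWER_W = 0.070           # ~70 mW
GSOPS_PER_W = 46          # billion synaptic operations per second per watt

neurons = CORES * NEURONS_PER_CORE                      # 1,048,576 ("one million")
synapses_per_core = AXONS_PER_CORE * NEURONS_PER_CORE   # 65,536
synapses = CORES * synapses_per_core                    # 268,435,456 ("256 million")
# Assumption: the efficiency and power figures describe the same operating point.
implied_sops = GSOPS_PER_W * 1e9 * POWER_W              # ~3.2e9 synaptic ops per second


def core_tick(crossbar, active_axons, weights, potentials, threshold):
    """One time step of a simplified, TrueNorth-like core (hypothetical model).

    crossbar[a][n] is 1 if axon a connects to neuron n (binary connectivity).
    Only axons that received a spike are visited, so work scales with activity.
    Mutates `potentials` in place and returns the indices of neurons that fired.
    """
    for a in active_axons:
        for n, connected in enumerate(crossbar[a]):
            if connected:
                potentials[n] += weights[n]
    fired = [n for n, p in enumerate(potentials) if p >= threshold]
    for n in fired:
        potentials[n] = 0    # reset neurons that spiked
    return fired


print(neurons, synapses, f"{implied_sops:.2e}")
```

The "256 million" synapse figure is 256 × 2^20, and visiting only active axons in `core_tick` is what lets an event-driven core draw little power when inputs are quiet.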

SYNTIANT CORP

Technical Solution: Syntiant has developed the Neural Decision Processor (NDP), a specialized neuromorphic chip designed specifically for edge AI applications with a focus on always-on voice and sensor processing. Their NDP architecture implements neural networks directly in silicon, creating a highly efficient hardware implementation that processes information in a fundamentally different way than traditional CPUs or GPUs. The NDP100 and NDP101 chips can run deep learning algorithms while consuming less than 150 microwatts of power, enabling voice and sensor applications that were previously impossible on battery-powered devices. Syntiant's architecture employs a memory-centric computing approach where computations occur within memory arrays, dramatically reducing data movement and power consumption. The chip architecture features a deeply pipelined dataflow design optimized for neural network inference, with specialized hardware blocks that efficiently implement convolutional, fully-connected, and recurrent neural network layers[2]. Their latest generation chips support multi-modal fusion of audio, vision, and motion sensing, allowing for more sophisticated AI applications at the extreme edge.

Strengths: Ultra-low power consumption (sub-milliwatt operation), specialized for always-on applications, and optimized for specific neural network workloads like keyword spotting and sensor fusion. Weaknesses: Limited flexibility compared to general-purpose neural accelerators, focused primarily on specific application domains rather than general AI workloads, and constrained by the fixed neural network architectures that can be efficiently mapped to the hardware.
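To see why sub-milliwatt operation matters for always-on devices, a rough battery-life estimate helps. The Python sketch below assumes a CR2032-class coin cell of about 225 mAh at a nominal 3 V (an assumption, not a figure from the source) and treats the quoted 150 microwatt ceiling as the only load, ignoring microphones, host processors, self-discharge, and regulator losses.

```python
def battery_life_days(capacity_mah: float, voltage_v: float, avg_power_w: float) -> float:
    """Idealized runtime: stored energy divided by average power draw.

    Ignores self-discharge, converter losses, and capacity fade under load.
    """
    energy_j = capacity_mah / 1000.0 * 3600.0 * voltage_v  # mAh -> Ah -> coulombs, times volts -> joules
    seconds = energy_j / avg_power_w
    return seconds / 86400.0                                # seconds -> days


# Assumption: CR2032-class coin cell, ~225 mAh at a nominal 3 V.
CELL_MAH, CELL_V = 225.0, 3.0

for label, power_w in (("150 uW", 150e-6), ("1 mW", 1e-3)):
    print(label, round(battery_life_days(CELL_MAH, CELL_V, power_w), 1), "days")
```

Under these assumptions a 150 µW load runs for about 187 days (roughly six months), versus about four weeks at 1 mW, which illustrates why the profile emphasizes sub-milliwatt operation for battery-powered keyword spotting.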

Key Innovations in Spiking Neural Network Technologies

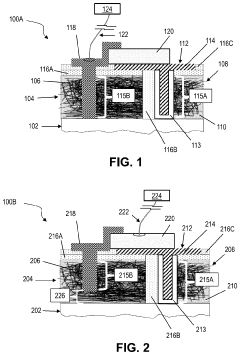

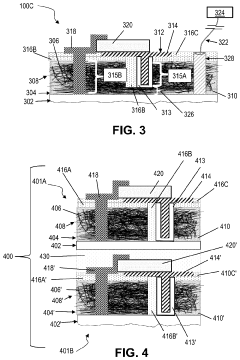

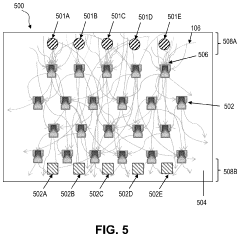

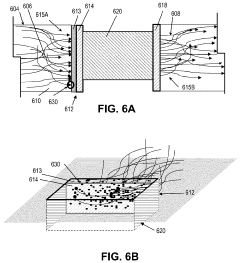

Neural network hardware device and system

Patent Pending · US20230422516A1

Innovation

- A neural network device comprising a mesh layer of randomly dispersed conductive nano-strands with memristor devices and modulating devices that enable electrical communication between a large number of individual nano-strands, mimicking dendritic communication and allowing for non-deterministic signal propagation, exceeding the interconnect capabilities of conventional lithographic methods.

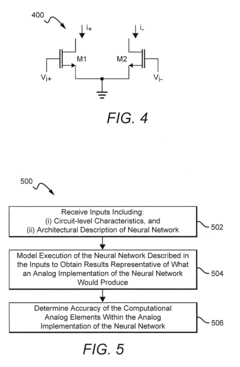

Systems And Methods For Determining Circuit-Level Effects On Classifier Accuracy

Patent Active · US20190065962A1

Innovation

- The development of neuromorphic chips that simulate 'silicon' neurons, processing information in parallel with bursts of electric current at non-uniform intervals, and the use of systems and methods to model the effects of circuit-level characteristics on neural networks, such as thermal noise and weight inaccuracies, to optimize their performance.
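The idea of modeling circuit-level effects on classifier accuracy can be illustrated with a Monte Carlo experiment. The Python sketch below is not the patented method; it assumes a simple linear threshold classifier and models weight inaccuracy as multiplicative Gaussian noise, then averages accuracy over many noisy draws. The dataset, noise model, and function names are hypothetical.

```python
import random


def accuracy(weights, bias, samples):
    """Fraction of (features, label) pairs a linear threshold classifier gets right."""
    correct = 0
    for x, label in samples:
        score = sum(w * xi for w, xi in zip(weights, x)) + bias
        correct += int((score >= 0) == label)
    return correct / len(samples)


def perturb(weights, rel_sigma, rng):
    """Model device-level weight inaccuracy as multiplicative Gaussian noise."""
    return [w * (1.0 + rng.gauss(0.0, rel_sigma)) for w in weights]


def accuracy_under_noise(weights, bias, samples, rel_sigma, trials=200, seed=0):
    """Average accuracy over many random draws of perturbed weights (Monte Carlo)."""
    rng = random.Random(seed)
    total = sum(accuracy(perturb(weights, rel_sigma, rng), bias, samples)
                for _ in range(trials))
    return total / trials


# Hypothetical 2-D task: label is True when x0 + x1 >= 0, so weights [1, 1] are ideal.
data_rng = random.Random(42)
points = [(data_rng.uniform(-1, 1), data_rng.uniform(-1, 1)) for _ in range(500)]
samples = [(p, p[0] + p[1] >= 0) for p in points]

for sigma in (0.0, 0.1, 0.3):
    print(sigma, accuracy_under_noise([1.0, 1.0], 0.0, samples, sigma))
```

Sweeping `sigma` shows how much device variation a given network can tolerate before accuracy degrades; a fuller model would add thermal noise on activations and quantization of the stored weights.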

Energy Efficiency Considerations in Neuromorphic Computing

Energy efficiency represents a critical consideration in neuromorphic computing, particularly as these systems aim to emulate the brain's remarkable computational efficiency. Traditional von Neumann architectures suffer from the "memory wall" problem, where energy consumption is dominated by data movement between processing and memory units. Neuromorphic chips address this fundamental limitation through co-locating memory and processing elements, significantly reducing energy requirements for computation.

The energy advantage of neuromorphic systems stems from their event-driven processing paradigm. Unlike conventional systems that operate on fixed clock cycles, neuromorphic neural networks process information only when necessary, triggered by incoming spikes or events. This sparse activation pattern dramatically reduces power consumption during periods of low activity, mirroring the brain's energy-efficient information processing strategy.
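The difference between clock-driven and event-driven processing can be made concrete by counting synaptic updates. The Python sketch below compares a design that evaluates every synapse on every tick with one that does work only when a presynaptic neuron spikes; the network size and 2% activity level are hypothetical illustration values.

```python
def clock_driven_updates(n_pre, n_post, n_steps):
    """Every synapse is evaluated on every tick, whether or not its input spiked."""
    return n_pre * n_post * n_steps


def event_driven_updates(spike_raster, fan_out):
    """Work happens only when a presynaptic neuron spikes: one update per outgoing synapse.

    spike_raster: list of time steps, each a list of presynaptic indices that spiked.
    fan_out: number of postsynaptic targets per presynaptic neuron.
    """
    return sum(len(active) * fan_out for active in spike_raster)


# Hypothetical numbers: 1,000 inputs fully connected to 1,000 neurons,
# 100 time steps, 20 of the 1,000 inputs (2%) spiking per step.
N_PRE, N_POST, STEPS = 1000, 1000, 100
raster = [list(range(20)) for _ in range(STEPS)]

dense = clock_driven_updates(N_PRE, N_POST, STEPS)     # 100,000,000 updates
sparse = event_driven_updates(raster, fan_out=N_POST)  # 2,000,000 updates
print(dense / sparse)                                  # 50.0
```

For a fully connected layer the saving is simply the inverse of the activity level, so the advantage shrinks as spiking becomes denser.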

Published benchmarks report substantial energy efficiency gains in neuromorphic implementations. IBM reports TrueNorth at about 46 billion synaptic operations per second per watt in typical real-time operation, with figures up to approximately 400 billion cited for more favorable workloads, while Intel has reported Loihi to be up to 1,000 times more energy-efficient than conventional GPUs for certain neural network workloads. These improvements matter most for edge computing applications, where power constraints are severe.

The choice of neural network architecture significantly affects energy consumption in neuromorphic systems. Spiking Neural Networks (SNNs) can offer better energy efficiency than traditional Artificial Neural Networks (ANNs) when implemented on neuromorphic hardware, because sparse spikes replace dense multiply-accumulate operations with occasional accumulations and tolerate reduced precision. Research indicates that, on a range of benchmarks, SNNs can achieve accuracy comparable to ANNs while consuming only a fraction of the energy through techniques such as sparse temporal coding, while local learning rules such as spike-timing-dependent plasticity allow training to happen on-chip.
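A common back-of-the-envelope comparison counts operations and multiplies by an assumed energy per operation. The Python sketch below assumes about 4.6 pJ per 32-bit floating-point multiply-accumulate and 0.9 pJ per 32-bit floating-point add, widely cited estimates for 45 nm CMOS; these constants, and the simplification that each input spike costs one accumulate per connection, are assumptions, and the model ignores memory-access energy, which often dominates in practice.

```python
# Assumed per-operation energies (commonly cited 45 nm CMOS estimates), in picojoules.
E_MAC_PJ = 4.6  # 32-bit float multiply-accumulate
E_AC_PJ = 0.9   # 32-bit float accumulate (add only)


def ann_energy_pj(n_connections: int) -> float:
    """Dense ANN layer: one MAC per connection per inference."""
    return n_connections * E_MAC_PJ


def snn_energy_pj(n_connections: int, avg_spikes_per_input: float) -> float:
    """SNN layer: one accumulate per connection each time its input neuron spikes
    during the inference window."""
    return n_connections * avg_spikes_per_input * E_AC_PJ


# SNN compute energy beats the ANN only while inputs spike fewer times than this.
BREAK_EVEN_SPIKES = E_MAC_PJ / E_AC_PJ

connections = 1_000_000
for rate in (0.5, 2.0, 8.0):
    ratio = ann_energy_pj(connections) / snn_energy_pj(connections, rate)
    print(f"{rate} spikes/input: ANN/SNN energy ratio = {ratio:.2f}")
print(f"break-even at ~{BREAK_EVEN_SPIKES:.1f} spikes per input per inference")
```

Under these assumptions the SNN advantage holds only while each input neuron fires fewer than about five times per inference, which is why sparse coding schemes matter as much as the hardware itself.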

Materials science advancements further enhance energy efficiency through novel memory technologies. Resistive RAM (ReRAM), phase-change memory (PCM), and magnetic RAM (MRAM) enable low-power, non-volatile storage of synaptic weights directly within computational units. These emerging non-volatile memory technologies reduce static power consumption and enable persistent storage of neural network parameters without continuous power supply.
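Storing weights as device conductances lets a memory array compute a matrix-vector product in place: Ohm's law gives each device current as voltage times conductance, and Kirchhoff's current law sums those currents along each column. The Python sketch below models an idealized crossbar and uses a pair of conductances per weight to represent signed values; the conductance scale `g_max` is a hypothetical value, and the model ignores wire resistance, sneak paths, device nonlinearity, and the ADCs real arrays need.

```python
def crossbar_currents(voltages, conductances):
    """Column currents of an ideal resistive crossbar.

    voltages[i]: read voltage applied to row i (volts).
    conductances[i][j]: programmed conductance at row i, column j (siemens).
    Each device contributes V * G (Ohm's law); each column sums them (Kirchhoff).
    """
    n_cols = len(conductances[0])
    return [sum(v * row[j] for v, row in zip(voltages, conductances))
            for j in range(n_cols)]


def signed_weights_to_pair(weights, g_max):
    """Map signed weights in [-1, 1] to (G+, G-) conductances.

    Conductance cannot be negative, so each weight uses a differential pair.
    """
    g_pos = [[max(w, 0.0) * g_max for w in row] for row in weights]
    g_neg = [[max(-w, 0.0) * g_max for w in row] for row in weights]
    return g_pos, g_neg


def signed_mvm(voltages, weights, g_max=1e-4):
    """Compute sum_i V_i * W_ij as the difference of two column currents."""
    g_pos, g_neg = signed_weights_to_pair(weights, g_max)
    i_pos = crossbar_currents(voltages, g_pos)
    i_neg = crossbar_currents(voltages, g_neg)
    return [(p - n) / g_max for p, n in zip(i_pos, i_neg)]  # rescale to weight units


weights = [[1.0, -0.5],
           [0.25, 1.0]]
print(signed_mvm([0.1, 0.3], weights))  # approximately [0.175, 0.25]
```

Because the multiply-accumulate happens where the weights are stored, no weight data crosses a memory bus, which is the energy argument made in the paragraph above.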

Looking forward, the energy efficiency frontier in neuromorphic computing involves optimizing the entire hardware-software stack. This includes developing specialized training algorithms that exploit sparse activations, implementing dynamic power management techniques that adapt to computational demands, and exploring novel materials with ultra-low switching energies. As these technologies mature, neuromorphic systems promise to enable sophisticated AI capabilities in severely power-constrained environments, from autonomous sensors to implantable medical devices.

The energy advantage of neuromorphic systems stems from their event-driven processing paradigm. Unlike conventional systems that operate on fixed clock cycles, neuromorphic neural networks process information only when necessary, triggered by incoming spikes or events. This sparse activation pattern dramatically reduces power consumption during periods of low activity, mirroring the brain's energy-efficient information processing strategy.

Recent benchmarks demonstrate impressive energy efficiency gains in neuromorphic implementations. IBM's TrueNorth architecture achieves approximately 400 billion synaptic operations per second per watt, while Intel's Loihi chip demonstrates 1,000 times better energy efficiency than conventional GPUs for certain neural network workloads. These improvements become particularly significant for edge computing applications where power constraints are severe.

The choice of neural network architecture significantly affects energy consumption in neuromorphic systems. Spiking Neural Networks (SNNs) can offer better energy efficiency than traditional Artificial Neural Networks (ANNs) when run on neuromorphic hardware. This advantage stems from sparse, event-based coding and lower precision requirements: a neuron communicates only when it fires, and because a spike is binary, each synaptic multiply-accumulate reduces to a simple addition of the weight. Studies have reported that SNNs can approach ANN accuracy on some tasks while consuming only a fraction of the energy, using techniques such as temporal coding and learning rules like spike timing-dependent plasticity (STDP).
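
The accumulate-only behavior described above can be seen in a leaky integrate-and-fire (LIF) neuron, a widely used SNN neuron model. In this minimal discrete-time Python sketch, each binary input spike adds its synaptic weight to the membrane potential, so synaptic input needs only additions. The threshold, leak factor, and reset-to-zero behavior are illustrative assumptions; the sketch does not model any particular chip, temporal coding scheme, or STDP learning.

```python
def simulate_lif(input_spikes, weights, threshold=1.0, leak=0.9):
    """Simulate one discrete-time leaky integrate-and-fire (LIF) neuron.

    input_spikes: one entry per time step, each a list of 0/1 flags per input.
    weights: one synaptic weight per input.
    Returns the time steps at which the neuron fired.
    """
    v = 0.0  # membrane potential
    fired_at = []
    for t, spikes in enumerate(input_spikes):
        # The leak is a per-neuron operation applied once per step,
        # independent of how many synapses the neuron has.
        v *= leak
        # Synaptic input: a binary spike contributes a plain addition of its
        # weight, so no multiply-accumulate is needed per synapse.
        for s, w in zip(spikes, weights):
            if s:
                v += w
        if v >= threshold:
            fired_at.append(t)
            v = 0.0  # reset after firing
    return fired_at


if __name__ == "__main__":
    steps = [[1, 0], [1, 1], [0, 0], [0, 1]]
    print(simulate_lif(steps, weights=[0.6, 0.5]))  # prints [1]
```

At step 1 the decayed potential (0.54) plus both weights (1.1) crosses the threshold, so the neuron fires once; with no input spikes, no synaptic work is done at all.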

Materials science advancements further enhance energy efficiency through novel memory technologies. Resistive RAM (ReRAM), phase-change memory (PCM), and magnetoresistive RAM (MRAM) enable low-power storage of synaptic weights directly within or beside the computational units, reducing costly data movement between memory and processing. Because these emerging memories are non-volatile, they retain neural network parameters without a continuous power supply, which reduces static power consumption.
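
Storing weights inside the computational fabric is often realized as an analog crossbar: each ReRAM or PCM device's conductance encodes a weight, row voltages encode the inputs, and the current collected on each column is a weighted sum of those inputs. The Python sketch below computes those column currents for an idealized crossbar. It assumes perfectly linear devices and ignores wire resistance, device variability, and the peripheral converters that real arrays require; the conductance and voltage values are hypothetical.

```python
def crossbar_currents(conductances, voltages):
    """Column currents of an idealized resistive crossbar.

    conductances[i][j]: conductance (siemens) of the device at row i, column j,
        storing the synaptic weight from input i to output j.
    voltages[i]: read voltage (volts) applied to row i.
    Returns one current (amperes) per column.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need one voltage per crossbar row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            # Ohm's law: each device passes current I = G * V.
            # Kirchhoff's current law: currents on a shared column wire add.
            currents[j] += conductances[i][j] * v
    return currents


if __name__ == "__main__":
    g = [[1e-6, 2e-6],
         [3e-6, 4e-6]]  # hypothetical conductances, in siemens
    print(crossbar_currents(g, [0.1, 0.2]))  # approximately [7e-07, 1e-06]
```

Each column computes a full dot product in a single read, which is why keeping weights in the array avoids shuttling them to a separate processor.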

Looking forward, the energy efficiency frontier in neuromorphic computing involves optimizing the entire hardware-software stack. This includes developing specialized training algorithms that exploit sparse activations, implementing dynamic power management techniques that adapt to computational demands, and exploring novel materials with ultra-low switching energies. As these technologies mature, neuromorphic systems promise to enable sophisticated AI capabilities in severely power-constrained environments, from autonomous sensors to implantable medical devices.

Benchmarking Frameworks for Neuromorphic Performance Evaluation

Evaluating the performance of neuromorphic chips presents unique challenges due to their fundamentally different architecture compared to traditional computing systems. Benchmarking frameworks specifically designed for neuromorphic hardware have emerged as essential tools for standardized assessment and comparison across different implementations.

The SNN-TB (Spiking Neural Network Toolbox Benchmark) represents one of the pioneering frameworks, offering a comprehensive suite of tests focused on energy efficiency, spike processing capabilities, and learning algorithm performance. This framework enables researchers to evaluate neuromorphic chips across multiple dimensions, including power consumption per spike, throughput, and accuracy in pattern recognition tasks.
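
Metrics such as power per spike, throughput, and accuracy can be derived from a handful of raw measurements taken during a benchmark run. The following Python sketch shows one way to compute them. The `RunMeasurement` fields and the `summarize` function are hypothetical and do not reflect SNN-TB's actual interface. One design choice worth stating explicitly in any comparison is whether the measured energy includes idle or static power, since that choice alone can change per-spike figures considerably.

```python
from dataclasses import dataclass


@dataclass
class RunMeasurement:
    """Raw measurements from one benchmark run (hypothetical schema)."""
    energy_joules: float  # energy measured over the run
    wall_time_s: float    # elapsed time of the run, in seconds
    total_spikes: int     # spikes emitted by all neurons during the run
    n_samples: int        # input samples processed
    n_correct: int        # samples classified correctly


def summarize(m: RunMeasurement) -> dict:
    """Derive per-spike energy, per-sample energy, throughput, and accuracy."""
    if m.total_spikes <= 0 or m.n_samples <= 0 or m.wall_time_s <= 0:
        raise ValueError("run must contain spikes, samples, and nonzero duration")
    return {
        "energy_per_spike_j": m.energy_joules / m.total_spikes,
        "energy_per_sample_j": m.energy_joules / m.n_samples,
        "throughput_samples_per_s": m.n_samples / m.wall_time_s,
        "accuracy": m.n_correct / m.n_samples,
    }


if __name__ == "__main__":
    # Illustrative numbers only, not measured data.
    run = RunMeasurement(energy_joules=0.5, wall_time_s=2.0,
                         total_spikes=1_000_000, n_samples=1000, n_correct=950)
    print(summarize(run))
```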

Nengo Benchmark is another significant framework that emphasizes cognitive task performance. It provides standardized implementations of various cognitive models, allowing for direct comparison between different neuromorphic platforms. The framework includes tasks ranging from simple sensorimotor functions to complex working memory and decision-making processes, offering insights into how well neuromorphic systems can replicate brain-like functionality.

For industrial applications, the BrainScaleS Performance Evaluation Suite focuses on scalability and real-time processing capabilities. This framework tests how neuromorphic systems handle increasing network sizes and complexity, providing valuable data on the practical limitations of current implementations. It is particularly suited to evaluating the speed-accuracy tradeoffs that are central to neuromorphic computing.

The TrueNorth Neurosynaptic System Benchmark, developed by IBM, specializes in assessing the performance of convolutional neural network implementations on neuromorphic hardware. This framework has become particularly important as more neuromorphic chips incorporate capabilities for deep learning applications alongside their spiking neural network foundations.

More recently, the Neuromorphic Engineering Benchmark Suite (NEBS) has emerged as a comprehensive framework that attempts to standardize performance metrics across the field. NEBS incorporates both application-specific benchmarks and fundamental operations tests, allowing for multi-level evaluation of neuromorphic systems. Its modular design enables researchers to focus on specific aspects of performance relevant to their applications.

Cross-platform benchmarking remains challenging due to the diverse architectures and programming paradigms employed by different neuromorphic chips. The Neuromorphic Evaluation Toolkit (NET) addresses this by providing abstraction layers that allow the same benchmark tests to run across multiple platforms, facilitating more direct comparisons between systems like Intel's Loihi, IBM's TrueNorth, and SpiNNaker.
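
Abstraction layers of this kind typically follow an adapter pattern: a benchmark is written once against a common interface, and each platform supplies a thin adapter that maps it onto its own toolchain. The Python sketch below illustrates the idea with two toy backends standing in for real platforms; the `Backend` interface, both backends, and `run_benchmark` are hypothetical and are not NET's actual API. Real adapters would wrap each chip's software stack and would also have to reconcile differences in neuron models, weight precision, and timing.

```python
from abc import ABC, abstractmethod


class Backend(ABC):
    """Hypothetical adapter interface: one subclass per chip or simulator."""

    name = "abstract"

    @abstractmethod
    def run(self, spike_input):
        """Run the benchmark network; return one output spike count per channel."""


class ReferenceBackend(Backend):
    name = "reference"

    def run(self, spike_input):
        # Toy stand-in for a software simulator: count spikes per input channel.
        return [sum(channel) for channel in spike_input]


class LossyBackend(Backend):
    name = "lossy"

    def run(self, spike_input):
        # Toy stand-in for a platform whose output diverges from the reference:
        # it drops one spike per channel. Purely illustrative.
        return [max(sum(channel) - 1, 0) for channel in spike_input]


def run_benchmark(backends, spike_input, expected):
    """Drive every backend with the same input and compare against `expected`."""
    return {b.name: b.run(spike_input) == expected for b in backends}


if __name__ == "__main__":
    spikes = [[1, 0, 1, 1], [0, 1, 0, 0]]  # two input channels, four time steps
    print(run_benchmark([ReferenceBackend(), LossyBackend()], spikes, [3, 1]))
    # prints {'reference': True, 'lossy': False}
```

Because the test logic lives only in `run_benchmark`, adding a platform means writing one new adapter rather than re-implementing every benchmark.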
