What Technical Mechanisms Boost Neuromorphic Computing Efficiency

OCT 27, 2025 | 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. The field has evolved significantly since its conceptual inception in the late 1980s, when Carver Mead first proposed using analog circuits to mimic neurobiological architectures. Its evolution has been marked by several key milestones, from early analog VLSI implementations to today's sophisticated hybrid systems combining analog and digital components.

The fundamental premise of neuromorphic computing lies in its departure from traditional von Neumann architecture, which separates processing and memory units. Instead, neuromorphic systems integrate computation and memory, similar to biological neural networks, enabling parallel processing and potentially dramatic improvements in energy efficiency for specific computational tasks.

From the late 2000s through the 2010s, research efforts intensified, with programs such as DARPA's SyNAPSE (which produced IBM's TrueNorth chip, unveiled in 2014) and the European Human Brain Project (launched in 2013) driving significant advancements. These initiatives helped establish neuromorphic computing as a credible alternative to conventional computing paradigms, particularly for applications involving pattern recognition, sensory processing, and machine learning tasks that benefit from neural network architectures.

The technical evolution has been characterized by progressive improvements in several critical areas: energy efficiency, processing speed, scalability, and adaptability. Modern neuromorphic systems aim to achieve computational densities and energy efficiencies that approach those of biological systems, which operate on remarkably little power compared to traditional computing hardware; the human brain, for comparison, runs on roughly 20 watts.

The primary objectives of neuromorphic computing research and development center around creating systems that can process information with the efficiency and adaptability of biological neural networks while overcoming the physical and architectural limitations of conventional computing. Specific goals include developing hardware that can perform complex cognitive tasks with minimal energy consumption, creating systems capable of unsupervised learning and adaptation, and establishing platforms that can integrate seamlessly with biological systems for applications in neuroprosthetics and brain-computer interfaces.

Recent technological trends point toward increasing integration of neuromorphic principles with emerging memory technologies such as memristors, phase-change memory, and spintronic devices. These technologies offer promising pathways to overcome current limitations in synaptic density, learning capabilities, and energy efficiency. The convergence of neuromorphic architectures with advances in materials science and nanotechnology is expected to accelerate progress toward truly brain-inspired computing systems capable of unprecedented computational efficiency for specific applications.

Market Demand Analysis for Brain-Inspired Computing

The neuromorphic computing market is growing, driven by increasing demand for energy-efficient AI solutions across multiple industries. Market estimates vary; one projection puts the global neuromorphic computing market at approximately $8.9 billion by 2025, with a compound annual growth rate exceeding 20% between 2020 and 2025. This growth is attributed primarily to the limitations of traditional computing architectures, which struggle with the computational demands of modern AI applications.

Healthcare represents one of the most promising sectors for brain-inspired computing adoption, with applications ranging from real-time patient monitoring systems to advanced diagnostic tools. The market potential in this sector alone is estimated at $2.3 billion by 2025, as medical facilities seek more efficient ways to process vast amounts of patient data while reducing energy consumption.

Autonomous vehicles and advanced driver-assistance systems constitute another significant market segment. These applications require real-time processing of sensory data with minimal latency and power consumption – precisely the strengths of neuromorphic systems. Industry analysts project this segment could represent approximately $1.7 billion of the neuromorphic computing market by 2025.

Edge computing applications are driving substantial demand growth as IoT deployments expand globally. The need for energy-efficient processing at the network edge aligns closely with neuromorphic computing's low-power characteristics. Market research indicates edge AI applications could account for nearly 35% of the total neuromorphic computing market within the next five years.

Defense and security sectors are investing heavily in neuromorphic technologies for applications including threat detection, surveillance, and signal intelligence. These applications benefit from the pattern recognition capabilities and energy efficiency of brain-inspired computing systems, with projected market value approaching $1.2 billion by 2025.

Consumer electronics manufacturers are exploring neuromorphic solutions for next-generation smartphones, wearables, and smart home devices. The ability to perform complex AI tasks with minimal battery drain represents a significant competitive advantage in these markets, potentially creating a $1.5 billion opportunity by mid-decade.

Industrial automation represents another growth vector, with manufacturers seeking more efficient ways to implement machine vision, predictive maintenance, and quality control systems. The industrial segment is expected to grow at approximately 25% annually through 2025, outpacing the overall market growth rate.

Despite this promising outlook, market adoption faces challenges including limited developer familiarity with neuromorphic programming paradigms, integration complexities with existing systems, and the need for specialized hardware. These barriers are gradually diminishing as the ecosystem matures and more accessible development tools emerge.

Current Neuromorphic Technologies and Bottlenecks

Neuromorphic computing currently exists in various technological implementations, each with distinct approaches to mimicking brain functionality. The most prominent technologies include digital neuromorphic systems, analog neuromorphic systems, and hybrid approaches. Digital implementations like IBM's TrueNorth and Intel's Loihi utilize traditional CMOS technology with specialized architectures to simulate neural networks. These systems excel in precision and programmability but face challenges in energy efficiency when scaling to brain-like dimensions.
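Digital neuromorphic chips such as TrueNorth and Loihi build on variants of the integrate-and-fire neuron. As a rough illustration, the sketch below assumes a simple discrete-time leaky integrate-and-fire (LIF) model with an illustrative leak factor and threshold (neither taken from either chip), updated once per clock tick:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LIFNeuron:
    """Discrete-time leaky integrate-and-fire neuron with illustrative parameters."""
    leak: float = 0.9        # fraction of membrane potential kept each tick (assumed)
    threshold: float = 1.0   # firing threshold (assumed)
    v: float = 0.0           # membrane potential

    def step(self, input_current: float) -> bool:
        # Leak first, then integrate this tick's weighted input.
        self.v = self.leak * self.v + input_current
        if self.v >= self.threshold:
            self.v = 0.0     # reset to rest after a spike
            return True
        return False


def run(neuron: LIFNeuron, inputs: List[float]) -> List[int]:
    """Return the tick indices at which the neuron spiked."""
    return [t for t, current in enumerate(inputs) if neuron.step(current)]


print(run(LIFNeuron(), [0.3] * 10))  # [3, 7]
```

With a constant input of 0.3 per tick, the potential climbs for four ticks, crosses the threshold, resets, and repeats. Real chips use fixed-point arithmetic and richer neuron dynamics.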

Analog neuromorphic systems, exemplified by memristor-based architectures and spintronic devices, more closely mimic biological neural processes through physical phenomena. These implementations offer superior energy efficiency and density but struggle with manufacturing consistency, device variability, and limited precision. The inherent stochasticity of analog components presents both advantages for certain algorithms and challenges for deterministic computation.
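To illustrate how device variability degrades analog computation, the sketch below assumes each stored weight is realized with multiplicative log-normal error of spread `sigma`. This is a simplified, hypothetical variability model, not data for any specific device. The sketch then measures the resulting relative error of a 64-element dot product:

```python
import math
import random
from typing import List


def noisy_dot(weights: List[float], x: List[float], sigma: float, rng: random.Random) -> float:
    """Dot product computed by devices whose stored weights deviate from target.

    Assumption: each device realizes w * exp(N(0, sigma)), i.e. multiplicative
    log-normal variation, a simplified stand-in for device-to-device spread.
    """
    return sum(w * math.exp(rng.gauss(0.0, sigma)) * xi for w, xi in zip(weights, x))


def mean_relative_error(weights: List[float], x: List[float], sigma: float,
                        trials: int = 1000, seed: int = 0) -> float:
    """Average |noisy - ideal| / |ideal| over many independently programmed arrays."""
    rng = random.Random(seed)
    ideal = sum(w * xi for w, xi in zip(weights, x))
    total = sum(abs(noisy_dot(weights, x, sigma, rng) - ideal) for _ in range(trials))
    return total / (trials * abs(ideal))


weights, inputs = [0.5] * 64, [1.0] * 64
for sigma in (0.0, 0.05, 0.2):
    print(sigma, round(mean_relative_error(weights, inputs, sigma), 4))
```

Larger spread produces larger dot-product error, which is one reason analog arrays often need calibration, redundancy, or noise-tolerant training.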

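Hybrid neuromorphic systems attempt to leverage the strengths of both approaches, combining digital control and communication logic with analog computing elements. BrainScaleS is a notable example: it runs analog neuron and synapse circuits with digital spike communication. SpiNNaker, often mentioned alongside it, is by contrast a fully digital many-core platform. Such mixed-signal designs demonstrate promising performance but face integration challenges between disparate technologies.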

A significant bottleneck across neuromorphic implementations is memory-processor communication bandwidth, the same constraint known in conventional machines as the "von Neumann bottleneck." Although neuromorphic architectures co-locate memory and processing by design, practical implementations still struggle with efficient data movement, particularly in large-scale systems that require off-chip communication.
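A back-of-the-envelope model shows why off-chip traffic dominates. The per-operation energies below are illustrative placeholders chosen only to reflect the usual ordering: arithmetic is cheapest, local memory reads cost more, and off-chip transfers cost far more. They are assumptions, not measurements of any neuromorphic chip:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnergyModel:
    """Per-operation energies in picojoules. Illustrative placeholders, not measurements."""
    mac_pj: float = 1.0             # one multiply-accumulate
    local_read_pj: float = 5.0      # weight fetched from memory beside the compute unit
    offchip_read_pj: float = 500.0  # weight fetched across an off-chip link


def layer_energy_pj(n_macs: int, offchip_fraction: float,
                    model: EnergyModel = EnergyModel()) -> float:
    """Energy of a layer in which every MAC fetches one weight.

    offchip_fraction is the share of those fetches that must leave the chip.
    """
    if not 0.0 <= offchip_fraction <= 1.0:
        raise ValueError("offchip_fraction must be in [0, 1]")
    fetch_pj = (offchip_fraction * model.offchip_read_pj
                + (1.0 - offchip_fraction) * model.local_read_pj)
    return n_macs * (model.mac_pj + fetch_pj)


on_chip = layer_energy_pj(1_000_000, 0.0)
spilled = layer_energy_pj(1_000_000, 0.1)
print(spilled / on_chip)
```

With these assumed numbers, sending just 10% of weight fetches off-chip makes the layer about nine times more expensive than keeping every weight local. That is why large systems that must spill state off-chip lose much of the co-location advantage.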

Power consumption remains a critical limitation, with even the most efficient neuromorphic systems consuming orders of magnitude more energy per operation than the human brain. This efficiency gap stems from fundamental differences in signaling mechanisms and the thermodynamic limits of electronic versus biological computation.

Scaling neuromorphic systems presents additional challenges in interconnectivity. The brain's dense three-dimensional connectivity cannot be easily replicated in two-dimensional silicon substrates, leading to routing congestion and communication bottlenecks as system size increases. Current technologies like silicon interposers and through-silicon vias offer partial solutions but remain insufficient for brain-scale connectivity.
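The routing cost of a flat layout can be sketched for a 2D mesh of cores, such as the mesh TrueNorth uses. The sketch assumes uniformly random spike destinations and dimension-order (XY) routing, both simplifications. Under those assumptions, the average hop count per spike grows with the mesh side length:

```python
from itertools import product


def mean_hops(k: int) -> float:
    """Mean Manhattan (XY-routing) distance between two uniformly random cores on a k x k mesh."""
    cores = list(product(range(k), repeat=2))
    total = sum(abs(ax - bx) + abs(ay - by) for ax, ay in cores for bx, by in cores)
    return total / len(cores) ** 2


def mean_hops_closed_form(k: int) -> float:
    # Each axis contributes (k^2 - 1) / (3k) on average, so the total grows linearly in k.
    return 2 * (k * k - 1) / (3 * k)


for k in (4, 16, 64):
    print(k * k, "cores:", round(mean_hops_closed_form(k), 2), "hops on average")
```

Going from a 4 × 4 mesh (16 cores) to a 64 × 64 mesh (4,096 cores) raises the mean path from 2.5 to roughly 42.7 hops under these assumptions, so each spike consumes more link energy and bandwidth as the system grows.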

Software and programming models represent another significant bottleneck. The lack of standardized programming frameworks and development tools hampers widespread adoption and innovation. Current neuromorphic systems often require specialized knowledge across multiple disciplines, creating a steep learning curve for developers and researchers.

Finally, the gap between theoretical models of neural computation and practical hardware implementations remains substantial. Many neuromorphic systems implement simplified neuron models that capture only a fraction of biological neurons' computational capabilities, limiting their potential for advanced cognitive functions and adaptability.

Mainstream Efficiency-Boosting Mechanisms

01 Energy-efficient neuromorphic hardware architectures

Specialized hardware architectures designed specifically for neuromorphic computing can significantly improve energy efficiency. These designs often incorporate novel circuit configurations, memory-processing integration, and optimized signal processing pathways that mimic neural networks while minimizing power consumption. Such architectures enable more efficient implementation of neural network algorithms by reducing the energy overhead associated with data movement and computation.

- Energy-efficient neuromorphic hardware architectures: Specialized hardware architectures designed specifically for neuromorphic computing can significantly improve energy efficiency. These designs often incorporate novel circuit configurations, memory-processing integration, and optimized signal pathways that mimic neural networks while minimizing power consumption. Such architectures enable more efficient processing of neural network operations by reducing data movement and leveraging parallel processing capabilities inherent to brain-inspired computing.

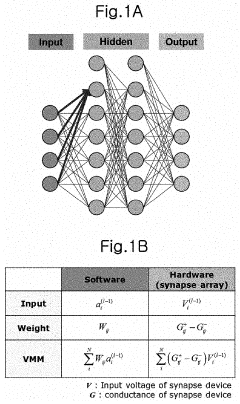

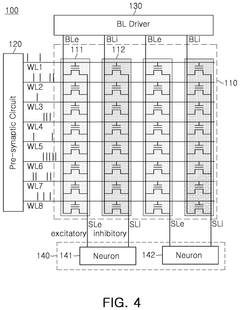

- Memristor-based neuromorphic systems: Memristors serve as key components in energy-efficient neuromorphic computing by emulating synaptic behavior with low power consumption. These non-volatile memory devices can simultaneously store and process information, enabling in-memory computing that reduces the energy costs associated with data transfer between memory and processing units. Memristor-based systems offer advantages in weight storage density, analog computation, and power efficiency for neural network implementations.

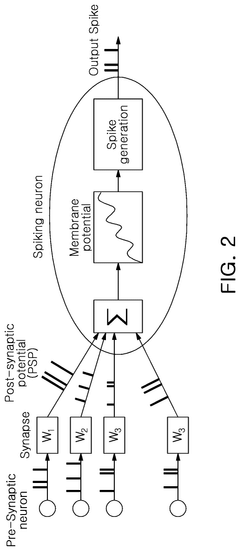

- Spike-based processing techniques: Spike-based processing techniques improve neuromorphic computing efficiency by transmitting information only when necessary through discrete events (spikes) rather than continuous signals. This event-driven approach significantly reduces power consumption while maintaining computational capabilities. Spiking Neural Networks (SNNs) implement temporal coding schemes that enable efficient information processing with minimal energy expenditure, closely mimicking the brain's natural communication mechanisms.

- Optimization algorithms for neuromorphic systems: Advanced optimization algorithms specifically designed for neuromorphic architectures can substantially improve computing efficiency. These algorithms focus on reducing computational complexity, optimizing weight distribution, and minimizing energy consumption during both training and inference phases. By implementing specialized learning rules and network pruning techniques, these approaches enable more efficient resource utilization while maintaining high performance in neural network operations.

- 3D integration and packaging for neuromorphic chips: Three-dimensional integration and advanced packaging technologies enhance neuromorphic computing efficiency by reducing interconnect distances, improving thermal management, and enabling higher density neural implementations. These approaches allow for more efficient signal transmission between neural components while minimizing energy losses. The vertical stacking of processing elements and memory units creates more compact designs with improved performance-per-watt metrics and reduced communication overhead.
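The in-memory computing principle behind memristor synapses can be sketched with an idealized crossbar. This model assumes perfectly linear devices and ignores wire resistance, sneak-path currents, and converter overheads. The differential-pair encoding of signed weights (G+ and G-) is one common choice, assumed here rather than taken from the text:

```python
from typing import List, Tuple

Matrix = List[List[float]]


def crossbar_currents(g: Matrix, v: List[float]) -> List[float]:
    """Column currents of an ideal crossbar (no wire resistance, no sneak paths).

    g[i][j] is the conductance at row i, column j; v[i] is the voltage on row i.
    Each device passes I = G * V (Ohm's law) and each column wire sums its devices'
    currents (Kirchhoff's current law), so all columns are computed in parallel.
    """
    return [sum(g[i][j] * v[i] for i in range(len(g))) for j in range(len(g[0]))]


def to_conductance_pair(w: Matrix, g_max: float) -> Tuple[Matrix, Matrix]:
    """Encode signed weights in [-1, 1] as two non-negative arrays, G+ and G-."""
    g_pos = [[max(x, 0.0) * g_max for x in row] for row in w]
    g_neg = [[max(-x, 0.0) * g_max for x in row] for row in w]
    return g_pos, g_neg


def signed_mvm(w: Matrix, v: List[float], g_max: float = 1e-4) -> List[float]:
    """Compute sum_i w[i][j] * v[i] for every column j via a differential readout."""
    g_pos, g_neg = to_conductance_pair(w, g_max)
    i_pos, i_neg = crossbar_currents(g_pos, v), crossbar_currents(g_neg, v)
    return [(p - n) / g_max for p, n in zip(i_pos, i_neg)]


print(signed_mvm([[1.0, -0.5], [0.25, 0.0]], [0.2, 0.4]))
```

The final call returns values close to [0.3, -0.1]: the ordinary weighted sums, obtained from a single parallel read of both arrays rather than from weights shuttled to a separate processor.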

02 Memristor-based neuromorphic systems

Memristors are emerging as key components in energy-efficient neuromorphic computing systems due to their ability to simultaneously store and process information. These devices can emulate synaptic behavior with low power consumption, enabling efficient implementation of neural networks. Memristor-based systems offer advantages in terms of reduced power consumption, increased processing density, and improved parallel computing capabilities compared to traditional computing architectures.

03 Spike-based processing techniques

Spike-based processing techniques mimic the brain's communication method by transmitting information through discrete events rather than continuous signals. This approach significantly reduces energy consumption by activating computational resources only when necessary. These techniques include various spike encoding schemes, temporal coding methods, and event-driven processing algorithms that optimize information transfer while minimizing power usage in neuromorphic systems.

04 Optimization algorithms for neuromorphic efficiency

Specialized algorithms designed for neuromorphic computing can substantially improve computational efficiency. These include sparse coding techniques, pruning methods that reduce unnecessary connections, and quantization approaches that minimize bit precision requirements. Such algorithms enable neuromorphic systems to achieve higher performance with lower energy consumption by optimizing network topology and computational resource allocation.

05 3D integration and packaging for neuromorphic systems

Three-dimensional integration and advanced packaging technologies enable more efficient neuromorphic computing by reducing interconnect distances and improving thermal management. These approaches stack multiple layers of computing elements, memory, and sensors to create compact, high-density neuromorphic systems. The resulting architectures minimize signal propagation delays and energy losses while maximizing computational density, leading to significant improvements in overall system efficiency.
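To first order, the benefit of shorter interconnects follows from the standard switching-energy estimate for a wire. The symbols here are introduced for illustration: activity factor alpha, capacitance per unit length c_w, wire length L, and supply voltage V_DD.

```latex
E_{\text{wire}} \approx \alpha\, C_{\text{wire}}\, V_{DD}^{2} = \alpha\, c_w\, L\, V_{DD}^{2},
\qquad
\frac{E_{\text{3D}}}{E_{\text{2D}}} \approx \frac{L_{\text{3D}}}{L_{\text{2D}}}
\quad \text{(same } c_w \text{ and } V_{DD}\text{)}
```

Holding c_w and V_DD fixed, wire energy scales with length, so replacing long lateral routes with short vertical connections reduces it roughly in proportion. This is a simplification: through-silicon vias have their own capacitance and do not share c_w with on-chip wires.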

Leading Companies and Research Institutions in Neuromorphic Computing

Neuromorphic computing is currently in a transitional phase from research to early commercialization. Market estimates vary; some projections see the market growing from its current modest size to $8-10 billion by 2030. The technology is approaching maturity in specific applications but remains experimental for broader deployment. Key players driving innovation include IBM with its TrueNorth and subsequent architectures, Intel with Loihi chips, Samsung and Huawei developing specialized hardware, and academic institutions like Tsinghua University and KAIST advancing theoretical frameworks. Emerging companies like Syntiant and SynSense are focusing on edge applications. Technical efficiency gains come primarily from spike-based processing, memristive devices, and neuromorphic learning algorithms that significantly reduce power consumption compared to traditional computing architectures.

International Business Machines Corp.

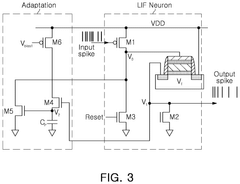

Technical Solution: IBM has pioneered neuromorphic computing through TrueNorth and subsequent brain-inspired chips. Their technology implements a non-von Neumann architecture with co-located memory and processing elements that mimic neural networks. IBM's neuromorphic chips feature massively parallel, event-driven processing with very low power consumption (typically about 70 mW for TrueNorth, a chip with 1 million neurons and 256 million synapses). Developed under DARPA's SyNAPSE program, TrueNorth is built from digital neuromorphic cores, each with 256 integrate-and-fire neurons and 64K synapses, arranged in a scalable 2D mesh network. The architecture time-multiplexes neuron computation within each core to maximize hardware utilization while operating at biological timescales. IBM has also developed specialized programming frameworks and algorithms optimized for its neuromorphic hardware, enabling efficient implementation of spiking neural networks for pattern recognition, anomaly detection, and other cognitive tasks.
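The per-chip figures above are consistent with the per-core ones. The sketch reads "1 million neurons" as exactly 2^20 and "64K synapses" as 256 × 256, both assumptions. From those, the core count, synapse total, and average power per neuron at the quoted 70 mW follow directly:

```python
NEURONS_PER_CORE = 256
SYNAPSES_PER_CORE = 256 * 256      # 64K = 65,536 synapses per core (assumed 256 x 256)
TOTAL_NEURONS = 2 ** 20            # "1 million" read as 1,048,576 (assumption)
CHIP_POWER_W = 0.070               # the ~70 mW figure quoted above

cores = TOTAL_NEURONS // NEURONS_PER_CORE
total_synapses = cores * SYNAPSES_PER_CORE
nanowatts_per_neuron = CHIP_POWER_W / TOTAL_NEURONS * 1e9

print(cores)                           # 4096
print(total_synapses)                  # 268435456, i.e. 2**28
print(round(nanowatts_per_neuron, 1))  # 66.8
```

The "256 million" synapse figure is therefore 2^28 in binary units (about 268 million in decimal), and the chip budgets on the order of tens of nanowatts per neuron.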

Strengths: Very low power density (about 20 mW/cm² for TrueNorth); highly scalable architecture; mature programming ecosystem. Weaknesses: Digital implementation limits biological fidelity compared to analog approaches; requires specialized programming paradigms different from conventional deep learning frameworks.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed neuromorphic computing solutions focused on memory-centric architectures. Their approach integrates processing-in-memory (PIM) technology with resistive RAM (RRAM) and magnetoresistive RAM (MRAM) to create efficient neuromorphic systems. Samsung's neuromorphic chips use analog computing principles, in which the memory cells themselves perform computational operations, dramatically reducing the energy cost of data movement. Their architecture implements spike-timing-dependent plasticity (STDP) learning mechanisms directly in hardware using phase-change memory (PCM) arrays. Samsung has reportedly demonstrated neuromorphic systems that achieve up to 1000x better energy efficiency than conventional von Neumann architectures for specific AI workloads. Their recent developments include 3D-stacked memory arrays with integrated neuromorphic processing elements, enabling highly parallel computation with minimal data movement between processing and storage.
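For reference, the textbook pair-based exponential form of STDP is sketched below. The amplitudes, time constants, and weight bounds are illustrative assumptions, not Samsung or device parameters. Hardware implementations often approximate this curve through pulse shaping rather than computing it explicitly:

```python
import math


def stdp_delta_w(dt_ms: float, a_plus: float = 0.01, a_minus: float = 0.012,
                 tau_plus_ms: float = 20.0, tau_minus_ms: float = 20.0) -> float:
    """Pair-based STDP weight change for dt = t_post - t_pre, in milliseconds.

    Pre-before-post (dt > 0) potentiates; post-before-pre (dt < 0) depresses;
    the magnitude decays exponentially with the spike-time gap.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_plus_ms)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_minus_ms)
    return 0.0


def apply_stdp(w: float, dt_ms: float, w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Update a weight and clip it to the bounded range a physical synapse can hold."""
    return min(w_max, max(w_min, w + stdp_delta_w(dt_ms)))


print(apply_stdp(0.5, 5.0), apply_stdp(0.5, -5.0))
```

The clipping step reflects the fact that a physical device can only hold conductances within a finite range.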

Strengths: Expertise in memory technologies provides advantage in memory-centric neuromorphic designs; vertical integration capabilities from chip fabrication to system integration. Weaknesses: Analog computing approaches face challenges with precision and reproducibility; technology still in research phase for many applications.

Key Patents and Breakthroughs in Neural Processing

On-chip training neuromorphic architecture

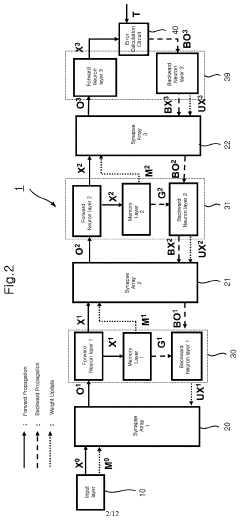

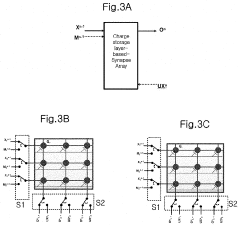

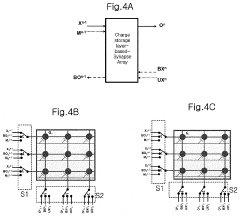

Patent (Active): US20210232900A1

Innovation

- A neuromorphic architecture using synapse arrays built from gated Schottky diodes or nonvolatile memory devices, which perform all phases of neural network operation (forward propagation, backward propagation, and weight update) with compact, low-power circuits, minimizing memory and area usage.
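The three phases listed in this patent summary (forward propagation, backward propagation, and weight update) map naturally onto a synapse array. The sketch below is a generic software model of a single layer, not the patented gated-Schottky-diode circuit. It assumes the array can be read in both directions, so the same stored weights W serve the forward pass y = Wx and the backward pass W^T δ, and that the update is the outer product of the error and the input:

```python
from typing import List

Matrix = List[List[float]]


def forward(w: Matrix, x: List[float]) -> List[float]:
    """Forward read: inputs drive columns, row outputs give y = W x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def backward(w: Matrix, delta_y: List[float]) -> List[float]:
    """Backward read of the same array: errors drive rows, columns give W^T delta_y."""
    return [sum(w[i][j] * delta_y[i] for i in range(len(w))) for j in range(len(w[0]))]


def update(w: Matrix, x: List[float], delta_y: List[float], lr: float) -> None:
    """In-place outer-product update W <- W - lr * delta_y x^T (one step of SGD)."""
    for i, d in enumerate(delta_y):
        for j, xj in enumerate(x):
            w[i][j] -= lr * d * xj


# One training step for a single output on a squared-error loss.
w = [[0.0, 0.0]]
x, target = [1.0, 2.0], [1.0]
delta_y = [y - t for y, t in zip(forward(w, x), target)]  # dLoss/dy for 0.5 * (y - t)^2
grad_x = backward(w, delta_y)                              # error passed to a previous layer
update(w, x, delta_y, lr=0.1)
print(w, forward(w, x))
```

After one step the output moves from 0 toward the target of 1. Doing all three phases in the array avoids copying weights to a separate training processor.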

Neuromorphic computing device using spiking neural network and operating method thereof

Patent (Pending): US20250292072A1

Innovation

- A neuromorphic computing device utilizing ferroelectric transistors and synapse arrays with excitatory and inhibitory synapses, enabling 3D stacking and flexible neuron settings through adjustable firing rates, achieved by controlling power supply voltages and gate voltages.

Energy Consumption Optimization Strategies

Energy efficiency represents the cornerstone of neuromorphic computing advancement, with optimization strategies focusing on minimizing power consumption while maximizing computational capabilities. Current neuromorphic systems demonstrate significant energy advantages over traditional von Neumann architectures, yet substantial room for improvement remains through targeted technical interventions.

Material selection plays a crucial role in energy optimization, with emerging memory technologies like phase-change memory (PCM) and resistive random-access memory (RRAM) offering attractive energy profiles compared with conventional CMOS memories such as SRAM. Because they are non-volatile, these devices can implement synaptic functions and hold weights without standby power, reducing the static power leakage that is a persistent challenge in traditional computing paradigms.

Circuit-level innovations constitute another vital optimization avenue. In subthreshold operation, transistors run with a supply voltage below their threshold voltage. Because dynamic power scales with the square of the supply voltage, this dramatically reduces power consumption at the cost of computational speed, a trade-off often acceptable in neuromorphic applications where biological-scale timing is sufficient. Additionally, asynchronous circuit designs eliminate the clock distribution network, which consumes a substantial share of power in synchronous systems.
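The voltage dependence can be made concrete with the first-order CMOS switching model, E = αCV² per cycle and P = αCV²f. The capacitance and supply voltages below are illustrative assumptions; 0.3 V stands in for a near- or subthreshold supply, which in practice depends on the process:

```python
def switching_energy_j(c_farads: float, v_volts: float, activity: float = 1.0) -> float:
    """First-order CMOS switching energy per cycle: E = activity * C * V^2."""
    return activity * c_farads * v_volts ** 2


def dynamic_power_w(c_farads: float, v_volts: float, f_hz: float, activity: float = 1.0) -> float:
    """Dynamic power P = activity * C * V^2 * f. Leakage is deliberately ignored."""
    return switching_energy_j(c_farads, v_volts, activity) * f_hz


# Illustrative values only: 1 pF of switched capacitance, nominal vs. reduced supply.
nominal = switching_energy_j(1e-12, 0.9)
low_v = switching_energy_j(1e-12, 0.3)
print(nominal / low_v)
```

Cutting the supply from 0.9 V to 0.3 V lowers switching energy per operation ninefold in this model. Because subthreshold circuits run slowly, leakage energy per operation grows, so in practice the energy optimum often sits near the threshold rather than far below it.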

Architectural optimizations leverage the inherent sparsity of neural activity, implementing event-driven computation where processing occurs only when necessary. Spike-based communication protocols further reduce energy requirements by transmitting binary events rather than continuous values, mimicking the energy efficiency of biological neural systems where information is encoded in discrete action potentials.
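The payoff of event-driven computation can be counted directly. The sketch below compares synaptic operations for a clocked dense layer with an event-driven one. It assumes every spike fans out to all targets and ignores the overhead of routing and queuing events; the activity pattern is hypothetical:

```python
from typing import List


def dense_synaptic_ops(n_pre: int, n_post: int, timesteps: int) -> int:
    """A clocked dense layer touches every synapse on every time step."""
    return n_pre * n_post * timesteps


def event_driven_synaptic_ops(spikes_per_step: List[List[int]], n_post: int) -> int:
    """Work is done only when a presynaptic neuron fires.

    spikes_per_step[t] lists the presynaptic indices that spiked at step t; each
    spike is assumed to fan out to all n_post targets.
    """
    return sum(len(spikes) * n_post for spikes in spikes_per_step)


# Hypothetical activity: 3 presynaptic neurons, 10 targets, 3 steps, 3 spikes in total.
activity = [[0, 1], [], [2]]
print(event_driven_synaptic_ops(activity, 10), dense_synaptic_ops(3, 10, 3))  # 30 90
```

Under the full fan-out assumption, the ratio of the two counts equals the average firing probability per neuron per step. At 2% activity, an event-driven design performs about 2% of the dense synaptic operations before event-handling overhead.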

Memory-compute integration is arguably the most transformative energy optimization strategy. By physically co-locating memory and processing elements, neuromorphic designs greatly reduce the energy-intensive data movement that dominates power consumption in conventional architectures. This approach directly addresses the "memory wall" problem through in-memory computing, where operations occur directly within memory arrays.

Advanced power management techniques complement these hardware strategies, including dynamic voltage and frequency scaling tailored to neuromorphic workloads, selective activation of neural network regions based on computational demands, and sophisticated power gating to minimize leakage current in inactive components. These techniques adapt energy consumption to computational requirements in real-time.

Quantization and precision reduction strategies further enhance energy efficiency by minimizing the bit-width requirements for neural operations. Research demonstrates that many neuromorphic applications maintain functional accuracy with significantly reduced precision, allowing for substantial energy savings through simplified computational elements and reduced memory requirements.
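A minimal sketch of precision reduction follows, using uniform symmetric quantization with one shared scale per weight vector. This is a common scheme chosen here for illustration; the text does not specify one, and the weights are hypothetical:

```python
from typing import List, Tuple


def quantize_symmetric(w: List[float], bits: int) -> Tuple[List[int], float]:
    """Uniform symmetric quantization with one shared scale.

    Weights map to integers in [-(2**(bits-1) - 1), 2**(bits-1) - 1]; the
    rounding error for each weight is at most scale / 2.
    """
    if bits < 2:
        raise ValueError("bits must be at least 2")
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(x) for x in w)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(x / scale))) for x in w]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [qi * scale for qi in q]


weights = [0.5, -1.0, 0.26, 0.0, 0.73]
q, scale = quantize_symmetric(weights, bits=4)
error = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale)))
print(q, scale, error)
```

Storing these weights in 4 bits instead of 32-bit floats cuts weight memory roughly eightfold, at the cost of a worst-case rounding error of half a quantization step.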

Material selection plays a crucial role in energy optimization, with emerging materials like phase-change memory (PCM) and resistive random-access memory (RRAM) offering superior energy profiles compared to conventional CMOS technologies. These materials enable efficient implementation of synaptic functions while reducing static power leakage, a persistent challenge in traditional computing paradigms.

Circuit-level innovations constitute another vital optimization avenue. Subthreshold operation of transistors, where devices operate below their threshold voltage, dramatically reduces dynamic power consumption at the cost of computational speed—a trade-off often acceptable in neuromorphic applications where biological-scale timing is sufficient. Additionally, asynchronous circuit designs eliminate the energy overhead of clock distribution networks that plague synchronous systems.

Architectural optimizations leverage the inherent sparsity of neural activity, implementing event-driven computation where processing occurs only when necessary. Spike-based communication protocols further reduce energy requirements by transmitting binary events rather than continuous values, mimicking the energy efficiency of biological neural systems where information is encoded in discrete action potentials.

Memory-compute integration represents perhaps the most transformative energy optimization strategy. By physically collocating memory and processing elements, neuromorphic designs eliminate the energy-intensive data movement that dominates power consumption in conventional architectures. This approach directly addresses the "memory wall" problem through in-memory computing paradigms where computational operations occur directly within memory arrays.

Advanced power management techniques complement these hardware strategies, including dynamic voltage and frequency scaling tailored to neuromorphic workloads, selective activation of neural network regions based on computational demands, and sophisticated power gating to minimize leakage current in inactive components. These techniques adapt energy consumption to computational requirements in real-time.
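Dynamic voltage and frequency scaling can be sketched as choosing, for each interval, the lowest-voltage operating point that still finishes the expected work before a deadline. Everything here is a hypothetical assumption for illustration: the three-entry voltage/frequency table, the `CYCLES_PER_SPIKE` estimate that ties workload to spike activity, and the function names. Real chips define their own operating points and control interfaces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    name: str
    voltage_v: float
    freq_hz: float


# Hypothetical voltage/frequency table; real hardware publishes its own.
OPERATING_POINTS = [
    OperatingPoint("low", 0.6, 50e6),
    OperatingPoint("mid", 0.8, 200e6),
    OperatingPoint("high", 1.0, 400e6),
]

# Hypothetical workload model: processing cost per input spike, in cycles.
CYCLES_PER_SPIKE = 50


def select_operating_point(points, cycles_needed, deadline_s):
    """Pick the lowest-voltage point that still finishes before the deadline.

    Falls back to the fastest point (best effort) if none meets the deadline.
    """
    feasible = [p for p in points if cycles_needed / p.freq_hz <= deadline_s]
    if not feasible:
        return max(points, key=lambda p: p.freq_hz)
    return min(feasible, key=lambda p: p.voltage_v)


for spikes in (1_000, 20_000, 500_000):
    point = select_operating_point(OPERATING_POINTS, spikes * CYCLES_PER_SPIKE, 10e-3)
    print(spikes, point.name)
```

Tying the cycle estimate to spike counts is what tailors the policy to neuromorphic workloads. When activity is low, the controller can drop to a lower voltage, where energy per cycle falls roughly with V².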

Hardware-Software Co-design Approaches

Hardware-software co-design represents a critical frontier in advancing neuromorphic computing efficiency. This integrated methodology bridges the traditional gap between hardware architecture and software development, producing solutions that maximize computational performance while minimizing energy consumption. The fundamental principle is to optimize hardware components and software algorithms jointly rather than treating them as separate development streams.

In neuromorphic systems, hardware-software co-design manifests through specialized programming models that directly leverage the unique capabilities of neuromorphic hardware. These models map neural network algorithms efficiently onto physical substrates, reducing computational overhead and energy requirements. For instance, event-driven programming paradigms designed for spiking neural networks can substantially reduce power consumption by activating computational resources only when spikes arrive.
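One way an event-driven programming model avoids idle work is to update a neuron only when an input spike is delivered. It applies the leak that accumulated since the previous event in closed form instead of stepping the neuron on every clock tick. The sketch below shows this for a leaky integrate-and-fire (LIF) neuron. It is a generic modeling technique, not the API of any specific neuromorphic framework. The class name, the 20 ms time constant, and the resting potential of 0 are illustrative assumptions.

```python
import math


class EventDrivenLIF:
    """Leaky integrate-and-fire neuron updated only when an input spike arrives.

    Between events the membrane potential decays toward a resting value of 0,
    so the decay is applied exactly at the next event instead of being
    simulated on every timestep.
    """

    def __init__(self, tau_ms=20.0, threshold=1.0):
        self.tau_ms = tau_ms
        self.threshold = threshold
        self.v = 0.0
        self.last_t_ms = 0.0

    def on_spike(self, t_ms, weight):
        """Integrate one weighted input spike at time t_ms; return True on firing."""
        dt = t_ms - self.last_t_ms
        if dt < 0:
            raise ValueError("events must be delivered in time order")
        self.v *= math.exp(-dt / self.tau_ms)   # exact leak over the silent interval
        self.last_t_ms = t_ms
        self.v += weight
        if self.v >= self.threshold:
            self.v = 0.0                        # reset after an output spike
            return True
        return False


neuron = EventDrivenLIF()
inputs = [(1.0, 0.6), (2.0, 0.6), (100.0, 0.6)]   # (time in ms, synaptic weight)
print([neuron.on_spike(t, w) for t, w in inputs])  # [False, True, False]
```

The neuron performs three updates across a 100 ms span. A clock-driven simulation with a 1 ms step would perform about a hundred.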

Memory-centric architectures represent another significant co-design approach. By restructuring data flow to minimize the energy-intensive movement of data between processing and memory units, these designs address the von Neumann bottleneck that limits traditional computing systems. Neuromorphic processors built this way have been reported to improve energy efficiency by orders of magnitude over conventional processors on suitable neural network workloads.

Compiler optimization techniques tailored to neuromorphic hardware constitute another vital co-design element. These specialized compilers transform high-level neural network descriptions into optimized instructions and hardware configurations, such as neuron-to-core placements and routing tables, that exploit the parallelism and architectural features of neuromorphic systems. Advanced compilers can automatically identify opportunities for spike-based computation, temporal coding, and other neuromorphic-specific optimizations.
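One task such a compiler must solve is placing neuron populations onto cores that each have a fixed budget of neurons and synapses. The sketch below treats this as bin packing with a first-fit-decreasing heuristic. It is a simplification, not the algorithm of any particular toolchain. Production mappers also weigh inter-core spike traffic and routing limits. The per-core capacities and population sizes in the test are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Core:
    max_neurons: int
    max_synapses: int
    populations: list = field(default_factory=list)
    neurons: int = 0
    synapses: int = 0

    def fits(self, n_neurons, n_synapses):
        """True if this core can still host the given population."""
        return (self.neurons + n_neurons <= self.max_neurons
                and self.synapses + n_synapses <= self.max_synapses)


def map_populations(populations, max_neurons, max_synapses):
    """Place (name, neurons, synapses) tuples onto as few cores as possible.

    First-fit decreasing: sort by neuron count, then put each population on
    the first existing core with room, opening a new core when none fits.
    """
    cores = []
    for name, n, s in sorted(populations, key=lambda p: p[1], reverse=True):
        if n > max_neurons or s > max_synapses:
            raise ValueError(f"population {name!r} exceeds one core; split it first")
        core = next((c for c in cores if c.fits(n, s)), None)
        if core is None:
            core = Core(max_neurons, max_synapses)
            cores.append(core)
        core.populations.append(name)
        core.neurons += n
        core.synapses += s
    return cores
```

Packing populations densely lets unused cores stay powered down. Placing strongly connected populations on the same core, a refinement this sketch omits, would also keep more spike traffic local.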

Runtime adaptation mechanisms represent a dynamic aspect of hardware-software co-design. These systems continuously monitor performance metrics and power consumption, dynamically adjusting hardware parameters and software execution strategies to maintain optimal efficiency under varying workloads and environmental conditions. Such adaptive systems can selectively activate or deactivate neuromorphic cores based on computational demands.
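A simple runtime policy for selectively deactivating cores is sketched below. A core is power-gated after several consecutive monitoring windows with little or no spike activity and is woken as soon as events arrive for it again. The class name, thresholds, and window counts are hypothetical. The hysteresis (waiting several idle windows) avoids toggling power on brief lulls. A real system would also need to buffer events that arrive while a gated core wakes up.

```python
class CoreGatingController:
    """Hypothetical runtime policy for power-gating idle neuromorphic cores.

    A core is gated off after `idle_windows` consecutive windows with at most
    `idle_threshold` events, and re-activated when its event count rises.
    """

    def __init__(self, n_cores, idle_threshold=0, idle_windows=3):
        self.idle_threshold = idle_threshold
        self.idle_windows = idle_windows
        self.idle_count = [0] * n_cores
        self.active = [True] * n_cores

    def update(self, events_per_core):
        """Feed one window of per-core event counts; return the active mask."""
        for i, events in enumerate(events_per_core):
            if events > self.idle_threshold:
                self.idle_count[i] = 0
                self.active[i] = True          # wake (or stay awake) on demand
            else:
                self.idle_count[i] += 1
                if self.idle_count[i] >= self.idle_windows:
                    self.active[i] = False     # gate off to cut leakage
        return list(self.active)


ctrl = CoreGatingController(n_cores=2, idle_threshold=0, idle_windows=2)
for window in ([5, 0], [3, 0], [0, 4]):
    print(ctrl.update(window))
```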

Cross-layer optimization frameworks provide comprehensive tools for neuromorphic system designers to evaluate trade-offs across the entire hardware-software stack. These frameworks enable simultaneous consideration of algorithm accuracy, hardware constraints, energy budgets, and timing requirements. By providing a holistic view of system performance, they facilitate informed design decisions that maximize overall efficiency rather than optimizing individual components in isolation.
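The core of such a trade-off analysis can be sketched as Pareto filtering over candidate design points. Dominated configurations, which are no better on any metric and worse on at least one, are discarded. The most accurate remaining point that fits the energy and latency budgets is then selected. The metric names, the `DesignPoint` type, and the sample numbers are hypothetical placeholders, not results for any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignPoint:
    name: str
    accuracy: float    # higher is better
    energy_uj: float   # energy per inference in microjoules, lower is better
    latency_ms: float  # lower is better


def dominates(a, b):
    """True if a is at least as good as b on every metric and better on one."""
    no_worse = (a.accuracy >= b.accuracy and a.energy_uj <= b.energy_uj
                and a.latency_ms <= b.latency_ms)
    better = (a.accuracy > b.accuracy or a.energy_uj < b.energy_uj
              or a.latency_ms < b.latency_ms)
    return no_worse and better


def pareto_front(points):
    """Keep only design points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points if q is not p)]


def best_under_budget(points, max_energy_uj, max_latency_ms):
    """Most accurate Pareto-optimal point within the budgets, or None."""
    feasible = [p for p in pareto_front(points)
                if p.energy_uj <= max_energy_uj and p.latency_ms <= max_latency_ms]
    return max(feasible, key=lambda p: p.accuracy, default=None)


# Made-up candidates, e.g. different precisions or core counts.
candidates = [
    DesignPoint("A", 0.95, 100.0, 5.0),
    DesignPoint("B", 0.93, 40.0, 6.0),
    DesignPoint("C", 0.92, 50.0, 7.0),   # dominated by B
    DesignPoint("D", 0.90, 10.0, 20.0),
]
print([p.name for p in pareto_front(candidates)])
print(best_under_budget(candidates, max_energy_uj=60.0, max_latency_ms=10.0))
```

Filtering to the Pareto front before applying budgets keeps the whole-stack view explicit. A designer can see every non-dominated option rather than a single metric's optimum.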
