AI vs. Traditional Methods in Sound Scene Analysis: Which Performs Better?

JUL 16, 2025

Sound scene analysis, the process of identifying and interpreting the sound sources in an environment, has gained significant attention with the rise of smart technologies. Traditionally, the task was approached with methods grounded in signal processing and pattern recognition. With advances in artificial intelligence (AI), newer approaches promise improvements in accuracy and efficiency. This post compares AI-based and traditional methods in sound scene analysis, weighing their strengths and weaknesses to determine which performs better.

Traditional Methods in Sound Scene Analysis

Traditional sound scene analysis relied heavily on techniques such as the Fourier transform and Mel-Frequency Cepstral Coefficients (MFCCs) for feature extraction, paired with statistical models such as Hidden Markov Models (HMMs) for classification. These methods extract hand-designed features from the audio signal and then use a statistical model to classify and interpret them.
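To make the feature-extraction step concrete, here is a minimal sketch using only the Python standard library. It computes a magnitude spectrum with a naive discrete Fourier transform and derives one simple handcrafted feature, the spectral centroid. The sample rate, frame length, test tone, and function names are assumptions chosen for illustration; a real pipeline would use an FFT library and typically richer features such as MFCCs.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Naive O(N^2) DFT; returns magnitudes for bins 0..N/2."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(
            frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        spectrum.append(abs(acc))
    return spectrum


def spectral_centroid(spectrum, sample_rate, frame_len):
    """Magnitude-weighted mean frequency in Hz (a classic handcrafted feature)."""
    total = sum(spectrum)
    if total == 0:
        return 0.0
    bin_hz = sample_rate / frame_len
    return sum(k * bin_hz * m for k, m in enumerate(spectrum)) / total


# Hypothetical input: one frame of a pure 1000 Hz tone sampled at 8 kHz.
SAMPLE_RATE = 8000
FRAME_LEN = 256
tone = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(FRAME_LEN)]

spectrum = magnitude_spectrum(tone)
peak_bin = max(range(len(spectrum)), key=spectrum.__getitem__)
peak_hz = peak_bin * SAMPLE_RATE / FRAME_LEN
centroid_hz = spectral_centroid(spectrum, SAMPLE_RATE, FRAME_LEN)
```

For this clean tone, both `peak_hz` and `centroid_hz` land at about 1000 Hz. In a traditional system, a vector of such features per frame would then be passed to a statistical classifier.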

One advantage of traditional methods is their well-established theoretical foundation. Researchers have spent decades refining these techniques, resulting in robust algorithms that perform reliably under specific conditions. Moreover, they typically require less computational power than AI-based methods, making them suitable for environments with limited processing capabilities.

However, traditional methods have limitations. They often struggle with complex sound environments where overlapping sounds or variable background noise occur. Their reliance on handcrafted features means they may not capture the full richness of audio data, potentially leading to less accurate results in dynamic and unpredictable environments.

AI Advancements in Sound Scene Analysis

AI-based methods, particularly those utilizing deep learning, have revolutionized sound scene analysis. These systems employ neural networks that learn to recognize patterns in audio data without the need for manual feature extraction. Techniques such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have proven particularly effective in this domain.
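As a rough illustration of what a convolutional layer computes, the sketch below runs a single 2-D filter over a toy "spectrogram", applies a ReLU, and pools the result. Everything here is an assumption for illustration: the toy input, the function names, and especially the kernel weights, which are set by hand so the output is easy to follow. In a trained CNN those weights are learned from data, which is what replaces manual feature design.

```python
def conv2d_valid(image, kernel):
    """2-D cross-correlation, stride 1, no padding (what CNN layers compute)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(kh)
                for b in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise max(0, x) non-linearity."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def global_max_pool(feature_map):
    """Collapse a feature map to its strongest response."""
    return max(max(row) for row in feature_map)


# Toy spectrogram: rows are frequency bands, columns are time frames.
# A sound starts at frame 3 in the two middle bands.
spectrogram = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 0, 0, 0],
]

# Hand-set onset detector, for illustration only; a real CNN learns its kernels.
onset_kernel = [
    [-1.0, 1.0],
    [-1.0, 1.0],
]

activation = relu(conv2d_valid(spectrogram, onset_kernel))
score = global_max_pool(activation)
```

The filter responds most strongly (`score` is 2.0) where energy appears in both middle bands at once, that is, at the onset. A real network stacks many such filters and layers and adjusts their weights during training.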

AI models excel in handling complex and noisy sound environments. They can automatically learn and adapt to different sound scenes, making them highly versatile. Additionally, the continuous improvement of computational hardware, such as GPUs, has made it feasible to implement these data-intensive techniques in real-world applications.

However, AI approaches also come with challenges. They require large datasets to train effectively, which can be a barrier in scenarios where labeled audio data is scarce. Furthermore, AI models often behave as black boxes: it is difficult to understand how they reach their conclusions, a significant disadvantage in applications where transparency is crucial.

Performance Comparison: AI vs. Traditional Methods

When comparing the performance of AI and traditional methods, several factors must be considered. In terms of accuracy, AI-based models generally outperform traditional methods, especially in complex and variable environments. The ability of AI to learn from data and improve over time gives it a significant edge in adaptability and precision.

However, traditional methods still hold an advantage in scenarios where computational resources are limited or where the sound environment is relatively stable and predictable. Their lower computational demands and established reliability make them an appealing choice for certain applications.
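A back-of-the-envelope calculation can give a feel for the gap in computational demand. The sketch below compares the approximate multiplication count of an FFT front end with the multiply-accumulate (MAC) count of two small convolutional layers. All sizes (100 frames, 1024-point FFT, 64 frequency bands, 32 and 64 channels, 3x3 kernels) are hypothetical values chosen for illustration, and the counts are order-of-magnitude estimates, not benchmarks: a complex multiplication is not the same unit as a MAC, and real cost depends on implementation and hardware.

```python
import math


def fft_complex_mults(n_fft):
    """Approximate complex multiplications for one radix-2 FFT: (N/2) * log2(N)."""
    return (n_fft // 2) * int(math.log2(n_fft))


def conv2d_macs(out_h, out_w, c_in, c_out, k_h, k_w):
    """Multiply-accumulates for one conv layer producing an out_h x out_w map."""
    return out_h * out_w * c_in * c_out * k_h * k_w


# Hypothetical clip: 100 frames, 1024-point FFT, 64 frequency bands.
N_FRAMES = 100
N_FFT = 1024
N_BANDS = 64

# Spectral front end of a traditional pipeline: one FFT per frame.
frontend_cost = N_FRAMES * fft_complex_mults(N_FFT)

# Two small conv layers (1 -> 32 -> 64 channels, 3x3 kernels, same-size output).
cnn_cost = (
    conv2d_macs(N_BANDS, N_FRAMES, 1, 32, 3, 3)
    + conv2d_macs(N_BANDS, N_FRAMES, 32, 64, 3, 3)
)

ratio = cnn_cost / frontend_cost
```

Under these assumptions the two conv layers need roughly 120 million MACs against about half a million complex multiplications for the FFTs, a ratio of around 234. A spectrogram-based CNN usually still needs the front end as well, so its cost comes on top.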

Furthermore, the choice between AI and traditional methods often depends on the specific requirements of the application. In situations where rapid deployment and cost-effectiveness are priorities, traditional methods might be more suitable. Conversely, for projects aiming for state-of-the-art accuracy and handling complexity, AI-based approaches are likely the better option.

Conclusion

In the debate between AI and traditional methods in sound scene analysis, there is no clear winner. Both approaches have their strengths and weaknesses, and the best choice depends on the specific context and requirements of the task at hand. As AI continues to evolve, it will likely become an increasingly dominant force in this field, pushing the boundaries of what is possible in sound scene analysis. However, traditional methods will remain relevant, providing reliable solutions where simplicity and efficiency are paramount. Ultimately, the most effective strategies may involve a hybrid approach, leveraging the strengths of both AI and traditional techniques to achieve optimal results.
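One way to picture such a hybrid is cheap handcrafted features feeding a small learned classifier. The sketch below is only an illustration under loud assumptions: the "recordings" are synthetic sine tones with noise, the two classes (low-pitched and high-pitched) are invented, and a logistic regression trained by gradient descent stands in for a neural network. All function names and parameters are hypothetical.

```python
import math
import random

SAMPLE_RATE = 8000
FRAME_LEN = 256


def make_frame(freq_hz, rng, noise=0.05):
    """Synthetic stand-in for a recorded frame: a sine tone plus uniform noise."""
    phase = rng.uniform(0.0, 2.0 * math.pi)
    return [
        math.sin(2.0 * math.pi * freq_hz * t / SAMPLE_RATE + phase)
        + rng.uniform(-noise, noise)
        for t in range(FRAME_LEN)
    ]


def handcrafted_features(frame):
    """Traditional part: zero-crossing rate and mean absolute sample difference."""
    pairs = list(zip(frame, frame[1:]))
    zcr = sum(1 for a, b in pairs if a * b < 0) / len(pairs)
    mean_abs_diff = sum(abs(b - a) for a, b in pairs) / len(pairs)
    return [zcr, mean_abs_diff]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(features, labels, lr=0.5, epochs=500):
    """Learned part: batch gradient descent on the logistic loss."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict(weights, bias, x):
    """Return 1 (high-pitched) if the linear score is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def make_dataset(n_per_class, rng):
    """Label 0: tones at 150-300 Hz. Label 1: tones at 1500-2500 Hz."""
    data = []
    for _ in range(n_per_class):
        data.append((handcrafted_features(make_frame(rng.uniform(150, 300), rng)), 0))
        data.append((handcrafted_features(make_frame(rng.uniform(1500, 2500), rng)), 1))
    return data


rng = random.Random(0)  # fixed seed so the run is repeatable
train = make_dataset(20, rng)
test = make_dataset(20, rng)

weights, bias = train_logistic([x for x, _ in train], [y for _, y in train])
accuracy = sum(predict(weights, bias, x) == y for x, y in test) / len(test)
```

The features cost almost nothing to compute, while the decision boundary is learned from data rather than tuned by hand. On this deliberately easy toy task the classes separate cleanly; real sound scenes would need richer features, a stronger model, or both.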

In the world of vibration damping, structural health monitoring, and acoustic noise suppression, staying ahead requires more than intuition—it demands constant awareness of material innovations, sensor architectures, and IP trends across mechanical, automotive, aerospace, and building acoustics.

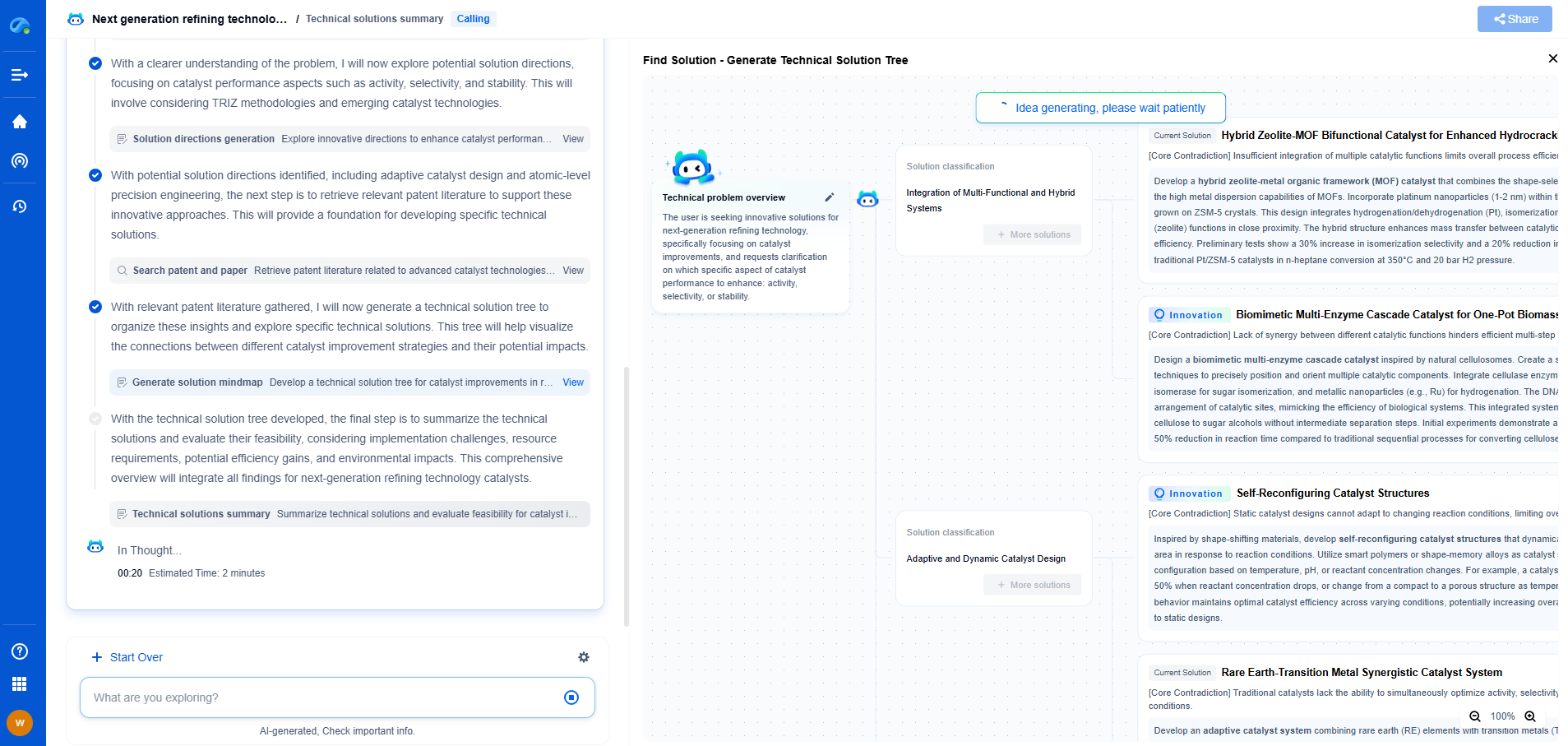

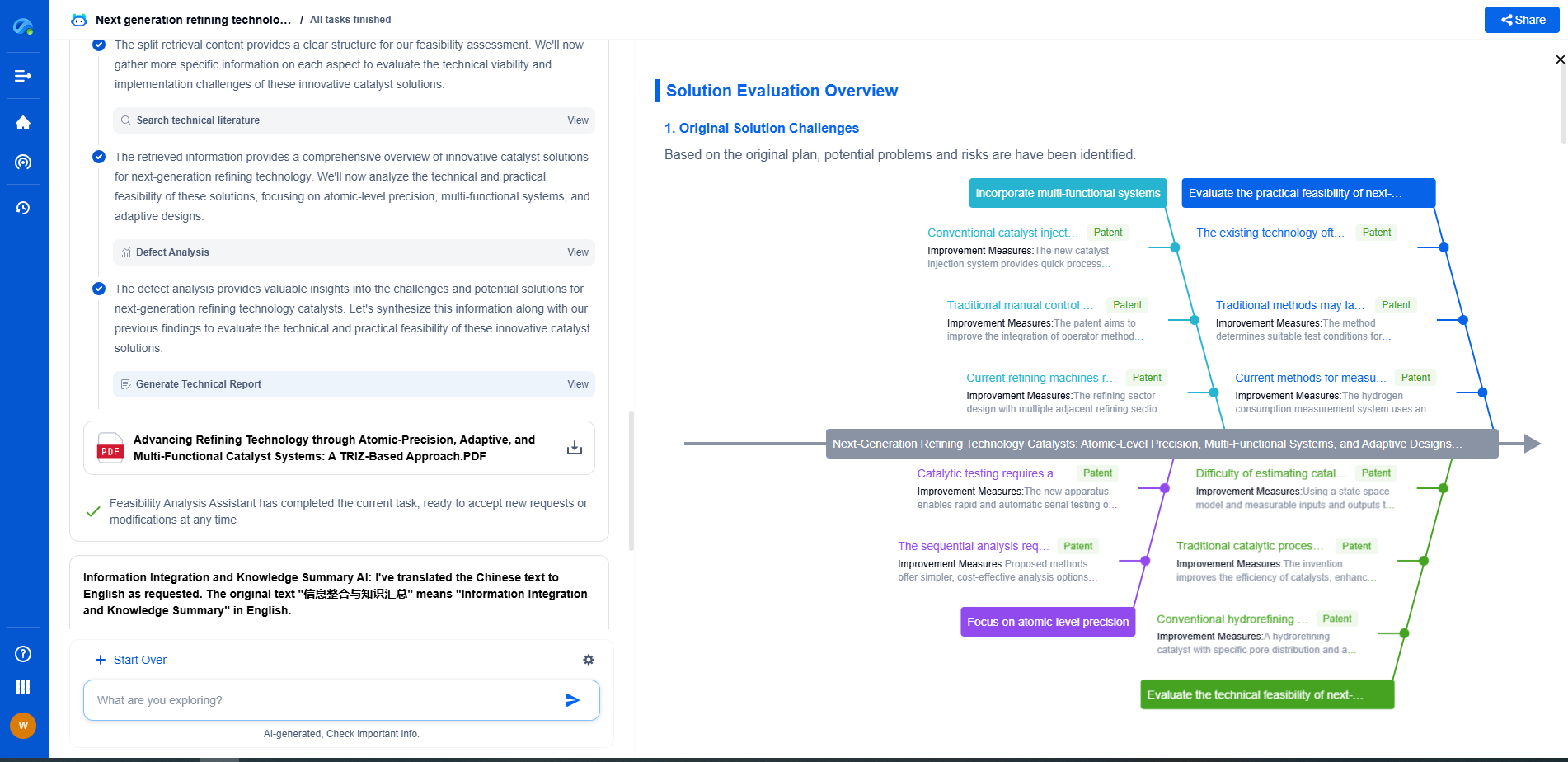

Patsnap Eureka, our intelligent AI assistant built for R&D professionals in high-tech sectors, empowers you with real-time expert-level analysis, technology roadmap exploration, and strategic mapping of core patents—all within a seamless, user-friendly interface.

⚙️ Bring Eureka into your vibration intelligence workflow—and reduce guesswork in your R&D pipeline. Start your free experience today.

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com