CCD vs CMOS: Which Image Sensor Technology Still Matters?

JUL 8, 2025

Image sensor technology is the backbone of modern photography, from consumer cameras and professional equipment to smartphones. Two primary types of image sensors dominate the field: Charge-Coupled Device (CCD) and Complementary Metal-Oxide-Semiconductor (CMOS). Each has its own advantages and disadvantages, and as the technology evolves, their relevance and application areas keep shifting. In this blog, we'll explore both CCD and CMOS sensors, examine how they differ, and evaluate which technology still holds its ground in today's imaging landscape.

Understanding CCD Technology

CCD sensors date back to the late 1960s and were the dominant technology for many years. Known for high-quality image reproduction, CCDs work by shifting each pixel's charge packet step by step across the chip, row by row into a serial register and then along that register, until it reaches an output amplifier, typically at one corner, where it is converted to a voltage. Because every pixel is read through the same amplifier, the response is highly uniform and noise is low, which made CCDs popular in applications where image quality is paramount, such as medical imaging and high-end video cameras.
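To make the readout order concrete, here is a minimal Python sketch of CCD-style readout. It assumes a small 2D array of charge in electrons, a serial register below the bottom row, a single output amplifier at the right-hand end of that register, and a fixed charge transfer efficiency (CTE) applied on every shift. The function name `ccd_readout` and the CTE values are hypothetical, and real devices involve multi-phase clocking, noise, and other effects this ignores.

```python
def ccd_readout(charge, cte=0.99999):
    """Return pixel values in the order a single CCD output would read them.

    `charge` is a list of rows (top row first), in electrons. Each transfer
    keeps a fraction `cte` of the packet; the remainder is treated as lost.
    """
    height = len(charge)
    width = len(charge[0])
    samples = []
    # The row nearest the serial register (the bottom row) is shifted in first.
    for r in range(height - 1, -1, -1):
        parallel_shifts = height - r  # vertical moves to reach the serial register
        # Along the serial register, the right-most packet reaches the amplifier first.
        for c in range(width - 1, -1, -1):
            serial_shifts = width - c  # horizontal moves to reach the output node
            transfers = parallel_shifts + serial_shifts
            samples.append(charge[r][c] * cte ** transfers)
    return samples


if __name__ == "__main__":
    frame = [[1000, 1000], [1000, 1000]]
    # An exaggerated CTE of 0.9 makes the transfer loss easy to see.
    print(ccd_readout(frame, cte=0.9))
```

With the exaggerated CTE of 0.9, the pixel nearest the amplifier is read first with about 810 e- left after two transfers, and the farthest pixel is read last with about 656 e- after four. Real CTE values are much closer to 1, but the sketch shows how every pixel funnels through one output in sequence.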

The main advantages of CCDs are superior image quality, low noise, and excellent light sensitivity. Their drawbacks include higher power consumption and cost. Moreover, a CCD typically needs additional off-chip electronics, such as clock drivers, timing generators, and analog-to-digital converters, which adds to the bulk and expense of the camera.

The Rise of CMOS Technology

CMOS sensors have gained prominence over the past couple of decades, rapidly evolving and overcoming many of their initial limitations. Unlike CCDs, CMOS sensors give each pixel its own amplifier and address pixels by row and column, so each pixel can be read individually instead of having its charge shifted across the chip. This enables faster readout and lower power consumption, and has made CMOS the dominant sensor type in consumer electronics, including smartphones and DSLRs.
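By contrast, a CMOS-style sensor can select a row and sample its pixels directly. The sketch below uses a hypothetical `cmos_read_window` function that reads only a rectangular region of interest, one selected row at a time; the `gain` factor stands in for each pixel's own amplifier. It illustrates addressable readout in general and is not a model of any real sensor's interface.

```python
def cmos_read_window(pixels, rows, cols, gain=1.0):
    """Read a rectangular window from an addressable (CMOS-style) pixel array.

    `pixels` is a list of rows; `rows` and `cols` are (start, stop) pairs with
    stop exclusive. Pixels outside the window are never touched.
    """
    window = []
    for r in range(*rows):  # row select: enable one row at a time
        # Each pixel converts its own charge (modelled as value * gain), and
        # the selected row's columns are sampled side by side.
        window.append([pixels[r][c] * gain for c in range(*cols)])
    return window


if __name__ == "__main__":
    grid = [[10 * r + c for c in range(4)] for r in range(4)]  # 4 x 4 test pattern
    print(cmos_read_window(grid, rows=(1, 3), cols=(2, 4)))
```

Reading this 2 x 2 window never touches the other 12 pixels, whereas a CCD has to shift charge through the array before any pixel reaches its output.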

The advantages of CMOS sensors are numerous. They are cheaper to produce, consume less power, and can integrate other circuitry, such as timing control and analog-to-digital conversion, on the same chip, allowing for more compact device designs. Furthermore, advances in CMOS technology have significantly improved image quality, narrowing the gap with CCDs.

Comparing Image Quality

When it comes to image quality, CCDs have traditionally held the upper hand due to lower noise and better sensitivity in low-light conditions. However, CMOS technology has made substantial strides, and modern CMOS sensors often match or exceed CCDs in many respects. With improvements such as backside illumination and lower read noise, high-end CMOS sensors now offer excellent dynamic range, low noise, and strong low-light performance, making them a compelling choice for most applications.
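One common way to reason about low-light image quality is a simplified per-pixel noise model in which photon shot noise, dark-current noise, and read noise add in quadrature: SNR = S / sqrt(S + D + R²), where S is the signal, D the dark charge, and R the RMS read noise, all in electrons. The Python sketch below assumes that model (it ignores fixed-pattern noise and other effects), and the read-noise figures in the example are hypothetical, not measurements of any CCD or CMOS device.

```python
import math


def snr(signal_e, read_noise_e, dark_e=0.0):
    """Per-pixel signal-to-noise ratio; all inputs are in electrons.

    Shot-noise variance equals the signal, dark-current shot noise equals the
    dark charge, and read noise contributes its square; the three add in
    quadrature.
    """
    noise = math.sqrt(signal_e + dark_e + read_noise_e ** 2)
    return signal_e / noise


if __name__ == "__main__":
    # Two hypothetical sensors that differ only in read noise.
    for signal in (100, 10_000):  # dim pixel vs bright pixel
        low = snr(signal, read_noise_e=2)
        high = snr(signal, read_noise_e=10)
        print(f"signal={signal:>6} e-: SNR {low:.1f} vs {high:.1f}")
```

At 100 e- the lower-read-noise sensor reaches an SNR of about 9.8 versus about 7.1, while at 10,000 e- both sit near 100 (about 100.0 vs 99.5). Under this model, read noise matters most in dim scenes, which is where sensor differences show up first.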

Power Consumption and Speed

Power consumption is one of the critical areas where CMOS outperforms CCD. A CCD must drive clock signals, often at several relatively high voltages, across the whole array to move charge, while a CMOS sensor typically runs from lower supply voltages. This efficiency is crucial for battery-operated devices like smartphones and portable cameras. CMOS sensors also offer faster readout, because many columns can be digitized in parallel instead of every pixel passing through a single output, allowing higher frame rates. This speed is vital for video capture and real-time image analysis.
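A back-of-the-envelope way to see the speed difference is to compare a single serial output, where every pixel passes through one amplifier, with column-parallel readout, where a whole row is converted at once. The Python sketch below assumes a hypothetical 4000 x 3000 pixel sensor, a 40 MHz pixel rate for the single-output case, and a 5 µs row time for the column-parallel case. These numbers are illustrative only; real CCDs may use several outputs to speed things up, and real devices add overheads this ignores.

```python
def serial_frame_time(width, height, pixel_rate_hz):
    """Frame readout time in seconds when every pixel passes through one output."""
    return (width * height) / pixel_rate_hz


def column_parallel_frame_time(height, row_time_s):
    """Frame readout time in seconds when all columns of a row convert together."""
    return height * row_time_s


if __name__ == "__main__":
    W, H = 4000, 3000  # hypothetical 12-megapixel sensor
    t_serial = serial_frame_time(W, H, pixel_rate_hz=40e6)
    t_parallel = column_parallel_frame_time(H, row_time_s=5e-6)
    print(f"single output:   {t_serial * 1e3:.0f} ms/frame, {1 / t_serial:.1f} fps max")
    print(f"column-parallel: {t_parallel * 1e3:.0f} ms/frame, {1 / t_parallel:.1f} fps max")
```

Under these assumptions the single-output path needs about 300 ms per frame (about 3.3 fps), while the column-parallel path needs about 15 ms (about 66.7 fps).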

Cost Considerations

Cost is another significant factor influencing the choice between CCD and CMOS. CMOS image sensors are built on processes adapted from standard CMOS logic manufacturing, making them cheap to produce in large volumes. This cost advantage has driven their widespread adoption in consumer electronics. CCDs, which require specialized fabrication processes, remain more expensive, limiting them to specialized applications where their specific benefits justify the added expense.

Future Trends and Applications

As technology advances, the gap between CCD and CMOS sensors is expected to narrow further. CMOS technology is likely to dominate due to its cost-effectiveness, versatility, and integration capabilities. However, CCDs will still matter in niche markets where their particular strengths, such as highly uniform pixel response, remain indispensable.

In fields like astronomy, scientific research, and certain industrial applications, the unique qualities of CCDs will ensure their continued relevance. Meanwhile, CMOS will remain the go-to choice for mainstream photography and videography, especially as ongoing innovation continues to improve its performance and capabilities.

Conclusion

In the ongoing debate of CCD versus CMOS, there is no one-size-fits-all answer. The choice largely depends on the specific requirements of the application, budget constraints, and the desired balance between image quality, power consumption, and speed. While CMOS technology is poised to lead the future of imaging due to its advantages in cost and efficiency, CCDs still hold an essential place in high-quality imaging applications. As both technologies evolve, understanding their strengths and limitations will be crucial in making informed decisions about which image sensor technology truly matters for your needs.

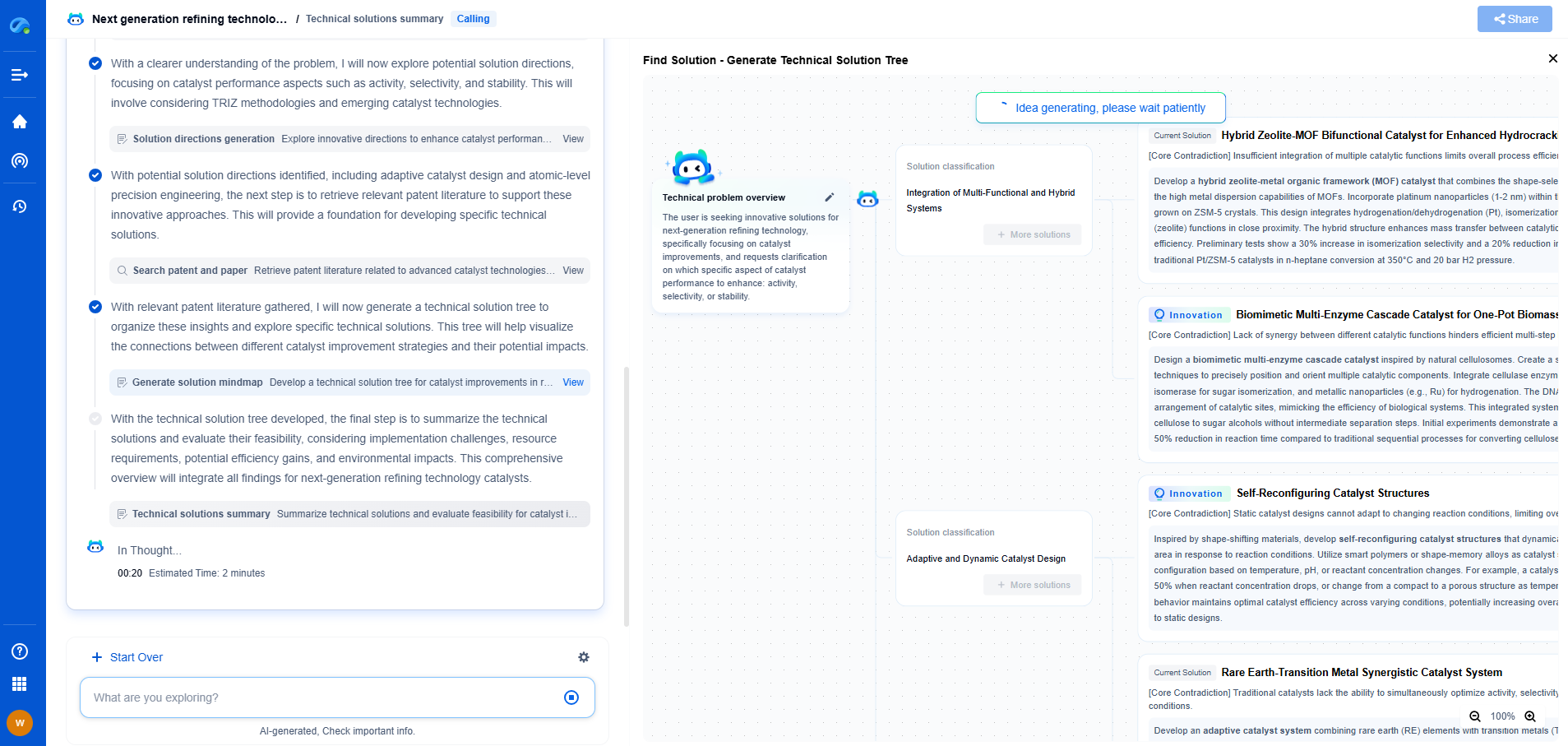

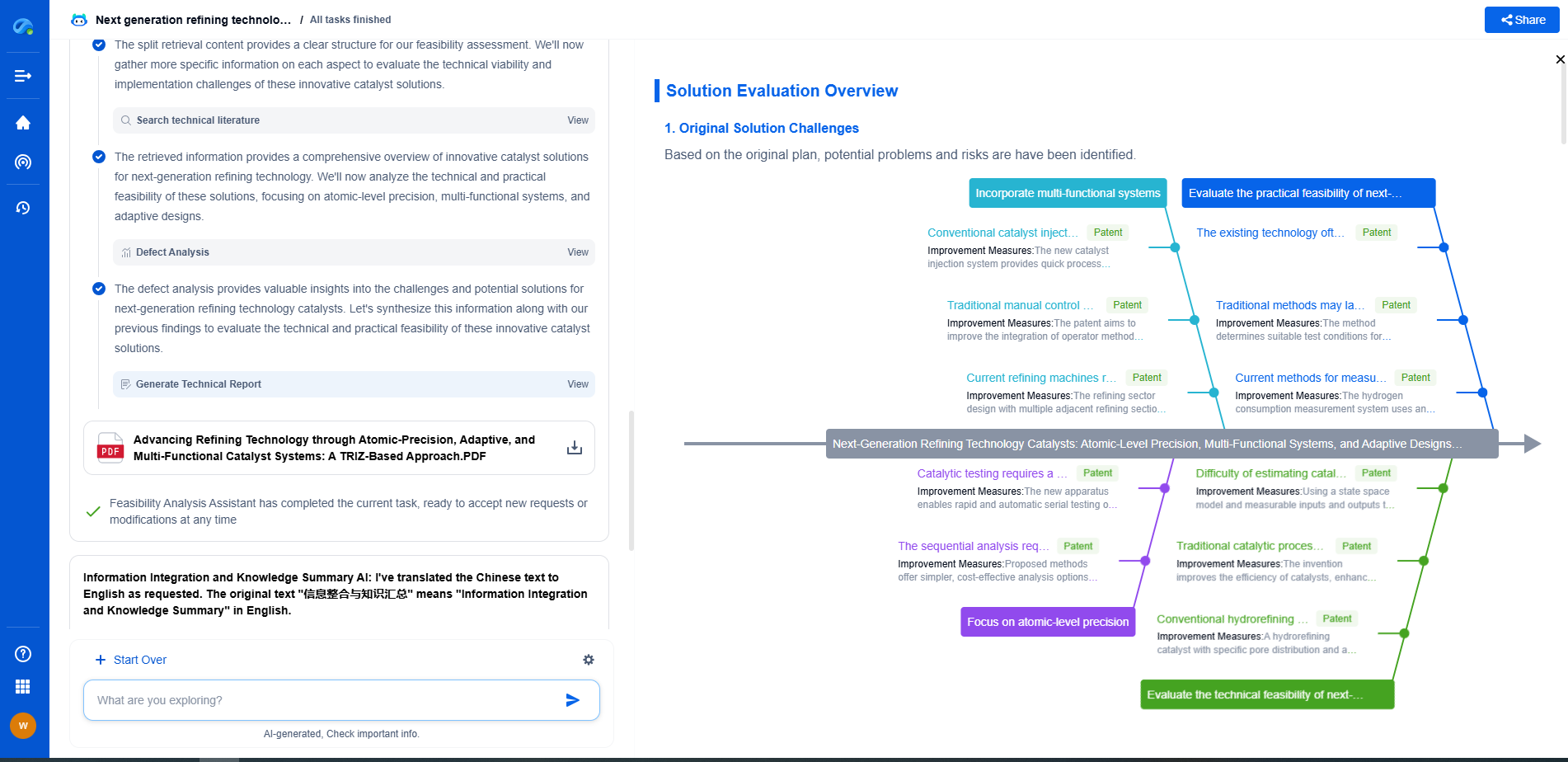


© 2025 PatSnap. All rights reserved.