Cloud vs. Edge AI Processing in Power Systems

JUN 26, 2025

The integration of artificial intelligence (AI) into power systems is changing how energy is generated, distributed, and consumed. By leveraging AI, utilities can predict demand more accurately, manage resources more efficiently, and maintain grid stability in real time. AI algorithms in power systems can, however, run in two different environments: the cloud or the edge. Each approach has its own advantages and limitations, making the choice between cloud and edge AI processing a critical decision for power system operators.

Understanding Cloud AI Processing

Cloud AI processing involves executing AI algorithms in centralized data centers. This approach benefits from the vast processing power, storage capacity, and scalability of cloud computing resources. One of the significant advantages of cloud AI is its ability to access and process large datasets from diverse sources across the power grid. This enables comprehensive analysis and insights, which can drive improved decision-making and predictive modeling.

Furthermore, cloud AI processing supports collaborative efforts by allowing multiple stakeholders, such as utility companies, researchers, and regulatory bodies, to share and access data and insights. This collaboration fosters innovation and enables the development of more sophisticated AI models that can tackle complex challenges in power systems.

However, cloud AI processing has two main drawbacks: latency and data privacy. Transmitting large volumes of data to and from the cloud introduces delays, which is particularly problematic when decisions must be made in real time. Additionally, sending sensitive data to the cloud can raise privacy and security concerns, making some operators hesitant to adopt this approach.
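To make the latency concern concrete, the following is a minimal back-of-the-envelope sketch in Python. It assumes the delay can be approximated as transfer time plus network round trip plus inference time; the function names and all numbers (payload size, link rate, deadline) are hypothetical and chosen only for illustration.

```python
def cloud_round_trip_ms(payload_bytes, uplink_mbps, network_rtt_ms, inference_ms):
    """Rough time from measurement to decision when inference runs in the cloud."""
    # 1 Mbit/s moves 1000 bits per millisecond, so divide bits by (Mbit/s * 1000).
    transfer_ms = (payload_bytes * 8) / (uplink_mbps * 1000)
    return transfer_ms + network_rtt_ms + inference_ms


def meets_deadline(total_ms, deadline_ms):
    """True if the estimated delay fits within the control deadline."""
    return total_ms <= deadline_ms


# Hypothetical case: 250 kB of waveform data over a 10 Mbit/s uplink,
# 40 ms network round trip, 5 ms inference, 100 ms control deadline.
total = cloud_round_trip_ms(250_000, uplink_mbps=10, network_rtt_ms=40, inference_ms=5)
print(total)                                   # 245.0
print(meets_deadline(total, deadline_ms=100))  # False
```

In this illustrative case the transfer time alone (200 ms) exceeds the deadline, so no amount of cloud compute would help. The estimate ignores queuing, retransmissions, and result download, so real delays may be higher.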

The Rise of Edge AI Processing

Edge AI processing, on the other hand, involves executing AI algorithms directly on devices located at the "edge" of the network, such as sensors, smart meters, or local controllers. This decentralized approach reduces latency, as data is processed closer to its source, enabling faster decision-making and response times. In power systems, where timely interventions are crucial to maintaining stability and reliability, this can be a significant advantage.
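As an illustration of what local processing can look like, here is a small Python sketch of an on-device check that flags abnormal readings without contacting a server. It uses a rolling z-score as a simple statistical stand-in for a trained model; the class name, window size, and threshold are assumptions made for this example, not values from any particular product.

```python
from collections import deque
from statistics import mean, pstdev


class EdgeAnomalyDetector:
    """Flags readings that deviate strongly from a recent window of samples."""

    def __init__(self, window=20, z_threshold=4.0):
        self.samples = deque(maxlen=window)
        self.z_threshold = z_threshold

    def update(self, value):
        """Return True if `value` looks anomalous relative to the window."""
        is_anomaly = False
        # Only judge once the window is full, so early readings are never flagged.
        if len(self.samples) == self.samples.maxlen:
            mu = mean(self.samples)
            sigma = pstdev(self.samples)
            # A perfectly flat window (sigma == 0) is skipped to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        # Keep anomalies out of the baseline so one fault does not mask the next.
        if not is_anomaly:
            self.samples.append(value)
        return is_anomaly


detector = EdgeAnomalyDetector()
for i in range(20):  # warm-up with hypothetical voltages around 230 V
    detector.update(229.5 if i % 2 == 0 else 230.5)
print(detector.update(220.0))  # True: a 10 V sag against a 0.5 V spread
print(detector.update(230.2))  # False: within normal variation
```

Each call touches only a short in-memory window, which is why a check of this kind can run on a constrained device and respond within the same sampling interval.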

Edge AI also addresses data privacy concerns by keeping sensitive information local, reducing the risk of exposure during data transmission. Furthermore, by processing data locally, edge AI can operate even in environments with limited or intermittent internet connectivity, ensuring continuous operation and resilience in remote or rural areas.

Despite its advantages, edge AI processing faces challenges, particularly in terms of computational power and storage. Edge devices often have limited resources compared to cloud data centers, which can constrain the complexity of AI models that can be deployed locally. Moreover, maintaining and updating AI models on numerous distributed devices can be logistically challenging and resource-intensive.
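The resource constraint can be estimated before deployment. The sketch below, in Python, checks whether a model's weights fit in a device's memory budget. It counts weights only (not activations or runtime overhead), and the function names, the 50% reserve, and the example sizes are all hypothetical.

```python
def model_size_bytes(num_parameters, bytes_per_parameter):
    """Storage needed for the weights alone."""
    return num_parameters * bytes_per_parameter


def fits_on_device(num_parameters, bytes_per_parameter, device_memory_bytes,
                   reserve_fraction=0.5):
    """True if the weights fit after reserving part of memory for everything else."""
    budget = device_memory_bytes * (1 - reserve_fraction)
    return model_size_bytes(num_parameters, bytes_per_parameter) <= budget


DEVICE_MEMORY = 8 * 1024 * 1024  # hypothetical controller with 8 MiB of RAM
PARAMS = 2_000_000               # hypothetical model size

print(fits_on_device(PARAMS, 4, DEVICE_MEMORY))  # False: 32-bit floats need 8 MB
print(fits_on_device(PARAMS, 1, DEVICE_MEMORY))  # True: 8-bit weights need 2 MB
```

The second call shows why storing weights at lower precision is a common way to fit a model onto an edge device, at some possible cost in accuracy.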

Comparative Analysis: Cloud vs. Edge AI in Power Systems

When comparing cloud and edge AI processing in power systems, it is essential to consider the specific requirements and constraints of the application. Cloud AI is well-suited for large-scale data analysis, predictive maintenance, and long-term strategic planning due to its ability to leverage comprehensive datasets and collaborative frameworks. Meanwhile, edge AI excels in real-time monitoring, fault detection, and localized control, where immediate responses and data privacy are paramount.

In many cases, a hybrid approach that combines cloud and edge AI processing offers the strengths of both. For instance, edge devices can perform initial data processing and filtering, sending only relevant data to the cloud for deeper analysis and model training. This division of labor can optimize resource utilization, reduce latency for time-critical decisions, and improve the overall efficiency of AI-powered power systems.
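The filtering step in such a hybrid setup might look like the following Python sketch. It assumes "relevant data" means readings outside a tolerance band around a nominal value, plus a compact summary of the full batch; the function name, payload layout, and numbers are hypothetical.

```python
def edge_filter(readings, nominal, tolerance):
    """Reduce a batch of readings to a summary plus the out-of-band samples."""
    if not readings:
        raise ValueError("readings must not be empty")
    # Keep the index with each flagged value so the cloud can place it in time.
    flagged = [(i, v) for i, v in enumerate(readings) if abs(v - nominal) > tolerance]
    summary = {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": sum(readings) / len(readings),
    }
    return {"summary": summary, "flagged": flagged}


# Hypothetical batch of voltage readings around a 230 V nominal value.
batch = [230.0, 230.5, 229.5, 245.0, 230.0]
payload = edge_filter(batch, nominal=230.0, tolerance=10.0)
print(payload["flagged"])          # [(3, 245.0)]
print(payload["summary"]["mean"])  # 233.0
```

Only `payload` would be sent upstream, so the amount of data transmitted depends on the number of flagged samples and not on the raw sampling rate.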

Conclusion

The choice between cloud and edge AI processing in power systems is not a one-size-fits-all decision. Each approach has its strengths and limitations, making it crucial for power system operators to carefully evaluate their specific needs and constraints before implementation. As technology continues to evolve, the convergence of cloud and edge AI processing is likely to offer new opportunities for innovation and improvement in the energy sector. By understanding the capabilities and trade-offs of each approach, stakeholders can harness the full potential of AI to create smarter, more efficient, and resilient power systems.

Stay Ahead in Power Systems Innovation

From intelligent microgrids and energy storage integration to dynamic load balancing and DC-DC converter optimization, the power supply systems domain is rapidly evolving to meet the demands of electrification, decarbonization, and energy resilience.

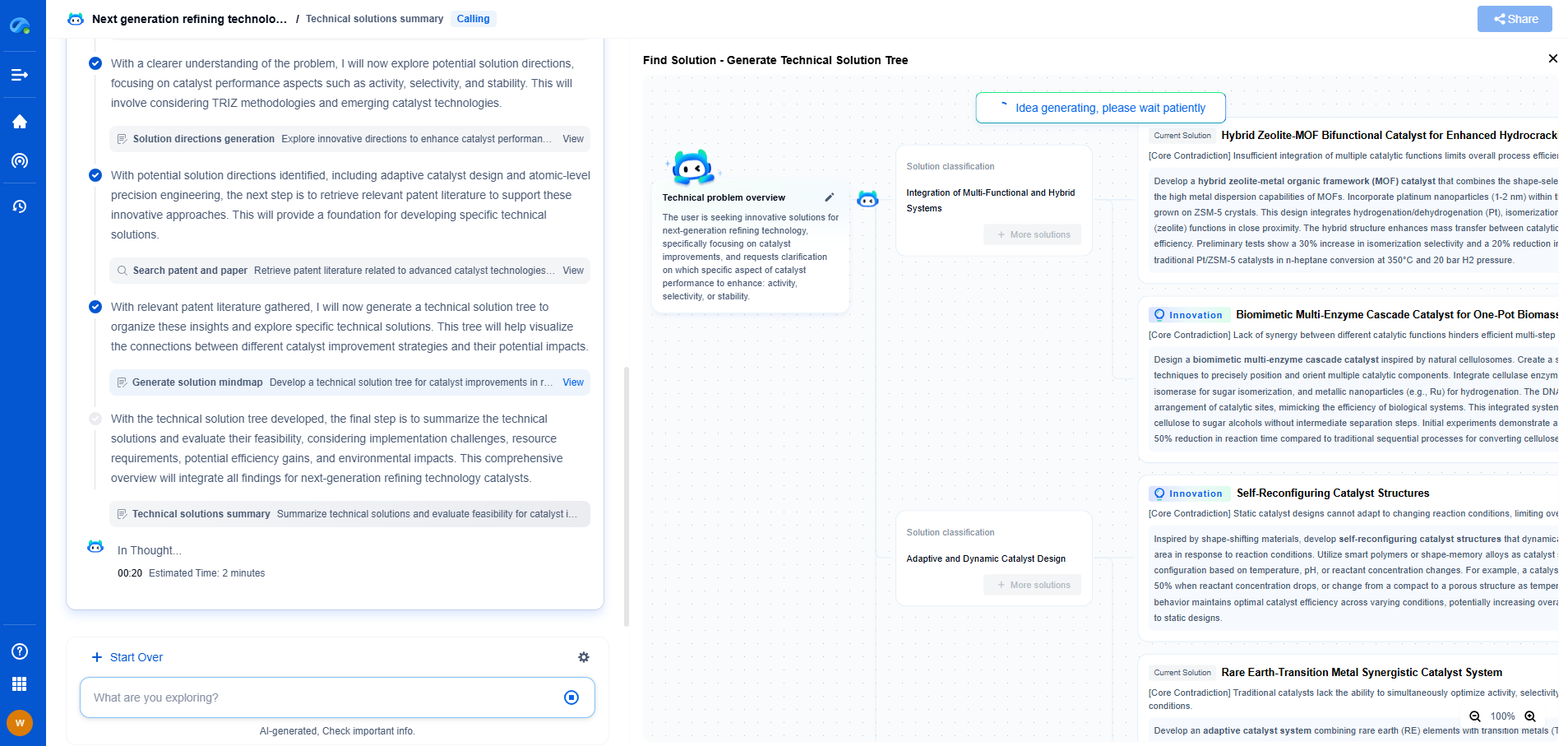

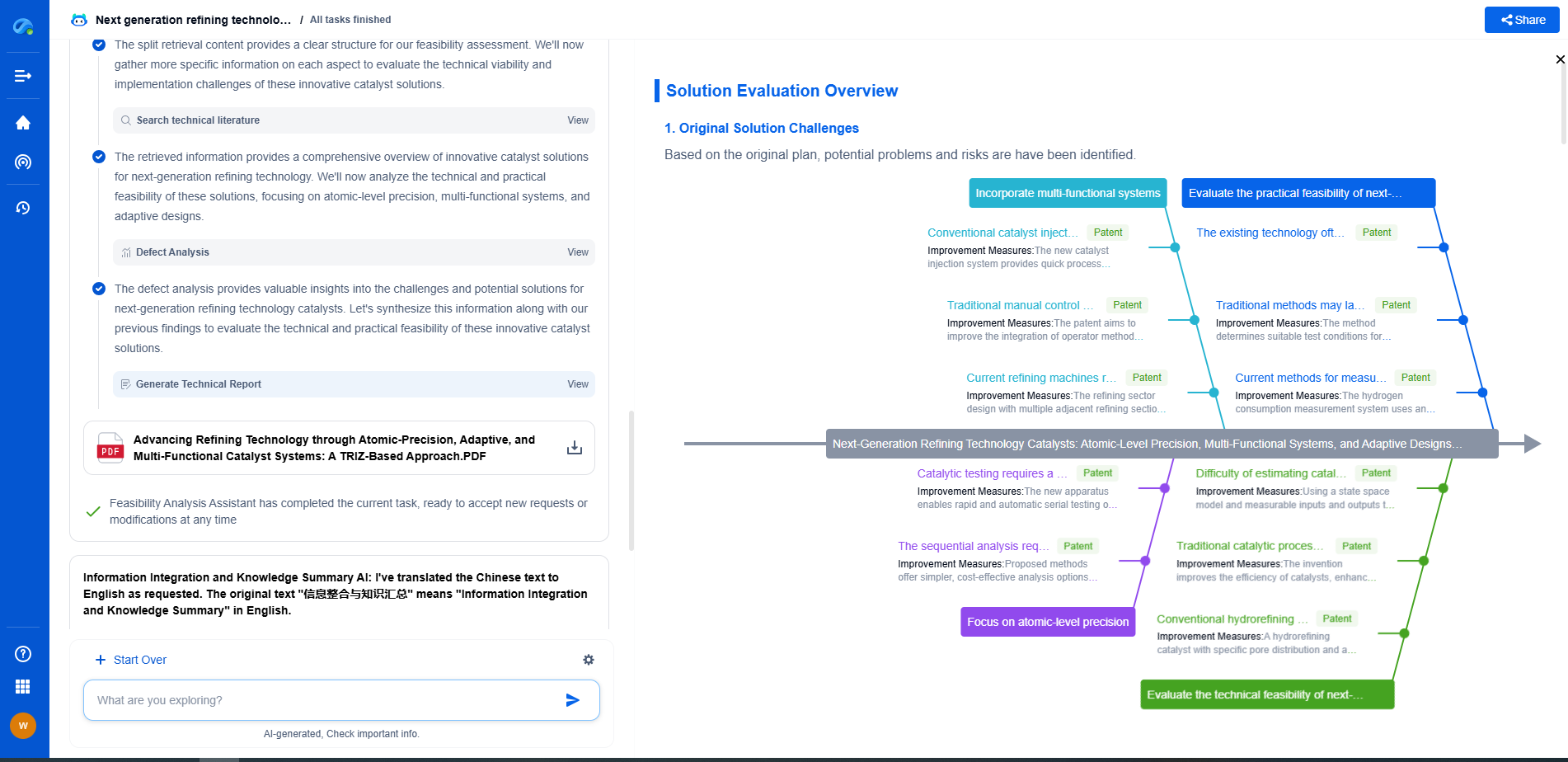

In such a high-stakes environment, how can your R&D and patent strategy keep up?

Patsnap Eureka, our intelligent AI assistant built for R&D professionals in high-tech sectors, empowers you with real-time expert-level analysis, technology roadmap exploration, and strategic mapping of core patents—all within a seamless, user-friendly interface.

👉 Experience how Patsnap Eureka can supercharge your workflow in power systems R&D and IP analysis. Request a live demo or start your trial today.
