Data Compression Techniques for Efficient Measurement Storage

JUL 17, 2025

Understanding Data Compression

Data compression reduces the size of data by encoding the same information in fewer bits than the original representation, which makes storage and transmission more efficient. This is particularly important for measurement data, where instruments and sensors continuously generate large volumes of data that must be stored.

Lossless vs. Lossy Compression

Data compression techniques fall into two broad categories: lossless and lossy. Lossless compression guarantees that the original data can be reconstructed exactly, bit for bit, from the compressed data, which is essential for measurement data, where accuracy and precision are paramount. Common lossless algorithms include Run Length Encoding (RLE), Huffman Coding, and Lempel-Ziv-Welch (LZW) compression.

Lossy compression, by contrast, reduces data size by permanently discarding information deemed less important. This can shrink files dramatically, but the discarded detail cannot be recovered, and the resulting loss of accuracy is often unacceptable for measurement data. Lossy techniques are more common for images, audio, and video, where a minor loss of detail is tolerable.
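To make the distinction concrete, here is a minimal Python sketch. For the lossless case it uses the standard library's `zlib` module, whose DEFLATE format combines LZ77 and Huffman coding and is not one of the algorithms discussed below; for the lossy case it uses simple rounding (quantization) as a stand-in. The readings and the `pack`/`unpack` helpers are hypothetical, for illustration only.

```python
import struct
import zlib

# Hypothetical sensor readings (e.g. temperatures in °C); illustrative values only.
readings = [21.437, 21.441, 21.440, 21.452, 21.449, 21.447]

def pack(values):
    """Illustrative helper: serialize floats as little-endian 64-bit doubles."""
    return struct.pack(f"<{len(values)}d", *values)

def unpack(data):
    """Illustrative helper: inverse of pack()."""
    return list(struct.unpack(f"<{len(data) // 8}d", data))

# Lossless: DEFLATE (via zlib) restores every bit of the original bytes.
raw = pack(readings)
restored = unpack(zlib.decompress(zlib.compress(raw)))
assert restored == readings

# Lossy stand-in: quantizing to 0.01 °C discards information,
# so the original values cannot be recovered from `quantized`.
quantized = [round(v, 2) for v in readings]
print(max(abs(a - b) for a, b in zip(readings, quantized)))
```

The lossless round trip returns the exact values, while each rounded value can differ from its original by up to half the 0.01 °C step. Real lossy codecs are far more elaborate, but they share this property of discarding information for good.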

Popular Data Compression Techniques

1. Run Length Encoding (RLE)

Run Length Encoding is one of the simplest forms of data compression and is particularly effective for data containing long runs of identical consecutive values. It replaces each run with a single value and a count. On data with few runs it can actually increase the size, because every value gains a count. RLE variants are supported in image formats such as BMP and TIFF.
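A minimal Python sketch of RLE on a list of samples, assuming a hypothetical digital-input log; `rle_encode` and `rle_decode` are illustrative names, not a library API. It relies on `itertools.groupby`, which groups only consecutive equal items, exactly the runs RLE exploits.

```python
from itertools import groupby

def rle_encode(values):
    """Illustrative helper: collapse each run of identical consecutive values into (value, count)."""
    return [(value, len(list(run))) for value, run in groupby(values)]

def rle_decode(pairs):
    """Illustrative helper: expand (value, count) pairs back into the original sequence."""
    out = []
    for value, count in pairs:
        out.extend([value] * count)
    return out

# Hypothetical digital-input log sampled at a fixed rate: long runs of 0s and 1s.
samples = [0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0]
encoded = rle_encode(samples)
print(encoded)  # [(0, 4), (1, 3), (0, 5)]
assert rle_decode(encoded) == samples
```

Twelve samples shrink to three pairs here; a sequence with no repeats, by contrast, would yield one pair per sample and grow.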

2. Huffman Coding

Huffman Coding is a variable-length prefix coding technique: it assigns shorter codes to more frequent symbols and longer codes to less frequent ones, and no code is a prefix of another, so the bit stream can be decoded unambiguously. It is most effective when symbol frequencies are highly skewed, and it is widely used as the lossless entropy-coding stage inside formats such as JPEG and MP3.
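The following Python sketch builds a Huffman code table with a min-heap, repeatedly merging the two least frequent subtrees. The input string is a hypothetical stream of quantized sensor states, and `huffman_codes` is an illustrative helper, not part of any library.

```python
import heapq
from collections import Counter

def huffman_codes(symbols):
    """Illustrative helper: build a prefix-free code table {symbol: bitstring}."""
    freq = Counter(symbols)
    if len(freq) == 1:
        # Degenerate case: a single distinct symbol still needs a 1-bit code.
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, tie_breaker, {symbol: partial_code}).
    # The integer tie-breaker keeps heapq from ever comparing the dicts.
    heap = [(w, i, {s: ""}) for i, (s, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two lightest subtrees, prefixing their codes with 0 and 1.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]

# Hypothetical stream of quantized sensor states with a skewed distribution.
data = "AAAAAAAABBBCCD"
codes = huffman_codes(data)
encoded = "".join(codes[s] for s in data)
print(len(encoded), "bits vs", 2 * len(data), "bits")  # 23 bits vs 28 bits
```

A fixed-length code for four symbols needs 2 bits each (28 bits here), while the Huffman code needs 23. A real encoder would also have to store or transmit the code table, or the symbol frequencies, so the decoder can rebuild it.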

3. Lempel-Ziv-Welch (LZW) Compression

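LZW is a dictionary-based compression algorithm that replaces repeated sequences with references to dictionary entries. The dictionary is built on the fly as the data is processed, so the decoder can reconstruct it from the compressed stream without it being stored separately. LZW is effective for text and other data with recurring sequences, and it is used in popular file formats such as GIF and TIFF.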
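A simplified LZW sketch in Python follows. It starts from a dictionary of all 256 single-byte values and adds a new entry each time it meets an unseen sequence; the decoder rebuilds the same dictionary from the codes alone. Assumptions: the dictionary grows without limit and codes are kept as Python integers, whereas formats such as GIF and TIFF pack variable-width codes and cap the dictionary size. Function names are illustrative.

```python
def lzw_compress(data: bytes) -> list[int]:
    """Illustrative helper: encode bytes as a list of dictionary indices."""
    dictionary = {bytes([i]): i for i in range(256)}
    w = b""
    out = []
    for byte in data:
        wc = w + bytes([byte])
        if wc in dictionary:
            w = wc  # keep extending the current match
        else:
            out.append(dictionary[w])
            dictionary[wc] = len(dictionary)  # learn the new sequence
            w = bytes([byte])
    if w:
        out.append(dictionary[w])
    return out

def lzw_decompress(codes: list[int]) -> bytes:
    """Illustrative helper: rebuild the same dictionary on the fly and invert lzw_compress."""
    if not codes:
        return b""
    dictionary = {i: bytes([i]) for i in range(256)}
    w = dictionary[codes[0]]
    out = [w]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == len(dictionary):
            # The code refers to the entry the encoder created in the same step.
            entry = w + w[:1]
        else:
            raise ValueError(f"invalid LZW code {code}")
        out.append(entry)
        dictionary[len(dictionary)] = w + entry[:1]
        w = entry
    return b"".join(out)

message = b"TOBEORNOTTOBEORTOBEORNOT"
codes = lzw_compress(message)
print(len(message), "bytes ->", len(codes), "codes")  # 24 bytes -> 16 codes
assert lzw_decompress(codes) == message
```

Because codes above 255 need more than 8 bits each, the actual saving depends on how the codes are packed into the output stream.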

4. Delta Encoding

Delta Encoding is useful for compressing data whose values change gradually. Instead of storing absolute values, it stores the first value followed by the difference between each pair of consecutive values. In time-series measurement data, where consecutive points usually differ only slightly, these differences are much smaller than the values themselves, so they can be stored in fewer bits or compressed further with an entropy coder such as Huffman Coding.
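A minimal delta-encoding sketch in Python, assuming integer readings such as raw ADC counts (the values below are hypothetical). Integers keep the round trip exact; with floating-point values, subtracting and re-adding can introduce rounding error. Function names are illustrative.

```python
from itertools import accumulate

def delta_encode(values):
    """Illustrative helper: keep the first value, then each difference from the previous one."""
    if not values:
        return []
    return [values[0]] + [b - a for a, b in zip(values, values[1:])]

def delta_decode(deltas):
    """Illustrative helper: a running sum of the deltas restores the original sequence."""
    return list(accumulate(deltas))

# Hypothetical ADC counts from a slowly drifting sensor.
counts = [10240, 10242, 10243, 10243, 10245, 10244]
deltas = delta_encode(counts)
print(deltas)  # [10240, 2, 1, 0, 2, -1]
assert delta_decode(deltas) == counts
```

Every delta after the first fits in a few bits, which is what a subsequent bit-packing or entropy-coding step can exploit.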

5. Burrows-Wheeler Transform (BWT)

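The Burrows-Wheeler Transform rearranges the input so that identical characters tend to cluster into runs, which other algorithms such as RLE can then compress efficiently. BWT does not shrink the data by itself, but it is reversible: given the transformed output and the position of the original string (or an end-of-string marker), the input can be recovered exactly. BWT is the core of the bzip2 compression tool.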
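This naive Python sketch computes the BWT by sorting all rotations of the input and inverts it by rebuilding the rotation table. It assumes a sentinel character (`\0` here) that does not occur in the input and sorts before every other character; bzip2 instead records the index of the original rotation and uses far more efficient sorting, so treat this only as an illustration of the idea. Function names are illustrative.

```python
def bwt(text: str, sentinel: str = "\0") -> str:
    """Illustrative helper: last column of the sorted rotations of text + sentinel."""
    assert sentinel not in text, "sentinel must not occur in the input"
    s = text + sentinel
    rotations = sorted(s[i:] + s[:i] for i in range(len(s)))
    return "".join(row[-1] for row in rotations)

def inverse_bwt(last: str, sentinel: str = "\0") -> str:
    """Illustrative helper: rebuild the sorted rotation table column by column (slow but simple)."""
    table = [""] * len(last)
    for _ in range(len(last)):
        # Prepend the last column to every row, then re-sort.
        table = sorted(last[i] + table[i] for i in range(len(last)))
    # The original string is the unique rotation that ends with the sentinel.
    original = next(row for row in table if row.endswith(sentinel))
    return original[:-1]

transformed = bwt("banana")
print(repr(transformed))  # 'annb\x00aa'
assert inverse_bwt(transformed) == "banana"
```

In the output, the repeated `n` and `a` characters end up adjacent, which is what makes a later RLE or move-to-front stage effective.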

Benefits of Data Compression

Implementing data compression techniques for measurement storage offers several benefits:

- **Reduced Storage Costs**: Compressed data occupies less space, so organizations can keep more measurements on the same storage hardware and lower their storage costs.

- **Faster Data Transmission**: Compressed data needs less bandwidth, so transfers complete sooner, which is crucial in time-sensitive applications.

- **Improved Data Management**: Smaller data sets are quicker to copy, back up, and archive, which makes day-to-day data handling more efficient.

Challenges in Data Compression

While data compression offers numerous advantages, it also presents certain challenges:

- **Compression Time**: The time taken to compress and decompress data can be a bottleneck, particularly for real-time applications.

- **Algorithm Complexity**: Some advanced compression algorithms may require significant computational resources, impacting performance in resource-constrained environments.

- **Data Sensitivity**: For measurement data, maintaining data integrity and accuracy is crucial. Choosing the right compression technique, typically a lossless one, is essential to ensure that no critical information is lost during compression.

Conclusion

Data compression is an essential tool for efficient measurement storage, enabling organizations to manage large volumes of data effectively. By choosing appropriate compression techniques based on the nature of the data and the application's requirements, organizations can benefit from reduced storage costs, faster data transmission, and improved data management. As technology continues to evolve, the development of more sophisticated compression algorithms will further enhance our ability to store and manage data efficiently.

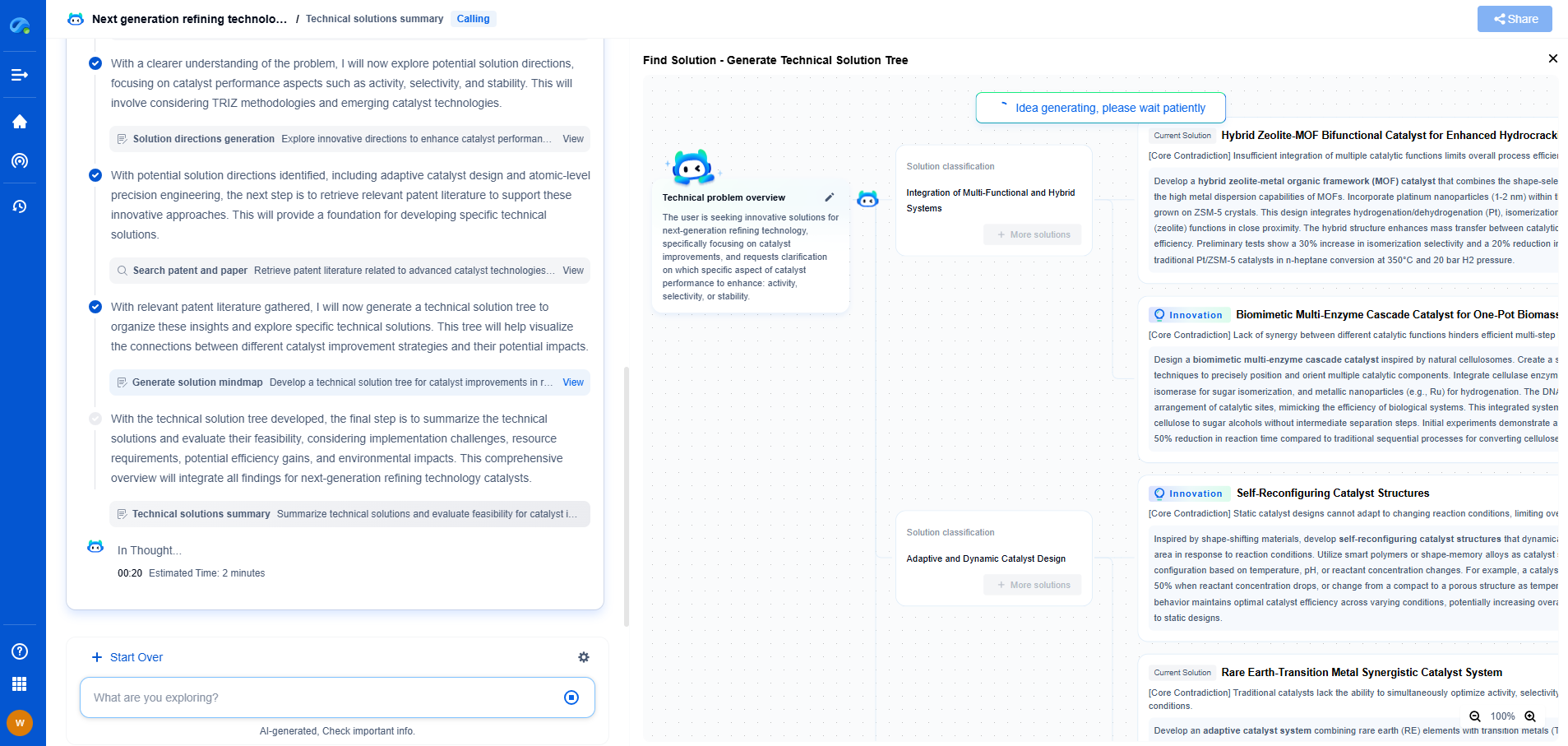

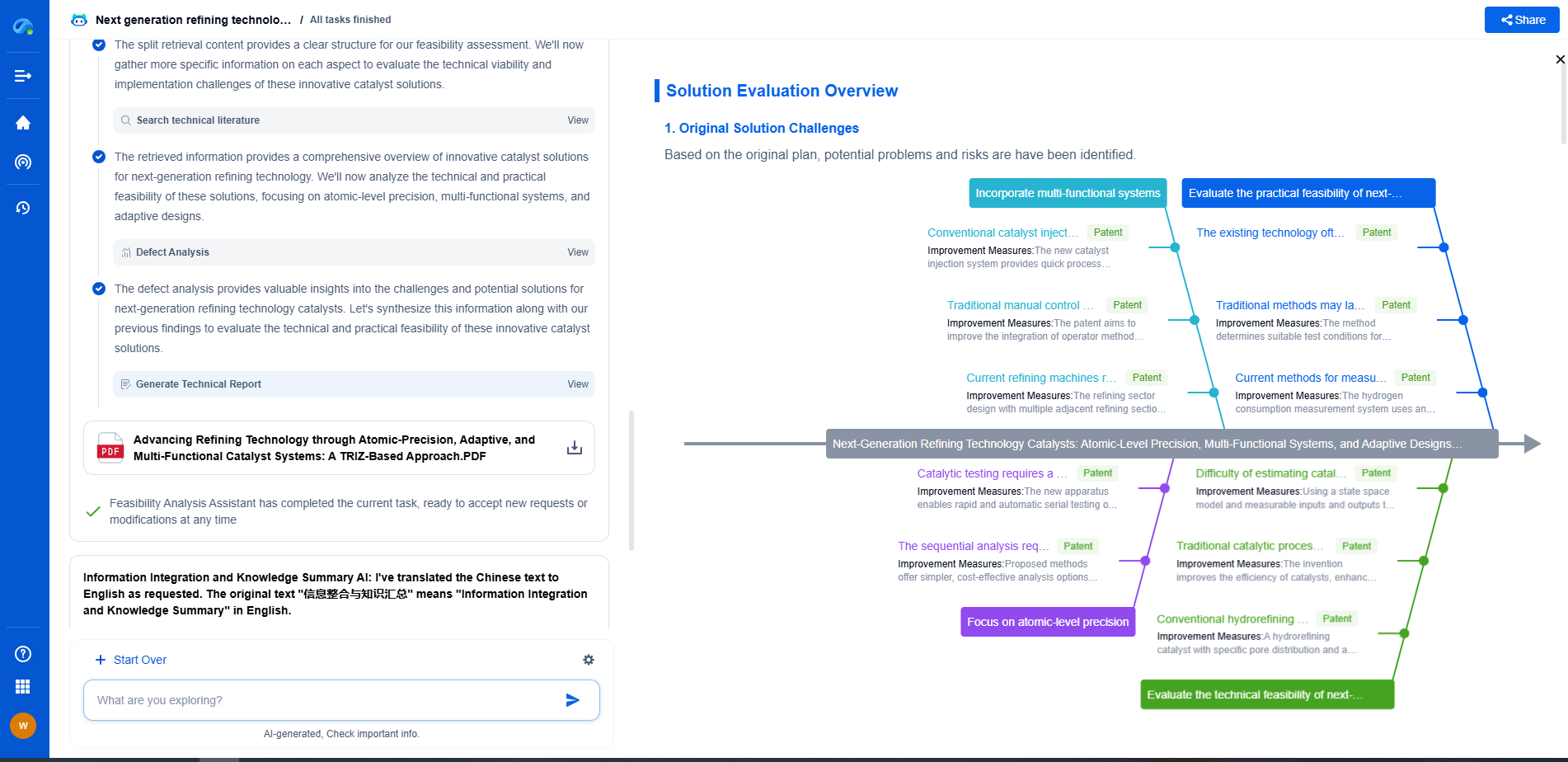

© 2025 PatSnap. All rights reserved.