Designing Autonomous Control Agents: MARL vs. PPO for Industrial Robotics

JUL 2, 2025

In the rapidly evolving landscape of industrial robotics, the integration of autonomous control agents plays a pivotal role in enhancing efficiency and adaptability. As industries strive to automate complex tasks, the choice of an appropriate control strategy becomes crucial. Two prominent approaches in this domain are Multi-Agent Reinforcement Learning (MARL) and Proximal Policy Optimization (PPO). This article delves into these methodologies, exploring their potential and limitations when applied to industrial robotics.

**Understanding Multi-Agent Reinforcement Learning (MARL)**

Multi-Agent Reinforcement Learning (MARL) is an extension of traditional reinforcement learning, where multiple agents interact within a shared environment. This approach is particularly advantageous in industrial settings where tasks are often distributed and require coordination among various robotic units. MARL enables agents to learn policies that optimize collective performance, making it ideal for tasks like assembly lines and warehouse management.

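In MARL, each agent learns through interactions with the environment and with other agents, adapting its strategy to maximize a cumulative reward. The challenge is twofold: agents must balance cooperation and competition, and because every agent is learning at the same time, the environment looks non-stationary from any single agent's point of view, which makes stability and convergence harder to guarantee. Algorithms such as MADDPG (Multi-Agent Deep Deterministic Policy Gradient), which trains decentralized actors with centralized critics, and QMIX, which factorizes a joint value function for cooperative tasks, have shown promise in addressing these challenges in research benchmarks and are candidates for complex industrial scenarios.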
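
To make the idea of agents learning from a shared reward concrete, here is a minimal sketch using independent Q-learning, a much simpler baseline than MADDPG or QMIX. The task is a hypothetical toy: two robots each pick one of two stations, and the team earns a reward of 1 only when they pick different stations. The function name, hyperparameters, and reward rule are all assumptions made for illustration.

```python
import random


def train_two_robots(steps=2000, alpha=0.1, epsilon=0.2, seed=0):
    """Independent Q-learning for two robots choosing between two stations.

    Hypothetical toy task: the team earns reward 1 when the robots pick
    different stations and 0 when they collide on the same one.
    """
    rng = random.Random(seed)
    # q[i][a] is robot i's running estimate of the team reward for station a.
    q = [[0.0, 0.0], [0.0, 0.0]]

    def greedy(values):
        # Index of the largest estimate; ties resolve to the lowest index.
        return max(range(len(values)), key=values.__getitem__)

    for _ in range(steps):
        # Each robot acts on its own estimates (epsilon-greedy), with no
        # knowledge of what the other robot is about to do.
        actions = [
            rng.randrange(2) if rng.random() < epsilon else greedy(q[i])
            for i in range(2)
        ]
        reward = 1.0 if actions[0] != actions[1] else 0.0
        # Both robots receive the same team reward and update independently.
        for i, a in enumerate(actions):
            q[i][a] += alpha * (reward - q[i][a])

    return q, [greedy(q[0]), greedy(q[1])]


q_values, stations = train_two_robots()
print("Preferred stations:", stations)
```

In this toy setting the two learners typically settle on different stations without ever communicating. Each robot's estimates depend on what the other is currently doing, which is the non-stationarity that more sophisticated MARL algorithms are designed to handle.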

**Exploring Proximal Policy Optimization (PPO)**

Proximal Policy Optimization (PPO) is another powerful reinforcement learning technique that has gained significant traction in robotic control. Strictly speaking, MARL describes a problem setting while PPO is a specific algorithm, so the two are not direct alternatives. In practice, though, PPO is most often used to train a single agent. It is a policy gradient method: it optimizes the policy directly and constrains each update so that learning stays stable.

PPO stands out for the stability of its updates. It uses a clipped objective function that limits how far a single update can move the policy away from the one that collected the data, thus avoiding destabilization. Exploration is handled separately, typically through a stochastic policy and an optional entropy bonus. This combination makes PPO particularly effective for tasks requiring precise control, such as robotic manipulation and navigation in industrial environments. Its robustness and ease of implementation have made it a popular choice for developers aiming to deploy autonomous agents quickly.
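
The clipped objective can be sketched in a few lines. The version below is a minimal, framework-free illustration of the standard clipped surrogate, averaged over a batch of samples. The function name and argument names are assumptions for this example, and a real training loop would compute the same quantity on tensors and maximize it by gradient ascent.

```python
import math


def ppo_clip_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Average PPO clipped surrogate objective over a batch of samples.

    new_logp / old_logp: log-probabilities of the taken actions under the
    current policy and under the policy that collected the data.
    advantages: advantage estimates for those actions.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        # Probability ratio between the new and the old policy.
        ratio = math.exp(lp_new - lp_old)
        # Restrict the ratio to [1 - eps, 1 + eps].
        clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# The ratio is 1.5 but the advantage is positive, so the gain is capped at 1.2.
capped = ppo_clip_objective([math.log(1.5)], [0.0], [1.0])
# With a negative advantage the unclipped, more pessimistic term is kept.
uncapped = ppo_clip_objective([math.log(1.5)], [0.0], [-1.0])
print(capped, uncapped)
```

Because the objective stops rewarding ratio changes beyond the clip range, the optimizer has no incentive to push the policy far from the data-collecting policy in a single update.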

**Comparative Analysis: MARL vs. PPO in Industrial Robotics**

When deciding between MARL and PPO for industrial robotics, several factors must be considered. MARL is inherently suited for environments where multiple robots must operate collaboratively. It offers scalability and flexibility, allowing for dynamic adaptation as the number of agents or complexity of tasks increases. However, MARL's complexity in coordination and communication can pose challenges, particularly in terms of computational resources and convergence speed.
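
One source of that computational cost is easy to quantify. If a single centralized learner has to reason over every combination of robot actions, the joint action space grows exponentially with the number of robots. The sketch below assumes a hypothetical fleet in which each robot has six discrete actions; the figure of six is purely illustrative.

```python
def joint_action_count(actions_per_robot, num_robots):
    """Number of joint actions when every robot picks one action per step."""
    return actions_per_robot ** num_robots


# Hypothetical fleet: six discrete actions per robot.
fleet_sizes = [1, 2, 4, 8]
counts = [joint_action_count(6, n) for n in fleet_sizes]
for n, count in zip(fleet_sizes, counts):
    print(f"{n} robots -> {count} joint actions")
```

This growth is one reason practical MARL methods tend to rely on decentralized policies or factorized value functions rather than a single learner over the full joint action space.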

On the other hand, PPO is favored for its simplicity and effectiveness in single-agent scenarios. Its straightforward implementation and stable learning process make it suitable for tasks where a single robot performs independent operations. PPO is also comparatively forgiving to tune, a significant advantage in industries where rapid deployment and iteration are essential. As an on-policy method, however, it generally needs fresh interaction data, often gathered in simulation, whenever the task or environment changes.

**Applications and Future Prospects**

The choice between MARL and PPO can significantly impact the application and efficiency of industrial robots. Industries such as manufacturing, logistics, and quality assurance can benefit from these advanced control strategies. For instance, MARL can optimize operations in automated warehouses by enabling multiple robots to coordinate in real-time. In contrast, PPO can enhance robotic arms used in assembly lines, providing precise and adaptive control.

Looking ahead, the future of autonomous control in industrial robotics lies in hybrid approaches that combine the strengths of both MARL and PPO. Multi-agent PPO variants such as MAPPO and independent PPO (IPPO) already point in this direction. Such strategies could leverage MARL's collaborative capabilities and PPO's stability to create more versatile and efficient robots. Continued advancements in computational power and algorithm development will further unlock the potential of these methodologies, driving innovation across industries.

**Conclusion**

The landscape of industrial robotics is being transformed by the advent of autonomous control agents. As industries navigate the complexities of automation, the choice between Multi-Agent Reinforcement Learning and Proximal Policy Optimization becomes crucial. Each method offers distinct advantages, from MARL's collaborative potential to PPO's stability and adaptability. By understanding these approaches' unique capabilities, developers can better design and implement autonomous agents that meet the specific demands of industrial environments. As technology evolves, the integration of these advanced control strategies will continue to propel the industrial robotics sector into a new era of efficiency and innovation.

Ready to Reinvent How You Work on Control Systems?

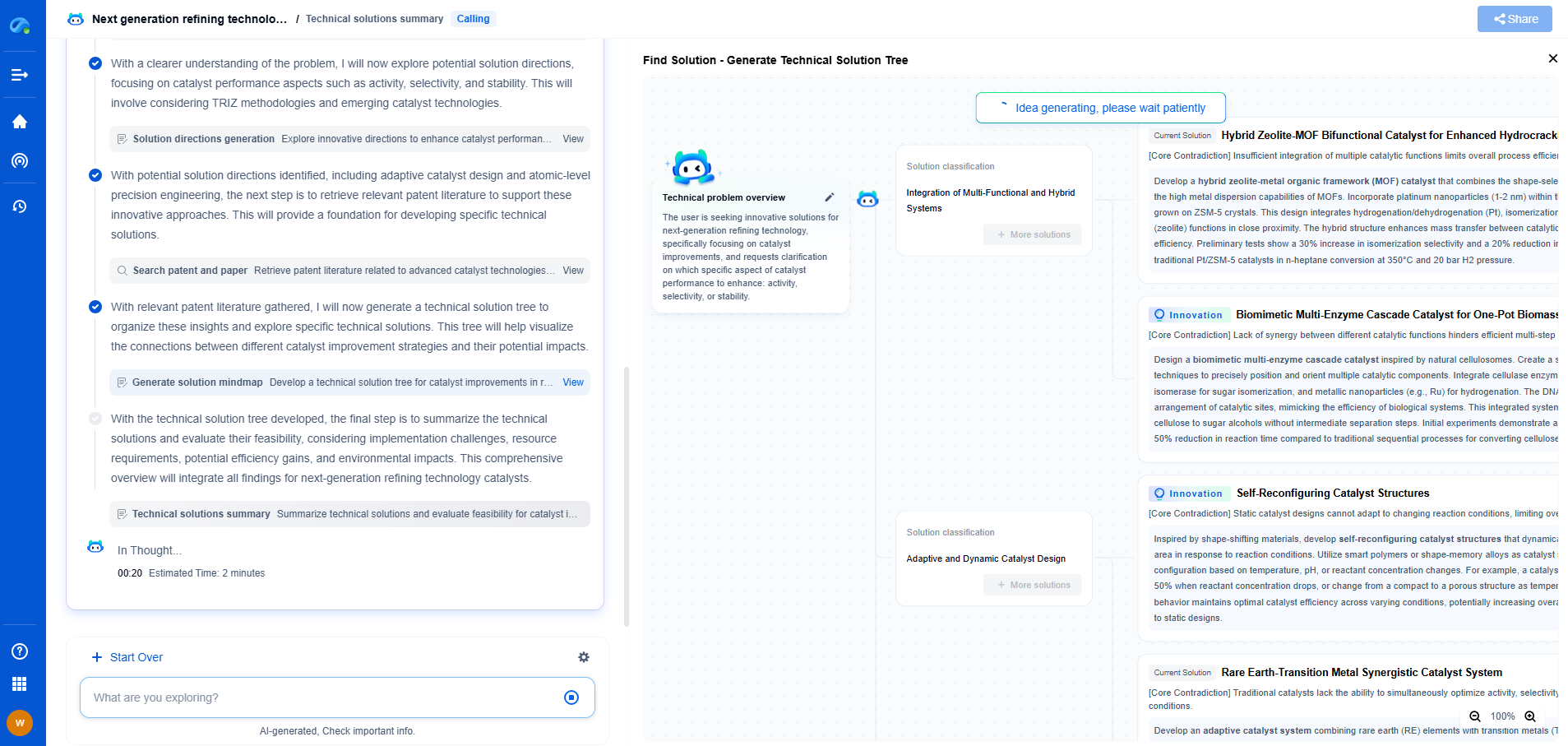

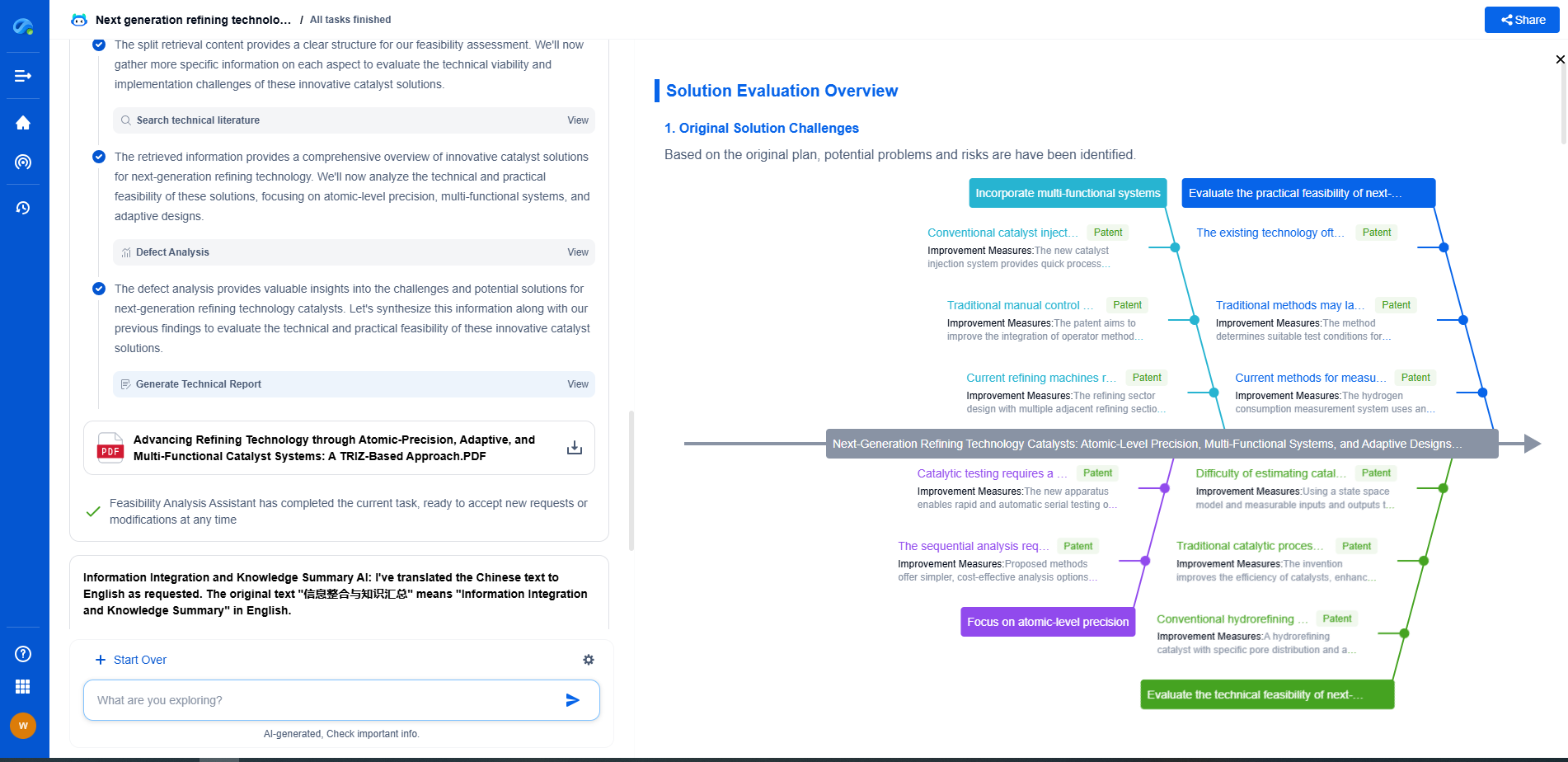

Designing, analyzing, and optimizing control systems involves complex decision-making, from selecting the right sensor configurations to ensuring robust fault tolerance and interoperability. If you’re spending countless hours digging through documentation, standards, patents, or simulation results — it's time for a smarter way to work.

Patsnap Eureka is your intelligent AI Agent, purpose-built for R&D and IP professionals in high-tech industries. Whether you're developing next-gen motion controllers, debugging signal integrity issues, or navigating complex regulatory and patent landscapes in industrial automation, Eureka helps you cut through technical noise and surface the insights that matter—faster.

👉 Experience Patsnap Eureka today — Power up your Control Systems innovation with AI intelligence built for engineers and IP minds.

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com