Explainability vs Performance: The AI Transparency Dilemma

JUN 26, 2025

In the rapidly evolving landscape of artificial intelligence, a central debate has emerged: how to balance explainability against performance. The dilemma matters to businesses, policymakers, and everyday users because it bears directly on trust, accountability, and efficacy. Understanding this trade-off is essential for anyone who develops or uses AI technologies.

The Essence of Explainability

Explainability in AI refers to the degree to which humans can understand how an AI system reaches its decisions. It underpins transparency: users can verify AI outcomes and judge how far to trust them. Explainability is particularly important in high-stakes fields such as healthcare, finance, and autonomous vehicles, where understanding the rationale behind a decision can help catch errors before they cause harm and makes oversight easier. The push for explainable AI is not merely a technical challenge; it reflects a philosophical commitment to ensuring that technology serves humanity in an accountable manner.

The Drive for Performance

On the other hand, performance in AI is usually measured by a system's accuracy, speed, and efficiency at its designated tasks. High-performing models such as deep neural networks have demonstrated remarkable capabilities in image recognition, natural language processing, and game playing. These models often function as black boxes: their internal workings are not easily interpretable by humans. The emphasis on performance has produced breakthroughs once thought out of reach and is transforming entire industries.

The Trade-Off: Sacrificing Clarity for Capability

The core of the explainability vs. performance dilemma lies in the trade-off between these two objectives. More complex models often perform better but tend to be harder to interpret, while simplifying a model to make it explainable often costs some performance. Stakeholders must therefore weigh their priorities against the context in which the AI is deployed. A high-performing model may be preferable where accuracy is paramount, whereas a more explainable model may be essential where trust and transparency are integral.

Implications for Different Stakeholders

For developers and researchers, the challenge is to design AI systems that strike a balance between explainability and performance. Techniques such as feature visualization, model distillation, and inherently interpretable algorithms help demystify how AI systems reach their decisions without significantly compromising performance.
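As a minimal illustration of model distillation, the Python sketch below treats a small hand-written function as the opaque "teacher" and fits a one-rule "student" (a decision stump: a single threshold on a single feature) to the teacher's labels. The teacher, its curved decision boundary, and the helper names (`teacher`, `distill_stump`, `fidelity`) are hypothetical and chosen only for illustration; practical distillation usually trains a richer student, such as a shallow decision tree, and often uses the teacher's probability scores rather than hard labels. The quantity to watch is fidelity, the fraction of inputs on which student and teacher agree.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]
Stump = Tuple[int, float, int]  # (feature index, threshold, label when feature > threshold)


def teacher(x: Point) -> int:
    """Hypothetical opaque model: a curved decision boundary treated as a black box."""
    return 1 if x[0] ** 2 + x[1] > 0.5 else 0


def predict_stump(stump: Stump, x: Point) -> int:
    """Apply the single human-readable rule encoded by the stump."""
    feature, threshold, above = stump
    return above if x[feature] > threshold else 1 - above


def distill_stump(points: List[Point]) -> Stump:
    """Fit a one-rule student to the teacher's labels by exhaustive search."""
    labels = [teacher(p) for p in points]  # the teacher supplies the training targets
    best, best_hits = (0, 0.0, 1), -1
    for feature in range(2):
        for threshold in sorted({p[feature] for p in points}):
            for above in (0, 1):
                stump = (feature, threshold, above)
                hits = sum(predict_stump(stump, p) == y for p, y in zip(points, labels))
                if hits > best_hits:
                    best, best_hits = stump, hits
    return best


def fidelity(stump: Stump, points: List[Point]) -> float:
    """Fraction of points on which the student agrees with the teacher."""
    return sum(predict_stump(stump, p) == teacher(p) for p in points) / len(points)


rng = random.Random(0)
train_pts = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]
holdout_pts = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]

student = distill_stump(train_pts)
print("student rule (feature, threshold, label above):", student)
print("train fidelity:", round(fidelity(student, train_pts), 3))
print("held-out fidelity:", round(fidelity(student, holdout_pts), 3))
```

Because the student is a single rule, it can be read and audited directly. The gap between its held-out fidelity and 100% is a rough measure of the teacher behavior that the simplified explanation leaves out, which is the explainability vs. performance trade-off in miniature.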

For businesses, the decision often hinges on the application domain and regulatory requirements. In sectors with stringent compliance obligations, such as finance and healthcare, explainability becomes a key factor in AI adoption. Transparent models can help mitigate risk by making it easier to check that AI-driven decisions are fair and unbiased.

Policymakers and regulators also play a crucial role in this dilemma. By setting guidelines and standards for AI transparency, they can help promote the responsible use of AI technology. Regulatory bodies are increasingly emphasizing the need for explainability, recognizing its importance in protecting consumer rights and fostering public trust.

Building Trust through Hybrid Approaches

In response to the explainability vs. performance dilemma, hybrid approaches are emerging as a viable solution. They pair high-performing algorithms with interpretability techniques so that neither goal is heavily sacrificed. Techniques such as surrogate models, attention mechanisms, and Local Interpretable Model-agnostic Explanations (LIME) are being used to increase transparency while maintaining robust performance.
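The idea behind LIME can be sketched in a few steps: perturb the input being explained, query the black-box model on the perturbed samples, weight each sample by its closeness to the original input, and fit a weighted linear model whose coefficients serve as the local explanation. The code below is a simplified, from-scratch Python illustration of that idea, not the API of the `lime` package; the model `black_box_proba`, the Gaussian perturbation scale, and the exponential kernel width are assumptions chosen for the example.

```python
import math
import random
from typing import Callable, List, Tuple

Point = Tuple[float, float]


def black_box_proba(x: Point) -> float:
    """Hypothetical opaque model returning a probability for the positive class."""
    z = x[0] ** 2 + 2.0 * x[1] - 1.0
    return 1.0 / (1.0 + math.exp(-z))


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a @ w = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def explain_locally(
    model: Callable[[Point], float],
    x: Point,
    n_samples: int = 2000,
    scale: float = 0.1,
    kernel_width: float = 0.2,
    seed: int = 0,
) -> List[float]:
    """LIME-style local surrogate (sketch): returns [intercept, coef_x0, coef_x1]."""
    rng = random.Random(seed)
    xtx = [[0.0] * 3 for _ in range(3)]  # weighted X^T X
    xty = [0.0] * 3                      # weighted X^T y
    for _ in range(n_samples):
        # 1. Perturb the instance being explained.
        z = (x[0] + rng.gauss(0.0, scale), x[1] + rng.gauss(0.0, scale))
        # 2. Query the black box on the perturbed sample.
        y = model(z)
        # 3. Weight the sample by its proximity to x (exponential kernel).
        dist_sq = (z[0] - x[0]) ** 2 + (z[1] - x[1]) ** 2
        w = math.exp(-dist_sq / kernel_width ** 2)
        # 4. Accumulate the weighted least-squares normal equations.
        phi = (1.0, z[0], z[1])
        for i in range(3):
            xty[i] += w * phi[i] * y
            for j in range(3):
                xtx[i][j] += w * phi[i] * phi[j]
    # 5. The fitted linear coefficients are the local explanation.
    return solve(xtx, xty)


coefs = explain_locally(black_box_proba, (0.1, 0.2))
print("intercept=%.3f  x0=%.3f  x1=%.3f" % tuple(coefs))
```

For this made-up model, the true local sensitivity at (0.1, 0.2) is about 0.046 for x0 and 0.46 for x1, so the fitted coefficients should come out close to those values: the explanation says the second feature dominates this particular prediction, even though the model itself is never opened up.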

Conclusion: Navigating the AI Transparency Dilemma

The explainability versus performance dilemma in AI is an ongoing challenge that requires careful consideration from all involved parties. By understanding the nuances of this issue and exploring innovative solutions, stakeholders can work towards AI systems that are not only efficient and effective but also transparent and trustworthy. The balance between explainability and performance will continue to evolve as AI technology advances, and it is the responsibility of the AI community to ensure that this balance serves the greater good of society.

Stay Ahead in Power Systems Innovation

From intelligent microgrids and energy storage integration to dynamic load balancing and DC-DC converter optimization, the power supply systems domain is rapidly evolving to meet the demands of electrification, decarbonization, and energy resilience.

In such a high-stakes environment, how can your R&D and patent strategy keep up?

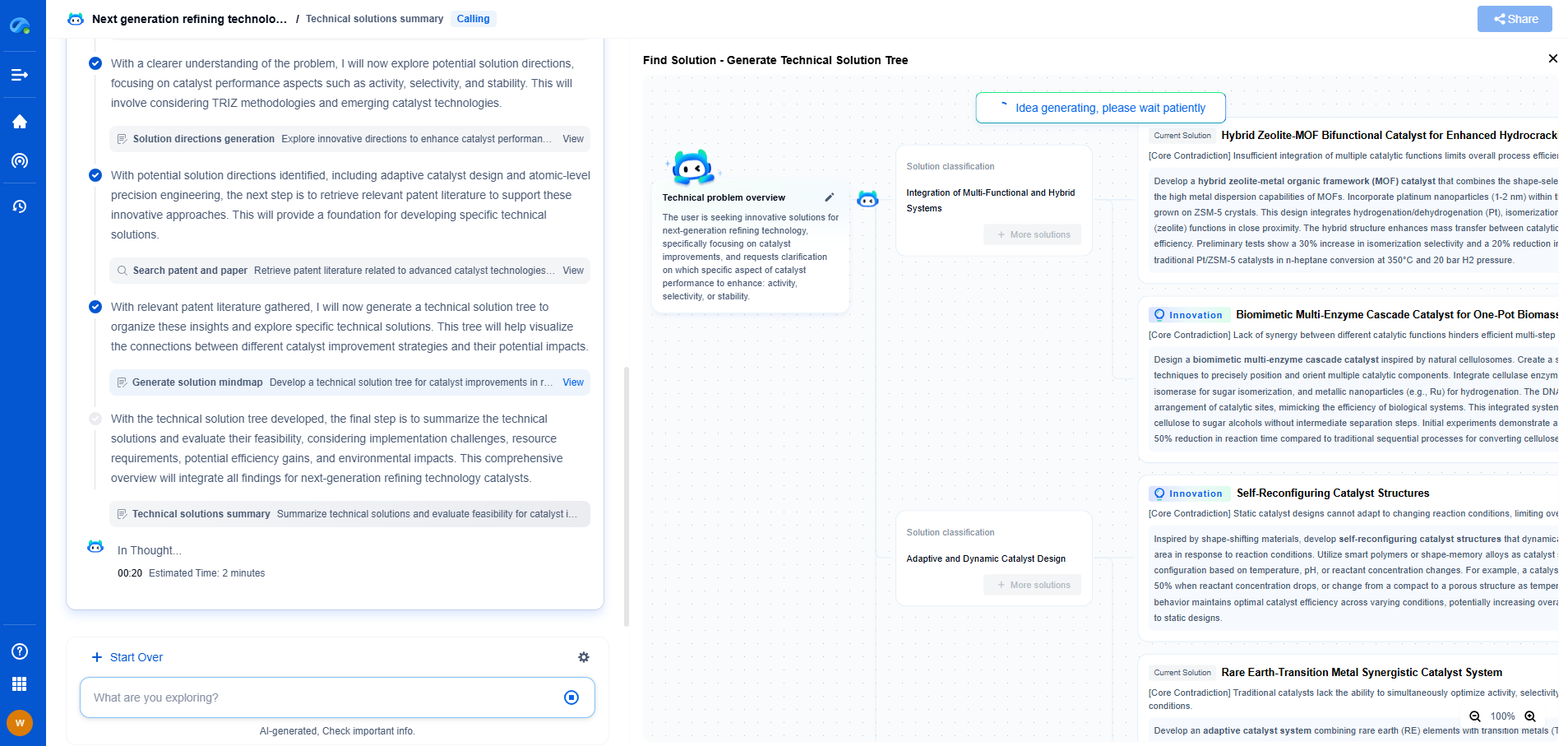

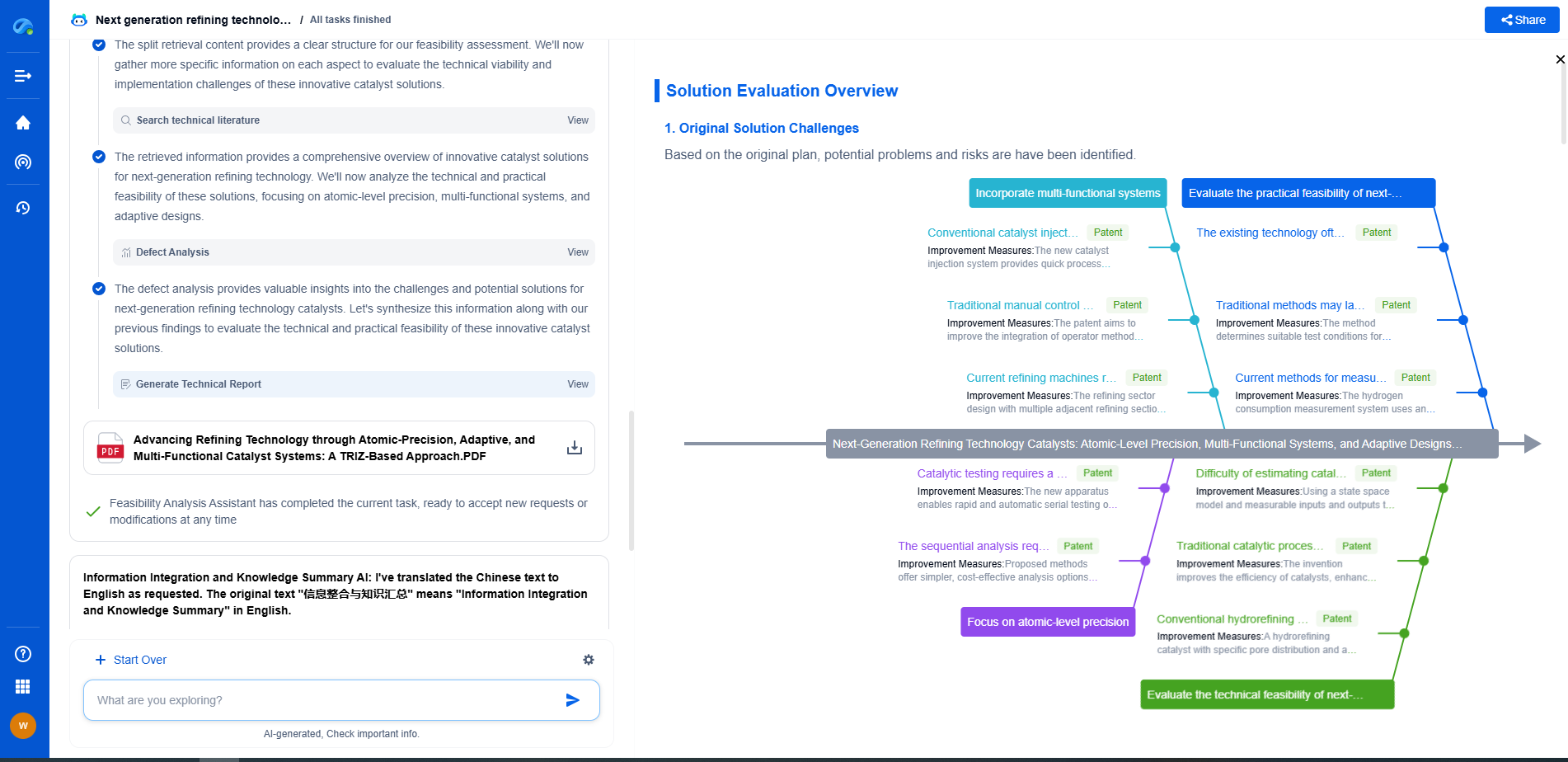

Patsnap Eureka, our intelligent AI assistant built for R&D professionals in high-tech sectors, empowers you with real-time expert-level analysis, technology roadmap exploration, and strategic mapping of core patents—all within a seamless, user-friendly interface.

👉 Experience how Patsnap Eureka can supercharge your workflow in power systems R&D and IP analysis. Request a live demo or start your trial today.

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com