From Offline to Online RL: Fine-Tuning LLMs for Verifiable Control Tasks

JUL 2, 2025

Reinforcement learning (RL) enables machines to learn from interactions with their environment. RL was originally formulated as an online process, in which an agent acts and learns from the feedback it receives. Offline RL, in which an agent learns from a fixed dataset, emerged as an alternative for settings where live interaction is expensive or risky. With the growing capabilities of large language models (LLMs), there is increasing interest in starting from models trained on static data and improving them through online RL. In this blog, we explore the transition from offline to online RL and the fine-tuning of LLMs for verifiable control tasks.

Understanding Offline and Online RL

Offline RL refers to the process where agents learn from a previously collected static dataset without any further interaction with the environment. It is particularly useful in scenarios where live data collection is expensive or risky. Online RL, by contrast, lets agents interact with the environment during training and update their policies from the feedback they collect. This makes online RL better suited to adapting to changing environments and refining strategies, at the cost of having to act while the policy is still imperfect.

The Role of Large Language Models

Large language models, such as GPT-3 and its successors, have demonstrated remarkable capabilities in understanding and generating human-like text. These models have been pre-trained on vast amounts of data, equipping them with general knowledge across various domains. However, their potential goes beyond just language processing; they can also be adapted for RL tasks. By utilizing LLMs, researchers aim to bridge the gap between natural language understanding and decision-making in complex control tasks.

Fine-Tuning LLMs for Control Tasks

Fine-tuning is a critical process in leveraging pre-trained LLMs for specific applications. In the context of RL, fine-tuning involves adjusting the model’s parameters to optimize its performance in a given environment. This process enables the LLM to learn the intricacies of control tasks, such as robotics or automated systems, where decision-making is crucial. By fine-tuning LLMs, developers can harness their vast linguistic knowledge and apply it to create more intelligent and adaptable control systems.
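As a rough illustration of "adjusting the model's parameters to optimize its performance in a given environment", the sketch below applies a REINFORCE-style policy-gradient update to a tiny softmax policy. This is a stand-in, not an LLM: the three logits play the role of the pre-trained parameters, and the reward function is a hypothetical environment that favors one action. Real LLM fine-tuning applies the same idea to billions of parameters, usually with more elaborate algorithms.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_step(logits, reward_fn, rng, lr=0.5):
    """Sample an action, score it, and nudge the logits along the policy gradient."""
    probs = softmax(logits)
    action = rng.choices(range(len(logits)), weights=probs)[0]
    reward = reward_fn(action)
    # d/d logit_i of log pi(action) = 1[i == action] - probs[i]
    return [
        x + lr * reward * ((1.0 if i == action else 0.0) - p)
        for i, (x, p) in enumerate(zip(logits, probs))
    ]

def fine_tune(reward_fn, n_actions=3, steps=300, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * n_actions  # stands in for the pre-trained parameters
    for _ in range(steps):
        logits = reinforce_step(logits, reward_fn, rng)
    return softmax(logits)

# Hypothetical environment: only action 2 earns reward.
probs = fine_tune(lambda a: 1.0 if a == 2 else 0.0)
```

After training, most of the probability mass sits on the rewarded action. The update only ever uses sampled actions and their rewards, which is what allows the same recipe to be driven by feedback from an environment.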

Challenges in Transitioning from Offline to Online RL

While the potential of LLMs in online RL is promising, several challenges must be addressed to ensure successful implementation. One significant obstacle is the balance between exploration and exploitation. In online RL, agents must explore the environment to gather new information, while also exploiting known strategies for optimal performance. Striking this balance is essential to prevent models from becoming stuck in suboptimal solutions.
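A two-armed bandit is perhaps the smallest setting in which this trade-off shows up. The sketch below assumes hypothetical, deterministic payoffs and compares a purely greedy agent with an epsilon-greedy one. The names `ARM_REWARDS` and `run_bandit` are illustrative only.

```python
import random

ARM_REWARDS = (0.3, 0.8)  # hypothetical deterministic payoffs; arm 1 is better

def run_bandit(epsilon, steps=500, seed=0):
    rng = random.Random(seed)
    estimates = [0.0] * len(ARM_REWARDS)
    counts = [0] * len(ARM_REWARDS)
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(ARM_REWARDS))  # explore: pick any arm
        else:
            # exploit: pick the arm with the highest current estimate
            arm = max(range(len(ARM_REWARDS)), key=lambda i: estimates[i])
        reward = ARM_REWARDS[arm]
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # running mean
        total += reward
    return estimates, total / steps

greedy_est, greedy_avg = run_bandit(epsilon=0.0)
eps_est, eps_avg = run_bandit(epsilon=0.1)
```

The greedy agent tries arm 0 first, sees a positive reward, and never samples arm 1, so it stays at the suboptimal payoff. The epsilon-greedy agent occasionally tries the other arm, discovers the higher payoff, and then exploits it for most of the remaining steps.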

Moreover, ensuring the verifiability of control tasks is crucial. In safety-critical applications, such as autonomous vehicles or healthcare systems, the decisions made by RL agents must be reliable and transparent. Researchers are working on methods to improve the interpretability of LLMs, enabling stakeholders to verify and trust the actions taken by these models.
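One way to read "verifiable" is that a proposed control plan can be checked by a program rather than judged by opinion. The sketch below is an assumption about what such a check could look like, not a method described above: it simulates a unit-mass cart (a double integrator) under a sequence of force commands and reports whether the plan respects an actuator limit and stops near the target. The function name, tolerances, and dynamics are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Verdict:
    ok: bool
    reason: str

def verify_plan(actions, target=1.0, dt=0.1, max_force=1.0,
                pos_tol=0.05, vel_tol=0.05):
    """Simulate a unit-mass cart from rest and check the plan against the spec."""
    pos, vel = 0.0, 0.0
    for i, u in enumerate(actions):
        if abs(u) > max_force:
            return Verdict(False, f"step {i}: command exceeds actuator limit")
        vel += u * dt    # semi-implicit Euler: update velocity first,
        pos += vel * dt  # then position with the new velocity
    if abs(pos - target) > pos_tol:
        return Verdict(False, "final position outside tolerance")
    if abs(vel) > vel_tol:
        return Verdict(False, "final velocity outside tolerance")
    return Verdict(True, "all checks passed")

# Accelerate for 10 steps, then brake for 10 steps.
good = verify_plan([1.0] * 10 + [-1.0] * 10)
too_strong = verify_plan([2.0] * 5)
too_short = verify_plan([1.0] * 10)
```

A check like this yields a pass or fail signal together with a human-readable reason. The pass or fail result could serve as a reward during online RL, and the reason gives stakeholders something concrete to audit.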

Case Studies and Applications

Applications of LLMs in online RL are being explored in several control domains. In robotic manipulation, fine-tuned LLMs have been used to direct robotic arms, with the aim of improving precision and adaptability. In autonomous navigation, LLMs have been employed to support decision-making algorithms, with the goal of improving the efficiency and safety of self-driving cars.

Future Directions

The future of online RL with LLMs holds immense potential. As these models continue to evolve, researchers are exploring ways to integrate multimodal data, such as images and audio, to enhance decision-making capabilities. Furthermore, advancements in hardware and computational resources will enable real-time fine-tuning, making LLMs more accessible for a wider range of applications.

Conclusion

The transition from offline to online RL marks a significant milestone in the development of intelligent systems. By fine-tuning large language models for verifiable control tasks, researchers are paving the way for more adaptive and reliable artificial intelligence. While challenges remain, the ongoing advancements in this field promise exciting opportunities for innovation across various industries. As we continue to explore the capabilities of LLMs in online RL, we are poised to unlock new frontiers in AI-driven decision-making and control systems.

Ready to Reinvent How You Work on Control Systems?

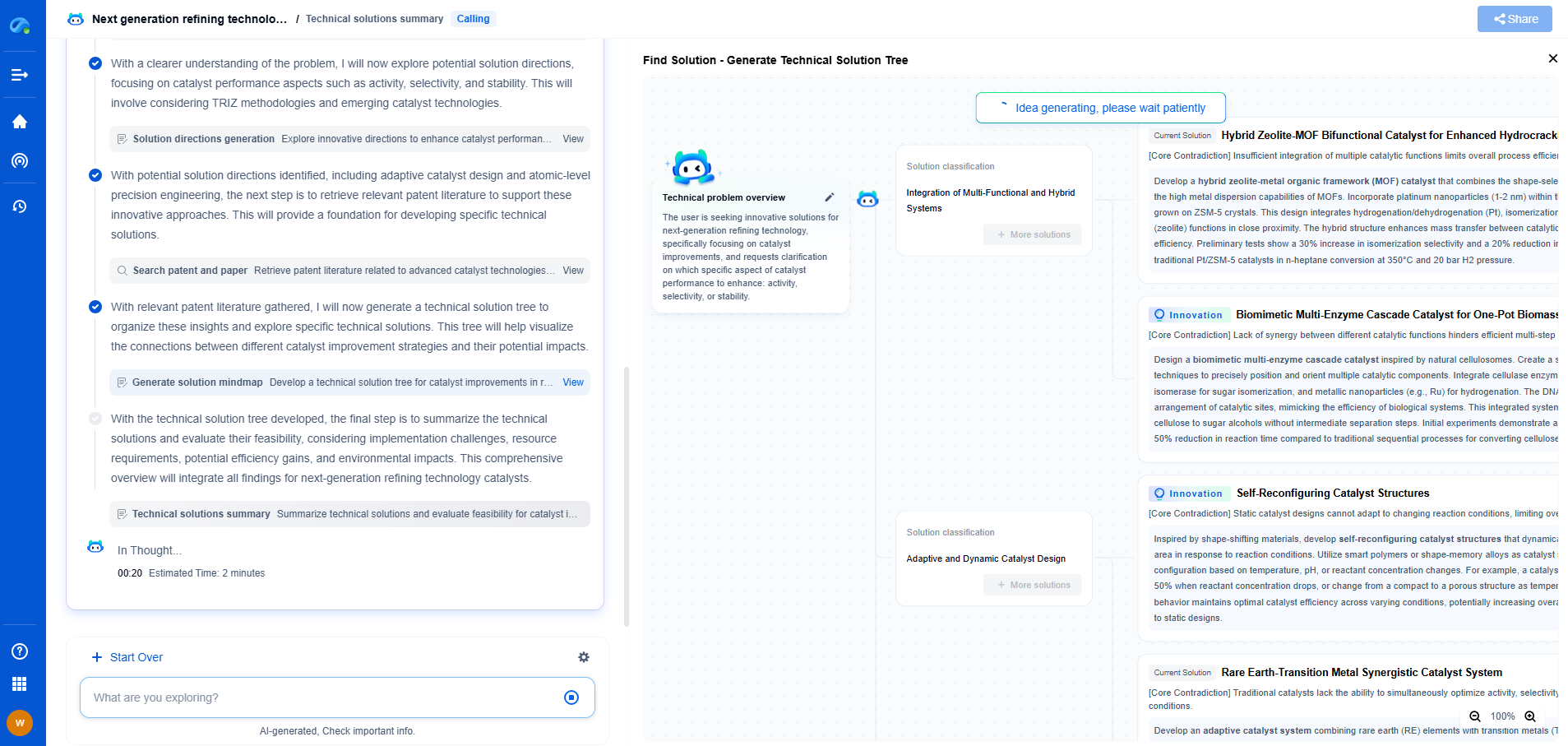

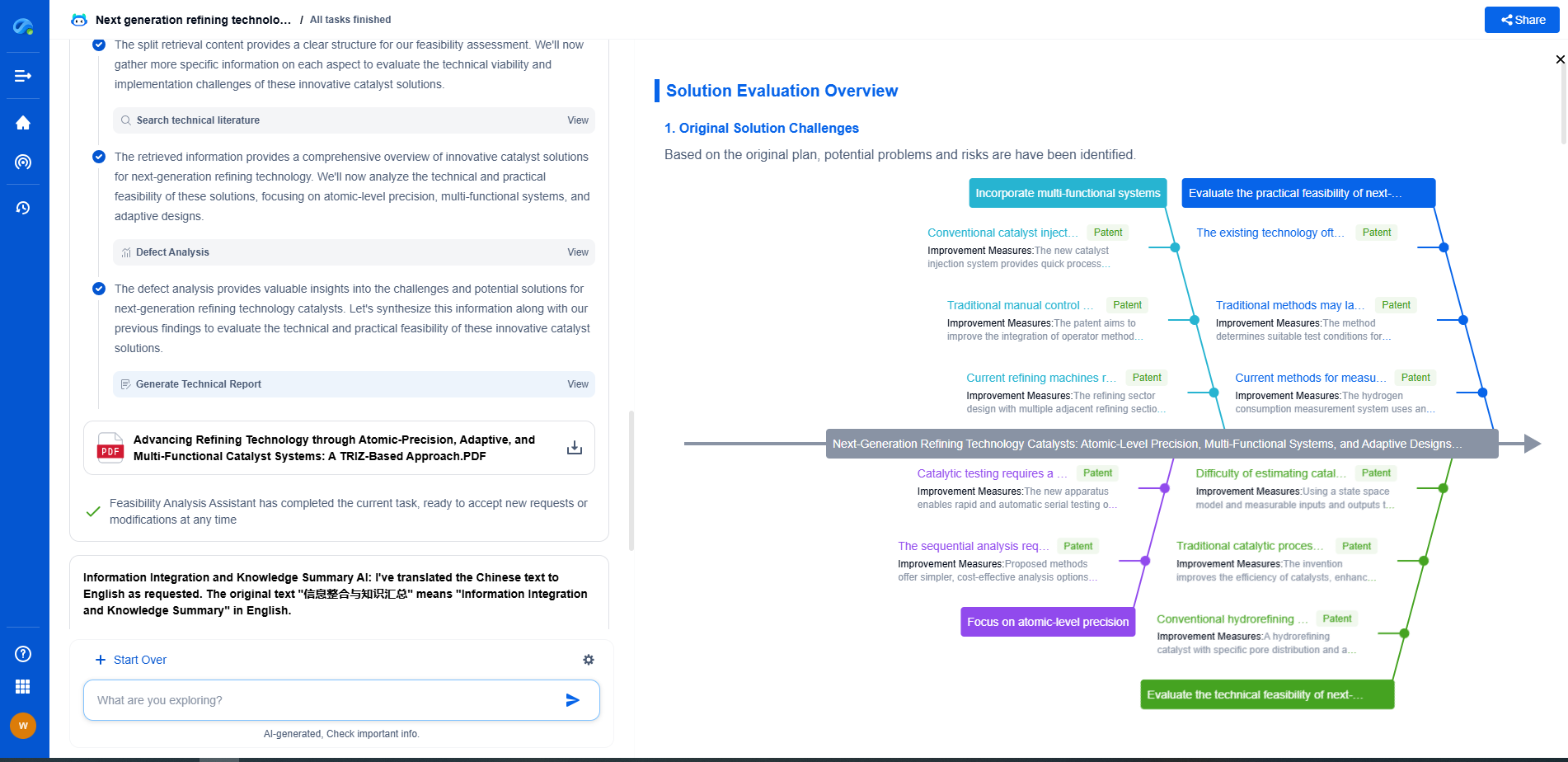

Designing, analyzing, and optimizing control systems involves complex decision-making, from selecting the right sensor configurations to ensuring robust fault tolerance and interoperability. If you’re spending countless hours digging through documentation, standards, patents, or simulation results — it's time for a smarter way to work.

Patsnap Eureka is your intelligent AI Agent, purpose-built for R&D and IP professionals in high-tech industries. Whether you're developing next-gen motion controllers, debugging signal integrity issues, or navigating complex regulatory and patent landscapes in industrial automation, Eureka helps you cut through technical noise and surface the insights that matter—faster.

👉 Experience Patsnap Eureka today — Power up your Control Systems innovation with AI intelligence built for engineers and IP minds.
