How to Use Sensor Fusion for Autonomous Robotics Control

JUL 2, 2025

Sensor fusion underpins the perception and decision-making capabilities of autonomous robots. By combining data from multiple sensors, a robot gains a more complete picture of its environment than any single sensor can provide, and its estimates become more accurate and reliable. This post covers the principles of sensor fusion and how they apply to autonomous robotics control.

**The Role of Sensors in Autonomous Robotics**

Sensors are the primary means by which robots perceive their surroundings. They provide the information a robot needs to navigate and interact with the world. Common sensors used in autonomous robotics include cameras, LiDAR, radar, GPS, and inertial measurement units (IMUs). Each has strengths and weaknesses: cameras capture rich visual detail but degrade in poor lighting, LiDAR measures range directly but carries no color or texture information, GPS gives absolute position but drops out indoors or under cover, and IMUs respond quickly but drift over time. The key challenge is combining their data into a coherent and accurate representation of the environment.

**Understanding Sensor Fusion**

At its core, sensor fusion is the process of merging data from multiple sensors to produce a more accurate and reliable estimate of the environment. The primary goals of sensor fusion are to enhance the quality of information, reduce uncertainty, and improve the robustness of the system. There are several levels of sensor fusion, ranging from low-level data fusion, where raw data is combined, to high-level fusion, where interpretations and decisions are integrated.
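
To make "reduce uncertainty" concrete, consider a textbook case (an illustrative assumption, not a model of any particular robot): two independent, unbiased measurements $z_1$ and $z_2$ of the same quantity, with noise variances $\sigma_1^2$ and $\sigma_2^2$. The minimum-variance linear combination weights each measurement by the inverse of its variance:

```latex
\hat{x} = \frac{\sigma_2^2\, z_1 + \sigma_1^2\, z_2}{\sigma_1^2 + \sigma_2^2},
\qquad
\sigma_{\hat{x}}^2 = \frac{\sigma_1^2\, \sigma_2^2}{\sigma_1^2 + \sigma_2^2}
\le \min\left(\sigma_1^2, \sigma_2^2\right)
```

For example, with $\sigma_1^2 = 4$ and $\sigma_2^2 = 1$, the fused variance is $4/5 = 0.8$, which is lower than that of the better sensor alone.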

**Types of Sensor Fusion Techniques**

1. **Complementary Fusion**: This technique involves using sensors that provide different types of information. For example, a camera can provide visual data while an IMU offers motion-related data. By combining these complementary sources, a robot can achieve more accurate motion estimation.

2. **Redundant Fusion**: Redundant fusion uses multiple sensors of the same type to ensure that if one sensor fails, the others can take over. This redundancy increases the reliability of the system, making it more robust against sensor failures.

3. **Cooperative Fusion**: In cooperative fusion, sensors are combined so that each corrects or enhances the other's output, yielding information that neither provides alone. For example, LiDAR and camera data can be fused to improve depth perception in challenging lighting conditions.
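
As one illustration of the techniques above, here is a minimal sketch of redundant fusion in Python. It assumes several same-type sensors measuring the same quantity with known noise variances. The function name `fuse_redundant`, the median-based rejection rule, and the `max_deviation` threshold are hypothetical choices made for this example, not a standard API.

```python
from statistics import median


def fuse_redundant(readings, variances, max_deviation):
    """Fuse same-type sensor readings into one estimate.

    Readings farther than max_deviation from the median are treated as
    faulty and ignored. The rest are combined with inverse-variance
    weights, so less noisy sensors count for more.
    Returns (fused_value, fused_variance, number_of_readings_used).
    """
    if not readings or len(readings) != len(variances):
        raise ValueError("need one variance per reading, and at least one reading")

    mid = median(readings)
    kept = [(z, v) for z, v in zip(readings, variances)
            if abs(z - mid) <= max_deviation]
    if not kept:
        raise ValueError("no readings agree with the median; cannot fuse")

    weights = [1.0 / v for _, v in kept]
    total_weight = sum(weights)
    fused = sum(w * z for w, (z, _) in zip(weights, kept)) / total_weight
    return fused, 1.0 / total_weight, len(kept)


# Three hypothetical range sensors (metres); the third one has failed.
value, variance, used = fuse_redundant(
    readings=[2.00, 2.10, 9.50],
    variances=[0.04, 0.04, 0.04],
    max_deviation=0.5,
)
print(f"fused range: {value:.2f} m from {used} sensors")
```

A median is used for the sanity check because a single wildly wrong sensor cannot pull it far, whereas it would shift a plain average.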

**Implementing Sensor Fusion in Autonomous Robotics**

1. **Pre-processing and Calibration**: Before fusing sensor data, calibrate the sensors so that they produce accurate and consistent measurements. Calibration involves aligning the coordinate systems of the different sensors, so that all measurements are expressed in a common frame, and correcting for biases and other systematic errors.

2. **Data Alignment and Synchronization**: Sensor data must be time-synchronized and spatially aligned to ensure that different measurements correspond to the same point in time and space. This step is crucial for accurate fusion results.

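3. **Fusion Algorithms**: Several algorithms can be used for sensor fusion, including Kalman filters, particle filters, and deep learning-based methods. Kalman filters are efficient for linear systems with Gaussian noise (extended and unscented variants handle nonlinear models), particle filters can represent non-Gaussian distributions at a higher computational cost, and learned methods depend on training data. The choice of algorithm depends on factors like computational resources, required accuracy, and real-time constraints.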

4. **Testing and Validation**: Once the fusion system is implemented, rigorous testing is necessary to validate its performance. Simulation environments and real-world tests are used to assess the accuracy and reliability of the fusion process under varying conditions.
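
Steps 2 to 4 above can be sketched end to end on simulated data. The example below is a minimal, assumption-heavy illustration: a robot moves along one axis, a velocity sensor and a position sensor run on different clocks, linear interpolation aligns them in time, a scalar Kalman filter fuses them, and RMSE against the simulated ground truth serves as the validation metric. All names (`interpolate`, `Kalman1D`, `simulate`) and all noise levels are hypothetical, and calibration (step 1) is assumed to be done already.

```python
import math
import random


def interpolate(timestamps, values, t):
    """Linearly interpolate a sensor stream at time t, clamping at both ends."""
    if t <= timestamps[0]:
        return values[0]
    if t >= timestamps[-1]:
        return values[-1]
    for i in range(1, len(timestamps)):
        if t <= timestamps[i]:
            t0, t1 = timestamps[i - 1], timestamps[i]
            frac = (t - t0) / (t1 - t0)
            return values[i - 1] + frac * (values[i] - values[i - 1])


class Kalman1D:
    """Scalar Kalman filter tracking position along one axis."""

    def __init__(self, x0, p0, process_var):
        self.x = x0           # position estimate
        self.p = p0           # variance of the estimate
        self.q = process_var  # process noise added per second of prediction

    def predict(self, velocity, dt):
        """Propagate the estimate using a measured velocity."""
        self.x += velocity * dt
        self.p += self.q * dt

    def update(self, z, meas_var):
        """Correct the estimate with a position measurement z."""
        gain = self.p / (self.p + meas_var)
        self.x += gain * (z - self.x)
        self.p *= 1.0 - gain


def rmse(estimates, truth):
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimates, truth)) / len(truth))


def simulate(seed=0, steps=100, dt=0.1):
    """Return (raw_position_rmse, fused_position_rmse) for one simulated run."""
    rng = random.Random(seed)

    def true_vel(t):
        return 1.0 + 0.5 * math.sin(t)

    def true_pos(t):  # integral of true_vel with true_pos(0) == 0
        return t + 0.5 * (1.0 - math.cos(t))

    # Velocity sensor: twice the position rate, with a 13 ms clock offset.
    vel_t = [0.013 + (dt / 2) * j for j in range(2 * steps + 1)]
    vel_z = [true_vel(t) + rng.gauss(0.0, 0.1) for t in vel_t]

    kf = Kalman1D(x0=0.0, p0=1.0, process_var=0.01)
    truth, raw, fused = [], [], []
    for k in range(1, steps + 1):
        t = k * dt
        z_pos = true_pos(t) + rng.gauss(0.0, 0.5)  # noisy position fix
        # Time alignment: velocity at the midpoint of the prediction interval.
        v = interpolate(vel_t, vel_z, t - dt / 2)
        kf.predict(v, dt)
        kf.update(z_pos, meas_var=0.25)
        truth.append(true_pos(t))
        raw.append(z_pos)
        fused.append(kf.x)
    return rmse(raw, truth), rmse(fused, truth)


raw_err, fused_err = simulate()
print(f"raw position RMSE:   {raw_err:.3f} m")
print(f"fused position RMSE: {fused_err:.3f} m")
```

In a real system the ground truth would come from a simulator or a reference measurement system rather than a closed-form function, and the noise variances would be estimated from sensor data rather than chosen by hand.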

**Challenges and Considerations in Sensor Fusion**

Sensor fusion poses several challenges. Noise and errors in sensor data can corrupt the fused estimate, and computationally expensive algorithms can miss real-time deadlines. A fusion system must also adapt to diverse operating conditions, which requires careful design. Addressing these challenges is an ongoing area of research and development aimed at improving algorithms and optimizing system performance.
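
One common way to limit the effect of erroneous measurements is innovation gating: before a measurement is fused, it is compared with the filter's prediction and rejected if it disagrees by more than the combined uncertainty allows. The sketch below assumes a scalar state. The function name `passes_gate` and the 3-sigma default are illustrative choices, not something this article prescribes.

```python
def passes_gate(z, predicted, pred_var, meas_var, n_sigma=3.0):
    """Return True if measurement z is plausible given the prediction.

    The innovation (z - predicted) has variance pred_var + meas_var when
    both error sources are independent; measurements whose innovation
    exceeds n_sigma standard deviations are flagged as outliers.
    """
    innovation = z - predicted
    innovation_var = pred_var + meas_var
    return innovation * innovation <= n_sigma * n_sigma * innovation_var


# Hypothetical values: the filter predicts 10.0 m with variance 0.04.
print(passes_gate(10.2, predicted=10.0, pred_var=0.04, meas_var=0.05))
print(passes_gate(14.0, predicted=10.0, pred_var=0.04, meas_var=0.05))
```

The check costs a few arithmetic operations per measurement, so it adds little to the real-time load.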

**Future Prospects of Sensor Fusion in Robotics**

As the field of robotics continues to evolve, sensor fusion will play an increasingly vital role in advancing autonomous technologies. Emerging trends include the integration of machine learning techniques to enhance fusion accuracy and the development of more sophisticated sensors that provide richer data. The future of sensor fusion promises more intelligent and capable autonomous systems, pushing the boundaries of what robots can achieve in various domains.

**Conclusion**

Sensor fusion is an indispensable aspect of autonomous robotics control, providing a robust framework for integrating diverse sensory information. By leveraging the strengths of multiple sensors, robots can achieve a heightened level of awareness and functionality, paving the way for more advanced applications. As technology progresses, sensor fusion will remain a cornerstone in the quest for fully autonomous robotic solutions.

Ready to Reinvent How You Work on Control Systems?

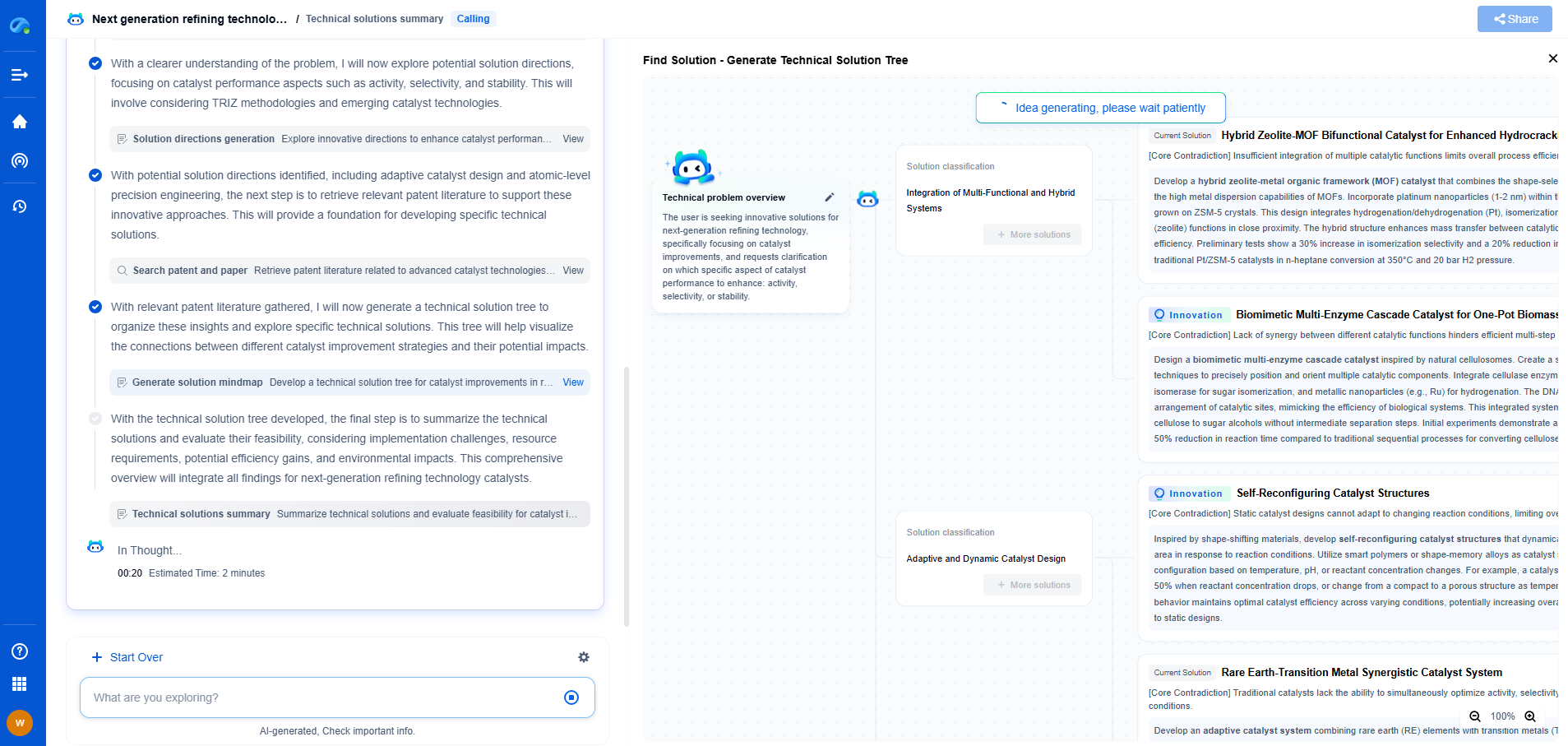

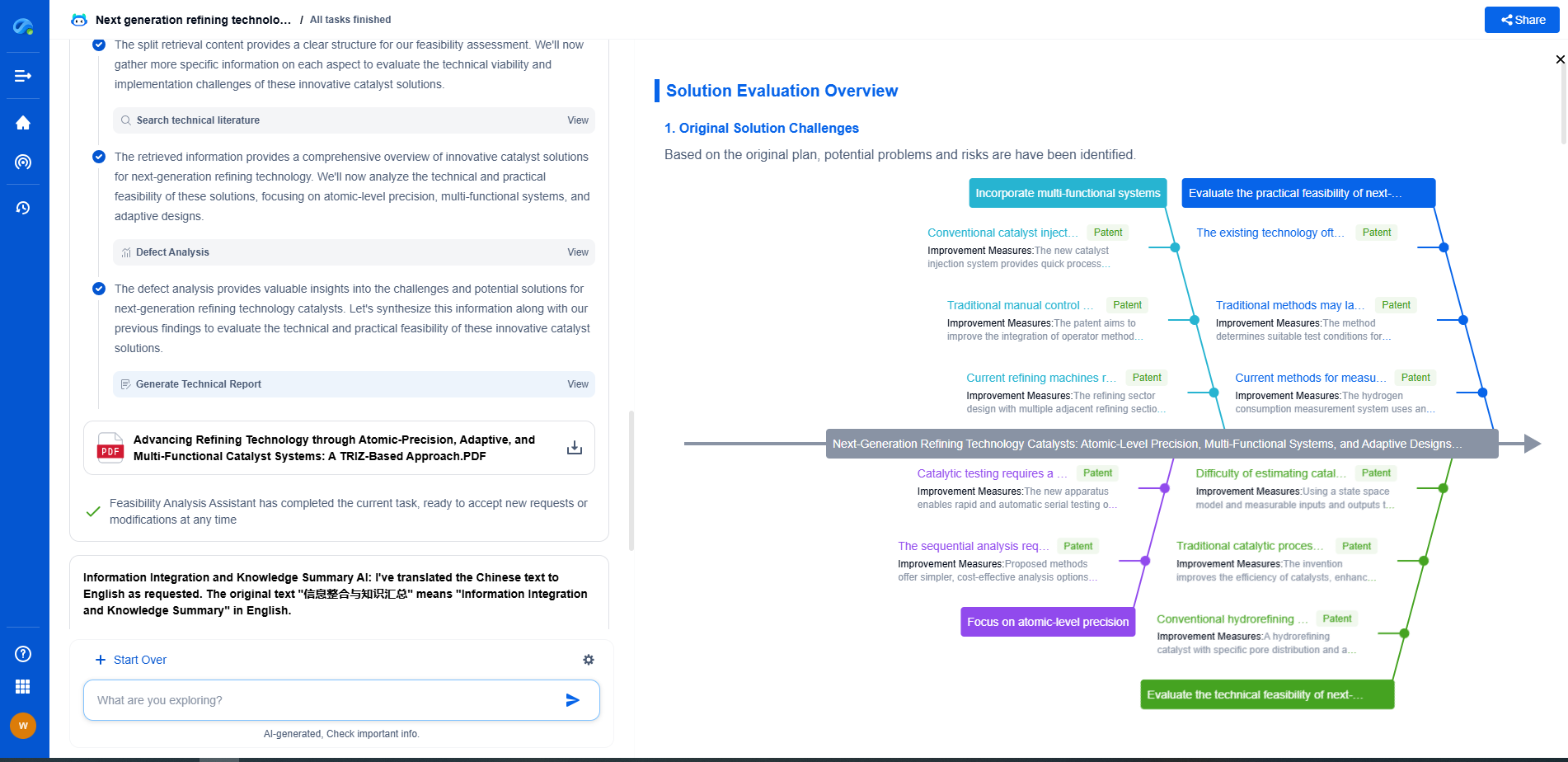

Designing, analyzing, and optimizing control systems involves complex decision-making, from selecting the right sensor configurations to ensuring robust fault tolerance and interoperability. If you’re spending countless hours digging through documentation, standards, patents, or simulation results — it's time for a smarter way to work.

Patsnap Eureka is your intelligent AI Agent, purpose-built for R&D and IP professionals in high-tech industries. Whether you're developing next-gen motion controllers, debugging signal integrity issues, or navigating complex regulatory and patent landscapes in industrial automation, Eureka helps you cut through technical noise and surface the insights that matter—faster.

👉 Experience Patsnap Eureka today — Power up your Control Systems innovation with AI intelligence built for engineers and IP minds.

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.