Image Sensor Roadmap: From CMOS to Event-Based Vision Chips

JUL 8, 2025

In the past few decades, image sensors have undergone remarkable evolution, transforming from rudimentary devices to sophisticated systems that are integral to various modern technologies. The journey from Charge-Coupled Devices (CCDs) to Complementary Metal-Oxide-Semiconductor (CMOS) sensors marked a significant leap, but the innovation didn't stop there. Today, we're witnessing the dawn of event-based vision chips, which promise to revolutionize the field of visual perception yet again.

**The Rise of CMOS Sensors**

The transition to CMOS technology heralded a new era for image sensors. CCDs typically rely on separate off-chip electronics for readout and signal processing. CMOS sensors, by contrast, can integrate the pixel array with amplification, analog-to-digital conversion, and control circuitry on a single chip, which yields smaller, more power-efficient devices. This development opened the door to widespread applications, from consumer electronics such as smartphones to automotive cameras and medical imaging.

CMOS sensors offer several advantages, including faster readout and lower power consumption. Their manufacturing process is also compatible with standard semiconductor fabrication, which keeps costs down. Together, these benefits have made CMOS sensors the dominant technology for most imaging applications today.

**Limitations of Conventional Image Sensors**

Despite the success of CMOS technology, conventional image sensors face inherent limitations. They capture images frame by frame at fixed intervals, which can cause motion blur and latency. In dynamic scenes with rapid motion, this frame-based approach is also inefficient: changes that occur between frames go unrecorded, while static parts of the scene are captured again, redundantly, in every frame.
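The two costs of fixed-interval sampling can be made concrete with two elementary relations, shown here with illustrative numbers rather than figures from any particular sensor. A camera running at frame rate f samples the scene only every T = 1/f seconds, so a change can wait up to one full frame period before it is recorded at all. An object moving across the image at speed v during an exposure time t_exp smears over roughly v × t_exp pixels:

$$
T = \frac{1}{f} = \frac{1}{30\ \mathrm{Hz}} \approx 33.3\ \mathrm{ms},
\qquad
\Delta x_{\text{blur}} \approx v\, t_{\text{exp}} = 1000\ \mathrm{px/s} \times 10\ \mathrm{ms} = 10\ \mathrm{px}
$$

Raising the frame rate shortens T, but it also caps the exposure time (which cannot exceed the frame period) and so reduces the light collected per frame.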

Frame-based sensors also struggle with wide variations in lighting. In high-dynamic-range scenes, they often cannot capture both bright and dark regions in a single exposure, which necessitates multi-exposure capture and complex post-processing.
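Dynamic range is conventionally expressed in decibels as 20 · log10(I_max / I_min). The minimal sketch below checks whether a scene's brightest-to-darkest ratio fits within a sensor's single-exposure range. The function names and the numbers in the example are hypothetical and chosen only for illustration. They are not taken from any library or datasheet.

```python
import math


def dynamic_range_db(max_signal: float, min_signal: float) -> float:
    """Dynamic range in decibels: 20 * log10(max / min)."""
    if max_signal <= 0 or min_signal <= 0:
        raise ValueError("signals must be positive")
    return 20.0 * math.log10(max_signal / min_signal)


def fits_single_exposure(scene_contrast: float, sensor_dr_db: float) -> bool:
    """True if a scene's brightest/darkest ratio fits within the sensor's range."""
    return dynamic_range_db(scene_contrast, 1.0) <= sensor_dr_db


# Hypothetical scene with a 100,000:1 brightest-to-darkest ratio,
# compared against a hypothetical 60 dB single-exposure sensor.
scene_db = dynamic_range_db(100_000, 1)
print(f"scene: {scene_db:.0f} dB")
print("fits a 60 dB sensor:", fits_single_exposure(100_000, 60.0))
```

Here the scene spans 100 dB, so a 60 dB sensor must clip highlights, crush shadows, or fall back on merging several exposures.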

**Introduction to Event-Based Vision Chips**

To overcome these limitations, researchers and engineers have developed event-based vision chips, also known as neuromorphic sensors or dynamic vision sensors (DVS). Inspired by the human retina, these sensors operate on an entirely different principle. Instead of capturing entire frames at regular intervals, each pixel independently detects changes in brightness and reports them as they occur. The result is an asynchronous stream of events rather than a sequence of images.
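The per-pixel principle can be sketched with the idealized model commonly used to describe dynamic vision sensors. A pixel emits an event whenever its log intensity has changed by at least a contrast threshold since that pixel's last event, with polarity +1 for brighter and -1 for darker. The minimal sketch below converts a sequence of sampled intensity frames into such events. It assumes noise-free pixels and a hypothetical threshold of 0.2, and `frames_to_events` is an illustrative name. A real sensor does this continuously in pixel circuitry rather than from frames.

```python
import math
from typing import List, NamedTuple


class Event(NamedTuple):
    t: float       # timestamp in seconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 brighter, -1 darker


def frames_to_events(times: List[float],
                     frames: List[List[List[float]]],
                     threshold: float = 0.2,
                     eps: float = 1e-6) -> List[Event]:
    """Idealized DVS model: a pixel fires when its log intensity moves by at
    least `threshold` from the level stored at its last event. Noise,
    refractory periods and sub-frame timestamp interpolation are ignored."""
    height, width = len(frames[0]), len(frames[0][0])
    # Per-pixel reference log intensity, initialized from the first frame.
    ref = [[math.log(frames[0][y][x] + eps) for x in range(width)]
           for y in range(height)]
    events: List[Event] = []
    for t, frame in zip(times[1:], frames[1:]):
        for y in range(height):
            for x in range(width):
                log_i = math.log(frame[y][x] + eps)
                delta = log_i - ref[y][x]
                # A large change can cross the threshold several times,
                # producing one event per crossing.
                while abs(delta) >= threshold:
                    pol = 1 if delta > 0 else -1
                    events.append(Event(t, x, y, pol))
                    ref[y][x] += pol * threshold
                    delta = log_i - ref[y][x]
    return events


# A 1x2 image: pixel (0, 0) stays constant, pixel (1, 0) doubles in brightness.
events = frames_to_events([0.0, 0.001], [[[100.0, 100.0]], [[100.0, 200.0]]])
for ev in events:
    print(ev)  # three lines of Event(t=0.001, x=1, y=0, polarity=1)
```

Doubling the brightness is a log change of about 0.69, so with a threshold of 0.2 the pixel crosses it three times. The static pixel emits nothing. This is why event output depends on relative brightness change and on scene activity rather than on a fixed frame rate.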

This event-driven approach enables very high temporal resolution, typically on the order of microseconds, because each pixel responds to changes in the scene as soon as they happen. Consequently, event-based sensors can capture fast-moving objects largely without motion blur. Because their pixels typically respond to relative (logarithmic) changes in brightness, they also cope with a much wider range of lighting conditions than conventional frame-based sensors. These capabilities are particularly valuable in applications such as robotics, autonomous vehicles, and surveillance, where real-time response is crucial.

**Advantages of Event-Based Vision**

Event-based vision chips offer a host of advantages over traditional sensors. They transmit only the pixels that change rather than entire frames, so they can consume significantly less power and bandwidth, especially in scenes where little is moving. In highly textured, fast-changing scenes, event rates and power draw rise accordingly. This efficiency makes them attractive for battery-powered devices and other applications where power conservation is critical.
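The bandwidth side of this argument reduces to simple arithmetic. An uncompressed frame stream costs width × height × bits per pixel × frame rate regardless of content, while an event stream costs event rate × bits per event. The sketch below compares the two. All parameters are hypothetical, including the assumed 64 bits per encoded event. They are not taken from any sensor's datasheet.

```python
def frame_bitrate(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Raw bits per second of an uncompressed frame-based stream (content-independent)."""
    return width * height * bits_per_pixel * fps


def event_bitrate(events_per_second: float, bits_per_event: int) -> float:
    """Raw bits per second of an event stream; scales with scene activity."""
    return events_per_second * bits_per_event


# Hypothetical VGA sensor, 8 bits per pixel, 30 frames per second.
frame_bps = frame_bitrate(640, 480, 8, 30)  # 73,728,000 bit/s

# Quiet, moderate and very busy scenes, assuming 64 bits per encoded event.
for rate in (1e4, 1e6, 1e7):
    ev_bps = event_bitrate(rate, 64)
    print(f"{rate:>12,.0f} ev/s -> {ev_bps / frame_bps:.2f}x the frame stream")
```

The ratio, not any single number, is the point. The event stream is far cheaper while the scene is quiet, but a busy enough scene can exceed the frame stream's data rate.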

Moreover, the high temporal resolution of event-based sensors allows for more accurate motion detection and tracking. In robotics and autonomous systems, this translates into more reliable navigation and interaction with dynamic environments. Their low latency also lets a system react quickly to sudden changes, making these sensors well suited to high-speed applications.
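The following hypothetical illustration shows how per-event timing benefits tracking. The estimator updates an object's position after every event instead of once per frame. It is a deliberately simple sliding-window centroid. It assumes a single moving object and time-sorted events given as (t, x, y, polarity) tuples, and `track_centroid` is an illustrative name. Practical event-based trackers are considerably more robust.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple

Event = Tuple[float, int, int, int]  # (t in seconds, x, y, polarity)


def track_centroid(events: Iterable[Event],
                   window: float = 0.005) -> Iterator[Tuple[float, float, float]]:
    """Yield (t, cx, cy) after every event: the centroid of all events that
    arrived within the last `window` seconds. Assumes time-sorted events
    generated by a single object."""
    recent: Deque[Event] = deque()
    sum_x = sum_y = 0.0
    for ev in events:
        t, x, y, _ = ev
        recent.append(ev)
        sum_x += x
        sum_y += y
        # Evict events older than the window; the newest event always stays.
        while recent[0][0] < t - window:
            _, old_x, old_y, _ = recent.popleft()
            sum_x -= old_x
            sum_y -= old_y
        n = len(recent)
        yield t, sum_x / n, sum_y / n


# Hypothetical object moving one pixel to the right every millisecond along row 20.
synthetic = [(i * 0.001, 10 + i, 20, 1) for i in range(20)]
positions = list(track_centroid(synthetic))
print(positions[-1])
```

Each event refreshes the estimate, so position updates arrive at the event rate (here every millisecond) rather than at a fixed frame rate.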

**Challenges and Future Prospects**

While event-based vision chips offer compelling advantages, they also present new challenges. The data generated by these sensors is fundamentally different from that of conventional frame-based sensors, requiring novel algorithms and processing techniques for effective interpretation. Additionally, integrating event-based sensors into existing systems and workflows necessitates a shift in how image data is processed and analyzed.
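One common bridge between the two data formats is to accumulate events over a short time window into an image that conventional frame-based algorithms can consume. The sketch below illustrates the idea and is not a recommended pipeline. The function name and the choice to sum signed polarities per pixel are assumptions of this sketch. Other representations, such as separate positive and negative channels, time surfaces, or voxel grids, make different trade-offs.

```python
from typing import List, Sequence, Tuple

Event = Tuple[float, int, int, int]  # (t in seconds, x, y, polarity +1/-1)


def accumulate_events(events: Sequence[Event], width: int, height: int,
                      t_start: float, t_end: float) -> List[List[int]]:
    """Sum event polarities per pixel over [t_start, t_end) into a 2D image
    indexed as image[y][x], so frame-based code can process the result."""
    image = [[0] * width for _ in range(height)]
    for t, x, y, polarity in events:
        if t_start <= t < t_end:
            image[y][x] += polarity
    return image


events = [
    (0.0001, 0, 0, 1),
    (0.0002, 0, 0, 1),
    (0.0003, 1, 1, -1),
    (0.0150, 1, 0, 1),  # outside the 10 ms window, so it is ignored
]
print(accumulate_events(events, 2, 2, 0.0, 0.010))  # [[2, 0], [0, -1]]
```

Accumulation makes the data compatible with existing tools, but it discards the fine timing inside each window. That timing is exactly what distinguishes event data, which is why native event-based algorithms remain an active area of work.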

Despite these challenges, the potential applications of event-based vision are vast and varied. From enhancing machine vision in industrial settings to improving the safety and efficiency of autonomous vehicles, the impact of this technology could be transformative. As research continues and the technology matures, we can anticipate even more innovative uses for event-based vision sensors.

**Conclusion**

The roadmap of image sensors, from CMOS to event-based vision chips, highlights a trajectory of innovation driven by the need for faster, more efficient, and more accurate imaging solutions. Each advancement builds upon the last, paving the way for new possibilities in how machines perceive and interact with the world. As we move forward, event-based vision technology promises to unlock new frontiers in imaging, continuing to push the boundaries of what's possible.

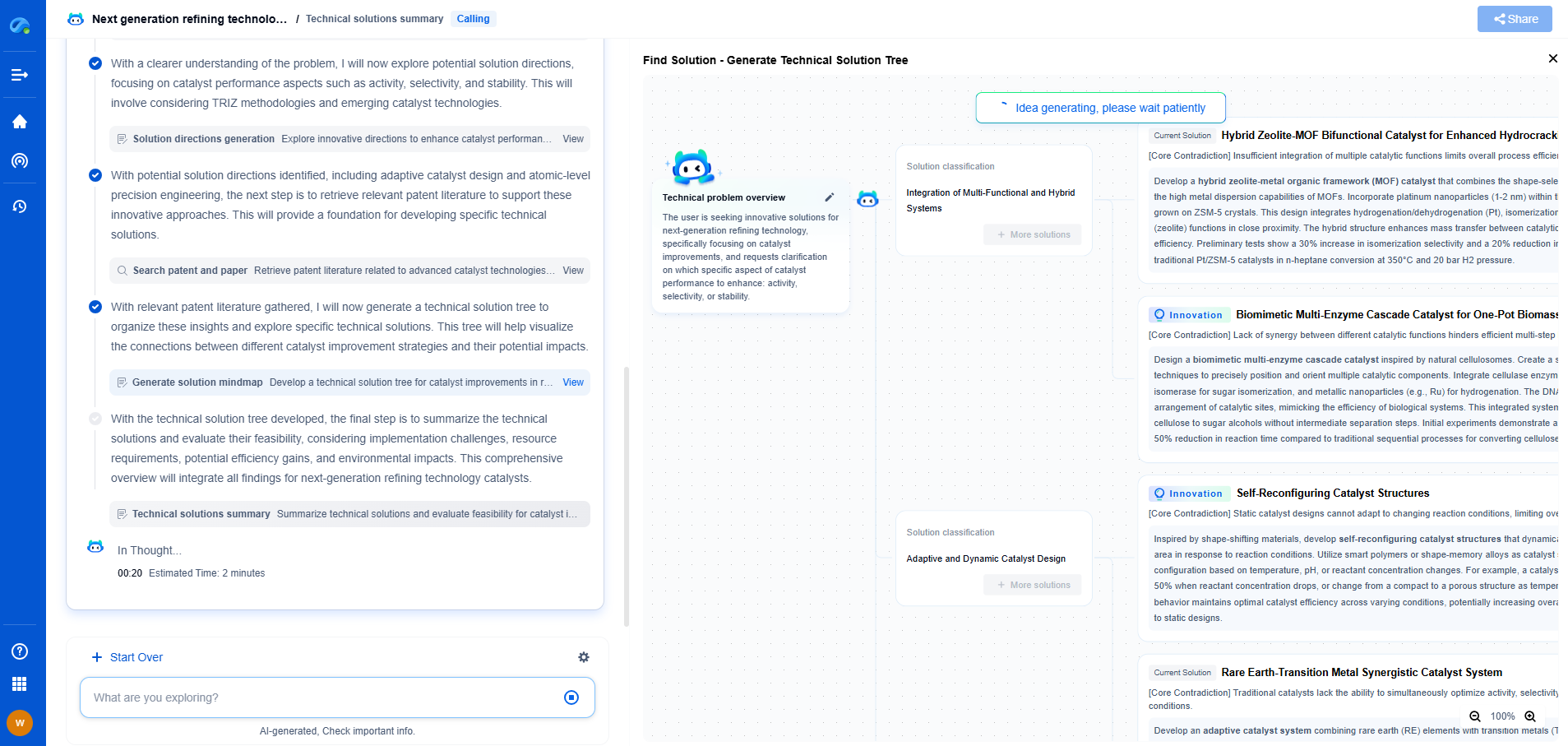

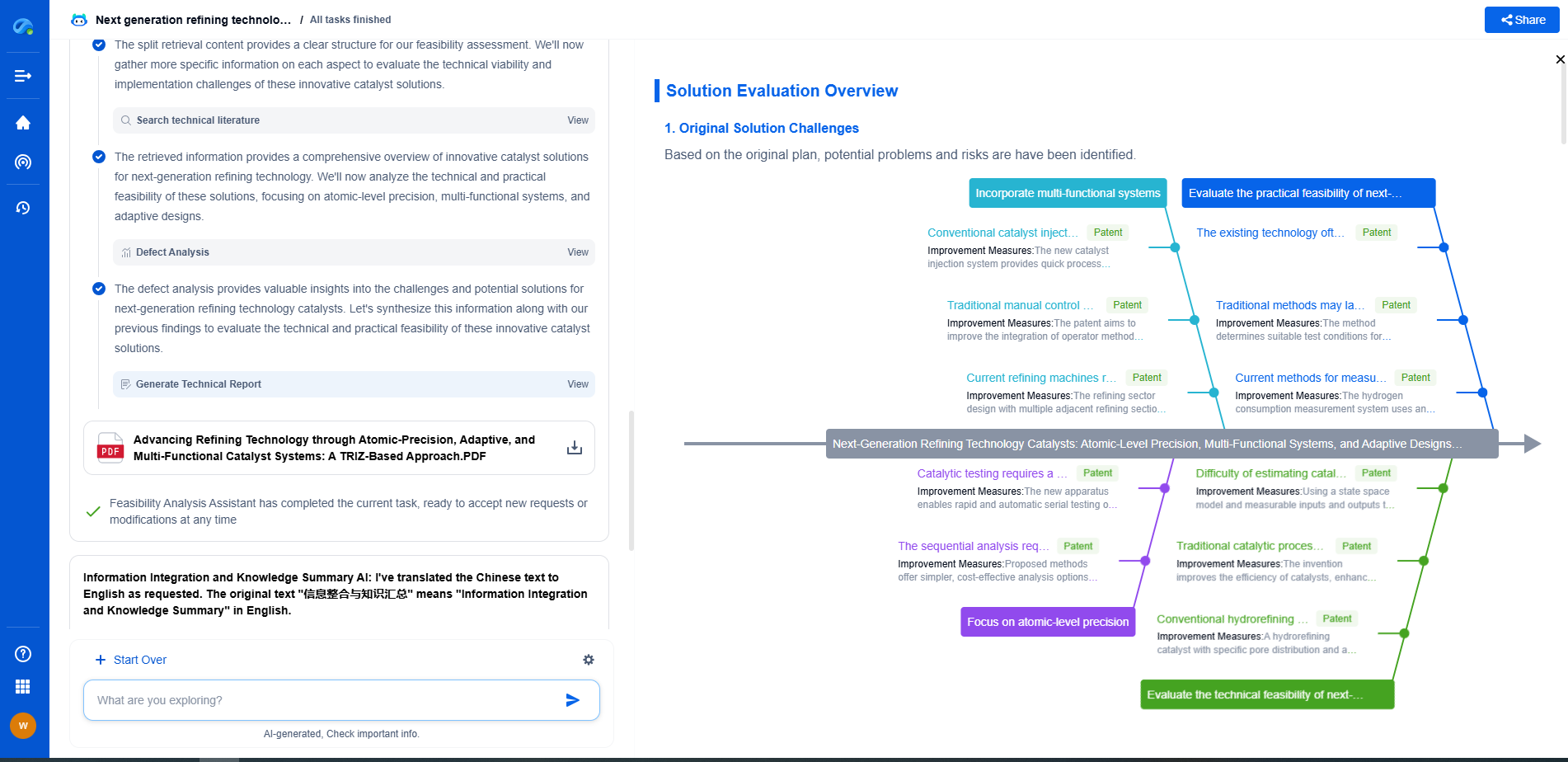

© 2025 PatSnap. All rights reserved.