Latency in Edge AI Control Systems: How to Optimize Inference Speed

JUL 2, 2025

Edge AI control systems are revolutionizing various industries by enabling real-time decision-making at the source of data generation. Unlike traditional cloud-based models, edge AI processes data locally, which is crucial for applications requiring immediate responses, such as autonomous vehicles, robotics, and industrial automation. However, latency remains a significant challenge in these systems, potentially hindering performance and reliability.

Understanding Latency in Edge AI

Latency refers to the delay between data input and the corresponding AI-driven action or output. In edge AI control systems, latency can arise from several sources, including data processing, model inference, and communication delays. High latency slows decision-making, degrades system performance, and, in critical applications, can even compromise safety.
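To make this concrete, end-to-end latency can be treated as a budget: the per-stage delays of a sequential pipeline add up, and the total must stay below the control loop's period. The sketch below assumes a hypothetical 30 Hz loop and illustrative stage timings. The stage names and numbers are placeholders, not measurements.

```python
from typing import Dict


def latency_budget(stage_ms: Dict[str, float], loop_hz: float) -> Dict[str, float]:
    """Sum per-stage latencies and compare the total with one control-loop period.

    Stage names and values passed in are illustrative placeholders, not measurements.
    """
    deadline_ms = 1000.0 / loop_hz       # one control period in milliseconds
    total_ms = sum(stage_ms.values())    # stages are assumed to run one after another
    return {
        "deadline_ms": deadline_ms,
        "total_ms": total_ms,
        "slack_ms": deadline_ms - total_ms,  # negative slack means the deadline is missed
    }


# Hypothetical stage timings for a 30 Hz sense-infer-act loop.
stages = {"capture": 5.0, "preprocess": 3.0, "inference": 12.0, "actuate": 2.0}
report = latency_budget(stages, loop_hz=30.0)
print(f"total={report['total_ms']:.1f} ms, slack={report['slack_ms']:.1f} ms")
```

Breaking the total down by stage shows where optimization effort pays off. In this made-up example, inference dominates.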

Factors Contributing to Latency

1. Model Complexity:

The complexity of AI models directly influences inference speed. Deep learning models with numerous layers and parameters require more computational resources, leading to increased latency during inference.
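One rough way to quantify model complexity is to count multiply-accumulate (MAC) operations per inference. The sketch below covers only plain fully connected networks, and the layer sizes are hypothetical. Convolutional or attention layers would need their own counting formulas.

```python
from typing import List


def dense_macs(layer_sizes: List[int]) -> int:
    """Count multiply-accumulate operations for one forward pass of a
    fully connected network (biases and activations are ignored)."""
    macs = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        macs += n_in * n_out  # each of the n_out outputs needs n_in multiply-adds
    return macs


small = dense_macs([784, 256, 10])
large = dense_macs([784, 1024, 1024, 10])
print(small, large, f"{large / small:.1f}x more work")
```

Widening and deepening the hypothetical network increases the arithmetic per inference roughly ninefold. That extra work shows up directly as latency on a fixed device.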

2. Hardware Limitations:

Edge devices often have far less compute capacity, memory, and power budget than cloud servers. These constraints limit how quickly AI models can execute, which directly increases inference latency.
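A back-of-the-envelope estimate shows how hardware limits translate into latency. If inference is compute-bound, its duration is roughly the number of floating-point operations divided by the throughput the device actually sustains. The sketch assumes a hypothetical device and a utilization factor, because real models rarely reach peak throughput. It does not model memory-bandwidth limits, which often dominate on edge hardware.

```python
def compute_bound_latency_ms(flops: float, peak_flops_per_s: float, utilization: float) -> float:
    """Estimate inference time assuming the workload is limited only by arithmetic throughput.

    `utilization` is an assumed fraction of peak throughput actually achieved.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    sustained = peak_flops_per_s * utilization  # throughput actually achieved
    return flops / sustained * 1000.0           # seconds -> milliseconds


# Hypothetical: a 2 GFLOP model on a 1 TFLOP/s accelerator running at 50% utilization.
print(f"{compute_bound_latency_ms(2e9, 1e12, 0.5):.1f} ms")
```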

3. Data Transfer:

Transferring data between sensors, processors, and actuators can introduce delays, especially if the data size is large or if there are bandwidth constraints.
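The cost of moving data grows linearly with its size. A simple estimate is payload bits divided by link bandwidth, plus a fixed per-transfer overhead. The sketch below ignores protocol headers and retransmissions, and the overhead parameter is an assumption to be filled in per system.

```python
def transfer_time_ms(payload_bytes: int, bandwidth_bits_per_s: float, overhead_ms: float = 0.0) -> float:
    """Serialization time of a payload over a link, plus an assumed fixed per-transfer overhead."""
    return payload_bytes * 8 / bandwidth_bits_per_s * 1000.0 + overhead_ms


# An uncompressed 1920x1080 RGB frame (3 bytes per pixel) over a 1 Gbit/s link.
frame_bytes = 1920 * 1080 * 3
print(f"{transfer_time_ms(frame_bytes, 1e9):.1f} ms per frame")
```

Even on a fast link, a single raw full-HD frame takes tens of milliseconds to transfer. That can exceed the entire budget of a fast control loop.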

4. Network Congestion:

In systems where edge devices communicate over a network, congestion can lead to additional latency, affecting the real-time capabilities of the system.
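The classic M/M/1 queue illustrates why congestion is so damaging. In that model, the mean time a packet spends in the system is W = 1/(μ − λ) for service rate μ and arrival rate λ. Real networks are not M/M/1 queues, so treat this as a simplified model under that assumption. It does capture the key behavior: delay grows sharply as utilization approaches 100%.

```python
def mm1_mean_delay_ms(arrival_rate: float, service_rate: float) -> float:
    """Mean time in system (queueing + service) for an M/M/1 queue, in milliseconds.

    Rates are in packets per second; the queue is only stable if arrival_rate < service_rate.
    """
    if arrival_rate >= service_rate:
        return float("inf")  # unstable: the queue grows without bound
    return 1000.0 / (service_rate - arrival_rate)


# Hypothetical link that can forward 1000 packets per second.
for load in (0.5, 0.9, 0.99):
    print(f"utilization {load:.0%}: {mm1_mean_delay_ms(load * 1000, 1000):.1f} ms")
```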

Strategies to Optimize Inference Speed

1. Model Optimization Techniques:

a. Model Pruning:

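Removing redundant or low-importance parameters (individual weights, neurons, or entire channels) reduces model size and computation. Pruned models can often retain accuracy close to the original, usually after a short fine-tuning step. Faster inference, however, typically requires structured pruning or a runtime and hardware that can exploit sparsity.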
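Unstructured magnitude pruning is one common variant: it zeroes the weights with the smallest absolute values. The NumPy sketch below operates on a single weight array, and `magnitude_prune` is a hypothetical helper, not a library function. In practice, frameworks apply pruning per layer and follow it with fine-tuning.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Return a copy of `weights` with the smallest-magnitude `sparsity` fraction set to zero."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)          # number of weights to remove
    pruned = weights.flatten()                # flatten() always returns a copy
    order = np.argsort(np.abs(pruned))        # indices sorted from smallest magnitude
    pruned[order[:k]] = 0.0                   # zero exactly k weights, ties broken by sort order
    return pruned.reshape(weights.shape)


rng = np.random.default_rng(0)
layer = rng.normal(size=(64, 64))
pruned = magnitude_prune(layer, sparsity=0.75)
print(f"nonzero fraction: {np.count_nonzero(pruned) / pruned.size:.2f}")
```

The resulting zeros reduce latency only when the inference runtime skips them. On dense hardware, structured pruning that removes whole rows or channels is usually what yields a real speedup.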

b. Quantization:

Converting model parameters (and often activations) from 32-bit floating point to lower bit-width representations, such as 8-bit integers, reduces memory traffic and computational load. This can speed up inference, often with only a small loss of accuracy.
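The sketch below shows symmetric per-tensor int8 quantization, one of the simplest schemes: each value is approximated by an integer times a shared scale. It is a simplified illustration with hypothetical helper names. Production toolchains typically add calibration data, per-channel scales, and integer arithmetic kernels.

```python
import numpy as np


def quantize_int8(x: np.ndarray):
    """Symmetric per-tensor int8 quantization: x is approximated by q * scale."""
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0       # map the largest magnitude to 127
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize_int8(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover an approximate float32 tensor from int8 values and their scale."""
    return q.astype(np.float32) * scale


weights = np.random.default_rng(0).normal(size=1000).astype(np.float32)
q, scale = quantize_int8(weights)
error = np.max(np.abs(dequantize_int8(q, scale) - weights))
print(f"{weights.nbytes} bytes -> {q.nbytes} bytes, max abs error {error:.4f}")
```

Storage shrinks by a factor of four. The rounding error per value is bounded by half the scale, which is why accuracy loss is often small for well-behaved weight distributions.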

2. Edge-optimized Hardware:

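Investing in hardware designed specifically for AI inference, such as dedicated AI accelerators (for example, NPUs) or embedded GPUs, can substantially reduce inference time. These devices execute the matrix and tensor operations that dominate AI workloads far more efficiently than general-purpose CPUs.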
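When comparing hardware options, measure the latency distribution on the target device instead of relying on peak-throughput figures. Control loops care about worst-case delays, not averages. The framework-agnostic timing harness below is a minimal sketch, and the workload passed in is a placeholder for a real inference call.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(infer: Callable[[], object], warmup: int = 10, runs: int = 200) -> Dict[str, float]:
    """Time repeated calls to `infer` and report median and tail latency in milliseconds."""
    for _ in range(warmup):  # let caches, lazy initialization and clock scaling settle
        infer()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    return {
        "p50_ms": statistics.median(samples),
        # simple rank-based percentile on the sorted samples
        "p99_ms": samples[min(len(samples) - 1, int(0.99 * len(samples)))],
        "max_ms": samples[-1],
    }


# Placeholder workload; replace with a call into the model runtime on each candidate device.
result = benchmark(lambda: sum(i * i for i in range(10_000)))
print(result)
```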

3. Efficient Data Management:

a. Data Compression:

Compressing data before transmission reduces the number of bytes that must move between sensors, processors, and actuators. This lowers latency only if the time spent compressing and decompressing is less than the transfer time saved.
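The trade-off can be checked directly: compress, estimate whether the compressed payload would arrive sooner, and fall back to the raw bytes otherwise. This sketch uses Python's standard `zlib` module. The helper name, link speed, and payload are hypothetical, and receiver-side decompression time is deliberately left out of the estimate.

```python
import time
import zlib
from typing import Tuple


def compress_if_beneficial(payload: bytes, bandwidth_bits_per_s: float, level: int = 1) -> Tuple[bytes, bool]:
    """Compress `payload` with zlib and keep the result only if it is estimated to arrive sooner.

    Simplification: receiver-side decompression time is not included in the estimate.
    """
    start = time.perf_counter()
    compressed = zlib.compress(payload, level)   # low level favors speed over ratio
    compress_s = time.perf_counter() - start
    raw_s = len(payload) * 8 / bandwidth_bits_per_s
    compressed_s = compress_s + len(compressed) * 8 / bandwidth_bits_per_s
    if compressed_s < raw_s:
        return compressed, True
    return payload, False


# Highly redundant hypothetical sensor payload over a 1 Mbit/s link.
payload = bytes(100_000)
sent, was_compressed = compress_if_beneficial(payload, 1e6)
print(len(payload), len(sent), was_compressed)
```

Already-compressed or noisy data (for example, JPEG frames) usually will not shrink much. In that case the function sends the original bytes.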

b. Preprocessing:

Performing preprocessing locally, such as cropping to a region of interest, downsampling, or filtering out irrelevant readings, reduces the amount of data fed into the AI model and speeds up inference.
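Spatial downsampling is one simple preprocessing step: halving an image's width and height cuts the number of pixels the model must process by a factor of four. The 2x2 average-pooling sketch below assumes the model still performs acceptably at the lower resolution, which has to be validated for each application. `downsample_2x` is a hypothetical helper.

```python
import numpy as np


def downsample_2x(frame: np.ndarray) -> np.ndarray:
    """Average each 2x2 block of pixels; works for (H, W) or (H, W, C) arrays."""
    h = frame.shape[0] // 2 * 2  # drop a trailing odd row, if any
    w = frame.shape[1] // 2 * 2  # drop a trailing odd column, if any
    f = frame[:h, :w].astype(np.float32)
    return (f[0::2, 0::2] + f[1::2, 0::2] + f[0::2, 1::2] + f[1::2, 1::2]) / 4.0


frame = np.zeros((480, 640, 3), dtype=np.uint8)  # placeholder camera frame
small = downsample_2x(frame)
print(frame.shape, "->", small.shape)
```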

4. Network Optimization:

a. Bandwidth Management:

Prioritizing critical data packets and optimizing bandwidth usage can minimize latency due to network traffic.
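At the application level, prioritization can be as simple as a priority queue that always sends the most urgent packet first. The sketch below is a hypothetical scheduler built on Python's `heapq`. Real deployments may also rely on network-level mechanisms such as DSCP marking or Time-Sensitive Networking.

```python
import heapq
import itertools
from typing import List, Optional, Tuple


class PriorityScheduler:
    """Send queued packets in priority order (0 = most urgent), FIFO within a priority."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, bytes]] = []
        self._seq = itertools.count()  # tie-breaker that preserves submission order

    def submit(self, priority: int, packet: bytes) -> None:
        heapq.heappush(self._heap, (priority, next(self._seq), packet))

    def next_packet(self) -> Optional[bytes]:
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


sched = PriorityScheduler()
sched.submit(2, b"telemetry")
sched.submit(0, b"emergency-stop")
sched.submit(1, b"control-setpoint")
sched.submit(2, b"diagnostic-log")
order = [sched.next_packet() for _ in range(4)]
print(order)
```

The sequence counter keeps packets of equal priority in arrival order. It also prevents `heapq` from ever having to compare the payloads themselves.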

b. Edge-to-cloud Offloading:

For non-critical tasks, offloading computations to the cloud can alleviate the load on edge devices, enabling them to focus on real-time tasks.
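A placement rule along these lines can be written as a small policy function. In this hypothetical sketch, critical tasks always stay on the device. Non-critical tasks move to the cloud only when the edge device is busy and the cloud round trip still meets the task's deadline. The parameter names and the 0.8 busy threshold are assumptions for illustration.

```python
def place_task(critical: bool, edge_utilization: float, cloud_round_trip_s: float,
               task_deadline_s: float, busy_threshold: float = 0.8) -> str:
    """Decide where to run a task: 'edge' or 'cloud'.

    Critical tasks never leave the device; non-critical tasks are offloaded only when
    the edge device is busy and the cloud round trip still meets the task's deadline.
    """
    if critical:
        return "edge"
    if edge_utilization >= busy_threshold and cloud_round_trip_s <= task_deadline_s:
        return "cloud"
    return "edge"


print(place_task(True, 0.95, 0.2, 1.0))    # critical control task: stays local
print(place_task(False, 0.95, 0.2, 5.0))   # busy device, relaxed deadline: offload
print(place_task(False, 0.30, 0.2, 5.0))   # lightly loaded device: keep local
```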

Real-world Applications and Case Studies

Many industries have successfully implemented latency optimization strategies in their edge AI control systems. For instance, automotive manufacturers are using optimized models and edge hardware to enhance the responsiveness of autonomous vehicles. Similarly, smart factories are employing efficient data management and network strategies to ensure seamless operation of AI-driven machinery.

Conclusion

Reducing latency in edge AI control systems is imperative for maximizing their potential benefits. By understanding the sources of latency and employing targeted optimization strategies, developers and engineers can enhance the speed and efficiency of AI inference. As technology advances, continued innovation in model design, hardware capabilities, and data management will further propel the capabilities of edge AI, paving the way for smarter, faster, and more reliable control systems.

Ready to Reinvent How You Work on Control Systems?

Designing, analyzing, and optimizing control systems involves complex decision-making, from selecting the right sensor configurations to ensuring robust fault tolerance and interoperability. If you’re spending countless hours digging through documentation, standards, patents, or simulation results — it's time for a smarter way to work.

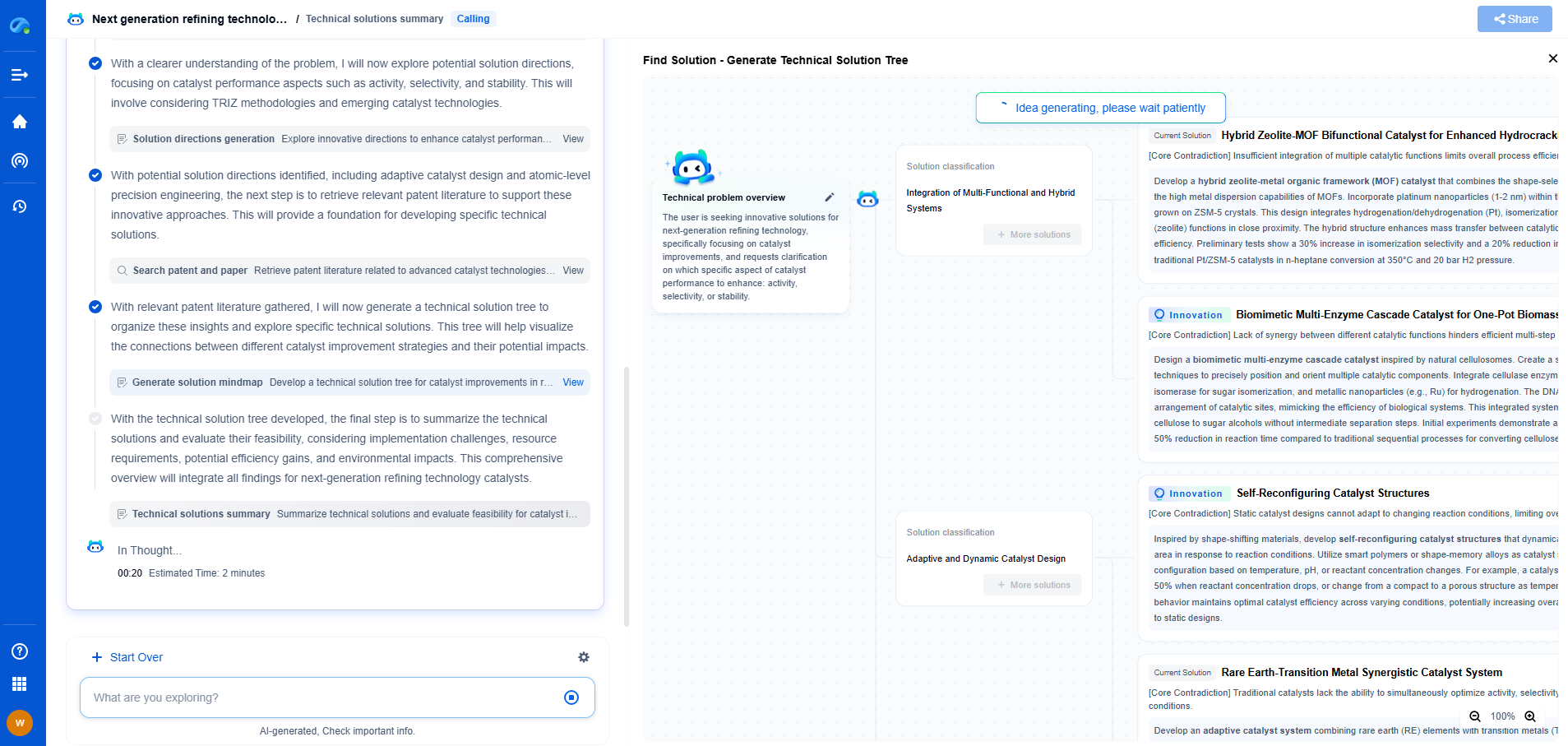

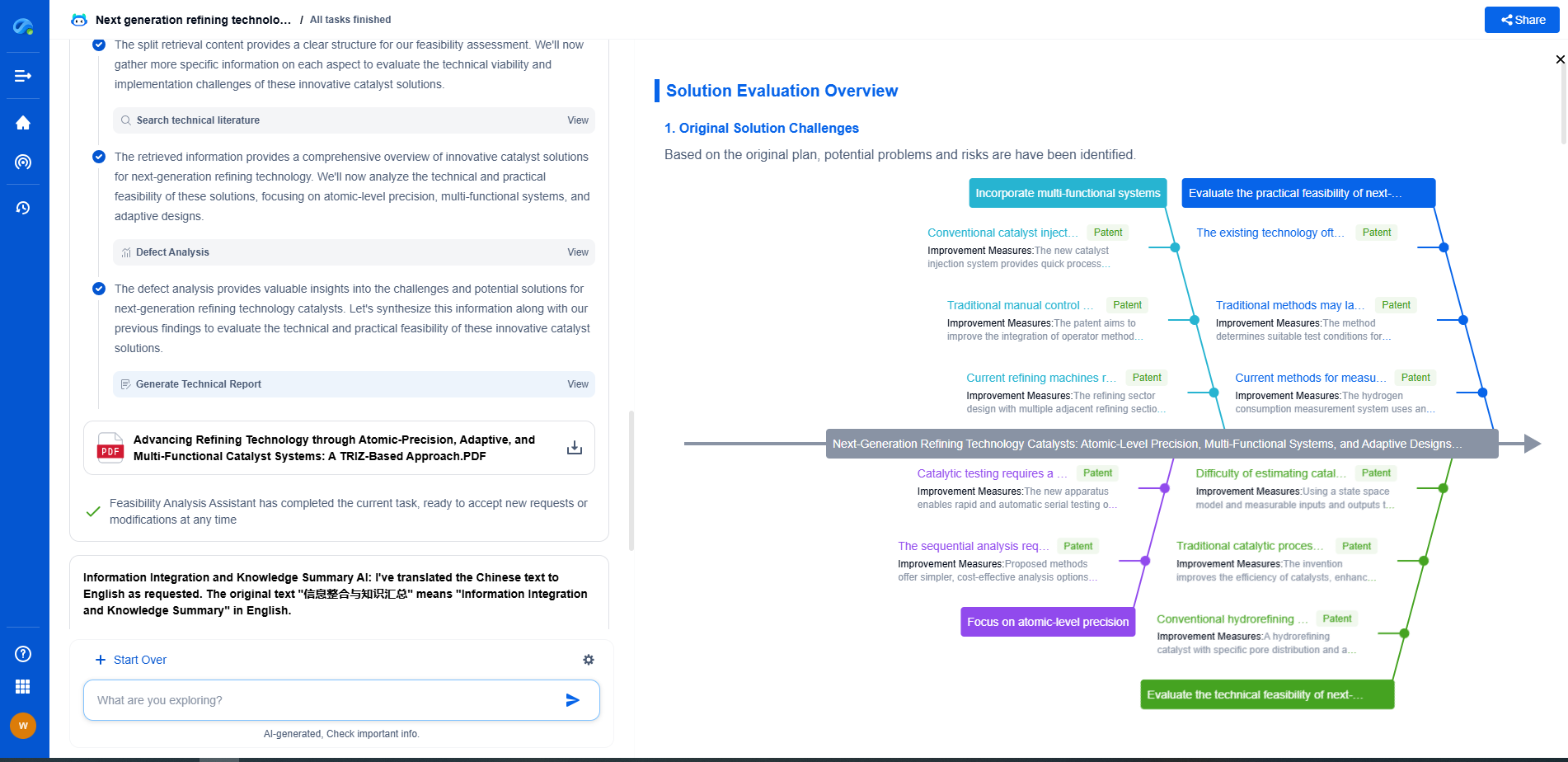

Patsnap Eureka is your intelligent AI Agent, purpose-built for R&D and IP professionals in high-tech industries. Whether you're developing next-gen motion controllers, debugging signal integrity issues, or navigating complex regulatory and patent landscapes in industrial automation, Eureka helps you cut through technical noise and surface the insights that matter—faster.

👉 Experience Patsnap Eureka today — Power up your Control Systems innovation with AI intelligence built for engineers and IP minds.

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com