Latency Requirements for Real-Time AI Grid Applications

JUN 26, 2025

Real-time AI grid applications are gaining traction in modern energy management. These applications use artificial intelligence to optimize how energy is distributed and consumed, and they are pivotal to running efficient, sustainable grids. Their effectiveness, however, depends heavily on one factor: latency. Understanding and meeting latency requirements is essential for the successful deployment of AI-powered grid solutions.

Understanding Latency in Grid Applications

Latency is the time delay between the initiation of an action and its effect. It is a critical consideration in real-time AI applications: in grid management, low latency enables timely decisions and actions such as balancing load, integrating renewable energy sources, and responding to power outages. High latency can lead to inefficient energy distribution and even system failures, so minimizing delays in data processing and decision-making is essential.

Key Latency Challenges

Several factors contribute to latency in AI grid applications, each presenting unique challenges. One primary challenge is data transmission delay, which occurs when data travels between various points within the grid network. This challenge is exacerbated by the size and complexity of modern energy grids, which often span vast geographical areas. Additionally, computational delay resulting from the processing of large datasets and complex algorithms can further increase latency. Lastly, storage delay, related to retrieving and writing data, can also impact the overall system performance.
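The three contributors named above can be added into a single end-to-end figure. The following is a minimal sketch, not a measurement tool: the class name, the 600 km distance, and the computation and storage timings are all hypothetical values chosen for illustration. The only physical constant is the approximate signal speed in optical fiber (about two thirds of the speed of light), which gives a lower bound on transmission delay.

```python
from dataclasses import dataclass

# Approximate signal speed in optical fiber, in kilometers per millisecond.
FIBER_KM_PER_MS = 200.0


@dataclass
class LatencyBreakdown:
    """Latency components for one measurement-to-decision path (hypothetical model)."""
    transmission_ms: float
    computation_ms: float
    storage_ms: float

    @property
    def total_ms(self) -> float:
        return self.transmission_ms + self.computation_ms + self.storage_ms

    def dominant(self) -> str:
        """Name of the largest contributor, i.e. the first place to look for savings."""
        parts = {
            "transmission": self.transmission_ms,
            "computation": self.computation_ms,
            "storage": self.storage_ms,
        }
        return max(parts, key=parts.get)


def propagation_delay_ms(distance_km: float) -> float:
    """Lower bound on one-way delay over fiber; ignores queuing and switching."""
    return distance_km / FIBER_KM_PER_MS


# Assumed example: a substation 600 km from the control center.
breakdown = LatencyBreakdown(
    transmission_ms=propagation_delay_ms(600.0),  # 3.0 ms
    computation_ms=12.0,  # assumed model inference time
    storage_ms=4.0,       # assumed read/write time
)
print(breakdown.total_ms, breakdown.dominant())
```

Breaking the total down this way makes it clear which delay dominates a given path before any optimization effort is spent.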

Latency Requirements for Real-Time Decision-Making

To be effective, AI grid applications must keep latency within a budget that still allows real-time decision-making. Depending on the application, that budget typically ranges from a few milliseconds to a few seconds. For instance, load balancing and fault detection require extremely low latency to prevent cascading failures and keep the grid stable. Predictive maintenance, by contrast, can tolerate higher latency because it supports longer-term planning.
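One way to make such requirements testable is to compare a tail percentile of measured latency against a per-application budget. The sketch below assumes illustrative budget values; the numbers in `BUDGET_MS` are hypothetical and are not taken from any standard or operator specification. It checks the tail rather than the average because a single slow response can matter for stability.

```python
import math

# Hypothetical latency budgets in milliseconds. Real values depend on the
# grid operator, the protection scheme, and the applicable standards.
BUDGET_MS = {
    "fault_detection": 10.0,
    "load_balancing": 500.0,
    "predictive_maintenance": 60_000.0,
}


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


def meets_budget(app: str, samples_ms: list[float], pct: float = 99.0) -> bool:
    """True if the chosen tail percentile of measured latency is within budget."""
    return percentile(samples_ms, pct) <= BUDGET_MS[app]


measured = [4.0, 5.0, 6.0, 5.5, 30.0]  # one slow outlier
print(meets_budget("fault_detection", measured))
print(meets_budget("load_balancing", measured))
```

With these assumed numbers, the single 30 ms outlier is enough to fail the fault-detection budget while remaining well inside the load-balancing budget.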

Optimizing Latency in AI Grid Applications

Several strategies can be employed to optimize latency in real-time AI grid applications. One effective approach is edge computing, which brings computational resources closer to the data source, thus reducing transmission delays. By processing data locally rather than relying solely on centralized cloud infrastructures, edge computing can significantly cut down latency. Additionally, the use of high-speed communication technologies, such as fiber optics and 5G, can enhance data transmission speeds and reduce delays.
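The trade-off between edge and cloud processing can be illustrated with a simple round-trip estimate. This is a rough sketch under stated assumptions: the site names, distances, and processing times are hypothetical, and the model counts only fiber propagation plus processing, ignoring queuing, routing, and protocol overhead.

```python
FIBER_KM_PER_MS = 200.0  # approximate signal speed in optical fiber


def round_trip_ms(distance_km: float, processing_ms: float) -> float:
    """Propagation out and back plus processing time at the site."""
    return 2.0 * distance_km / FIBER_KM_PER_MS + processing_ms


def choose_site(sites: dict[str, tuple[float, float]]) -> str:
    """Return the name of the site with the lowest estimated round trip."""
    return min(sites, key=lambda name: round_trip_ms(*sites[name]))


# Hypothetical sites: (distance in km, processing time in ms).
sites = {
    "edge_substation": (2.0, 8.0),    # close, but slower hardware
    "regional_cloud": (1500.0, 3.0),  # far, but faster hardware
}

for name, (distance_km, processing_ms) in sites.items():
    print(name, round_trip_ms(distance_km, processing_ms))
print(choose_site(sites))
```

In this assumed scenario the edge site wins despite slower hardware, because the 15 ms of propagation to the distant data center outweighs its faster processing.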

Adopting AI algorithms designed specifically for speed and efficiency is also crucial. These algorithms should be able to process large datasets quickly and deliver actionable insights in real time. Storage and retrieval can be optimized as well: technologies such as in-memory databases give faster access to critical information and further reduce latency.
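The idea behind in-memory storage can be shown with a small bounded buffer that keeps only the most recent readings per sensor. This is a sketch of the principle using plain Python containers, not the API of any particular in-memory database; the class name `RecentReadings` and the sensor identifier are hypothetical.

```python
from collections import defaultdict, deque
from typing import Optional


class RecentReadings:
    """Keeps the last `maxlen` readings per sensor in memory for fast lookup."""

    def __init__(self, maxlen: int = 1000) -> None:
        # Each sensor gets its own fixed-size buffer, created on first write.
        self._data = defaultdict(lambda: deque(maxlen=maxlen))

    def add(self, sensor_id: str, value: float) -> None:
        # When the buffer is full, the oldest reading is dropped automatically.
        self._data[sensor_id].append(value)

    def latest(self, sensor_id: str) -> Optional[float]:
        readings = self._data.get(sensor_id)
        return readings[-1] if readings else None

    def window_mean(self, sensor_id: str) -> Optional[float]:
        readings = self._data.get(sensor_id)
        return sum(readings) / len(readings) if readings else None


store = RecentReadings(maxlen=3)
for value in [1.0, 2.0, 3.0, 4.0]:
    store.add("feeder_7_voltage", value)

print(store.latest("feeder_7_voltage"))
print(store.window_mean("feeder_7_voltage"))
```

Bounding the buffer keeps memory use predictable, and reads never touch disk, which is the property that makes in-memory stores attractive on the latency-critical path.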

The Role of AI in Ensuring Grid Security

Beyond efficiency, AI-powered grid applications play a vital role in enhancing grid security. Real-time monitoring and anomaly detection are crucial for identifying potential threats and mitigating risks before they cause significant damage. Low latency is essential for these security applications, because rapid detection and response are critical to containing cyberattacks and preserving the integrity of the energy grid.
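A low-latency detector has to do constant work per sample rather than rescanning history. The sketch below shows one simple way to do that, using a running mean and variance (Welford's online algorithm) with a z-score threshold. It is an illustrative assumption, not the method of any specific grid product; the threshold, warm-up length, and the frequency readings are hypothetical.

```python
import math


class OnlineAnomalyDetector:
    """Flags samples far from the running mean, with O(1) work per sample."""

    def __init__(self, threshold: float = 3.0, warmup: int = 10) -> None:
        self.threshold = threshold
        self.warmup = max(warmup, 2)  # need at least two samples for a variance
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous; normal values update the baseline."""
        if self.count >= self.warmup:
            std = math.sqrt(self._m2 / (self.count - 1))
            if std > 0 and abs(value - self.mean) / std > self.threshold:
                return True  # anomalies are not folded into the baseline
        # Welford's update of the running mean and squared deviations.
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)
        return False


detector = OnlineAnomalyDetector(threshold=3.0, warmup=10)
baseline_hz = [50.0, 50.01, 49.99, 50.02, 49.98, 50.0, 50.01, 49.99, 50.0, 50.01]
warmup_flags = [detector.update(v) for v in baseline_hz]

normal_flag = detector.update(50.0)
anomaly_flag = detector.update(49.5)
print(normal_flag, anomaly_flag)
```

Excluding flagged samples from the baseline keeps a single spike from widening the tolerance, at the cost that a genuine, lasting level shift would keep being flagged until the detector is reset.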

Conclusion

In the evolving landscape of energy management, integrating AI into grid applications offers significant opportunities to improve efficiency and stability. Achieving these benefits, however, hinges on meeting latency requirements so that decisions and responses happen in real time. By employing strategies such as edge computing, high-speed communication technologies, and advanced algorithms, we can overcome latency challenges and pave the way for smarter, more resilient energy grids. As the demand for sustainable and reliable energy continues to grow, optimizing latency in AI grid applications will be key to meeting future energy needs.

Stay Ahead in Power Systems Innovation

From intelligent microgrids and energy storage integration to dynamic load balancing and DC-DC converter optimization, the power supply systems domain is rapidly evolving to meet the demands of electrification, decarbonization, and energy resilience.

In such a high-stakes environment, how can your R&D and patent strategy keep up?

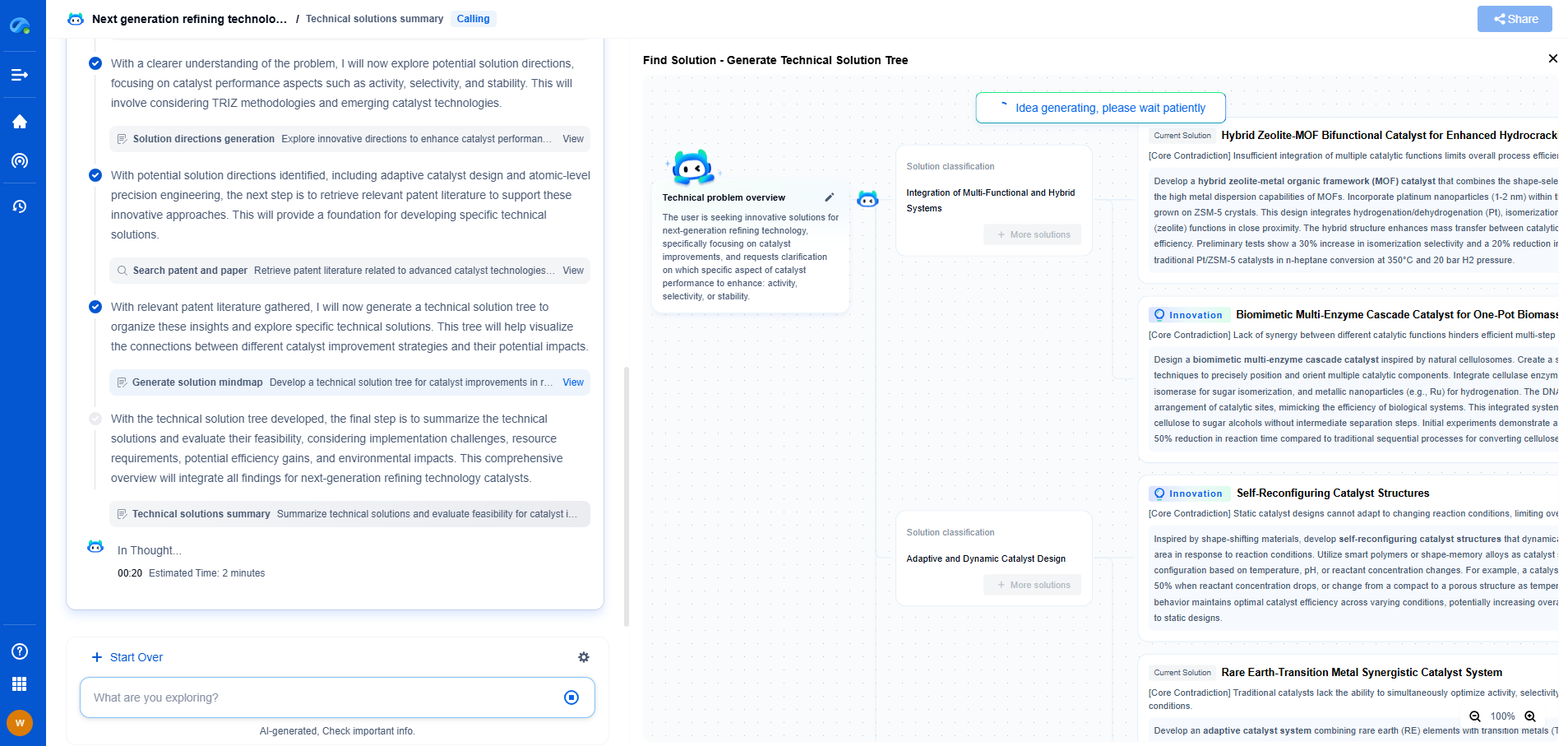

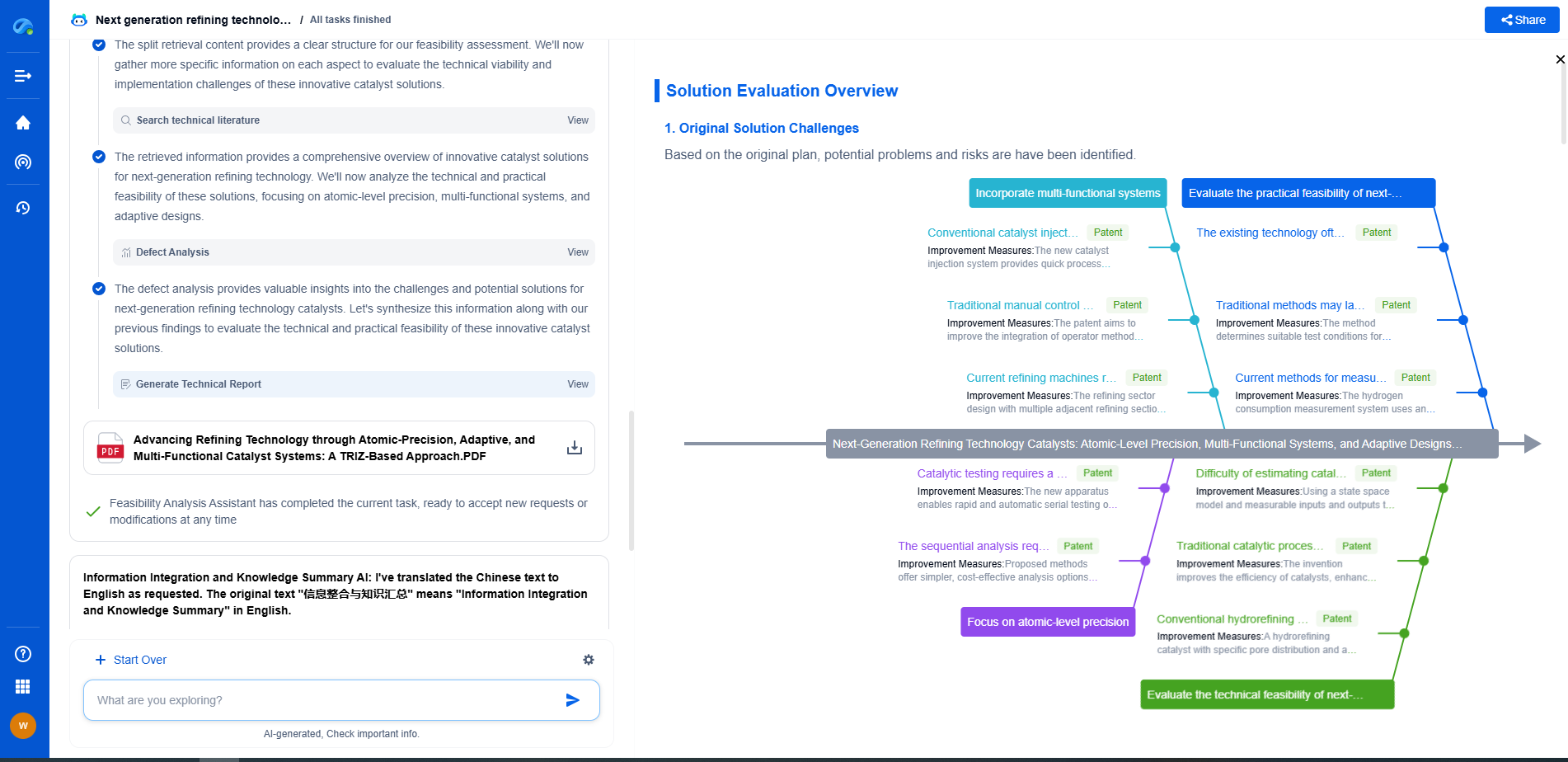

Patsnap Eureka, our intelligent AI assistant built for R&D professionals in high-tech sectors, empowers you with real-time expert-level analysis, technology roadmap exploration, and strategic mapping of core patents—all within a seamless, user-friendly interface.

👉 Experience how Patsnap Eureka can supercharge your workflow in power systems R&D and IP analysis. Request a live demo or start your trial today.

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.