Memory-Mapped File Techniques for High-Speed Data Acquisition

JUL 17, 2025

Understanding Memory-Mapped Files

At its core, a memory-mapped file is a region of virtual memory that has been assigned a direct byte-for-byte correspondence with a portion of a file or file-like resource. The operating system maintains the mapping, so the application can treat that file region as part of its own address space and access it with ordinary memory operations (pointer dereferences, array indexing, memory copies) instead of explicit read and write calls, which can make reading and writing data very efficient.

The primary advantage of memory-mapped files is that they avoid the extra copy that conventional I/O performs. A read() or write() call copies data between the kernel's page cache and a buffer in user space; with a mapping, the application works on the page-cache pages directly, with no intermediate buffer. This can lower latency and raise throughput, which matters in performance-critical applications.
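
As a minimal sketch of what "ordinary memory operations" means in practice, the Python example below uses the standard-library `mmap` module to map a small file and then writes and reads it through slice indexing instead of `write()` and `read()`. The file name `demo.bin` and its contents are illustrative assumptions.

```python
import mmap
import os
import tempfile

# Hypothetical scratch file; a POSIX mapping cannot extend past end of file,
# so the file is given its final size before it is mapped.
path = os.path.join(tempfile.mkdtemp(), "demo.bin")
with open(path, "wb") as f:
    f.write(b"\x00" * 16)

with open(path, "r+b") as f:
    # Length 0 maps the whole file; the default access is read-write and shared.
    with mmap.mmap(f.fileno(), 0) as m:
        m[0:5] = b"hello"     # a memory store, not a write() call
        first_five = m[0:5]   # a memory load, not a read() call

# Because the mapping is shared, the change is visible through normal file I/O.
with open(path, "rb") as f:
    on_disk = f.read(5)

print(first_five, on_disk)
```

Slicing an `mmap` object returns a `bytes` copy of that range, so `first_five` remains valid after the mapping is closed.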

Benefits of Memory-Mapped Files in High-Speed Data Acquisition

One of the most significant benefits of memory-mapped files in high-speed data acquisition is the ability to handle large volumes of data efficiently. When acquiring data at high speed, a system must process and store samples quickly enough to avoid bottlenecks. With a mapped file, incoming samples can be written straight into the mapped region while the operating system writes modified pages back to storage in the background, so the acquisition loop usually does not have to wait for each write to complete.
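
The sketch below illustrates the write path of an acquisition loop under stated assumptions: each sample is a hypothetical fixed-size record (a little-endian 64-bit timestamp in nanoseconds plus a 32-bit float value), the run length is known in advance, and a synthetic ramp stands in for a real device. `struct.Struct.pack_into` encodes each record directly into the mapped region, so there is no intermediate buffer and no per-sample system call.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical record layout: little-endian uint64 timestamp (ns) + float32 sample.
RECORD = struct.Struct("<Qf")
N_RECORDS = 1000  # hypothetical run length, known before acquisition starts

path = os.path.join(tempfile.mkdtemp(), "capture.bin")
with open(path, "wb") as f:
    f.truncate(RECORD.size * N_RECORDS)  # size the file for the whole run

with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as m:
    for i in range(N_RECORDS):
        # Synthetic ramp as a stand-in for samples read from a device.
        RECORD.pack_into(m, i * RECORD.size, i * 1_000, float(i) * 0.5)
    m.flush()  # optional: ask the OS to write dirty pages back now
```

The explicit `flush()` is not needed for the data to reach the file eventually, since the modified pages already live in the page cache; it matters when the data must be on storage at a known point, for example before reporting a run as complete.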

Another advantage is reduced I/O overhead. Traditional file I/O issues a system call for every read or write, and each call costs a transition into the kernel. Once a file is mapped, reads and writes are ordinary memory accesses; the kernel becomes involved only when a page is first touched (a page fault) or when modified pages are written back. This is especially beneficial in real-time data acquisition systems, where timely data processing is essential.
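
On the consumer side, a minimal sketch of the same idea: the file is mapped read-only once, and each record is decoded with `struct.Struct.unpack_from` at a computed offset, with no `read()` call per record. The record layout repeats the hypothetical one used above for illustration, and the file is generated in place with conventional writes so the example stands alone.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical record layout: little-endian uint64 timestamp (ns) + float32 sample.
RECORD = struct.Struct("<Qf")

# Generate a small capture file so the example is self-contained.
path = os.path.join(tempfile.mkdtemp(), "capture.bin")
with open(path, "wb") as f:
    for i in range(100):
        f.write(RECORD.pack(i * 1_000, i * 0.5))

with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as m:
    n_records = len(m) // RECORD.size
    total = 0.0
    for i in range(n_records):
        # unpack_from decodes straight from the mapped pages; the kernel is
        # involved only when a page is touched for the first time.
        _timestamp, value = RECORD.unpack_from(m, i * RECORD.size)
        total += value

print(n_records, total)
```

`unpack_from` is used rather than `iter_unpack` because it releases its view of the buffer immediately, so the mapping can be closed without a lingering export.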

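Memory-mapped files also simplify sharing data among processes. In high-speed data acquisition, it is common for several processes (for example, an acquisition process and one or more analysis or display processes) to work with the same data set. When each process maps the same file as a shared mapping, they all see the same physical pages, so data written by one process is visible to the others without being duplicated, which conserves memory and bandwidth. Visibility is not coordination, though: the processes still need an agreed signal to know when a record is complete.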
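
A minimal sketch of cross-process sharing, assuming a local file system: the parent maps a one-page file, then launches a separate Python process (a hypothetical stand-in for an acquisition process) that maps the same file and writes into it. The parent reads the bytes through the mapping it already holds, with nothing copied between the processes. Here the parent simply waits for the child to exit before reading; a real system would need an explicit signal, such as a lock or a sequence counter in the shared region.

```python
import mmap
import os
import subprocess
import sys
import tempfile

path = os.path.join(tempfile.mkdtemp(), "shared.bin")
with open(path, "wb") as f:
    f.truncate(mmap.PAGESIZE)  # one zero-filled page

# Hypothetical writer process: maps the same file and stores a message.
CHILD = """
import mmap, sys
with open(sys.argv[1], "r+b") as f, mmap.mmap(f.fileno(), 0) as m:
    m[0:11] = b"from child!"
"""

with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as m:
    # Waiting for the child to exit is the only synchronization in this sketch.
    subprocess.run([sys.executable, "-c", CHILD, path], check=True)
    # The parent's existing mapping sees the child's store: both mappings
    # refer to the same page-cache pages.
    seen = m[0:11]

print(seen)
```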

Application Techniques for High-Speed Data Acquisition

To use memory-mapped files effectively in high-speed data acquisition, it helps to follow certain best practices. One key technique is to determine the size of the data to be acquired in advance and size the file accordingly. A mapping has a fixed length, so accommodating growing data means extending the file and remapping it, which is costly in the middle of an acquisition run. Pre-allocating the file avoids that remapping and, where the file system actually reserves the blocks up front, reduces fragmentation and the risk of running out of disk space mid-run.
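
One way to pre-allocate is sketched below. It assumes `os.posix_fallocate`, which is available only on some POSIX platforms (Linux among them) and may be unsupported by a given file system, so the sketch falls back to `truncate`, which sets the length but may leave the file sparse. The helper name `preallocate` and the record size and run length are hypothetical.

```python
import mmap
import os
import tempfile

# Hypothetical run parameters.
RECORD_BYTES = 12
EXPECTED_RECORDS = 100_000

def preallocate(path: str, size: int) -> None:
    """Create `path` with exactly `size` bytes, reserving disk blocks if possible."""
    with open(path, "wb") as f:
        try:
            # Reserves real blocks, so later stores into the mapping
            # should not fail for lack of disk space.
            os.posix_fallocate(f.fileno(), 0, size)
        except (AttributeError, OSError):
            # Unavailable or unsupported: just set the length. The file may
            # be sparse, with blocks allocated only on first write.
            f.truncate(size)

path = os.path.join(tempfile.mkdtemp(), "run.bin")
preallocate(path, RECORD_BYTES * EXPECTED_RECORDS)

# The mapping length is fixed at creation; sizing the file first means the
# whole run fits in one mapping and no remap is needed mid-acquisition.
with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as m:
    mapped_len = len(m)

print(mapped_len)
```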

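Another important technique is to align data structures and access patterns with the system's memory page size. The offset at which a mapping starts must be a multiple of the page size (on Windows, of the allocation granularity, typically 64 KiB), and a record that straddles a page boundary touches two pages instead of one, which can cost an extra page fault. Sizing and placing records so that they do not cross page boundaries helps minimize page faults and maintain high data throughput.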
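
Both alignment rules can be sketched as small helpers, assuming Python's `mmap` module: `mmap.ALLOCATIONGRANULARITY` is the unit that mapping offsets must be a multiple of (the page size on most POSIX systems, typically 64 KiB on Windows), and `mmap.PAGESIZE` is used to check whether a record straddles a page boundary. The helper names and the demo file are hypothetical.

```python
import mmap
import os
import tempfile

def aligned_window(offset: int, granularity: int = mmap.ALLOCATIONGRANULARITY):
    """Split a file offset into an aligned mapping offset plus the remainder."""
    map_offset = (offset // granularity) * granularity
    return map_offset, offset - map_offset

def straddles_page(offset: int, size: int, page: int = mmap.PAGESIZE) -> bool:
    """True if the bytes [offset, offset + size) fall on two different pages."""
    return offset // page != (offset + size - 1) // page

def read_at(path: str, offset: int, length: int) -> bytes:
    """Map only the window needed to read `length` bytes at `offset`."""
    map_offset, delta = aligned_window(offset)
    with open(path, "rb") as f, mmap.mmap(
        f.fileno(), delta + length, access=mmap.ACCESS_READ, offset=map_offset
    ) as m:
        return m[delta:delta + length]

# Demo file whose byte at position i is i % 256.
path = os.path.join(tempfile.mkdtemp(), "aligned.bin")
with open(path, "wb") as f:
    f.write(bytes(i % 256 for i in range(200_000)))

print(read_at(path, 70_000, 4), straddles_page(4094, 4, 4096))
```

Passing an unaligned offset straight to `mmap.mmap` raises an error, which is why `read_at` maps from the rounded-down offset and skips `delta` bytes inside the window.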

It is also advisable to manage access permissions carefully. A mapped region can be created read-only, read-write, or copy-on-write (private), depending on application requirements. Matching the permission to the intended use prevents unintended modifications, such as a monitoring process accidentally overwriting acquired data, and improves security.
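
A minimal sketch of two permission levels in Python's `mmap` module: `ACCESS_READ` gives a read-only view, and `ACCESS_COPY` gives a copy-on-write view whose changes stay private to the mapping and never reach the file. The file contents are a hypothetical placeholder.

```python
import mmap
import os
import tempfile

path = os.path.join(tempfile.mkdtemp(), "calibration.bin")
with open(path, "wb") as f:
    f.write(b"calibration-v1")

# Read-only view: suits consumers that must never modify acquired data.
with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as ro:
    try:
        ro[0:1] = b"X"
        write_blocked = False
    except TypeError:
        # CPython rejects stores into a read-only mapping with TypeError.
        write_blocked = True

# Copy-on-write view: edits are visible only through this mapping.
with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_COPY) as cow:
    cow[0:1] = b"C"
    private_view = cow[:]

with open(path, "rb") as f:
    on_disk = f.read()

print(write_blocked, private_view, on_disk)
```

In C, the equivalent protection comes from `PROT_READ` versus `PROT_READ | PROT_WRITE` and `MAP_PRIVATE` versus `MAP_SHARED`; there, a store into a read-only mapping is typically delivered as a segmentation fault rather than a catchable exception.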

Considerations and Limitations

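While memory-mapped files offer numerous advantages, there are considerations and limitations to be aware of. One potential issue is memory consumption. A mapping reserves virtual address space for its full length, although physical memory is used only for the pages actually touched. In a 32-bit process, whose address space is a few gigabytes at most, a single large file may not fit, and on any system, touching many pages of a large mapping increases pressure on physical memory and the page cache. Systems with limited memory can keep this in check by mapping large files in fixed-size windows rather than all at once.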
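
A minimal sketch of windowed mapping, assuming the per-window work is a simple byte sum standing in for real processing: the file is walked in windows whose size is a multiple of `mmap.ALLOCATIONGRANULARITY`, so each window's offset is legal, and only one window occupies address space at a time. The window size and function name are hypothetical.

```python
import mmap
import os
import tempfile

# Hypothetical window size; it must be a multiple of the allocation
# granularity because each window's offset is passed to mmap.
WINDOW = 256 * mmap.ALLOCATIONGRANULARITY

def byte_sum_in_windows(path: str, window: int = WINDOW) -> int:
    """Sum every byte of a file while mapping at most `window` bytes at once."""
    if window % mmap.ALLOCATIONGRANULARITY:
        raise ValueError("window must be a multiple of mmap.ALLOCATIONGRANULARITY")
    size = os.path.getsize(path)
    total = 0
    with open(path, "rb") as f:
        for offset in range(0, size, window):
            length = min(window, size - offset)
            # Only this window is mapped; it is unmapped when the block exits.
            with mmap.mmap(f.fileno(), length, access=mmap.ACCESS_READ, offset=offset) as m:
                total += sum(m[:])  # m[:] copies the window; stand-in for real work
    return total

# Demo: a file a little over three windows long, processed in small windows.
path = os.path.join(tempfile.mkdtemp(), "large.bin")
data = bytes(i % 251 for i in range(3 * mmap.ALLOCATIONGRANULARITY + 123))
with open(path, "wb") as f:
    f.write(data)

result = byte_sum_in_windows(path, mmap.ALLOCATIONGRANULARITY)
print(result == sum(data))
```

An empty file produces no windows at all, which also sidesteps the error raised when mapping a zero-length file.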

Additionally, developers must account for the behavior of the underlying file system and storage. Mapped-file performance depends on factors such as the operating system's caching and read-ahead strategies, how modified pages are written back, and the speed of the physical storage, so testing and tuning on the target system are essential to achieving the best performance.

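Finally, error handling requires attention, because failures surface differently than with read() and write(). Setting up a mapping can fail with an ordinary error (for example, for an empty file or exhausted address space), but once the mapping exists, a disk error or a file truncated by another process is reported only when the affected page is touched: on POSIX systems as a SIGBUS signal, which terminates the process by default. Validating file sizes before mapping, checking the result of every mapping and flush call, and preventing other processes from truncating a file while it is mapped help keep the system reliable and stable.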
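
A minimal sketch of defensive setup, assuming a capture file whose expected size is known: the size is validated before mapping, mapping failures (`ValueError`, for example for an empty file, or `OSError` for system-level failures) are converted into one hypothetical application error, `MappingError`, and `flush()` is checked, since from Python 3.8 it raises `OSError` on failure. A SIGBUS from a page that can no longer be read cannot be handled meaningfully from Python code, which is one more reason to stop other processes from truncating a mapped file.

```python
import mmap
import os
import tempfile

class MappingError(Exception):
    """Hypothetical application-level error for capture files that cannot be mapped."""

def open_capture(path: str, expected_size: int):
    """Open and map a capture file read-write; the caller closes both results."""
    f = open(path, "r+b")  # may raise OSError (missing file, no permission)
    try:
        actual = os.fstat(f.fileno()).st_size
        if actual != expected_size:
            raise MappingError(f"{path}: expected {expected_size} bytes, found {actual}")
        try:
            m = mmap.mmap(f.fileno(), expected_size)
        except (OSError, ValueError) as exc:
            # ValueError: e.g. an empty file; OSError: e.g. address space exhausted.
            raise MappingError(f"cannot map {path}: {exc}") from exc
    except BaseException:
        f.close()  # never leak the descriptor on a failed setup
        raise
    return f, m

def commit(m: mmap.mmap) -> None:
    """Flush dirty pages, surfacing write-back errors as MappingError."""
    try:
        m.flush()  # Python 3.8+: raises OSError on failure
    except OSError as exc:
        raise MappingError(f"flush failed: {exc}") from exc

# Usage: a correctly sized file maps cleanly; an empty one is rejected.
path = os.path.join(tempfile.mkdtemp(), "capture.bin")
with open(path, "wb") as fh:
    fh.truncate(4096)

f, m = open_capture(path, 4096)
try:
    m[0:2] = b"ok"
    commit(m)
finally:
    m.close()
    f.close()

empty = os.path.join(os.path.dirname(path), "empty.bin")
open(empty, "wb").close()
try:
    open_capture(empty, 0)
    empty_rejected = False
except MappingError:
    empty_rejected = True
```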

Conclusion

Memory-mapped files are a powerful tool for high-speed data acquisition: they give direct access to file data without per-access system calls or intermediate copies, which can significantly improve performance. By understanding their benefits and limitations and applying these practices in their implementation, developers can use memory-mapped files to build fast, efficient data acquisition systems that handle large volumes of data. As the demand for high-speed data processing grows, memory-mapped files are likely to remain a valuable part of the toolkit of developers and engineers.

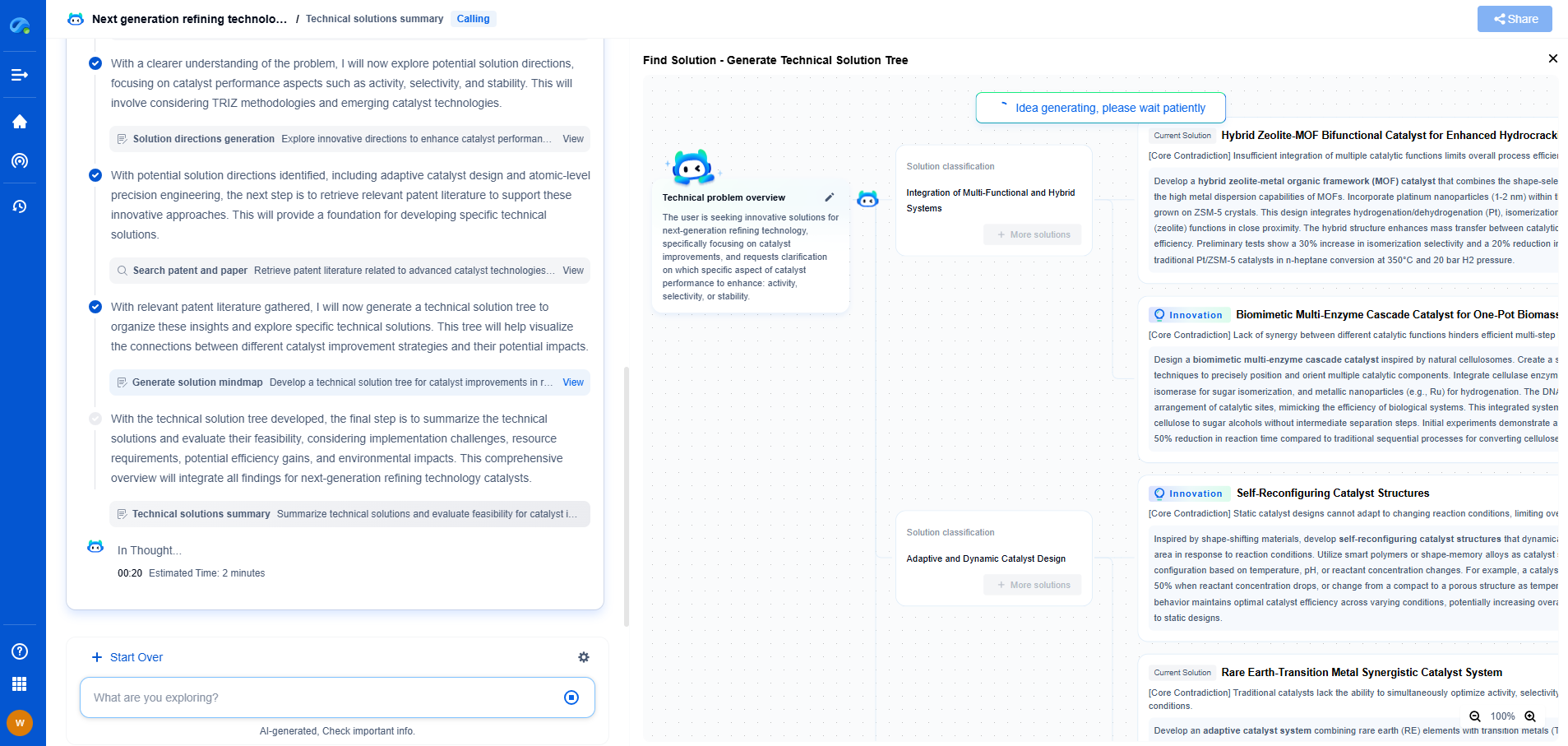

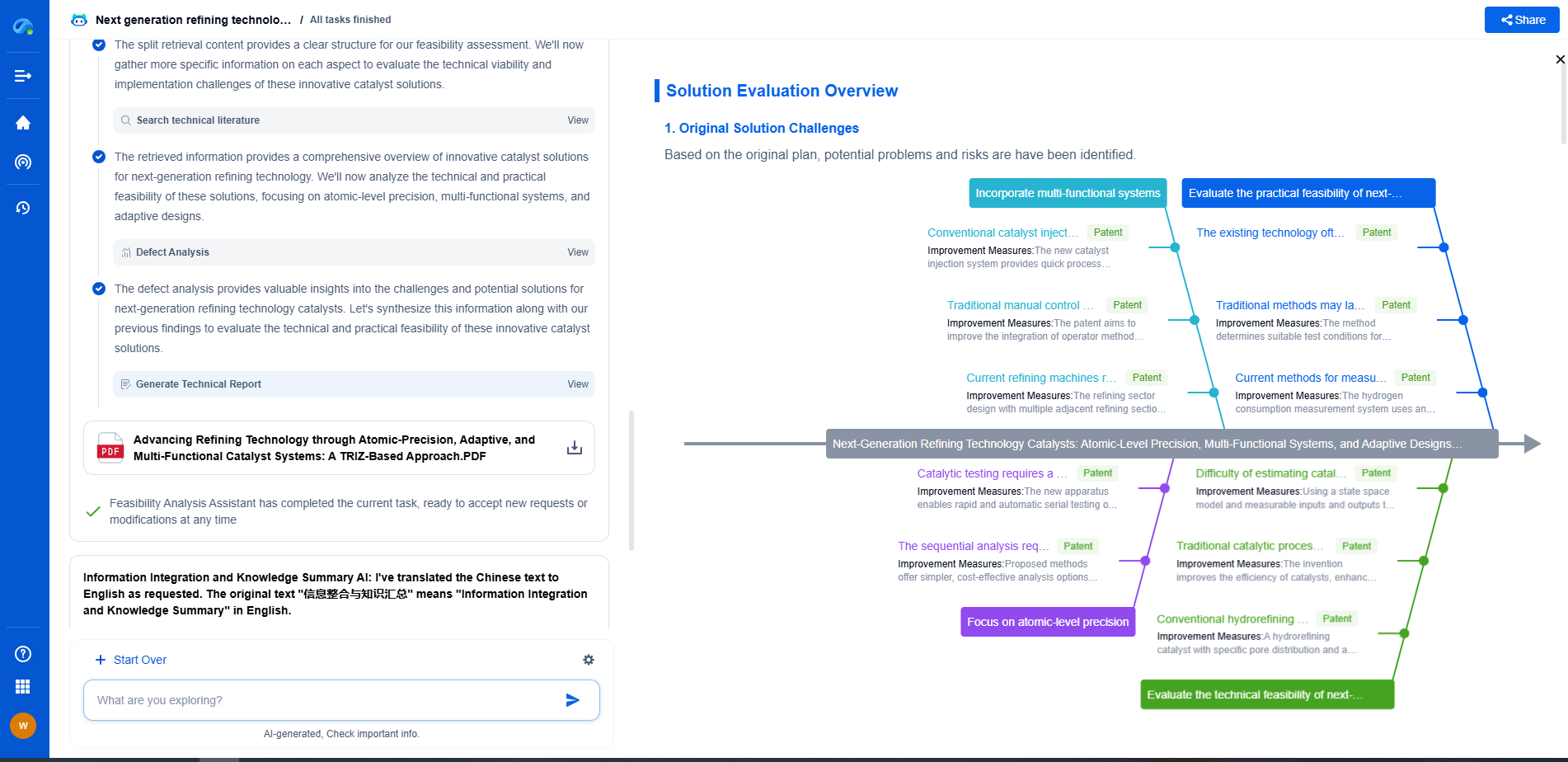

© 2025 PatSnap. All rights reserved.