Real-time sensor fusion using deep learning models

JUN 26, 2025

In recent years, the proliferation of sensors across applications from autonomous vehicles to smart cities has generated immense volumes of data, and intelligent decision-making depends on that data. Processing it in real time to derive actionable insights, however, poses significant challenges. This is where sensor fusion, combined with deep learning, comes into play. Sensor fusion integrates data from multiple sensors to produce information that is more accurate and reliable than any individual sensor provides.

The Importance of Sensor Fusion

Sensor fusion is vital because no single sensor can capture all environmental aspects. For example, in autonomous vehicles, cameras can detect colors and shapes, while LIDAR can provide depth perception. By fusing these data sources, a more comprehensive understanding of the environment can be achieved, leading to safer and more effective decision-making.
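To make the benefit of combining sources concrete, here is a minimal sketch of inverse-variance weighting, a classical (non-learned) way to fuse two independent estimates of the same quantity. It is not a method the article prescribes, and the camera and LIDAR readings and their variances are hypothetical values chosen for illustration.

```python
def fuse_inverse_variance(estimates):
    """Fuse independent (value, variance) estimates of the same quantity.

    Each estimate is weighted by 1/variance, so more precise sensors count more.
    Returns (fused_value, fused_variance).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused_value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Hypothetical readings of the distance to one obstacle, in metres.
camera_depth = (10.4, 1.0)   # camera-derived depth, assumed noisier (variance 1.0 m^2)
lidar_depth = (10.0, 0.25)   # LIDAR range, assumed more precise (variance 0.25 m^2)

value, variance = fuse_inverse_variance([camera_depth, lidar_depth])
print(f"fused distance = {value:.2f} m, variance = {variance:.2f} m^2")
```

Under the independence assumption, the fused variance is lower than that of either input, which is the sense in which fusion can be more reliable than any single sensor.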

Deep Learning in Sensor Fusion

Deep learning models can learn intricate patterns and correlations directly from complex datasets, which makes them well suited to interpreting multi-sensor inputs. Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and more recent architectures such as Transformers are often employed in sensor fusion tasks.

CNNs are particularly effective at extracting spatial features, which makes them suitable for image-based sensor fusion. RNNs and their variants, such as Long Short-Term Memory (LSTM) networks, handle temporal dependencies and therefore suit time-series data from sensors. Transformers use attention mechanisms to model relationships across different data points, which gives them flexibility in how inputs are combined.
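The attention idea can be sketched in a few lines of plain Python. This is a simplified illustration, not a Transformer: there are no learned projection matrices, each sensor's feature vector acts as both key and value, and the `query` vector and the 4-dimensional embeddings are hypothetical stand-ins for what a CNN or LSTM encoder might produce.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(query, features):
    """Fuse per-sensor feature vectors with scaled dot-product attention.

    query:    list of floats describing what the model is attending to
    features: dict of sensor name -> feature vector (same length as query)
    Returns (fused_vector, weights_by_sensor).
    """
    dim = len(query)
    names = list(features)
    # Score each sensor by similarity between the query and its features.
    scores = [
        sum(q * k for q, k in zip(query, features[name])) / math.sqrt(dim)
        for name in names
    ]
    weights = softmax(scores)
    # The fused vector is the attention-weighted sum of the sensor features.
    fused = [
        sum(w * features[name][i] for w, name in zip(weights, names))
        for i in range(dim)
    ]
    return fused, dict(zip(names, weights))


# Hypothetical embeddings, one per sensor.
features = {
    "camera": [0.9, 0.1, 0.0, 0.3],
    "lidar": [0.2, 0.8, 0.5, 0.1],
    "radar": [0.1, 0.2, 0.9, 0.0],
}
query = [1.0, 0.0, 0.0, 0.5]

fused, weights = attention_fuse(query, features)
for name, w in weights.items():
    print(f"{name}: weight {w:.3f}")
```

Because the weights come from a softmax, they sum to one, and the sensor whose features best match the query contributes most to the fused vector.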

Challenges in Real-Time Sensor Fusion

Implementing real-time sensor fusion using deep learning models comes with its challenges. First, there is the issue of latency. Real-time applications, such as autonomous driving or industrial automation, require immediate responses, demanding highly efficient algorithms and hardware for processing.
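One way to reason about latency is to measure per-call processing time and compare a high percentile against a deadline. The sketch below assumes a hypothetical 50 ms budget and uses `dummy_fusion_step` as a placeholder for a real model's inference call; neither comes from the article.

```python
import math
import time


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list (pct in 0..100)."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def measure_latency_ms(step, inputs, warmup=5):
    """Call step(x) for each input and return per-call latencies in milliseconds."""
    for x in inputs[:warmup]:
        step(x)  # warm-up calls are not timed
    latencies = []
    for x in inputs:
        start = time.perf_counter()
        step(x)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def meets_budget(latencies_ms, budget_ms, pct=99):
    """True if the pct-th percentile latency is within the budget."""
    return percentile(latencies_ms, pct) <= budget_ms


def dummy_fusion_step(frame):
    # Placeholder workload standing in for model inference (assumption).
    return sum(v * v for v in frame)


frames = [[float(i)] * 256 for i in range(200)]
latencies = measure_latency_ms(dummy_fusion_step, frames)
BUDGET_MS = 50.0  # hypothetical deadline per fused frame
print(f"p99 = {percentile(latencies, 99):.3f} ms, "
      f"within budget: {meets_budget(latencies, BUDGET_MS)}")
```

Checking a tail percentile rather than the mean is a deliberate choice here: a real-time system is usually judged by its slowest responses, not its typical ones.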

Data synchronization is another challenge. Sensors often operate at different frequencies, leading to discrepancies in data alignment. Ensuring that data is synchronized correctly is crucial for accurate fusion.
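A common way to align streams sampled at different rates is to resample the slower one at the faster one's timestamps. The following sketch uses linear interpolation on a scalar stream; the 20 Hz camera and 10 Hz LIDAR rates and the range values are hypothetical.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a scalar sensor stream at time t.

    timestamps must be sorted ascending. Returns None when t falls outside
    the recorded range, rather than extrapolating.
    """
    if not timestamps or t < timestamps[0] or t > timestamps[-1]:
        return None
    i = bisect_left(timestamps, t)
    if timestamps[i] == t:
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    alpha = (t - t0) / (t1 - t0)
    return values[i - 1] + alpha * (values[i] - values[i - 1])


def align_streams(ref_times, other_times, other_values):
    """Resample the other stream at each reference timestamp it covers."""
    pairs = []
    for t in ref_times:
        v = interpolate_at(other_times, other_values, t)
        if v is not None:
            pairs.append((t, v))
    return pairs


# Hypothetical streams: camera frames at 20 Hz, LIDAR range at 10 Hz.
camera_times = [0.00, 0.05, 0.10, 0.15, 0.20, 0.25]
lidar_times = [0.00, 0.10, 0.20]
lidar_range = [10.0, 9.0, 8.0]

aligned = align_streams(camera_times, lidar_times, lidar_range)
for t, r in aligned:
    print(f"t={t:.2f}s  lidar_range={r:.2f} m")
```

Interpolation needs the next sample of the slower stream, so a strictly online system would either buffer briefly or fall back to holding the last received value. The final camera frame at 0.25 s is dropped here because no LIDAR sample covers it.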

Furthermore, sensor data can be noisy and incomplete, necessitating robust models that can handle such imperfections. Deep learning models must be trained to be resilient to these issues, often requiring extensive datasets for effective learning.
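Two simple techniques for coping with incomplete inputs are sketched below, as assumptions rather than the article's prescribed approach: filling gaps with the last valid reading while passing the model an explicit validity mask, and randomly blanking whole sensors during training ("modality dropout") so the model learns not to depend on any single one. Sensor names and values are hypothetical.

```python
import math
import random


def fill_and_mask(readings, last_valid):
    """Replace missing readings and record which ones were real.

    readings:   dict of sensor name -> float, or None/NaN when missing
    last_valid: dict of sensor name -> last good value (updated in place)
    Returns (values, mask), both ordered by sorted sensor name.
    """
    values, mask = [], []
    for name in sorted(readings):
        x = readings[name]
        missing = x is None or (isinstance(x, float) and math.isnan(x))
        if missing:
            values.append(last_valid.get(name, 0.0))  # hold last value, or 0.0
            mask.append(0.0)
        else:
            last_valid[name] = x
            values.append(x)
            mask.append(1.0)
    return values, mask


def modality_dropout(readings, drop_prob, rng):
    """Training-time augmentation: randomly blank out whole sensors."""
    return {
        name: (None if rng.random() < drop_prob else x)
        for name, x in readings.items()
    }


last_valid = {}
frame1 = {"camera": 0.8, "lidar": 10.0, "radar": 9.7}
frame2 = {"camera": 0.7, "lidar": None, "radar": float("nan")}

v1, m1 = fill_and_mask(frame1, last_valid)
v2, m2 = fill_and_mask(frame2, last_valid)
print(v2, m2)

rng = random.Random(0)
augmented = modality_dropout(frame1, drop_prob=0.3, rng=rng)
```

Feeding the mask alongside the values lets a model distinguish a genuine reading from a filled-in one instead of silently trusting stale data.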

Approaches to Real-Time Implementation

Achieving real-time performance requires optimization. Model quantization, pruning, and lightweight architectures can significantly reduce the computational load, enabling deployment on edge devices with limited resources.
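The core ideas behind pruning and quantization can be shown on a flat list of weights. This is a minimal sketch assuming magnitude-based pruning and symmetric per-tensor int8 quantization; real frameworks offer more elaborate schemes, and the weight values are hypothetical.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (sparsity in 0..1)."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    if n_prune == 0:
        return list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_prune])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization. Returns (int values in -127..127, scale)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [v * scale for v in q]


# Hypothetical weights from one layer.
weights = [0.82, -0.03, 0.40, 0.01, -1.27, 0.05, 0.66, -0.002]

pruned = prune_by_magnitude(weights, sparsity=0.5)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print(f"int8 values: {q}")
print(f"scale = {scale:.4f}, max round-trip error = {max_error:.5f}")
```

Each quantized weight occupies one byte instead of four, and the round-trip error per weight is bounded by half the scale. Whether the resulting accuracy loss is acceptable has to be checked on the actual model and data.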

Edge computing plays a crucial role in real-time sensor fusion: processing occurs closer to the data source, which reduces both latency and bandwidth usage. Edge devices can perform initial data processing and fusion locally and send only essential information to cloud servers for further analysis if needed.
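One simple policy for sending only essential information upstream is "send-on-delta": the edge device forwards a fused value only when it has changed by at least some threshold since the last transmission. The class name, threshold, and sample stream below are hypothetical and serve only to illustrate the idea.

```python
class SendOnDelta:
    """Forward a value upstream only when it moved by at least `threshold`."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.last_sent = None

    def should_send(self, value):
        if self.last_sent is None or abs(value - self.last_sent) >= self.threshold:
            self.last_sent = value
            return True
        return False


# Hypothetical fused readings produced locally on the edge device.
fused_stream = [20.0, 20.1, 20.2, 21.5, 21.6, 19.9, 20.0]

gate = SendOnDelta(threshold=1.0)
uplink = [v for v in fused_stream if gate.should_send(v)]
print(f"sent {len(uplink)} of {len(fused_stream)} readings: {uplink}")
```

In this example three of the seven readings are transmitted. The threshold trades bandwidth against how closely the cloud-side view tracks the edge-side value.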

Applications of Real-Time Sensor Fusion

The applications of real-time sensor fusion using deep learning are vast and varied. In autonomous vehicles, real-time fusion of data from cameras, radar, LIDAR, and GPS ensures accurate perception of the surrounding environment, facilitating safe navigation.

In healthcare, sensor fusion can monitor patients in real time, integrating data from wearable devices to provide insights into patient health, predict potential health issues, or manage chronic conditions more effectively.

Conclusion

Real-time sensor fusion using deep learning models provides a powerful approach to integrating and processing data from multiple sources. Challenges remain, but advances in deep learning and computing technology continue to push the boundaries of what is possible. As techniques and tools evolve, sensor fusion is likely to play an increasingly significant role in decision-making across industries.

Ready to Redefine Your Robotics R&D Workflow?

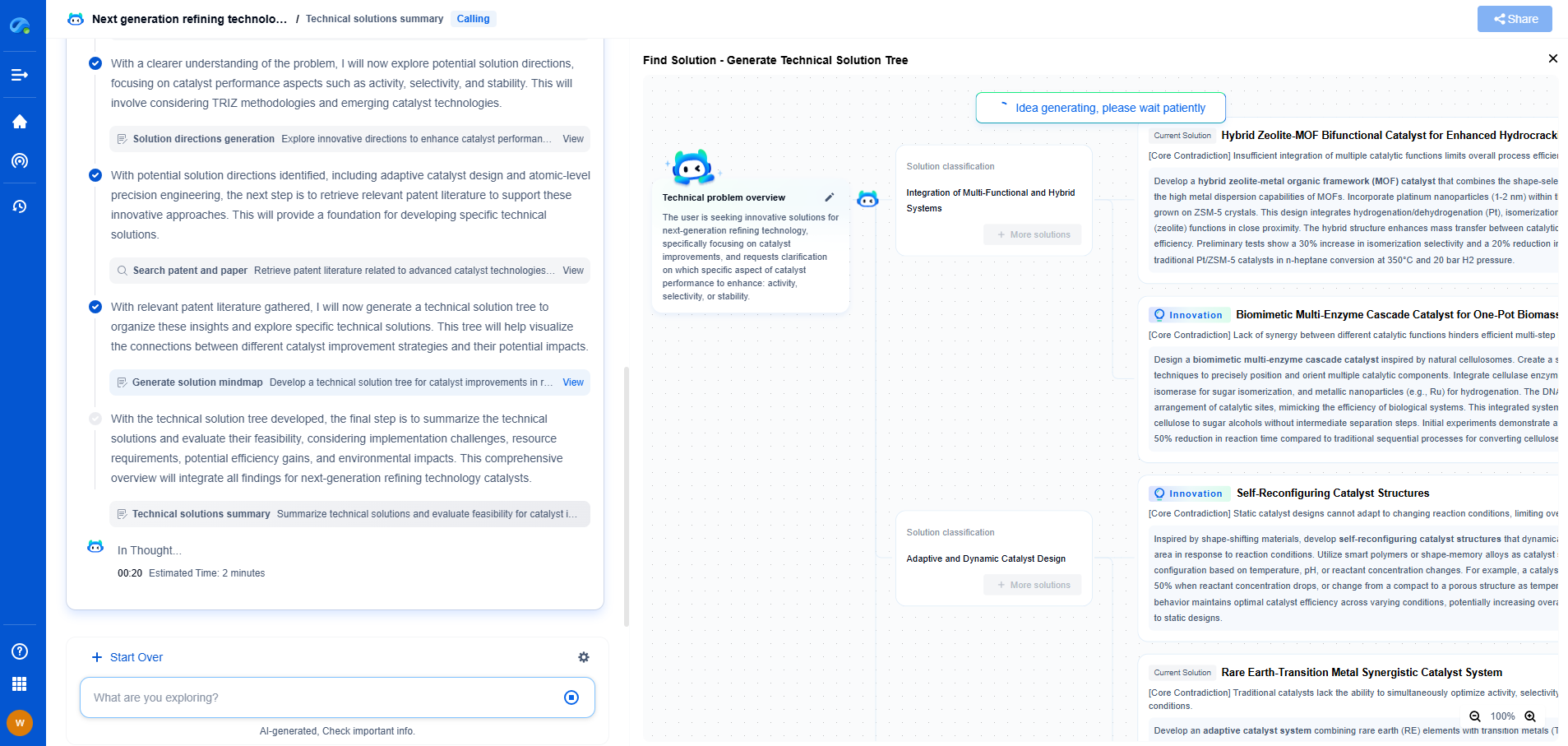

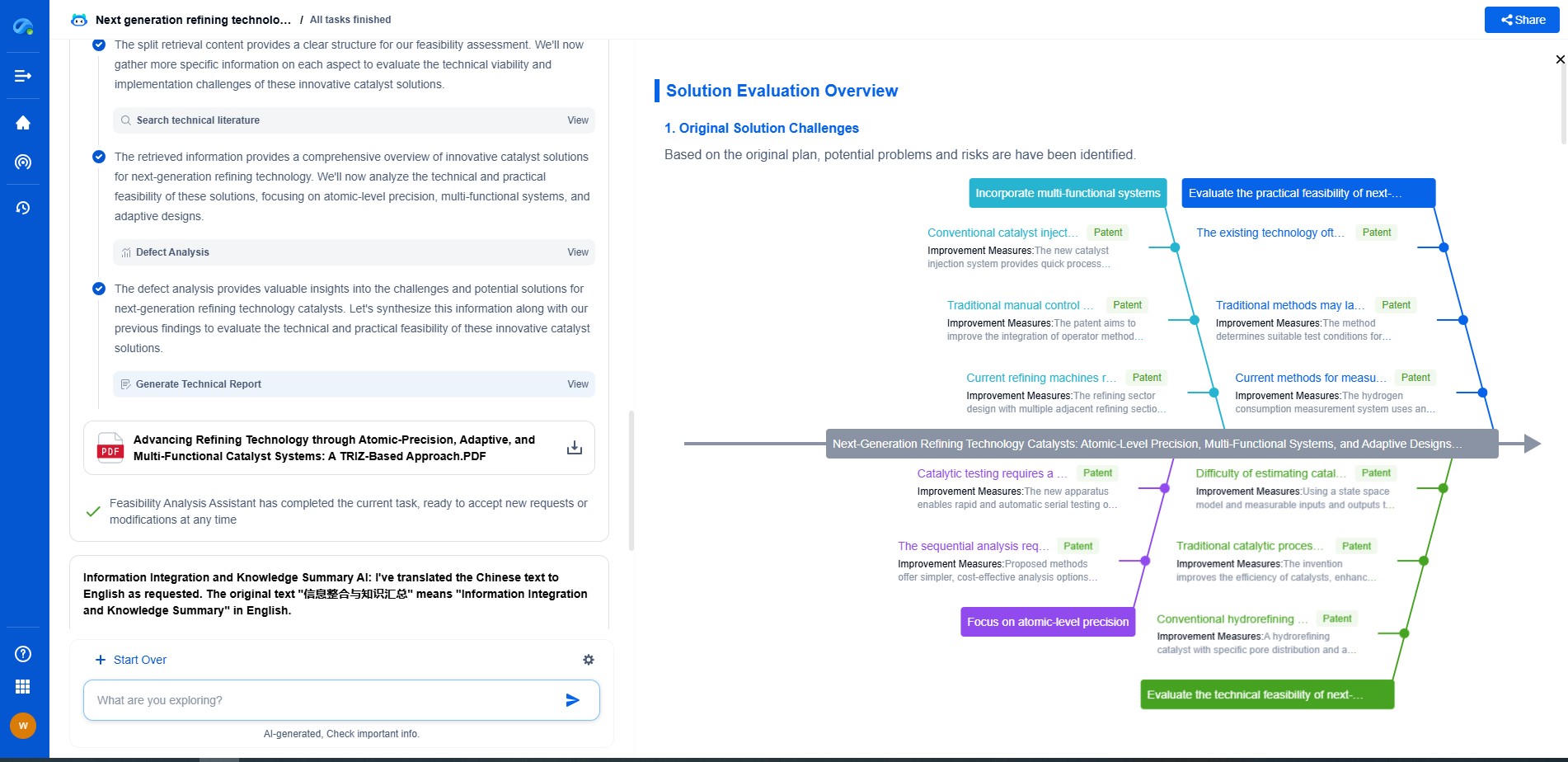

Whether you're designing next-generation robotic arms, optimizing manipulator kinematics, or mining patent data for innovation insights, Patsnap Eureka, our cutting-edge AI assistant, is built for R&D and IP professionals in high-tech industries, is built to accelerate every step of your journey.

No more getting buried in thousands of documents or wasting time on repetitive technical analysis. Our AI Agent helps R&D and IP teams in high-tech enterprises save hundreds of hours, reduce risk of oversight, and move from concept to prototype faster than ever before.

👉 Experience how AI can revolutionize your robotics innovation cycle. Explore Patsnap Eureka today and see the difference.

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.