Reinforcement Learning for Control Systems: Reward Function Design

JUL 2, 2025

Reinforcement learning (RL) has emerged as a powerful tool for designing control systems, offering a way for systems to learn optimal behaviors autonomously through trial-and-error interaction with their environment. Unlike traditional control methods, which often rely on predefined models and rules, RL learns directly from interaction data, making it particularly useful in complex or dynamic environments where modeling every detail is impractical.

The Essence of Reward Functions

A central element of any RL-based control system is the reward function. At each step it returns a scalar signal that scores the agent's behavior, thereby defining what counts as success and steering learning toward the desired outcomes. Because the agent optimizes exactly what the reward measures, the design of the reward function strongly affects both what the agent ultimately learns and how efficiently it learns, making it a critical component of RL applications in control systems.

Defining Objectives Clearly

The first step in designing a reward function is to define the objectives of the control system clearly. For instance, if the goal of a smart thermostat is to hold a room at a target temperature, the reward should encourage keeping the temperature within a desired range while also minimizing energy consumption. Clearly defined objectives make it easier to shape a reward function that guides the RL agent toward the right behavior.
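To make the thermostat objective concrete, here is a minimal sketch of a per-step reward. The comfort band (20 to 23 °C), the weights `W_COMFORT` and `W_ENERGY`, and the function name `thermostat_reward` are illustrative assumptions, not values from any particular product; the sketch simply expresses "stay in range, use little energy" as a weighted sum of two penalties.

```python
# Hypothetical comfort band and weights; real values depend on the building and tariff.
COMFORT_LOW_C = 20.0
COMFORT_HIGH_C = 23.0
W_COMFORT = 1.0   # penalty per degree outside the band
W_ENERGY = 0.1    # penalty per kWh consumed during the step


def thermostat_reward(temp_c: float, energy_kwh: float) -> float:
    """Per-step reward: no comfort penalty inside the band, a linear
    penalty per degree outside it, minus a linear energy cost."""
    if temp_c < COMFORT_LOW_C:
        deviation = COMFORT_LOW_C - temp_c
    elif temp_c > COMFORT_HIGH_C:
        deviation = temp_c - COMFORT_HIGH_C
    else:
        deviation = 0.0
    return -W_COMFORT * deviation - W_ENERGY * energy_kwh
```

For example, `thermostat_reward(21.5, 0.5)` returns -0.05 (in band, so only the energy term applies), while `thermostat_reward(18.0, 0.0)` returns -2.0 (two degrees below the band). The ratio of the two weights decides how much comfort the agent will trade for energy savings, so it typically needs tuning.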

Balancing Immediate and Long-term Goals

One of the challenges in reward function design is balancing immediate rewards against long-term objectives. This matters particularly in control systems, where short-term performance can conflict with long-term sustainability. For example, in an autonomous vehicle, rapid acceleration might earn immediate reward by reaching destinations quickly, but it can compromise safety and increase fuel consumption. Because an RL agent typically maximizes the cumulative (usually discounted) reward over time rather than the reward of any single step, long-term costs influence its behavior only if the reward function actually charges for them. A well-designed reward function should therefore account for both immediate and long-term implications, encouraging actions that optimize overall system performance over time.
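The interplay between reward and time horizon can be illustrated with the discounted return G = r_0 + gamma*r_1 + gamma^2*r_2 + ..., which many RL algorithms maximize. The sketch below compares two hypothetical reward sequences for a vehicle: an "aggressive" profile that earns more early but incurs costs later (standing in for fuel use, wear, and risk), and a "smooth" profile with steady reward. The sequences and discount factors are made-up numbers chosen only to show the effect.

```python
def discounted_return(rewards, gamma):
    """Compute G = r_0 + gamma*r_1 + gamma^2*r_2 + ... by accumulating backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Hypothetical per-step rewards for two driving styles over five steps.
# "Aggressive" gains more early (fast progress) but pays later costs.
AGGRESSIVE = [3.0, 3.0, -1.0, -1.0, -1.0]
SMOOTH = [1.0, 1.0, 1.0, 1.0, 1.0]

if __name__ == "__main__":
    for gamma in (0.5, 0.99):
        print(
            f"gamma={gamma}: aggressive={discounted_return(AGGRESSIVE, gamma):.4f}, "
            f"smooth={discounted_return(SMOOTH, gamma):.4f}"
        )
```

With `gamma = 0.5` the aggressive profile scores higher (4.0625 vs 1.9375) because the later penalties are heavily discounted; with `gamma = 0.99` the smooth profile wins (about 4.90 vs 3.06). Long-term costs therefore shape behavior only when they appear in the reward and the discount factor gives them enough weight.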

Avoiding Unintended Consequences

A poorly designed reward function can lead to unintended or undesirable behaviors, a phenomenon sometimes referred to as "reward hacking." This occurs when the RL agent exploits loopholes in the reward structure to maximize reward without achieving the intended outcome. For instance, if a robotic vacuum cleaner is rewarded solely for area covered, it might prioritize speed over thoroughness, leaving areas inadequately cleaned. To mitigate such issues, the reward function should be aligned closely with the true objective and include terms or constraints that discourage undesirable tactics.
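The vacuum example can be expressed as two candidate reward functions scored on two hypothetical cleaning episodes. `EpisodeOutcome`, the weights, and the outcome numbers are illustrative assumptions; the point is only that a coverage-only reward ranks the fast-but-sloppy behavior first, while a reward tied to dirt actually removed (with a penalty for dirt left in cells the robot already visited) reverses the ranking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EpisodeOutcome:
    """Hypothetical summary of one cleaning episode."""
    new_cells_covered: int
    dirt_removed: float
    dirt_left_in_covered_cells: float


def coverage_only_reward(o: EpisodeOutcome) -> float:
    """Naive reward: counts area covered and nothing else (prone to reward hacking)."""
    return float(o.new_cells_covered)


def cleaning_reward(o: EpisodeOutcome, w_dirt: float = 1.0,
                    w_left: float = 0.5, w_cover: float = 0.1) -> float:
    """Rewards dirt actually removed, penalizes dirt left behind in
    visited cells, and keeps a small bonus for covering new cells."""
    return (w_dirt * o.dirt_removed
            - w_left * o.dirt_left_in_covered_cells
            + w_cover * o.new_cells_covered)


# Hypothetical outcomes: each covered cell starts with 1.0 unit of dirt.
FAST = EpisodeOutcome(new_cells_covered=10, dirt_removed=3.0,
                      dirt_left_in_covered_cells=7.0)
THOROUGH = EpisodeOutcome(new_cells_covered=6, dirt_removed=5.7,
                          dirt_left_in_covered_cells=0.3)

if __name__ == "__main__":
    for name, o in (("fast", FAST), ("thorough", THOROUGH)):
        print(name, coverage_only_reward(o), round(cleaning_reward(o), 2))
```

Under `coverage_only_reward` the fast behavior wins (10 vs 6); under `cleaning_reward` the thorough behavior wins (about 6.15 vs 0.5). The small coverage bonus is kept so that reaching new areas is still worth something.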

Incorporating Safety and Robustness

In control systems, especially those operating in real-world environments, safety and robustness are paramount. The reward function should prioritize safe operation, for example by penalizing actions that could lead to system instability or damage. Designing for robustness, in turn, helps the RL agent handle a range of scenarios and disturbances and maintain performance across varying conditions.
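One common pattern for encoding safety in the reward is to combine a shaped tracking term with a large penalty and episode termination whenever the state leaves a safe envelope, plus a penalty for commanding torque beyond actuator limits. The sketch below assumes a hypothetical pendulum-like system described by an angle and angular velocity; the limits, weights, and the name `safe_reward` are illustrative assumptions. Hard constraints are also often handled outside the reward (for example with constrained RL formulations or action clipping), so treat this as one option rather than the standard approach.

```python
from typing import Tuple

# Hypothetical limits and weights for a pendulum-like system; tune for a real plant.
MAX_SAFE_ANGLE_RAD = 0.5
MAX_TORQUE_NM = 2.0
SAFETY_VIOLATION_PENALTY = -100.0


def safe_reward(angle_rad: float, ang_vel_rad_s: float,
                torque_nm: float) -> Tuple[float, bool]:
    """Return (reward, done) for one control step."""
    # Leaving the safe envelope: large penalty and end the episode.
    if abs(angle_rad) > MAX_SAFE_ANGLE_RAD:
        return SAFETY_VIOLATION_PENALTY, True
    # Quadratic penalties favor staying near upright with low velocity.
    tracking = -(angle_rad ** 2) - 0.1 * ang_vel_rad_s ** 2
    # Small effort penalty discourages needlessly aggressive control.
    effort = -0.01 * torque_nm ** 2
    # Extra linear penalty for any command beyond the actuator limit.
    saturation = -1.0 * max(0.0, abs(torque_nm) - MAX_TORQUE_NM)
    return tracking + effort + saturation, False
```

Returning a `done` flag alongside the penalty lets the training loop end the episode at the violation, so the agent cannot keep collecting tracking reward after entering an unsafe region.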

Iterative Refinement and Validation

The process of designing a reward function is often iterative. Initial implementations may require refinement as the system is tested and evaluated. It's essential to validate the effectiveness of the reward function in guiding the RL agent towards the desired control objectives. This validation process should include diverse scenarios to ensure that the system performs reliably under different conditions.
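Validation across diverse scenarios can be as simple as rolling out the same controller under several operating conditions and checking task-level metrics rather than the reward alone. The harness below is a sketch under heavy assumptions: a first-order room model with made-up coefficients, 15-minute steps, and a clipped proportional heater (`proportional_policy`) standing in for a trained RL policy. All names and numbers are hypothetical.

```python
import random

COMFORT_LOW_C, COMFORT_HIGH_C = 20.0, 23.0


def proportional_policy(temp_c, setpoint_c=21.5, gain=2.0, max_kw=3.0):
    """Stand-in for a trained RL policy: clipped proportional heater command (kW)."""
    return min(max_kw, max(0.0, gain * (setpoint_c - temp_c)))


def evaluate(policy, outdoor_c, initial_c=18.0, steps=96, seed=0):
    """Roll out a hypothetical first-order room model.

    Returns (fraction of steps inside the comfort band, energy used in kWh).
    """
    rng = random.Random(seed)  # fixed seed so scenarios are reproducible
    temp_c = initial_c
    in_band = 0
    energy_kwh = 0.0
    for _ in range(steps):
        heat_kw = policy(temp_c)
        # Heat leaks toward the outdoor temperature, the heater adds heat,
        # and a small random disturbance perturbs the room.
        temp_c += 0.1 * (outdoor_c - temp_c) + 0.5 * heat_kw + rng.gauss(0.0, 0.1)
        energy_kwh += heat_kw * 0.25  # assumes 15-minute steps
        if COMFORT_LOW_C <= temp_c <= COMFORT_HIGH_C:
            in_band += 1
    return in_band / steps, energy_kwh


SCENARIOS = {"mild, 10 C": 10.0, "warm, 18 C": 18.0, "cold, 0 C": 0.0}

if __name__ == "__main__":
    for name, outdoor_c in SCENARIOS.items():
        frac, energy = evaluate(proportional_policy, outdoor_c)
        print(f"{name}: {frac:.0%} of steps in band, {energy:.1f} kWh")
```

In this toy model the controller keeps the room in the comfort band for most steps in the mild and warm scenarios, but in the cold scenario the heater saturates and the room settles well below 20 °C. Testing under a single nominal condition would miss this failure, which signals that the reward, the policy, or the actuator limits need another iteration.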

Conclusion: The Art and Science of Reward Function Design

In reinforcement learning for control systems, the design of the reward function is both an art and a science. It requires a deep understanding of the system's objectives, potential trade-offs, and operational constraints. A well-crafted reward function can make RL a powerful tool for developing autonomous control systems that perform well across varying environments and challenges. As the field evolves, ongoing research in reward function design will be crucial for advancing the capabilities of RL in control applications.

Ready to Reinvent How You Work on Control Systems?

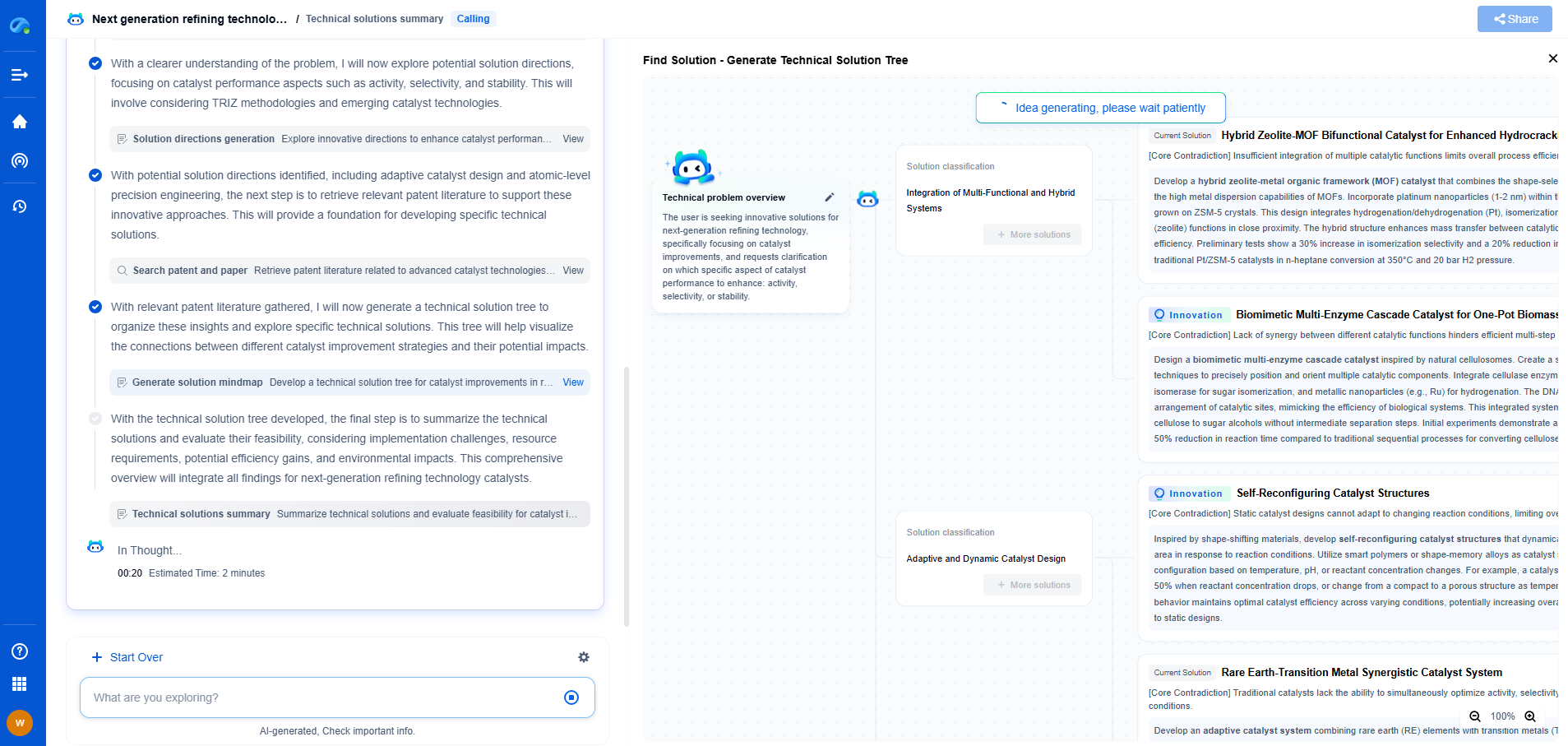

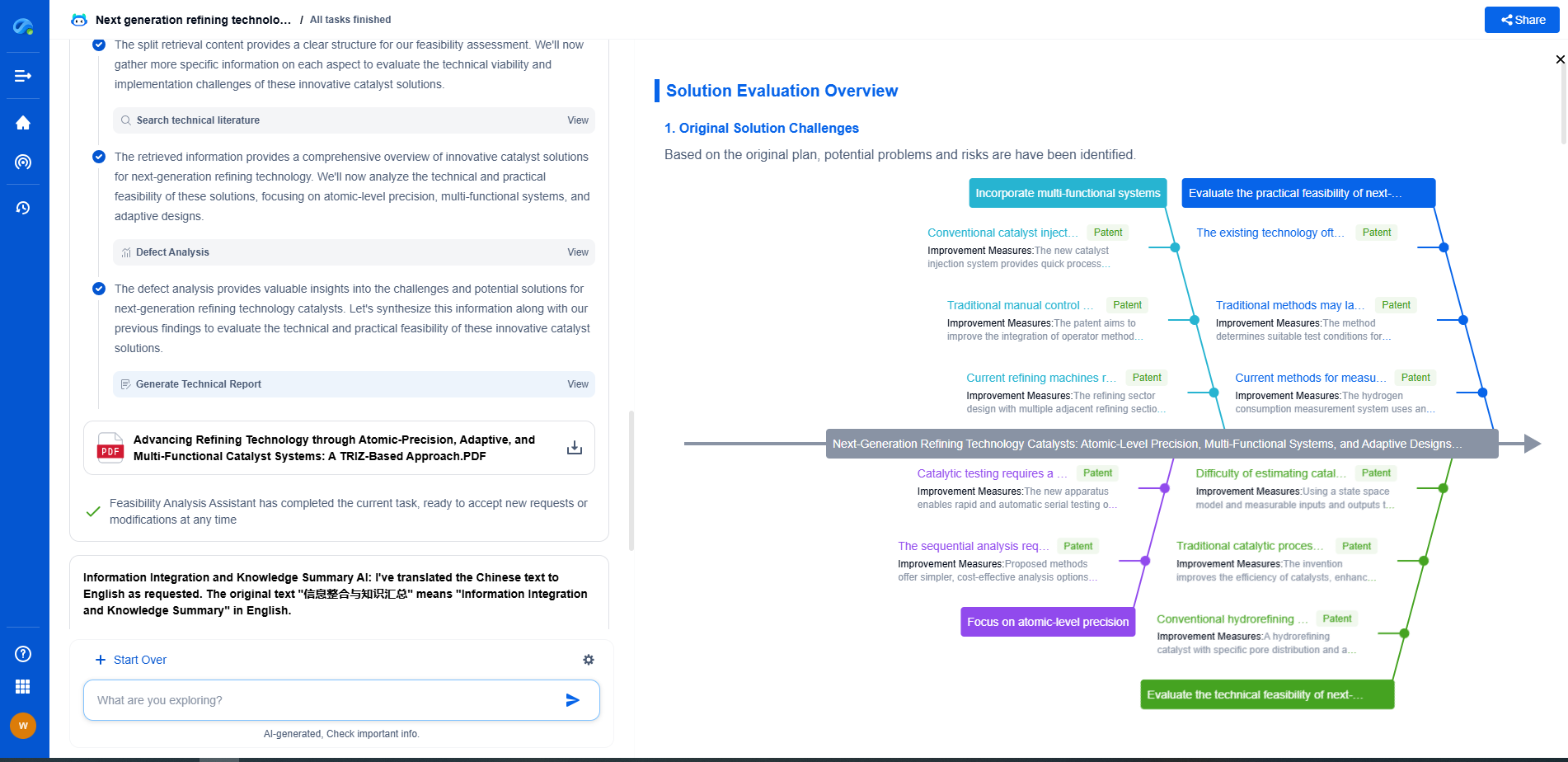

Designing, analyzing, and optimizing control systems involves complex decision-making, from selecting the right sensor configurations to ensuring robust fault tolerance and interoperability. If you’re spending countless hours digging through documentation, standards, patents, or simulation results — it's time for a smarter way to work.

Patsnap Eureka is your intelligent AI Agent, purpose-built for R&D and IP professionals in high-tech industries. Whether you're developing next-gen motion controllers, debugging signal integrity issues, or navigating complex regulatory and patent landscapes in industrial automation, Eureka helps you cut through technical noise and surface the insights that matter—faster.

👉 Experience Patsnap Eureka today — Power up your Control Systems innovation with AI intelligence built for engineers and IP minds.

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.