Reinforcement Learning vs PID Control for Voltage Regulation: A Comparison

June 26, 2025

Voltage regulation is a core task in electrical engineering: it keeps the voltage supplied to a device stable as loads and source conditions change. Traditional methods like Proportional-Integral-Derivative (PID) control have been used for decades, but recent advances in artificial intelligence have introduced reinforcement learning (RL) as a potential alternative. This post compares the two methods, covering how each works, where they differ, and where each fits in voltage regulation.

Understanding PID Control

PID control is a classical control strategy used widely in industrial applications. The controller output is the sum of three terms: proportional, integral, and derivative. Each term responds to a different aspect of the error between the desired and the measured voltage.

- Proportional Control: This component reacts proportionally to the current error. If the error increases, the corrective action also increases proportionally.

- Integral Control: This addresses the accumulation of past errors. By integrating the error over time, it helps to eliminate steady-state errors.

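- Derivative Control: This anticipates where the error is heading by responding to its rate of change. It adds damping, which reduces overshoot and improves stability. Because differentiation amplifies measurement noise, the derivative term is often low-pass filtered in practice.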
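
For reference, the three terms are commonly written in the textbook parallel form below. The symbols are notation introduced here, not taken from a specific product: $e(t)$ is the error, $V_{\text{ref}}$ the target voltage, $V_{\text{out}}$ the measured voltage, $u(t)$ the controller output, and $K_p$, $K_i$, $K_d$ the three gains.

$$
e(t) = V_{\text{ref}} - V_{\text{out}}(t), \qquad
u(t) = K_p\, e(t) + K_i \int_0^{t} e(\tau)\, d\tau + K_d\, \frac{de(t)}{dt}
$$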

PID controllers are favored for their simplicity, ease of implementation, and the ability to provide quick corrective actions.
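
To make the three terms concrete, here is a minimal discrete-time sketch in Python. It is an illustration under stated assumptions, not a production controller: the `PID` class, the `simulate` helper, the first-order plant, and all numeric values are hypothetical, and practical details such as output limits, anti-windup, and derivative filtering are left out.

```python
from dataclasses import dataclass


@dataclass
class PID:
    kp: float  # proportional gain
    ki: float  # integral gain
    kd: float  # derivative gain
    dt: float  # sample period in seconds
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, setpoint: float, measured: float) -> float:
        error = setpoint - measured
        self.integral += error * self.dt                 # accumulated past error
        derivative = (error - self.prev_error) / self.dt  # rate of change of error
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid: PID, setpoint: float = 5.0, steps: int = 2000, tau: float = 0.05) -> float:
    """Drive a hypothetical first-order plant, tau * dv/dt = u - v, and return the final voltage."""
    v = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, v)
        v += pid.dt * (u - v) / tau  # forward-Euler step of the assumed plant
    return v
```

For example, `simulate(PID(kp=2.0, ki=50.0, kd=0.001, dt=0.001))` runs two simulated seconds and settles close to the 5.0 V setpoint; the integral term is what removes the residual offset that a proportional-only loop would leave on this plant.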

Exploring Reinforcement Learning

Reinforcement Learning is a type of machine learning where an agent learns to make decisions by interacting with an environment. It relies on a system of rewards and penalties to learn optimal policies for decision-making.

In the context of voltage regulation, an RL agent interacts with the power system environment by observing the voltage and choosing control actions that move it toward the desired level. After each action the agent receives a reward based on how close the output voltage is to the target. Over many interactions, algorithms such as Q-learning or deep Q-networks (DQN) let the agent learn a control policy that maximizes cumulative reward. Both of these are value-based methods that select from a discrete set of actions.
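
The interaction loop described above can be sketched with tabular Q-learning on a toy problem. Everything specific here is an assumption made for illustration: the voltage is discretised into 11 hypothetical levels, the target sits at level 5, the agent can lower, hold, or raise the output by one level, the plant is deterministic, and the reward is the negative distance to the target. A real power system would need a far richer state, a plant model or simulator, and safety constraints.

```python
import random

N_LEVELS = 11         # hypothetical discretised voltage levels
TARGET = 5            # index of the setpoint level
ACTIONS = (-1, 0, 1)  # lower, hold, or raise the output by one level


def step(level, action):
    """Toy deterministic plant: move one level, clipped to the valid range."""
    nxt = min(max(level + action, 0), N_LEVELS - 1)
    reward = -abs(nxt - TARGET)  # closer to the target means a higher reward
    return nxt, reward


def train(episodes=2000, steps=20, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_LEVELS)]
    for _ in range(episodes):
        level = rng.randrange(N_LEVELS)  # random starting voltage level
        for _ in range(steps):
            if rng.random() < epsilon:   # explore
                a = rng.randrange(len(ACTIONS))
            else:                        # exploit the current estimate
                a = max(range(len(ACTIONS)), key=lambda i: q[level][i])
            nxt, reward = step(level, ACTIONS[a])
            # Q-learning update toward reward + discounted best next value
            q[level][a] += alpha * (reward + gamma * max(q[nxt]) - q[level][a])
            level = nxt
    return q


def greedy_policy(q):
    """Best action per level according to the learned Q-table."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: row[i])] for row in q]
```

Calling `greedy_policy(train())` returns one action per voltage level. On this toy problem the learned policy raises the output below the target, lowers it above the target, and holds at the target, which is the behaviour a simple hand-written rule would also give. The point is only to show the reward-driven update, not to suggest that RL is needed for a problem this small.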

Key Differences Between PID Control and Reinforcement Learning

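1. Learning and Adaptation: A conventional PID controller uses fixed gains tuned for specific operating conditions, so its behavior does not change unless someone retunes it. In contrast, an RL agent learns its policy from interaction data and, if learning continues after deployment, can keep adapting as conditions vary. A policy that is trained once and then frozen is also fixed, although it may already cover a wider range of conditions than a single set of gains.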

2. Complexity and Implementation: PID controllers are straightforward to implement and require less computational power. Conversely, RL methods involve complex algorithms and significant computational resources, which can be a barrier in systems with limited capacities.

3. Performance and Robustness: PID controllers excel in stable environments where system dynamics do not change frequently. RL models can outperform PID controllers in complex, dynamic environments where fixed gains are a poor fit, provided they have been trained on conditions representative of what they will encounter.

4. Expertise and Tuning: Implementing a PID controller requires domain expertise to properly tune the parameters. Reinforcement learning reduces the need for manual tuning, as the algorithm learns optimal policies autonomously. However, it requires expertise in RL to set up and maintain the learning process.

Applications and Use Cases

In voltage regulation, PID controllers are typically used in applications where system dynamics are predictable and do not change rapidly. Examples include household voltage regulation and simple industrial processes. Their reliability and simplicity make them an excellent choice for such scenarios.

On the other hand, reinforcement learning is better suited for complex systems with high variability, such as smart grids and renewable energy systems. These systems benefit from RL's ability to adapt to changing conditions and optimize performance over time.

Challenges and Considerations

While reinforcement learning offers promising advantages, it also poses several challenges. The training process can be time-consuming and computationally expensive. Moreover, ensuring the stability and safety of the RL model during training is crucial, especially in critical applications like voltage regulation.

For PID controllers, the main challenge lies in tuning the parameters to match the specific needs of the system accurately. Incorrect tuning can lead to poor performance or instability in the control loop.
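
The effect of tuning can be seen in a small simulation. The sketch below is illustrative only: `peak_overshoot` is a hypothetical helper, the plant is an assumed first-order model (tau * dv/dt = u - v), and the gain values are chosen purely to contrast a well-damped loop with a poorly damped one. It uses a PI controller (no derivative term) to keep the example short.

```python
def peak_overshoot(kp, ki, setpoint=5.0, tau=0.05, dt=1e-4, duration=2.0):
    """Return the peak overshoot of a PI loop as a fraction of the setpoint."""
    v = integral = peak = 0.0
    for _ in range(int(duration / dt)):
        error = setpoint - v
        integral += error * dt
        u = kp * error + ki * integral  # PI control law
        v += dt * (u - v) / tau         # forward-Euler step of the assumed plant
        peak = max(peak, v)
    return max(0.0, peak - setpoint) / setpoint


well_tuned = peak_overshoot(kp=2.0, ki=50.0)
badly_tuned = peak_overshoot(kp=0.2, ki=400.0)
print(f"well tuned:  {well_tuned:.1%} overshoot")
print(f"badly tuned: {badly_tuned:.1%} overshoot")
```

On this assumed plant the first gain set overshoots the setpoint only slightly, while the second, with a small proportional gain and a large integral gain, overshoots heavily and rings before settling. The same controller structure gives very different behaviour depending only on the gains.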

Conclusion

Both PID control and reinforcement learning have their place in voltage regulation, each with its strengths and limitations. The choice between them depends heavily on the specific requirements of the application and the operating environment. While PID control offers simplicity and reliability, reinforcement learning brings adaptability and optimization to complex, dynamic systems. As technology continues to evolve, we can expect these methods to complement each other, leading to more efficient and responsive voltage regulation systems.

Stay Ahead in Power Systems Innovation

From intelligent microgrids and energy storage integration to dynamic load balancing and DC-DC converter optimization, the power supply systems domain is rapidly evolving to meet the demands of electrification, decarbonization, and energy resilience.

In such a high-stakes environment, how can your R&D and patent strategy keep up?

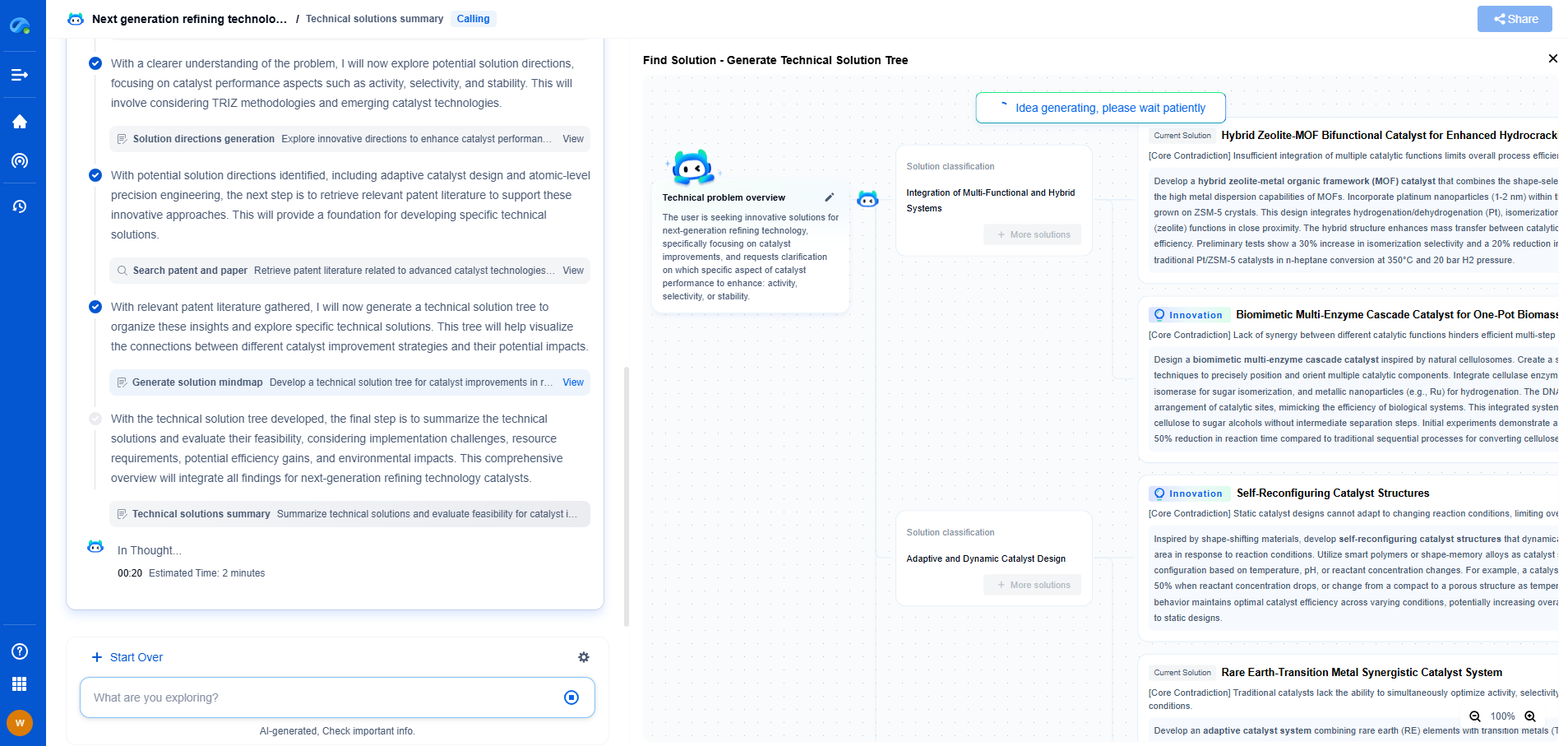

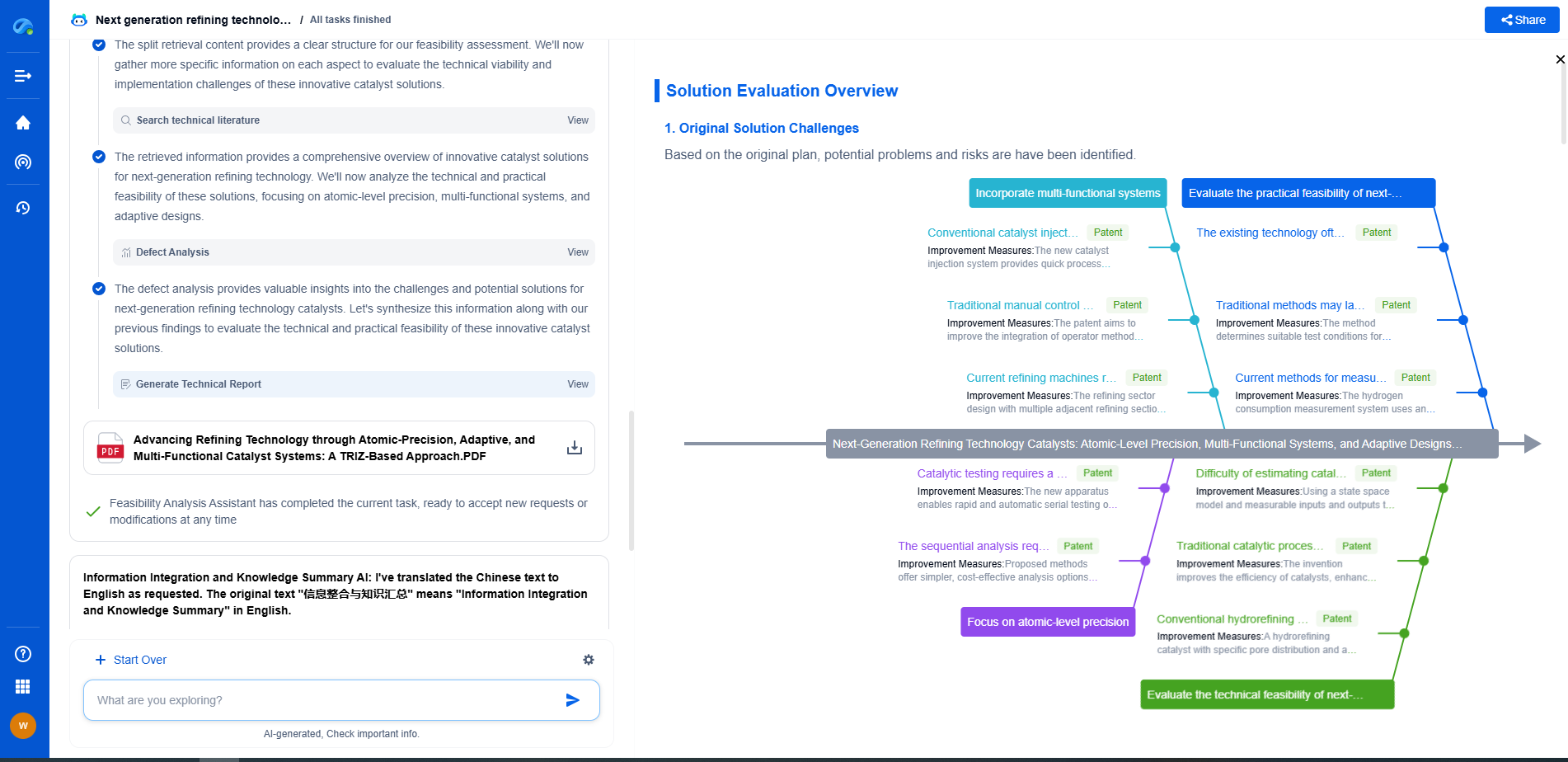

Patsnap Eureka, our intelligent AI assistant built for R&D professionals in high-tech sectors, empowers you with real-time expert-level analysis, technology roadmap exploration, and strategic mapping of core patents—all within a seamless, user-friendly interface.

👉 Experience how Patsnap Eureka can supercharge your workflow in power systems R&D and IP analysis. Request a live demo or start your trial today.

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com