ROS 2 Integration with Intel RealSense: Point Cloud Processing Tutorial

JUN 26, 2025

Integrating ROS 2 with Intel RealSense depth cameras lets a robot perceive its surroundings in 3D. A central use of this integration is point cloud processing, in which each frame becomes a set of 3D points representing the scene in front of the camera. This tutorial walks through setting up ROS 2 with an Intel RealSense camera, capturing point cloud data, and processing it, ending with a simple obstacle detection example.

Setting Up Your Environment

Before starting, make sure your environment is set up. First, install ROS 2. The installation steps depend on your operating system and on the ROS 2 distribution you choose, so follow the official ROS 2 installation guide. Then install the Intel RealSense SDK 2.0 (librealsense), which provides the drivers, libraries, and tools needed to interface with RealSense cameras.

Connecting the Intel RealSense Camera

With your environment ready, connect the camera to your computer via USB, preferably on a USB 3 port, since depth streaming at higher resolutions and frame rates needs the bandwidth. Verify that the system recognizes the camera using the RealSense Viewer (realsense-viewer), which is part of the SDK. The viewer shows the live depth and color streams and lets you adjust camera settings, so you can confirm the camera works before involving ROS 2.

Installing ROS 2 RealSense Packages

To use the camera with ROS 2, install the RealSense ROS 2 wrapper, whose main package is realsense2_camera. The wrapper bridges the camera and ROS 2: it publishes the camera streams as ROS 2 topics that other nodes can subscribe to. You can usually install it as a binary package for your ROS 2 distribution through your system package manager, or build it from source in a ROS 2 workspace.

Launching the RealSense Node

With the packages installed, launch the RealSense node to start publishing camera data to ROS 2 topics. This is typically done with a launch file provided by the realsense2_camera package. The launch file configures the node to publish depth and color images and, most importantly for this tutorial, point clouds. Point cloud publishing may not be enabled by default, so check the launch arguments for your version of the package.
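If you prefer your own launch file over the one shipped with the package, a minimal sketch could look like the following. It assumes the package and executable names `realsense2_camera` and `realsense2_camera_node`, and the parameter name `pointcloud.enable` as used by recent releases of the wrapper; older releases may name it differently, so check the documentation for your installed version. The helper `realsense_parameters` and the node name and namespace are illustrative choices, not part of the wrapper.

```python
def realsense_parameters():
    """Parameters handed to the camera node (names are assumptions, see above)."""
    return {
        "enable_color": True,
        "enable_depth": True,
        "pointcloud.enable": True,  # ask the node to publish point clouds
    }


def generate_launch_description():
    # Imported here so the file can be read and checked without a sourced
    # ROS 2 environment; a normal launch file would import at the top.
    from launch import LaunchDescription
    from launch_ros.actions import Node

    camera = Node(
        package="realsense2_camera",
        executable="realsense2_camera_node",
        name="camera",          # illustrative name
        namespace="camera",     # illustrative namespace
        parameters=[realsense_parameters()],
        output="screen",
    )
    return LaunchDescription([camera])
```

Saved under a hypothetical name such as `camera_pointcloud.launch.py`, this can be started with `ros2 launch ./camera_pointcloud.launch.py`. Running `ros2 topic list` afterwards shows the exact point cloud topic name, which varies between wrapper versions.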

Subscribing to Point Cloud Data

Once the RealSense node is running, subscribe to its point cloud topic to access the 3D data. The messages on this topic are of type sensor_msgs/msg/PointCloud2, which stores the points as a packed byte buffer together with a description of the fields in each point. In ROS 2, you usually write a custom node that subscribes to the topic and processes each incoming message in a callback. Use a ROS 2 client library, such as rclcpp for C++ or rclpy for Python, to create the node and manage the subscription.
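Inside the subscription callback, the packed buffer has to be turned into coordinates before anything else can happen. The sketch below shows one way to do that with only the Python standard library. It assumes `x`, `y`, and `z` are 32-bit floats at byte offsets 0, 4, and 8 of each point, which is a common layout but not guaranteed; in a real node you would read the offsets from `msg.fields` and pass `msg.data`, `msg.point_step`, and `msg.is_bigendian`. The function name `decode_xyz` is hypothetical.

```python
import math
import struct


def decode_xyz(data, point_step, x_off=0, y_off=4, z_off=8, big_endian=False):
    """Return a list of (x, y, z) tuples from a packed PointCloud2-style buffer.

    Assumes x, y and z are float32 fields. Points containing NaN or infinity
    (typically pixels without valid depth) are dropped.
    """
    fmt = (">" if big_endian else "<") + "f"
    points = []
    for base in range(0, len(data) - point_step + 1, point_step):
        x = struct.unpack_from(fmt, data, base + x_off)[0]
        y = struct.unpack_from(fmt, data, base + y_off)[0]
        z = struct.unpack_from(fmt, data, base + z_off)[0]
        if math.isfinite(x) and math.isfinite(y) and math.isfinite(z):
            points.append((x, y, z))
    return points


# Two made-up 16-byte points: x, y, z plus 4 bytes standing in for color.
sample = (struct.pack("<fff4x", 0.5, 0.0, 1.25)
          + struct.pack("<fff4x", float("nan"), 0.0, 2.0))
print(decode_xyz(sample, point_step=16))  # [(0.5, 0.0, 1.25)]
```

A per-point Python loop is slow for full-resolution clouds. ROS 2 also provides helpers for reading PointCloud2 messages in the `sensor_msgs_py` package, and a NumPy-based approach is usually preferable when throughput matters.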

Processing Point Cloud Data

Processing point cloud data typically involves filtering, segmentation, and feature extraction. A common library for these tasks is the Point Cloud Library (PCL), a C++ library that provides ready-made algorithms for each of them. Integrate PCL with your ROS 2 node to remove noise, downsample the cloud, segment objects, or construct 3D models; converting between ROS messages and PCL types is usually handled by the pcl_conversions package.
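To make the filtering step concrete, here is a plain-Python stand-in for the idea behind PCL's VoxelGrid filter: the space is divided into cubes of a chosen edge length, and all points inside one cube are replaced by their centroid. This is not PCL code and not how you would call PCL; the function name `voxel_downsample` and the parameter `leaf_size` are chosen for illustration.

```python
import math
from collections import defaultdict


def voxel_downsample(points, leaf_size):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    if leaf_size <= 0:
        raise ValueError("leaf_size must be positive")
    buckets = defaultdict(list)
    for x, y, z in points:
        # Integer voxel index along each axis.
        key = (math.floor(x / leaf_size),
               math.floor(y / leaf_size),
               math.floor(z / leaf_size))
        buckets[key].append((x, y, z))
    reduced = []
    for members in buckets.values():
        n = len(members)
        reduced.append(tuple(sum(p[i] for p in members) / n for i in range(3)))
    return reduced


cloud = [(0.25, 0.25, 1.0), (0.75, 0.25, 1.0), (2.5, 0.0, 1.0)]
print(voxel_downsample(cloud, leaf_size=1.0))
# [(0.5, 0.25, 1.0), (2.5, 0.0, 1.0)]
```

Downsampling early in the pipeline reduces the number of points that later, more expensive steps such as clustering have to handle. A larger leaf size gives a smaller cloud at the cost of spatial detail.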

Example Application: Obstacle Detection

As an example application, consider a simple obstacle detection system. By processing the point cloud, you can identify objects within a certain distance of the camera and generate alerts or commands so that a robot avoids collisions. A typical PCL pipeline for this filters out distant points (for example with a pass-through filter), clusters the remaining points (for example with Euclidean cluster extraction), and reports the position of each cluster as a potential obstacle.
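The three stages of such a pipeline can be sketched in plain Python as follows. This is an illustration of the technique rather than PCL itself: it uses a brute-force neighbor search where PCL would use a k-d tree, and the function name `detect_obstacles` and the default thresholds are assumptions to be tuned for your robot. It requires Python 3.8 or later for `math.dist`.

```python
import math
from collections import deque

ORIGIN = (0.0, 0.0, 0.0)


def detect_obstacles(points, max_range=1.5, cluster_tol=0.2, min_points=3):
    """Return (centroid, distance) for each cluster of points within max_range."""
    # 1. Keep only points closer than max_range to the camera origin.
    near = [p for p in points if math.dist(p, ORIGIN) <= max_range]

    # 2. Euclidean clustering: grow each cluster through neighbors that lie
    #    within cluster_tol of a point already in the cluster.
    unvisited = set(range(len(near)))
    obstacles = []
    while unvisited:
        seed = unvisited.pop()
        queue, members = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            close = [j for j in unvisited
                     if math.dist(near[i], near[j]) <= cluster_tol]
            for j in close:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        # 3. Ignore tiny clusters (likely noise) and report the rest.
        if len(members) >= min_points:
            n = len(members)
            centroid = tuple(sum(near[k][a] for k in members) / n
                             for a in range(3))
            obstacles.append((centroid, math.dist(centroid, ORIGIN)))
    # Nearest obstacle first.
    return sorted(obstacles, key=lambda o: o[1])


cloud = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0), (0.0, 0.1, 1.0),  # small object
         (0.0, 0.0, 5.0),                                     # too far away
         (1.0, 0.0, 0.5)]                                     # isolated point
for centroid, distance in detect_obstacles(cloud):
    print(f"obstacle at {distance:.2f} m")
```

With the made-up cloud above, only the three neighboring points form an obstacle, roughly 1 m from the camera; the distant point is removed by the range filter and the isolated point is rejected as noise. In a ROS 2 node, the returned distances could drive a warning or a stop command.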

Troubleshooting and Optimization

While working with point cloud data, you might encounter issues with data quality, processing speed, or system stability. Troubleshooting usually means adjusting camera settings (for example, lowering the resolution or frame rate), optimizing the processing algorithms (for example, downsampling the cloud before heavier steps), or tuning ROS 2 node parameters, including checking that publisher and subscriber quality-of-service settings are compatible. Refer to the documentation for the RealSense SDK, the ROS 2 wrapper, and PCL for further tips on improving performance.
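When processing cannot keep up with the camera's frame rate, one simple option is to skip frames in the subscriber. The sketch below shows a small time-based throttle; the class name `FrameThrottle` is hypothetical, and the timestamps are plain seconds that in a real node would come from the message header or the node clock.

```python
class FrameThrottle:
    """Accept at most one frame per `min_interval` seconds."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._last = None  # time of the last accepted frame

    def should_process(self, stamp):
        """Return True if the frame at time `stamp` (seconds) should be processed."""
        if self._last is None or stamp - self._last >= self.min_interval:
            self._last = stamp
            return True
        return False


throttle = FrameThrottle(min_interval=0.2)      # at most about 5 frames per second
stamps = [0.0, 0.03, 0.06, 0.25, 0.28, 0.5]     # made-up arrival times in seconds
print([s for s in stamps if throttle.should_process(s)])  # [0.0, 0.25, 0.5]
```

Dropping frames this way trades latency of detection for lower CPU load. If the camera itself can be configured to a lower frame rate, that is usually the cheaper fix because it also reduces USB and serialization overhead.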

Conclusion

Integrating ROS 2 with Intel RealSense cameras for point cloud processing is an effective way to extend the perception capabilities of a robotic system. By following this tutorial, you should have a foundation for capturing and processing 3D data, which you can apply to a wide range of tasks in robotics, automation, and computer vision, from obstacle avoidance to navigation and manipulation.

Ready to Redefine Your Robotics R&D Workflow?

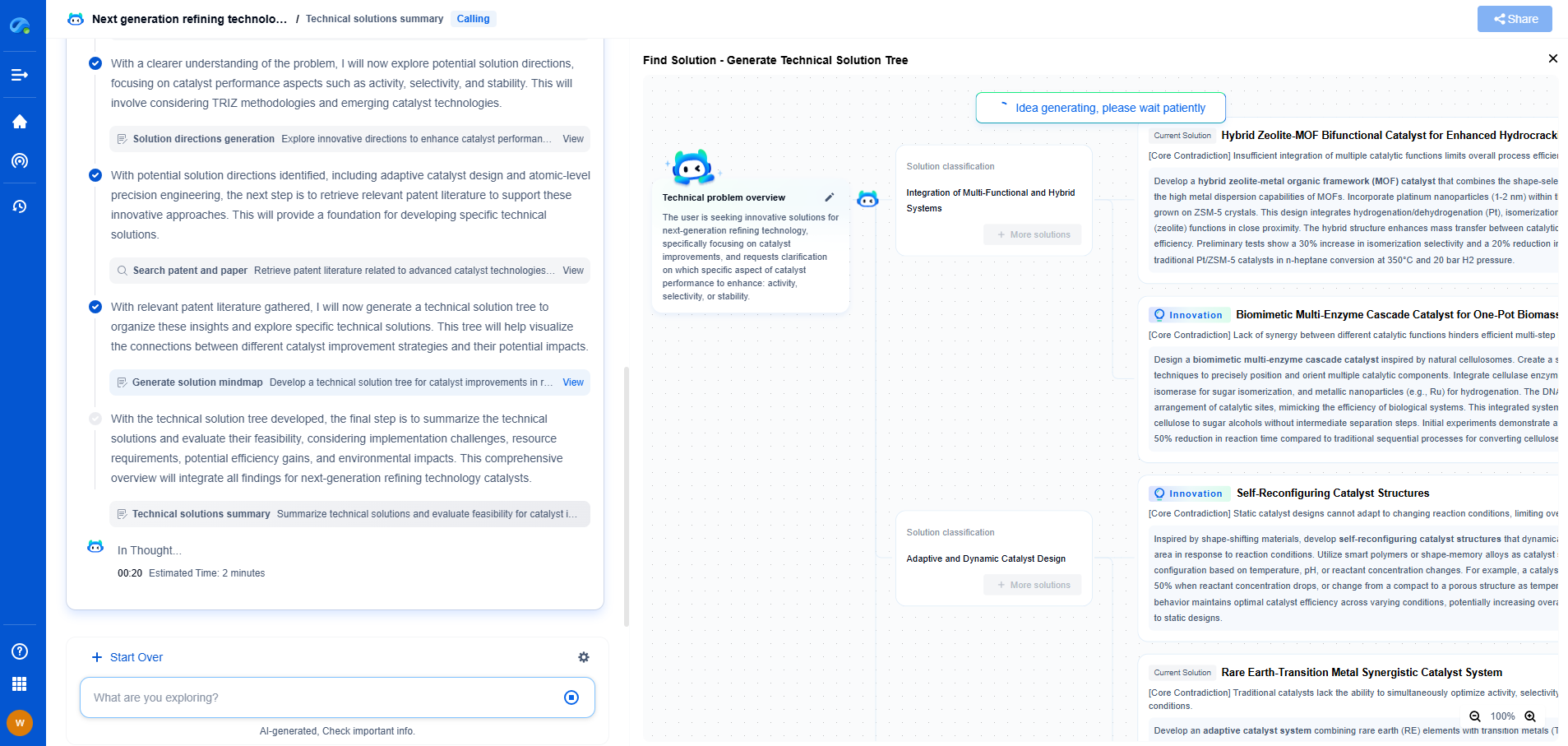

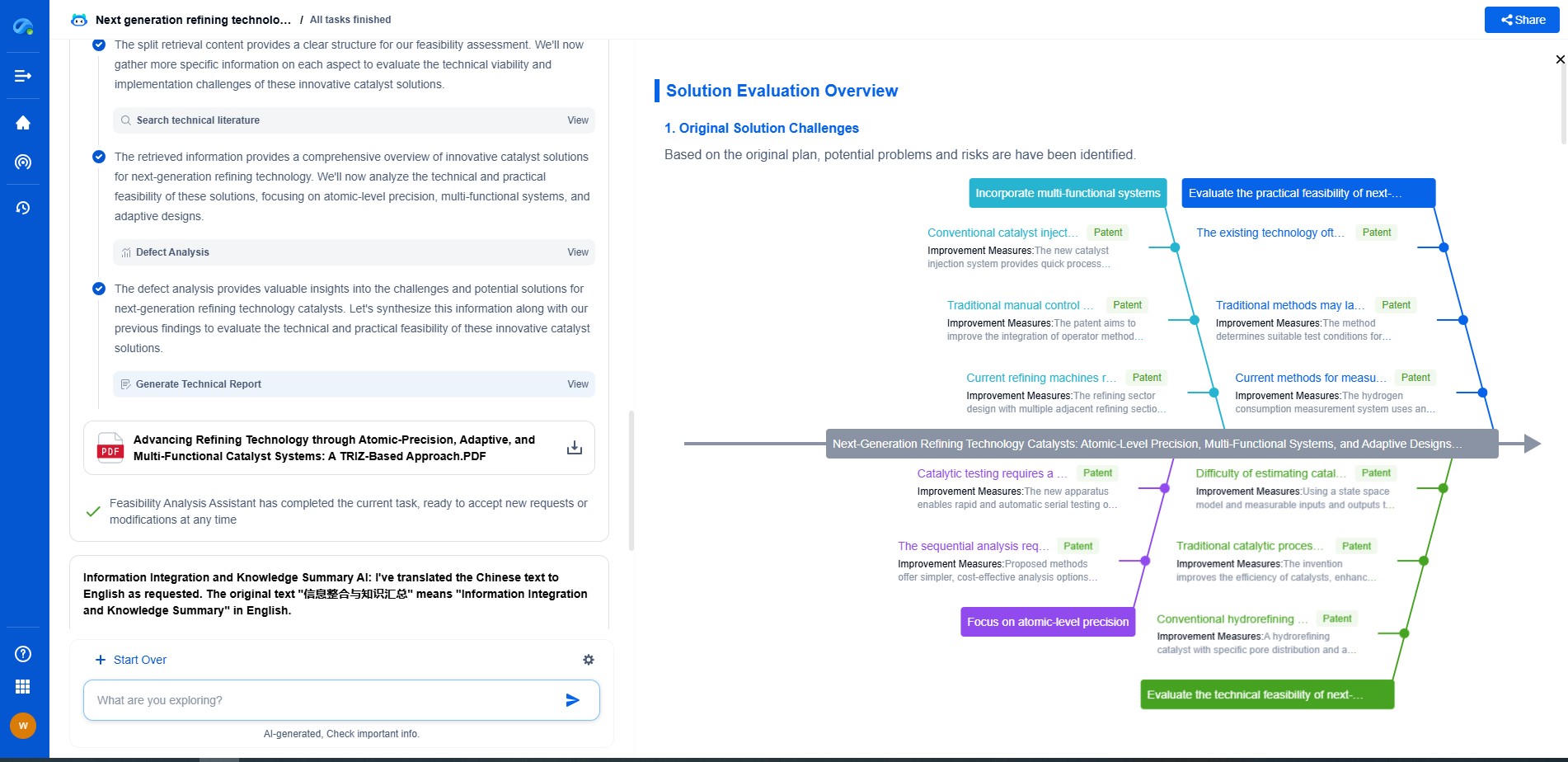

Whether you're designing next-generation robotic arms, optimizing manipulator kinematics, or mining patent data for innovation insights, Patsnap Eureka, our cutting-edge AI assistant, is built for R&D and IP professionals in high-tech industries, is built to accelerate every step of your journey.

No more getting buried in thousands of documents or wasting time on repetitive technical analysis. Our AI Agent helps R&D and IP teams in high-tech enterprises save hundreds of hours, reduce risk of oversight, and move from concept to prototype faster than ever before.

👉 Experience how AI can revolutionize your robotics innovation cycle. Explore Patsnap Eureka today and see the difference.

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com