Sensor Fusion for Robots: Combining LIDAR, IMU, and Camera Data

JUN 26, 2025

In robotics, building an accurate understanding of the environment is crucial for tasks ranging from navigation to manipulation. Sensor fusion is the process of integrating data from multiple sensors to produce more reliable and comprehensive information than any single sensor could provide alone. Three sensors commonly used in robotic systems are LIDAR, inertial measurement units (IMUs), and cameras. Each has its own strengths and weaknesses, and combining their data can significantly enhance a robot's perception capabilities.

Understanding LIDAR, IMU, and Camera Data

LIDAR, or Light Detection and Ranging, uses laser pulses to measure distances. It produces dense, accurate range measurements, typically as point clouds, and is particularly useful for creating 3D models of environments. However, LIDAR can struggle with reflective or transparent surfaces and does not provide color information.

IMUs, or Inertial Measurement Units, consist of accelerometers and gyroscopes that measure linear acceleration and angular velocity. IMUs are indispensable for estimating a robot's orientation and motion, particularly when visual or GPS data is unreliable. However, because orientation and position are obtained by integrating these measurements, small sensor biases and noise accumulate into drift over time, which leads to inaccuracies if not corrected by another sensor.

Cameras capture rich visual information, including color and texture, and are essential for tasks that require object recognition and scene understanding. Nevertheless, a single camera does not measure depth directly; depth has to come from a stereo configuration or from other sensors.

The Benefits of Sensor Fusion

Combining these sensors in a sensor fusion framework allows robots to capitalize on the strengths of each while mitigating their weaknesses. For example, LIDAR can provide accurate depth information, IMUs can offer high-rate motion data that is reliable over short intervals, and cameras can contribute detailed visual context. This fusion results in more robust situational awareness, which is vital for complex tasks such as autonomous navigation and obstacle avoidance.

Techniques for Sensor Fusion

Several techniques can be employed for sensor fusion, each with its own set of advantages and complexities. One common method is the Kalman Filter, which provides an efficient recursive solution to the linear quadratic estimation problem. It assumes linear models with Gaussian noise; variants such as the Extended Kalman Filter relax the linearity assumption. It is particularly effective in fusing IMU data with other sensors to reduce drift.
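To make the drift-correction idea concrete, here is a minimal sketch of a scalar Kalman filter that tracks a single heading angle. It is an illustration under stated assumptions, not a production design: the gyro bias, the noise levels, and the "absolute heading" measurement (which might come from LIDAR scan matching or a camera) are all hypothetical values chosen for the demo, and the function names are made up for this example.

```python
import random


def kalman_step(angle, variance, gyro_rate, dt, measured_angle, process_var, meas_var):
    """One predict/update cycle of a scalar Kalman filter for a heading angle."""
    # Predict: integrate the gyro rate; uncertainty grows by the process noise.
    angle_pred = angle + gyro_rate * dt
    var_pred = variance + process_var
    # Update: blend in the absolute measurement, weighted by the Kalman gain.
    gain = var_pred / (var_pred + meas_var)
    angle_new = angle_pred + gain * (measured_angle - angle_pred)
    var_new = (1.0 - gain) * var_pred
    return angle_new, var_new


def simulate(steps=500, dt=0.01, true_rate=0.5, gyro_bias=0.05, seed=0):
    """Compare pure gyro integration with the filtered estimate on synthetic data."""
    rng = random.Random(seed)
    true_angle = 0.0
    integrated = 0.0     # dead reckoning from the biased gyro alone
    est, var = 0.0, 1.0  # filter estimate and its variance
    for _ in range(steps):
        true_angle += true_rate * dt
        # Hypothetical sensors: a biased, noisy gyro and a noisy absolute heading.
        gyro = true_rate + gyro_bias + rng.gauss(0.0, 0.02)
        absolute = true_angle + rng.gauss(0.0, 0.05)
        integrated += gyro * dt
        est, var = kalman_step(est, var, gyro, dt, absolute, 1e-5, 0.05 ** 2)
    return true_angle, integrated, est


if __name__ == "__main__":
    truth, gyro_only, fused = simulate()
    print(f"truth={truth:.3f}  gyro only={gyro_only:.3f}  fused={fused:.3f}")
```

In this toy setup the gyro-only estimate drifts steadily because the bias is integrated at every step, while the fused estimate stays close to the true angle. A real system would track a multi-dimensional state and would usually estimate the bias explicitly.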

Another approach is the use of probabilistic methods like particle filters, which can handle non-linear models and non-Gaussian noise. This makes them suitable for integrating LIDAR and camera data, especially in dynamic environments.
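A bootstrap particle filter can be sketched in a few lines. The example below is a simplified illustration, assuming a robot that moves along a line and measures its range to a single landmark (a stand-in for a LIDAR return). The landmark position, noise levels, and function names are hypothetical. The range model is nonlinear in the robot's position, which is the kind of case a plain Kalman filter does not handle directly.

```python
import math
import random

LANDMARK_X, LANDMARK_Y = 5.0, 2.0  # hypothetical landmark position


def expected_range(x):
    """Nonlinear measurement model: range from position x on the line to the landmark."""
    return math.hypot(x - LANDMARK_X, LANDMARK_Y)


def particle_filter_step(particles, control, measured_range, rng,
                         motion_std=0.05, meas_std=0.1):
    """One predict / weight / resample cycle of a bootstrap particle filter."""
    # Predict: move every particle by the commanded step plus motion noise.
    moved = [p + control + rng.gauss(0.0, motion_std) for p in particles]
    # Weight: Gaussian likelihood of the measured range given each particle.
    weights = [
        math.exp(-0.5 * ((measured_range - expected_range(p)) / meas_std) ** 2)
        for p in moved
    ]
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)  # every particle implausible: fall back to uniform
    # Resample: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def run_demo(steps=20, n_particles=1000, seed=1):
    """Localize a robot that starts at an unknown position and moves 0.1 per step."""
    rng = random.Random(seed)
    particles = [rng.uniform(0.0, 10.0) for _ in range(n_particles)]
    true_x = 1.0
    for _ in range(steps):
        true_x += 0.1
        z = expected_range(true_x) + rng.gauss(0.0, 0.1)
        particles = particle_filter_step(particles, 0.1, z, rng)
    estimate = sum(particles) / len(particles)
    return true_x, estimate


if __name__ == "__main__":
    truth, estimate = run_demo()
    print(f"true position={truth:.2f}  estimate={estimate:.2f}")
```

A single range reading is ambiguous here, since two positions on either side of the landmark give the same range. The particle set can represent both hypotheses at once, and the wrong one dies out as the robot moves and new measurements arrive.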

Machine learning techniques, particularly deep learning, have also been increasingly applied to sensor fusion, allowing robots to learn optimal fusion strategies from data. This can lead to improved accuracy and adaptability in diverse scenarios.

Challenges in Sensor Fusion

Despite its benefits, sensor fusion is not without challenges. One major issue is the synchronization of data from different sensors, which may operate at different frequencies and with varying latencies. Ensuring that data is accurately aligned in time is critical for effective fusion.
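One simple way to align streams that run at different rates is to interpolate the faster signal at the timestamps of the slower one. The sketch below assumes that all timestamps are already expressed on a common clock in seconds and that the IMU signal is a scalar; the function names and sample rates are hypothetical. It does not address clock offsets or transport latency, which need separate calibration.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a sampled scalar signal at time t.

    Returns None if t lies outside the sampled range, so the caller can
    drop frames that have no surrounding samples instead of extrapolating.
    """
    if not timestamps or t < timestamps[0] or t > timestamps[-1]:
        return None
    i = bisect_left(timestamps, t)
    if timestamps[i] == t:
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    w = (t - t0) / (t1 - t0)
    return values[i - 1] + w * (values[i] - values[i - 1])


def align(imu_times, imu_values, camera_times):
    """Pair each camera timestamp with the IMU value interpolated at that instant."""
    return [(t, interpolate_at(imu_times, imu_values, t)) for t in camera_times]


if __name__ == "__main__":
    imu_times = [0.00, 0.01, 0.02, 0.03]   # 100 Hz IMU samples (seconds)
    imu_values = [0.0, 1.0, 2.0, 3.0]      # e.g. angular rate about one axis
    camera_times = [0.005, 0.025, 0.05]    # camera frames; the last is out of range
    for t, value in align(imu_times, imu_values, camera_times):
        print(t, value)
```

Returning None for out-of-range timestamps is a deliberate choice in this sketch: extrapolating IMU data past the last sample tends to amplify noise, so it is usually safer to wait for the next sample or skip the frame.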

Another challenge is dealing with the different noise characteristics of each sensor. LIDAR data might be affected by environmental factors such as rain or fog, while camera data can be influenced by lighting conditions. Developing algorithms that can adaptively handle such variations is essential for reliable sensor fusion.
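A basic way to account for differing noise levels is inverse-variance weighting, where each sensor's estimate counts in proportion to how much it is trusted. The sketch below assumes independent, unbiased measurements of the same quantity with known variances; the numbers and the function name are hypothetical. Adapting to conditions then amounts to raising a sensor's variance when it is known to be degraded, for example the LIDAR variance in fog.

```python
def fuse_inverse_variance(estimates):
    """Fuse independent estimates of one quantity.

    estimates: list of (value, variance) pairs with variance > 0.
    Returns the fused value and its variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # Clear conditions: LIDAR range is trusted far more than camera depth.
    print(fuse_inverse_variance([(10.0, 0.01), (10.4, 0.09)]))
    # Fog: LIDAR variance is inflated, so the camera gets equal weight.
    print(fuse_inverse_variance([(10.0, 0.09), (10.4, 0.09)]))
```

With the clear-weather variances the fused range stays close to the LIDAR reading; with the inflated variance it moves to the midpoint of the two readings. The fused variance is always smaller than either input variance, which is the formal sense in which fusion improves on a single sensor.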

Future Directions in Sensor Fusion

The future of sensor fusion in robotics is promising, with advancements in both hardware and software. As sensors become more affordable and powerful, and as computational capabilities increase, robots will be able to process and integrate even more data in real time. This will pave the way for more autonomous and intelligent systems capable of operating in increasingly complex environments.

Moreover, the integration of artificial intelligence into sensor fusion processes is expected to advance, enabling robots to make better contextual decisions and improve their learning and adaptation over time.

Conclusion

Sensor fusion is a key enabler for advanced robotic systems, allowing them to perceive and interact with the world more effectively. By combining LIDAR, IMU, and camera data, robots can overcome the limitations of individual sensors and perform tasks with greater precision and reliability. As research and development in this field continue, we can expect to see even more sophisticated and capable robotic systems in the future.

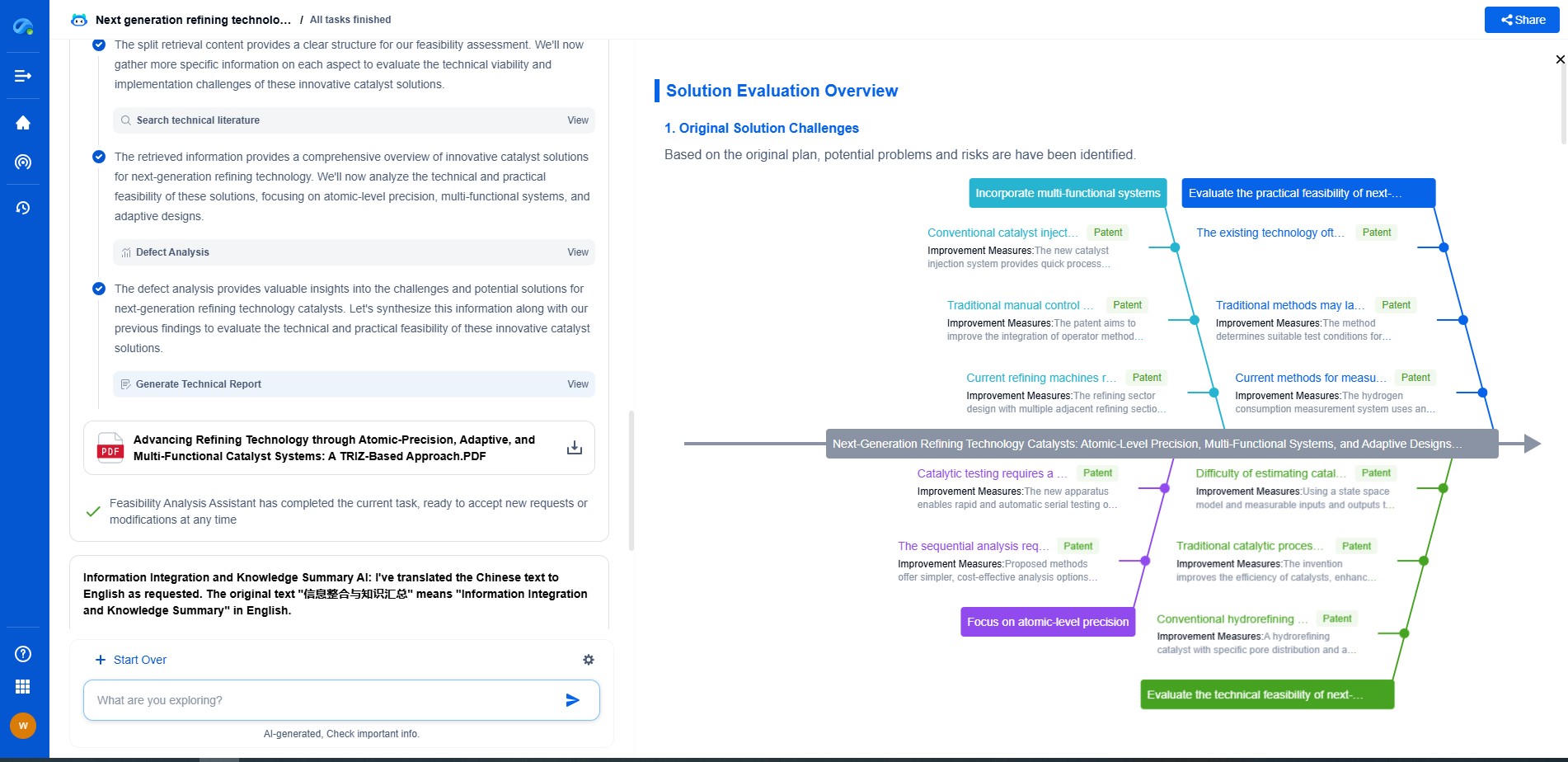
