TCP Congestion Control Algorithms: From Tahoe to BBR

JUL 14, 2025

Transmission Control Protocol (TCP) provides reliable, ordered communication between hosts. One of its hardest problems is congestion control: keeping senders from pushing more data into the network than it can carry, which leads to queue buildup, packet loss, and reduced performance. Over the years, several congestion control algorithms have been developed to address this issue. In this blog post, we'll explore how these algorithms evolved, starting with Tahoe and moving towards the more modern BBR.

The Genesis of TCP Congestion Control: Tahoe

TCP Tahoe was one of the first significant attempts to tackle congestion control. Developed in the late 1980s, it introduced several key concepts that laid the foundation for future algorithms. Tahoe combined three mechanisms: slow start, congestion avoidance, and fast retransmit.

During slow start, the congestion window (cwnd) grows exponentially, roughly doubling every round-trip time, so the sender finds the available capacity quickly. Once cwnd reaches the slow start threshold (ssthresh), the algorithm switches to congestion avoidance, where cwnd grows linearly, by about one segment per round-trip time. When three duplicate ACKs signal a lost packet, fast retransmit resends it immediately instead of waiting for the retransmission timer to expire. Tahoe then sets ssthresh to half the current window, drops cwnd to one segment, and restarts slow start. Despite its innovations, Tahoe treats every loss as a sign of congestion and cannot distinguish it from loss due to other factors, such as corruption on a noisy link, which limited its effectiveness in some scenarios.
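
The window behavior described above can be sketched as a small simulation. This is a simplified model, not a real TCP stack: it counts cwnd in whole segments, advances one round trip per step, and the function name `tahoe_trace` and its parameters are made up for illustration.

```python
def tahoe_trace(rounds, ssthresh, loss_rounds):
    """Return cwnd (in segments) at the start of each round trip.

    loss_rounds is the set of round indices in which a loss is detected.
    """
    cwnd = 1
    trace = []
    for r in range(rounds):
        trace.append(cwnd)
        if r in loss_rounds:
            # Tahoe's reaction to loss: remember half the window,
            # then restart slow start from one segment.
            ssthresh = max(cwnd // 2, 2)
            cwnd = 1
        elif cwnd < ssthresh:
            # Slow start: double per round trip, capped at ssthresh.
            cwnd = min(cwnd * 2, ssthresh)
        else:
            # Congestion avoidance: one extra segment per round trip.
            cwnd += 1
    return trace


print(tahoe_trace(rounds=12, ssthresh=16, loss_rounds={7}))
# [1, 2, 4, 8, 16, 17, 18, 19, 1, 2, 4, 8]
```

The trace shows the exponential ramp up to ssthresh, the linear phase after it, and the collapse to one segment when a loss is detected in round 7.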

Advancements with TCP Reno

Building upon Tahoe, TCP Reno introduced fast recovery, which improved the response to packet loss. Reno kept slow start, congestion avoidance, and fast retransmit but changed what happens after a fast retransmit. Rather than reducing cwnd to one segment as Tahoe does, Reno halves it and continues in congestion avoidance, so the flow slows down less sharply and returns to its full rate sooner. A retransmission timeout still resets cwnd to one segment and restarts slow start. While TCP Reno was a significant improvement, it still faced challenges in networks with high latency and when several packets were lost from the same window.
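
To make the difference concrete, here is a minimal sketch of how the two variants react to a fast retransmit. It is deliberately simplified: it ignores the temporary window inflation that real Reno applies while duplicate ACKs keep arriving, and the function names are hypothetical.

```python
def on_triple_dup_ack(cwnd, variant):
    """Return (new_cwnd, new_ssthresh) in segments after a fast retransmit."""
    ssthresh = max(cwnd // 2, 2)
    if variant == "tahoe":
        # Tahoe: back to one segment and slow start.
        return 1, ssthresh
    if variant == "reno":
        # Reno fast recovery: resume from half the old window.
        return ssthresh, ssthresh
    raise ValueError(f"unknown variant: {variant}")


def on_timeout(cwnd):
    """Both variants fall back to one segment after a retransmission timeout."""
    return 1, max(cwnd // 2, 2)


print(on_triple_dup_ack(20, "tahoe"))  # (1, 10)
print(on_triple_dup_ack(20, "reno"))   # (10, 10)
print(on_timeout(20))                  # (1, 10)
```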

New Reno and the Challenge of Packet Loss

To address the limitations of Reno, TCP New Reno was introduced. Its main improvement is handling multiple packet losses within a single congestion window, a scenario that Reno struggled with. New Reno stays in fast recovery when it receives a partial acknowledgment, that is, an ACK that covers some but not all of the data that was outstanding when the loss was detected. It treats the partial ACK as a sign that the next unacknowledged segment was also lost and retransmits it right away. This means that if multiple packets are lost, the algorithm can keep recovering without waiting for a timeout and returning to slow start, providing better performance in lossy environments.
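
The partial-acknowledgment logic can be illustrated with a toy model. The sketch below assumes segments are numbered, that retransmissions themselves are never lost, and that `lost` is non-empty; `newreno_recover` and its parameters are hypothetical names, not part of any real stack.

```python
def newreno_recover(lost, recover):
    """Return the segments retransmitted, in order, during one fast recovery.

    lost:    set of segment numbers dropped from the window (non-empty)
    recover: highest segment number sent before the loss was detected
    """
    remaining = sorted(lost)
    # Fast retransmit (three duplicate ACKs) resends the first hole.
    retransmitted = [remaining.pop(0)]
    while True:
        # The receiver now holds everything up to the next hole,
        # or up to `recover` if no holes are left.
        acked_through = remaining[0] - 1 if remaining else recover
        if acked_through >= recover:
            break  # full ACK: leave fast recovery
        # Partial ACK: the next hole is resent at once, no timeout needed.
        retransmitted.append(remaining.pop(0))
    return retransmitted


print(newreno_recover({3, 5, 8}, recover=10))  # [3, 5, 8]
```

Each pass through the loop stands for one round trip, so in this model three losses in one window are repaired in three round trips while the sender stays in fast recovery.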

Emerging Solutions: TCP Vegas

TCP Vegas marked a departure from the loss-based approach of previous algorithms. Instead of waiting for packet loss, Vegas tries to detect congestion before loss occurs by watching round-trip times (RTT). It compares the throughput it would expect at the smallest RTT observed so far with the throughput it actually achieves at the current RTT; a growing gap means packets are queuing at the bottleneck. Vegas then raises or lowers cwnd to keep that gap within a small range, smoothing out data flow and reducing packet loss. Despite its innovative approach, TCP Vegas struggled with deployment: when it shares a bottleneck with loss-based algorithms like Reno, it backs off as queues build while its competitors keep growing until packets drop, so its benefits can be negated.
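
A rough sketch of the Vegas decision rule follows. The thresholds `alpha` and `beta` (in segments) are illustrative values chosen for this example, and the function name is hypothetical; real implementations differ in how and when they sample RTT.

```python
def vegas_adjust(cwnd, base_rtt, rtt, alpha=2, beta=4):
    """Return the next cwnd (in segments) from one RTT measurement.

    base_rtt: smallest RTT seen so far (seconds)
    rtt:      current RTT (seconds)
    """
    expected = cwnd / base_rtt   # throughput with empty queues
    actual = cwnd / rtt          # throughput actually achieved
    # Estimated number of this flow's segments sitting in queues.
    diff = (expected - actual) * base_rtt
    if diff < alpha:
        return cwnd + 1          # path underused: grow
    if diff > beta:
        return cwnd - 1          # queue building: shrink
    return cwnd                  # within the target band: hold


print(vegas_adjust(20, base_rtt=0.100, rtt=0.100))  # 21
print(vegas_adjust(20, base_rtt=0.100, rtt=0.118))  # 20
print(vegas_adjust(20, base_rtt=0.100, rtt=0.150))  # 19
```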

The Modern Era: TCP CUBIC and BBR

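As networks evolved, so did the need for more robust congestion control algorithms. TCP CUBIC, developed in 2005, became the default algorithm for many systems, including Linux, due to its ability to efficiently utilize high bandwidth-delay product (BDP) networks. CUBIC sets its window with a cubic function of the time elapsed since the last loss: the window rises quickly toward the size at which loss last occurred, flattens out near it, and then accelerates again to probe for more capacity. This lets it probe network capacity more aggressively than linear growth methods, providing better performance over long-distance and high-speed networks.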
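
The cubic growth function can be written down directly. The sketch below uses the form W(t) = C(t - K)^3 + W_max with K = cbrt(W_max(1 - beta) / C) and the constants C = 0.4 and beta = 0.7 given in RFC 8312; the function names are made up for this example, and the TCP-friendly region and other details of real CUBIC are left out.

```python
def cubic_k(w_max, c=0.4, beta=0.7):
    """Seconds needed to climb back to w_max after a loss."""
    return (w_max * (1 - beta) / c) ** (1 / 3)


def cubic_window(t, w_max, c=0.4, beta=0.7):
    """Window (in segments) t seconds after the last loss event.

    w_max is the window size just before that loss.
    """
    k = cubic_k(w_max, c, beta)
    return c * (t - k) ** 3 + w_max


w_max = 100
k = cubic_k(w_max)
for t in (0.0, k / 2, k, 1.5 * k):
    print(f"t={t:5.2f}s  cwnd={cubic_window(t, w_max):6.1f}")
```

At t = 0 the window starts at beta times W_max, it reaches W_max at t = K, and grows past it afterwards. Because the function depends on elapsed time rather than on the number of ACKs received, growth does not slow down on long-RTT paths.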

A more recent development in TCP congestion control is BBR (Bottleneck Bandwidth and Round-trip propagation time), introduced by Google in 2016. Unlike loss-based algorithms such as Reno and CUBIC, BBR does not treat packet loss as its main congestion signal; it aims for maximum bandwidth with minimal queuing delay. It does so by continuously estimating the bottleneck bandwidth and the minimum RTT of the path and pacing its sending rate accordingly. The product of the two is the BDP, the amount of data that fills the path without building a queue. BBR's approach allows for better utilization of available network resources and can significantly improve performance, especially in modern, heterogeneous network environments.
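
As a rough illustration of the path model, the sketch below keeps a windowed maximum of delivery-rate samples and a running minimum of RTT samples and derives a BDP and pacing rate from them. It is not BBR itself: the class `PathModel`, its methods, and the window length are assumptions for this example, and the state machine that real BBR uses to probe bandwidth and refresh its RTT estimate is omitted.

```python
from collections import deque


class PathModel:
    """Toy estimate of bottleneck bandwidth and minimum RTT."""

    def __init__(self, bw_window=10):
        # Only the most recent samples count toward the bandwidth estimate.
        self.bw_samples = deque(maxlen=bw_window)
        self.min_rtt = float("inf")

    def on_ack(self, delivered_bytes, interval_s, rtt_s):
        """Record one delivery-rate sample and one RTT sample."""
        self.bw_samples.append(delivered_bytes / interval_s)
        self.min_rtt = min(self.min_rtt, rtt_s)

    def btl_bw(self):
        """Bottleneck bandwidth estimate in bytes per second."""
        return max(self.bw_samples)

    def bdp_bytes(self):
        """Data needed in flight to fill the path without queuing."""
        return self.btl_bw() * self.min_rtt

    def pacing_rate(self, gain=1.0):
        """Sending rate in bytes per second; gain > 1 probes for more."""
        return gain * self.btl_bw()


model = PathModel()
model.on_ack(delivered_bytes=125_000, interval_s=0.1, rtt_s=0.050)
model.on_ack(delivered_bytes=100_000, interval_s=0.1, rtt_s=0.040)
print(round(model.btl_bw()), model.min_rtt, round(model.bdp_bytes()))
```

With these two samples the model settles on about 1.25 MB/s and 40 ms, giving a BDP of roughly 50 KB.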

Conclusion

The evolution of TCP congestion control algorithms from Tahoe to BBR is a story of steady innovation and adaptation. Each new algorithm built on the successes and addressed the limitations of its predecessors: Reno and New Reno made loss recovery less costly, Vegas showed that delay can signal congestion before loss does, CUBIC scaled window growth to high-BDP paths, and BBR replaced loss as the primary signal with a model of bandwidth and RTT. As traffic volumes continue to grow, these algorithms will remain crucial in ensuring that networks can handle more data without compromising performance. Whether you're a network engineer, a developer, or simply someone interested in technology, understanding these algorithms offers insight into one of the fundamental challenges of modern computing.

From 5G NR to SDN and quantum-safe encryption, the digital communication landscape is evolving faster than ever. For R&D teams and IP professionals, tracking protocol shifts, understanding standards like 3GPP and IEEE 802, and monitoring the global patent race are now mission-critical.

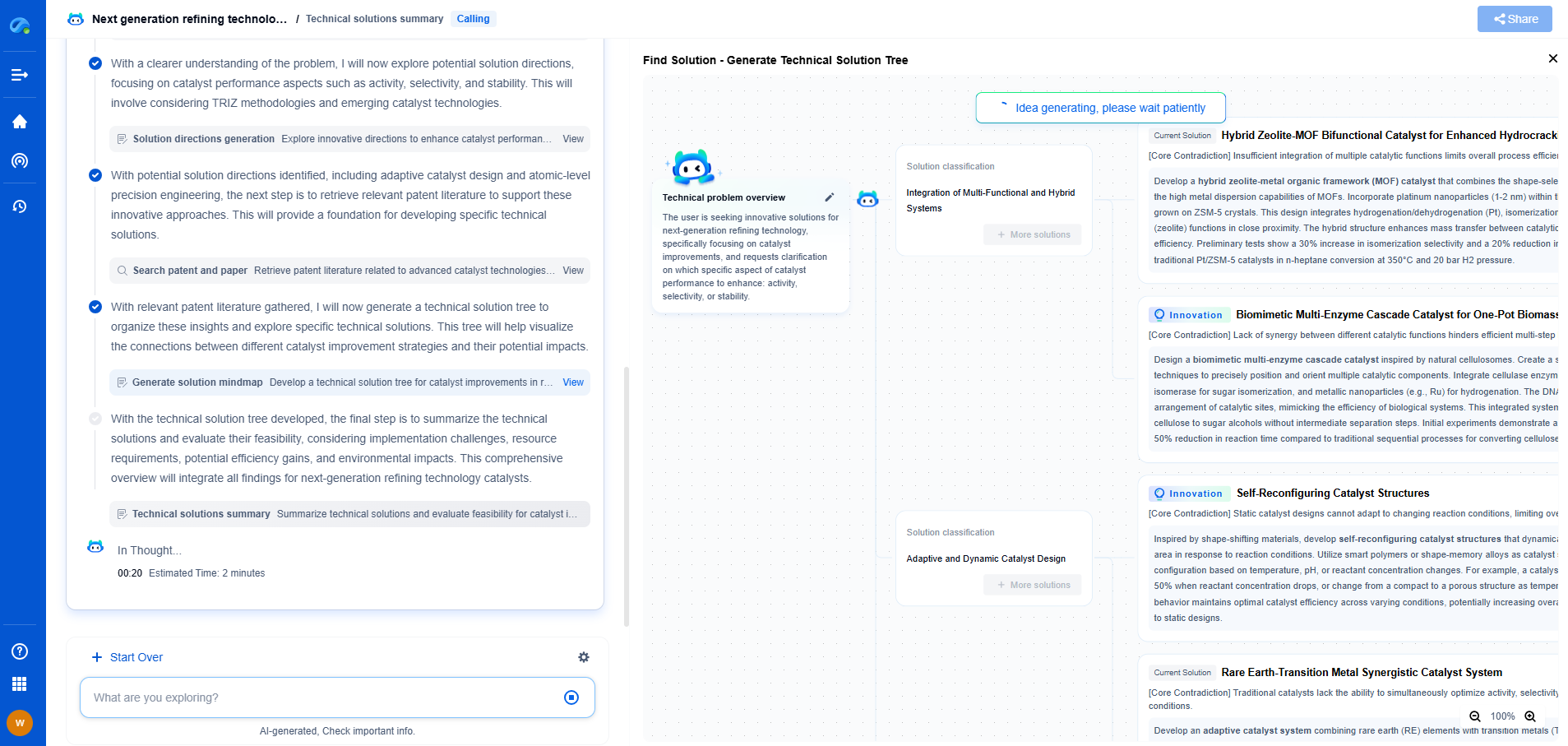

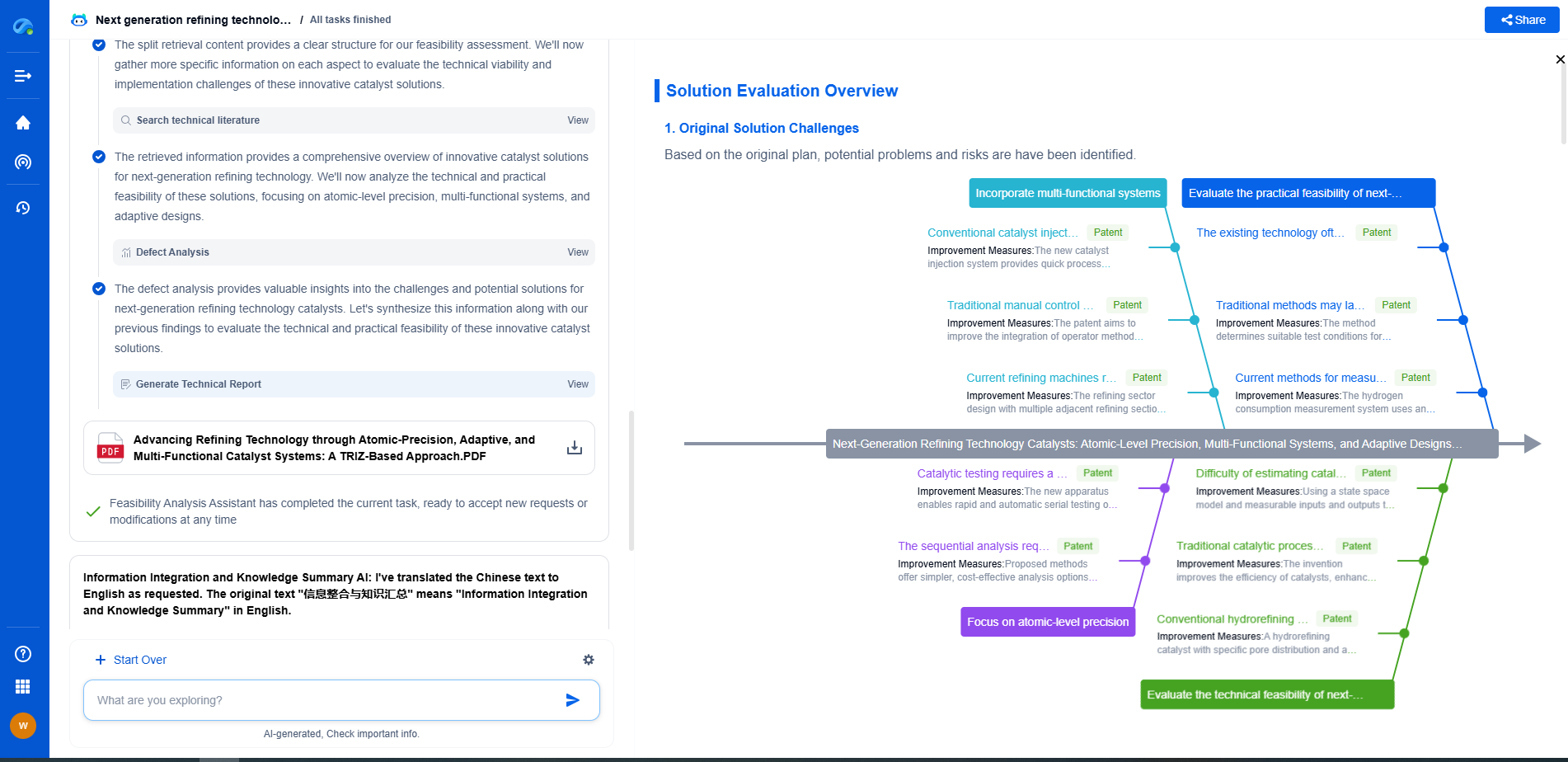

Patsnap Eureka, our intelligent AI assistant built for R&D professionals in high-tech sectors, empowers you with real-time expert-level analysis, technology roadmap exploration, and strategic mapping of core patents—all within a seamless, user-friendly interface.

📡 Experience Patsnap Eureka today and unlock next-gen insights into digital communication infrastructure, before your competitors do.

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.
