The Science Behind Measurement Latency: From Sensor to Display

JUL 17, 2025

Measuring and displaying data in real time is critical across many industries, from healthcare and automotive to consumer electronics and industrial automation. Central to this capability is measurement latency: the delay between the moment a sensor detects a change in a physical parameter and the moment the resulting value is displayed to the user. Understanding where this delay comes from is essential for designing systems that present data accurately and on time.

Components of Measurement Latency

Measurement latency can be broken down into several distinct components, each contributing to the overall delay. These include sensor response time, data acquisition, processing time, transmission delay, and display lag.
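
As a rough illustration, the five components can be treated as a latency budget. The sketch below assumes the stages run strictly one after another, so the end-to-end delay is their sum; the class name, field names, and millisecond values are hypothetical and chosen only for the example.

```python
from dataclasses import dataclass, fields


@dataclass
class LatencyBudget:
    """Per-stage delays in milliseconds (all values are illustrative)."""
    sensor_response_ms: float
    acquisition_ms: float
    processing_ms: float
    transmission_ms: float
    display_ms: float

    def total_ms(self) -> float:
        # Assumption: stages are sequential, so the delays simply add up.
        return sum(getattr(self, f.name) for f in fields(self))

    def dominant_stage(self) -> str:
        # The largest contributor is usually the first candidate to optimize.
        return max(fields(self), key=lambda f: getattr(self, f.name)).name


# Hypothetical numbers, not measurements from any real system.
budget = LatencyBudget(
    sensor_response_ms=50.0,
    acquisition_ms=1.0,
    processing_ms=4.0,
    transmission_ms=10.0,
    display_ms=16.7,
)
print(f"total: {budget.total_ms():.1f} ms, dominant: {budget.dominant_stage()}")
```

In a pipelined system the stages overlap, so a plain sum describes the delay of a single reading rather than the achievable update rate.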

1. Sensor Response Time

The first stage in any measurement is the sensor's detection of a physical parameter, such as temperature, pressure, or motion. Sensor response time describes how quickly the sensor's output follows a change in that parameter. It is often specified as a time constant: the time the output takes to reach about 63% of a step change. Response time is determined largely by the sensor's design and materials. For instance, a fine-wire thermocouple with an exposed junction can respond faster than a bulkier encapsulated thermistor, because it has less thermal mass and is in direct contact with the heat source.
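
Many sensors are approximated as first-order systems, where the reading approaches a step change exponentially. The sketch below assumes that model; the function names and the 2-second time constant are illustrative, and real sensors may deviate from a pure first-order response.

```python
import math


def first_order_response(t_s: float, tau_s: float) -> float:
    """Fraction of a step change reported after t_s seconds.

    Assumes a first-order sensor with time constant tau_s (seconds).
    """
    return 1.0 - math.exp(-t_s / tau_s)


def settling_time(tau_s: float, fraction: float = 0.95) -> float:
    """Time in seconds for the reading to reach `fraction` of the step."""
    return -tau_s * math.log(1.0 - fraction)


tau = 2.0  # hypothetical time constant in seconds
print(f"after one tau: {first_order_response(tau, tau):.1%} of the step")
print(f"time to 95%: {settling_time(tau):.2f} s")
```

Under this model a reading needs roughly three time constants to come within 5% of the true value, which is why sensor response can dominate the overall delay for slow physical quantities such as temperature.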

2. Data Acquisition

Once the sensor detects a change, the data acquisition phase begins. This involves converting the analog signal from the sensor into digital data that a microcontroller or computer can process. The conversion time of the analog-to-digital converter (ADC) plays a significant role in this phase. High-resolution ADCs provide more precise measurements but often take longer to deliver a result. Delta-sigma converters, for example, reach high resolution through oversampling and digital filtering, which adds delay compared with faster, lower-resolution converters.
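
One way to see the resolution-versus-latency trade-off is oversampling: under the usual assumption of white noise, each extra bit of effective resolution requires averaging four times as many raw samples. The sketch below applies that rule of thumb; the function names and the 100 kS/s sample rate are hypothetical, and the rule does not describe every ADC architecture.

```python
def oversampling_ratio(extra_bits: int) -> int:
    """Raw samples combined per output value for `extra_bits` of added resolution.

    Uses the 4**n rule of thumb, which assumes white noise on the input.
    """
    return 4 ** extra_bits


def acquisition_latency_s(sample_rate_hz: float, extra_bits: int) -> float:
    """Time in seconds needed to collect the raw samples for one output value."""
    return oversampling_ratio(extra_bits) / sample_rate_hz


sample_rate_hz = 100_000.0  # hypothetical raw ADC sample rate
for bits in (0, 2, 4):
    latency_us = acquisition_latency_s(sample_rate_hz, bits) * 1e6
    print(f"+{bits} bits: {oversampling_ratio(bits)} samples, {latency_us:.0f} us per output")
```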

3. Processing Time

After the data is acquired, it needs to be processed. This involves filtering, error correction, and sometimes complex computations to transform raw data into meaningful information. Processing time can vary significantly depending on the algorithmic complexity and the processing power available. In real-time systems, efficient coding and powerful processors are crucial in minimizing this latency component.
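
Filtering illustrates how processing adds delay beyond raw compute time. A causal moving average, used here as a simple stand-in for whatever filter a real system applies, smooths noise but delays the signal by (N - 1) / 2 samples for a window of N. The function names and parameters below are illustrative.

```python
def moving_average(samples, window):
    """Causal moving average: each output averages the latest `window` samples."""
    out = []
    acc = 0.0
    for i, x in enumerate(samples):
        acc += x
        if i >= window:
            acc -= samples[i - window]  # drop the sample that left the window
        out.append(acc / min(i + 1, window))
    return out


def group_delay_s(window, sample_rate_hz):
    """Delay of a length-`window` moving average: (window - 1) / 2 samples."""
    return (window - 1) / 2 / sample_rate_hz


step = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
print(moving_average(step, window=3))  # the step edge is smeared over 3 samples
print(f"delay at 1 kHz with a 9-sample window: {group_delay_s(9, 1000.0) * 1e3:.1f} ms")
```

A longer window rejects more noise but makes the displayed value lag further behind the input, so the window length is itself a latency decision.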

4. Transmission Delay

In many applications, the processed data must be transmitted from one location to another, such as from a remote sensor to a central processing unit or display. Transmission delay depends on the communication method used. Wired connections like Ethernet can offer lower latency compared to wireless options like Bluetooth or Wi-Fi, although recent advancements in wireless technologies have significantly reduced these differences.
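
A first-order estimate of transmission delay combines serialization time (bits divided by bit rate) with propagation time (distance divided by signal velocity). The sketch below assumes a signal velocity of about 2e8 m/s, roughly two-thirds the speed of light and typical for cables; it deliberately ignores protocol-stack overhead, queuing, retransmissions, and wireless scheduling, which often dominate in practice. All names and numbers are hypothetical.

```python
def serialization_delay_s(payload_bytes: int, overhead_bytes: int, bit_rate_bps: float) -> float:
    """Time in seconds to clock one frame onto the link."""
    return 8 * (payload_bytes + overhead_bytes) / bit_rate_bps


def propagation_delay_s(distance_m: float, velocity_mps: float = 2.0e8) -> float:
    """Time in seconds for the signal to travel the link.

    The default velocity is an assumption (about two-thirds of c).
    """
    return distance_m / velocity_mps


# Hypothetical link: 100-byte payload, 25 bytes of framing, 1 Mbit/s, 100 m cable.
ser = serialization_delay_s(100, 25, 1_000_000.0)
prop = propagation_delay_s(100.0)
print(f"serialization: {ser * 1e6:.1f} us, propagation: {prop * 1e6:.2f} us")
```

On short links the serialization term is far larger than the propagation term, which is why trimming packet size or raising the bit rate usually matters more than cable length.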

5. Display Lag

Finally, display lag is the time taken to render the processed data on a screen or another output device. This can be influenced by the display technology used, the resolution, and the refresh rate. High-refresh-rate displays can reduce perceived latency, providing a smoother and more immediate representation of data changes.
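
The refresh rate sets a floor on display lag: a new value cannot appear until the next refresh. The sketch below assumes values arrive at random times relative to the refresh cycle, so the average wait is half a frame period and the worst case is a full period. It does not model pixel response time or any internal processing in the display. The function names are illustrative.

```python
def frame_period_ms(refresh_hz: float) -> float:
    """Time between consecutive refreshes, in milliseconds."""
    return 1000.0 / refresh_hz


def refresh_wait_ms(refresh_hz: float) -> tuple:
    """(average, worst-case) wait for the next refresh, in milliseconds.

    Assumes new data arrives uniformly at random within the refresh cycle.
    """
    period = frame_period_ms(refresh_hz)
    return period / 2.0, period


for hz in (60.0, 120.0, 240.0):
    avg, worst = refresh_wait_ms(hz)
    print(f"{hz:.0f} Hz: average wait {avg:.1f} ms, worst case {worst:.1f} ms")
```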

Mitigating Measurement Latency

Reducing measurement latency is a critical task for engineers and developers, particularly in applications where real-time data is paramount, such as in medical monitoring or automated control systems. Several strategies can be employed to minimize latency:

1. Optimization of Sensor Selection

Choosing the right sensor for the application is the first step. Sensors with faster response times should be prioritized for real-time applications. Additionally, operating sensors within their specified conditions, and avoiding housings or mountings that add unnecessary mass between the sensor and the measured quantity, helps preserve their rated response time.

2. Enhancing Data Acquisition Systems

Using high-speed ADCs and choosing an appropriate sampling rate can significantly reduce data acquisition latency. It is also important to balance resolution against speed: resolution beyond what the application needs costs conversion time without improving the displayed result.

3. Efficient Data Processing

Implementing efficient algorithms and leveraging hardware acceleration can reduce processing time. Parallel processing and real-time operating systems (RTOS) can also help in managing tasks more effectively, ensuring that data is processed as quickly as it is acquired.
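
Before optimizing a processing stage, it helps to measure it. The sketch below times a stage repeatedly with Python's `time.perf_counter_ns` and reports both the median and the worst case, since real-time systems are usually constrained by the worst case rather than the average. `time_stage` and `example_stage` are hypothetical names, and the mean-removal step stands in for whatever processing a real system performs.

```python
import statistics
import time


def time_stage(stage, payload, repeats=200):
    """Run `stage(payload)` repeatedly; return (median_us, worst_us)."""
    durations_us = []
    for _ in range(repeats):
        start = time.perf_counter_ns()
        stage(payload)
        durations_us.append((time.perf_counter_ns() - start) / 1000.0)
    return statistics.median(durations_us), max(durations_us)


def example_stage(samples):
    # Hypothetical processing step: remove the mean from a block of samples.
    mean = sum(samples) / len(samples)
    return [x - mean for x in samples]


median_us, worst_us = time_stage(example_stage, list(range(1000)))
print(f"median: {median_us:.1f} us, worst: {worst_us:.1f} us")
```

On a general-purpose operating system the worst case can be much larger than the median because of scheduling and garbage collection, which is one reason an RTOS is attractive for hard deadlines.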

4. Improving Transmission Protocols

Investing in faster communication protocols and optimizing data packets for transmission can reduce delays. In some cases, using direct wired connections can provide a more consistent and lower-latency path for data transmission.

5. Upgrading Display Technologies

Lastly, to minimize display lag, high-performance displays with low input latency and high refresh rates should be utilized. Optimizing the graphics pipeline can also help in ensuring that visual data representation is as immediate as possible.

Conclusion

Understanding and managing measurement latency is crucial for the development of responsive and reliable data systems. By dissecting the components of latency and implementing targeted strategies, we can create systems that deliver near-instantaneous data feedback, enhancing user experience and operational efficiency across a wide range of applications. As technology continues to advance, the quest to minimize latency will remain a pivotal aspect of engineering and system design.

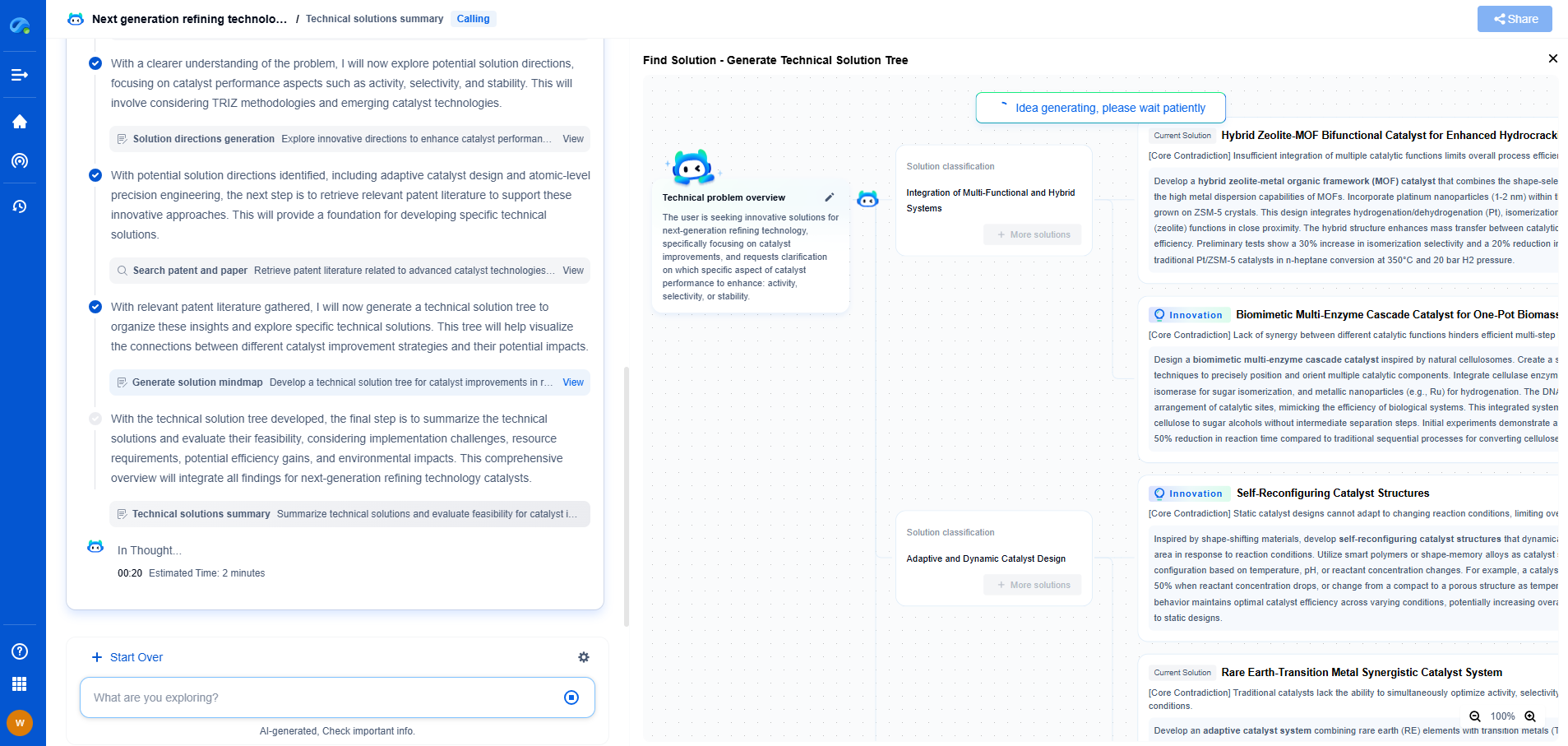

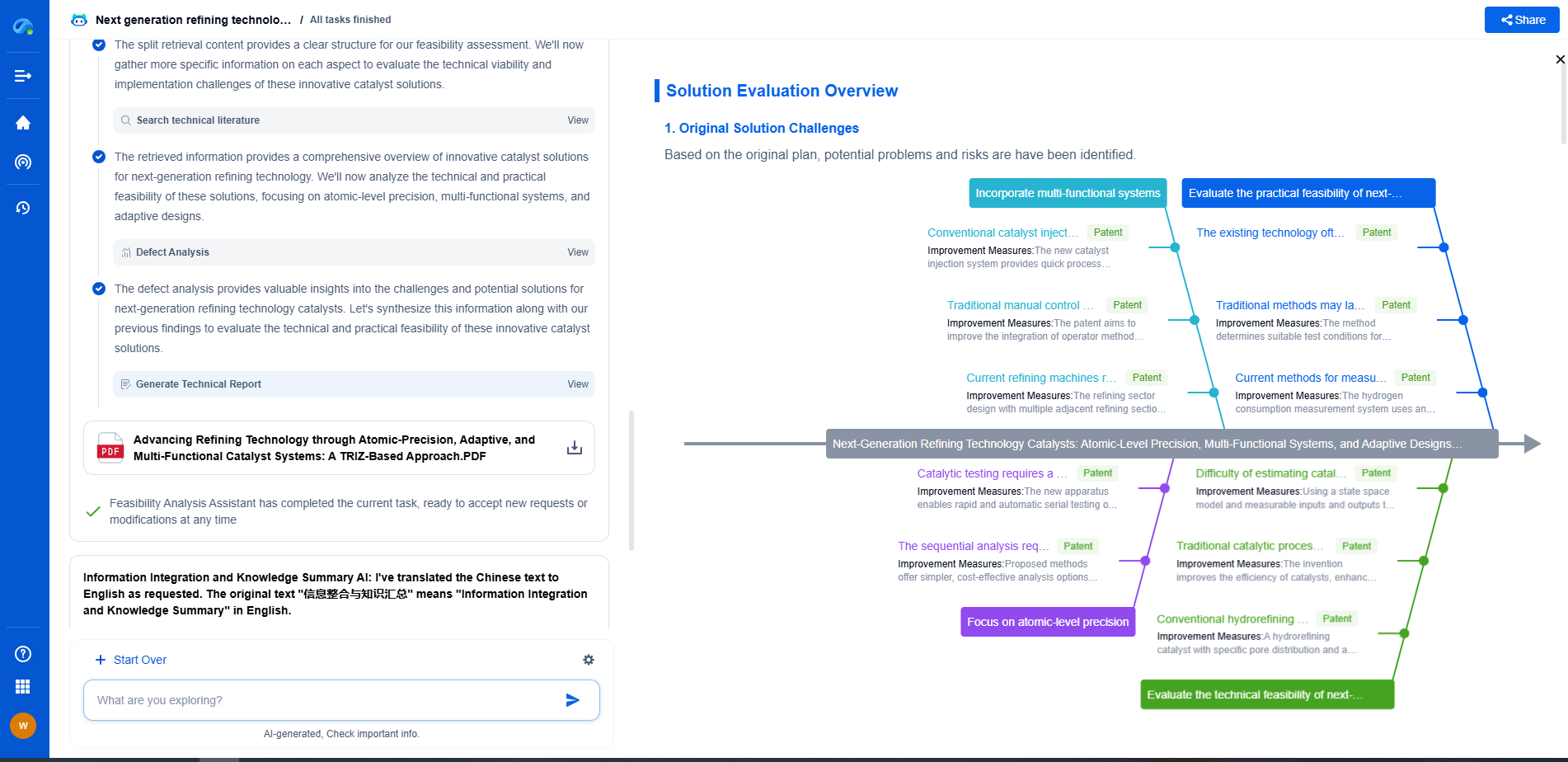
