What is learning from demonstration (LfD)?

JUN 26, 2025

In the rapidly evolving field of artificial intelligence and robotics, Learning from Demonstration (LfD) stands out as a promising approach to giving machines human-like learning capabilities. The approach, closely related to and often used interchangeably with imitation learning, allows robots and AI systems to acquire new skills and behaviors by observing and imitating human demonstrations. Explicit programming requires an engineer to specify every step of a behavior, and reinforcement learning typically needs many rounds of trial and error. LfD instead lets a person show the machine what to do, offering an intuitive and efficient alternative that combines human intuition with machine precision.

The Foundations of Learning from Demonstration

At its core, Learning from Demonstration is built on the principle that machines can learn complex tasks by observing a human perform them successfully. The process typically involves capturing the actions of a human expert through sensors or interfaces such as motion capture systems, cameras, teleoperation devices, or kinesthetic teaching, in which the person physically guides the robot through the motion. The recorded data is then processed to extract the essential features of the task, which the machine uses to reproduce the behavior.
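To make the capture-and-process step concrete, here is a minimal Python sketch. It assumes a demonstration is recorded as a sequence of positions sampled at a fixed time step and that the "action" at each step is the velocity toward the next sample. The `Demonstration` class and the helpers `to_state_action_pairs` and `build_dataset` are hypothetical names for illustration; real systems record richer states (joint angles, forces, images) and choose the action representation to match the robot's controller.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Demonstration:
    """One recorded demonstration (hypothetical format for illustration)."""
    positions: np.ndarray  # shape (T, d): e.g. end-effector or marker coordinates
    dt: float              # sampling period in seconds


def to_state_action_pairs(demo: Demonstration) -> tuple[np.ndarray, np.ndarray]:
    """Convert a position trajectory into (state, action) training pairs.

    Assumption: the state is the current position and the action is the
    finite-difference velocity that reaches the next recorded sample.
    """
    states = demo.positions[:-1]
    actions = np.diff(demo.positions, axis=0) / demo.dt
    return states, actions


def build_dataset(demos: list[Demonstration]) -> tuple[np.ndarray, np.ndarray]:
    """Stack the pairs from several demonstrations into one training set."""
    pairs = [to_state_action_pairs(d) for d in demos]
    X = np.concatenate([s for s, _ in pairs], axis=0)
    Y = np.concatenate([a for _, a in pairs], axis=0)
    return X, Y


# Usage: a tiny 1-D demonstration sampled every 0.1 s
demo = Demonstration(positions=np.array([[0.0], [0.1], [0.3]]), dt=0.1)
states, actions = to_state_action_pairs(demo)
X, Y = build_dataset([demo, demo])
```

Stacking pairs from several demonstrations into one array is what lets the same data feed a supervised learner later; the choice of state and action is the main design decision and depends on the robot.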

One of the key advantages of LfD is that it reduces the effort and time required to teach machines new tasks. Instead of manually coding each step or relying on extensive trial and error, LfD draws on the fact that people can often perform a task far more easily than they can specify it in code. This makes it particularly valuable in dynamic and unstructured environments, where anticipating and hand-coding every situation is impractical.

Techniques and Algorithms in LfD

LfD encompasses a range of techniques and algorithms that enable machines to interpret and replicate human demonstrations. Some popular approaches include:

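1. **Behavioral Cloning:** This method treats imitation as supervised learning: from recorded state-action pairs, the machine learns a policy that maps each observed state (for example, sensor readings) to the action the demonstrator took in that state. It is akin to learning by example, with the machine trying to reproduce the human's actions as closely as possible.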

2. **Inverse Reinforcement Learning (IRL):** Rather than copying actions directly, IRL infers the reward function (the underlying goals) that best explains the demonstrated behavior. A policy is then derived by optimizing that reward, which can help the machine adapt and generalize the learned skill to situations the demonstrator never showed.

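3. **Dynamic Movement Primitives (DMPs):** DMPs model time-dependent motor skills, such as a reaching or pouring motion, as a stable spring-damper system pulled toward a goal plus a nonlinear forcing term learned from a demonstration. Because the goal and duration are explicit parameters, a robot can reproduce the motion toward new goals or at a different speed while preserving the shape of the demonstrated movement.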
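Behavioral cloning reduces to ordinary supervised learning. The sketch below, a minimal illustration rather than a production method, fits a linear policy with least squares. The 2-D state, the proportional-controller "expert" that generates the demonstrations, and the helper names `fit_linear_policy` and `policy` are all assumptions made for this example; practical systems typically replace the linear map with a neural network.

```python
import numpy as np


def fit_linear_policy(states: np.ndarray, actions: np.ndarray) -> np.ndarray:
    """Behavioral cloning with a linear policy: least-squares fit of actions on states.

    Returns a weight matrix W such that action ~= [state, 1] @ W.
    """
    X = np.hstack([states, np.ones((states.shape[0], 1))])  # append a bias column
    W, *_ = np.linalg.lstsq(X, actions, rcond=None)
    return W


def policy(W: np.ndarray, state: np.ndarray) -> np.ndarray:
    """Evaluate the cloned policy on a single state vector."""
    return np.append(state, 1.0) @ W


# Hypothetical "expert": a proportional controller that drives a 2-D state
# toward the origin, with a little demonstration noise added.
rng = np.random.default_rng(0)
K = np.array([[1.5, 0.0], [0.0, 0.5]])
demo_states = rng.uniform(-1.0, 1.0, size=(200, 2))
demo_actions = -demo_states @ K.T + 0.01 * rng.standard_normal((200, 2))

W = fit_linear_policy(demo_states, demo_actions)
print(policy(W, np.array([0.4, -0.2])))  # close to the expert's action [-0.6, 0.1]
```

The bias column lets the fitted policy represent a constant offset; here the expert has none, so the learned bias stays near zero and the state weights come out close to `-K.T`.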
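IRL can be illustrated with maximum-entropy IRL, one widely used variant. This toy sketch rests on stated assumptions: a hypothetical five-state corridor with left/stay/right moves, one-hot state features (so the reward is one learnable value per state), and a fixed episode length of eight states. Each iteration computes a soft-optimal policy for the current reward, predicts how often each state would be visited, and nudges the reward toward states the demonstrator visited more often than predicted.

```python
import numpy as np

# Hypothetical toy world: 5 states in a row, 3 actions, fixed-length episodes.
N_STATES, HORIZON = 5, 8
ACTIONS = (-1, 0, +1)  # move left, stay, move right (clipped at the ends)
NEXT = np.array([[min(max(s + a, 0), N_STATES - 1) for a in ACTIONS]
                 for s in range(N_STATES)])  # NEXT[s, a] = successor state


def backward_pass(theta):
    """Soft value iteration; returns policies[t][s, a] for t = 0 .. HORIZON-2."""
    V = theta.copy()                        # value at the final time step
    policies = []
    for _ in range(HORIZON - 1):
        Q = theta[:, None] + V[NEXT]        # Q(s, a) = r(s) + V_next(s')
        V = np.logaddexp.reduce(Q, axis=1)  # "soft" max over actions
        policies.append(np.exp(Q - V[:, None]))
    return policies[::-1]                   # policies[0] is used at t = 0


def expected_visits(theta, start):
    """Expected number of visits to each state under the soft-optimal policy."""
    D = np.zeros(N_STATES)
    D[start] = 1.0
    visits = D.copy()
    for pi in backward_pass(theta):
        D_next = np.zeros(N_STATES)
        for a in range(len(ACTIONS)):
            np.add.at(D_next, NEXT[:, a], D * pi[:, a])  # push probability mass forward
        D = D_next
        visits += D
    return visits


def maxent_irl(demos, lr=0.05, iters=300):
    """Fit one reward value per state so expected visits match the demos.

    Assumes every demo has HORIZON states and all demos share a start state.
    """
    empirical = np.zeros(N_STATES)
    for traj in demos:
        np.add.at(empirical, traj, 1.0)
    empirical /= len(demos)
    theta = np.zeros(N_STATES)
    for _ in range(iters):
        # Gradient of the demo log-likelihood: empirical minus expected visits
        theta += lr * (empirical - expected_visits(theta, demos[0][0]))
    return theta


# Illustrative demonstration: walk from state 0 to state 4 and stay there.
demos = [[0, 1, 2, 3, 4, 4, 4, 4]]
theta = maxent_irl(demos)
print(np.round(theta, 2))
```

With this single demonstration, the largest learned reward should land on state 4. The method recovers where the demonstrator wanted to be rather than the exact sequence of moves, which is what lets an agent trained on the inferred reward plan from a different start state.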
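A single-degree-of-freedom DMP can be sketched in a few dozen lines of Python. This version follows the commonly used discrete formulation (a spring-damper transformation system, an exponentially decaying phase variable, and Gaussian basis functions fitted by locally weighted regression). The class name `DMP1D`, the gain values, the number of basis functions, and the minimum-jerk demonstration are illustrative assumptions, not tuned settings.

```python
import numpy as np


class DMP1D:
    """Minimal one-dimensional discrete DMP (illustrative sketch, not tuned)."""

    def __init__(self, n_basis=50, alpha_z=25.0, alpha_x=4.0):
        self.alpha_z = alpha_z
        self.beta_z = alpha_z / 4.0  # critical damping for the spring-damper part
        self.alpha_x = alpha_x
        # Basis centres spread evenly in time along the decaying phase variable x
        self.c = np.exp(-alpha_x * np.linspace(0.0, 1.0, n_basis))
        d = np.diff(self.c)
        self.h = 1.0 / np.append(d, d[-1]) ** 2  # widths tied to centre spacing
        self.w = np.zeros(n_basis)

    def fit(self, y_demo, dt):
        """Learn forcing-term weights from one demonstrated trajectory."""
        self.y0, self.g = y_demo[0], y_demo[-1]
        self.tau = (len(y_demo) - 1) * dt  # movement duration
        yd = np.gradient(y_demo, dt)
        ydd = np.gradient(yd, dt)
        x = np.exp(-self.alpha_x * np.arange(len(y_demo)) * dt / self.tau)
        # Forcing term implied by the demo: tau^2*ydd - alpha_z*(beta_z*(g - y) - tau*yd)
        f_target = self.tau**2 * ydd - self.alpha_z * (
            self.beta_z * (self.g - y_demo) - self.tau * yd)
        s = x * (self.g - self.y0)
        psi = np.exp(-self.h * (x[:, None] - self.c) ** 2)  # shape (T, n_basis)
        # Locally weighted regression: one independent weight per basis function
        self.w = (psi * (s * f_target)[:, None]).sum(axis=0) / (
            (psi * (s**2)[:, None]).sum(axis=0) + 1e-10)
        return self

    def rollout(self, goal=None, dt=0.001):
        """Integrate with semi-implicit Euler toward `goal` (default: demo goal)."""
        g = self.g if goal is None else goal
        y, z, x = self.y0, 0.0, 1.0  # assumes the movement starts at rest
        path = [y]
        for _ in range(int(round(self.tau / dt))):
            psi = np.exp(-self.h * (x - self.c) ** 2)
            f = (psi @ self.w) / (psi.sum() + 1e-10) * x * (g - self.y0)
            z += dt / self.tau * (self.alpha_z * (self.beta_z * (g - y) - z) + f)
            y += dt / self.tau * z
            x += dt / self.tau * (-self.alpha_x * x)
            path.append(y)
        return np.array(path)


# Illustrative demonstration: a minimum-jerk reach from 0 to 1 over one second
t = np.linspace(0.0, 1.0, 101)
demo = 10 * t**3 - 15 * t**4 + 6 * t**5
dmp = DMP1D().fit(demo, dt=0.01)
same = dmp.rollout()             # should roughly retrace the demonstration
shifted = dmp.rollout(goal=2.0)  # same shape, stretched toward a new goal
```

Because the forcing term is scaled by the distance from start to goal, changing `goal` stretches the learned shape rather than distorting it. Multi-joint motions are usually handled by running one such system per degree of freedom with a shared phase variable.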

Applications of LfD in Robotics and AI

Learning from Demonstration has found applications across a wide spectrum of domains, significantly advancing the capabilities of robots and AI systems:

1. **Industrial Automation:** In manufacturing, LfD can be used to program robotic arms for tasks like assembly, painting, or welding by demonstrating the task, sometimes only once. This shortens the downtime needed to reprogram a robot and makes industrial robots more flexible.

2. **Service Robotics:** Robots that assist in healthcare, hospitality, or domestic settings can learn to perform tasks such as serving food, cleaning, or assisting the elderly by observing human caregivers.

3. **Autonomous Vehicles:** LfD is used in developing self-driving cars, where driving behaviors are learned from recorded human driving (for example, by mapping camera images to steering commands), helping vehicles navigate complex urban environments.

Challenges and Future Directions

While Learning from Demonstration offers significant advantages, it also presents challenges. One of the primary issues is the quality and variability of demonstrations, since human performance can be inconsistent. Ensuring that machines generalize correctly from a limited number of demonstrations without overfitting is a critical area of research: a policy that has only seen the demonstrated states may make small errors that carry it into unfamiliar states, where those errors compound. Additionally, developing systems that can learn from imperfect or suboptimal demonstrations remains an open challenge.

The future of LfD looks promising, with ongoing research focused on improving learning algorithms, enhancing the interpretability of demonstrations, and integrating LfD with other AI techniques like deep learning and reinforcement learning. As these challenges are addressed, LfD is likely to play an increasingly pivotal role in making intelligent systems more autonomous, adaptable, and human-friendly.

Conclusion

Learning from Demonstration represents a crucial step toward creating machines that can learn and adapt with the ease and efficiency of humans. By leveraging human expertise through demonstrations, LfD not only accelerates the training process but also expands the potential applications of robots and AI in everyday life. As researchers continue to refine and innovate within this field, the dream of seamless human-machine collaboration becomes ever more attainable.

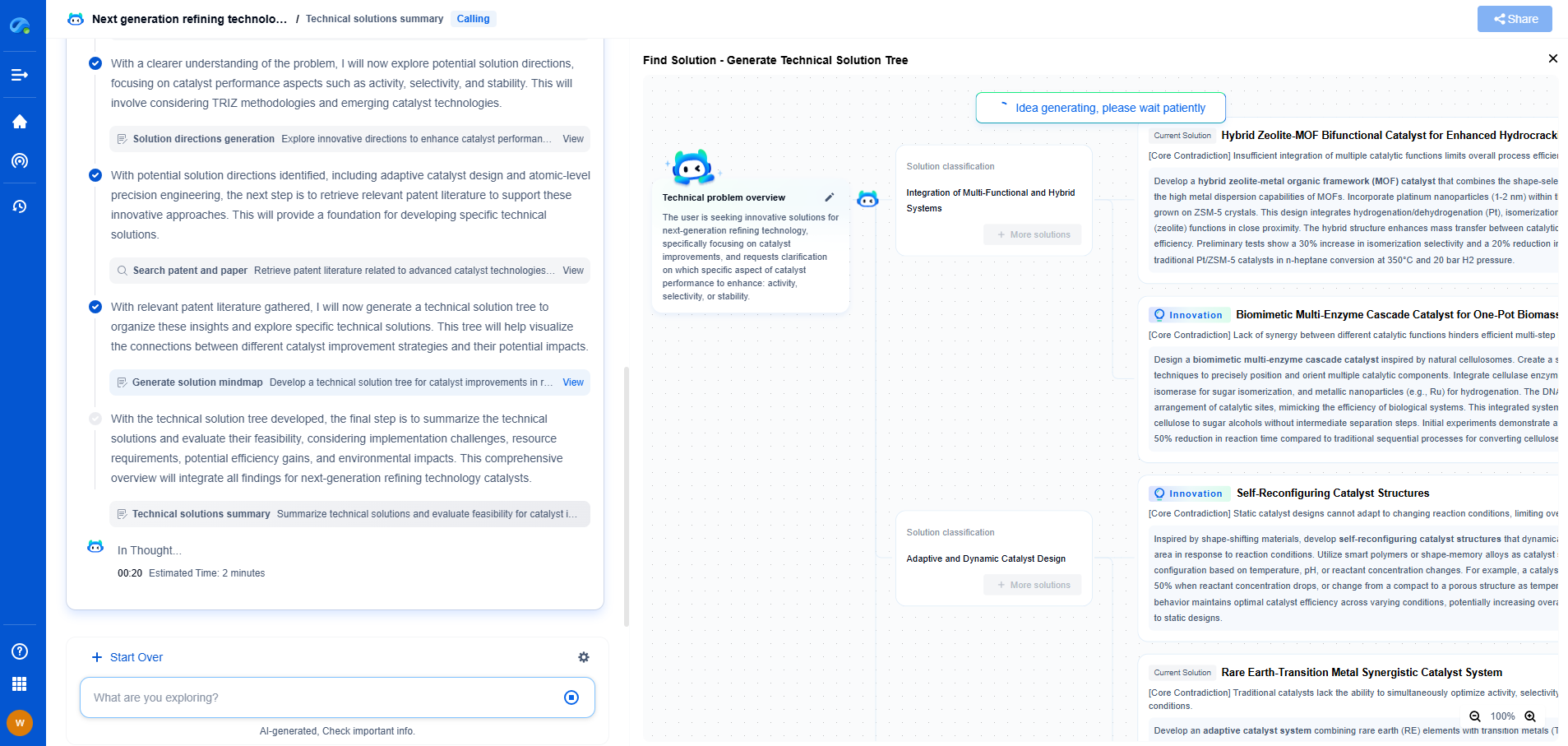

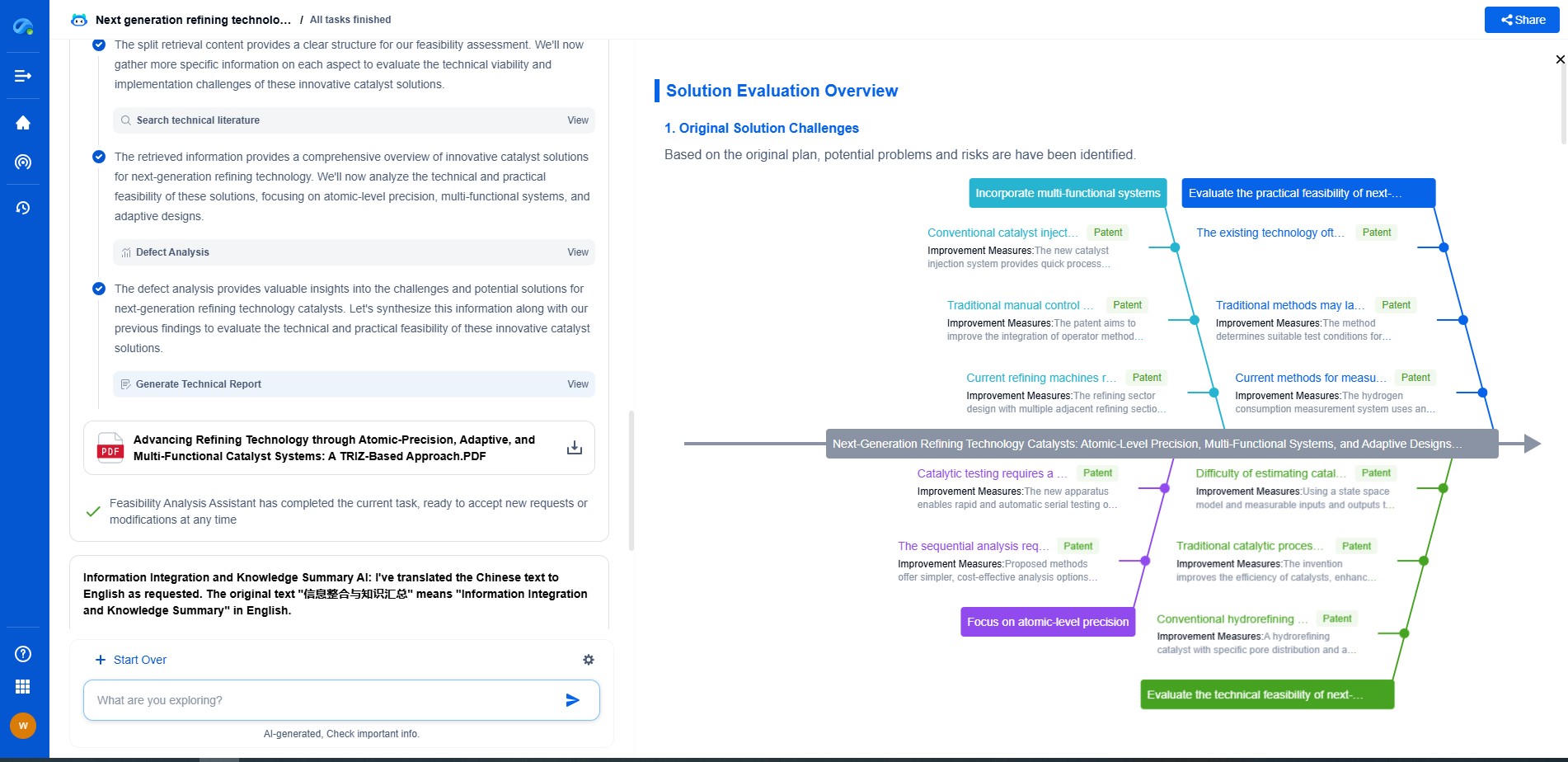



© 2025 PatSnap. All rights reserved.