What is sensor fusion in robotic systems?

JUN 26, 2025

Understanding Sensor Fusion

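Sensor fusion is the process of combining data from multiple sensors to produce information that is more consistent, accurate, and useful than what any individual sensor provides alone. In robotic systems, it involves integrating data from sensors such as cameras, lidar, radar, ultrasonic sensors, and inertial measurement units (IMUs). Each sensor type has its own strengths and weaknesses. A single camera captures rich color and texture but no direct depth. Lidar measures range precisely but is degraded by heavy rain or fog. Radar keeps working in poor weather and measures relative velocity, but its resolution is coarse. IMUs report motion at high rates but drift when their readings are integrated over time. Combined, these sensors cover each other's blind spots, which can significantly enhance a robot's perception and decision-making.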

Why Sensor Fusion Is Important in Robotics

Robots operate in complex environments where relying on a single type of sensor may lead to incomplete or skewed information. Sensor fusion addresses this issue by merging data from multiple sensors to create a comprehensive representation of the environment. This is crucial for several reasons:

1. **Increased Accuracy**: By integrating different types of data, sensor fusion reduces uncertainty and increases the accuracy of information about the surroundings.

2. **Robustness**: If one sensor fails or provides erroneous data, other sensors can compensate, thereby improving the reliability of the system.

3. **Enhanced Perception**: Sensor fusion helps robots identify and interpret objects, obstacles, and other key features of their environment with greater precision.

4. **Improved Decision-Making**: With better-quality information, robots can make more informed decisions, leading to improved performance and efficiency.
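The accuracy benefit can be made concrete with a textbook case: fusing two independent, unbiased measurements of the same quantity by inverse-variance weighting. The Python sketch below assumes Gaussian noise with known variances. The function name `fuse` and the sensor readings are hypothetical and illustrative, not real data.

```python
def fuse(z1: float, var1: float, z2: float, var2: float) -> tuple[float, float]:
    """Fuse two independent, unbiased measurements of the same quantity.

    Each measurement is weighted by the inverse of its variance, so the
    more precise sensor contributes more to the fused estimate.
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)  # always smaller than min(var1, var2)
    return fused, fused_var


# Hypothetical readings of the distance (m) to a wall from an ultrasonic
# sensor (variance 0.04 m^2) and a lidar (variance 0.01 m^2).
estimate, variance = fuse(2.10, 0.04, 2.00, 0.01)
print(f"fused distance = {estimate:.3f} m, variance = {variance:.4f} m^2")
```

With these numbers, the fused estimate leans toward the more precise lidar reading. Its variance (0.008 m²) is also lower than that of either sensor alone, which is the precise sense in which fusion "reduces uncertainty."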

Methodologies of Sensor Fusion

Several methodologies are used in sensor fusion, each with its own approach to combining data:

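1. **Kalman Filtering**: The Kalman filter is a recursive algorithm that estimates the state of a dynamic system, such as a robot's position and velocity, from a series of noisy measurements. Each cycle has two steps. The prediction step propagates the current estimate through a motion model, and the update step corrects that prediction with new sensor measurements, weighting each source by its uncertainty. The filter is optimal for linear systems with Gaussian noise, and nonlinear variants such as the extended and unscented Kalman filters are widely used for robot localization and navigation.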

2. **Bayesian Networks**: These probabilistic graphical models represent a set of variables and their conditional dependencies as a directed acyclic graph. In sensor fusion, they model how uncertain sensor readings relate to hidden quantities of interest. This lets evidence from several sensors be combined to infer, for example, whether an obstacle is present.

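3. **Particle Filters**: Also known as Sequential Monte Carlo methods, particle filters implement a recursive Bayesian filter by representing the probability distribution over the state with a set of weighted random samples (particles). Because they do not assume linear dynamics or Gaussian noise, they suit the nonlinear, non-Gaussian problems common in robotics. A typical example is localizing a robot on a map when its starting position is unknown.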

4. **Neural Networks and Deep Learning**: These are increasingly used for sensor fusion because they can process large volumes of high-dimensional data and learn complex patterns. They are particularly effective when the relationships between sensor streams are difficult to model explicitly, as when camera images are combined with lidar point clouds for object detection.
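The predict/correct cycle of Kalman filtering can be sketched in one dimension. This is a minimal sketch that assumes a scalar position state, a known velocity input from wheel odometry, and Gaussian noise with hand-picked variances `q` and `r`. The class name `ScalarKalman` and all numbers are hypothetical. Practical systems use the matrix form, or an EKF/UKF, over a multi-dimensional state.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """Minimal 1-D Kalman filter for a robot moving along a line (hypothetical).

    x: position estimate (m); p: variance of that estimate (m^2)
    q: process-noise variance added at each prediction step
    r: measurement-noise variance of the position sensor
    """
    x: float
    p: float
    q: float
    r: float

    def predict(self, velocity: float, dt: float) -> None:
        # Propagate the estimate through a constant-velocity motion model.
        # Uncertainty grows because the model is imperfect.
        self.x += velocity * dt
        self.p += self.q

    def update(self, z: float) -> None:
        # Correct the prediction with measurement z. The Kalman gain k
        # balances trust in the prediction (p) against the sensor (r).
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


# Hypothetical data: odometry reports 1.0 m/s, and a noisy position
# sensor is read once per second.
kf = ScalarKalman(x=0.0, p=1.0, q=0.05, r=0.5)
for z in [1.2, 1.9, 3.1, 4.0]:
    kf.predict(velocity=1.0, dt=1.0)
    kf.update(z)
    print(f"measured {z:.1f} m -> estimate {kf.x:.2f} m (variance {kf.p:.3f})")
```

After a few cycles, the estimate's variance falls well below the position sensor's own variance `r`. The filter is doing what fusion promises: the motion model and the measurements each constrain the errors of the other.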

Applications of Sensor Fusion in Robotics

Sensor fusion finds applications in various domains of robotics:

1. **Autonomous Vehicles**: In self-driving cars, sensor fusion is crucial for integrating data from cameras, lidar, and radar to accurately perceive the environment, detect obstacles, and navigate safely.

2. **Unmanned Aerial Vehicles (UAVs)**: Drones use sensor fusion to stabilize flight, navigate around obstacles, and perform tasks such as surveillance and mapping.

3. **Industrial Robotics**: In manufacturing environments, sensor fusion helps robots perform precise operations such as assembly, quality inspection, and inventory management.

4. **Healthcare Robotics**: Medical robots use sensor fusion for tasks like surgical navigation and rehabilitation, where precision and adaptability are critical.
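Flight stabilization on a UAV depends on estimating the vehicle's attitude from its IMU. The sketch below uses a complementary filter, a lightweight technique simpler than the Kalman filter, to fuse a gyroscope with an accelerometer for pitch. It is an illustration only, not what any particular drone uses. It assumes one common accelerometer axis convention (real IMUs differ), a blend factor `alpha = 0.98`, and a hypothetical scenario with a biased gyro. The function names are hypothetical.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (rad) implied by gravity; valid only when gravity is the sole acceleration sensed.

    Assumes an axis convention where a nose-up pitch theta gives
    ax = -g*sin(theta) and az = g*cos(theta).
    """
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(pitch: float, gyro_rate: float,
                         accel: tuple[float, float, float],
                         dt: float, alpha: float = 0.98) -> float:
    """One step of a complementary filter for pitch.

    The integrated gyro rate is trusted at high frequency (smooth, but drifts);
    the accelerometer tilt is trusted at low frequency (noisy, but drift-free).
    """
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(*accel)


# Hypothetical scenario: the drone is held at a constant 0.1 rad pitch for
# 10 s, and its gyro has an uncorrected bias of 0.01 rad/s.
G = 9.81
TRUE_PITCH = 0.1
GYRO_BIAS = 0.01
DT = 0.01

fused = 0.0              # the filter starts with no knowledge of the pitch
gyro_only = TRUE_PITCH   # pure gyro integration starts from the correct value
accel = (-G * math.sin(TRUE_PITCH), 0.0, G * math.cos(TRUE_PITCH))
true_rate = 0.0          # the vehicle is not rotating
for _ in range(1000):
    gyro_rate = true_rate + GYRO_BIAS  # the sensor reports only its bias
    gyro_only += gyro_rate * DT
    fused = complementary_filter(fused, gyro_rate, accel, DT)

print(f"gyro only: {gyro_only:.3f} rad, fused: {fused:.3f} rad")
```

In this run, the gyro-only estimate drifts to about twice the true angle. The fused estimate settles within about 0.005 rad of it, because the accelerometer term continually pulls the integrated gyro back toward the gravity reference.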

The Future of Sensor Fusion in Robotics

As robotics technology advances, the role of sensor fusion is expected to grow even more significant. The development of more sophisticated algorithms and the integration of artificial intelligence will enhance the capability of sensor fusion systems. Future advancements may include:

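1. **Real-Time Processing**: Faster processors and more efficient algorithms will let increasingly complex fusion pipelines, such as those combining high-resolution cameras and lidar, run in real time. Robots will then be able to react more quickly to changes in their environment.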

2. **Scalability**: As robots are deployed in more diverse environments, sensor fusion systems will need to scale and adapt to new sensors and data types.

3. **Enhanced Learning Capabilities**: With advancements in machine learning, future sensor fusion systems will be able to learn from past experiences, improving their performance over time.

In conclusion, sensor fusion is a cornerstone of modern robotic systems, providing the foundation for enhanced perception, decision-making, and autonomy. As technology progresses, it will continue to evolve, offering exciting possibilities for the future of robotics.

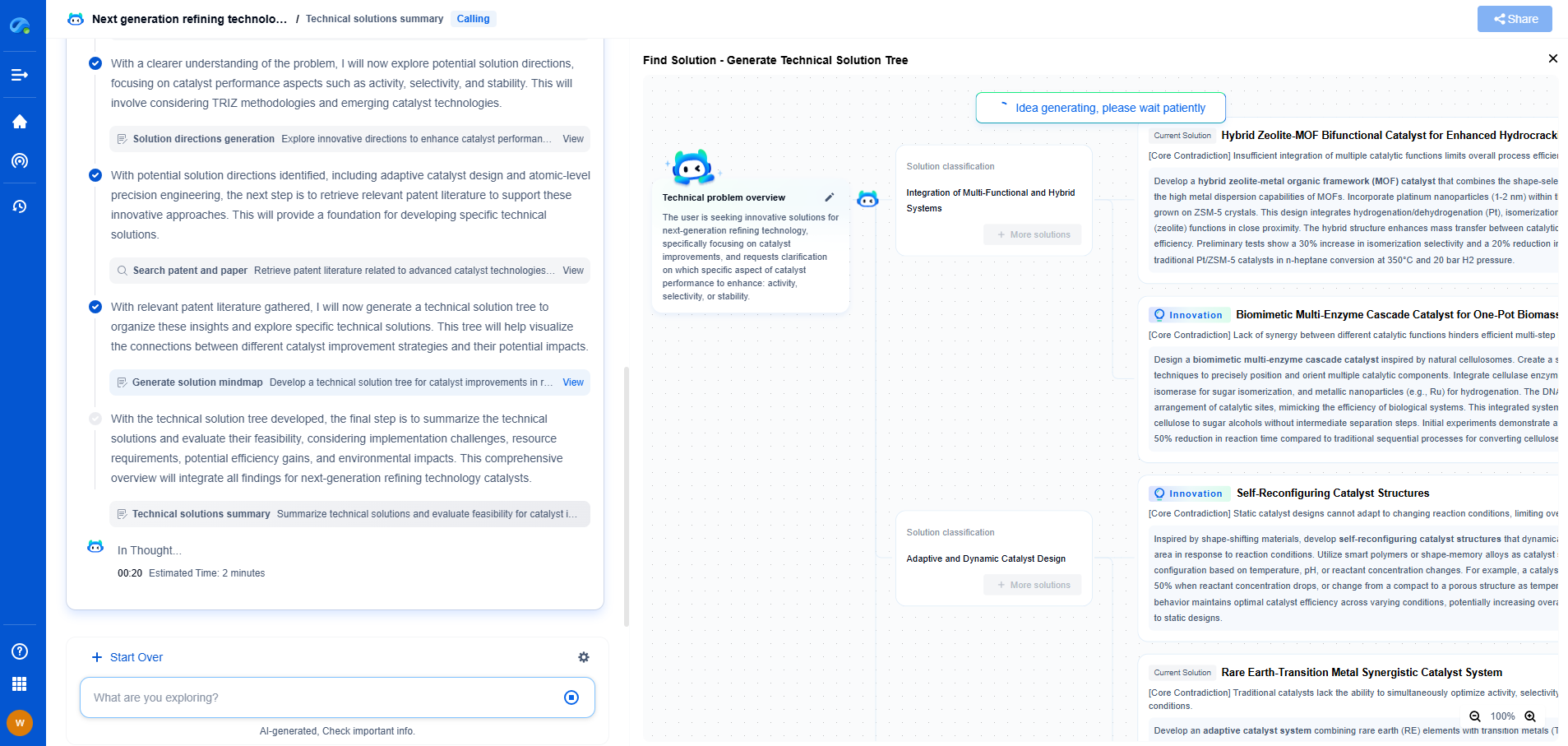

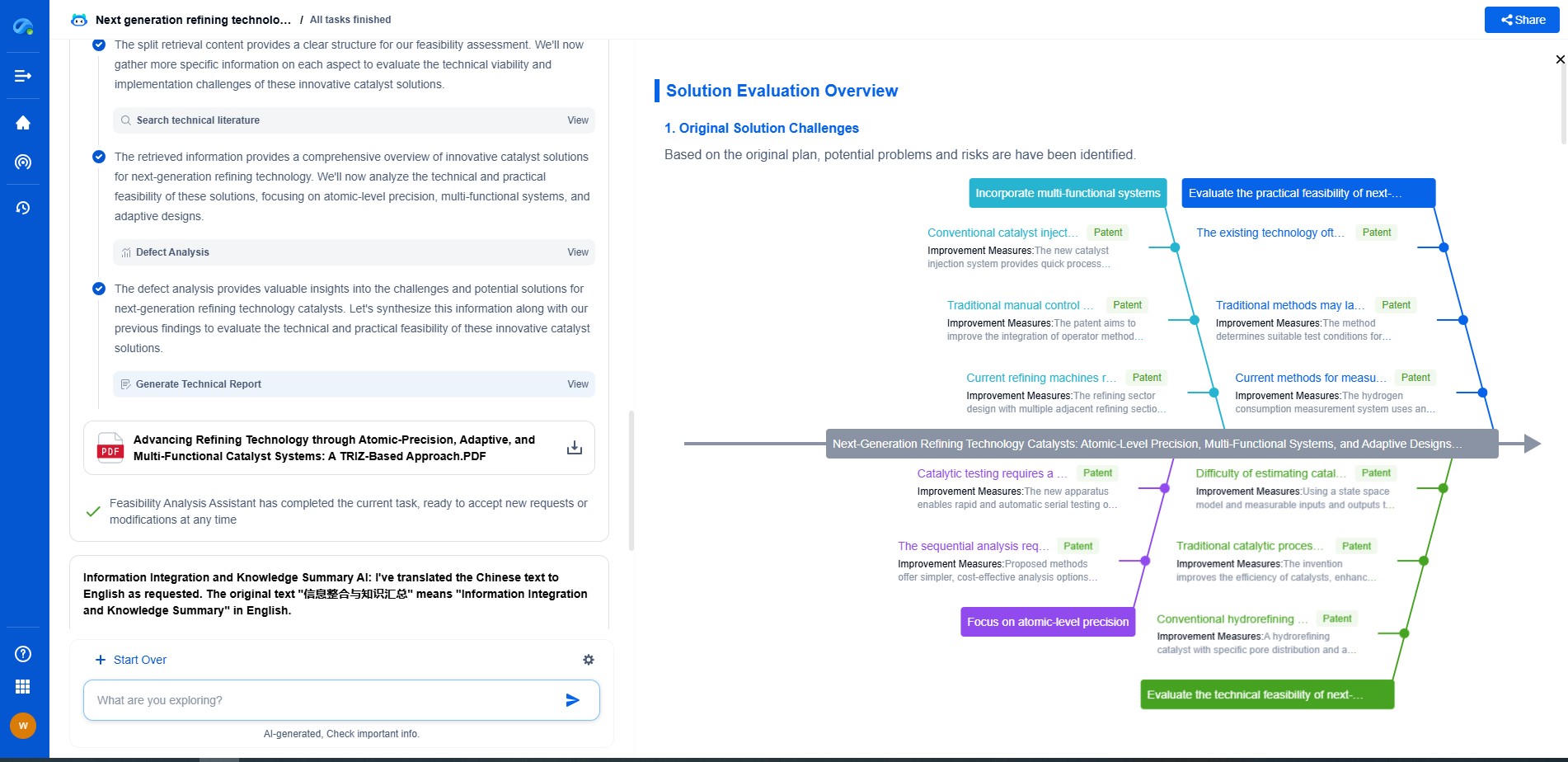



© 2025 PatSnap. All rights reserved.