Data centers are becoming the central engine of global economic and social development, at unprecedented speed and scale. If the PC and smartphone eras defined the last golden decade of the semiconductor industry, then data centers, driven by artificial intelligence (AI), cloud computing, and hyperscale infrastructure, are opening a new one.

This is not a gradual evolution but a disruptive transformation. Data center chip demand is rapidly shifting from simple processors and memory toward a complex, full-spectrum ecosystem encompassing compute, storage, interconnect, and power. This wave of demand is propelling the data center semiconductor market toward the trillion-dollar scale at record speed.

The AI Boom: An Arms Race in Data Centers

Artificial intelligence, and generative AI in particular, is the most powerful catalyst of this transformation. Industry forecasts indicate that AI-related capital expenditures have already surpassed non-AI spending and now account for nearly 75% of data center investment. In 2025, AI-related spending is expected to exceed $450 billion. AI servers are expanding rapidly, growing from only a few percent of compute servers in 2020 to more than 10% by 2024.

Driven by foundation model training, inference, and custom chip innovation, global technology giants are locked in an intense “computing arms race.” Companies such as Microsoft, Google, and Meta are investing tens of billions of dollars annually, while smaller players are quickly following suit, recognizing that future competitive advantage will depend directly on infrastructure scale and chip-level differentiation.

This explosive growth is driving unprecedented semiconductor demand. According to Yole Group, growth in the data center semiconductor market began accelerating in 2024, and the market is expected to reach $493 billion by 2030. By then, Yole projects that data center semiconductors will account for more than 50% of the entire semiconductor market. The segment’s compound annual growth rate (2025–2030) is projected to be nearly double that of the overall semiconductor industry, reflecting the profound shift driven by AI, cloud computing, and hyperscale infrastructure.

The Semiconductor Renaissance

GPU vs. ASIC Race: GPUs will remain the dominant force, growing fastest due to the complexity and rising compute demands of AI-intensive workloads. NVIDIA, with its powerful GPU ecosystem, is transforming from a traditional chip design company into a full-stack AI and data center solutions provider. Its flagship Blackwell GPU, built with TSMC’s advanced 4nm process, continues to dominate this field.

To counter NVIDIA’s market dominance, hyperscalers such as AWS, Google, and Microsoft are developing proprietary AI accelerators (e.g., AWS’s Trainium and Inferentia, Google’s TPU) and innovating in custom storage and networking hardware. These developments are intensifying competition in AI chips, particularly in training and inference, where performance differentiation is becoming central to enterprise competitiveness.

This dual-track race of GPUs and ASICs is emerging as the dominant paradigm in data center computing.

HBM – Breaking the Bandwidth Bottleneck: With AI model sizes growing exponentially, memory bandwidth has become one of the greatest bottlenecks in scaling compute power. High Bandwidth Memory (HBM) uses 3D stacking to place multiple DRAM dies on a very wide interface, which significantly boosts bandwidth and capacity, and it has become standard in AI and high-performance computing (HPC) servers.
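To make the bandwidth argument concrete, the sketch below computes peak bandwidth as interface width times per-pin data rate. The specific numbers (a 1024-bit HBM3-class interface at 6.4 Gb/s per pin, compared with a 64-bit channel at the same pin rate) are illustrative assumptions and do not come from the figures cited in this article.

```python
def stack_bandwidth_gb_s(interface_bits: int, gbit_per_s_per_pin: float) -> float:
    """Peak bandwidth in GB/s: bits moved per second across the bus, divided by 8."""
    return interface_bits * gbit_per_s_per_pin / 8


# Assumed, illustrative values (not taken from the article):
# a 1024-bit HBM3-class stack versus a 64-bit memory channel, same per-pin rate.
hbm_stack = stack_bandwidth_gb_s(1024, 6.4)
narrow_channel = stack_bandwidth_gb_s(64, 6.4)

print(f"HBM-class stack: {hbm_stack:.1f} GB/s")
print(f"64-bit channel:  {narrow_channel:.1f} GB/s")
print(f"Width advantage: {hbm_stack / narrow_channel:.0f}x")
```

The point of the comparison is that the gain comes from interface width, which 3D stacking and short in-package connections make practical, rather than from faster individual pins.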

According to Archive Market Research, the HBM market is experiencing explosive growth and is projected to reach $3.816 billion in 2025, with a compound annual growth rate of 68.2% over 2025–2033. HBM’s rapid expansion is becoming a powerful new growth driver in the memory semiconductor market.
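As a rough illustration of what a 68.2% compound annual growth rate implies, the sketch below compounds the cited 2025 base forward. The later-year values are a mechanical extrapolation of the two quoted numbers, not figures published by the research firm, and the function name `project` is only an illustrative choice.

```python
def project(base_value: float, cagr: float, years: int) -> float:
    """Compound a base value forward: base * (1 + cagr) ** years."""
    return base_value * (1 + cagr) ** years


BASE_YEAR = 2025
BASE_VALUE_BN = 3.816  # USD billions, as cited above
CAGR = 0.682           # 68.2% per year, as cited above

# Extrapolated values only; these are not forecasts from the source.
for year in range(BASE_YEAR, 2034, 2):
    value = project(BASE_VALUE_BN, CAGR, year - BASE_YEAR)
    print(f"{year}: ~${value:,.1f}B")
```

At this rate the market would multiply by roughly 64 over eight years, which shows how aggressive the quoted growth assumption is.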

Key trends in HBM for AI chipsets include:

·Larger single stacks exceeding 8GB, driven by complex AI models’ need for faster data throughput.

·Energy efficiency innovations in low-power HBM designs.

·Increasing integration of HBM directly into AI accelerators, minimizing latency and boosting performance.

·Standardized interfaces accelerating system integration and time-to-market.

·Advanced packaging such as Through-Silicon Via (TSV) enabling dense, efficient HBM stacks.

·Rising demand for cost-effective HBM solutions in edge AI, embedded systems, and mobile devices.

Global leaders in the HBM market include SK hynix, Samsung, and Micron Technology. SK hynix and Samsung together account for over 90% of global HBM supply. Micron has become the first U.S. company to mass-produce HBM3E, with its products already deployed in NVIDIA’s H200 GPU.

DPUs and Network ASICs: In the AI era of massive data flows, efficient interconnects are critical. Data Processing Units (DPUs) and high-performance network ASICs are emerging to offload networking tasks from CPUs and GPUs, optimize traffic management, and free up more compute resources. DPUs also provide advantages in security, scalability, energy efficiency, and long-term cost-effectiveness, making them indispensable for the future of data center infrastructure.

Disruptive Technologies: Opening a New Chapter in the Post-Moore Era

If AI is the driving force behind the surging demand for data center chips, then a series of disruptive technologies is redefining the performance, efficiency, and sustainability of data centers from the ground up.

Silicon Photonics and CPO

Data transmission inside data centers is rapidly shifting from traditional copper cables to optical interconnects. Silicon Photonics, especially Co-Packaged Optics (CPO), is emerging as the key solution to overcome high-speed, low-power interconnect challenges. CPO integrates optical engines directly within the package of compute chips (CPU/GPU/ASIC), drastically shortening electrical signal paths, reducing latency, and significantly improving energy efficiency.

Industry leaders such as Marvell, NVIDIA, and Broadcom are actively investing in this space, with market revenues expected to reach tens of billions of dollars by 2030. In January 2025, Marvell announced a CPO architecture tailored for custom AI accelerators. By embedding CPO devices within XPUs, system density can scale from tens of XPUs within a single rack to hundreds across multiple racks, substantially boosting AI server performance.

Another breakthrough is the Thin-Film Lithium Niobate (TFLN) Modulator, which combines the high-speed performance of lithium niobate with the scalability of silicon photonics. TFLN modulators deliver ultra-high bandwidth (>70GHz), very low insertion loss (<2dB), compact size, low drive voltage (<2V), and CMOS process compatibility, making them ideal for 200Gbps-per-channel modulation and advanced data center interconnects.

CPO directly addresses the “electrical I/O bottleneck” limiting pluggable optics, representing a leap in power and bandwidth efficiency. Just as HBM overcame the “memory wall,” CPO is breaking through the “electrical wall,” enabling higher-density, longer-distance XPU-to-XPU connections. This allows more flexible disaggregation of compute resources, reshaping data center architectures.

Advanced Packaging

CPO is just one facet of advanced packaging. Technologies such as 3D stacking and chiplets enable heterogeneous integration of compute, memory, and I/O dies on a single substrate. This “Lego-style” design approach not only pushes beyond the physical limits of Moore’s Law but also provides unprecedented flexibility for customized chips.

Next-Generation Data Center Design: Efficiency Maximization

DC Power Distribution

With AI workloads driving explosive compute demand, power density per rack is soaring. Traditional AC supply is becoming unsustainable: rack power has risen from ~20kW historically to 36kW in 2023, with projections of 50kW by 2027. NVIDIA has even proposed 600kW rack architectures, making multiple AC-DC conversions untenable.

As a result, data centers are shifting to direct DC distribution at higher voltages, eliminating redundant conversions and reducing losses. For example, delivering 600kW at 48V requires 12,500A, which is impractical under traditional designs. Here, Wide-Bandgap (WBG) semiconductors such as GaN and SiC play a critical role, offering higher breakdown voltage, lower switching losses, and, in the case of GaN, high electron mobility. These enable compact, high-density power systems with reduced cooling needs, directly tackling the “energy wall.” NVIDIA is already deploying STMicroelectronics’ GaN/SiC power technology in its AI racks to cut cabling bulk and boost efficiency.
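The 12,500A figure follows directly from I = P / V. The sketch below reproduces it and shows how the current, and the resistive (I²R) loss for a fixed conductor, fall as bus voltage rises. The 400V and 800V values are hypothetical comparison points chosen for illustration; the article itself only cites the 48V case.

```python
def bus_current_amps(power_w: float, voltage_v: float) -> float:
    """Current needed to deliver a given power at a given DC bus voltage (I = P / V)."""
    return power_w / voltage_v


def relative_conduction_loss(voltage_v: float, reference_voltage_v: float) -> float:
    """I^2 * R loss relative to the reference voltage, for equal power and equal resistance."""
    return (reference_voltage_v / voltage_v) ** 2


RACK_POWER_W = 600_000  # 600kW rack, as in the text

# 48V is the case from the text; 400V and 800V are hypothetical comparison points.
for volts in (48, 400, 800):
    amps = bus_current_amps(RACK_POWER_W, volts)
    loss = relative_conduction_loss(volts, 48)
    print(f"{volts:>4} V: {amps:>8,.0f} A, conduction loss = {loss:.4f}x the 48V case")
```

Because loss scales with the square of the current, raising the distribution voltage shrinks both the copper cross-section needed and the heat generated in it, which is where high-voltage GaN and SiC converters come in.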

Liquid Cooling

Cooling has become the second-largest capital expenditure after power infrastructure and the largest non-IT operating expense. With AI/HPC workloads, traditional air cooling is no longer sufficient. The liquid cooling market is forecast to exceed $61B by 2029 (CAGR 14%). Liquids such as water can absorb thousands of times more heat per unit volume than air, enabling denser, more efficient systems. Liquid cooling can reduce cooling energy consumption by up to 90%, push Power Usage Effectiveness (PUE) to ~1.05, shrink facility footprints by 60%, and cut noise dramatically.
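PUE is the ratio of total facility power to IT power, so a PUE of 1.05 means about 5% overhead on top of the IT load. The sketch below works this through for an assumed 1,000kW IT load and compares it with a hypothetical PUE of 1.5; both the load and the 1.5 baseline are illustrative assumptions, not figures from the article.

```python
def facility_power_kw(it_power_kw: float, pue: float) -> float:
    """Total facility power implied by a PUE value (PUE = total power / IT power)."""
    return it_power_kw * pue


def overhead_kw(it_power_kw: float, pue: float) -> float:
    """Non-IT power (cooling, power conversion, lighting, ...) implied by a PUE value."""
    return it_power_kw * (pue - 1.0)


IT_LOAD_KW = 1_000  # hypothetical IT load

# 1.05 is the value cited in the text; 1.5 is a hypothetical baseline for comparison.
for label, pue in (("baseline (assumed)", 1.5), ("liquid-cooled (cited)", 1.05)):
    total = facility_power_kw(IT_LOAD_KW, pue)
    extra = overhead_kw(IT_LOAD_KW, pue)
    print(f"{label:<22} PUE {pue:.2f}: total {total:,.0f} kW, overhead {extra:,.0f} kW")
```

Note that the overhead covers every non-IT load, not only cooling, so the difference between the two rows should not be read as a pure cooling saving.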

Leading AI systems, such as NVIDIA’s GB200 NVL72, are explicitly designed for Direct-to-Chip (DTC) liquid cooling. Hyperscalers including Google, Meta, Microsoft, and AWS are rapidly adopting DTC as well.

Liquid cooling comes in several forms:

·Direct-to-Chip (DTC): Cold plates contact chips directly (single-phase or two-phase, the latter using phase change for greater efficiency).

·Rear Door Heat Exchanger (RDHx): Enhances cooling capacity without modifying IT hardware.

·Immersion Cooling: Entire components are submerged in dielectric fluids (single- or two-phase), offering the highest heat transfer efficiency for extreme density.

To ensure reliability, sensor networks are essential:

·Temperature sensors monitor coolant inlet/outlet and rack temperatures.

·Pressure sensors detect leaks/blockages and ensure optimal flow.

·Flow meters (e.g., ultrasonic) provide real-time efficiency and safety data.

·Coolant quality sensors (humidity, conductivity) track degradation and contamination in dielectric fluids.
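The sensor roles listed above can be sketched as a simple threshold check over one set of loop readings. Everything in this example is hypothetical: the field names, the `Limits` defaults, and the alert wording are placeholders for illustration, and real limits depend on the coolant, the hardware, and the vendor's specifications.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LoopReading:
    """One snapshot of a cooling loop (hypothetical fields and units)."""
    inlet_temp_c: float
    outlet_temp_c: float
    pressure_kpa: float
    flow_l_per_min: float
    conductivity_us_per_cm: float


@dataclass(frozen=True)
class Limits:
    """Placeholder thresholds; real values come from the system's specifications."""
    max_outlet_temp_c: float = 60.0
    min_pressure_kpa: float = 150.0
    max_pressure_kpa: float = 400.0
    min_flow_l_per_min: float = 20.0
    max_conductivity_us_per_cm: float = 5.0


def check_loop(reading: LoopReading, limits: Limits = Limits()) -> List[str]:
    """Return a list of alert strings; an empty list means all checks passed."""
    alerts = []
    if reading.outlet_temp_c > limits.max_outlet_temp_c:
        alerts.append("outlet temperature high")
    if reading.pressure_kpa < limits.min_pressure_kpa:
        alerts.append("pressure low (possible leak)")
    if reading.pressure_kpa > limits.max_pressure_kpa:
        alerts.append("pressure high (possible blockage)")
    if reading.flow_l_per_min < limits.min_flow_l_per_min:
        alerts.append("flow low")
    if reading.conductivity_us_per_cm > limits.max_conductivity_us_per_cm:
        alerts.append("coolant conductivity high (possible contamination)")
    return alerts


healthy = LoopReading(30.0, 45.0, 250.0, 35.0, 1.2)
leaking = LoopReading(30.0, 63.0, 120.0, 12.0, 1.2)
print(check_loop(healthy))
print(check_loop(leaking))
```

A production system would add trend analysis and sensor cross-checks, but even this form shows why each sensor type maps to a distinct failure mode.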

The industry is at a “thermal tipping point”: air cooling is obsolete for high-density AI, making liquid cooling not optional but mandatory. Data center design must adapt, from reinforced flooring and redesigned power systems to real estate selection based on power density rather than land footprint.

Advanced thermal management also includes software-driven DTM (Dynamic Thermal Management) and AI model optimization (quantization, pruning) to reduce heat loads.
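As one example of the model-side optimizations mentioned above, the sketch below shows symmetric int8 quantization of a small weight list in plain Python. It is a minimal illustration of the general technique, not the method of any particular framework; the function names and sample weights are hypothetical. Storing weights in 8 bits instead of 32 reduces the data moved per inference, which is one way such optimizations can lower power draw and heat.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]


weights = [0.42, -1.3, 0.0, 0.87, -0.05]  # hypothetical sample weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 values:", q)
print(f"scale: {scale:.6f}, max round-trip error: {max_error:.6f}")
```

The round-trip error is bounded by half the scale step, which is the accuracy cost traded for the smaller representation.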

Conclusion

Future data centers will be increasingly heterogeneous, specialized, and energy-efficient. Chip design will move beyond CPUs/GPUs toward domain-specific processors. Advanced packaging (HBM, CPO) will drive performance, while DC power, liquid cooling, and intelligent sensing will enable green, high-efficiency digital infrastructure.

This AI-driven silicon revolution demands continuous innovation and cross-industry collaboration across the semiconductor value chain to shape the digital future of the AI era.

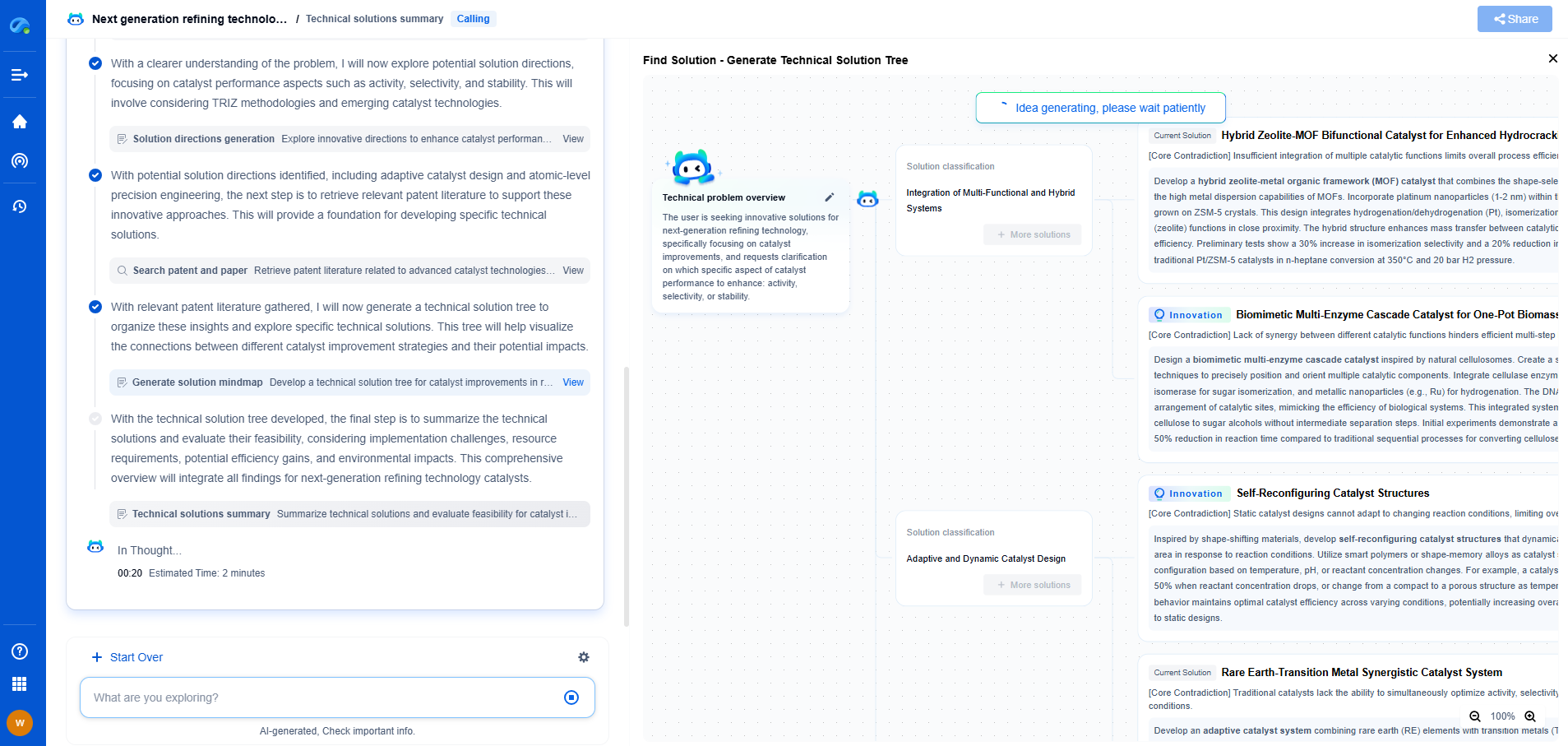

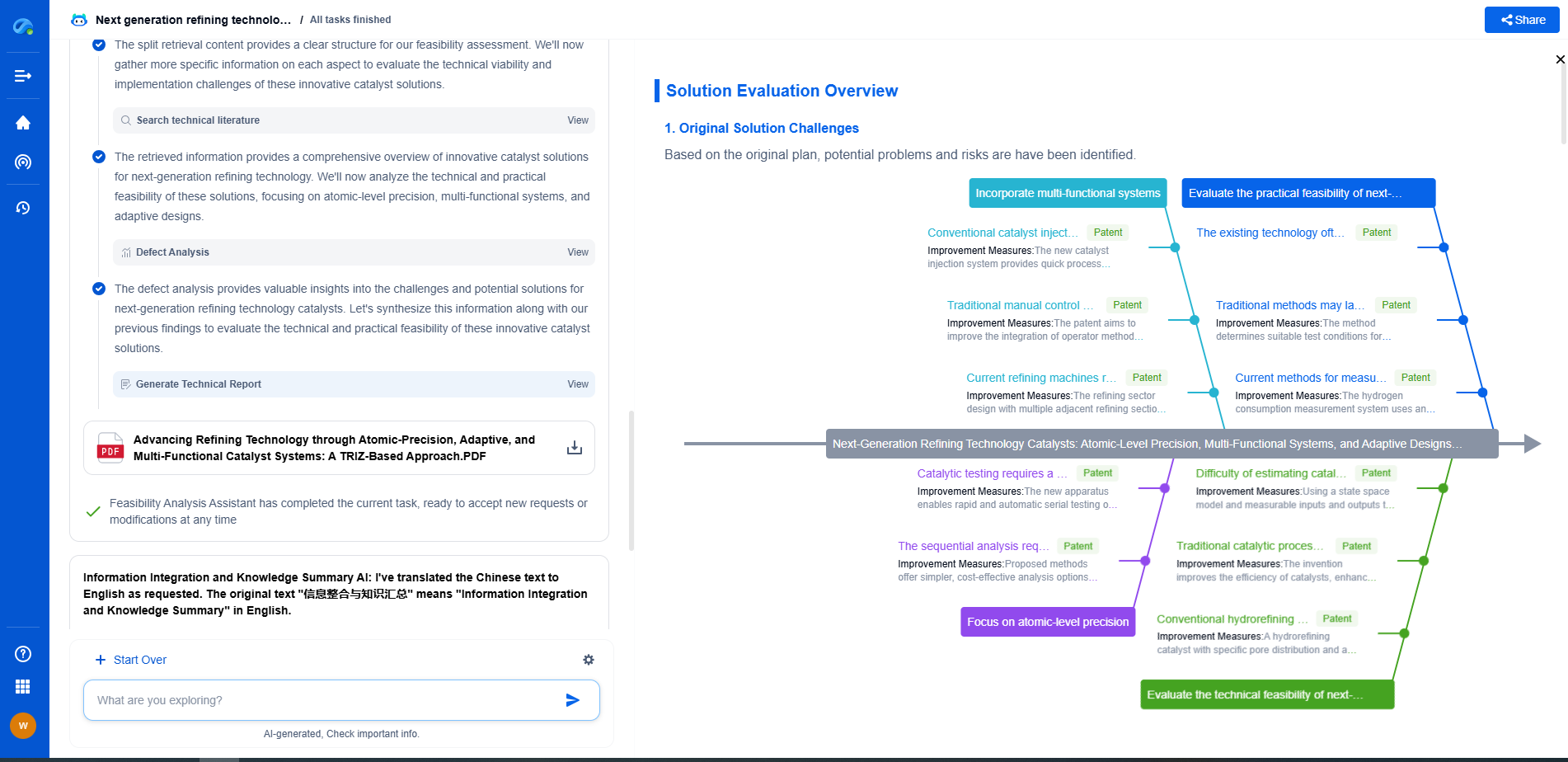
