In the High Bandwidth Memory (HBM) market, SK hynix and Samsung are the undisputed leaders, jointly holding more than 90% of the global market share in 2024 (SK hynix: 54%, Samsung: 39%), while Micron plays the role of a challenger with a 7% share.

SK hynix: Driving Performance and Efficiency

As power consumption becomes a critical limiting factor, HBM is evolving toward a balance of performance and efficiency. SK hynix believes HBM can significantly improve AI performance and quality, with bandwidth growing faster than that of traditional memory, helping to break through the so-called “memory wall,” the widening gap between processor speed and memory bandwidth.

SK hynix positions HBM as near-memory, meaning it sits closer to compute cores (CPU/GPU) than conventional DRAM, enabling higher bandwidth and faster responsiveness. Its technological advantage stems from three structural innovations:

·3D TSV (through-silicon via) stacking for higher capacity,

·wide-channel parallel transfer for ultra-high bandwidth,

·lower energy per bit than conventional DRAM, for higher efficiency.

These attributes make HBM essential for boosting system performance in high-throughput applications such as AI. Across successive generations, from HBM2E through HBM3 and HBM3E to HBM4, SK hynix has consistently improved both bandwidth and efficiency. Notably, SK hynix puts HBM4's bandwidth at 200% of HBM3E's, roughly a doubling in line with the interface widening from 1,024 to 2,048 bits and a dramatic generational leap.

SK hynix has already shipped the world’s first HBM4 samples, which it says deliver an overall advantage of about 60% across cost-per-bandwidth, power, and thermal metrics compared with previous generations. HBM4 offers up to 36 GB of capacity, enough for high-precision large language models (LLMs), and more than 2 TB/s of bandwidth, enough to move over 400 full-HD movies (5 GB each) in one second. It also achieves about 40% lower power per bit than HBM3E and about 4% better thermal performance.
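As a sanity check on the movie comparison, dividing the quoted bandwidth by a 5 GB file size shows how many full-HD movies 2 TB/s can move per second. This minimal sketch assumes decimal units (1 TB = 1,000 GB) and treats "over 2 TB/s" as exactly 2 TB/s.

```python
# Sanity check of the "movies per second" comparison for HBM4.
# Assumes decimal units (1 TB/s = 1,000 GB/s), as is usual for bandwidth figures.

HBM4_BANDWIDTH_TB_S = 2.0   # "over 2 TB/s", treated here as exactly 2 TB/s
MOVIE_SIZE_GB = 5.0         # one full-HD movie, per the text

def files_per_second(bandwidth_tb_s: float, file_size_gb: float) -> float:
    """Return how many files of the given size the bandwidth can move per second."""
    return bandwidth_tb_s * 1_000 / file_size_gb

if __name__ == "__main__":
    print(f"{files_per_second(HBM4_BANDWIDTH_TB_S, MOVIE_SIZE_GB):.0f} movies per second")
```

At 2 TB/s this comes to 400 five-gigabyte movies per second, so a single movie takes only a few milliseconds.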

Samsung: Step-by-Step Progression

Samsung also regards HBM as near-memory and has mapped out its evolutionary roadmap from 2018 through 2027:

·HBM2 (2018): 307 GB/s

·HBM2E (2020): 461 GB/s

·HBM3 (2022): 819 GB/s

·HBM3E (2024): 1.17 TB/s

·HBM4 (2026, projected): 2.048 TB/s

·HBM4E (2027, projected): even greater capacity, bandwidth, and controllability.
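Each per-stack figure in this roadmap follows from interface width multiplied by per-pin data rate, divided by 8 bits per byte. The sketch below reproduces the list under stated assumptions: a 1,024-bit interface through HBM3E, a 2,048-bit interface for HBM4, and per-pin rates of 2.4, 3.6, 6.4, 9.2, and 8.0 Gb/s. These pin rates are our assumptions, not numbers from Samsung's roadmap; with them, HBM3E comes out near 1.18 TB/s, close to the 1.17 TB/s quoted.

```python
# Per-stack HBM bandwidth = interface width (bits) x per-pin rate (Gb/s) / 8 bits per byte.
# The pin rates below are ASSUMPTIONS chosen to match the roadmap figures in the text;
# they are not taken from Samsung's roadmap itself.

GENERATIONS = {
    # name: (interface width in bits, assumed per-pin data rate in Gb/s)
    "HBM2": (1024, 2.4),
    "HBM2E": (1024, 3.6),
    "HBM3": (1024, 6.4),
    "HBM3E": (1024, 9.2),
    "HBM4": (2048, 8.0),
}

def stack_bandwidth_gb_s(width_bits: int, pin_rate_gb_s: float) -> float:
    """Peak bandwidth of one HBM stack in GB/s (decimal units)."""
    return width_bits * pin_rate_gb_s / 8

if __name__ == "__main__":
    for name, (width, rate) in GENERATIONS.items():
        print(f"{name:6s} {stack_bandwidth_gb_s(width, rate):7.1f} GB/s")
```

Under these assumptions, HBM4's jump comes from doubling the interface width rather than from a faster pin rate.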

As capacity and bandwidth increase from HBM2 to HBM4E, die size has grown and power consumption has risen from 12 W to 42.5 W, posing significant challenges for thermal design. Energy efficiency, however, has improved steadily: energy per bit fell from 6.25 pJ/bit (HBM2) to 4.05 pJ/bit (HBM3E). Samsung’s 2024 HBM3E, for example, kept the same die size as HBM3 (121.0 mm²) while lowering energy per bit from 4.12 to 4.05 pJ/bit. Gains like this matter for data centers and AI, where power consumption directly drives operating costs and cooling requirements.
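To put the efficiency figures in proportion, the relative reduction in energy per bit can be computed directly from the pJ/bit values quoted above. This is plain arithmetic on Samsung's stated numbers; it does not model total stack power, which also depends on capacity, die size, and operating conditions.

```python
# Relative reduction in energy per bit, using the pJ/bit figures quoted in the text.

def relative_reduction(old: float, new: float) -> float:
    """Fractional reduction going from `old` to `new` (0.25 means 25% lower)."""
    return (old - new) / old

ENERGY_PJ_PER_BIT = {"HBM2": 6.25, "HBM3": 4.12, "HBM3E": 4.05}

if __name__ == "__main__":
    for old, new in [("HBM2", "HBM3E"), ("HBM3", "HBM3E")]:
        cut = relative_reduction(ENERGY_PJ_PER_BIT[old], ENERGY_PJ_PER_BIT[new])
        print(f"{old} -> {new}: {cut:.1%} less energy per bit")
```

The HBM2-to-HBM3E improvement works out to about 35%, while the HBM3-to-HBM3E step at the same 121.0 mm² die size is a more modest 1.7%.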

Samsung views HBM4 and HBM4E as next-generation milestones. A key shift is moving the base die from a conventional DRAM process to a FinFET-based logic process, which Samsung says delivers three benefits:

·Up to 200% performance boost,

·Up to 70% area reduction,

·Up to 50% power reduction.

Samsung also highlighted its Hybrid Copper Bonding (HCB) technology for HBM stacking. Unlike conventional micro-bump-based thermo-compression bonding (TCB), HCB forms direct, bumpless copper-to-copper connections between the stacked dies. Compared with TCB, HCB offers:

·33% higher stacking capability, supporting greater total capacity within the same footprint,

·20% lower thermal resistance, thanks to shorter, more efficient heat conduction paths, enabling better cooling for higher power operation.

Micron: Can the Latecomer Catch Up?

Micron, by contrast, only briefly mentioned HBM4, focusing more on other products such as G9 QLC/TLC NAND and PCIe 6 SSDs.

In the HBM race, Micron may have entered later than its peers, but it has moved boldly and steadily. Rather than launching an HBM3 product, Micron skipped that generation and went straight to HBM3E, becoming a key supplier for NVIDIA’s H200 GPUs. Micron is now accelerating its HBM roadmap, with HBM4 planned for 2026.

Micron’s HBM4 features a 12-layer memory die stack. Compared with the 24 GB and 36 GB capacity options of HBM3E, HBM4 will be offered primarily at 36 GB. Bandwidth is expected to see a significant leap from HBM3E’s 1.2 TB/s to over 2 TB/s, supported by a 2048-bit ultra-wide interface. Power efficiency is projected to improve by more than 20% over the previous generation.
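The capacity and bandwidth figures can be cross-checked with two small formulas: stack capacity equals the number of layers times per-die density, and the per-pin rate needed for a target bandwidth equals bandwidth × 8 / interface width. The 24 Gb per-die density used here is an assumption (it is what makes 8-high and 12-high stacks come out at 24 GB and 36 GB); the text does not state Micron's die density.

```python
# Cross-checks for the HBM4 figures quoted above.
# ASSUMPTION: 24 Gb (3 GB) per DRAM die; the text does not state Micron's die density.

DIE_DENSITY_GBIT = 24

def stack_capacity_gb(layers: int, die_density_gbit: int = DIE_DENSITY_GBIT) -> float:
    """Stack capacity in GB = layers x per-die density (Gb) / 8."""
    return layers * die_density_gbit / 8

def required_pin_rate_gb_s(bandwidth_tb_s: float, width_bits: int) -> float:
    """Per-pin rate (Gb/s) needed to reach a target bandwidth on a given interface width."""
    return bandwidth_tb_s * 1_000 * 8 / width_bits

if __name__ == "__main__":
    print(stack_capacity_gb(8), stack_capacity_gb(12))   # 8-high and 12-high stacks
    print(f"{required_pin_rate_gb_s(2.0, 2048):.2f} Gb/s per pin for 2 TB/s")
```

In other words, a 2,048-bit interface needs a little under 8 Gb/s per pin to reach 2 TB/s.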

As for HBM4E, Micron has not disclosed a detailed roadmap, stating only that future iterations will continue to advance toward higher bandwidth, larger capacity, better energy efficiency, and stronger customization capabilities.

The Complexity of HBM Manufacturing

While the roadmaps of different vendors appear to be progressing step by step, it is important to recognize that HBM manufacturing is extremely complex. According to SK hynix, producing HBM requires roughly seven major steps across front-end and back-end processes:

(1) Silicon etching → (2) TSV copper filling → (3) BEOL metallization → (4) front-side bump formation and solder reflow → (5) temporary carrier bonding and back grinding → (6) bump formation on the wafer backside → (7) carrier debonding, chip stacking, and packaging.

(Steps 1–4 are front-end processes, while steps 5–7 belong to the back-end.)

TrendForce analysts note that HBM advances rely primarily on front-end process improvements that raise bandwidth and per-die density. Increasing the I/O count, for example, requires more TSVs (through-silicon vias) and more bumps or pads, since TSVs are what connect the vertically stacked dies. Raising per-die density likewise depends on front-end process innovation. However, as die sizes continue to grow, the cost per gigabyte rises.
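Why cost per gigabyte climbs with die size can be illustrated with two textbook approximations: the gross dies-per-wafer estimate for a round wafer, and a Poisson yield model Y = exp(-A·D0). This is a generic sketch, not TrendForce's model; the wafer cost and defect density are hypothetical, and it assumes bit density per mm² stays fixed, so cost per good mm² tracks cost per GB.

```python
import math

# Illustrative cost model: larger dies -> fewer dies per wafer and lower yield.
# HYPOTHETICAL inputs: 300 mm wafer, wafer cost, and defect density D0.

def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Common gross-die approximation: wafer area / die area minus an edge-loss term."""
    radius = wafer_diameter_mm / 2
    gross = (math.pi * radius**2 / die_area_mm2
             - math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2))
    return int(gross)

def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Poisson yield model Y = exp(-A * D0), with A converted from mm^2 to cm^2."""
    return math.exp(-(die_area_mm2 / 100.0) * defects_per_cm2)

def cost_per_good_mm2(die_area_mm2: float, wafer_cost: float, defects_per_cm2: float) -> float:
    """Wafer cost spread over the silicon area of good dies only."""
    good_dies = dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, defects_per_cm2)
    return wafer_cost / (good_dies * die_area_mm2)

if __name__ == "__main__":
    WAFER_COST = 10_000.0   # hypothetical wafer cost, arbitrary currency units
    D0 = 0.1                # hypothetical defects per cm^2
    for area in (100.0, 121.0, 150.0):
        print(f"{area:5.0f} mm^2: {dies_per_wafer(area)} dies, "
              f"{poisson_yield(area, D0):.1%} yield, "
              f"{cost_per_good_mm2(area, WAFER_COST, D0):.4f} per good mm^2")
```

Even with these made-up inputs, the cost of each good square millimeter rises with die area, because edge loss and defect-driven yield loss both grow as dies get larger.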

At the same time, back-end process innovation is the other side of HBM development, and its primary objective is to increase the number of stacked layers. The three major suppliers take different stacking approaches: SK hynix is known for MR-MUF (mass reflow molded underfill), while Samsung and Micron mainly use TC-NCF (thermo-compression bonding with non-conductive film).

The technology path for 16-layer stacking remains uncertain—it may involve hybrid bonding or continued use of TC-NCF/TC bonding. With 16-layer stacks, die-to-die gaps become extremely small, at around 5 micrometers, imposing strict requirements on die core thickness (20–25 micrometers).
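The thickness constraint is easier to see as a height budget. A minimal sketch, assuming the stack height is simply the core dies plus the bond gaps between them (the base die, mold cap, and any package-level height limit are deliberately left out): with 16 dies and 5 µm gaps, every micrometer of die thickness adds 16 µm to the stack.

```python
# Core-die stack height for an N-high HBM stack: N die thicknesses plus (N - 1) bond gaps.
# SIMPLIFICATION: ignores the base (logic) die, mold cap, and package-level height limits.

def core_stack_height_um(layers: int, die_thickness_um: float, gap_um: float) -> float:
    """Height of `layers` stacked core dies separated by `gap_um` bond gaps, in micrometers."""
    return layers * die_thickness_um + (layers - 1) * gap_um

if __name__ == "__main__":
    for thickness in (20.0, 25.0):
        h = core_stack_height_um(16, thickness, 5.0)
        print(f"16-high, {thickness:.0f} um dies, 5 um gaps: {h:.0f} um")
```

In this simplified model, thinning the dies from 25 µm to 20 µm frees 80 µm of height (475 µm down to 395 µm).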

Analysts from Market and Technology Trends also anticipate that HBM4 (12-layer) and HBM4E (16-layer), expected around 2026, may begin adopting new bonding technologies. Starting with HBM5 (20-layer) around 2028, wafer-to-wafer (W2W) hybrid bonding is expected to become mainstream, while die-to-wafer (D2W) bonding will also play an increasingly important role in subsequent generations.

The Role of Architectural Innovation

Beyond process advancements, architectural innovation is equally critical. HBM is evolving from “standard HBM” (s-HBM) toward “customized HBM” (c-HBM). In customized HBM, some of the HBM PHY and logic functions traditionally located on the GPU/ASIC are integrated into the HBM base die. This shortens channel length, reduces the number of I/Os, and lowers power consumption, thereby significantly improving system performance and reducing total cost of ownership (TCO).

The Bright but Uneven Outlook of the HBM Market

According to data from Yole Group, the HBM market is expected to grow rapidly in the coming years, whether measured by revenue, bit shipments, or wafer production.

From a revenue perspective, the global HBM market is projected to expand from USD 17 billion in 2024 to USD 98 billion in 2030, representing a compound annual growth rate (CAGR) of 33%. HBM’s revenue share within the DRAM market is expected to grow from 18% in 2024 to 50% by 2030.

In terms of bit shipments, HBM volumes are expected to rise from 1.5 B GB in 2023 to 2.8 B GB in 2024, and further to 7.6 B GB by 2030. HBM’s share of total DRAM bit shipments will grow rapidly starting in 2024, reaching about 10% by 2030.

For wafer production, HBM output will increase from 216K wafers per month (WPM) in 2023 to 350K WPM in 2024, and is projected to reach 590K WPM by 2030. By then, HBM will account for around 15% of total DRAM wafer output.
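The three Yole series grow at very different rates, which is the quantitative core of the pricing argument that follows. Compound annual growth rate is (end / start)^(1 / years) − 1; the sketch below applies it to the 2024 and 2030 values quoted above (six years). Because the ratio is unit-free, the result does not depend on how the bit-shipment unit is expressed.

```python
# Compound annual growth rate (CAGR) for the Yole projections quoted in the text (2024 -> 2030).

def cagr(start: float, end: float, years: int) -> float:
    """CAGR = (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1

SERIES_2024_2030 = {
    "revenue (USD B)": (17, 98),
    "bit shipments": (2.8, 7.6),
    "wafer output (K WPM)": (350, 590),
}

if __name__ == "__main__":
    for name, (start, end) in SERIES_2024_2030.items():
        print(f"{name:22s} {cagr(start, end, 6):.1%} per year")
```

Revenue compounds at roughly 34% a year (the source rounds to 33%), against roughly 18% for bits and 9% for wafers, so projected revenue per bit keeps rising.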

It is particularly noteworthy that although HBM will account for only about 10% of total DRAM bit shipments, its revenue share will approach 50%. This reflects HBM’s high added value and premium pricing. Its wafer share (~15%) falls between the two because each HBM bit consumes more wafer capacity than a standard DRAM bit. Currently, the per-bit cost of HBM is about 3× that of DDR, rising to 4× with HBM4. Because of strong demand, however, HBM pricing is about 6× that of DDR (8× for HBM4), giving it gross margins well above DDR's, at around 70%.
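The cost and price multiples can be turned into a margin relationship. If HBM costs c× and sells for p× the DDR per-bit figures, then HBM gross margin = 1 − (c / p)(1 − DDR margin). The DDR margin is not given in the text, so it is a free parameter here; a 40% DDR margin is the value that makes the stated multiples consistent with a roughly 70% HBM margin.

```python
# Gross margin implied by per-bit cost and price multiples relative to DDR.
# DDR's own gross margin is NOT given in the text; it is an input here.

def hbm_gross_margin(ddr_margin: float, cost_multiple: float, price_multiple: float) -> float:
    """margin = 1 - cost/price, with HBM cost and price expressed as multiples of DDR's."""
    ddr_cost_to_price = 1 - ddr_margin
    return 1 - (cost_multiple / price_multiple) * ddr_cost_to_price

def implied_ddr_margin(hbm_margin: float, cost_multiple: float, price_multiple: float) -> float:
    """Invert the relation: the DDR margin consistent with a given HBM margin."""
    return 1 - (1 - hbm_margin) * price_multiple / cost_multiple

if __name__ == "__main__":
    print(f"{implied_ddr_margin(0.70, 3, 6):.0%}")   # DDR margin implied by ~70% HBM margin
    for label, c, p in [("current HBM", 3, 6), ("HBM4", 4, 8)]:
        print(label, f"{hbm_gross_margin(0.40, c, p):.0%}")
```

Because both generations keep the same 1:2 cost-to-price ratio, the quoted figures imply the same margin for HBM4 as for today's HBM.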

That said, analysts at MKW Ventures Consulting LLC warn that when enthusiasm for a new technology runs high, expectations are often overstated, so the risk of overhype must be considered. Corrections and cyclicality are also common in fast-growing markets: added capacity from the major suppliers (SK hynix, Samsung, Micron) may lead to oversupply and trigger a correction. Eventually the market will enter a “digestion” phase marked by slower growth, a lack of backup orders, no stockpiling, and excess inventory, in which a 10% decline can quickly become a 30% drop. In this view, the HBM market's growth rate will likely fall from around 100% in 2024/2025 to just 20% in 2026.

Some key indicators suggesting that the semiconductor industry (especially memory) may be entering a correction phase include:

·Any disruption in end-customer revenue growth: For instance, if a company like Nokia faces revenue growth challenges, this could serve as an early warning sign.

·Rising inventories: Growth in inventories at either end customers or memory companies.

·Bit growth with slowing profit growth: Memory companies may ship more bits, but profit growth slows due to intensified competition and pricing pressure.

·Sudden supply increases: New capacity coming online, such as Samsung qualifying new production or Micron’s new fabs ramping up.

·Use of the word “digestion”: When hyperscalers or NVIDIA begin mentioning “digestion,” it typically signals a slowdown in demand.

·Statements from CFOs: Phrases such as “this is just a short-term adjustment, and the fundamentals remain strong” often act as contrarian indicators.

·Oversupply by late 2026: If oversupply emerges, aggressive pricing will follow.

·HBM’s unique supply chain: Capacity cannot be shifted between DDR and HBM production quickly or easily.

·Declining HBM gross margins: Margins could fall to 40% or lower.

Therefore, while the long-term outlook for HBM is undoubtedly bright, its development path will not be smooth, as cyclical adjustments are inevitable.

Although HBM is currently dominated by global memory giants, one should not overlook the fact that China is stepping up efforts to localize HBM production. Strong demand for AI accelerators, significant government support, and a mature industrial ecosystem may help Chinese companies secure a meaningful foothold in the HBM market in the coming years.

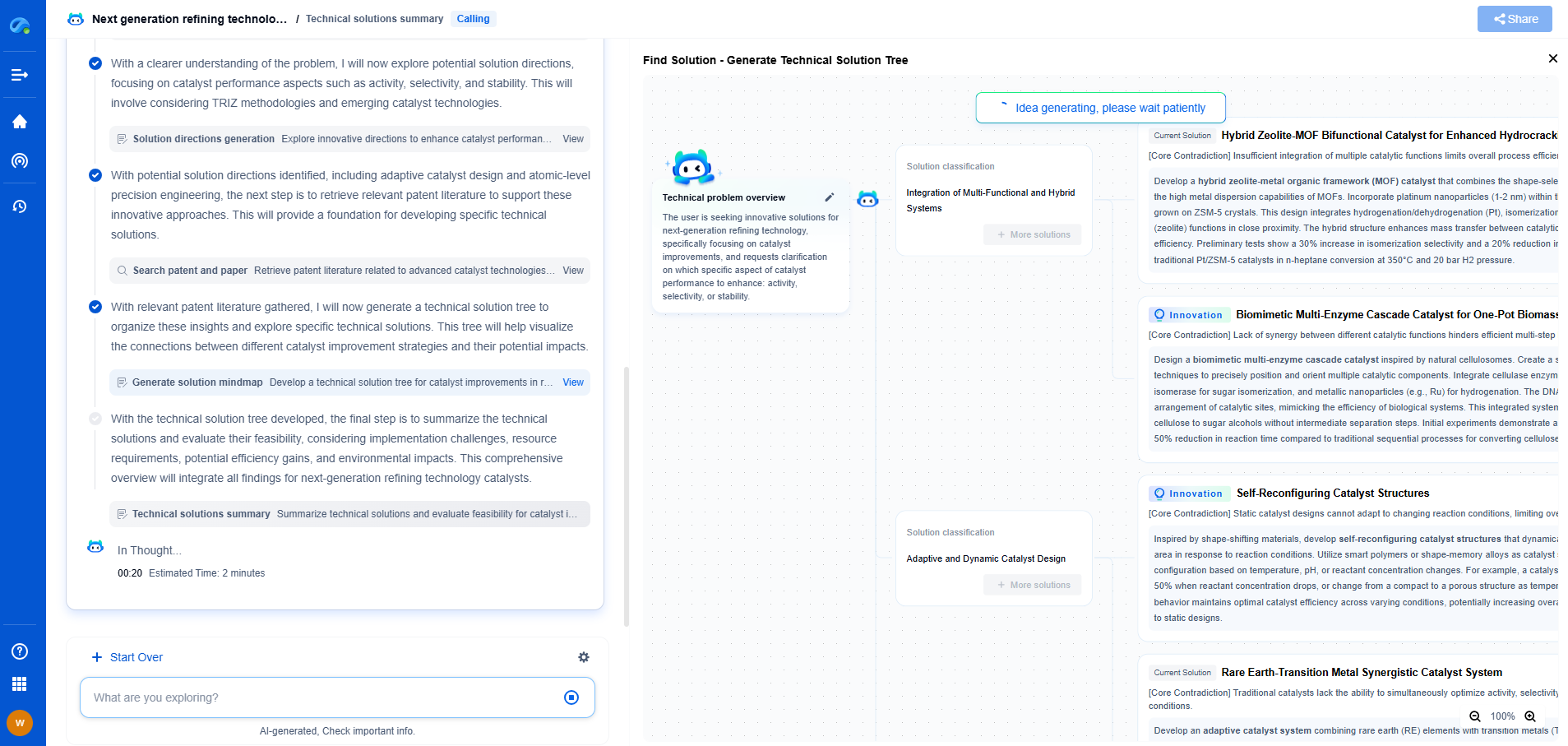

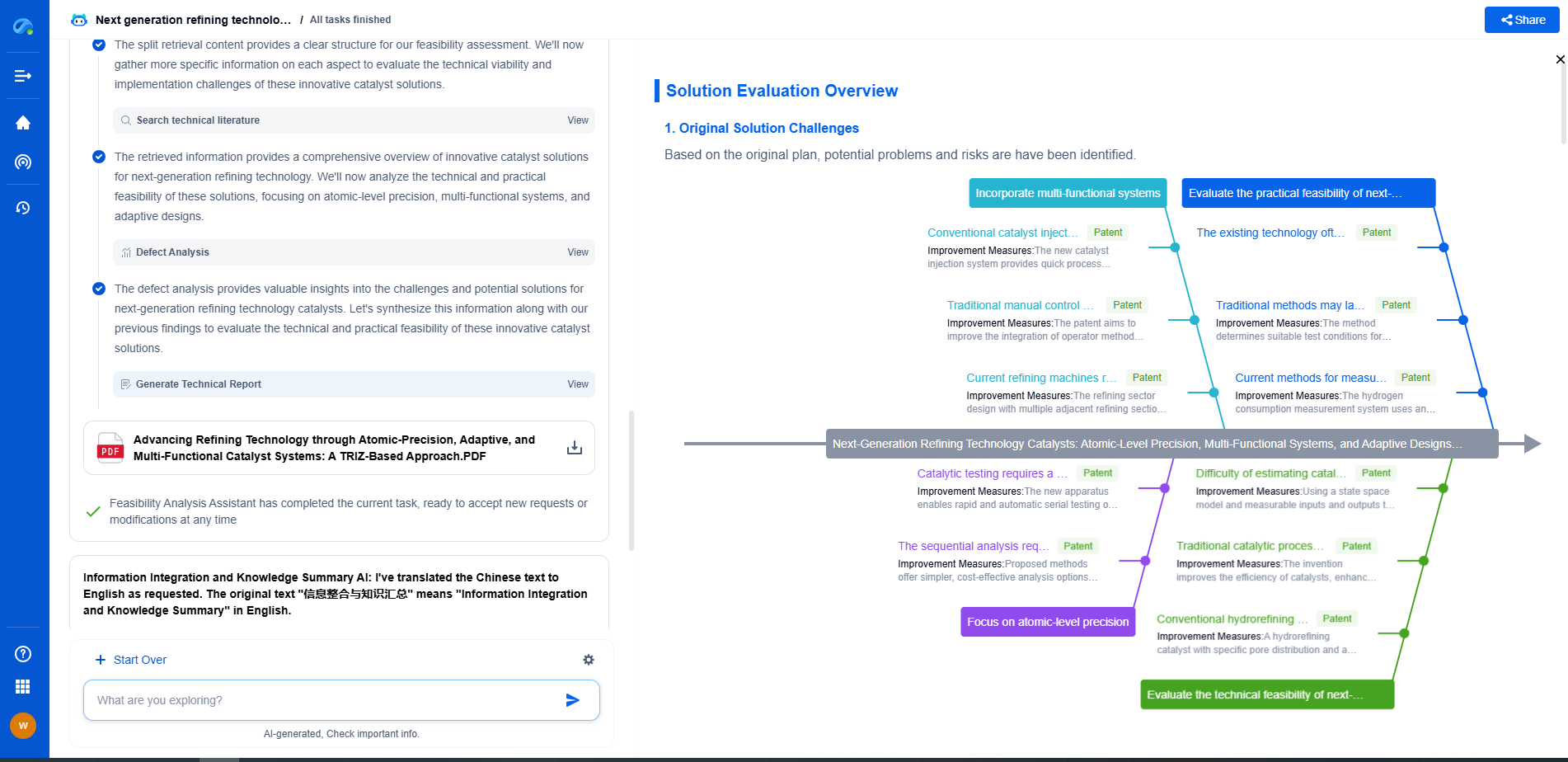

Infuse Insights into Chip R&D with PatSnap Eureka

Whether you're exploring novel transistor architectures, monitoring global IP filings in advanced packaging, or optimizing your semiconductor innovation roadmap—Patsnap Eureka empowers you with AI-driven insights tailored to the pace and complexity of modern chip development.

Patsnap Eureka, our intelligent AI assistant built for R&D professionals in high-tech sectors, empowers you with real-time expert-level analysis, technology roadmap exploration, and strategic mapping of core patents—all within a seamless, user-friendly interface.

👉 Join the new era of semiconductor R&D. Try Patsnap Eureka today and experience the future of innovation intelligence.