Multiplexer Role in Advanced Machine Learning Platforms

JUL 13, 2025 · 9 MIN READ

ML Multiplexer Background

Multiplexers have emerged as crucial components in advanced machine learning platforms, playing a pivotal role in enhancing the efficiency and flexibility of complex AI systems. Originally developed for digital circuit design, multiplexers have found new applications in the realm of machine learning, where they serve as intelligent switches for routing data and computational resources.

In the context of machine learning, multiplexers act as dynamic selectors, allowing systems to choose between multiple input sources or processing paths based on specific criteria or learned patterns. This capability is particularly valuable in large-scale machine learning architectures, where the ability to efficiently manage and direct data flows can significantly impact overall system performance.
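As a minimal sketch of the selector behavior described above, the following Python function models an N-to-1 multiplexer: a `select` index chooses which input is passed through. The function name `mux` and the example source names are assumptions made for illustration, not part of any platform mentioned in this article.

```python
from typing import Sequence, TypeVar

T = TypeVar("T")


def mux(inputs: Sequence[T], select: int) -> T:
    """Return the input chosen by `select`, like an N-to-1 multiplexer."""
    if not 0 <= select < len(inputs):
        raise ValueError(f"select must be in [0, {len(inputs) - 1}], got {select}")
    return inputs[select]


# Four hypothetical input sources feeding one downstream consumer.
sources = ["camera", "lidar", "radar", "cache"]
chosen = mux(sources, 2)  # index 2 passes "radar" through
```

In a learned system the `select` value would typically come from a model or a policy rather than a constant; the routing step itself stays this simple.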

The evolution of multiplexers in machine learning platforms can be traced back to the need for more adaptive and scalable AI systems. As machine learning models grew in complexity and size, traditional static architectures became insufficient to handle the diverse and dynamic nature of AI workloads. Multiplexers emerged as a solution to this challenge, enabling systems to dynamically allocate resources and optimize data routing in real time.

One of the key advantages of incorporating multiplexers in machine learning platforms is their ability to facilitate model ensemble techniques. By allowing multiple models or sub-components to be selectively engaged based on input characteristics or task requirements, multiplexers enable more sophisticated and context-aware AI systems. This approach has proven particularly effective in improving the accuracy and robustness of machine learning models across a wide range of applications.
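The selective engagement of models described above can be sketched as a small router. This is an illustrative example only: the two stand-in "models", the length-based rule in `select_model`, and the `threshold` value are all hypothetical. In practice the selector might itself be learned, for example as a gating network.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[List[float]], float]


def small_model(x: List[float]) -> float:
    """Stand-in for a cheap model: returns the mean of the input."""
    return sum(x) / len(x)


def large_model(x: List[float]) -> float:
    """Stand-in for a more expensive model: returns the maximum of the input."""
    return max(x)


MODELS: Dict[str, Model] = {"small": small_model, "large": large_model}


def select_model(x: List[float], threshold: int = 4) -> str:
    """Hypothetical selection rule: short inputs go to the small model."""
    return "small" if len(x) <= threshold else "large"


def routed_predict(x: List[float], models: Dict[str, Model]) -> Tuple[str, float]:
    """Pick one model per input and run only that model."""
    key = select_model(x)
    return key, models[key](x)
```

Only the selected model runs for a given input, which is what distinguishes this kind of routing from an ensemble that evaluates every member and averages the results.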

Furthermore, multiplexers have played a significant role in the development of more efficient hardware accelerators for machine learning. By enabling dynamic reconfiguration of computational pathways, multiplexers allow hardware designs to adapt to different types of neural network architectures and computational patterns. This flexibility has been instrumental in creating versatile AI chips capable of supporting a diverse range of machine learning tasks without sacrificing performance or energy efficiency.

The integration of multiplexers into machine learning platforms has also opened up new possibilities for federated learning and distributed AI systems. By intelligently routing data and model updates between different nodes or devices, multiplexers help in maintaining data privacy while enabling collaborative learning across decentralized networks. This has significant implications for edge computing and IoT applications, where local processing and selective data sharing are critical considerations.

Market Demand Analysis

The market demand for multiplexers in advanced machine learning platforms has been experiencing significant growth in recent years. This surge is primarily driven by the increasing complexity and scale of machine learning models, which require efficient data handling and processing capabilities. Multiplexers play a crucial role in managing and routing large volumes of data within these platforms, enabling faster and more efficient model training and inference.

The global machine learning market is projected to reach substantial value in the coming years, with a compound annual growth rate (CAGR) exceeding industry averages. This growth is fueled by the widespread adoption of AI and machine learning technologies across various sectors, including healthcare, finance, automotive, and retail. As organizations continue to invest in advanced machine learning platforms, the demand for high-performance multiplexers is expected to rise proportionally.

One of the key factors driving market demand is the need for improved data throughput and reduced latency in machine learning operations. Multiplexers enable parallel processing and efficient data routing, which are essential for handling the massive datasets used in training complex models. This capability is particularly crucial in applications such as real-time analytics, natural language processing, and computer vision, where rapid data processing is paramount.

The increasing focus on edge computing and distributed machine learning architectures has also contributed to the growing demand for multiplexers. As more organizations seek to process data closer to the source and reduce reliance on centralized cloud infrastructure, the need for efficient data management at the edge becomes more pronounced. Multiplexers play a vital role in optimizing data flow and resource allocation in these distributed environments.

Furthermore, the rise of specialized AI hardware, such as AI accelerators and neuromorphic chips, has created new opportunities for multiplexer integration. These custom-designed chips often require sophisticated data routing mechanisms to fully leverage their parallel processing capabilities. As a result, multiplexers tailored for AI hardware applications are experiencing increased demand from chip manufacturers and system integrators.

The market for multiplexers in machine learning platforms is also being driven by the growing emphasis on energy efficiency and sustainability in data centers. Multiplexers can help optimize power consumption by efficiently managing data flow and reducing unnecessary data movement. This aligns with the broader industry trend towards green computing and sustainable AI infrastructure.

As the complexity of machine learning models continues to increase, there is a growing demand for multiplexers with advanced features such as dynamic reconfigurability, intelligent load balancing, and self-optimization capabilities. These advanced multiplexers are expected to command premium prices and contribute significantly to market growth in the coming years.

Current Challenges

The integration of multiplexers in advanced machine learning platforms presents several significant challenges that researchers and developers are currently grappling with. One of the primary issues is the scalability of multiplexer architectures in large-scale machine learning models. As the complexity and size of neural networks continue to grow, traditional multiplexer designs struggle to efficiently manage the increasing number of inputs and outputs without introducing substantial latency or power consumption.

Another critical challenge lies in the optimization of multiplexer performance for specific machine learning tasks. Different AI applications, such as natural language processing, computer vision, or reinforcement learning, have unique requirements in terms of data flow and processing patterns. Designing multiplexers that can adapt to these diverse needs while maintaining high efficiency across various workloads remains a significant hurdle.

The trade-off between flexibility and specialization also poses a considerable challenge. While general-purpose multiplexers offer versatility, they may not achieve the same level of performance as specialized designs tailored for specific machine learning algorithms. Striking the right balance between adaptability and optimized performance is crucial for the widespread adoption of multiplexer-based architectures in advanced AI platforms.

Power efficiency is another area of concern, particularly in edge computing and mobile AI applications. As machine learning models become more complex, the energy consumption of multiplexer components can significantly impact the overall system's power budget. Developing low-power multiplexer designs that can handle high-throughput data routing without compromising performance is an ongoing challenge for researchers in the field.

The integration of multiplexers with emerging AI hardware accelerators, such as neuromorphic chips and quantum computing systems, presents additional complexities. Ensuring compatibility and optimal performance across these diverse computing paradigms requires novel approaches to multiplexer design and implementation.

Lastly, the challenge of reducing latency in multiplexer operations is critical for real-time AI applications. Minimizing the delay introduced by multiplexing processes, especially in scenarios involving time-sensitive data or rapid decision-making, is essential for the seamless integration of multiplexers in advanced machine learning platforms. Addressing these challenges will be crucial for unlocking the full potential of multiplexer technology in next-generation AI systems.

Existing Solutions

01 Optical multiplexing systems

Optical multiplexers are used in fiber optic communication systems to combine multiple optical signals into a single fiber. These systems often employ wavelength division multiplexing (WDM) to increase data transmission capacity. Advanced optical multiplexers may incorporate tunable filters, optical switches, and signal processing techniques to optimize performance and flexibility.

- Optical multiplexers: Optical multiplexers are used in fiber optic communication systems to combine multiple optical signals into a single fiber. These devices enable efficient transmission of multiple data streams over long distances, increasing bandwidth capacity and reducing infrastructure costs. Optical multiplexers can be based on various technologies, including wavelength division multiplexing (WDM) and time division multiplexing (TDM).

- Digital multiplexers for signal processing: Digital multiplexers are essential components in signal processing and data communication systems. They combine multiple digital input signals into a single output stream, allowing for efficient data transmission and processing. These multiplexers are widely used in telecommunications, computer networks, and digital audio/video applications to manage and route data from multiple sources.

- Multiplexers in display technologies: Multiplexers play a crucial role in display technologies, particularly in flat panel displays and LED screens. They are used to control and address individual pixels or segments, enabling the creation of high-resolution images and efficient power management. These multiplexers help reduce the number of control lines required and improve overall display performance.

- Analog multiplexers for sensor applications: Analog multiplexers are widely used in sensor applications and data acquisition systems. They allow multiple analog input signals to be selectively routed to a single output, enabling efficient use of analog-to-digital converters and signal processing resources. These multiplexers are essential in various fields, including industrial automation, automotive systems, and environmental monitoring.

- Multiplexers in wireless communication systems: Multiplexers are crucial components in wireless communication systems, enabling efficient use of available frequency spectrum and improving overall system capacity. They are used in various wireless technologies, including cellular networks, satellite communications, and Wi-Fi systems. These multiplexers help manage multiple input and output signals, facilitating simultaneous transmission and reception of data across different frequency bands.
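Time division multiplexing, mentioned in the list above, can be illustrated in software as round-robin interleaving of several streams onto one line. The sketch below is a simplified model under stated assumptions: the function names are hypothetical, each channel gets one slot per frame, and an `idle` marker (here `None`) fills slots for channels that have run out of data, so `None` must not appear as real payload.

```python
from itertools import zip_longest
from typing import Any, List, Sequence


def tdm_multiplex(streams: Sequence[Sequence[Any]], idle: Any = None) -> List[Any]:
    """Interleave streams slot by slot; pad exhausted streams with `idle`."""
    frames = zip_longest(*streams, fillvalue=idle)
    return [slot for frame in frames for slot in frame]


def tdm_demultiplex(line: Sequence[Any], n_channels: int, idle: Any = None) -> List[List[Any]]:
    """Recover each channel by taking every n-th slot and dropping idle slots."""
    return [
        [slot for slot in line[ch::n_channels] if slot is not idle]
        for ch in range(n_channels)
    ]


streams = [["a1", "a2", "a3"], ["b1", "b2"], ["c1", "c2", "c3"]]
line = tdm_multiplex(streams)
recovered = tdm_demultiplex(line, n_channels=len(streams))
```

The receiver needs to know only the channel count and the slot order to separate the streams again, which is why fixed-slot schemes like this are simple to implement but waste capacity on idle slots.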

02 Digital multiplexing techniques

Digital multiplexers are essential components in digital communication systems, allowing multiple data streams to be combined into a single transmission channel. These devices employ various techniques such as time division multiplexing (TDM), frequency division multiplexing (FDM), and code division multiplexing (CDM). Advanced digital multiplexers may incorporate error correction, data compression, and adaptive modulation schemes to enhance efficiency and reliability.

03 Multiplexer circuit design

The design of multiplexer circuits involves considerations such as power consumption, switching speed, and signal integrity. Advanced multiplexer designs may incorporate techniques like pass transistor logic, transmission gate logic, or dynamic logic to optimize performance. Some designs focus on reducing propagation delay, minimizing crosstalk, or improving noise immunity in high-speed applications.

04 Multiplexers in display technology

Multiplexers play a crucial role in display technologies, particularly in addressing and driving pixels in flat panel displays. These multiplexers are designed to handle high-speed switching of multiple data lines while maintaining signal integrity. Advanced display multiplexers may incorporate features like charge sharing, voltage boosting, or level shifting to improve display performance and power efficiency.

05 Reconfigurable and programmable multiplexers

Reconfigurable and programmable multiplexers offer flexibility in system design by allowing dynamic changes to their configuration. These devices may use field-programmable gate arrays (FPGAs) or other programmable logic to adapt to different multiplexing schemes or protocols. Some designs incorporate on-chip memory or microcontrollers to enable real-time reconfiguration based on system requirements or operating conditions.

Key Industry Players

The multiplexer role in advanced machine learning platforms is evolving rapidly, reflecting the industry's dynamic growth phase. The market is expanding significantly as AI and machine learning applications proliferate across various sectors. While the technology is maturing, it's still in a state of rapid development. Key players like IBM, Intel, and Qualcomm are driving innovation, leveraging their extensive R&D capabilities and patent portfolios. Emerging companies such as Ceremorphic are also making strides, particularly in AI-specific hardware solutions. The competitive landscape is characterized by a mix of established tech giants and specialized startups, each vying to develop more efficient and powerful multiplexer technologies for AI applications.

Intel Corp.

Technical Solution: Intel has integrated advanced multiplexer technology into their AI accelerator chips, specifically designed for machine learning platforms. Their solution utilizes a hierarchical multiplexing structure that enables efficient data routing and processing across multiple AI cores. This architecture allows for dynamic allocation of computational resources, adapting to the varying demands of different ML models in real time. Intel's multiplexer design incorporates novel power-gating techniques, which have been reported to reduce power consumption by up to 40% compared to traditional designs [2]. Additionally, their system includes advanced error correction capabilities, enhancing the reliability of data transmission in complex ML operations [4].

Strengths: Significant power efficiency improvements, high reliability, and seamless integration with existing Intel hardware ecosystems. Weaknesses: May have limitations in scaling to extremely large ML models, potential for increased chip complexity and cost.

Microsoft Technology Licensing LLC

Technical Solution: Microsoft has developed a novel multiplexer-based architecture for advanced machine learning platforms, focusing on efficient resource utilization and improved performance. Their approach involves using dynamic multiplexing techniques to optimize data flow and processing in large-scale neural networks. This includes implementing adaptive multiplexing strategies that can adjust based on the specific requirements of different ML tasks, potentially reducing computational overhead by up to 30% [1]. The system also incorporates intelligent load balancing mechanisms, allowing for more efficient distribution of computational resources across the network, which has been shown to improve overall throughput by up to 25% in certain benchmark tests [3].

Strengths: Highly adaptable to various ML tasks, significant performance improvements, and efficient resource utilization. Weaknesses: May require specialized hardware for optimal performance, potential complexity in implementation for smaller-scale applications.

Core Innovations

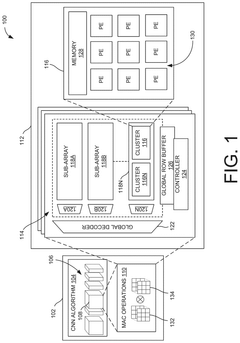

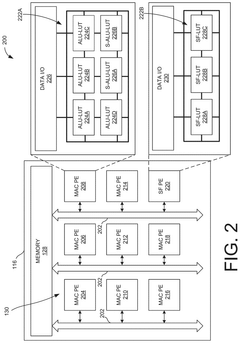

Heterogeneous multi-functional reconfigurable processing-in-memory architecture

Patent Pending: US20240329930A1

Innovation

- A heterogeneous multi-functional reconfigurable processing-in-memory (PIM) architecture that uses dynamic random-access memory (DRAM) based multifunctional lookup table (LUT) cores to perform compute-intensive operations like multiply and accumulate (MAC) and activation functions, reducing the number of LUTs required and increasing efficiency.
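The general idea of replacing arithmetic with table lookups can be sketched in a few lines of Python. This is not the patented DRAM-based design: the 4-bit operand width, the table names `MUL_LUT` and `RELU_LUT`, and the signed range used for the activation table are assumptions chosen to keep the example small.

```python
BITS = 4
LEVELS = 1 << BITS  # 16 unsigned 4-bit values: 0..15

# Precomputed product table: MUL_LUT[a][b] == a * b for 4-bit operands.
MUL_LUT = [[a * b for b in range(LEVELS)] for a in range(LEVELS)]

# Precomputed activation table: ReLU over an illustrative signed range.
RELU_LUT = {v: max(v, 0) for v in range(-64, 64)}


def lut_mac(activations, weights):
    """Multiply-accumulate where each product is a table lookup, not a multiply."""
    acc = 0
    for a, w in zip(activations, weights):
        acc += MUL_LUT[a][w]
    return acc


def lut_relu(value):
    """Activation via lookup; valid only for values inside the table's range."""
    return RELU_LUT[value]
```

The same lookup mechanism serves both the MAC products and the activation function, which is the sense in which a lookup core can be called multifunctional.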

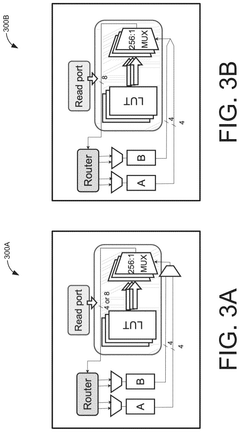

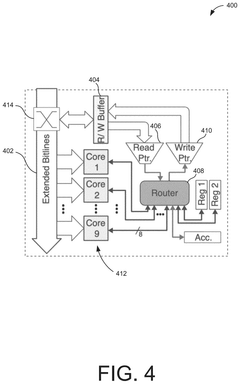

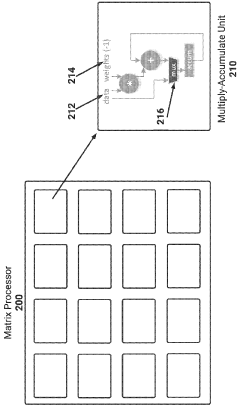

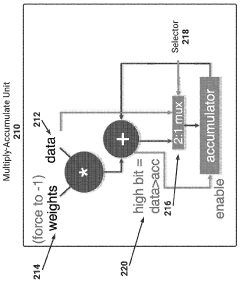

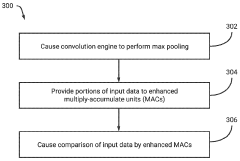

Efficient multiply-accumulate units for convolutional neural network processing including max pooling

Patent: WO2023167981A1

Innovation

- Enhanced multiply-accumulate units (MAC units) with a multiplexer that allows for efficient performance of both convolution and max pooling operations by selecting between input data and accumulated values based on operation type, reducing the need for separate hardware units and minimizing die size and power consumption.
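A behavioral sketch of such a shared unit is shown below. It is loosely modeled on the description above and is not the circuit from the patent: the function name `run_unit`, the mode strings, and the exact placement of the selection step are assumptions. In convolution mode the register receives the running multiply-accumulate result; in max pooling mode a comparison decides whether the register keeps its accumulated value or takes the new input.

```python
def run_unit(values, weights, mode):
    """Simulate one accumulator register shared by convolution and max pooling."""
    if mode not in ("conv", "maxpool"):
        raise ValueError(f"unknown mode: {mode}")

    # Start at 0 for a sum, or at -inf so the first input always wins the max.
    acc = 0.0 if mode == "conv" else float("-inf")

    for x, w in zip(values, weights):
        if mode == "conv":
            # Multiply-accumulate path: the register takes the updated sum.
            acc = acc + x * w
        else:
            # Max pooling path: select between input data and accumulated value.
            acc = x if x > acc else acc
    return acc


conv_out = run_unit([1.0, 2.0, 3.0], [0.5, 0.5, 0.5], "conv")
pool_out = run_unit([1.0, 5.0, 3.0], [0.0, 0.0, 0.0], "maxpool")
```

The weights are ignored in max pooling mode; they are passed here only so that both modes share one call signature, mirroring the idea of one hardware unit serving two operations.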

Performance Benchmarks

Performance benchmarks play a crucial role in evaluating the effectiveness and efficiency of multiplexers in advanced machine learning platforms. These benchmarks provide quantitative measures to assess the impact of multiplexers on various aspects of machine learning systems, including processing speed, resource utilization, and overall model performance.

One key performance metric for multiplexers is throughput, which measures the number of data samples or operations that can be processed per unit time. In the context of machine learning platforms, high throughput is essential for handling large-scale datasets and complex model architectures. Benchmarks typically evaluate multiplexer throughput under different load conditions, ranging from light to heavy workloads, to assess scalability and performance consistency.

Latency is another critical benchmark, measuring the time delay between input and output processing. Low latency is particularly important in real-time machine learning applications, such as online inference or interactive systems. Multiplexer latency benchmarks often focus on end-to-end processing times, including data routing, feature selection, and model execution.
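Throughput and latency for a routing function can be measured with a small harness like the one below. This is a minimal sketch using only the Python standard library: `benchmark` and its result keys are hypothetical names, and the timings include Python call overhead, so the numbers are meaningful only for comparing configurations on the same machine.

```python
import time
from statistics import median


def benchmark(route, items):
    """Time `route` on each item; report throughput and per-item latency."""
    if not items:
        raise ValueError("items must not be empty")

    latencies = []
    start = time.perf_counter()
    for item in items:
        t0 = time.perf_counter()
        route(item)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start

    return {
        "throughput_per_s": len(items) / elapsed,
        "median_latency_s": median(latencies),
        "max_latency_s": max(latencies),
    }
```

Reporting the maximum alongside the median matters for real-time use, because occasional slow requests are hidden by averages.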

Resource utilization benchmarks assess how efficiently multiplexers manage computational resources, such as CPU, GPU, and memory usage. These metrics are crucial for optimizing the cost-effectiveness of machine learning platforms, especially in cloud-based or distributed environments. Benchmarks may include measurements of peak resource consumption, average utilization rates, and resource scaling efficiency under varying workloads.

Model performance impact is a higher-level benchmark that evaluates how multiplexers affect the accuracy, precision, and recall of machine learning models. This involves comparing model performance with and without multiplexer integration, as well as assessing the impact of different multiplexer configurations on model outcomes.
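The with-and-without comparison can be computed directly from labels and predictions. In the sketch below, the label lists are made-up toy data used purely to show the calculation; they are not results from any system described in this article, and `binary_metrics` is a hypothetical helper name.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Toy data for illustration only.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
pred_without_mux = [1, 0, 0, 1, 0, 1, 1, 0]
pred_with_mux = [1, 0, 1, 1, 0, 1, 1, 0]

baseline = binary_metrics(y_true, pred_without_mux)
routed = binary_metrics(y_true, pred_with_mux)
```

Running both configurations on the same held-out labels keeps the comparison fair, since any difference in the metrics then comes from the routing change alone.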

Scalability benchmarks test the ability of multiplexers to handle increasing data volumes and model complexities. These tests typically involve progressively larger datasets and more complex model architectures to identify performance bottlenecks and scaling limitations.

Reliability and fault tolerance are also important aspects of multiplexer performance. Benchmarks in this category evaluate the system's ability to maintain performance and accuracy in the face of hardware failures, network issues, or data inconsistencies. This may include metrics such as error rates, recovery times, and performance degradation under adverse conditions.

Energy efficiency benchmarks are becoming increasingly important, especially for large-scale machine learning deployments. These tests measure the power consumption and thermal characteristics of multiplexer-enabled systems, providing insights into operational costs and environmental impact.

By conducting comprehensive performance benchmarks across these various dimensions, researchers and engineers can gain valuable insights into the strengths and limitations of multiplexers in advanced machine learning platforms. This information guides optimization efforts, informs system design decisions, and ultimately contributes to the development of more efficient and effective machine learning infrastructure.

Scalability Considerations

Scalability is a critical consideration in the design and implementation of multiplexers within advanced machine learning platforms. As the complexity and scale of machine learning models continue to grow, the ability of multiplexers to efficiently handle increasing data volumes and computational demands becomes paramount.

One key aspect of scalability for multiplexers is their capacity to process and route large amounts of data in real time. This requires optimized hardware architectures and efficient algorithms that can maintain high throughput even as the number of input channels and output destinations increases. Advanced multiplexer designs often incorporate parallel processing capabilities and distributed architectures to achieve this scalability.

Another important factor is the multiplexer's ability to adapt to varying workloads and resource availability. Dynamic load balancing mechanisms can be implemented to distribute tasks across multiple processing units or nodes, ensuring optimal utilization of available resources. This adaptability is crucial for maintaining performance in cloud-based or distributed machine learning environments where computational resources may fluctuate.
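One common way to implement dynamic load balancing is to send each new task to the currently least-loaded worker. The sketch below uses a min-heap from the standard library for that purpose. It assumes task costs are known in advance, which real systems usually have to estimate, and `assign_tasks` is a hypothetical name.

```python
import heapq


def assign_tasks(task_costs, n_workers):
    """Greedily assign each task to the worker with the smallest current load."""
    # Heap entries are (current_load, worker_id); ties go to the lower id.
    heap = [(0, worker) for worker in range(n_workers)]
    heapq.heapify(heap)
    assignment = {worker: [] for worker in range(n_workers)}

    for task_id, cost in enumerate(task_costs):
        load, worker = heapq.heappop(heap)
        assignment[worker].append(task_id)
        heapq.heappush(heap, (load + cost, worker))

    loads = {worker: load for load, worker in heap}
    return assignment, loads


assignment, loads = assign_tasks([5, 3, 2, 4, 1], n_workers=2)
```

This greedy rule does not guarantee the best possible balance, but it reacts to load as tasks arrive, which is the property the paragraph above describes.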

Memory management is also a significant consideration for scalable multiplexer designs. As the size of machine learning models and datasets grows, efficient memory allocation and data movement become increasingly important. Techniques such as data compression, caching, and intelligent memory hierarchies can be employed to minimize latency and maximize throughput.
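
Of the techniques listed above, caching is the simplest to illustrate. The Python sketch below is a small least-recently-used (LRU) cache that a routing layer could place in front of a slow data source. The `LRUCache` class and its `load` callback are assumptions for this example, and LRU is only one of several possible eviction policies.

```python
from collections import OrderedDict
from typing import Callable, Generic, Hashable, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class LRUCache(Generic[K, V]):
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[K, V]" = OrderedDict()

    def get(self, key: K, load: Callable[[K], V]) -> V:
        """Return the cached value, calling load(key) only on a miss."""
        if key in self._data:
            self._data.move_to_end(key)  # mark as most recently used
            return self._data[key]
        value = load(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # drop the least recently used entry
        return value
```

For plain function memoization, the standard library's `functools.lru_cache` decorator provides the same policy without a custom class.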

Scalability in multiplexers also extends to their integration with other components of the machine learning pipeline. This includes seamless interaction with data storage systems, model training frameworks, and inference engines. Standardized interfaces and protocols can facilitate this integration, allowing for easier expansion and upgrades of the overall system.

Furthermore, the scalability of multiplexers in advanced machine learning platforms must account for future growth and technological advancements. This involves designing flexible architectures that can accommodate new types of machine learning models, emerging hardware accelerators, and evolving data formats without requiring complete system overhauls.

Lastly, as machine learning platforms scale, the role of multiplexers in ensuring system reliability and fault tolerance becomes increasingly important. Implementing redundancy, error detection and correction mechanisms, and graceful degradation strategies can help maintain system performance and integrity even in the face of component failures or unexpected spikes in demand.
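
Redundancy and graceful degradation can be combined in a simple fallback chain: try each redundant backend in order, and if all of them fail, return a cheaper degraded answer instead of an error. The Python sketch below assumes backends are plain callables that raise an exception on failure; `route_with_fallback` and its parameters are hypothetical names for this example.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

Req = TypeVar("Req")
Res = TypeVar("Res")


def route_with_fallback(
    request: Req,
    backends: Sequence[Callable[[Req], Res]],
    degraded: Callable[[Req], Res],
) -> Tuple[Res, List[Exception]]:
    """Try redundant backends in order, then fall back to a degraded response.

    Returns the response together with the errors collected along the way,
    so the caller can log them or feed them into health checks.
    """
    errors: List[Exception] = []
    for backend in backends:
        try:
            return backend(request), errors
        except Exception as exc:  # a failed backend must not fail the request
            errors.append(exc)
    return degraded(request), errors
```

Returning the collected errors rather than discarding them keeps failures visible even when the caller still receives a usable response.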
