Quantum Computing's Impact on Astrophysical Data Interpretation

JUL 17, 2025 | 10 MIN READ

Quantum Computing in Astrophysics: Background and Objectives

Quantum computing represents a paradigm shift in computational capabilities, with potentially profound implications for astrophysical data interpretation. The technology harnesses principles of quantum mechanics, such as superposition, entanglement, and interference, to perform certain classes of calculations far faster than any known classical method. Astrophysics, a field characterized by vast datasets and intricate simulations, stands to benefit significantly if that potential is realized.

The development of quantum computing traces back to the early 1980s, when physicist Richard Feynman proposed using quantum systems to simulate quantum phenomena. Since then, the field has progressed rapidly. Major milestones include quantum algorithms that outperform the best known classical methods on specific tasks, such as Shor's factoring algorithm (1994) and Grover's search algorithm (1996), and the first experimental demonstrations of quantum logic gates on physical qubits in the mid-to-late 1990s.

In the context of astrophysics, quantum computing's evolution aligns with the increasing complexity of astronomical data analysis. As observatories and space missions generate unprecedented volumes of data, traditional computing methods struggle to keep pace with the demands of processing and interpreting this information. The intersection of quantum computing and astrophysics presents an opportunity to address these challenges and unlock new insights into the universe.

The primary objective of integrating quantum computing into astrophysical data interpretation is to enhance our ability to analyze and model complex cosmic phenomena. This includes improving the accuracy and speed of simulations of galaxy formation and dark matter distribution, as well as the analysis of gravitational-wave data. A further aim is to optimize data processing pipelines so that the massive datasets produced by modern astronomical instruments can be handled more efficiently.

Another crucial goal is to develop quantum algorithms tailored specifically to astrophysical problems. Such algorithms could make headway on computationally demanding tasks, such as large N-body simulations of stellar dynamics or the modeling of quantum gravity effects in extreme cosmic environments. By leveraging the unique properties of quantum systems, researchers hope to gain deeper insights into fundamental questions about the nature of space, time, and the universe's evolution.
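
For context on why N-body problems are computationally demanding, the classical baseline is direct summation: every time step evaluates the gravitational pull between all N(N-1)/2 pairs of bodies. The plain-Python sketch below illustrates that cost in arbitrary units with G = 1 and an assumed softening length `eps`; it is a classical reference point only, not a quantum algorithm, and it makes no claim about quantum speedups. Production codes typically use tree or particle-mesh methods that scale closer to N log N.

```python
import math

def accelerations(positions, masses, G=1.0, eps=1e-3):
    """Direct-summation gravitational accelerations (O(N^2) pair loop).

    positions: list of (x, y, z) tuples; masses: list of floats.
    eps is an illustrative softening length that avoids division by zero
    when two bodies come very close.
    Returns (list of acceleration vectors, number of pairs evaluated).
    """
    n = len(positions)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    pairs = 0
    for i in range(n):
        for j in range(i + 1, n):
            dx = [positions[j][k] - positions[i][k] for k in range(3)]
            r2 = sum(d * d for d in dx) + eps * eps
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                # Each pair is evaluated once and applied to both bodies
                # (Newton's third law), halving the work.
                acc[i][k] += G * masses[j] * dx[k] * inv_r3
                acc[j][k] -= G * masses[i] * dx[k] * inv_r3
            pairs += 1
    return acc, pairs
```

For example, `accelerations([(0, 0, 0), (1, 0, 0), (0, 1, 0)], [1.0, 2.0, 3.0])` evaluates 3 pairs; doubling the number of bodies roughly quadruples the pair count, which is the scaling that motivates both faster classical approximations and the search for quantum alternatives.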

Furthermore, the integration of quantum computing in astrophysics seeks to bridge the gap between theoretical predictions and observational data. As our understanding of the cosmos becomes increasingly sophisticated, the need for more powerful computational tools to test and refine theoretical models grows. Quantum computing offers the potential to simulate complex astrophysical scenarios with unprecedented accuracy, allowing for more rigorous comparisons between theory and observation.

As we look towards the future, the synergy between quantum computing and astrophysics promises to revolutionize our approach to cosmic exploration. By pushing the boundaries of computational capabilities, we stand on the brink of new discoveries that could reshape our understanding of the universe and our place within it.

Market Demand for Advanced Astrophysical Data Analysis

The market demand for advanced astrophysical data analysis has grown rapidly in recent years, driven by the increasing complexity and volume of data generated by modern astronomical instruments and observatories. As our understanding of the universe expands, so does the need for more sophisticated tools to interpret the vast amounts of information collected by space-based and ground-based telescopes.

One of the primary drivers of this demand is the advent of large-scale sky surveys, such as the Sloan Digital Sky Survey (SDSS) and the upcoming Vera C. Rubin Observatory's Legacy Survey of Space and Time (LSST). Rubin alone is expected to produce roughly 20 terabytes of raw data per night, accumulating many petabytes over the survey and creating a significant challenge for traditional data analysis methods. The sheer scale of these datasets necessitates advanced computational techniques to extract meaningful insights and make new discoveries.

The field of astrophysics is also experiencing a surge in multi-messenger astronomy, which combines observations from different types of cosmic messengers, such as electromagnetic radiation, gravitational waves, and neutrinos. This approach requires sophisticated data fusion and analysis techniques to correlate information from diverse sources, further fueling the demand for advanced analytical tools.

Moreover, the increasing interest in exoplanet detection and characterization has created a niche market for specialized data analysis tools. The subtle signals produced by exoplanets require highly sensitive and precise analytical methods to distinguish them from background noise and stellar activity.

The commercial space industry has also contributed to the growing demand for advanced astrophysical data analysis. Private companies engaged in space exploration, satellite deployment, and space tourism require robust data interpretation capabilities to support their operations and research initiatives.

Academic institutions and research organizations continue to be significant consumers of advanced astrophysical data analysis tools. The competitive nature of scientific research and the pressure to publish groundbreaking discoveries drive the need for cutting-edge analytical capabilities.

Government space agencies, such as NASA and ESA, represent another major market segment. These organizations require state-of-the-art data analysis tools to support their missions, from planetary exploration to deep space observations.

The potential applications of quantum computing in astrophysical data interpretation have sparked interest from both the scientific community and the technology sector. This emerging field promises to revolutionize how we process and analyze complex astronomical datasets, potentially unlocking new insights into the fundamental laws of the universe.

Current State and Challenges in Quantum Astrophysics

The field of quantum astrophysics is at an early but active stage, with quantum computing being explored as a tool for interpreting complex astrophysical data. The current state of this interdisciplinary domain is shaped by rapid advances in both quantum technologies and astrophysical observation techniques. Quantum computers, with their potential to simulate complex quantum systems efficiently, may eventually offer new insights into cosmic phenomena that lie beyond the reach of classical computing methods.

One area where quantum approaches are being explored is the analysis of gravitational-wave data. Detecting and interpreting gravitational waves requires processing large datasets and performing computationally expensive calculations, such as matched filtering against large banks of template waveforms. Quantum algorithms, such as those based on the quantum Fourier transform, are being investigated as a way to accelerate these computations and improve the accuracy of gravitational-wave signal analysis.
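
To make the quantum Fourier transform concrete: on n qubits it applies the discrete Fourier transform to the 2^n amplitudes of the quantum state using O(n^2) gates (Hadamards and controlled phase rotations, plus a final reversal of qubit order), whereas a classical FFT on N = 2^n numbers needs O(N log N) operations. The pure-Python state-vector simulation below builds the textbook circuit and checks it against a directly computed DFT. It is an illustrative sketch; it says nothing about the cost of loading detector data into amplitudes or reading results out, which in practice limits any advantage for gravitational-wave analysis.

```python
import cmath
import math

def apply_hadamard(state, target, n):
    """Apply a Hadamard gate to qubit `target` (qubit 0 = most significant bit)."""
    bit = 1 << (n - 1 - target)
    s = 1 / math.sqrt(2)
    out = list(state)
    for i in range(len(state)):
        if not i & bit:  # visit each (|..0..>, |..1..>) amplitude pair once
            a, b = state[i], state[i | bit]
            out[i] = s * (a + b)
            out[i | bit] = s * (a - b)
    return out

def apply_controlled_phase(state, q1, q2, theta, n):
    """Multiply amplitudes where both qubits q1 and q2 are 1 by exp(i*theta)."""
    b1, b2 = 1 << (n - 1 - q1), 1 << (n - 1 - q2)
    phase = cmath.exp(1j * theta)
    return [amp * phase if (i & b1 and i & b2) else amp
            for i, amp in enumerate(state)]

def reverse_qubit_order(state, n):
    """Final swap network of the QFT: reverse the bit order of every index."""
    out = [0j] * len(state)
    for i, amp in enumerate(state):
        out[int(format(i, f"0{n}b")[::-1], 2)] = amp
    return out

def qft(state):
    """Textbook QFT circuit: H on each qubit, then controlled R_k rotations."""
    n = len(state).bit_length() - 1
    assert len(state) == 1 << n, "state length must be a power of two"
    for q in range(n):
        state = apply_hadamard(state, q, n)
        for m in range(q + 1, n):
            # R_k with k = m - q + 1 adds the phase 2*pi / 2**k
            state = apply_controlled_phase(state, q, m, 2 * math.pi / 2 ** (m - q + 1), n)
    return reverse_qubit_order(state, n)

def dft(x):
    """Reference unitary DFT with the QFT sign convention exp(+2*pi*i*j*k/N)."""
    N = len(x)
    return [sum(x[j] * cmath.exp(2j * math.pi * j * k / N) for j in range(N)) / math.sqrt(N)
            for k in range(N)]
```

For instance, `qft([0, 1, 0, 0])` returns approximately `[0.5, 0.5j, -0.5, -0.5j]`, the Fourier transform of the two-qubit basis state with index 1.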

Another frontier in quantum astrophysics is the simulation of stellar and galactic evolution. Quantum simulation algorithms are being investigated to model the quantum mechanical processes that occur in extreme astrophysical environments, such as the dense matter in neutron-star interiors or the vicinity of black-hole event horizons. These simulations could provide new insights into the fundamental physics governing these cosmic objects.

Despite these advancements, the field faces several significant challenges. One of the most pressing issues is the current limitations of quantum hardware. While quantum computers have shown theoretical potential, practical implementations are still in their infancy. The number of qubits in existing quantum systems is limited, and maintaining quantum coherence for extended periods remains a significant hurdle.
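
A back-of-the-envelope model shows why both qubit quality and coherence matter: if every gate fails independently with probability eps, a circuit of G gates runs without error with probability about (1 - eps)^G, and the circuit's total duration must also stay well below the coherence time T2. The gate error, gate time, and T2 values used below are illustrative assumptions, not measurements from any particular device, and the independent-error model is a deliberate simplification.

```python
import math

def error_free_probability(num_gates, gate_error):
    """Probability that no gate fails, assuming independent errors per gate."""
    return (1.0 - gate_error) ** num_gates

def coherence_factor(depth, gate_time_s, t2_s):
    """Crude dephasing factor exp(-t / T2) for a circuit lasting depth * gate_time."""
    return math.exp(-depth * gate_time_s / t2_s)

def max_gates(gate_error, target_success):
    """Largest gate count whose error-free probability stays >= target_success."""
    return math.floor(math.log(target_success) / math.log(1.0 - gate_error))
```

Under these assumptions, `max_gates(1e-3, 0.5)` gives 692: a processor with a 0.1% error per gate supports only about 700 gates before the error-free probability drops below one half, far short of the circuit sizes that large astrophysical simulations are expected to need. Similarly, `coherence_factor(1000, 50e-9, 100e-6)` (1,000 layers of 50 ns gates against a 100 µs T2) evaluates to exp(-0.5), about 0.61.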

Additionally, the development of quantum algorithms specifically tailored for astrophysical problems is still in its early stages. Bridging the gap between quantum computing theory and practical applications in astrophysics requires interdisciplinary collaboration and innovative approaches to algorithm design.

Data integration poses another challenge. Combining the outputs of quantum computations with traditional astrophysical data and models requires new methodologies and frameworks. Ensuring the reliability and interpretability of results obtained through quantum methods is crucial for their acceptance and integration into mainstream astrophysical research.
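
Part of making quantum results interpretable is reporting their statistical uncertainty, because a quantum processor returns samples (shots) rather than exact expectation values. For a single-qubit Pauli-Z measurement, each shot yields +1 (outcome 0) or -1 (outcome 1), so the estimate of <Z> carries a standard error of roughly sqrt((1 - <Z>^2) / shots). The sketch below computes both from a counts dictionary; the dictionary format is an assumption chosen for illustration, not the output format of any specific SDK.

```python
import math

def z_expectation(counts):
    """Estimate <Z> and its standard error from single-qubit measurement counts.

    counts: dict mapping outcome '0' or '1' to the number of shots observed
    (a hypothetical format chosen for this sketch).
    """
    n0, n1 = counts.get("0", 0), counts.get("1", 0)
    shots = n0 + n1
    if shots == 0:
        raise ValueError("no shots recorded")
    mean = (n0 - n1) / shots         # outcome 0 -> +1, outcome 1 -> -1
    variance = 1.0 - mean * mean     # per-shot variance of a +/-1 variable
    return mean, math.sqrt(variance / shots)
```

For example, `z_expectation({"0": 750, "1": 250})` gives an estimate of 0.5 with a standard error of about 0.027. Halving the error bar requires four times as many shots, which matters when quantum-derived quantities are combined with classical pipelines that carry their own error budgets.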

Furthermore, the field faces a shortage of experts who are proficient in both quantum computing and astrophysics. Training a new generation of researchers who can navigate both domains is essential for driving progress in quantum astrophysics.

As the field continues to evolve, addressing these challenges will be crucial for realizing the full potential of quantum computing in astrophysical data interpretation. The coming years are likely to see increased focus on developing more robust quantum hardware, refining quantum algorithms for specific astrophysical applications, and fostering collaborations between quantum physicists and astrophysicists to tackle some of the universe's most profound mysteries.

Existing Quantum Solutions for Astrophysical Data Interpretation

01 Quantum data processing and interpretation techniques

Advanced methods for processing and interpreting data generated by quantum computers, including algorithms for noise reduction, error correction, and signal enhancement. These techniques aim to improve the accuracy and reliability of quantum computations, enabling more effective data interpretation in quantum systems.

- Quantum-enhanced data visualization techniques: Innovative approaches to data visualization that leverage quantum computing capabilities. These techniques aim to provide new ways of representing and interpreting complex datasets, potentially revealing patterns and insights that are difficult to discern using traditional visualization methods.

02 Quantum machine learning for data analysis

Integration of quantum computing with machine learning algorithms to enhance data analysis capabilities. This approach leverages the unique properties of quantum systems to process complex datasets more efficiently, potentially leading to faster and more accurate insights in fields such as finance, healthcare, and scientific research.

03 Quantum-classical hybrid systems for data interpretation

Development of hybrid systems that combine quantum and classical computing resources to optimize data interpretation tasks. These systems aim to leverage the strengths of both quantum and classical architectures, allowing for more flexible and efficient data processing across various applications.

04 Quantum algorithms for large-scale data analysis

Creation of specialized quantum algorithms designed to handle and interpret large-scale datasets more efficiently than classical methods. These algorithms exploit quantum parallelism and superposition to process vast amounts of information, potentially revolutionizing fields such as bioinformatics, climate modeling, and financial analysis.

05 Quantum error mitigation in data interpretation

Development of techniques to mitigate errors and improve the reliability of quantum computations in data interpretation tasks. This includes advanced error correction codes, noise reduction methods, and robust quantum circuit designs to enhance the accuracy of quantum-based data analysis and interpretation.
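
One widely studied example of the error mitigation techniques listed above is zero-noise extrapolation: the same circuit is run at deliberately amplified noise levels (scale factors 1, 2, 3, ...), and the measured expectation values are extrapolated back to zero noise. The sketch below performs Richardson (polynomial) extrapolation using Lagrange weights. The quadratic noise model in the test values is invented so that the extrapolation is exact; it is not data from real hardware.

```python
def richardson_zero_noise(scales, values):
    """Extrapolate expectation values measured at noise scales to scale 0.

    Fits the unique polynomial of degree len(scales) - 1 through the points
    (scale, value) and evaluates it at 0, using Lagrange basis weights.
    """
    if len(scales) != len(values) or len(set(scales)) != len(scales):
        raise ValueError("need distinct scales, one value per scale")
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= (0.0 - s_j) / (s_i - s_j)  # Lagrange basis L_i(0)
        estimate += weight * v_i
    return estimate
```

With scales (1, 2, 3) and values (0.71, 0.64, 0.59), generated from the hypothetical model 0.8 - 0.1*s + 0.01*s^2, the function recovers 0.8. The weights in that case are (3, -3, 1), which shows the usual trade-off: extrapolation removes bias but amplifies the shot noise in each measured value.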

Key Players in Quantum Astrophysics Research

The quantum computing landscape for astrophysical data interpretation is in its early stages, with significant potential for growth. The market is relatively small but expanding rapidly as research institutions and tech giants invest in quantum technologies. Companies like IBM, Google, and Intel are at the forefront, developing quantum hardware and software solutions. Emerging players such as Origin Quantum and Universal Quantum are also making strides. The technology is still in its infancy, with limited practical applications in astrophysics, but advancements in quantum algorithms and error correction are accelerating progress. Collaborations between academia and industry, exemplified by partnerships involving universities like Jilin University and the University of Sussex, are crucial for driving innovation in this field.

Amazon Technologies, Inc.

Technical Solution: Amazon's approach to applying quantum computing to astrophysical data interpretation is centered on its Amazon Braket service, which provides access to various quantum hardware and simulators. Amazon is developing quantum algorithms for optimization problems in astrophysics, such as parameter estimation in cosmological models. Its hybrid quantum-classical algorithms show promise in accelerating the analysis of large-scale structure formation and dark energy dynamics [6]. Quantum annealing on D-Wave systems, which were accessible through Braket until D-Wave hardware was withdrawn from the service in 2022, has been applied to inverse problems in astrophysics, potentially improving our understanding of galaxy cluster dynamics and dark matter distribution [7].

Strengths: Cloud-based quantum computing infrastructure, diverse hardware access, and focus on hybrid quantum-classical algorithms. Weaknesses: Less direct involvement in quantum hardware development compared to some competitors.
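
To use quantum annealing on an inverse problem, the problem must first be written as a quadratic unconstrained binary optimization (QUBO): minimize x^T Q x over binary vectors x. A binary least-squares inverse problem, min ||Ax - b||^2, fits this form. Expanding gives x^T A^T A x - 2 b^T A x + b^T b, and because x_i^2 = x_i for binary variables, the linear term can be folded into the diagonal of Q = A^T A. The sketch below builds that QUBO and, in place of an annealer, solves it by brute force, which is feasible only for a handful of variables. The matrix A and vector b in the test values are toy values, not an astrophysical model.

```python
from itertools import product

def least_squares_qubo(A, b):
    """Build Q and a constant so that x^T Q x + const == ||A x - b||^2 for binary x."""
    m, n = len(A), len(A[0])
    Q = [[sum(A[r][i] * A[r][j] for r in range(m)) for j in range(n)] for i in range(n)]
    for i in range(n):
        # Linear term -2 (A^T b)_i x_i moves onto the diagonal since x_i^2 == x_i.
        Q[i][i] -= 2 * sum(A[r][i] * b[r] for r in range(m))
    const = sum(v * v for v in b)
    return Q, const

def qubo_energy(Q, x):
    """Evaluate x^T Q x for a binary tuple x."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))

def brute_force_qubo(Q):
    """Exhaustive minimization over all 2^n binary vectors (stand-in for an annealer)."""
    n = len(Q)
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(Q, x))
```

With A = [[1, 0, 1], [0, 1, 1], [1, 1, 0]] and b = [2, 1, 1], the minimizer is (1, 0, 1) with zero residual. On an annealer, the brute-force search would be replaced by sampling low-energy states of the same Q.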

Intel Corp.

Technical Solution: Intel's approach to quantum computing for astrophysical data interpretation focuses on developing scalable quantum systems based on spin qubits in silicon. Their Horse Ridge II cryogenic control chip enables the control of multiple quantum dots, potentially allowing for larger-scale quantum simulations of astrophysical phenomena [8]. Intel is also working on quantum-inspired algorithms that can run on classical hardware, providing immediate benefits to astrophysical research. Their neuromorphic computing research, while not strictly quantum, shows potential for efficient processing of complex astrophysical data patterns [9].

Strengths: Strong focus on scalable quantum hardware, integration with classical computing infrastructure, and potential for near-term quantum-inspired solutions. Weaknesses: Relatively newer entrant in the quantum computing field compared to some competitors.

Core Quantum Algorithms for Astrophysical Analysis

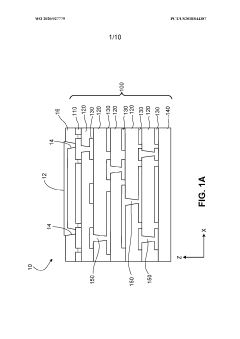

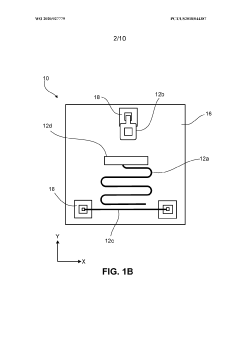

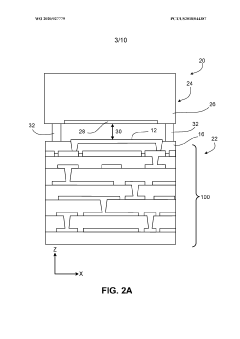

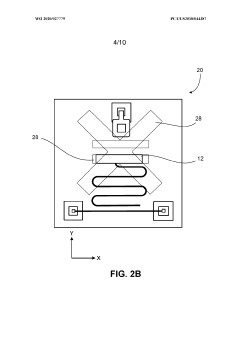

Signal distribution for a quantum computing system

Patent WO2020027779A1

Innovation

- A multilayer wiring stack with a low-loss capping layer, formed from materials like single crystal silicon, is used to house qubit control and readout elements, allowing these elements to be electrically connected through conductive vias, thereby reducing the need for deep via etches and minimizing interference, while maintaining high coherence and scalability.

Quantum Computing Infrastructure for Astrophysics

The development of quantum computing infrastructure for astrophysics represents a significant leap forward in our ability to process and interpret vast amounts of astronomical data. This infrastructure is designed to leverage the unique properties of quantum systems to perform complex calculations and simulations that are currently intractable for classical computers.

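At the core of this infrastructure are quantum processors built from quantum bits, or qubits. Unlike a classical bit, a qubit can exist in a superposition of 0 and 1, and a register of n qubits is described by 2^n complex amplitudes. Quantum algorithms manipulate these amplitudes through interference; a measurement, however, returns only one outcome, so the benefit is not simple parallel processing but the ability to steer probability toward useful answers. This capability is potentially valuable in astrophysics, where researchers often deal with multidimensional datasets and complex physical models.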
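
A small simulation shows both sides of the superposition picture: an n-qubit register is described by 2^n complex amplitudes, yet each measurement returns a single n-bit string drawn with probability |amplitude|^2. The sketch below prepares a uniform superposition (a Hadamard on every qubit, starting from all zeros) and samples shots with Python's `random` module; the seed and qubit count are arbitrary choices for illustration.

```python
import math
import random
from collections import Counter

def uniform_superposition(n):
    """Amplitudes after a Hadamard on each of n qubits starting from |0...0>."""
    amp = 1 / math.sqrt(2 ** n)
    return [amp] * (2 ** n)

def sample(state, shots, seed=0):
    """Draw measurement outcomes as bitstrings with Born-rule probabilities."""
    n = len(state).bit_length() - 1
    probs = [abs(a) ** 2 for a in state]
    rng = random.Random(seed)
    outcomes = rng.choices(range(len(state)), weights=probs, k=shots)
    return Counter(format(i, f"0{n}b") for i in outcomes)
```

With `uniform_superposition(10)` the register holds 1,024 amplitudes, but `sample(state, 100)` yields at most 100 distinct bitstrings. Recovering all amplitudes would take many repeated runs, which is why useful quantum algorithms rely on interference to concentrate probability on the answer.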

Quantum memory systems form another crucial component of this infrastructure. These systems are designed to store and manipulate quantum states with high fidelity, preserving quantum information throughout complex computations. Quantum error correction techniques are being developed to protect stored states against decoherence, the loss of quantum coherence through unwanted interaction with the environment.
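
The simplest illustration of how redundancy protects information is the three-qubit bit-flip repetition code: one logical bit is stored in three physical qubits and decoded by majority vote, so it fails only if two or more of them flip. With an independent flip probability p, the logical error rate is 3p^2(1 - p) + p^3 = 3p^2 - 2p^3, which is smaller than p whenever p < 1/2. The sketch below compares that formula with a seeded Monte Carlo run. It models classical bit flips only; real quantum codes must also correct phase errors and extract syndromes without disturbing the encoded state, so it illustrates the redundancy principle rather than a working quantum memory.

```python
import random

def logical_error_rate(p):
    """Exact failure probability of majority-vote decoding over 3 copies."""
    return 3 * p**2 - 2 * p**3

def simulate(p, trials=100_000, seed=1):
    """Monte Carlo estimate: flip each of 3 bits with probability p, decode by majority."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        failures += flips >= 2  # majority vote returns the wrong logical bit
    return failures / trials
```

At p = 0.1 the formula gives a logical error rate of 0.028, and the simulation lands close to it; the protection improves quadratically as the physical error rate falls.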

Quantum communication networks are being developed to transmit quantum information between different nodes of the infrastructure. Some of these networks use quantum key distribution (QKD) protocols to establish shared secret keys whose interception can be detected; those keys then protect the confidentiality of data transfers, which matters when dealing with sensitive astronomical observations or proprietary research data.
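
Key establishment in QKD can be illustrated with the sifting stage of BB84, the best-known QKD protocol. The sender encodes random bits in randomly chosen bases, the receiver measures in independently random bases, and both keep only the positions where the bases matched (about half). The classical simulation below assumes an ideal, noiseless channel with no eavesdropper. A real system would also estimate the error rate on a sample of the key and then apply error correction and privacy amplification, none of which is modeled here.

```python
import random

def bb84_sift(n, seed=0):
    """Simulate BB84 basis sifting over an ideal channel with no eavesdropper.

    Bases: 0 = rectilinear (Z), 1 = diagonal (X). When the receiver's basis
    matches the sender's, the measured bit equals the sent bit; otherwise the
    outcome is uniformly random and the position is discarded during sifting.
    """
    rng = random.Random(seed)
    alice_bits = [rng.randint(0, 1) for _ in range(n)]
    alice_bases = [rng.randint(0, 1) for _ in range(n)]
    bob_bases = [rng.randint(0, 1) for _ in range(n)]
    bob_bits = [a if ab == bb else rng.randint(0, 1)
                for a, ab, bb in zip(alice_bits, alice_bases, bob_bases)]
    # Bases (not bits) are compared over a public channel; keep matching positions.
    keep = [i for i in range(n) if alice_bases[i] == bob_bases[i]]
    return [alice_bits[i] for i in keep], [bob_bits[i] for i in keep]
```

Over 10,000 transmitted bits, both parties end up with identical sifted keys of roughly 5,000 bits. An eavesdropper measuring in the wrong basis would introduce disagreements in about a quarter of the sifted positions, which is what the error-rate check in a full protocol detects.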

Specialized quantum algorithms tailored for astrophysical applications are a key focus of research and development. These algorithms are designed to exploit quantum phenomena such as superposition and entanglement to solve problems in areas like gravitational wave detection, cosmological simulations, and exoplanet analysis. Quantum machine learning techniques are also being integrated into this infrastructure to enhance pattern recognition and data classification capabilities.
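
Grover's search algorithm is a standard example of exploiting superposition and interference: for an unstructured search over N items with one marked item, about (pi/4)*sqrt(N) oracle queries suffice, versus roughly N/2 on average for classical search. The pure-Python state-vector sketch below applies the oracle (a sign flip on the marked index) and the diffusion step (inversion about the mean), and checks the result against the closed-form success probability sin^2((2k + 1)*theta), where sin(theta) = 1/sqrt(N). It illustrates the mechanism only; whether a given astrophysical task can be cast as such a search is a separate question.

```python
import math

def grover_success(n_items, marked, iterations):
    """Simulate Grover iterations on a state vector; return P(measure `marked`)."""
    amp = [1 / math.sqrt(n_items)] * n_items  # uniform superposition over all items
    for _ in range(iterations):
        amp[marked] = -amp[marked]            # oracle: phase flip on the marked item
        mean = sum(amp) / n_items
        amp = [2 * mean - a for a in amp]     # diffusion: inversion about the mean
    return amp[marked] ** 2

def grover_formula(n_items, iterations):
    """Closed form sin^2((2k + 1) * theta) with sin(theta) = 1 / sqrt(N), one marked item."""
    theta = math.asin(1 / math.sqrt(n_items))
    return math.sin((2 * iterations + 1) * theta) ** 2

def optimal_iterations(n_items):
    """Roughly (pi / 4) * sqrt(N) iterations maximize the success probability."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))
```

For N = 1,024, `optimal_iterations` returns 25, and after 25 iterations the marked item is measured with probability above 0.99, compared with an average of about 512 queries for a classical scan.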

The quantum computing infrastructure for astrophysics also includes classical-quantum hybrid systems. These systems combine the strengths of both classical and quantum computing paradigms, allowing for efficient pre-processing and post-processing of data using classical computers while leveraging quantum systems for the most computationally intensive tasks.
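
A minimal picture of this hybrid pattern is a variational loop: a parameterized quantum circuit produces an expectation value, and a classical optimizer updates the parameters. In the sketch below the quantum step is simulated exactly: for one qubit prepared by a rotation RY(theta) from |0>, <Z> = cos(theta). Gradients come from the parameter-shift rule, [f(theta + pi/2) - f(theta - pi/2)] / 2, which on hardware needs only two extra circuit evaluations per parameter. The cost function, learning rate, and step count are illustrative assumptions.

```python
import math

def expectation_z(theta):
    """Stand-in for the quantum step: <Z> after RY(theta)|0>, computed exactly.

    On hardware this value would be estimated from repeated measurements.
    """
    return math.cos(theta)

def parameter_shift_grad(f, theta):
    """Parameter-shift rule for gates generated by a Pauli operator (e.g. RY)."""
    return (f(theta + math.pi / 2) - f(theta - math.pi / 2)) / 2

def gradient_descent(theta=0.1, lr=0.4, steps=100):
    """Classical loop driving <Z> toward its minimum of -1 (reached at theta = pi)."""
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(expectation_z, theta)
    return theta, expectation_z(theta)
```

Starting from theta = 0.1, the loop converges to theta close to pi and <Z> close to -1. In a real workflow, the classical side would also handle pre-processing of the input data and post-processing of the measured results.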

To support the development and operation of this infrastructure, dedicated quantum software development kits (SDKs) and application programming interfaces (APIs) are being created. These tools enable astrophysicists to harness the power of quantum computing without requiring in-depth knowledge of quantum mechanics or low-level quantum circuit design.

As this infrastructure continues to evolve, it promises to revolutionize our understanding of the universe by enabling more accurate simulations of cosmic phenomena, faster analysis of observational data, and the exploration of previously intractable astrophysical problems. The integration of quantum computing into astrophysics research workflows is poised to unlock new insights into the fundamental nature of space, time, and matter.

Ethical Implications of Quantum Astrophysics

The intersection of quantum computing and astrophysics raises profound ethical considerations that extend beyond scientific and technological realms. As quantum computing enhances our ability to interpret vast amounts of astrophysical data, it simultaneously challenges our traditional understanding of privacy, security, and the nature of knowledge itself.

One primary ethical concern is that a sufficiently large, fault-tolerant quantum computer running Shor's algorithm could break widely used public-key cryptosystems such as RSA and elliptic-curve schemes, potentially compromising the security of sensitive astronomical data and research findings. This capability could lead to unauthorized access to proprietary information or even manipulation of data, undermining the integrity of scientific discoveries and collaborations in astrophysics.
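
The threat to encryption comes from Shor's algorithm, which reduces factoring an integer N to finding the order r of a base a modulo N (the smallest r with a^r = 1 mod N). A quantum computer can find r efficiently using the quantum Fourier transform; the factors then follow classically from gcd(a^(r/2) - 1, N) and gcd(a^(r/2) + 1, N). In the sketch below the order is found by brute force, which takes exponential time for cryptographic key sizes; only that step is what a large fault-tolerant quantum computer would replace. The small moduli in the tests are toy examples.

```python
import math

def order(a, n):
    """Smallest r > 0 with a**r % n == 1 (brute force; the step Shor's algorithm speeds up)."""
    if math.gcd(a, n) != 1:
        raise ValueError("a must be coprime to n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical_part(n, a):
    """Turn the order of a mod n into nontrivial factors, or None if this base fails."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = order(a, n)
    if r % 2 == 1:
        return None               # odd order: retry with another base
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None               # a^(r/2) == -1 mod n: retry with another base
    return math.gcd(y - 1, n), math.gcd(y + 1, n)
```

For example, the order of 7 modulo 15 is 4, and 7^2 mod 15 = 4 yields the factors gcd(3, 15) = 3 and gcd(5, 15) = 5.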

Moreover, the unprecedented processing power of quantum computers may exacerbate existing inequalities in scientific research. Institutions and countries with access to quantum computing resources could gain a significant advantage in astrophysical research, potentially widening the gap between well-funded and under-resourced scientific communities. This disparity could lead to an uneven distribution of knowledge and discoveries, raising questions about fairness and equal opportunities in scientific advancement.

The rapid acceleration of data interpretation also raises ethical questions about the pace of scientific discovery. If quantum computing speeds up the analysis of complex astrophysical phenomena, discoveries may outpace our ability to understand and responsibly manage their implications. This mismatch between technological capability and ethical readiness could lead to premature or misguided conclusions about the universe, which in turn could influence public understanding and policy decisions.

Furthermore, the application of quantum computing in astrophysics may prompt renewed philosophical debate about determinism and free will. As models and predictions of cosmic events become more accurate, questions arise about the nature of causality and the role of human agency in a universe that may appear predetermined. These questions bear on how we perceive our place in the cosmos and on our responsibility as stewards of scientific knowledge.

The ethical implications also extend to the possible discovery of extraterrestrial life or intelligence. Quantum computing could improve our ability to search large observational datasets for signs of life beyond Earth. That possibility raises complex ethical questions about first-contact protocols, the potential impact on human societies, and our moral obligations to other forms of life in the universe.

In conclusion, navigating the ethical landscape of quantum astrophysics requires robust frameworks for responsible research and data management. These frameworks must address data security, equitable access to scientific resources, and the responsible dissemination of groundbreaking discoveries. Only by considering these ethical dimensions carefully can we ensure that the transformative potential of quantum computing in astrophysics is used for the benefit of all humanity and in keeping with our shared values.