The Future of Quantum Computing in Big Data Analysis

JUL 17, 2025 · 9 MIN READ

Quantum Computing Evolution and Objectives

Quantum computing has emerged as a revolutionary technology with the potential to transform various fields, including big data analysis. The evolution of quantum computing can be traced back to the early 1980s when Richard Feynman proposed the idea of using quantum mechanical systems to simulate quantum phenomena. Since then, the field has progressed rapidly, with significant milestones achieved in both theoretical and practical aspects.

The development of quantum computing has been driven by the limitations of classical computing in solving complex problems. As data volumes continue to grow exponentially, traditional computing systems struggle to process and analyze vast amounts of information efficiently. Quantum computing offers a promising approach to this challenge by leveraging the principles of quantum mechanics, such as superposition and entanglement, to perform certain computations more efficiently than any known classical method.

The primary objective of quantum computing in big data analysis is to unlock new insights and solve problems that are currently intractable for classical computers. This includes optimizing complex systems, simulating molecular interactions for drug discovery, and enhancing machine learning algorithms. Quantum bits, or qubits, can exist in superpositions of states, so quantum algorithms can in principle act on many computational paths at once and use interference to amplify correct answers. For suitable problems this could significantly reduce computation time and uncover patterns that were previously hidden, although the speedup depends on the algorithm and is not a general form of parallel processing.

One of the key goals in the field is to achieve quantum supremacy (also called quantum advantage), the point at which a quantum computer solves a problem that is beyond the practical reach of even the most powerful classical supercomputers. Google claimed this milestone in 2019 with its 53-qubit Sycamore processor on a random circuit sampling task, although the claim has been contested, and the practical applications of quantum computing in big data analysis are still in their early stages.

The evolution of quantum computing technology has seen significant advancements in qubit design, error correction techniques, and quantum algorithms. Researchers are working on developing more stable and scalable qubit architectures, such as superconducting circuits, trapped ions, and topological qubits. These efforts aim to increase the coherence time of qubits and reduce error rates, which are crucial for performing complex computations on large datasets.

As the field progresses, the integration of quantum computing with existing big data infrastructure and analytics tools becomes increasingly important. The development of hybrid quantum-classical algorithms and quantum-inspired classical algorithms represents a promising approach to leveraging the strengths of both paradigms. These hybrid systems aim to address near-term challenges in big data analysis while paving the way for more advanced quantum applications in the future.

Big Data Analysis Market Demand

The demand for big data analysis solutions has been growing exponentially across various industries, driven by the increasing volume, velocity, and variety of data generated daily. As organizations seek to harness the power of their data assets, the market for advanced analytics tools and technologies continues to expand rapidly. The global big data analytics market size was valued at $271.83 billion in 2022 and is projected to reach $655.53 billion by 2029, exhibiting a compound annual growth rate (CAGR) of 13.4% during the forecast period.
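The quoted growth figures can be checked against each other with a few lines of arithmetic. The sketch below assumes the forecast period runs from 2022 to 2029 (seven compounding years); the function name `cagr` is illustrative and not taken from the source.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in USD billions.
value_2022 = 271.83
value_2029 = 655.53

# Assumption: seven compounding years between the two quoted values.
rate = cagr(value_2022, value_2029, 2029 - 2022)
print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption the implied rate agrees with the 13.4% stated in the text, so the three numbers are internally consistent.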

Several key factors are fueling this market growth. First, the proliferation of Internet of Things (IoT) devices and sensors has led to an unprecedented surge in data generation, creating a pressing need for sophisticated analysis tools. Second, businesses across sectors such as finance, healthcare, retail, and manufacturing are increasingly relying on data-driven decision-making to gain competitive advantages. This shift towards data-centric strategies has heightened the demand for advanced analytics capabilities.

The healthcare sector, in particular, has emerged as a significant driver of big data analytics adoption. The need for personalized medicine, improved patient outcomes, and efficient resource allocation has spurred investments in analytics solutions. Similarly, the financial services industry is leveraging big data analytics for risk management, fraud detection, and customer insights, further propelling market growth.

E-commerce and retail sectors are also major contributors to the rising demand for big data analytics. These industries utilize advanced analytics for customer segmentation, personalized marketing, and supply chain optimization. The ability to analyze vast amounts of customer data in real-time has become crucial for maintaining a competitive edge in the digital marketplace.

As the volume and complexity of data continue to grow, traditional computing methods are struggling to keep pace with analytical requirements. This limitation has created a significant opportunity for quantum computing in the big data analysis market. Quantum computers have the potential to solve certain classes of problems substantially faster than classical computers. Known speedups range from quadratic (unstructured search with Grover's algorithm) to superpolynomial (integer factoring with Shor's algorithm), so the benefit depends heavily on the problem and on how efficiently the data can be loaded into the quantum processor.
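To give a sense of scale for one well-understood case, the sketch below compares query counts for unstructured search, where Grover's algorithm offers a quadratic rather than exponential speedup. It counts oracle queries only and assumes a single marked item; it ignores data loading, error correction overhead, and gate times, and the function names are illustrative.

```python
import math


def classical_expected_queries(n_items: int) -> float:
    """Expected lookups to find one marked item by checking items one by one."""
    return (n_items + 1) / 2


def grover_iterations(n_items: int) -> int:
    """Approximate optimal number of Grover iterations for one marked item."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


for n in (10**6, 10**9, 10**12):
    print(n, classical_expected_queries(n), grover_iterations(n))
```

The gap widens as the dataset grows, but it grows as the square root of the dataset size, which is why the practical advantage depends so strongly on the surrounding costs.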

The integration of quantum computing with big data analysis is expected to revolutionize fields such as drug discovery, financial modeling, and climate change prediction. This convergence is likely to create new market segments and drive further innovation in the big data analytics industry. As quantum technologies mature and become more accessible, the demand for quantum-enhanced big data solutions is anticipated to surge, potentially reshaping the entire analytics landscape.

Quantum Computing Challenges in Big Data

Quantum computing presents significant challenges when applied to big data analysis. The sheer volume, velocity, and variety of big data pose unique obstacles for quantum systems. One primary challenge is the limited number of qubits in current quantum computers, which restricts their ability to process massive datasets directly. This limitation necessitates the development of hybrid quantum-classical algorithms and data reduction techniques to make big data problems tractable for quantum systems.

Another major hurdle is the issue of quantum decoherence, where qubits lose their quantum properties due to interactions with the environment. This phenomenon becomes more pronounced as the size and complexity of quantum circuits increase, making it difficult to maintain quantum coherence for the extended periods required in big data analysis tasks. Researchers are actively working on error correction codes and fault-tolerant quantum computing architectures to mitigate this challenge.
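The basic idea behind error correction can be illustrated with a classical analogue of the quantum bit-flip code: store one logical bit in several physical bits and decode by majority vote. This is a simplified sketch that assumes independent bit flips with probability `p`; real quantum codes such as surface codes must also handle phase errors and noisy syndrome measurements, which this model omits.

```python
from math import comb


def logical_error_rate(p: float, n: int = 3) -> float:
    """Probability that a majority vote over n copies decodes to the wrong bit.

    Assumes each copy flips independently with probability p and n is odd.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd so the majority vote cannot tie")
    # The vote fails when more than half of the copies are flipped.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


for copies in (1, 3, 5, 7):
    print(copies, logical_error_rate(0.01, copies))
```

For `n = 3` the sum reduces to 3p² − 2p³, so redundancy helps only when the physical error rate is already small, which is why lowering qubit error rates and adding error correction go hand in hand.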

Data input and output also present significant obstacles. Efficiently loading classical big data into quantum states and extracting meaningful results from quantum measurements are non-trivial tasks. Developing quantum-inspired data encoding schemes and efficient quantum-to-classical interfaces are crucial areas of research to bridge this gap.
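One commonly discussed encoding is amplitude encoding, in which a length-N data vector becomes the amplitudes of a quantum state on roughly log2(N) qubits. The sketch below only computes the target amplitudes classically; it does not build the state-preparation circuit, which on real hardware is generally the expensive part. The function name `amplitude_encode` is illustrative.

```python
import math


def amplitude_encode(values):
    """Return (amplitudes, n_qubits) for a real-valued data vector.

    The vector is zero-padded to the next power of two and L2-normalized,
    because the squared amplitudes of a quantum state must sum to 1.
    """
    if not values:
        raise ValueError("need at least one value")
    # Smallest qubit count whose 2**n_qubits amplitudes can hold the data.
    n_qubits = max(1, (len(values) - 1).bit_length())
    padded = list(values) + [0.0] * (2**n_qubits - len(values))
    norm = math.sqrt(sum(v * v for v in padded))
    if norm == 0:
        raise ValueError("cannot encode the all-zero vector")
    return [v / norm for v in padded], n_qubits


amplitudes, qubits = amplitude_encode([3.0, 4.0, 0.0, 12.0, 5.0])
print(qubits, amplitudes)
```

The qubit count grows only logarithmically with the data size, but reading the result back out requires repeated measurement, so both ends of the pipeline need care.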

The inherent probabilistic nature of quantum measurements adds another layer of complexity to big data analysis. Unlike classical computers, which provide deterministic results, quantum computers often require multiple runs to obtain statistically significant outcomes. This probabilistic aspect necessitates the development of novel quantum algorithms and statistical methods tailored for big data applications.
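The need for repeated runs can be illustrated with a classical simulation of measurement sampling. The sketch assumes an idealized, noise-free qubit that returns 1 with a fixed probability `p_one`; each "shot" is one measurement, and the estimate's standard error shrinks as one over the square root of the number of shots. All names are illustrative.

```python
import math
import random


def estimate_probability(p_one: float, shots: int, rng: random.Random) -> float:
    """Estimate P(measure 1) from `shots` simulated measurements."""
    ones = sum(1 for _ in range(shots) if rng.random() < p_one)
    return ones / shots


def standard_error(p_one: float, shots: int) -> float:
    """Standard error of the estimate for a Bernoulli outcome."""
    return math.sqrt(p_one * (1 - p_one) / shots)


rng = random.Random(42)  # fixed seed so the demonstration is repeatable
for shots in (100, 10_000, 1_000_000):
    estimate = estimate_probability(0.3, shots, rng)
    print(shots, round(estimate, 4), round(standard_error(0.3, shots), 5))
```

Gaining one extra decimal digit of precision costs about 100 times more shots, which matters when every shot is a run on scarce quantum hardware.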

Scalability remains a critical challenge in applying quantum computing to big data. As datasets grow exponentially, scaling quantum algorithms and hardware to handle increasingly large problems becomes paramount. This includes developing quantum-inspired classical algorithms that can leverage the principles of quantum computing on classical hardware for certain big data tasks.

Furthermore, the integration of quantum computing with existing big data infrastructure and workflows poses significant technical and operational challenges. Developing quantum-compatible data processing pipelines, storage solutions, and software frameworks that can seamlessly interface with classical big data systems is essential for practical implementation.

Lastly, the shortage of skilled professionals who understand both quantum computing and big data analytics is a significant bottleneck. Bridging this knowledge gap and fostering interdisciplinary collaboration between quantum physicists, computer scientists, and data analysts is crucial for advancing the field and overcoming these challenges.

Current Quantum Algorithms for Big Data

01 Quantum Circuit Design and Optimization

This area focuses on developing and optimizing quantum circuits for various applications. It involves creating efficient quantum gate sequences, reducing circuit depth, and improving overall performance of quantum algorithms. Techniques may include circuit compression, gate decomposition, and noise mitigation strategies to enhance the reliability of quantum computations.

- Error Correction and Fault Tolerance: Error correction and fault tolerance are crucial for building reliable quantum computers. This field involves developing techniques to detect and correct quantum errors, as well as designing fault-tolerant quantum architectures. Methods may include surface codes, topological quantum computing, and other quantum error correction protocols to improve the stability and scalability of quantum systems.

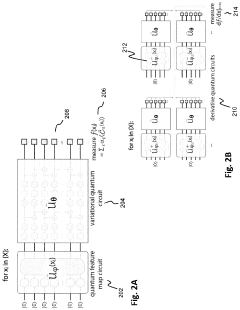

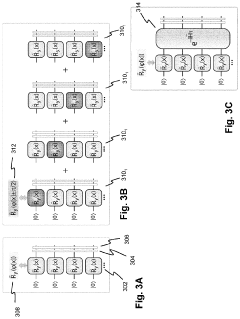

- Quantum-Classical Hybrid Algorithms: Hybrid algorithms combine classical and quantum computing techniques to solve complex problems. This approach leverages the strengths of both paradigms, using quantum processors for specific subroutines while classical computers handle other parts of the computation. Applications include optimization, machine learning, and chemistry simulations, aiming to achieve quantum advantage in practical scenarios.

- Quantum Hardware Architectures: This area focuses on the design and development of quantum hardware platforms. It includes research on various qubit technologies such as superconducting circuits, trapped ions, and topological qubits. The goal is to create scalable and coherent quantum processors with improved qubit connectivity, reduced crosstalk, and enhanced control systems for more powerful quantum computations.

- Quantum Software and Programming Tools: Quantum software development involves creating programming languages, compilers, and development environments specifically designed for quantum computers. This field aims to bridge the gap between quantum hardware and high-level applications, providing tools for quantum algorithm design, simulation, and optimization. It also includes efforts to standardize quantum programming interfaces and create user-friendly quantum development kits.

02 Error Correction and Fault Tolerance

Error correction and fault tolerance are crucial for building reliable quantum computers. This field encompasses techniques to detect and correct quantum errors, develop fault-tolerant quantum gates, and design robust quantum memory systems. It aims to mitigate the effects of decoherence and other sources of noise in quantum systems.

03 Quantum-Classical Hybrid Algorithms

This area explores the integration of quantum and classical computing paradigms to solve complex problems. It involves developing algorithms that leverage the strengths of both quantum and classical processors, optimizing the distribution of tasks between the two, and creating efficient interfaces for data exchange. These hybrid approaches aim to achieve quantum advantage in practical applications.

04 Quantum Hardware Architecture

This field focuses on the design and implementation of quantum computing hardware. It includes the development of various qubit technologies, such as superconducting circuits, trapped ions, and topological qubits. Research in this area also covers quantum memory systems, quantum interconnects, and scalable architectures for large-scale quantum processors.

05 Quantum Software and Programming Languages

This area involves the development of software tools, programming languages, and frameworks specifically designed for quantum computing. It includes creating high-level quantum programming languages, compilers that can optimize quantum circuits, and simulation tools for testing and debugging quantum algorithms. The goal is to make quantum computing more accessible to developers and researchers.

Key Quantum Computing Industry Players

The quantum computing landscape for big data analysis is rapidly evolving, with the market currently in its early growth stage. Major players like IBM, Google, and Amazon are investing heavily in quantum technologies, while startups such as Zapata Computing and 1QB Information Technologies are developing specialized quantum software solutions. The market size is projected to expand significantly in the coming years, driven by increasing demand for advanced data processing capabilities. Technologically, quantum computing is progressing, with companies like Origin Quantum and IBM making strides in quantum hardware development. However, the field is still in its nascent stages, with practical applications for big data analysis being actively explored but not yet fully realized at scale.

Amazon Technologies, Inc.

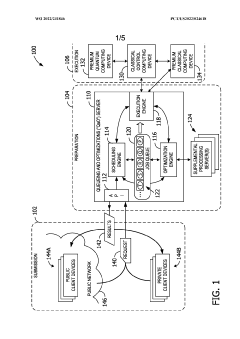

Technical Solution: Amazon's approach to quantum computing in big data analysis is centered around their Amazon Braket service, which provides access to various quantum hardware and simulators. They offer a hybrid quantum-classical infrastructure that allows users to experiment with quantum algorithms for big data problems using different qubit technologies[13]. Amazon is developing quantum annealing solutions for optimization problems in supply chain and logistics, which have direct applications in big data analysis[14]. They are also investing in error-corrected quantum computing research, aiming to build fault-tolerant quantum systems capable of handling large-scale data processing tasks[15]. Amazon's focus on quantum machine learning includes the development of variational quantum eigensolver (VQE) algorithms for data classification and feature selection in big datasets[16].

Strengths: Diverse quantum hardware access, strong integration with classical cloud computing, and focus on practical business applications. Weaknesses: Reliance on third-party quantum hardware and less in-house quantum hardware development compared to some competitors.

Zapata Computing, Inc.

Technical Solution: Zapata Computing specializes in developing quantum software and algorithms for big data analysis and machine learning applications. Their Orquestra platform integrates quantum and classical computational resources, allowing for the development of hybrid quantum-classical workflows for data analysis[17]. Zapata focuses on near-term quantum advantage in areas such as optimization, machine learning, and simulation, which are crucial for big data applications[18]. They have developed quantum-inspired algorithms that can run on classical hardware but leverage quantum principles to solve complex optimization problems in data analysis more efficiently[19]. Zapata's research includes quantum generative models and quantum reinforcement learning, which show promise for processing and generating insights from large datasets[20].

Strengths: Specialized quantum software development, focus on near-term quantum advantage, and strong industry partnerships. Weaknesses: Dependence on hardware developments from other companies and the challenge of achieving practical quantum advantage in the near term.

Breakthrough Quantum Technologies for Data Analysis

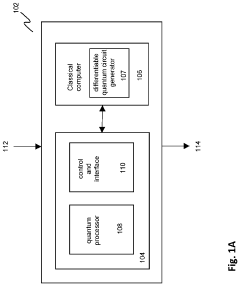

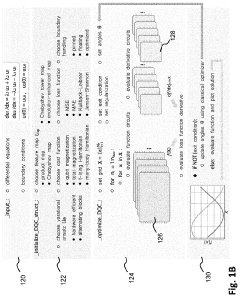

Solving a set of (non)linear differential equations using a hybrid data processing system comprising a classical computer system and a quantum computer system

Patent (pending): US20230418896A1

Innovation

- A quantum-classical hybrid system that uses differentiable quantum circuits to encode and solve differential equations, allowing for analytical derivatives and efficient encoding of solutions, compatible with near-term quantum hardware, and extensible to fault-tolerant systems, by employing quantum feature maps and variational circuits for optimization.

System and method of in-queue optimizations for quantum cloud computing

Patent: WO2022231846A1

Innovation

- A quantum computing system with a queueing and optimizations (QaO) server that performs in-queue optimizations, including prediction models for execution times and machine calibration, to improve the quality of quantum circuit execution, reduce wait times, and balance performance characteristics, utilizing both intra-job and inter-job optimizations to enhance fidelity and throughput.

Quantum Computing Infrastructure Requirements

Quantum computing infrastructure requirements for big data analysis are complex and demanding, necessitating significant advancements in both hardware and software components. The foundation of quantum computing systems relies on the development of stable and scalable qubits, which are the fundamental units of quantum information. Current quantum processors face challenges in maintaining coherence and minimizing errors, requiring sophisticated error correction techniques and, for platforms such as superconducting qubits, cryogenic cooling systems that operate at temperatures near absolute zero.

To effectively harness quantum computing for big data analysis, substantial improvements in qubit count and quality are essential. Leading quantum computing companies are striving to increase the number of qubits while simultaneously enhancing their fidelity and interconnectivity. This scaling effort is crucial for tackling complex big data problems that classical computers struggle to solve efficiently.

Quantum memory and quantum communication channels are integral components of the infrastructure, enabling the storage and transfer of quantum information. These elements are vital for implementing distributed quantum computing architectures capable of processing vast amounts of data across multiple quantum processors.

Software infrastructure for quantum computing in big data analysis requires the development of specialized quantum algorithms and programming languages. These tools must bridge the gap between classical data structures and quantum computational models, allowing data scientists to leverage quantum advantages without extensive quantum physics expertise.

Hybrid quantum-classical systems are emerging as a practical approach to integrating quantum capabilities into existing big data infrastructures. These systems combine the strengths of classical computing for data preprocessing and result interpretation with quantum processors for specific computational tasks that benefit from quantum speedup.
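The division of labor in a hybrid system can be sketched as a variational loop: a classical optimizer proposes circuit parameters, the quantum processor evaluates an expectation value, and the optimizer updates the parameters. In the minimal sketch below the quantum step is replaced by its known closed form for a single qubit (the expectation of Z after an RY rotation is cos θ), so no quantum hardware or SDK is involved. The gradient uses the parameter-shift rule, and all function names are illustrative.

```python
import math


def expectation_z(theta: float) -> float:
    """Stand-in for the quantum step: <Z> after RY(theta) applied to |0>."""
    return math.cos(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient of <Z> from two extra circuit evaluations shifted by pi/2."""
    shift = math.pi / 2
    return (expectation_z(theta + shift) - expectation_z(theta - shift)) / 2


def minimize(theta: float = 0.1, learning_rate: float = 0.4, steps: int = 100):
    """Classical gradient descent driving the (simulated) quantum evaluations."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, expectation_z(theta)


theta_opt, energy = minimize()
print(f"theta = {theta_opt:.4f}, <Z> = {energy:.6f}")
```

On real hardware, each call to `expectation_z` would be a batch of circuit executions whose result is estimated from many measurements, while the update rule stays on the classical side.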

As quantum computing infrastructure evolves, it must also address security concerns unique to quantum systems. Quantum-resistant cryptography and secure quantum communication protocols are essential to protect sensitive big data as it moves between classical and quantum domains.

The integration of quantum computing with cloud services and data centers presents another infrastructural challenge. Developing quantum cloud platforms that can seamlessly connect with traditional big data analytics tools will be crucial for widespread adoption in data-intensive industries.

Quantum-Safe Cryptography for Big Data

As quantum computing continues to advance, its potential impact on big data analysis becomes increasingly significant. However, this technological leap also brings new challenges to data security. Quantum-safe cryptography for big data is emerging as a critical field to address these concerns and ensure the protection of sensitive information in the quantum era.

Traditional public-key cryptographic methods, such as RSA and ECC, rely on the computational difficulty of certain mathematical problems, namely integer factorization and discrete logarithms. These algorithms are vulnerable to a sufficiently large fault-tolerant quantum computer, which could solve these problems in polynomial time using Shor's algorithm, whereas the best known classical algorithms require super-polynomial time. This vulnerability poses a significant threat to the security of big data systems, particularly those handling sensitive information in fields like finance, healthcare, and national security.
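The quantum threat to RSA comes from a reduction: factoring a number can be turned into finding the multiplicative order of a randomly chosen base, and order finding is the step that Shor's algorithm performs efficiently. The sketch below shows only the classical reduction on tiny numbers, with brute-force order finding standing in for the quantum subroutine. Function names are illustrative, and the caller is assumed to pass an odd composite `n` and a base with 1 < a < n.

```python
from math import gcd


def multiplicative_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (brute force, exponential in bit length)."""
    if gcd(a, n) != 1:
        raise ValueError("a and n must be coprime")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(n: int, a: int):
    """Try to split n using the order of a modulo n; None means pick another a."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared  # the base already shares a factor with n
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None  # odd order: this base does not help
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # a**(r/2) is -1 mod n: only trivial factors
    p = gcd(half - 1, n)
    return p, n // p


print(factor_from_order(15, 7))
print(factor_from_order(21, 2))
```

The brute-force loop is what makes this infeasible for real key sizes on classical hardware; replacing it with an efficient quantum order-finding routine is what breaks RSA-style schemes.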

Quantum-safe cryptography, also known as post-quantum cryptography, aims to develop cryptographic systems that are secure against both quantum and classical attacks. These new algorithms are designed to resist the computational power of quantum computers while remaining efficient on classical systems. Several approaches are being explored, including lattice-based cryptography, hash-based signatures, and code-based cryptography.

Lattice-based cryptography is one of the most promising candidates for quantum-safe encryption. It relies on the difficulty of solving certain problems in high-dimensional lattices, which are believed to be resistant to quantum attacks. This approach offers versatility and efficiency, making it suitable for various applications in big data environments.
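A toy version of a lattice-style scheme helps show where the hardness comes from. The sketch below encrypts a single bit with a simplified learning-with-errors (LWE) construction: the public key is a set of noisy linear equations in a secret vector, and only someone who knows the secret can strip the noise. The parameters `Q`, `N`, and `M` are deliberately tiny assumptions chosen for readability and are nowhere near secure, and this is not any standardized algorithm.

```python
import random

Q = 97  # modulus (toy value)
N = 4   # dimension of the secret vector (toy value)
M = 8   # number of noisy equations in the public key (toy value)


def keygen(rng: random.Random):
    """Return (secret, public_key) where public_key holds noisy inner products."""
    secret = [rng.randrange(Q) for _ in range(N)]
    rows = [[rng.randrange(Q) for _ in range(N)] for _ in range(M)]
    noisy = [
        (sum(a * s for a, s in zip(row, secret)) + rng.choice((-1, 0, 1))) % Q
        for row in rows
    ]
    return secret, (rows, noisy)


def encrypt(public_key, bit: int, rng: random.Random):
    """Add up a random subset of equations and shift by Q // 2 to encode a 1."""
    rows, noisy = public_key
    chosen = [i for i in range(M) if rng.random() < 0.5]
    u = [sum(rows[i][j] for i in chosen) % Q for j in range(N)]
    v = (sum(noisy[i] for i in chosen) + bit * (Q // 2)) % Q
    return u, v


def decrypt(secret, ciphertext) -> int:
    """Remove the secret-dependent part; what remains is noise or noise + Q // 2."""
    u, v = ciphertext
    d = (v - sum(a * s for a, s in zip(u, secret))) % Q
    return 1 if Q // 4 < d < 3 * Q // 4 else 0


rng = random.Random(1)
secret, public_key = keygen(rng)
print([decrypt(secret, encrypt(public_key, b, rng)) for b in (0, 1, 1, 0)])
```

Decryption is always correct here because the accumulated noise is at most `M`, which stays below `Q // 4`; real schemes choose much larger dimensions and carefully tuned noise distributions.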

Hash-based signatures provide another avenue for quantum-safe cryptography. These signatures rely only on the security of hash functions, which are considered resistant to quantum attacks when output sizes are chosen appropriately. Stateful schemes such as XMSS and LMS limit the number of signatures a key can produce and require careful state management, while stateless schemes avoid this at the cost of larger signatures. Both offer strong security guarantees and are particularly useful for software updates and long-term document verification in big data systems.
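The simplest hash-based construction, the Lamport one-time signature, shows both the idea and the signature-count limitation. The sketch below uses SHA-256 from Python's standard library; it is an illustration of the principle rather than a standardized scheme, and each key pair must sign only one message, because every signature reveals half of the private key.

```python
import hashlib
import secrets


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen():
    """Private key: 256 pairs of random secrets. Public key: their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(_h(zero), _h(one)) for zero, one in private]
    return private, public


def _bits(message: bytes):
    """The 256 bits of the message digest, most significant bit first."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(private, message: bytes):
    """Reveal one secret per digest bit, selected by the bit's value."""
    return [private[i][bit] for i, bit in enumerate(_bits(message))]


def verify(public, message: bytes, signature) -> bool:
    """Check that each revealed secret hashes to the matching public value."""
    if len(signature) != 256:
        return False
    return all(
        _h(revealed) == public[i][bit]
        for i, (revealed, bit) in enumerate(zip(signature, _bits(message)))
    )


private_key, public_key = keygen()
signature = sign(private_key, b"dataset-manifest-v1")
print(verify(public_key, b"dataset-manifest-v1", signature))
print(verify(public_key, b"tampered manifest", signature))
```

Practical schemes build trees of such one-time keys so that a single public key can cover many signatures, which is where the trade-off between state management and signature size arises.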

Code-based cryptography, based on the difficulty of decoding general linear codes, is another promising approach. These systems offer fast encryption and decryption speeds, making them suitable for high-throughput big data applications. However, they typically require larger key sizes, which can pose challenges in terms of storage and transmission.

As the field of quantum-safe cryptography evolves, standardization efforts are underway to ensure interoperability and widespread adoption. The National Institute of Standards and Technology (NIST) has led a multi-year process to evaluate and standardize post-quantum cryptographic algorithms. In August 2024 it published its first three finalized standards: FIPS 203 (ML-KEM, lattice-based key encapsulation), FIPS 204 (ML-DSA, lattice-based signatures), and FIPS 205 (SLH-DSA, hash-based signatures), with additional algorithms still under evaluation. This standardization is crucial for the integration of quantum-safe cryptography into existing big data infrastructure and applications.
