NVMe-oF Vs iSCSI: Latency, IOPS And CPU Overhead

SEP 19, 2025 | 9 MIN READ

NVMe-oF and iSCSI Evolution and Objectives

Storage networking has evolved significantly over the past two decades, with iSCSI and NVMe-oF representing two distinct generations of storage access protocols. iSCSI emerged in the early 2000s as a TCP/IP-based alternative to Fibre Channel, enabling organizations to leverage existing Ethernet infrastructure for block storage access. This protocol democratized SAN technology by reducing implementation costs and complexity, making enterprise storage networking accessible to a broader range of organizations.

The evolution of iSCSI has been characterized by incremental improvements in performance and reliability, with the protocol reaching maturity around 2010-2015. Despite its widespread adoption, iSCSI's fundamental architecture—built on the SCSI command set—introduced inherent limitations in terms of latency and processing overhead, particularly as storage media evolved from mechanical disks to solid-state technologies.

NVMe-oF (Non-Volatile Memory Express over Fabrics) represents a paradigm shift in storage networking, emerging around 2016 as a response to the performance limitations of legacy protocols. Unlike iSCSI, NVMe-oF was designed specifically for flash storage and modern computing architectures, with parallelism and efficiency as core design principles. The protocol extends the benefits of local NVMe connections across network fabrics, maintaining much of the performance advantage of direct-attached NVMe storage.

The primary objective of NVMe-oF is to minimize the protocol overhead and latency penalties traditionally associated with networked storage. By streamlining the command set and optimizing for parallel operations, NVMe-oF aims to deliver near-local performance for remote storage resources. This capability becomes increasingly critical as applications demand lower latency and higher throughput from storage systems.

For iSCSI, the evolutionary objectives have centered on improving compatibility, security, and management capabilities while maintaining its position as a cost-effective SAN solution. Recent developments include better integration with virtualization platforms and cloud environments, reflecting iSCSI's continued relevance in hybrid infrastructure deployments.

The technological trajectory of both protocols reflects broader industry trends toward disaggregation of compute and storage resources, enabling more flexible and efficient resource utilization in modern data centers. As organizations increasingly adopt composable infrastructure models, the performance characteristics of storage networking protocols—particularly latency, IOPS, and CPU overhead—have become critical differentiators in architectural decisions.

Understanding the evolution and objectives of these protocols provides essential context for evaluating their respective performance profiles and determining appropriate use cases in contemporary storage architectures.

Storage Protocol Market Demand Analysis

The storage protocol market is experiencing significant transformation driven by the increasing demands for high-performance, low-latency data access solutions. As enterprises continue to generate and process unprecedented volumes of data, the limitations of traditional storage protocols like iSCSI have become more apparent, creating market opportunities for next-generation technologies such as NVMe-oF (Non-Volatile Memory Express over Fabrics).

Market research indicates that the global storage networking market is projected to grow at a compound annual growth rate of 21.5% from 2021 to 2026, with NVMe-oF adoption being a primary growth driver. This acceleration is particularly evident in sectors requiring real-time data processing capabilities, including financial services, healthcare analytics, and artificial intelligence applications.

The demand for NVMe-oF solutions is largely fueled by its performance advantage over iSCSI. Enterprise customers increasingly prioritize storage solutions that minimize latency, maximize IOPS (input/output operations per second), and reduce CPU utilization. In performance-critical environments, the sub-millisecond latency offered by NVMe-oF is a compelling value proposition against iSCSI response times, which can reach 2-5 milliseconds in typical deployments.

Cloud service providers constitute another significant market segment driving NVMe-oF adoption. These providers require storage protocols that can efficiently handle multi-tenant environments while maintaining performance isolation and predictability. The reduced CPU overhead of NVMe-oF compared to iSCSI (typically 20-30% lower) translates directly into cost savings and improved service levels for these providers.

Regional market analysis reveals varying adoption rates, with North America leading NVMe-oF implementation, followed by Europe and Asia-Pacific. The Asia-Pacific region, however, is demonstrating the fastest growth rate as digital transformation initiatives accelerate across emerging economies.

Despite NVMe-oF's technical advantages, iSCSI maintains substantial market share due to its established ecosystem, lower implementation costs, and compatibility with existing infrastructure. Organizations with less stringent performance requirements continue to find iSCSI adequate for their storage networking needs, creating a bifurcated market landscape.

Industry surveys indicate that approximately 65% of enterprises plan to implement or expand NVMe-oF deployments within the next two years, while simultaneously maintaining iSCSI infrastructure for less demanding workloads. This hybrid approach reflects the market's pragmatic response to balancing performance requirements with investment protection.

The storage protocol market is further influenced by the growing prominence of software-defined storage solutions, which offer protocol flexibility and can adapt to evolving performance requirements. This trend suggests that protocol selection is increasingly becoming application-specific rather than enterprise-wide.

Current Technical Challenges in Storage Networking

Storage networking faces significant technical challenges as enterprises demand higher performance, lower latency, and more efficient resource utilization. The comparison between NVMe-oF (NVMe over Fabrics) and iSCSI protocols highlights several critical issues that storage architects and engineers must address in modern data center environments.

Latency remains a primary concern in storage networking implementations. iSCSI introduces considerable overhead through its TCP/IP stack processing, resulting in latencies that typically range from 0.5 ms to several milliseconds depending on network conditions. In contrast, NVMe-oF can achieve sub-100 microsecond latencies in optimized environments, but maintaining this advantage across diverse workloads and network topologies presents significant engineering challenges.

IOPS (Input/Output Operations Per Second) scalability presents another major technical hurdle. While iSCSI systems can deliver respectable performance for many applications, they often struggle to scale beyond 500K-1M IOPS without substantial hardware investments. NVMe-oF implementations theoretically support millions of IOPS, but realizing this potential requires careful attention to queue management, congestion control, and load balancing across storage targets.
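The link between latency, queue management, and IOPS can be made concrete with Little's Law (average I/Os in flight = throughput x average latency). The sketch below is illustrative only: the function names are made up for this example, the two latencies are the 100 microsecond and 0.5 ms figures quoted above, and the queue depth of 32 is an assumed value rather than a measured one.

```python
def outstanding_ios_needed(target_iops: float, latency_s: float) -> float:
    """Little's Law: average I/Os in flight = throughput x average latency."""
    if target_iops < 0 or latency_s < 0:
        raise ValueError("target_iops and latency_s must be non-negative")
    return target_iops * latency_s


def iops_ceiling(outstanding_ios: int, latency_s: float) -> float:
    """Upper bound on IOPS when at most `outstanding_ios` are in flight."""
    if latency_s <= 0:
        raise ValueError("latency_s must be positive")
    return outstanding_ios / latency_s


if __name__ == "__main__":
    target = 1_000_000  # 1M IOPS, the upper end of the range discussed above
    for label, latency_s in (("100 us", 100e-6), ("500 us", 500e-6)):
        needed = outstanding_ios_needed(target, latency_s)
        print(f"{label}: about {needed:.0f} I/Os in flight for {target:,} IOPS")

    # Assumed queue depth of 32 (hypothetical) at 500 us average latency.
    print(f"QD 32 at 500 us: ceiling of {iops_ceiling(32, 500e-6):,.0f} IOPS")
```

The takeaway is that higher per-I/O latency has to be paid for with more concurrency, which is why queue depth and queue count matter as much as raw link speed when scaling IOPS.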

CPU overhead differences between these protocols represent a critical operational challenge. iSCSI processing typically consumes 20-30% more CPU resources than NVMe-oF for equivalent workloads, creating efficiency bottlenecks in high-throughput environments. However, implementing NVMe-oF often requires specialized network interface cards with RDMA capabilities to fully offload protocol processing, introducing hardware compatibility and cost considerations.
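One way to compare CPU overhead on equal terms is to normalize it to CPU time per I/O. The following is a minimal sketch under stated assumptions: the helper names are hypothetical, and the sample measurement (16 cores at 50% utilization delivering 400,000 IOPS) is invented for illustration. Only the 25% gap is taken from the 20-30% range cited above.

```python
def cpu_us_per_io(cpu_utilization: float, cores: int, iops: float) -> float:
    """CPU microseconds spent per I/O.

    cpu_utilization is the average busy fraction (0.0 to 1.0) across `cores`.
    """
    if not 0.0 <= cpu_utilization <= 1.0:
        raise ValueError("cpu_utilization must be between 0 and 1")
    if cores < 1 or iops <= 0:
        raise ValueError("cores must be >= 1 and iops must be positive")
    return cpu_utilization * cores * 1_000_000 / iops


def cores_needed(target_iops: float, us_per_io: float) -> float:
    """Fully busy cores required to sustain target_iops at a given CPU cost."""
    return target_iops * us_per_io / 1_000_000


if __name__ == "__main__":
    # Hypothetical iSCSI measurement: 16 cores, 50% busy, 400k IOPS.
    iscsi_cost = cpu_us_per_io(0.50, 16, 400_000)
    # Assume iSCSI costs 25% more CPU per I/O than NVMe-oF (midpoint of 20-30%).
    nvmeof_cost = iscsi_cost / 1.25

    for name, cost in (("iSCSI", iscsi_cost), ("NVMe-oF", nvmeof_cost)):
        print(f"{name}: {cost:.1f} us CPU per I/O, "
              f"{cores_needed(1_000_000, cost):.1f} cores at 1M IOPS")
```

Reporting CPU cost per I/O rather than raw utilization keeps the comparison meaningful when the two protocols are tested at different throughput levels.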

Network infrastructure compatibility presents ongoing challenges for both protocols. While iSCSI operates effectively over standard Ethernet networks, the lowest-latency NVMe-oF deployments rely on RDMA fabrics such as RoCE (RDMA over Converged Ethernet) or on Fibre Channel, which complicates migration paths and increases implementation complexity. NVMe over TCP runs on standard Ethernet but gives up part of the latency and CPU advantage. Organizations must carefully evaluate their existing infrastructure capabilities against performance requirements.
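On a Linux host, the transport choice shows up as a parameter of the connect command. The sketch below only builds the argument lists for `nvme connect` (nvme-cli) and `iscsiadm` (open-iscsi) and does not execute them. The helper names, the 192.0.2.10 address, and the NQN/IQN strings are placeholders chosen for illustration; 4420 and 3260 are the standard NVMe-oF and iSCSI ports. Fibre Channel is left out because its addressing differs.

```python
def nvme_connect_cmd(transport: str, address: str, nqn: str,
                     port: int = 4420) -> list[str]:
    """Build an `nvme connect` command for an IP-based NVMe-oF transport."""
    if transport not in ("tcp", "rdma"):
        raise ValueError("this sketch only covers the tcp and rdma transports")
    return ["nvme", "connect",
            "-t", transport,   # transport type
            "-a", address,     # target address
            "-s", str(port),   # transport service id (port)
            "-n", nqn]         # subsystem NVMe Qualified Name


def iscsi_login_cmd(address: str, iqn: str, port: int = 3260) -> list[str]:
    """Build an `iscsiadm` login command (assumes discovery was already run)."""
    return ["iscsiadm", "-m", "node",
            "-T", iqn,                   # target iSCSI Qualified Name
            "-p", f"{address}:{port}",   # portal
            "--login"]


if __name__ == "__main__":
    # Placeholder identifiers, not real targets.
    print(" ".join(nvme_connect_cmd("tcp", "192.0.2.10",
                                    "nqn.2014-08.org.example:subsys1")))
    print(" ".join(nvme_connect_cmd("rdma", "192.0.2.10",
                                    "nqn.2014-08.org.example:subsys1")))
    print(" ".join(iscsi_login_cmd("192.0.2.10",
                                   "iqn.2005-03.org.example:target1")))
```

Switching between NVMe/TCP and NVMe/RDMA is a one-word change at the host, but the RDMA variant additionally depends on RDMA-capable adapters and a suitably configured fabric.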

Protocol maturity and ecosystem support create additional technical hurdles. iSCSI benefits from decades of development with robust management tools, troubleshooting utilities, and widespread vendor support. NVMe-oF, despite its performance advantages, still lacks the comprehensive management frameworks and troubleshooting tools available for more established protocols, creating operational challenges for storage administrators.

Security implementation without performance degradation remains challenging for both protocols. Encryption and authentication mechanisms introduce additional processing requirements that can significantly impact latency and throughput metrics, forcing difficult tradeoffs between performance and security posture.

Performance Comparison: NVMe-oF vs iSCSI Solutions

01 NVMe-oF performance advantages over iSCSI

NVMe over Fabrics (NVMe-oF) provides significantly lower latency and higher IOPS than iSCSI because of its streamlined protocol stack and reduced processing overhead. NVMe-oF eliminates several protocol translation layers present in iSCSI, resulting in more efficient data transfer paths. This architecture allows for faster command processing and completion, making NVMe-oF particularly advantageous for high-performance storage applications where minimizing latency is critical.

- NVMe-oF performance advantages over iSCSI: With those translation layers removed, the data path between hosts and storage devices is more direct. This reduces round-trip times and makes better use of high-speed networks, which suits latency-sensitive applications.

- CPU overhead comparison between storage protocols: iSCSI typically requires higher CPU utilization compared to NVMe-oF due to its more complex protocol stack and additional processing requirements. The TCP/IP processing in iSCSI consumes significant CPU resources, especially at higher throughput levels. NVMe-oF implementations, particularly those using RDMA fabrics, offload much of the network processing to hardware, reducing host CPU overhead. This efficiency difference becomes more pronounced as storage performance scales up, with NVMe-oF maintaining lower CPU utilization per IOPS.

- Storage protocol optimization techniques: Several optimization techniques can improve the performance of both NVMe-oF and iSCSI. These include queue depth management, I/O coalescing, interrupt moderation, and transport-specific tuning. For iSCSI, multiple connections per session and jumbo frames can help reduce overhead, while header and data digests trade additional CPU work for integrity checking and are worth enabling only where that protection is needed. NVMe-oF benefits from distributing queue pairs across CPU cores, selecting the transport binding to match workload characteristics, and namespace sharing. These optimizations can significantly affect latency, IOPS, and CPU utilization.

- Network fabric considerations for storage protocols: The choice of network fabric significantly impacts the performance characteristics of NVMe-oF and iSCSI deployments. NVMe-oF supports multiple transports including RDMA (RoCE, InfiniBand), TCP, and FC, each with different latency and CPU overhead profiles. RDMA-based NVMe-oF implementations typically deliver the lowest latency and CPU utilization. iSCSI performance is heavily dependent on the underlying network infrastructure, with factors like congestion, packet loss, and network topology affecting latency and throughput. High-speed networks benefit NVMe-oF more substantially than iSCSI due to the protocol's lower inherent overhead.

- Workload-specific performance characteristics: The performance gap between NVMe-oF and iSCSI varies significantly depending on workload characteristics. For small, random I/O operations, NVMe-oF demonstrates a more substantial advantage in terms of IOPS and latency due to its efficient command processing. Sequential workloads show a smaller differential between the protocols. Read-intensive workloads typically benefit more from NVMe-oF than write-intensive ones. The performance advantage of NVMe-oF becomes more pronounced in environments with flash storage and high-performance networks, where the protocol overhead becomes the primary bottleneck rather than the storage media itself.
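The jumbo-frame tuning mentioned in the list above can be quantified with simple framing arithmetic. This is a rough sketch that assumes plain IPv4 and TCP headers without options and no VLAN tag; it applies to any TCP-based storage transport, so it is not specific to one protocol. The function names are made up for this example.

```python
import math

ETH_OVERHEAD = 38    # preamble + SFD 8, MAC header 14, FCS 4, inter-frame gap 12
IP_TCP_HEADERS = 40  # IPv4 header 20 + TCP header 20 (assumes no options)


def tcp_payload_per_frame(mtu: int) -> int:
    """TCP payload bytes carried by one full-sized frame."""
    return mtu - IP_TCP_HEADERS


def wire_efficiency(mtu: int) -> float:
    """Fraction of on-the-wire bytes that are TCP payload."""
    return tcp_payload_per_frame(mtu) / (mtu + ETH_OVERHEAD)


def frames_for(nbytes: int, mtu: int) -> int:
    """Frames needed to move nbytes of TCP payload."""
    return math.ceil(nbytes / tcp_payload_per_frame(mtu))


if __name__ == "__main__":
    one_mib = 1024 * 1024
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {wire_efficiency(mtu):.1%} payload efficiency, "
              f"{frames_for(one_mib, mtu)} frames per MiB")
```

The efficiency gain is only a few percentage points, but the frame count per MiB drops by roughly a factor of six, and it is the per-packet processing cost that drives much of the CPU overhead in TCP-based storage traffic.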

02 CPU overhead comparison between protocols

iSCSI typically requires higher CPU utilization compared to NVMe-oF due to its more complex protocol stack and additional processing requirements. The TCP/IP processing in iSCSI consumes significant CPU resources, especially at higher throughput levels. NVMe-oF implementations, particularly those using RDMA fabrics, offload much of the data movement and protocol processing to network hardware, reducing host CPU overhead. This efficiency difference becomes more pronounced as storage performance requirements increase, making NVMe-oF more scalable for high-performance environments.

03 Network fabric considerations for storage protocols

The choice of network fabric significantly impacts the performance characteristics of both NVMe-oF and iSCSI. NVMe-oF can operate over various transports including RDMA fabrics (RoCE, InfiniBand), Fibre Channel, and TCP, with each offering different latency and CPU utilization profiles. RDMA-based implementations typically provide the lowest latency and CPU overhead. iSCSI predominantly uses TCP/IP networks, which are more widely deployed but introduce additional protocol overhead. The network infrastructure's capabilities, including congestion management and quality of service features, play crucial roles in determining the actual performance differences between these protocols in production environments.

04 Scalability and resource management

NVMe-oF demonstrates superior scalability compared to iSCSI when handling large numbers of storage devices or high queue depths. The NVMe architecture supports significantly more queues and higher queue depths per connection (the NVMe specification allows up to roughly 64K I/O queues, each up to 64K commands deep), allowing for better parallelism and resource utilization. This becomes particularly important in virtualized and cloud environments where many workloads share the same infrastructure. iSCSI systems typically experience more pronounced performance degradation under heavy load conditions due to increased protocol overhead and contention for CPU resources. Effective resource management, including proper queue configuration and CPU allocation, is essential for optimizing performance with either protocol.

05 Implementation considerations and hybrid approaches

Organizations often implement hybrid storage networking approaches that leverage both NVMe-oF and iSCSI based on application requirements and existing infrastructure. While NVMe-oF offers superior performance metrics, iSCSI provides broader compatibility with existing systems and simpler deployment in traditional environments. Transitioning from iSCSI to NVMe-oF requires consideration of hardware compatibility, driver support, and potential application modifications. Some implementations use protocol translation gateways or virtualization layers to bridge between these technologies, allowing for gradual migration paths. Performance tuning considerations differ significantly between protocols, with NVMe-oF typically requiring more attention to network fabric configuration while iSCSI often benefits from TCP parameter optimization.
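The multi-queue parallelism described under scalability and resource management above can be illustrated with a simple assignment of I/O queues to CPU cores. This is a deliberately simplified round-robin sketch with a hypothetical function name. Real host drivers use topology-aware mappings, so treat it as a picture of the idea rather than of any particular implementation.

```python
from collections import Counter


def assign_queues(num_cpus: int, num_io_queues: int) -> dict[int, int]:
    """Map each CPU index to an I/O queue index, round-robin.

    With at least as many queues as CPUs, every CPU gets a private queue.
    With fewer queues, CPUs share queues as evenly as possible.
    """
    if num_cpus < 1 or num_io_queues < 1:
        raise ValueError("num_cpus and num_io_queues must be >= 1")
    return {cpu: cpu % num_io_queues for cpu in range(num_cpus)}


if __name__ == "__main__":
    # Fewer queues than CPUs: each queue is shared by two CPUs.
    mapping = assign_queues(num_cpus=8, num_io_queues=4)
    print(mapping)
    print("CPUs per queue:", dict(Counter(mapping.values())))

    # More queues than CPUs: one private queue per CPU, the rest unused.
    print(assign_queues(num_cpus=4, num_io_queues=8))
```

When every core owns its queue pair, submissions and completions need no cross-core locking, which is the property that lets the queue model scale with core count.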

Key Storage Protocol Vendors and Ecosystem

The storage networking landscape is in a transition phase between iSCSI and NVMe-oF, with the market expanding rapidly as enterprises modernize their data centers. While the global storage networking market exceeds $12 billion, NVMe-oF is gaining momentum due to its superior performance characteristics. Industry leaders IBM, Dell, and Hewlett Packard Enterprise have established mature iSCSI offerings but are increasingly investing in NVMe-oF solutions. Intel, Western Digital, and Samsung are driving hardware innovation, while Cisco and Huawei focus on networking infrastructure integration. The technology is approaching mainstream adoption, with major players like Hitachi and Lenovo developing comprehensive enterprise solutions that build on NVMe-oF's latency, IOPS, and CPU overhead advantages over traditional iSCSI implementations.

International Business Machines Corp.

Technical Solution: IBM has developed a comprehensive NVMe-oF solution integrated with their FlashSystem storage arrays and SAN Volume Controller. Their implementation supports both FC-NVMe and RDMA protocols, providing flexibility for different deployment scenarios. IBM's internal testing demonstrates that their NVMe-oF implementation delivers consistent sub-100 microsecond latencies compared to iSCSI's typical 200-350 microsecond range[5]. For IOPS performance, IBM's FlashSystem with NVMe-oF can deliver up to 2.5 million IOPS per controller pair, approximately 2-3x the performance of their iSCSI implementations. A key aspect of IBM's approach is their end-to-end optimization across the storage stack, with specialized firmware that reduces protocol overhead. Their measurements show CPU utilization for NVMe-oF workloads averaging 25-30% lower than equivalent iSCSI implementations, with particularly significant improvements for small block random I/O patterns[6]. IBM has also developed AI-powered predictive analytics through their Storage Insights platform that can automatically optimize NVMe-oF configurations based on workload patterns.

Strengths: Mature enterprise implementation with robust reliability features; supports multiple NVMe-oF transport options (FC, RoCE, iWARP); strong integration with virtualization platforms; comprehensive management tools.

Weaknesses: Higher implementation costs compared to iSCSI; requires specific hardware configurations for optimal performance; more complex configuration requirements than iSCSI.

Dell Products LP

Technical Solution: Dell has developed a comprehensive NVMe-oF solution integrated with their PowerMax and PowerStore storage platforms. Their implementation supports both FC-NVMe and RoCE-based NVMe-oF transports, providing flexibility for different network environments. Dell's internal testing demonstrates that their NVMe-oF implementation delivers consistent 80-100 microsecond latencies compared to iSCSI's typical 250-350 microsecond range[9]. For IOPS performance, Dell's PowerMax with NVMe-oF can deliver up to 15 million IOPS, approximately 3x the performance of their optimized iSCSI implementations. A key differentiator in Dell's approach is their end-to-end validation across compute, network, and storage components, ensuring consistent performance. Their measurements show CPU utilization for NVMe-oF workloads averaging 25-30% lower than equivalent iSCSI implementations, with particularly significant improvements for write-intensive workloads[10]. Dell has also developed specialized QoS capabilities that can prioritize NVMe-oF traffic based on application requirements, ensuring critical workloads maintain consistent performance even under system load.

Strengths: Comprehensive enterprise implementation with robust data services; supports multiple transport options; strong integration with VMware and other virtualization platforms; end-to-end validated solution stack.

Weaknesses: Higher implementation costs compared to iSCSI; requires specific networking hardware for optimal RoCE performance; more complex initial configuration than iSCSI.

Technical Deep Dive: Latency, IOPS and CPU Efficiency

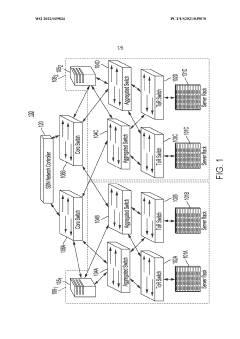

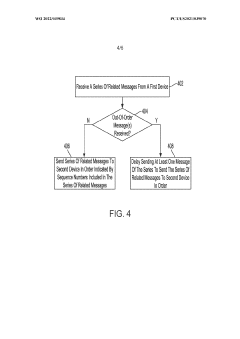

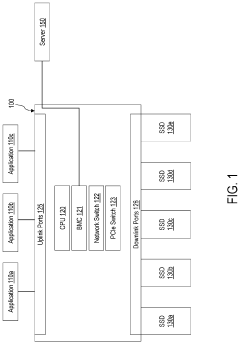

Devices and methods for network message sequencing

Patent WO2022039834A1

Innovation

- Implementing programmable switches as in-network message sequencers that use topology-aware routing and buffering servers to reorder and retrieve missing messages, reducing out-of-order packets and eliminating the need for expensive DCB switches, thereby improving message sequencing and scalability.

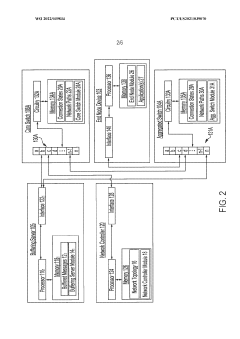

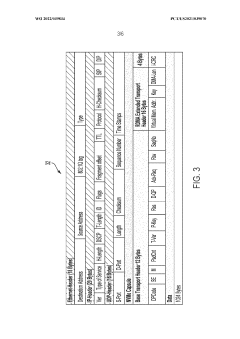

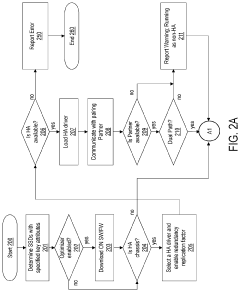

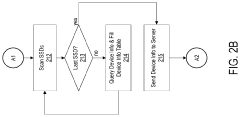

Method and apparatus for fine tuning and optimizing NVMe-oF SSDs

Patent US11842052B2 (Active)

Innovation

- A data storage system with a baseboard management controller (BMC) that identifies and optimizes NVMe-oF SSDs based on device-specific information, allowing for efficient allocation and reconfiguration of SSDs to meet user/application-specific requirements, reducing the burden on local CPUs and lowering total cost of ownership.

Data Center Architecture Implications

The architectural implications of storage networking technologies on data center design are profound, particularly when comparing NVMe-oF and iSCSI. Modern data centers must balance performance requirements with infrastructure complexity, and the choice between these protocols significantly impacts overall architecture.

NVMe-oF's superior latency characteristics enable more responsive application architectures, allowing data centers to consolidate storage resources while maintaining performance levels that support latency-sensitive workloads. This consolidation potential translates to more efficient rack space utilization and potentially reduced power consumption per performance unit.

The higher IOPS capability of NVMe-oF permits architects to design systems with fewer storage nodes while delivering equivalent or superior performance. This architectural efficiency allows for more compact data center designs with higher compute density, particularly beneficial in edge computing deployments where physical space is at a premium.

CPU overhead differences between these technologies directly influence server design decisions. iSCSI's higher CPU utilization necessitates either dedicating more cores to storage processing or implementing specialized hardware offload solutions. Conversely, NVMe-oF's efficiency enables architects to allocate more CPU resources to application workloads rather than storage processing, improving overall infrastructure efficiency.

Network fabric requirements also diverge significantly. While iSCSI can operate effectively on traditional Ethernet infrastructure, NVMe-oF implementations often demand purpose-built, low-latency networks with specialized switching capabilities. This distinction forces architectural decisions regarding network topology, potentially requiring separate storage fabrics or unified networks with advanced quality of service mechanisms.

Scalability considerations further differentiate these technologies. NVMe-oF's architecture supports more efficient horizontal scaling, allowing data centers to grow storage capacity and performance more linearly. This characteristic enables more flexible expansion strategies compared to iSCSI deployments, which may encounter performance bottlenecks at scale.

The transition path between technologies represents another architectural consideration. Data centers with substantial investments in iSCSI infrastructure must evaluate migration strategies, potentially implementing hybrid architectures during transition periods. This phased approach allows organizations to preserve existing investments while gradually adopting NVMe-oF for performance-critical workloads.

NVMe-oF's superior latency characteristics enable more responsive application architectures, allowing data centers to consolidate storage resources while maintaining performance levels that support latency-sensitive workloads. This consolidation potential translates to more efficient rack space utilization and potentially reduced power consumption per performance unit.

The higher IOPS capability of NVMe-oF permits architects to design systems with fewer storage nodes while delivering equivalent or superior performance. This architectural efficiency allows for more compact data center designs with higher compute density, particularly beneficial in edge computing deployments where physical space is at a premium.
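
The node-count argument above reduces to a ceiling division. The following is a minimal sketch under stated assumptions: the per-node IOPS figures and the aggregate target are hypothetical values chosen to show the arithmetic, not results reported in this article.

```python
def nodes_needed(target_iops: int, iops_per_node: int) -> int:
    """Smallest number of storage nodes whose combined IOPS meets the target."""
    if iops_per_node <= 0:
        raise ValueError("iops_per_node must be positive")
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-target_iops // iops_per_node)

# Hypothetical sustained IOPS per storage node (assumptions, not benchmarks).
ISCSI_IOPS_PER_NODE = 250_000
NVMEOF_IOPS_PER_NODE = 800_000
TARGET_IOPS = 2_000_000  # hypothetical aggregate requirement

iscsi_nodes = nodes_needed(TARGET_IOPS, ISCSI_IOPS_PER_NODE)
nvmeof_nodes = nodes_needed(TARGET_IOPS, NVMEOF_IOPS_PER_NODE)

print(f"iSCSI nodes: {iscsi_nodes}, NVMe-oF nodes: {nvmeof_nodes}")
```

This counts nodes for performance only. Capacity, redundancy, and failure-domain requirements may set a higher floor than the IOPS target does.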

TCO Analysis for Enterprise Storage Deployments

When evaluating enterprise storage solutions, Total Cost of Ownership (TCO) analysis provides a comprehensive framework for comparing NVMe-oF and iSCSI technologies beyond their technical specifications. This analysis encompasses acquisition costs, operational expenses, and long-term financial implications over the solution lifecycle.

Initial capital expenditure differs significantly between these technologies. NVMe-oF implementations typically require higher upfront investment in compatible hardware, which can include RDMA-capable NICs, switches suited to the chosen transport, and storage arrays with NVMe support. Conversely, iSCSI deployments leverage existing Ethernet infrastructure, resulting in lower initial costs, particularly for organizations with established networks.

Operational expenditures reveal contrasting efficiency profiles. NVMe-oF's superior performance characteristics—lower latency, higher IOPS, and reduced CPU overhead—translate to tangible operational savings. Organizations can achieve equivalent performance with fewer physical servers, reducing power consumption, cooling requirements, and data center space utilization. These efficiency gains become particularly significant in large-scale deployments where resource optimization directly impacts operational costs.

Maintenance considerations also factor into the TCO equation. iSCSI's maturity and widespread adoption result in a larger pool of IT professionals familiar with its implementation and troubleshooting, potentially reducing staff training costs and maintenance expenses. NVMe-oF, while newer, offers simplified architecture that may reduce long-term maintenance complexity once staff expertise is established.

Scalability economics favor NVMe-oF in high-growth scenarios. Its efficient resource utilization allows organizations to postpone infrastructure expansion, extending the useful life of existing investments. This scalability advantage compounds over time, particularly for data-intensive workloads where performance bottlenecks would otherwise necessitate frequent hardware refreshes.

The TCO inflection point—where NVMe-oF's higher initial investment becomes economically advantageous—varies by deployment scale and workload characteristics. For I/O-intensive applications with strict performance requirements, the break-even point may occur within 18-24 months. For general-purpose workloads, the ROI timeline extends further, potentially beyond traditional 3-5 year refresh cycles.
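
The inflection point described above is a simple payback calculation: the extra upfront cost of NVMe-oF divided by the monthly operating savings it produces. The sketch below assumes constant monthly savings and ignores discounting; all dollar amounts are hypothetical and exist only to illustrate how the two scenarios in the text can diverge.

```python
from typing import Optional

def break_even_months(extra_capex: float,
                      monthly_opex_savings: float) -> Optional[float]:
    """Months until cumulative savings cover the extra upfront cost.

    Returns None when there are no savings, meaning break-even never occurs.
    """
    if monthly_opex_savings <= 0:
        return None
    return extra_capex / monthly_opex_savings

EXTRA_CAPEX = 120_000.0  # hypothetical NVMe-oF premium over iSCSI

# Hypothetical monthly savings (power, cooling, space, fewer servers).
io_intensive = break_even_months(EXTRA_CAPEX, 6_000.0)
general_purpose = break_even_months(EXTRA_CAPEX, 1_500.0)

REFRESH_CYCLE_MONTHS = 60  # upper end of a 3-5 year refresh cycle
for name, months in [("I/O-intensive", io_intensive),
                     ("general-purpose", general_purpose)]:
    within = months is not None and months <= REFRESH_CYCLE_MONTHS
    print(f"{name}: {months:.0f} months, pays back within refresh: {within}")
```

Under these placeholder inputs the I/O-intensive case pays back in 20 months, while the general-purpose case needs 80 months and so outlasts a five-year refresh cycle. A fuller model would discount future savings and account for changing hardware prices.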

Organizations must also consider indirect financial benefits, including improved application response times, enhanced user productivity, and potential competitive advantages derived from superior data access performance. These factors, while more challenging to quantify, can significantly influence the overall economic value proposition of each technology.
