Dolby Vision’s Influence on 3D Audio-Visual Synchronization

JUL 30, 2025 · 9 MIN READ

Dolby Vision Evolution

Dolby Vision has undergone significant evolution since its introduction, marking key milestones in the advancement of high dynamic range (HDR) imaging technology. Initially launched in 2014, Dolby Vision aimed to enhance the visual experience by offering superior contrast, brightness, and color accuracy compared to standard dynamic range (SDR) content.

The early iterations of Dolby Vision focused primarily on improving the overall picture quality for 2D content. However, as the technology matured, it began to address more complex challenges, including the synchronization of audio and visual elements in 3D environments. This evolution was driven by the increasing demand for immersive entertainment experiences and the growing popularity of 3D content in both cinema and home entertainment settings.

One of the critical developments in Dolby Vision's evolution was the introduction of dynamic metadata. This feature allowed for frame-by-frame optimization of HDR content, ensuring that each scene could be displayed with optimal brightness, contrast, and color settings. This dynamic approach proved particularly beneficial for 3D content, where depth perception and visual cues play a crucial role in the viewer's experience.
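The benefit of per-scene metadata can be illustrated with a minimal Python sketch. This is not Dolby's tone-mapping algorithm, which is proprietary. It assumes a hypothetical `SceneMetadata` record that carries only a scene's peak luminance, and it uses an extended Reinhard curve as a stand-in so that the scene peak maps exactly to the display peak.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneMetadata:
    """Hypothetical per-scene record; real dynamic metadata carries more fields."""
    peak_nits: float


def tone_map(pixel_nits: float, scene: SceneMetadata, display_peak_nits: float) -> float:
    """Map a pixel luminance into the display range using the scene's own peak."""
    # A scene that already fits the display is passed through untouched.
    if scene.peak_nits <= display_peak_nits:
        return min(pixel_nits, display_peak_nits)
    # Extended Reinhard curve, normalized so the scene peak lands on the display peak.
    l = pixel_nits / display_peak_nits
    l_white = scene.peak_nits / display_peak_nits
    mapped = l * (1.0 + l / (l_white * l_white)) / (1.0 + l)
    return min(mapped, 1.0) * display_peak_nits


# The same 200-nit pixel on a 1000-nit display:
dim_scene = tone_map(200.0, SceneMetadata(peak_nits=300.0), 1000.0)      # scene fits, unchanged
title_wide = tone_map(200.0, SceneMetadata(peak_nits=4000.0), 1000.0)    # compressed by a title-wide peak
```

With a single title-wide peak, the dim scene is compressed even though it never approaches the display's limit. With a per-scene peak it is left alone, which is the advantage the paragraph above describes.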

As Dolby Vision continued to evolve, it began to incorporate more sophisticated algorithms for handling motion and depth in 3D environments. These advancements were instrumental in addressing the challenges of audio-visual synchronization in 3D content. By improving the precision of visual rendering and reducing latency, Dolby Vision helped to minimize the discrepancies between audio and visual cues that can often lead to disorientation or discomfort in 3D viewing experiences.

The integration of Dolby Vision with other Dolby technologies, such as Dolby Atmos, marked another significant milestone in its evolution. This integration allowed for a more holistic approach to audio-visual synchronization, where the visual enhancements provided by Dolby Vision could be precisely aligned with the spatial audio capabilities of Dolby Atmos. This synergy between visual and audio technologies opened up new possibilities for creating truly immersive 3D experiences.

Recent developments in Dolby Vision have focused on expanding its compatibility and adaptability across various display technologies and content delivery platforms. This includes support for a wider range of color gamuts, higher frame rates, and improved tone mapping techniques. These advancements have further refined the technology's ability to handle the complex interplay between visual and audio elements in 3D environments, contributing to more seamless and realistic audio-visual synchronization.

Market Demand Analysis

Demand for technologies that pair Dolby Vision video with tightly synchronized 3D audio has been growing steadily, driven by consumer appetite for immersive entertainment. As the entertainment industry evolves, content producers and device makers increasingly want technologies that deliver more realistic and engaging audio-visual content across cinema, home theaters, and mobile devices.

The integration of Dolby Vision with 3D audio technologies has created a new benchmark for audio-visual synchronization, addressing a critical need in the market for seamless and lifelike multimedia experiences. This technology combination has particularly gained traction in the film and television industry, where producers and directors are constantly seeking ways to enhance storytelling through improved sensory engagement.

In the home entertainment sector, there is a growing trend towards high-end home theater systems that can replicate the cinema experience. This has led to an increased demand for technologies that can deliver precise audio-visual synchronization, with Dolby Vision playing a crucial role in this ecosystem. The market for smart TVs and streaming devices that support Dolby Vision and advanced audio formats has seen significant growth, indicating a strong consumer interest in premium audio-visual experiences.

The gaming industry has also shown a keen interest in the potential of Dolby Vision for enhancing 3D audio-visual synchronization. As gaming graphics and soundscapes become more sophisticated, there is a growing need for technologies that can provide a more immersive and responsive gaming experience. This has opened up new market opportunities for game developers and hardware manufacturers to incorporate Dolby Vision technology into their products.

In the mobile device market, there is an emerging demand for smartphones and tablets that can deliver high-quality audio-visual experiences. As mobile content consumption continues to rise, manufacturers are looking to differentiate their products by offering superior display and audio capabilities, with Dolby Vision becoming an increasingly important feature for premium devices.

The professional content creation industry has also seen a surge in demand for tools and technologies that can facilitate better 3D audio-visual synchronization. Post-production houses, animation studios, and virtual reality content creators are increasingly adopting Dolby Vision to enhance their workflows and deliver more compelling content to audiences.

As the market for immersive technologies expands, there is a growing need for standardization and interoperability in audio-visual synchronization technologies. This has led to increased collaboration between technology providers, content creators, and distribution platforms to ensure seamless integration of Dolby Vision with various 3D audio formats across different devices and platforms.

Current AV Sync Challenges

Audio-visual synchronization remains a critical challenge in modern multimedia systems, particularly with the advent of advanced technologies like Dolby Vision. The increasing complexity of content delivery pipelines and the diversity of playback devices have exacerbated existing AV sync issues while introducing new ones.

One of the primary challenges is the variability in processing times for audio and video streams. High-quality video formats, such as those enhanced by Dolby Vision, often require more intensive processing compared to audio streams. This discrepancy can lead to noticeable delays between audio and video, especially in live broadcasting scenarios or when streaming content over networks with varying bandwidth.
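The usual remedy for a processing-time mismatch is to delay the faster path. The Python sketch below shows the arithmetic only. The stage names and latency figures are hypothetical placeholders, not measurements of any Dolby Vision pipeline.

```python
def alignment_delays(video_stages_ms: dict, audio_stages_ms: dict) -> dict:
    """Return the extra delay each path needs so both streams emerge together."""
    video_total = sum(video_stages_ms.values())
    audio_total = sum(audio_stages_ms.values())
    skew = video_total - audio_total  # positive: video is the slower path
    return {
        "audio_delay_ms": max(skew, 0.0),
        "video_delay_ms": max(-skew, 0.0),
    }


# Hypothetical per-stage latencies for one playback device.
video = {"decode": 12.0, "hdr_processing": 8.0, "display": 20.0}
audio = {"decode": 3.0, "render": 7.0}
delays = alignment_delays(video, audio)
```

In this example the video path takes 40 ms and the audio path 10 ms, so the audio would be held back by 30 ms. When the latencies vary over time, as in streaming, a fixed delay is not enough and the offset has to be measured continuously.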

Another significant challenge is the lack of standardization across different platforms and devices. While efforts have been made to establish common protocols, the implementation of AV sync mechanisms can vary widely between manufacturers and content providers. This inconsistency makes it difficult to ensure a uniform synchronization experience across all viewing environments.

The increasing adoption of object-based audio formats, which complement advanced video technologies like Dolby Vision, introduces additional complexity to the synchronization process. These audio formats require real-time rendering based on the playback environment, potentially introducing variable latencies that must be accounted for in the synchronization pipeline.

Furthermore, the proliferation of multi-screen and second-screen experiences has created new synchronization challenges. Ensuring that audio and video remain in sync across multiple devices, potentially with different processing capabilities and network conditions, adds another layer of complexity to the AV sync problem.

The transition to IP-based content delivery systems has also introduced new variables that affect AV sync. Network jitter, packet loss, and variable bitrates can all contribute to synchronization issues that were less prevalent in traditional broadcast systems.
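Network jitter can be quantified before it is compensated. One widely used estimator is the interarrival jitter formula from RFC 3550 (RTP), sketched below in Python. The source does not say which estimator any particular Dolby Vision delivery chain uses, so treat this as an illustration. Times here are in milliseconds for readability, whereas RTP itself works in timestamp units.

```python
def interarrival_jitter(send_times_ms, recv_times_ms) -> float:
    """Running jitter estimate per RFC 3550: J += (|D| - J) / 16."""
    if len(send_times_ms) != len(recv_times_ms):
        raise ValueError("send and receive lists must have the same length")
    jitter = 0.0
    for i in range(1, len(send_times_ms)):
        # D: change in transit time between consecutive packets.
        d = (recv_times_ms[i] - recv_times_ms[i - 1]) - (
            send_times_ms[i] - send_times_ms[i - 1]
        )
        jitter += (abs(d) - jitter) / 16.0
    return jitter


# Packets sent every 20 ms; the third one arrives 16 ms late.
estimate = interarrival_jitter([0.0, 20.0, 40.0], [5.0, 25.0, 61.0])
```

A receiver can size its playout buffer from this estimate. A larger buffer absorbs more jitter but adds latency, and that added latency must be applied equally to audio and video.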

Lastly, the push for higher frame rates and resolutions in video content, often associated with technologies like Dolby Vision, places increased demands on processing power and bandwidth. This can lead to situations where audio processing outpaces video processing, resulting in lip-sync errors that are particularly noticeable in dialogue-heavy content.

Addressing these challenges requires a multi-faceted approach that combines advancements in hardware capabilities, software algorithms, and industry standards. As the audiovisual landscape continues to evolve, solving these synchronization issues will be crucial for delivering the immersive experiences promised by technologies like Dolby Vision and 3D audio systems.

Dolby Vision AV Solutions

- Audio-visual synchronization techniques: Various methods and systems are employed to ensure synchronization between audio and visual components in Dolby Vision content. These techniques involve precise timing mechanisms, buffer management, and adaptive algorithms to maintain lip-sync and overall audio-visual coherence across different playback devices and network conditions.

- High dynamic range (HDR) video processing: Dolby Vision incorporates advanced HDR video processing techniques to enhance image quality. This includes methods for tone mapping, color grading, and metadata handling to ensure optimal display of HDR content across various devices while maintaining synchronization with audio elements.

- Adaptive streaming and playback: Systems and methods for adaptive streaming and playback of Dolby Vision content are implemented to maintain audio-visual synchronization across varying network conditions and device capabilities. This includes dynamic bitrate adjustment, buffer management, and intelligent content delivery mechanisms.

- Device-specific optimization: Techniques for optimizing Dolby Vision audio-visual synchronization across different devices and display technologies are developed. This includes methods for calibrating and adjusting playback parameters based on specific device characteristics, such as processing power, display capabilities, and audio output systems.

- Error detection and correction: Advanced error detection and correction mechanisms are implemented to maintain audio-visual synchronization in Dolby Vision content. These systems identify and address timing discrepancies, packet loss, and other factors that could lead to synchronization issues, ensuring a seamless viewing experience.

01 Audio-visual synchronization techniques

Various methods and systems are employed to ensure synchronization between audio and video streams in Dolby Vision content. These techniques involve precise timing mechanisms, buffer management, and adaptive algorithms to maintain lip-sync and overall audio-visual coherence across different playback devices and network conditions.
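A common timing mechanism in software players is to treat the audio clock as the master and schedule each video frame against it using presentation timestamps (PTS). The Python sketch below shows that general pattern. Whether a given Dolby Vision device works this way is not stated in the source, and the 20 ms tolerance is an arbitrary illustrative value.

```python
from enum import Enum


class Action(Enum):
    SHOW = "show"   # frame is on time: present it now
    DROP = "drop"   # frame is late: skip it to catch up with the audio
    WAIT = "wait"   # frame is early: hold it until the audio clock catches up


def schedule_frame(frame_pts_ms: float, audio_clock_ms: float,
                   tolerance_ms: float = 20.0) -> Action:
    """Decide what to do with a decoded video frame, using audio as master clock."""
    diff = frame_pts_ms - audio_clock_ms
    if diff < -tolerance_ms:
        return Action.DROP
    if diff > tolerance_ms:
        return Action.WAIT
    return Action.SHOW
```

Audio is usually chosen as the master because listeners notice dropped or stretched audio samples far more readily than an occasional dropped or repeated video frame.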

02 High Dynamic Range (HDR) processing for Dolby Vision

Dolby Vision incorporates advanced HDR processing techniques to enhance image quality while maintaining synchronization with audio. This includes color grading, tone mapping, and dynamic metadata handling to ensure optimal visual performance across various display technologies without compromising audio-visual sync.

03 Adaptive streaming and playback for Dolby Vision content

Adaptive streaming technologies are implemented to deliver Dolby Vision content while maintaining audio-visual synchronization. These systems adjust video and audio quality based on network conditions and device capabilities, ensuring smooth playback and consistent sync across different viewing scenarios.

04 Device-specific optimization for Dolby Vision playback

Techniques are developed to optimize Dolby Vision playback on various devices, including TVs, mobile devices, and streaming boxes. These optimizations focus on device-specific processing capabilities, display characteristics, and audio output systems to maintain high-quality audio-visual synchronization across different platforms.

05 Error detection and correction in Dolby Vision audio-visual sync

Advanced error detection and correction mechanisms are implemented to identify and resolve audio-visual synchronization issues in Dolby Vision content. These systems use real-time monitoring, feedback loops, and adaptive algorithms to maintain precise sync even in challenging playback environments or when processing complex HDR content.
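A monitoring and feedback loop of this kind can be sketched in a few lines of Python. The `SyncMonitor` class is hypothetical and does not represent a Dolby implementation. Its default limits follow the detectability thresholds commonly cited from ITU-R BT.1359 (audio leading by about 45 ms, audio lagging by about 125 ms), and the smoothing factor is an arbitrary choice.

```python
class SyncMonitor:
    """Smooths measured A/V offset and reports a correction when it becomes noticeable."""

    def __init__(self, alpha: float = 0.2,
                 lead_limit_ms: float = 45.0, lag_limit_ms: float = 125.0):
        self.alpha = alpha                  # weight given to the newest measurement
        self.lead_limit_ms = lead_limit_ms  # audio ahead of video
        self.lag_limit_ms = lag_limit_ms    # audio behind video
        self.smoothed_ms = None

    def update(self, audio_presented_ms: float, video_presented_ms: float) -> float:
        """Feed presentation times of the same media instant; return audio delay to apply.

        A positive result means delay the audio; a negative one means delay the video.
        Zero means the smoothed offset is within tolerance.
        """
        offset = video_presented_ms - audio_presented_ms  # positive: audio leads
        if self.smoothed_ms is None:
            self.smoothed_ms = offset
        else:
            self.smoothed_ms = self.alpha * offset + (1.0 - self.alpha) * self.smoothed_ms
        if self.smoothed_ms > self.lead_limit_ms or self.smoothed_ms < -self.lag_limit_ms:
            return self.smoothed_ms
        return 0.0
```

The limits are asymmetric because viewers tolerate late audio better than early audio. Smoothing keeps a single outlier measurement from triggering a correction that would itself be audible.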

Key Industry Players

The competitive landscape around Dolby Vision and 3D audio-visual synchronization is evolving rapidly, and the market is in its growth phase. Major players such as Samsung Electronics, Sony Group, and LG Electronics are investing heavily in the underlying technologies, which signals their growing importance in consumer electronics. Demand for immersive audio-visual experiences is expanding the market across platforms, from home entertainment to cinema. Dolby Laboratories leads on the technology side, with Panasonic and Philips also making significant strides. The technology continues to mature, with ongoing improvements in synchronization accuracy and in implementation across devices and content formats.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed its own HDR technology, HDR10+, as an alternative to Dolby Vision. Like Dolby Vision, HDR10+ uses dynamic metadata to optimize picture quality on a scene-by-scene basis[8]. Samsung addresses audio-visual synchronization separately, through advanced audio processing in its TVs and sound systems. Q-Symphony synchronizes the TV speakers with a Samsung soundbar to create a more immersive audio experience[9]. Object Tracking Sound+, an AI-powered feature, uses multiple speakers built into the TV to make audio follow the movement of objects on screen, strengthening the perceived link between audio and visual elements[10].

Strengths: Market leader in TV technology, proprietary HDR10+ technology, strong focus on AI-enhanced audio features. Weaknesses: Lack of support for Dolby Vision may limit compatibility with some content.

Sony Group Corp.

Technical Solution: Sony has developed its own 3D audio technology called 360 Reality Audio, which works in tandem with their visual technologies to enhance audio-visual synchronization. Their approach focuses on object-based spatial audio rendering, where each sound source is treated as a distinct object in 3D space[4]. This allows for precise placement and movement of audio elements in relation to the visual content. Sony's technology also incorporates advanced signal processing algorithms that adjust the audio timing to match the visual elements, ensuring tight synchronization even in complex scenes. Additionally, Sony has implemented a feature called "Sound-from-Picture Reality," which uses AI to analyze the on-screen action and adjust the audio output accordingly, further enhancing the perception of synchronization between audio and visual elements[5].

Strengths: Comprehensive ecosystem of audio-visual products, strong brand recognition in entertainment technology. Weaknesses: Proprietary technology may limit compatibility with other systems.

Core Dolby Vision Patents

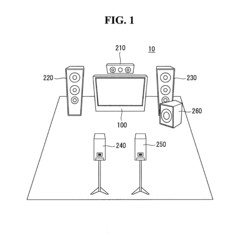

Electronic device generating stereo sound synchronized with stereographic moving picture

Patent (inactive): US20120127264A1

Innovation

- An electronic device comprising a receiver, controller, and audio processor that generates a sound zooming factor based on depth information from stereoscopic moving images, adjusting sound output accordingly across multiple speakers to create a synchronized stereo sound experience.
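The idea of a depth-driven sound zooming factor can be sketched in Python as follows. The patent summary above does not give a formula, so the linear mapping, the normalized depth scale, and the factor range are all assumptions made for illustration.

```python
def sound_zoom_factor(depth: float, min_factor: float = 0.5,
                      max_factor: float = 1.5) -> float:
    """Map normalized depth (0.0 = farthest, 1.0 = nearest) to a gain factor.

    Assumption: a linear mapping; the patent's actual mapping is not given here.
    """
    clamped = min(max(depth, 0.0), 1.0)
    return min_factor + (max_factor - min_factor) * clamped


def apply_zoom(speaker_gains: list, depth: float) -> list:
    """Scale each speaker's gain by the zoom factor, capped at full scale (1.0)."""
    factor = sound_zoom_factor(depth)
    return [min(gain * factor, 1.0) for gain in speaker_gains]
```

An object at mid-depth leaves the gains unchanged, a near object makes the sound louder, and a far object makes it quieter, so the perceived audio distance follows the stereoscopic image.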

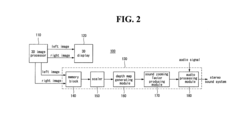

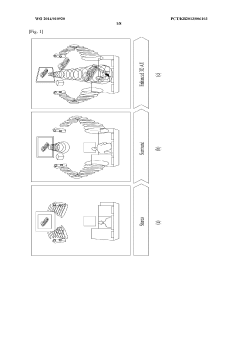

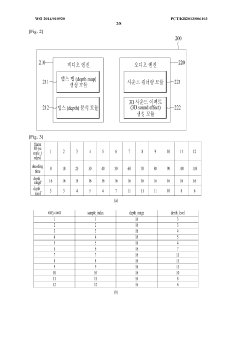

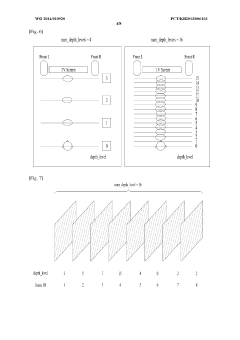

Enhanced 3D audio/video processing apparatus and method

Patent: WO2014010920A1

Innovation

- An enhanced 3D audio/video processing method that uses the MPEG file format to signal depth information, including frame identification, depth level, and depth range, to generate synchronized 3D audio effects based on 3D video depth, reducing redundant information and simplifying device design.
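One way to picture this kind of signaling is a sparse table that records depth only at the frames where it changes. The Python sketch below is an in-memory illustration, not the MPEG file-format syntax. The `DepthEntry` fields mirror the three items named above, while the field names and the lookup function are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class DepthEntry:
    first_frame: int   # frame identification: first frame this entry applies to
    depth_level: int   # depth level signaled for those frames
    depth_range: int   # depth range signaled for those frames


def depth_for_frame(entries: Sequence[DepthEntry], frame: int) -> Optional[DepthEntry]:
    """Return the entry in effect at `frame`; entries must be sorted by first_frame."""
    starts = [entry.first_frame for entry in entries]
    index = bisect_right(starts, frame) - 1
    return entries[index] if index >= 0 else None


# Two entries cover a whole clip: depth changes only at frame 48.
table = [DepthEntry(0, 3, 10), DepthEntry(48, 7, 10)]
current = depth_for_frame(table, 47)
```

Storing one entry per change rather than one per frame is a simple way to avoid redundant information, which is the benefit the patent summary claims.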

Standardization Efforts

Standardization efforts play a crucial role in the widespread adoption of Dolby Vision alongside synchronized 3D audio. As the technology continues to evolve, industry stakeholders are working together to establish common guidelines and protocols to ensure interoperability and consistency across different platforms and devices.

One of the primary organizations leading these standardization efforts is the Society of Motion Picture and Television Engineers (SMPTE). SMPTE has been actively developing standards for audio-visual synchronization in immersive environments, including those utilizing Dolby Vision technology. Their work focuses on defining precise timing mechanisms and metadata formats to maintain accurate synchronization between video and 3D audio elements.

The International Telecommunication Union (ITU) has also been involved in the standardization process, particularly through its Radiocommunication Sector (ITU-R). ITU-R has been working on recommendations for the production and exchange of immersive audio-visual content, addressing the unique challenges posed by technologies like Dolby Vision and 3D audio systems.

Additionally, the Moving Picture Experts Group (MPEG) has been actively developing standards for the efficient coding and transmission of immersive audio-visual content. Their work includes the development of MPEG-H 3D Audio and MPEG-I standards, which aim to provide a comprehensive framework for delivering synchronized 3D audio and high-quality video experiences.

The Digital Video Broadcasting (DVB) project has also been contributing to standardization efforts, focusing on the broadcast and streaming aspects of immersive content delivery. Their work includes defining specifications for the transmission of Dolby Vision metadata and associated 3D audio streams over various distribution networks.

Collaboration between these standardization bodies and industry leaders like Dolby Laboratories has been essential in addressing the complex challenges of 3D audio-visual synchronization. These efforts have resulted in the development of key standards and recommendations, such as SMPTE ST 2094 for dynamic metadata in HDR systems and ITU-R BS.2076 for the Audio Definition Model (ADM).

As the technology continues to advance, ongoing standardization efforts are focusing on refining existing standards and developing new ones to address emerging challenges. These include improving latency management in live production environments, enhancing synchronization accuracy in virtual and augmented reality applications, and ensuring seamless integration with next-generation display and audio technologies.

The success of these standardization efforts will be crucial in enabling widespread adoption of Dolby Vision and 3D audio technologies across various industries, including film production, broadcast, streaming services, and gaming. By establishing common frameworks and protocols, these standards will help ensure consistent, high-quality experiences for end-users across different platforms and devices.

Cross-Platform Integration

Dolby Vision's integration across various platforms has significantly impacted the synchronization of 3D audio-visual content. The technology's widespread adoption has necessitated the development of standardized protocols for seamless cross-platform implementation. Major streaming services, including Netflix, Amazon Prime Video, and Apple TV+, have incorporated Dolby Vision into their ecosystems, driving the need for consistent audio-visual synchronization across diverse devices and operating systems.

The integration process has faced challenges due to the varying hardware capabilities and software architectures of different platforms. Mobile devices, smart TVs, gaming consoles, and desktop computers each present unique requirements for implementing Dolby Vision and ensuring precise audio-visual synchronization. This diversity has led to the development of adaptive algorithms that can adjust synchronization parameters based on the specific platform characteristics.

Content creators and post-production teams have had to adapt their workflows to accommodate cross-platform integration. This has resulted in the emergence of new tools and software solutions designed to streamline the production process while maintaining consistent audio-visual synchronization across multiple delivery platforms. These tools often incorporate machine learning algorithms to predict and compensate for potential synchronization discrepancies on various devices.

The push for cross-platform integration has also accelerated the development of cloud-based rendering and delivery systems. These systems aim to offload some of the processing requirements from end-user devices, potentially improving synchronization accuracy and consistency across platforms. However, this approach introduces new challenges related to network latency and bandwidth limitations, which must be carefully managed to maintain the integrity of the audio-visual experience.

Standardization efforts have played a crucial role in facilitating cross-platform integration. Organizations such as the Society of Motion Picture and Television Engineers (SMPTE) and the Advanced Television Systems Committee (ATSC) have worked to establish guidelines and specifications for implementing Dolby Vision and ensuring accurate audio-visual synchronization across different platforms. These standards have helped to create a more unified ecosystem, reducing compatibility issues and improving the overall user experience.

As the technology continues to evolve, there is an increasing focus on developing platform-agnostic solutions that can adapt to a wide range of devices and operating systems. This approach aims to simplify the integration process for content providers and device manufacturers while ensuring consistent audio-visual synchronization for end-users across all platforms.
