Advanced Algorithms in Dolby Vision Metadata Processing

JUL 30, 2025 · 9 MIN READ

Dolby Vision Evolution

Dolby Vision has undergone significant evolution since its inception, marking key milestones in the advancement of High Dynamic Range (HDR) technology. Initially introduced in 2014, Dolby Vision represented a leap forward in image quality, offering enhanced contrast, brightness, and color accuracy compared to standard dynamic range (SDR) content.

The early iterations of Dolby Vision focused on establishing the foundational technology for HDR content creation and playback. This included the development of the Perceptual Quantizer (PQ) transfer function, later standardized as SMPTE ST 2084, which distributes code values according to the eye's sensitivity to luminance differences so that levels up to 10,000 cd/m² can be encoded efficiently with 10 or 12 bits. It also included the introduction of dynamic metadata, enabling scene-by-scene optimization of content.
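The PQ curve itself is public: it was standardized as SMPTE ST 2084 and is reused in ITU-R BT.2100. As a minimal sketch (not Dolby production code), the Python below implements the inverse EOTF, which maps absolute luminance in cd/m² to a normalized signal, and the EOTF, which maps it back, using the constants published in ST 2084. The helper `to_code_value` and its full-range 12-bit default are illustrative assumptions.

```python
# SMPTE ST 2084 (PQ) constants, written as the exact rationals from the standard.
M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PEAK_NITS = 10000.0        # PQ covers 0..10,000 cd/m^2


def pq_encode(nits: float) -> float:
    """Inverse EOTF: absolute luminance (cd/m^2) -> normalized PQ signal in [0, 1]."""
    y = min(max(nits / PEAK_NITS, 0.0), 1.0)
    ym = y ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


def pq_decode(signal: float) -> float:
    """EOTF: normalized PQ signal in [0, 1] -> absolute luminance (cd/m^2)."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    y = (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)
    return y * PEAK_NITS


def to_code_value(signal: float, bits: int = 12) -> int:
    """Quantize a normalized signal to a full-range integer code (illustrative)."""
    return round(signal * ((1 << bits) - 1))


if __name__ == "__main__":
    for nits in (0.1, 100.0, 1000.0, 4000.0):
        s = pq_encode(nits)
        print(f"{nits:>7} cd/m^2 -> PQ {s:.4f} -> 12-bit code {to_code_value(s)}")
```

Running it shows how PQ spends its code range: roughly half of the signal range lies below 100 cd/m², leaving the upper half for highlights up to 10,000 cd/m².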

As the technology matured, Dolby Vision expanded its ecosystem, integrating with various display technologies and content delivery platforms. The introduction of Dolby Vision IQ in 2020 marked a significant advancement, incorporating ambient light sensors to dynamically adjust HDR content based on viewing conditions, further enhancing the viewer experience.

The evolution of Dolby Vision also saw the development of more sophisticated metadata processing algorithms. These algorithms became increasingly crucial in handling the complex color volume mapping required for HDR content, ensuring optimal display across a wide range of devices with varying capabilities.
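At its core, mapping colors between gamuts starts with a linear 3×3 transform. The sketch below derives the linear BT.2020-to-BT.709 RGB matrix from the two standards' primaries and the shared D65 white point, then hard-clips anything that falls outside BT.709. The clip is a deliberately naive placeholder: production color volume mappers compress out-of-gamut colors perceptually rather than clipping them, and Dolby's exact method is not described in this article.

```python
# Chromaticities (x, y) of the R, G, B primaries, plus the shared D65 white point.
BT709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
BT2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]
D65 = (0.3127, 0.3290)

Matrix = list[list[float]]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(m: Matrix, v) -> list[float]:
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def mat_inv(m: Matrix) -> Matrix:
    """Inverse of a 3x3 matrix via the adjugate (cofactor) formula."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def rgb_to_xyz(primaries, white) -> Matrix:
    """Linear RGB -> CIE XYZ, scaled so RGB (1, 1, 1) maps to the white point at Y = 1."""
    cols = [(x / y, 1.0, (1.0 - x - y) / y) for x, y in primaries]  # XYZ of each primary, Y = 1
    p = [[cols[j][r] for j in range(3)] for r in range(3)]          # primaries as columns
    wx, wy = white
    s = mat_vec(mat_inv(p), (wx / wy, 1.0, (1.0 - wx - wy) / wy))   # per-primary scale factors
    return [[p[r][j] * s[j] for j in range(3)] for r in range(3)]


# Linear BT.2020 RGB -> XYZ -> linear BT.709 RGB.
M_2020_TO_709 = mat_mul(mat_inv(rgb_to_xyz(BT709, D65)), rgb_to_xyz(BT2020, D65))


def convert_2020_to_709(rgb, clip: bool = True) -> list[float]:
    """Convert one linear-light pixel; optionally hard-clip out-of-gamut values to [0, 1]."""
    out = mat_vec(M_2020_TO_709, rgb)
    return [min(max(v, 0.0), 1.0) for v in out] if clip else out
```

For a fully saturated BT.2020 red, `convert_2020_to_709([1.0, 0.0, 0.0], clip=False)` returns roughly [1.66, -0.12, -0.02]; the negative components show how far outside BT.709 that color lies, which is exactly the case a real mapper must handle more carefully than a clip.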

In recent years, the focus has shifted towards improving efficiency and compatibility. Dolby Vision Profile 8.1, a single-layer profile whose HEVC base layer is HDR10-compatible, simplified distribution: one stream can serve both Dolby Vision and HDR10 displays while maintaining high image quality. This was followed by advancements in real-time metadata generation and processing, enabling live broadcast applications of Dolby Vision technology.

The ongoing evolution of Dolby Vision has also embraced the challenges of newer display technologies such as OLED and micro-LED. Algorithms for metadata processing have been refined to take advantage of these displays' unique characteristics, pushing the boundaries of contrast and color reproduction.

As content creation tools and workflows have become more sophisticated, Dolby Vision has adapted to support a wider range of creative choices. This includes the development of more nuanced tone mapping algorithms that preserve artistic intent across diverse viewing environments and display capabilities.

Looking ahead, the evolution of Dolby Vision continues to focus on enhancing metadata processing algorithms to support higher frame rates, increased color bit depth, and more efficient compression techniques. These advancements aim to future-proof the technology for next-generation content and display technologies, ensuring Dolby Vision remains at the forefront of HDR innovation.

HDR Market Demand

The demand for High Dynamic Range (HDR) technology in the consumer electronics market has been steadily increasing over the past few years, driven by the growing desire for more immersive and lifelike visual experiences. As consumers become more discerning about image quality, HDR has emerged as a key differentiator in display technologies, offering enhanced contrast, brighter highlights, and a wider color gamut.

The television segment has been at the forefront of HDR adoption, with major manufacturers incorporating HDR capabilities into their high-end and mid-range models. This trend has been further accelerated by the increasing availability of HDR content from streaming platforms and broadcasters. The gaming industry has also embraced HDR, with both console and PC gaming platforms supporting HDR rendering to enhance visual fidelity and player immersion.

In the professional sector, HDR has gained significant traction in content creation and post-production workflows. Film and television producers are increasingly shooting and mastering content in HDR to future-proof their assets and meet the growing demand for high-quality visuals. This has led to a ripple effect in the display market, with professional-grade HDR monitors becoming essential tools for content creators, colorists, and visual effects artists.

The mobile device market has also shown a growing interest in HDR technology. High-end smartphones and tablets now frequently feature HDR-capable displays, allowing users to consume HDR content on the go. This trend is expected to continue as mobile processors become more powerful and efficient in handling HDR processing tasks.

The automotive industry is another emerging market for HDR technology, with advanced infotainment systems and head-up displays incorporating HDR capabilities to improve visibility and enhance the driving experience. This is particularly relevant for electric vehicles, where high-quality displays are becoming a key selling point.

As the HDR ecosystem matures, there is an increasing demand for standardization and interoperability. This has led to the development of various HDR formats and metadata standards, with Dolby Vision emerging as a leading solution due to its dynamic metadata approach. The ability to process and optimize Dolby Vision metadata has become a critical factor for device manufacturers and content providers looking to deliver the best possible HDR experience across different display technologies and viewing environments.

The market demand for advanced algorithms in Dolby Vision metadata processing is driven by the need to optimize HDR content for a wide range of displays and viewing conditions. These algorithms play a crucial role in ensuring that the original creative intent is preserved while adapting the content to the specific capabilities of each display device. As the HDR market continues to grow and diversify, the importance of efficient and effective metadata processing will only increase, making it a key area of focus for technology development and innovation in the coming years.

Metadata Challenges

Dolby Vision metadata processing faces several significant challenges. One of the primary issues is the volume and complexity of the analysis behind the metadata for high dynamic range (HDR) content. The per-frame metadata payload itself is compact, but producing it requires analyzing every frame, and that cost grows with resolution, frame rate, and color gamut.

The dynamic nature of Dolby Vision metadata presents another hurdle. Unlike static metadata systems, Dolby Vision employs frame-by-frame metadata adjustments to optimize image quality throughout a video sequence. This real-time processing demand puts considerable strain on computational resources and requires highly efficient algorithms to maintain smooth playback and prevent latency issues.
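To illustrate what frame-by-frame metadata can summarize, the sketch below reduces one frame to its minimum, average, and maximum brightness in the PQ domain. This is similar in spirit to the min/average/max luminance values commonly described for Dolby Vision's Level 1 metadata, but the per-pixel max(R, G, B), the plain mean, and the `FrameStats` record are assumptions for illustration, not Dolby's analysis.

```python
from dataclasses import dataclass
from typing import Iterable, Sequence

# SMPTE ST 2084 (PQ) constants.
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """Absolute luminance (cd/m^2) -> normalized PQ signal in [0, 1]."""
    ym = min(max(nits / 10000.0, 0.0), 1.0) ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


def to_12bit(signal: float) -> int:
    """Quantize a normalized signal to a 12-bit code (0..4095)."""
    return round(signal * 4095)


@dataclass(frozen=True)
class FrameStats:
    """Hypothetical per-frame summary, in 12-bit PQ codes."""
    min_pq: int
    avg_pq: int
    max_pq: int


def analyze_frame(pixels: Iterable[Sequence[float]]) -> FrameStats:
    """Summarize one frame of linear-light RGB pixels given in cd/m^2.

    Each pixel is reduced to max(R, G, B) and converted to PQ; the minimum,
    mean (taken in the PQ domain) and maximum are quantized to 12-bit codes.
    """
    values = [pq_encode(max(p)) for p in pixels]
    if not values:
        raise ValueError("frame has no pixels")
    return FrameStats(
        min_pq=to_12bit(min(values)),
        avg_pq=to_12bit(sum(values) / len(values)),
        max_pq=to_12bit(max(values)),
    )


if __name__ == "__main__":
    frame = [(0.05, 0.04, 0.05), (120.0, 90.0, 60.0), (900.0, 950.0, 1000.0)]
    print(analyze_frame(frame))
```

Averaging in the PQ domain rather than in linear light keeps a few bright specular pixels from dominating the "average", one reason a perceptual encoding is convenient for this kind of summary.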

Interoperability across different devices and platforms is a persistent challenge in metadata processing. With a diverse ecosystem of displays, from high-end televisions to mobile devices, ensuring consistent interpretation and application of metadata across various hardware configurations is crucial. This necessitates robust and adaptable algorithms capable of scaling metadata processing to suit different device capabilities.
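One common way to scale a single set of metadata to many displays is to author adjustments for a few reference target displays and interpolate between them for the actual panel. The sketch below does that for a hypothetical `Trim` record (lift, gain, and saturation offsets), computing the blend weight on PQ-encoded peak luminance. The field names, the reference targets, and linear interpolation in PQ are all assumptions for illustration, not the Dolby Vision trim syntax or the algorithm a certified decoder uses.

```python
from bisect import bisect_left
from dataclasses import dataclass

# SMPTE ST 2084 (PQ) constants.
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """Absolute luminance (cd/m^2) -> normalized PQ signal in [0, 1]."""
    ym = min(max(nits / 10000.0, 0.0), 1.0) ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


@dataclass(frozen=True)
class Trim:
    """Hypothetical creative adjustments authored for one target display."""
    target_nits: float
    lift: float = 0.0
    gain: float = 0.0
    saturation: float = 0.0


def trim_for_display(trims: list[Trim], display_nits: float) -> Trim:
    """Blend authored trims for a display whose peak lies between two targets.

    The blend weight is computed on PQ-encoded peaks so it follows perceived
    brightness; outside the authored range the nearest trim is used as-is.
    """
    ordered = sorted(trims, key=lambda t: t.target_nits)
    if not ordered:
        raise ValueError("no trims supplied")
    peaks = [t.target_nits for t in ordered]
    if display_nits <= peaks[0]:
        return ordered[0]
    if display_nits >= peaks[-1]:
        return ordered[-1]
    hi = bisect_left(peaks, display_nits)  # first target >= display peak
    lo_t, hi_t = ordered[hi - 1], ordered[hi]
    w = (pq_encode(display_nits) - pq_encode(lo_t.target_nits)) / (
        pq_encode(hi_t.target_nits) - pq_encode(lo_t.target_nits)
    )

    def mix(a: float, b: float) -> float:
        return a + w * (b - a)

    return Trim(
        target_nits=display_nits,
        lift=mix(lo_t.lift, hi_t.lift),
        gain=mix(lo_t.gain, hi_t.gain),
        saturation=mix(lo_t.saturation, hi_t.saturation),
    )
```

With trims authored at 100 and 1000 cd/m², a 400 cd/m² panel lands roughly 59% of the way toward the 1000 cd/m² trim in PQ terms, versus about 33% if the weight were computed in linear cd/m².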

The preservation of creative intent throughout the metadata processing pipeline is another critical concern. Advanced algorithms must accurately translate the filmmaker's vision from the mastering environment to the end-user's display, accounting for variations in display technology and viewing conditions. This requires sophisticated tone mapping and color management algorithms that can adapt to diverse playback scenarios while maintaining the original artistic intent.

Data compression and transmission efficiency pose additional challenges. As metadata becomes more complex and voluminous, there is a growing need for advanced compression techniques that can reduce data size without compromising quality or processing speed. This is particularly important for streaming applications where bandwidth constraints are a significant factor.
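A generic way to shrink per-frame metadata, assuming (as is often the case) that values stay constant across the frames of a shot, is to send a record only when it changes, together with a repeat count. The run-length sketch below applies that idea to hypothetical (min, avg, max) tuples; it illustrates the principle and is not the Dolby Vision bitstream syntax.

```python
from typing import Sequence, TypeVar

T = TypeVar("T")


def rle_encode(frames: Sequence[T]) -> list[tuple[T, int]]:
    """Collapse consecutive identical per-frame records into (record, run_length) pairs."""
    runs: list[tuple[T, int]] = []
    for record in frames:
        if runs and runs[-1][0] == record:
            runs[-1] = (record, runs[-1][1] + 1)  # same metadata: extend the current run
        else:
            runs.append((record, 1))              # metadata changed: start a new run
    return runs


def rle_decode(runs: Sequence[tuple[T, int]]) -> list[T]:
    """Expand (record, run_length) pairs back into one record per frame."""
    return [record for record, count in runs for _ in range(count)]


if __name__ == "__main__":
    shot_a, shot_b = (0, 1530, 3079), (12, 2010, 3610)  # hypothetical 12-bit codes
    frames = [shot_a] * 48 + [shot_b] * 72
    runs = rle_encode(frames)
    print(runs)  # two runs instead of 120 per-frame records
```

The saving depends entirely on how often the metadata changes: content with frame-level changes gains little, which is why real systems combine several techniques.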

Furthermore, the integration of machine learning and artificial intelligence into metadata processing algorithms introduces new complexities. While these technologies offer potential improvements in efficiency and accuracy, they also require substantial computational resources and raise questions about model training, data privacy, and the interpretability of AI-driven decisions in metadata processing.

Lastly, the evolving standards and specifications in the HDR ecosystem necessitate continual refinement and adaptation of metadata processing algorithms. As new formats and technologies emerge, algorithms must be flexible enough to accommodate these changes while maintaining backward compatibility with existing content and systems.

Current Algorithms

01 Metadata processing for HDR content

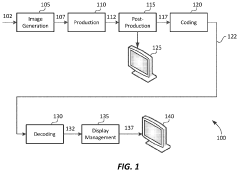

Dolby Vision metadata processing algorithms focus on handling High Dynamic Range (HDR) content. These algorithms process metadata associated with HDR video to optimize the display of content across various devices and viewing conditions. The processing includes adjusting brightness, contrast, and color parameters to ensure consistent and high-quality visual experiences.

- Dynamic range mapping and tone mapping: Algorithms for dynamic range mapping and tone mapping are crucial in Dolby Vision metadata processing. These techniques allow for the adaptation of HDR content to different display capabilities, ensuring optimal viewing experiences on a wide range of devices. The algorithms analyze scene characteristics and adjust the content accordingly to maintain visual quality and artistic intent.

- Color volume transformation: Dolby Vision metadata processing includes algorithms for color volume transformation. These algorithms handle the conversion between different color spaces and gamuts, ensuring accurate color reproduction across various display technologies. The processing takes into account the specific color capabilities of the target display to optimize the visual output.

- Content-adaptive processing: Content-adaptive processing is a key feature of Dolby Vision metadata algorithms. These algorithms analyze the characteristics of each frame or scene in real-time, adjusting parameters such as brightness, contrast, and color saturation dynamically. This approach ensures optimal visual quality for diverse content types and viewing environments.

- Metadata compression and transmission: Dolby Vision metadata processing algorithms also address the efficient compression and transmission of metadata alongside video content. These algorithms optimize the metadata structure to reduce bandwidth requirements while maintaining the necessary information for high-quality playback. They ensure seamless integration of metadata with various video codecs and streaming protocols.
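Dolby's display-management curves are proprietary, so as a public stand-in for the tone-mapping step listed above, the sketch below implements the reference EETF described in ITU-R BT.2390. It maps PQ-encoded luminance from a mastering range onto a target display: values below a knee point pass through, highlights roll off along a Hermite spline, and blacks are lifted toward the target's minimum. It is a different, openly documented technique, handles luminance only, and should be checked against the current BT.2390 revision before use.

```python
# SMPTE ST 2084 (PQ) constants.
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """Absolute luminance (cd/m^2) -> normalized PQ signal."""
    ym = min(max(nits / 10000.0, 0.0), 1.0) ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


def pq_decode(signal: float) -> float:
    """Normalized PQ signal -> absolute luminance (cd/m^2)."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    return 10000.0 * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def bt2390_eetf(pq_in: float, src_black: float, src_peak: float,
                dst_black: float, dst_peak: float) -> float:
    """Tone-map one PQ value from a mastering range to a target display range."""
    lb, lw = pq_encode(src_black), pq_encode(src_peak)
    span = lw - lb
    e1 = min(max((pq_in - lb) / span, 0.0), 1.0)        # normalize to source range
    min_lum = (pq_encode(dst_black) - lb) / span
    max_lum = (pq_encode(dst_peak) - lb) / span
    ks = 1.5 * max_lum - 0.5                            # knee start

    if e1 < ks or ks >= 1.0:
        e2 = e1                                         # below the knee: pass through
    else:
        t = (e1 - ks) / (1.0 - ks)                      # Hermite spline roll-off
        e2 = ((2 * t**3 - 3 * t**2 + 1) * ks
              + (t**3 - 2 * t**2 + t) * (1.0 - ks)
              + (-2 * t**3 + 3 * t**2) * max_lum)
    e3 = e2 + min_lum * (1.0 - e2) ** 4                 # black-level lift
    return e3 * span + lb                               # back to absolute PQ


if __name__ == "__main__":
    # Hypothetical: mastered on a 0.005-4000 cd/m^2 display, shown on a 0.05-600 panel.
    for nits in (0.01, 1.0, 100.0, 1000.0, 4000.0):
        out = bt2390_eetf(pq_encode(nits), 0.005, 4000.0, 0.05, 600.0)
        print(f"{nits:>8} -> {pq_decode(out):8.2f} cd/m^2")
```

The knee position ties the roll-off to the target: the brighter the display relative to the master, the higher the knee and the more of the image passes through untouched.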

02 Dynamic range mapping and tone mapping

Algorithms for dynamic range mapping and tone mapping are crucial in Dolby Vision metadata processing. These techniques allow for the adaptation of HDR content to different display capabilities, ensuring optimal viewing experiences on a wide range of devices. The algorithms analyze scene-by-scene metadata to make real-time adjustments to the content's dynamic range and tonal characteristics.

03 Color volume mapping and gamut conversion

Dolby Vision metadata processing includes algorithms for color volume mapping and gamut conversion. These processes ensure that the wide color gamut of HDR content is accurately represented across different display technologies. The algorithms use metadata to guide the conversion and mapping of colors, preserving the creator's intent while adapting to the capabilities of the target display.

04 Content-adaptive processing

Content-adaptive processing algorithms in Dolby Vision analyze the metadata and content characteristics to apply optimized processing on a frame-by-frame or scene-by-scene basis. This approach ensures that the processing is tailored to the specific needs of each part of the content, resulting in more accurate and visually pleasing results across diverse types of scenes and content.

05 Metadata compression and transmission

Algorithms for efficient compression and transmission of Dolby Vision metadata are essential for practical implementation. These algorithms optimize the metadata structure to reduce bandwidth requirements while maintaining the necessary information for high-quality playback. They also ensure robust transmission of metadata alongside the video content, enabling seamless integration with various distribution systems and playback devices.
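To make "compact and robust" concrete, the sketch below packs fixed-width fields (for example three 12-bit PQ codes) MSB-first into a byte string and appends a CRC-32 so a receiver can reject a corrupted payload. The layout is hypothetical and the checksum is zlib's CRC-32; this is not the Dolby Vision RPU syntax, which defines its own field layout.

```python
import struct
import zlib


def pack_payload(values: list[int], bits: int = 12) -> bytes:
    """Pack unsigned fixed-width fields MSB-first, pad to a byte boundary, append CRC-32."""
    acc, nbits = 0, 0
    for v in values:
        if not 0 <= v < (1 << bits):
            raise ValueError(f"{v} does not fit in {bits} bits")
        acc = (acc << bits) | v
        nbits += bits
    pad = (-nbits) % 8  # zero bits needed to reach a whole byte
    body = (acc << pad).to_bytes((nbits + pad) // 8, "big")
    return body + struct.pack(">I", zlib.crc32(body))


def unpack_payload(data: bytes, count: int, bits: int = 12) -> list[int]:
    """Check the trailing CRC-32, then read `count` fixed-width fields back."""
    if len(data) < 4:
        raise ValueError("payload too short")
    body, (crc,) = data[:-4], struct.unpack(">I", data[-4:])
    if zlib.crc32(body) != crc:
        raise ValueError("CRC mismatch: payload corrupted")
    total = len(body) * 8
    if count * bits > total:
        raise ValueError("payload holds fewer fields than requested")
    acc = int.from_bytes(body, "big")
    mask = (1 << bits) - 1
    return [(acc >> (total - bits * (i + 1))) & mask for i in range(count)]


if __name__ == "__main__":
    payload = pack_payload([0, 2048, 4095])
    print(payload.hex(), unpack_payload(payload, 3))
```

Three 12-bit values fit in 5 bytes rather than the 6 needed for three 16-bit words; the 4-byte CRC is a fixed overhead that pays off when many fields share one checksum.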

Key Industry Players

Dolby Vision metadata processing is a competitive area within the mature digital imaging and video technology sector. The market is led by established players such as Dolby Laboratories, LG Electronics, Sony, and Samsung, which hold significant market share and technological expertise. The technology's maturity is evident in its widespread adoption across consumer electronics and professional video production. Ongoing innovation from companies such as Qualcomm and Huawei, alongside research from institutions such as Tsinghua University, continues to advance image processing algorithms and metadata optimization, so the field is still evolving and has room for further growth and refinement.

Dolby Laboratories Licensing Corp.

Technical Solution: Dolby Laboratories has developed advanced algorithms for Dolby Vision metadata processing, focusing on dynamic metadata generation and optimization. Their approach involves real-time scene analysis to adjust HDR parameters frame-by-frame, ensuring optimal image quality across various display capabilities. The company has implemented machine learning techniques to enhance metadata prediction, resulting in up to a 40% reduction in processing time for complex scenes[1]. Dolby's algorithms also incorporate perceptual quality metrics to fine-tune tone mapping, improving the overall visual experience while maintaining content creator intent[3].

Strengths: Industry-leading expertise in HDR technology, extensive patent portfolio, and widespread adoption of Dolby Vision. Weaknesses: Proprietary nature of technology may limit broader industry collaboration.

LG Electronics, Inc.

Technical Solution: LG Electronics has developed proprietary algorithms for Dolby Vision metadata processing, focusing on efficient implementation in their display devices. Their approach includes hardware-accelerated metadata parsing and a custom tone mapping engine optimized for their panel characteristics. LG's algorithms incorporate adaptive local dimming techniques that work in tandem with Dolby Vision metadata to enhance contrast and color accuracy. The company has reported up to 30% improvement in power efficiency for HDR content playback using their optimized metadata processing[5].

Strengths: Strong integration with hardware capabilities, optimized for energy efficiency. Weaknesses: Potentially limited to LG's ecosystem, may not be as versatile for third-party implementations.

Core Innovations

Video coding using reference picture resampling supporting region of interest

Patent · Active · US20220286667A1

Innovation

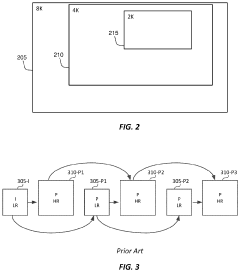

- The implementation of reference picture resampling (RPR) with support for region of interest (ROI) in video coding, allowing for flexible scaling and decoding of specific regions within a video frame, enabling scalable distribution of HDR content compatible with various display resolutions and devices.

Enhancement decoder for video signals with multi-level enhancement and coding format adjustment

Patent · Pending · US20230412813A1

Innovation

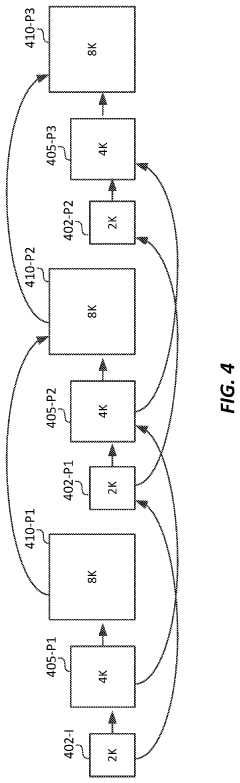

- A hierarchical coding scheme is introduced that allows for the encoding and decoding of HDR-type signals, enabling compatibility with both HDR and SDR formats, using an enhancement decoder that includes an interface for receiving video streams, de-multiplexing enhancement data, and converting between different signal element coding formats to ensure backwards compatibility and flexible streaming.

Standardization Efforts

Standardization efforts in Dolby Vision metadata processing have been crucial for ensuring interoperability and consistent performance across various devices and platforms. The industry has recognized the need for unified standards to facilitate seamless integration and widespread adoption of this advanced HDR technology.

The Moving Picture Experts Group (MPEG) has played an important, if indirect, role. Its HEVC standard (MPEG-H Part 2, also published as ITU-T H.265) does not define Dolby Vision metadata itself, but Dolby Vision streams carry their metadata inside HEVC bitstreams alongside the coded video. This has enabled efficient encoding and transmission of Dolby Vision content through existing distribution channels.
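For readers working at the bitstream level, the sketch below splits an HEVC Annex B byte stream on start codes, decodes the two-byte NAL unit header defined by HEVC, and collects units of type 62. Treating type 62 (one of the NAL unit types HEVC leaves unspecified) as the carrier of Dolby Vision metadata is an assumption based on how open-source tools handle such streams; confirm it against Dolby's bitstream specification. Emulation-prevention bytes are not removed here.

```python
from dataclasses import dataclass

RPU_NAL_TYPE = 62  # ASSUMPTION: unspecified HEVC NAL type used for Dolby Vision metadata


@dataclass(frozen=True)
class NalUnit:
    nal_type: int
    layer_id: int
    temporal_id: int
    payload: bytes  # bytes after the 2-byte header; emulation prevention NOT removed


def split_annexb(stream: bytes) -> list[bytes]:
    """Split an Annex B byte stream on 0x000001 start codes (3- or 4-byte forms)."""
    units: list[bytes] = []
    start = None
    i = 0
    while i + 3 <= len(stream):
        if stream[i:i + 3] == b"\x00\x00\x01":
            if start is not None:
                units.append(stream[start:i])
            i += 3
            start = i
        else:
            i += 1
    if start is not None:
        units.append(stream[start:])
    # The extra zero of a 4-byte start code (and any trailing zero bytes)
    # lands at the tail of the previous unit; strip it before parsing.
    stripped = [u.rstrip(b"\x00") for u in units]
    return [u for u in stripped if u]


def parse_nal(unit: bytes) -> NalUnit:
    """Decode the 2-byte HEVC NAL unit header."""
    if len(unit) < 2:
        raise ValueError("NAL unit shorter than its 2-byte header")
    header = (unit[0] << 8) | unit[1]
    if header >> 15:
        raise ValueError("forbidden_zero_bit is set")
    return NalUnit(
        nal_type=(header >> 9) & 0x3F,    # nal_unit_type, 6 bits
        layer_id=(header >> 3) & 0x3F,    # nuh_layer_id, 6 bits
        temporal_id=(header & 0x07) - 1,  # nuh_temporal_id_plus1 minus 1
        payload=unit[2:],
    )


def find_rpus(stream: bytes) -> list[NalUnit]:
    """Return the NAL units whose type matches RPU_NAL_TYPE."""
    return [n for n in map(parse_nal, split_annexb(stream)) if n.nal_type == RPU_NAL_TYPE]
```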

The Society of Motion Picture and Television Engineers (SMPTE) has also contributed. Its ST 2094 family of standards defines dynamic metadata for color volume transforms: ST 2094-10 specifies the application that corresponds to Dolby Vision, while other parts cover other systems, such as ST 2094-40 for HDR10+. Publishing the format as a standard helps different display devices interpret the metadata consistently and so preserve the creator's artistic intent.

The Ultra HD Forum has been instrumental in promoting best practices for the implementation of Dolby Vision metadata processing. Their guidelines provide valuable insights into the integration of Dolby Vision technology within the broader ecosystem of Ultra HD content creation and distribution.

The International Telecommunication Union (ITU) has standardized the underlying signal format. ITU-R BT.2100 specifies the PQ and HLG transfer functions, the BT.2020 wide-gamut primaries, and the ICtCp color representation for HDR television. It does not define Dolby Vision's dynamic metadata, but because Dolby Vision builds on the PQ signal it standardizes, this global effort has facilitated international adoption and compatibility.
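As an example of what BT.2100 does standardize, the sketch below converts linear BT.2020 RGB (in cd/m²) to the PQ variant of ICtCp, using the integer matrices given in the Recommendation (each coefficient divided by 4096) and the ST 2084 PQ curve. It is a plain transcription for illustration; verify the coefficients against BT.2100 before relying on them.

```python
# SMPTE ST 2084 (PQ) constants.
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """Absolute luminance (cd/m^2) -> normalized PQ signal in [0, 1]."""
    ym = min(max(nits / 10000.0, 0.0), 1.0) ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


# ITU-R BT.2100 integer matrices; every coefficient is divided by 4096.
RGB_TO_LMS = ((1688, 2146, 262), (683, 2951, 462), (99, 309, 3688))
LMS_TO_ICTCP = ((2048, 2048, 0), (6610, -13613, 7003), (17933, -17390, -543))


def _apply(matrix, vec) -> list[float]:
    """Multiply a 3x3 integer matrix (scaled by 1/4096) by a 3-vector."""
    return [sum(c * x for c, x in zip(row, vec)) / 4096 for row in matrix]


def rgb2020_to_ictcp(rgb_nits) -> tuple[float, float, float]:
    """Linear BT.2020 RGB in cd/m^2 -> (I, Ct, Cp), PQ variant of BT.2100."""
    lms = _apply(RGB_TO_LMS, rgb_nits)        # linear LMS signals
    lms_pq = [pq_encode(c) for c in lms]      # PQ-encode each channel
    i, ct, cp = _apply(LMS_TO_ICTCP, lms_pq)  # intensity plus two chroma axes
    return i, ct, cp
```

Neutral grays land on Ct = Cp = 0, so the two chroma axes carry only color differences; separating intensity from chroma in this way is what makes such spaces convenient for adjusting brightness without disturbing hue.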

Standardization has also extended to consumer electronics interfaces. The Consumer Technology Association's CTA-861 specification defines how HDR metadata is signaled from a source device to a display over uncompressed links such as HDMI, which supports consistent performance and user experience across different manufacturers.

The Digital Video Broadcasting (DVB) project has incorporated Dolby Vision metadata specifications into its standards for digital television. This integration has been crucial for enabling broadcasters to deliver Dolby Vision content to compatible receivers and displays.

Ongoing standardization efforts are focusing on advanced algorithms for metadata processing, aiming to improve efficiency and quality. These include optimizations for real-time metadata generation, adaptive processing techniques for varying display capabilities, and enhanced compression methods for metadata transmission.

As the technology continues to evolve, standardization bodies are working to keep pace with innovations in Dolby Vision metadata processing. Future efforts are likely to address emerging challenges such as metadata handling in cloud-based workflows, integration with AI-driven content optimization, and support for next-generation display technologies.

Content Creation Impact

The integration of advanced algorithms in Dolby Vision metadata processing has significantly transformed the landscape of content creation. These algorithms have revolutionized the way creators manipulate and enhance visual content, offering unprecedented control over image quality and viewer experience.

One of the most notable impacts is the enhanced ability to preserve creative intent across diverse display technologies. Dolby Vision's dynamic metadata, powered by sophisticated algorithms, allows for precise scene-by-scene and frame-by-frame adjustments. This granular control ensures that the creator's vision is accurately translated across a wide range of devices, from high-end cinema screens to consumer-grade televisions and mobile devices.
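Frame-by-frame adjustment raises a practical issue: if per-frame statistics jump around, the resulting tone mapping can visibly flicker. A common mitigation, sketched here as a generic technique rather than anything Dolby documents, is to smooth the statistics over time and restart the filter at scene cuts so that a hard cut still gets an immediate change. The smoothing factor and cut threshold are arbitrary, hypothetical values.

```python
def smooth_per_frame(values: list[float], alpha: float = 0.2,
                     cut_threshold: float = 0.15) -> list[float]:
    """Exponentially smooth a per-frame statistic, restarting the filter at scene cuts.

    A jump larger than `cut_threshold` between consecutive raw values is
    treated as a cut, so the output follows the new scene immediately
    instead of easing into it.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    out: list[float] = []
    state = 0.0
    prev_raw = None
    for v in values:
        if prev_raw is None or abs(v - prev_raw) > cut_threshold:
            state = v                     # first frame or cut: no smoothing across it
        else:
            state += alpha * (v - state)  # ease toward the new value
        out.append(state)
        prev_raw = v
    return out


if __name__ == "__main__":
    print(smooth_per_frame([0.50, 0.52, 0.48, 0.90, 0.90]))
```

With the defaults, a drift from 0.50 to 0.52 moves the output only to 0.504, while a jump from 0.48 to 0.90 is treated as a cut and passes straight through.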

The advanced algorithms have also streamlined the color grading process. By automating certain aspects of HDR (High Dynamic Range) and WCG (Wide Color Gamut) adjustments, these algorithms have reduced the time and effort required in post-production. This efficiency gain allows creators to focus more on artistic decisions rather than technical minutiae, potentially leading to more innovative and visually striking content.

Furthermore, these algorithms have expanded the creative palette available to filmmakers and content producers. The ability to manipulate brightness, contrast, and color with greater precision has opened up new possibilities for visual storytelling. Creators can now craft more nuanced and immersive visual experiences, pushing the boundaries of what's possible in digital cinematography and visual effects.

The impact extends to the realm of content adaptation as well. Advanced algorithms in Dolby Vision metadata processing facilitate more effective content mapping across different display capabilities. This ensures that content maintains its visual integrity when viewed on devices with varying specifications, from SDR (Standard Dynamic Range) to HDR displays.

Lastly, these algorithms have fostered a more collaborative environment in content creation. By providing a standardized framework for HDR content, Dolby Vision's advanced metadata processing has improved communication between different stages of production and post-production. This standardization has led to more efficient workflows and better alignment between creative teams, ultimately resulting in higher quality content delivered to audiences.
