High Pass Filters for Digital Twin Implementations in Predictive Analytics

JUL 28, 2025 · 9 MIN READ

Digital Twin HPF Background and Objectives

Digital twins have become an important technology in predictive analytics, enabling real-time monitoring, simulation, and optimization of physical assets and systems. The concept originated in the early 2000s, primarily in the aerospace and manufacturing sectors, and has since spread to industries including healthcare, energy, and smart cities. As the technology matured, the need for more sophisticated data processing became apparent, leading to the integration of high pass filters (HPFs) in digital twin implementations.

High pass filters, long used in signal processing to attenuate low-frequency components while passing high-frequency ones, have found a new application in digital twins. In predictive analytics, HPFs improve the accuracy and reliability of digital twin models by removing slowly varying content such as baseline offsets and sensor drift, so that the model focuses on the rapidly changing aspects of the system. An HPF does not remove broadband or high-frequency noise, which needs separate treatment.
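To make the filtering step concrete, the following is a minimal sketch of a first-order digital high pass filter in Python. The function name `high_pass`, the cutoff, and the sample rate are illustrative assumptions, not part of any particular digital twin platform; the recurrence is the common discrete form of an RC high pass filter.

```python
import math


def high_pass(samples, cutoff_hz, sample_rate_hz):
    """First-order high pass filter: y[n] = alpha * (y[n-1] + x[n] - x[n-1])."""
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = rc / (rc + dt)  # closer to 1.0 means a lower cutoff

    out = []
    prev_x = None
    prev_y = 0.0
    for x in samples:
        if prev_x is None:
            y = 0.0  # assumption: start from zero so a fixed offset never appears
        else:
            y = alpha * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


# A constant reading (pure offset) is removed entirely.
offset_only = high_pass([5.0] * 10, cutoff_hz=1.0, sample_rate_hz=100.0)
print(max(abs(v) for v in offset_only))
```

A constant input produces an all-zero output, which is the behavior that removes a fixed sensor offset, while a rapidly alternating input passes at close to full amplitude once the start-up transient has decayed.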

The primary objective of incorporating HPFs in digital twin implementations for predictive analytics is to improve the overall performance and predictive capabilities of these virtual replicas. By effectively isolating high-frequency components of data streams, HPFs enable digital twins to capture and respond to rapid changes in the physical system more accurately. This enhanced sensitivity to dynamic variations is particularly valuable in scenarios where quick detection of anomalies or sudden shifts in system behavior is critical.
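The idea of detecting sudden shifts on the filtered signal can be sketched as follows. This is an illustrative example only: the function name `detect_spikes`, the filter coefficient, the threshold, and the synthetic temperature-like readings are all assumptions chosen for the demonstration.

```python
def detect_spikes(samples, alpha=0.9, threshold=1.0):
    """Return indices where the high-passed signal exceeds the threshold."""
    hits = []
    prev_x = samples[0]
    prev_y = 0.0
    for i, x in enumerate(samples[1:], start=1):
        # Same first-order high pass recurrence, applied sample by sample.
        y = alpha * (prev_y + x - prev_x)
        if abs(y) > threshold:
            hits.append(i)
        prev_x, prev_y = x, y
    return hits


# Synthetic data: a slow upward drift with one abrupt jump at index 120.
readings = [20.0 + 0.01 * i for i in range(200)]
readings[120] += 5.0

print(detect_spikes(readings))  # -> [120]
```

The slow drift settles to a small, steady filter output well below the threshold, so only the abrupt jump is flagged. A fixed threshold on the raw readings would eventually be crossed by the drift itself.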

Furthermore, the integration of HPFs aims to address several challenges inherent in digital twin technology. These include reducing the amount of slowly varying data that downstream models must process, minimizing the impact of sensor drift and low-frequency environmental fluctuations, and improving the signal-to-noise ratio in cases where the dominant interference is low-frequency. By tackling these issues, HPFs contribute to more robust and reliable digital twin models that can provide more accurate predictions and insights.

As the field of predictive analytics continues to advance, the role of HPFs in digital twin implementations is expected to grow in importance. The ongoing research and development in this area focus on optimizing filter designs, exploring adaptive filtering techniques, and integrating machine learning algorithms to enhance the effectiveness of HPFs in various application domains. The ultimate goal is to create digital twins that can provide increasingly accurate, real-time representations of physical systems, enabling more informed decision-making and proactive maintenance strategies across industries.

Predictive Analytics Market Demand

The predictive analytics market has experienced significant growth in recent years, driven by the increasing adoption of digital technologies and the growing need for data-driven decision-making across various industries. This market demand is particularly relevant to the implementation of high pass filters in digital twin applications for predictive analytics.

The global predictive analytics market size was valued at $7.2 billion in 2020 and is projected to reach $35.45 billion by 2027, growing at a CAGR of 25.2% during the forecast period. This substantial growth is attributed to the rising demand for advanced analytics solutions that can provide actionable insights and improve operational efficiency.
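As a quick arithmetic check on these figures, the sketch below compounds the 2020 value at the stated rate and also computes the growth rate implied by the two endpoints. The function names are illustrative, and treating 2020 to 2027 as seven compounding years is an assumption; the source does not state the base year of its forecast period.

```python
def project(start_value, rate, years):
    """Compound start_value at a fixed annual rate."""
    return start_value * (1.0 + rate) ** years


def implied_cagr(start_value, end_value, years):
    """Annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures from the text, in billions of US dollars.
projected = project(7.2, 0.252, 7)
implied = implied_cagr(7.2, 35.45, 7)

print(f"7.2 compounded at 25.2% for 7 years: {projected:.2f}")
print(f"Rate implied by 7.2 -> 35.45 over 7 years: {implied:.1%}")
```

Under that assumption, compounding gives roughly $34.7 billion and the implied rate is roughly 25.6%, so the quoted numbers are consistent to within rounding or a slightly different base year.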

In the context of digital twin implementations, the demand for high pass filters in predictive analytics is driven by the need for more accurate, real-time data processing. Digital twins, which are virtual representations of physical assets or systems, need filtering that suppresses slow baseline variation and isolates the high-frequency data components that matter for predictive maintenance and performance optimization.

The manufacturing sector is one of the primary drivers of this market demand, with an increasing number of companies adopting digital twin technology to enhance their predictive maintenance capabilities. The automotive industry, in particular, has shown a strong interest in implementing high pass filters within digital twin systems to improve vehicle performance prediction and reduce maintenance costs.

Another significant market segment driving the demand for high pass filters in digital twin implementations is the healthcare industry. The use of predictive analytics in patient monitoring and medical device optimization has created a need for advanced filtering techniques to process complex physiological data accurately.

The energy sector, including oil and gas and renewable energy industries, is also contributing to the market demand. These industries are leveraging digital twin technology with high pass filters to predict equipment failures, optimize energy production, and reduce downtime.

The increasing adoption of Internet of Things (IoT) devices and sensors across various industries is further fueling the demand for high pass filters in predictive analytics applications. As the volume of data generated by these devices continues to grow, the need for efficient filtering mechanisms to extract meaningful insights becomes more critical.

Moreover, the rising interest in edge computing and real-time analytics is creating new opportunities for high pass filter implementations in digital twins. This trend is driven by the need for faster decision-making and reduced latency in data processing, particularly in applications such as autonomous vehicles and smart manufacturing.

As organizations continue to recognize the value of predictive analytics in improving operational efficiency and reducing costs, the demand for advanced filtering techniques, including high pass filters, is expected to grow. This trend is likely to drive further innovation in digital twin implementations and create new opportunities for technology providers in the predictive analytics market.

HPF Challenges in Digital Twins

The implementation of High Pass Filters (HPFs) in Digital Twin systems for predictive analytics presents several significant challenges. These challenges stem from the complex nature of digital twin environments and the specific requirements of predictive analytics applications.

One of the primary challenges is the need for real-time processing capabilities. Digital twins often require continuous, high-frequency data streams to accurately represent their physical counterparts. HPFs must be designed to handle this high-volume, high-velocity data without introducing significant latency. This becomes particularly challenging when dealing with multiple data sources and diverse sensor types, each potentially requiring different filtering parameters.

Data quality and noise reduction pose another critical challenge. Industrial environments, where digital twins are commonly deployed, are often subject to various sources of noise and interference. HPFs must be robust enough to effectively remove low-frequency noise and unwanted signals without distorting the essential high-frequency components that are crucial for accurate predictive analytics. Achieving this balance requires sophisticated filter design and careful tuning.

The dynamic nature of digital twin systems adds another layer of complexity. As the physical system evolves or undergoes changes, the digital twin must adapt accordingly. This necessitates adaptive HPF algorithms that can automatically adjust their parameters based on changing system characteristics. Developing such adaptive filters that maintain stability and performance across various operating conditions is a significant technical hurdle.
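As a rough illustration of parameter adaptation, the sketch below subtracts an exponentially tracked baseline from the input (a first-order high pass) and adjusts the tracking rate `beta` at run time. The adaptation rule is a simple heuristic assumed for this example, not a standard algorithm: if the output shows a persistent non-zero mean, slow content is leaking through, so the tracking rate is raised; otherwise it is relaxed. All names and constants are hypothetical.

```python
class AdaptiveHighPass:
    """High pass filter (input minus tracked baseline) with a self-adjusting rate."""

    def __init__(self, beta=0.01, beta_min=0.001, beta_max=0.2, tolerance=0.5):
        self.beta = beta            # baseline tracking rate (higher = higher cutoff)
        self.beta_min = beta_min
        self.beta_max = beta_max
        self.tolerance = tolerance  # allowed residual bias in the output
        self.baseline = None
        self.bias = 0.0             # slow running mean of the output

    def update(self, x):
        if self.baseline is None:
            self.baseline = x
        y = x - self.baseline
        self.baseline += self.beta * y

        # Track the output's mean; a high pass output should average to zero.
        self.bias += 0.05 * (y - self.bias)

        # Heuristic adaptation, clamped to keep the filter in a safe range.
        if abs(self.bias) > self.tolerance:
            self.beta = min(self.beta_max, self.beta * 1.1)
        else:
            self.beta = max(self.beta_min, self.beta * 0.99)
        return y
```

Clamping `beta` between fixed bounds is a crude way to address the stability concern: the filter can never adapt itself into a state where it either passes everything or suppresses everything.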

Scalability is a further challenge in implementing HPFs for digital twins. As systems grow in complexity and scale, the computational demands of filtering operations increase substantially. Designing HPFs that can efficiently scale across distributed computing environments, potentially involving edge computing nodes and cloud infrastructure, requires careful consideration of resource allocation and data flow optimization.

Integration with existing digital twin platforms and predictive analytics tools presents interoperability challenges. HPFs must be designed to seamlessly interface with a wide range of software systems, data formats, and communication protocols. Ensuring compatibility and efficient data exchange between the filtering components and other elements of the digital twin ecosystem is crucial for effective implementation.

Lastly, the interpretability and explainability of HPF operations within the context of predictive analytics pose significant challenges. As these filters play a critical role in data preprocessing, understanding their impact on subsequent analysis and decision-making processes is essential. Developing methods to visualize and explain the effects of HPFs on the data and resulting predictions is crucial for building trust in the digital twin system and ensuring accurate interpretation of results.
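One simple way to explain what a filter does to the data is to report its gain at a few frequencies of interest. The sketch below evaluates the magnitude response of the first-order recurrence y[n] = alpha * (y[n-1] + x[n] - x[n-1]); the function names, the 1 Hz cutoff, and the 100 Hz sample rate are assumptions for illustration.

```python
import cmath
import math


def alpha_for_cutoff(cutoff_hz, sample_rate_hz):
    """Coefficient of the first-order high pass filter for a given cutoff."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate_hz
    return rc / (rc + dt)


def hpf_gain(freq_hz, alpha, sample_rate_hz):
    """Magnitude of H(z) = alpha * (1 - z^-1) / (1 - alpha * z^-1) at freq_hz."""
    z_inv = cmath.exp(-2j * math.pi * freq_hz / sample_rate_hz)
    return abs(alpha * (1 - z_inv) / (1 - alpha * z_inv))


alpha = alpha_for_cutoff(1.0, 100.0)
for f in (0.0, 0.1, 1.0, 10.0, 50.0):
    print(f"{f:6.1f} Hz -> gain {hpf_gain(f, alpha, 100.0):.3f}")
```

The gain is zero at 0 Hz, roughly 0.7 near the cutoff, and close to 1 well above it. A table like this gives analysts a direct statement of which time scales the downstream model can and cannot see.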

Current HPF Solutions for Digital Twins

01 Circuit design for high pass filters

High pass filters are designed using various circuit configurations to attenuate low-frequency signals while allowing high-frequency signals to pass through. These designs often involve the use of capacitors and resistors in specific arrangements to achieve the desired frequency response. Advanced designs may incorporate active components like operational amplifiers to enhance performance and provide additional functionality.

- Digital implementation of high pass filters: High pass filters can be implemented digitally using digital signal processing techniques. These implementations often involve the use of digital filters, such as finite impulse response (FIR) or infinite impulse response (IIR) filters. Digital high pass filters offer advantages in terms of flexibility, precision, and ease of integration with other digital systems.

- Application in image and video processing: High pass filters play a crucial role in image and video processing applications. They are used for edge detection, sharpening, and noise reduction in digital images and video streams. These filters help to enhance the high-frequency components of visual data, improving overall image quality and facilitating various computer vision tasks.

- High pass filters in communication systems: High pass filters are essential components in various communication systems, including wireless and wired networks. They are used to remove low-frequency noise and interference, improve signal quality, and separate different frequency bands in multi-channel communication systems. These filters are crucial for maintaining signal integrity and optimizing system performance.

- Adaptive and tunable high pass filters: Advanced high pass filter designs incorporate adaptive and tunable features, allowing the filter characteristics to be adjusted dynamically based on changing signal conditions or system requirements. These filters may use variable components, digital control mechanisms, or feedback systems to optimize their performance in real-time, enhancing their versatility and effectiveness in various applications.
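For the digital implementation mentioned in the list above, a finite impulse response (FIR) high pass filter can be sketched in plain Python using a windowed-sinc low pass design followed by spectral inversion. The function names, the Hamming window, and the 51-tap length are illustrative choices, not requirements of any particular system.

```python
import math


def fir_high_pass_taps(cutoff_hz, sample_rate_hz, num_taps=51):
    """Design FIR high pass taps by spectral inversion of a windowed-sinc low pass."""
    if num_taps % 2 == 0:
        raise ValueError("num_taps must be odd for spectral inversion")
    fc = cutoff_hz / sample_rate_hz  # cutoff in cycles per sample
    mid = (num_taps - 1) // 2

    taps = []
    for n in range(num_taps):
        k = n - mid
        ideal = 2.0 * fc if k == 0 else math.sin(2.0 * math.pi * fc * k) / (math.pi * k)
        hamming = 0.54 - 0.46 * math.cos(2.0 * math.pi * n / (num_taps - 1))
        taps.append(ideal * hamming)

    total = sum(taps)
    taps = [t / total for t in taps]  # low pass with unity gain at 0 Hz

    # Spectral inversion: high pass = unit impulse minus low pass.
    taps = [-t for t in taps]
    taps[mid] += 1.0
    return taps


def fir_filter(samples, taps):
    """Direct-form convolution, treating samples before the start as zero."""
    out = []
    for n in range(len(samples)):
        acc = 0.0
        for k, t in enumerate(taps):
            if n - k >= 0:
                acc += t * samples[n - k]
        out.append(acc)
    return out


taps = fir_high_pass_taps(cutoff_hz=10.0, sample_rate_hz=100.0)
print(abs(sum(taps)) < 1e-9)  # taps sum to ~0, so the gain at 0 Hz is ~0
```

Compared with a first-order IIR filter, this FIR design needs more arithmetic per sample and adds a fixed delay of half the tap count, but it has linear phase and is always stable.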

02 Application in image and video processing

High pass filters play a crucial role in image and video processing applications. They are used to enhance edge detection, improve image sharpness, and remove low-frequency noise from visual data. These filters can be implemented in both analog and digital domains, with digital implementations often utilizing specialized algorithms and hardware for real-time processing of high-resolution images and video streams.

03 Integration with communication systems

High pass filters are integral components in various communication systems, including wireless and wired networks. They are used to remove unwanted low-frequency interference, improve signal-to-noise ratios, and separate different frequency bands in multi-channel communications. These filters can be found in transmitters, receivers, and intermediate signal processing stages of communication equipment.

04 Adaptive and tunable high pass filters

Advanced high pass filter designs incorporate adaptive and tunable features, allowing for dynamic adjustment of filter characteristics based on changing signal conditions or user requirements. These filters may use variable components, digital control systems, or software-defined algorithms to modify their cutoff frequency, order, or response shape in real-time, enhancing their versatility and performance in various applications.

05 High pass filters in audio systems

High pass filters are extensively used in audio systems for various purposes, including speaker protection, frequency band separation in multi-way speaker systems, and noise reduction. These filters can be implemented using analog circuits, digital signal processing techniques, or a combination of both. In professional audio applications, high pass filters are often adjustable to accommodate different acoustic environments and source materials.

Key Digital Twin Analytics Players

The high pass filter technology for digital twin implementations in predictive analytics is in a nascent stage of development, with the market showing significant growth potential. The industry is transitioning from early adoption to more widespread implementation, driven by increasing demand for advanced predictive maintenance solutions. While the market size is expanding, it remains relatively small compared to more established analytics sectors. Technologically, the field is evolving rapidly, with companies like Tata Consultancy Services, Huawei Technologies, and IBM leading innovation. These firms are developing sophisticated algorithms and integrating high pass filters with AI and machine learning to enhance digital twin accuracy and predictive capabilities. However, the technology's maturity varies across different applications and industries, indicating room for further advancement and standardization.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed an advanced High Pass Filter (HPF) system for Digital Twin implementations in predictive analytics. Their solution utilizes a combination of hardware and software components to achieve high-performance filtering. The system employs Field-Programmable Gate Arrays (FPGAs) for real-time signal processing, allowing for adaptive filter coefficients that can be dynamically adjusted based on the incoming data streams[1]. Huawei's HPF implementation also incorporates machine learning algorithms to optimize filter parameters, enhancing the accuracy of predictive models in digital twin applications[3]. The system is designed to handle large-scale industrial IoT deployments, processing data from thousands of sensors simultaneously while maintaining low latency[5].

Strengths: Highly scalable, low latency, and adaptable to various industrial scenarios. Weaknesses: May require significant computational resources and specialized hardware, potentially increasing implementation costs.

Hitachi Vantara LLC

Technical Solution: Hitachi Vantara has developed a comprehensive High Pass Filter solution for Digital Twin implementations in predictive analytics, integrated into their Lumada IoT platform. Their approach combines edge computing capabilities with advanced analytics in the cloud. At the edge, Hitachi employs custom-designed hardware accelerators to perform initial high-pass filtering, reducing latency and data transmission requirements[13]. In the cloud, their system utilizes a combination of traditional signal processing techniques and machine learning algorithms to optimize filter performance. Hitachi's HPF implementation is particularly focused on supporting predictive maintenance applications in manufacturing and energy sectors. The system includes adaptive filtering capabilities that can automatically adjust to changing operational conditions, ensuring consistent performance across various industrial scenarios[15]. Additionally, Hitachi's solution incorporates data quality assessment tools to ensure the reliability of filtered signals for digital twin modeling[17].

Strengths: Optimized for industrial IoT applications, low-latency edge processing, and adaptive filtering capabilities. Weaknesses: May require specialized hardware for optimal performance, potentially increasing initial implementation costs.

Core HPF Innovations for Digital Twins

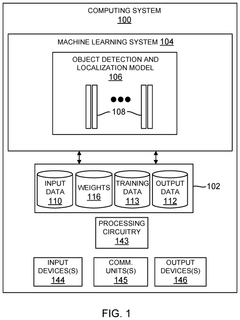

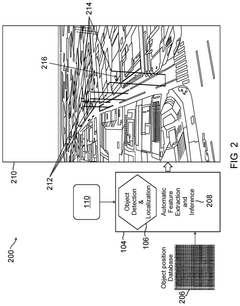

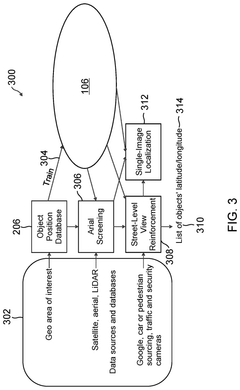

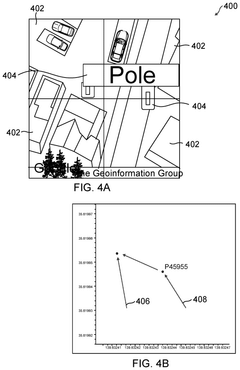

Machine-learning based object localization from images

Patent (Pending): US20250095201A1

Innovation

- The use of machine learning algorithms to localize objects in aerial satellite and LIDAR sensor images, as well as street-level images, by extracting features based on object appearance, context, and relative location, and training a machine learning model to associate these features with object locations.

Compatibility verification of data standards

PatentInactiveUS20220198363A1

Innovation

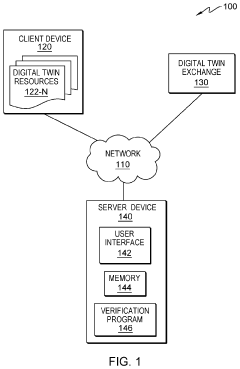

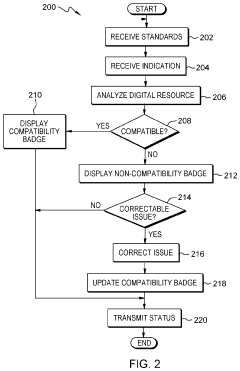

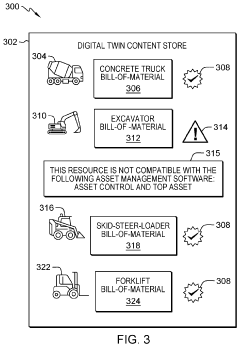

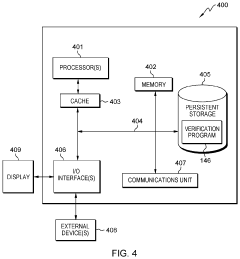

- A verification system that analyzes digital twin resources against a set of standards, displaying compatibility badges in a marketplace interface and notifying resource owners of compatibility issues, with automatic resolution capabilities for some issues.

Data Privacy in Digital Twin Analytics

Data privacy is a critical concern in the implementation of digital twin analytics, particularly when dealing with high pass filters for predictive analytics. As digital twins become more sophisticated and more widely integrated across industries, the volume and sensitivity of the data they collect and process grow substantially. This makes it harder to maintain data privacy while keeping predictive analytics effective.

One of the primary concerns in digital twin analytics is the potential for unauthorized access to sensitive information. High pass filters, while essential for isolating high-frequency components in data streams, may inadvertently reveal patterns or insights that could compromise individual privacy or proprietary business information. To address this, robust encryption techniques and access control mechanisms must be implemented throughout the digital twin ecosystem.

Another crucial aspect of data privacy in this context is the principle of data minimization. Organizations must carefully evaluate the necessity of each data point collected and processed by the digital twin. High pass filters should be designed to extract only the most relevant high-frequency information, discarding unnecessary data that could pose privacy risks if compromised.

Anonymization and pseudonymization techniques play a vital role in preserving privacy in digital twin analytics. These methods can help obscure individual identities while maintaining the statistical relevance of the data for predictive analytics. However, care must be taken to ensure that these techniques do not significantly impact the accuracy of the high pass filters or the overall predictive capabilities of the system.

The implementation of privacy-preserving machine learning techniques, such as federated learning and differential privacy, can further enhance data protection in digital twin analytics. These approaches allow for the development of predictive models without exposing raw data, thereby reducing the risk of privacy breaches while still leveraging the power of high pass filters for accurate predictions.

Compliance with data protection regulations, such as GDPR in Europe or CCPA in California, is paramount when implementing digital twin analytics. Organizations must ensure that their use of high pass filters and other predictive analytics tools aligns with these regulatory frameworks, including provisions for data subject rights, consent management, and data retention policies.

As the field of digital twin analytics continues to evolve, ongoing research and development in privacy-enhancing technologies will be crucial. This includes exploring advanced cryptographic methods, developing more efficient privacy-preserving algorithms, and creating industry-specific best practices for balancing data utility with privacy protection in the context of high pass filters and predictive analytics.

Digital Twin Standards and Interoperability

Digital twin standards and interoperability are crucial aspects of implementing high pass filters in predictive analytics. As digital twins become more prevalent in various industries, the need for standardization and seamless integration has become increasingly important. Several organizations and consortia have been working towards establishing common standards and protocols to ensure interoperability between different digital twin implementations.

The Industrial Internet Consortium (IIC), since renamed the Industry IoT Consortium, has published guidance on the use of digital twins in industrial applications.

Another significant initiative is the Digital Twin Consortium, which brings together industry leaders to develop and promote common digital twin practices. Its efforts focus on creating a common language and architecture for digital twins, facilitating the exchange of data and models between different systems. Its Digital Twin System Interoperability Framework provides guidance for designing digital twins that interoperate across platforms and systems. This common ground benefits high pass filter implementations, because data can be processed and analyzed consistently across digital twin implementations.

The ISO/IEC JTC 1/SC 41 committee has also been working on developing international standards for the Internet of Things (IoT) and digital twin technologies. Their work includes defining reference architectures and interoperability requirements, which are essential for integrating high pass filters in predictive analytics systems that utilize digital twins.

Interoperability challenges often arise when implementing high pass filters in digital twin environments because data sources, formats, and protocols differ across systems. Signals may arrive with different sampling rates, units, and timestamp conventions, all of which must be reconciled before a filter with a fixed cutoff frequency behaves consistently. To address these challenges, open-source initiatives have emerged, such as the Eclipse Digital Twin Top-Level Project at the Eclipse Foundation, which hosts vendor-neutral, open-source digital twin projects and promotes interoperability and standardization.

The adoption of semantic web technologies, such as RDF (Resource Description Framework) and OWL (Web Ontology Language), has also contributed to improving interoperability in digital twin implementations. These technologies enable the creation of standardized ontologies and data models, facilitating the integration of high pass filters and other analytical components across different digital twin platforms.
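As one illustration of how a filter could be described in a machine-readable, RDF-compatible form, the sketch below builds a JSON-LD document (a JSON serialization of RDF) for a high pass filter attached to a twin asset. The `https://example.org/digital-twin#` namespace, the `HighPassFilter` class, and the property names are hypothetical placeholders; a real deployment would reference a published ontology.

```python
import json

# Hypothetical vocabulary namespace used only for this illustration.
EX = "https://example.org/digital-twin#"
XSD = "http://www.w3.org/2001/XMLSchema#"


def describe_high_pass_filter(filter_iri, asset_iri, cutoff_hz, order):
    """Build a JSON-LD document describing a filter attached to a twin asset."""
    return {
        "@context": {
            "ex": EX,
            "xsd": XSD,
            # Typed properties so consumers interpret the values consistently.
            "cutoffHz": {"@id": "ex:cutoffHz", "@type": "xsd:double"},
            "order": {"@id": "ex:order", "@type": "xsd:integer"},
            "appliesTo": {"@id": "ex:appliesTo", "@type": "@id"},
        },
        "@id": filter_iri,
        "@type": "ex:HighPassFilter",
        "cutoffHz": cutoff_hz,
        "order": order,
        "appliesTo": asset_iri,
    }


doc = describe_high_pass_filter(
    filter_iri="https://example.org/filters/hpf-1",
    asset_iri="https://example.org/assets/pump-A17",
    cutoff_hz=5.0,
    order=1,
)
print(json.dumps(doc, indent=2))
```

Because the cutoff frequency and filter order travel with the description, another platform reading the document could in principle reproduce the same filtering step instead of relying on undocumented defaults.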

As the field of digital twins continues to evolve, ongoing efforts in standardization and interoperability will play a crucial role in enabling the effective implementation of high pass filters for predictive analytics. These efforts will not only streamline the integration process but also enhance the overall performance and reliability of digital twin systems across various industries.
