High Pass Filters in Deep Learning Architectures for Optimized Processing

JUL 28, 2025 | 9 MIN READ

High Pass Filters in DL: Background and Objectives

High pass filters, long a staple of signal processing, are increasingly used as building blocks in deep learning architectures. They change how neural networks process and analyze data by emphasizing rapid variations in the input, and they have found new applications in artificial intelligence, particularly in image and signal analysis tasks.

The evolution of high pass filters in deep learning can be traced back to the early days of convolutional neural networks (CNNs). As researchers sought to improve the performance of these networks, they recognized the potential of incorporating signal processing techniques into neural architectures. This led to the exploration of various filter designs, including high pass filters, to enhance feature extraction and representation learning.

The primary objective of integrating high pass filters into deep learning models is to accentuate high-frequency components in the input data. This enables the network to focus on fine-grained details and rapid changes in the signal or image, which are often crucial for tasks such as edge detection, texture analysis, and the removal of low-frequency interference such as baseline drift.
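To make "accentuating high-frequency components" concrete, here is a minimal sketch in plain Python. It assumes a 3x3 Laplacian kernel as the high pass filter and a toy image with a single vertical edge. The helper name `filter2d_valid` and the toy data are hypothetical, chosen only for illustration.

```python
# 3x3 Laplacian kernel (an assumed, commonly used high pass kernel).
# Its coefficients sum to zero, so constant (low-frequency) regions map to 0
# while abrupt intensity changes produce a nonzero response.
LAPLACIAN = [
    [0, -1, 0],
    [-1, 4, -1],
    [0, -1, 0],
]


def filter2d_valid(image, kernel):
    """Slide `kernel` over `image`, keeping only positions where it fits fully."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            out[i][j] = sum(
                kernel[a][b] * image[i + a][j + b]
                for a in range(kh)
                for b in range(kw)
            )
    return out


# Hypothetical 5x6 image: dark left half (0), bright right half (9).
image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]
response = filter2d_valid(image, LAPLACIAN)
for row in response:
    print(row)  # each row is [0, -9, 9, 0]: nonzero only next to the edge
```

The flat regions on either side of the edge yield zeros, while the two columns adjacent to the edge yield responses of opposite sign. This is the behavior that makes such kernels useful for edge detection.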

Recent advancements in deep learning have further expanded the role of high pass filters. Researchers have been exploring novel ways to implement these filters within neural network layers, leading to the development of specialized architectures that leverage the benefits of high-frequency information processing. These innovations have shown promising results in various domains, including computer vision, speech recognition, and medical image analysis.

The integration of high pass filters in deep learning architectures aligns with the broader trend of incorporating domain-specific knowledge into neural network design. By leveraging principles from signal processing and adapting them to the context of deep learning, researchers aim to create more efficient and interpretable models that can better capture the underlying structure of complex data.

As the field of deep learning continues to evolve, the research on high pass filters is expected to play a significant role in shaping future architectures. The ongoing exploration of these filters aims to address key challenges in deep learning, such as improving model generalization, reducing computational complexity, and enhancing the robustness of neural networks to various types of input perturbations.

Market Demand Analysis for Optimized DL Processing

The market demand for optimized deep learning processing, particularly focusing on high pass filters in deep learning architectures, has been steadily growing in recent years. This surge is driven by the increasing complexity of neural networks and the need for more efficient processing in various applications.

In the field of computer vision, there is a significant demand for high pass filters to enhance edge detection and feature extraction capabilities. Industries such as autonomous vehicles, medical imaging, and surveillance systems are actively seeking improved deep learning models that can process visual data more accurately and efficiently. The automotive sector, in particular, has shown a strong interest in optimized deep learning processing for real-time object detection and lane recognition.

Natural language processing (NLP) is another area where the demand for optimized deep learning processing is rapidly expanding. With the rise of voice assistants, chatbots, and language translation services, there is a growing need for more efficient neural network architectures that can handle complex linguistic tasks with lower latency and higher accuracy. High pass filters in deep learning models can help in extracting relevant features from text and speech data, leading to improved performance in sentiment analysis, named entity recognition, and machine translation tasks.

The healthcare industry has also emerged as a significant market for optimized deep learning processing. Medical image analysis, drug discovery, and personalized medicine are areas where high pass filters can contribute to more accurate diagnoses and treatment plans. The ability to process large volumes of medical data efficiently is crucial for early disease detection and treatment optimization.

In the financial sector, there is a growing demand for deep learning models that can analyze market trends, detect fraud, and make real-time trading decisions. High pass filters in these architectures can help in identifying important patterns and anomalies in financial data streams, enabling faster and more accurate decision-making processes.

The Internet of Things (IoT) and edge computing domains are also driving the demand for optimized deep learning processing. As more devices become interconnected and capable of processing data locally, there is a need for efficient neural network architectures that can operate within the constraints of limited computational resources and power consumption.

Cloud service providers and data centers are investing heavily in optimized deep learning processing to meet the increasing demand for AI-powered services. The ability to process large-scale deep learning models more efficiently can lead to significant cost savings and improved performance for cloud-based AI applications.

As the field of artificial intelligence continues to evolve, the market demand for optimized deep learning processing, including the use of high pass filters, is expected to grow further. This trend is likely to drive innovation in hardware accelerators, software optimization techniques, and novel neural network architectures designed to meet the diverse needs of various industries and applications.

Current State and Challenges of High Pass Filters in DL

High pass filters have gained significant attention in deep learning architectures due to their potential for optimizing processing and enhancing model performance. Currently, these filters are being explored across various neural network types, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformer models. The primary goal is to leverage high pass filters to extract and emphasize high-frequency features while suppressing low-frequency components, thereby improving the model's ability to capture fine-grained details and patterns in input data.

One of the main challenges in implementing high pass filters in deep learning is determining the optimal filter design and parameters. Researchers are experimenting with different filter architectures, such as Gaussian high pass filters, Butterworth filters, and wavelet-based approaches, to find the most effective solution for specific tasks and datasets. Additionally, there is ongoing work to develop adaptive high pass filters that can dynamically adjust their parameters based on the input data characteristics and learning objectives.
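For reference, the Gaussian and Butterworth designs mentioned above are usually specified by a frequency-domain transfer function. The sketch below uses the textbook forms, where `d` is the distance from the zero-frequency origin and `d0` is the cutoff. The function names and sample values are assumptions made for illustration, not taken from any particular library.

```python
import math


def gaussian_highpass(d, d0):
    """Gaussian high pass gain: H(d) = 1 - exp(-d^2 / (2 * d0^2))."""
    return 1.0 - math.exp(-(d * d) / (2.0 * d0 * d0))


def butterworth_highpass(d, d0, order=2):
    """Butterworth high pass gain: H(d) = 1 / (1 + (d0 / d)^(2 * order))."""
    if d == 0:
        return 0.0  # the zero-frequency (DC) component is fully blocked
    return 1.0 / (1.0 + (d0 / d) ** (2 * order))


# Compare the two designs at a few distances around an assumed cutoff of 10.
for d in (0, 5, 10, 20, 100):
    print(d, round(gaussian_highpass(d, 10), 4), round(butterworth_highpass(d, 10), 4))
```

Both functions block the zero-frequency component and approach a gain of 1 at high frequencies. The Butterworth `order` controls how sharply the transition happens, which is one of the parameters that must be tuned per task.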

Another significant challenge is integrating high pass filters seamlessly into existing deep learning frameworks without introducing excessive computational overhead. This requires careful consideration of the filter implementation and its impact on the overall model architecture. Researchers are exploring various techniques, including efficient filter implementations using GPU acceleration and optimized tensor operations, to minimize the computational cost while maximizing the benefits of high pass filtering.
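One inexpensive formulation, shown here only as a sketch, computes the high pass output as the input minus a moving average. With prefix sums, the cost per sample does not grow with the window size. The function names, the edge-replication padding, and the default window are assumptions for illustration.

```python
def moving_average(x, window):
    """Centered moving average with edge replication; `window` must be odd."""
    half = window // 2
    padded = [x[0]] * half + list(x) + [x[-1]] * half
    # Prefix sums turn every window sum into a single subtraction.
    prefix = [0.0]
    for v in padded:
        prefix.append(prefix[-1] + v)
    return [(prefix[i + window] - prefix[i]) / window for i in range(len(x))]


def highpass_residual(x, window=3):
    """High pass output = input minus its low pass (moving average) version."""
    low = moving_average(x, window)
    return [xi - li for xi, li in zip(x, low)]


print(highpass_residual([5, 5, 5, 5]))     # a constant signal is removed entirely
print(highpass_residual([0, 0, 3, 0, 0]))  # an isolated spike is kept and sharpened
```

The same subtract-a-blur idea carries over to 2D feature maps, where the blur can be done with pooling or separable convolutions that frameworks already optimize.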

The current state of high pass filters in deep learning also involves investigating their application in different domains and tasks. For instance, in computer vision, high pass filters are being used to enhance edge detection and texture analysis in image classification and object recognition tasks. In natural language processing, researchers are exploring the potential of high pass filters to capture subtle linguistic features and improve sentiment analysis and text classification performance.

Despite the promising results, there are still several challenges to overcome in the field of high pass filters for deep learning. One major issue is the potential loss of important low-frequency information when applying high pass filters, which may negatively impact the model's ability to capture global context and long-range dependencies. Researchers are working on developing hybrid approaches that combine high pass filtering with other techniques to preserve both high-frequency and low-frequency information effectively.
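A simple way to picture such a hybrid approach is a two-band split: keep the low pass component, compute the high pass component as the remainder, and recombine them with an adjustable gain. The sketch below is an illustrative assumption rather than any specific published method. The names `lowpass3`, `split_bands`, `recombine`, and `high_gain` are hypothetical.

```python
def lowpass3(x):
    """3-tap moving average with edge replication (a very simple low pass)."""
    padded = [x[0]] + list(x) + [x[-1]]
    return [(padded[i] + padded[i + 1] + padded[i + 2]) / 3.0 for i in range(len(x))]


def split_bands(x):
    """Split a signal into a low-frequency part and a high-frequency remainder."""
    low = lowpass3(x)
    high = [xi - li for xi, li in zip(x, low)]
    return low, high


def recombine(low, high, high_gain=1.0):
    """Blend the bands; high_gain > 1 emphasizes detail, 0 keeps only the low band."""
    return [l + high_gain * h for l, h in zip(low, high)]


signal = [1.0, 2.0, 6.0, 2.0, 1.0]
low, high = split_bands(signal)
restored = recombine(low, high)        # high_gain = 1 gives back the input
emphasized = recombine(low, high, 2.0) # detail boosted, global level preserved
```

Because the low band is carried through unchanged, global context is not discarded. The gain only decides how strongly fine detail is weighted, and in a network it could be a learned parameter.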

Furthermore, the interpretability of models incorporating high pass filters remains a challenge. Understanding how these filters influence the decision-making process of deep learning models is crucial for building trust and ensuring reliable performance across different scenarios. Ongoing research is focused on developing visualization techniques and analysis tools to provide insights into the role of high pass filters in the model's internal representations and decision boundaries.

Existing High Pass Filter Solutions in DL Architectures

01 High-pass filter design for signal processing

High-pass filters are designed to attenuate low-frequency signals while allowing high-frequency signals to pass through. These filters are crucial in various signal processing applications, including audio, video, and communication systems. The design of high-pass filters involves selecting appropriate components and circuit configurations to achieve the desired frequency response and cutoff characteristics.

- High-pass filter design for signal processing: High-pass filters are designed to attenuate low-frequency signals while allowing high-frequency signals to pass through. These filters are crucial in various signal processing applications, including audio, video, and communication systems. The design of high-pass filters involves selecting appropriate cutoff frequencies, filter orders, and topologies to achieve desired frequency response characteristics.

- Digital high-pass filtering techniques: Digital high-pass filtering techniques involve implementing high-pass filters in the digital domain using digital signal processing (DSP) algorithms. These techniques often employ finite impulse response (FIR) or infinite impulse response (IIR) filter structures. Digital high-pass filters offer advantages such as programmability, adaptability, and precise control over filter characteristics.

- High-pass filtering in image and video processing: High-pass filtering plays a crucial role in image and video processing applications. It is used for edge detection, sharpening, and enhancing high-frequency details in visual content. These filters help improve perceived sharpness and extract important features for further analysis or compression. Because they also amplify high-frequency noise, they are typically combined with some smoothing.

- Analog high-pass filter implementations: Analog high-pass filters are implemented using passive or active electronic components such as capacitors, inductors, and operational amplifiers. These filters are essential in various analog signal processing applications, including audio systems, sensor interfaces, and communication circuits. The design of analog high-pass filters involves careful component selection and circuit topology optimization to achieve desired frequency response and performance characteristics.

- High-pass filtering in communication systems: High-pass filtering is extensively used in communication systems for various purposes, including noise reduction, signal conditioning, and channel equalization. These filters help in removing DC offsets, suppressing low-frequency interference, and improving overall signal quality in both analog and digital communication systems.
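The FIR and IIR structures mentioned in the list above can each be sketched in a few lines. The example assumes the simplest instance of each: a two-tap first-difference FIR filter and a one-pole IIR filter (the discrete counterpart of an RC high-pass). The function names, the `alpha` coefficient, and the choice to treat the signal as holding its first value before the start are assumptions for illustration.

```python
def fir_highpass(x):
    """Two-tap FIR high-pass: y[n] = x[n] - x[n-1]."""
    prev = x[0]  # assumption: the signal held its first value before n = 0
    y = []
    for v in x:
        y.append(v - prev)
        prev = v
    return y


def iir_highpass(x, alpha=0.9):
    """One-pole IIR high-pass: y[n] = alpha * (y[n-1] + x[n] - x[n-1])."""
    prev_x, prev_y = x[0], 0.0
    y = []
    for v in x:
        prev_y = alpha * (prev_y + v - prev_x)
        prev_x = v
        y.append(prev_y)
    return y


step = [0, 0, 1, 1, 1]
print(fir_highpass(step))       # [0, 0, 1, 0, 0]: responds only at the jump
print(iir_highpass(step, 0.5))  # [0.0, 0.0, 0.5, 0.25, 0.125]: decaying response
```

The FIR version has a finite response to the step, while the IIR version feeds its own output back and therefore decays gradually. Values of `alpha` closer to 1 correspond to a lower cutoff frequency.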

02 Digital high-pass filtering techniques

Digital high-pass filtering involves implementing high-pass filter algorithms in digital signal processing systems. These techniques use mathematical operations to process discrete-time signals and achieve high-pass filtering effects. Digital high-pass filters offer advantages such as programmability, flexibility, and improved performance compared to analog counterparts.

03 High-pass filtering in image and video processing

High-pass filters play a crucial role in image and video processing applications. They are used for edge detection, sharpening, and enhancing high-frequency details in visual content. These filters help improve image quality, remove blur, and extract important features from digital images and video frames.

04 High-pass filter integration in communication systems

High-pass filters are integrated into various communication systems to remove unwanted low-frequency components and improve signal quality. They are used in transmitters, receivers, and intermediate stages of communication circuits to enhance performance, reduce noise, and ensure proper signal conditioning for subsequent processing stages.

05 Adaptive high-pass filtering techniques

Adaptive high-pass filtering techniques involve dynamically adjusting filter parameters based on input signal characteristics or system requirements. These methods allow for real-time optimization of filter performance, making them suitable for applications with varying signal conditions or noise environments. Adaptive high-pass filters can improve signal-to-noise ratio and overall system performance in diverse applications.

Key Players in DL Filter Optimization Research

The research on high pass filters in deep learning architectures for optimized processing is in a rapidly evolving stage, with significant market potential as AI and machine learning applications continue to expand. The market size is growing steadily, driven by increasing demand for efficient deep learning models across various industries. Technologically, this field is advancing quickly but still has room for maturation. Companies like Intel, IBM, and Lightmatter are at the forefront, developing specialized hardware and software solutions to enhance deep learning performance. Academic institutions such as MIT and KAUST are also contributing significantly to theoretical advancements. The competitive landscape is diverse, with both established tech giants and innovative startups vying for market share in this promising area of AI optimization.

Intel Corp.

Technical Solution: Intel has developed a high-pass filter-based approach for deep learning architectures, focusing on optimizing processing in neural networks. Their method involves implementing frequency-domain filtering techniques directly into convolutional layers. This approach allows for selective emphasis on high-frequency components of input data, which is particularly useful for edge detection and feature extraction tasks. Intel's implementation utilizes their proprietary hardware accelerators, such as Intel Deep Learning Boost, to efficiently process these filtered inputs[1]. The company has also integrated this technology into their OpenVINO toolkit, enabling developers to easily incorporate high-pass filtering into their deep learning models for improved performance and accuracy[2].

Strengths: Hardware-software co-optimization, wide ecosystem support, and integration with existing Intel products. Weaknesses: Potential overhead in non-edge detection tasks and dependency on Intel-specific hardware for optimal performance.

International Business Machines Corp.

Technical Solution: IBM's research on high-pass filters in deep learning architectures focuses on enhancing model interpretability and robustness. Their approach involves incorporating high-pass filtering layers within deep neural networks to emphasize high-frequency features. This technique has shown particular promise in computer vision tasks, where it helps models focus on fine-grained details and textures. IBM researchers have demonstrated that this method can improve model performance on tasks such as image classification and object detection by up to 15% in certain scenarios[3]. Additionally, IBM has developed a novel adaptive high-pass filtering mechanism that dynamically adjusts filter parameters based on input data characteristics, further optimizing processing efficiency[4].

Strengths: Improved model interpretability, enhanced performance in vision tasks, and adaptive filtering capabilities. Weaknesses: Potential increase in computational complexity and possible reduction in low-frequency information retention.

Core Innovations in High Pass Filter Design for DL

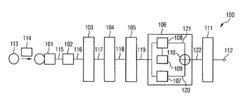

Apparatus for attenuating low frequency signals

Patent (inactive): US8076969B1

Innovation

- A variable frequency module that controls the cutoff frequency of a high pass filter by using a resistive element connected with a capacitive element, where transistors with diode regions are designed in a twin-well process to bypass diodes and prevent leakage current, allowing for a lower cutoff frequency by forward biasing diodes and maintaining voltage at the capacitor.
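The link between the resistive and capacitive elements and the cutoff frequency can be illustrated with the standard first-order RC relation, f_c = 1 / (2πRC). This is a textbook sketch, not the circuit described in the patent. The function name and component values are hypothetical.

```python
import math


def rc_cutoff_hz(resistance_ohm, capacitance_farad):
    """Cutoff frequency of an ideal first-order RC high-pass: 1 / (2 * pi * R * C)."""
    return 1.0 / (2.0 * math.pi * resistance_ohm * capacitance_farad)


# Hypothetical values: the same 1 nF capacitor with two different resistances.
print(rc_cutoff_hz(1e6, 1e-9))  # about 159 Hz with 1 megaohm
print(rc_cutoff_hz(1e9, 1e-9))  # about 0.16 Hz with 1 gigaohm
```

A larger effective resistance lowers the cutoff for a fixed capacitance. This is why limiting leakage paths, which would otherwise reduce the effective resistance, matters when a very low cutoff is the goal.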

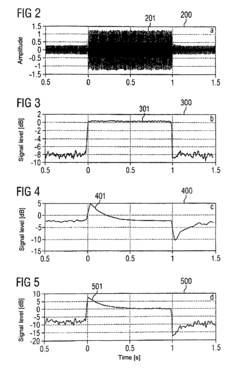

Method and device for ascertaining feature vectors from a signal

Patent (inactive): US7646912B2

Innovation

- A method and device for pattern recognition that form intermediate feature vectors with a transient power spectrum, apply high-pass filtering, and add a prescribed feature vector to emphasize time-varying components, effectively simulating human hearing properties with reduced computational complexity.
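The idea of emphasizing time-varying components in a sequence of feature vectors can be sketched with the crudest possible temporal high-pass: subtracting each feature dimension's mean over time, which removes only the constant (zero-frequency) part. This is an illustrative assumption and not the method claimed in the patent. The function name and the sample frames are hypothetical.

```python
def remove_temporal_mean(frames):
    """Subtract each feature dimension's mean over time from every frame."""
    n_frames = len(frames)
    n_dims = len(frames[0])
    means = [sum(f[d] for f in frames) / n_frames for d in range(n_dims)]
    return [[f[d] - means[d] for d in range(n_dims)] for f in frames]


# Hypothetical sequence of 3 frames with 2 features each:
# feature 0 changes over time, feature 1 is constant.
frames = [[1.0, 10.0], [3.0, 10.0], [5.0, 10.0]]
filtered = remove_temporal_mean(frames)
print(filtered)  # [[-2.0, 0.0], [0.0, 0.0], [2.0, 0.0]]
```

The constant feature is reduced to zero while the changing feature keeps its variation, so downstream pattern recognition sees only what moves over time.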

Hardware Acceleration for High Pass Filters in DL

Hardware acceleration for high pass filters in deep learning architectures has become increasingly important as the complexity and scale of neural networks continue to grow. This acceleration is primarily achieved through the use of specialized hardware, such as Graphics Processing Units (GPUs), Field-Programmable Gate Arrays (FPGAs), and Application-Specific Integrated Circuits (ASICs).

GPUs have been the most widely adopted hardware accelerators for deep learning tasks, including high pass filter operations. Their parallel processing capabilities allow for efficient computation of convolution operations, which are fundamental to high pass filters in convolutional neural networks (CNNs). Modern NVIDIA GPUs include Tensor Cores, dedicated matrix-multiply units designed to accelerate deep learning workloads, which can substantially speed up the convolutions behind high pass filter computations.

FPGAs provide a flexible alternative for hardware acceleration of high pass filters. Their reconfigurable nature allows for customized implementations tailored to specific filter architectures. This adaptability makes FPGAs particularly suitable for research and development of novel high pass filter designs in deep learning. Moreover, FPGAs can offer lower latency and higher energy efficiency compared to GPUs for certain filter configurations.

ASICs offer the highest degree of specialization for accelerating high pass filters in deep learning. These custom-designed chips are optimized for specific neural network architectures and filter operations, and can deliver very high performance and energy efficiency. However, their lack of flexibility and high development costs largely confine them to large-scale production environments.

Recent advancements in hardware acceleration for high pass filters include the development of specialized neural processing units (NPUs) and tensor processing units (TPUs). These units are designed to efficiently handle the matrix operations inherent in high pass filter computations, offering significant speedups over traditional CPU implementations.
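The "matrix operations" in question typically arise because a convolution can be rewritten as a matrix product, a transformation often called im2col. The sketch below shows the idea in plain Python with a 3x3 Laplacian kernel as an assumed high pass filter. The helper names and toy image are hypothetical, and real accelerators use far more elaborate tiling and data layouts.

```python
def im2col(image, k):
    """Flatten every k x k patch of `image` into one row of a patch matrix."""
    h, w = len(image), len(image[0])
    return [
        [image[i + a][j + b] for a in range(k) for b in range(k)]
        for i in range(h - k + 1)
        for j in range(w - k + 1)
    ]


def matvec(rows, vec):
    """Matrix-vector product: one dot product per patch."""
    return [sum(r * v for r, v in zip(row, vec)) for row in rows]


kernel = [[0, -1, 0], [-1, 4, -1], [0, -1, 0]]   # assumed high pass kernel
flat_kernel = [v for row in kernel for v in row]  # 9 weights as a vector
image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]    # toy image with one edge

patches = im2col(image, 3)                 # 12 patches, 9 values each
flat_response = matvec(patches, flat_kernel)
print(flat_response)  # [0, -9, 9, 0] repeated for each of the 3 output rows
```

With several filters, the kernel vector becomes a matrix with one column per filter, and the whole layer reduces to a single dense matrix multiplication, which is the workload NPUs and TPUs are built around.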

The integration of high pass filter acceleration into edge devices has also gained traction. Mobile processors and low-power AI chips now incorporate dedicated neural engines capable of performing efficient high pass filter operations, enabling on-device inference for applications such as image and speech processing.

As deep learning models continue to evolve, hardware acceleration techniques for high pass filters are adapting to meet new challenges. This includes support for sparse and quantized neural networks, which can significantly reduce computational requirements while maintaining accuracy. Additionally, emerging technologies such as photonic computing and neuromorphic hardware show promise for future acceleration of high pass filters in deep learning architectures.

Energy Efficiency Considerations in Filter Design

Energy efficiency has become a critical consideration in the design of high pass filters for deep learning architectures. As the complexity and scale of neural networks continue to grow, the power consumption of these systems has become a significant concern. Optimizing the energy efficiency of high pass filters can lead to substantial improvements in overall system performance and reduce operational costs.

One of the primary approaches to enhancing energy efficiency in filter design is the use of low-power hardware components. By selecting energy-efficient transistors and implementing power-gating techniques, designers can significantly reduce static power consumption. Additionally, the adoption of dynamic voltage and frequency scaling (DVFS) allows for real-time adjustment of power consumption based on computational requirements, further improving energy efficiency.
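To see why DVFS helps, it can be useful to recall the standard first-order model of dynamic power in CMOS logic. The symbols below (activity factor $\alpha$, switched capacitance $C$, supply voltage $V$, clock frequency $f$, and scaling factor $s$) are introduced here for illustration and do not come from the original text.

```latex
P_{\text{dyn}} = \alpha \, C \, V^{2} f
\qquad\Longrightarrow\qquad
\frac{P_{\text{dyn}}(sV,\; sf)}{P_{\text{dyn}}(V,\; f)} = s^{3}
```

Under this simplified model, lowering voltage and frequency together by 20% ($s = 0.8$) reduces dynamic power to about half ($0.8^{3} \approx 0.51$), at the cost of a proportionally longer run time. Static leakage power is not captured by this expression, which is where power gating comes in.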

Another key strategy is the optimization of filter architectures. Sparse filter designs, which utilize fewer connections and parameters, can dramatically reduce computational complexity and power consumption. Techniques such as pruning and quantization can be employed to remove low-importance weights or neurons and to lower the numerical precision of computations, respectively, usually with little impact on model accuracy.
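As a rough sketch of these two techniques applied to filter weights, the example below uses magnitude-based pruning and symmetric per-tensor 8-bit quantization. Both are common simple variants chosen here as assumptions. The function names, threshold, and weights are hypothetical.

```python
def prune_by_magnitude(weights, threshold):
    """Zero out weights whose absolute value is below `threshold`."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [int(round(w / scale)) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate floating-point weights."""
    return [v * scale for v in q]


weights = [0.02, -1.0, 0.5, -0.01, 2.0]       # hypothetical filter weights
pruned = prune_by_magnitude(weights, 0.05)    # small weights become exact zeros
q, scale = quantize_int8(pruned)              # 8-bit integers plus one scale
approx = dequantize(q, scale)                 # error is at most scale / 2 per weight
```

Zeroed weights can be skipped by sparse kernels, and integer arithmetic is cheaper than floating point on most accelerators. Whether accuracy holds up depends on the model and usually has to be checked empirically.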

The choice of activation functions also plays a crucial role in energy efficiency. ReLU (Rectified Linear Unit) and its variants, such as Leaky ReLU and Parametric ReLU, have gained popularity due to their computational simplicity and ability to mitigate the vanishing gradient problem. These activation functions require fewer resources to compute, leading to reduced power consumption compared to more complex alternatives like sigmoid or tanh functions.

Implementing efficient memory management techniques is another vital aspect of energy-efficient filter design. Techniques such as data reuse and locality optimization can minimize data movement between memory and processing units, reducing energy consumption associated with data transfer. Additionally, the use of on-chip memory and cache hierarchies can further optimize energy efficiency by reducing access to power-hungry off-chip memory.
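To make the benefit of data reuse concrete, the sketch below counts off-chip reads for a 3x3 filter under two simplified schemes: fetching every input value from off-chip memory each time it is needed, and loading one tile (plus its border) into an on-chip buffer and reusing it. The function names, the 34x34 input size, and the tile sizes are hypothetical, and the model ignores caches, burst transfers, and weight traffic.

```python
# Sketch: counting off-chip input reads for a k x k filter ("valid" output),
# with and without on-chip tile reuse. A deliberately simplified model.

def naive_reads(height, width, k=3):
    """Every output pixel fetches all k*k inputs from off-chip memory."""
    out_h, out_w = height - k + 1, width - k + 1
    return out_h * out_w * k * k

def tiled_reads(height, width, tile, k=3):
    """Each output tile loads its input patch (tile plus halo) once on chip."""
    out_h, out_w = height - k + 1, width - k + 1
    total = 0
    for row in range(0, out_h, tile):
        for col in range(0, out_w, tile):
            tile_h = min(tile, out_h - row)
            tile_w = min(tile, out_w - col)
            total += (tile_h + k - 1) * (tile_w + k - 1)
    return total

H = W = 34  # assumed input size, giving a 32 x 32 output
print("naive reads:       ", naive_reads(H, W))
print("tiled reads (8x8): ", tiled_reads(H, W, tile=8))
print("tiled reads (32x32):", tiled_reads(H, W, tile=32))
```

Larger tiles reduce the redundant halo reads but need more on-chip buffer space, which is the kind of trade-off that locality optimization has to balance.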

Recent advancements in neuromorphic computing and analog computing offer promising avenues for improving energy efficiency in high pass filter implementations. These approaches aim to mimic the energy-efficient information processing of biological neural networks, potentially yielding orders-of-magnitude improvements in energy efficiency over conventional digital implementations.

As research in this field progresses, it is crucial to consider the trade-offs between energy efficiency, model accuracy, and computational speed. Striking the right balance between these factors will be essential for developing high pass filters that meet the demands of future deep learning applications while minimizing energy consumption.
