How LiDAR SLAM Re-Initializes After Aggressive Motion And Occlusion?

SEP 19, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

LiDAR SLAM Re-Initialization Background and Objectives

LiDAR SLAM (Simultaneous Localization and Mapping) technology has evolved significantly over the past decade, transitioning from experimental research to practical applications in autonomous vehicles, robotics, and mapping systems. The evolution of this technology has been characterized by increasing accuracy, robustness, and computational efficiency, enabling real-time operation in dynamic environments.

The challenge of re-initialization after aggressive motion or occlusion represents a critical frontier in LiDAR SLAM development. Historically, early SLAM systems were highly vulnerable to failure when subjected to rapid movements or when significant portions of the environment became obscured. These limitations severely restricted the practical deployment of such systems in real-world scenarios where unpredictable movements and occlusions are commonplace.

Recent technological advancements have focused on addressing these limitations through various approaches, including improved feature extraction algorithms, multi-sensor fusion techniques, and machine learning-based prediction models. The integration of inertial measurement units (IMUs) with LiDAR sensors has emerged as a particularly promising direction, allowing systems to maintain tracking during brief periods of sensor deprivation.

The primary objective of current research in LiDAR SLAM re-initialization is to develop robust algorithms capable of recovering accurate localization and mapping capabilities following system failures induced by aggressive motion or occlusion events. This includes the ability to quickly re-establish correspondence between current sensor readings and previously constructed maps, even when significant changes in perspective or environmental conditions have occurred.

Secondary objectives include minimizing the computational resources required for re-initialization processes, reducing the time needed to recover from failures, and ensuring seamless integration with existing SLAM frameworks. These goals are driven by the practical requirements of applications such as autonomous navigation, where system failures can have serious safety implications.

The trajectory of LiDAR SLAM technology is increasingly moving toward systems that can operate continuously in challenging, dynamic environments without human intervention. This evolution is supported by parallel advancements in sensor technology, including the development of solid-state LiDAR systems with improved reliability and reduced cost, as well as more powerful embedded computing platforms capable of running sophisticated algorithms in real-time.

As the technology continues to mature, the focus is shifting from basic functionality to enhanced resilience against various forms of disruption, with re-initialization capabilities representing a key component of this resilience. The ultimate goal is to develop SLAM systems that can maintain consistent performance across a wide range of operating conditions, thereby enabling broader adoption across industries and applications.

Market Demand for Robust LiDAR SLAM Systems

The global market for robust LiDAR SLAM systems has been experiencing significant growth, driven primarily by the expanding applications in autonomous vehicles, robotics, and augmented reality. The demand for systems that can effectively re-initialize after aggressive motion and occlusion is particularly acute, as these represent common failure points in real-world deployments.

In the autonomous vehicle sector, market research indicates that reliable localization and mapping capabilities are critical for Level 3+ autonomy. Vehicle manufacturers and technology providers are actively seeking SLAM solutions that maintain performance integrity during challenging scenarios such as sudden acceleration, sharp turns, or when sensors are temporarily blocked by other vehicles, pedestrians, or environmental elements.

The robotics industry presents another substantial market segment, with warehouse automation, delivery robots, and service robots requiring dependable navigation in dynamic environments. These applications frequently encounter situations where sensors become temporarily occluded or experience rapid movements, necessitating robust re-initialization capabilities to maintain operational continuity.

Consumer electronics and AR/VR applications represent an emerging market with stringent requirements for SLAM technology that can handle user movements that are often unpredictable and potentially rapid. The ability to quickly recover mapping and tracking after sensor occlusion directly impacts user experience quality in these applications.

Market analysis reveals that current solutions often fail under these challenging conditions, creating significant opportunities for technologies that address these limitations. End-users across industries report that system failures during aggressive motion or occlusion events lead to operational downtime, safety concerns, and reduced trust in autonomous systems.

The defense and security sectors also demonstrate growing demand for robust SLAM systems capable of operating in non-ideal conditions. These applications frequently involve rapid deployment scenarios where sensors may experience extreme motion profiles or intentional interference.

From a geographical perspective, North America and Asia-Pacific regions show the strongest market demand, with Europe following closely. This distribution aligns with regional concentrations of autonomous vehicle development, robotics manufacturing, and technology innovation centers.

Industry forecasts project continued market expansion as applications diversify and performance requirements become more stringent. The ability to maintain localization accuracy and quickly recover from system failures represents a key differentiator for technology providers in this competitive landscape.

Technical Challenges in Motion Recovery and Occlusion Handling

LiDAR SLAM systems face significant challenges when dealing with aggressive motion and occlusion scenarios. The primary technical hurdle in motion recovery is motion distortion. A mechanically scanning LiDAR accumulates each scan over a full sweep (typically about 100 ms at a 10 Hz rotation rate), so the points within one scan are captured from different sensor poses. When a robot or autonomous vehicle undergoes rapid acceleration, sharp turns, or sudden stops, the pose changes appreciably within a single sweep. The resulting point cloud is distorted, and scan misalignment and feature mismatches follow.
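A common remedy is to "deskew" each sweep before matching. Every point is re-expressed in the sensor frame at the end of the sweep, using an estimate of how the sensor moved while the sweep was being captured. The sketch below is a simplified 2D version built on several assumptions. Each point is assumed to carry the fraction of sweep time that had elapsed when it was measured. The sweep-level motion `end_pose` is assumed to be known (for example, integrated from an IMU), and that motion is interpolated linearly. All names are illustrative and do not come from a specific library.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def rotate(p: Point, theta: float) -> Point:
    """Rotate a 2D point counter-clockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1], s * p[0] + c * p[1])


def deskew_scan(points: Sequence[Point], stamps: Sequence[float],
                end_pose: Tuple[float, float, float]) -> List[Point]:
    """Re-express every point in the sensor frame at the end of the sweep.

    points:   (x, y) in the sensor frame at the instant each point was measured
    stamps:   fraction of the sweep elapsed when each point was measured, in [0, 1]
    end_pose: (dx, dy, dtheta), sensor motion over the whole sweep, expressed in
              the sweep-start frame (assumed known, e.g. from IMU integration)
    """
    dx, dy, dth = end_pose
    corrected = []
    for p, s in zip(points, stamps):
        # Sensor pose at this point's capture time, linearly interpolated (assumption).
        q = rotate(p, s * dth)
        q = (q[0] + s * dx, q[1] + s * dy)          # point in the sweep-start frame
        # Express the point in the sweep-end frame.
        corrected.append(rotate((q[0] - dx, q[1] - dy), -dth))
    return corrected


# Two returns from the same wall: 5 m ahead at the start of the sweep and 4 m ahead
# at the end (the sensor drove 1 m forward). After deskewing, both agree.
print(deskew_scan([(5.0, 0.0), (4.0, 0.0)], [0.0, 1.0], (1.0, 0.0, 0.0)))
# prints [(4.0, 0.0), (4.0, 0.0)]
```

Linear interpolation assumes roughly constant velocity within one sweep. Under the most aggressive motion, per-point IMU propagation is a more faithful alternative.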

Inertial measurement unit (IMU) integration, while helpful in compensating for motion, introduces its own challenges. Gyroscope and accelerometer biases drift over time. Because IMU measurements are integrated (once for orientation, twice for position), even small uncorrected biases produce errors that grow with time and compound during aggressive maneuvers. Current sensor fusion algorithms struggle to maintain accuracy when motion exceeds what the motion model or the IMU's measurement range can accommodate, resulting in degraded localization performance.
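The growth rates follow directly from the integration. A constant gyroscope bias is integrated once, so attitude error grows linearly with time. A constant accelerometer bias is integrated twice, so position error grows quadratically. The back-of-the-envelope sketch below uses illustrative bias values, not figures for any particular IMU. It also ignores the additional error from gravity being projected through a tilted attitude estimate.

```python
def attitude_drift_deg(gyro_bias_deg_per_s: float, seconds: float) -> float:
    """Attitude error from a constant gyro bias: integrated once, grows as t."""
    return gyro_bias_deg_per_s * seconds


def position_drift_m(accel_bias_m_per_s2: float, seconds: float) -> float:
    """Position error from a constant accelerometer bias: integrated twice, grows as t^2 / 2."""
    return 0.5 * accel_bias_m_per_s2 * seconds ** 2


# Illustrative biases only: 0.01 deg/s (gyro) and 0.01 m/s^2 (accelerometer).
for t in (0.1, 1.0, 10.0):
    print(f"t={t:>5.1f} s  attitude={attitude_drift_deg(0.01, t):.3f} deg  "
          f"position={position_drift_m(0.01, t):.5f} m")
```

With these numbers, a 100 ms LiDAR gap costs about 0.05 mm of position error, but a 10 s gap costs 0.5 m. This is why IMU-only bridging is usually limited to short outages.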

Occlusion presents another layer of complexity for LiDAR SLAM systems. When key environmental features become temporarily obscured by dynamic objects or structural elements, the system loses critical reference points needed for accurate pose estimation. This problem is particularly acute in dense urban environments or crowded indoor spaces where occlusions occur frequently and unpredictably.

The re-initialization process after losing track faces computational efficiency constraints. Global registration approaches such as random sample consensus (RANSAC) over point-cloud feature correspondences become computationally expensive in large-scale environments. The number of candidate correspondences grows with map size, the fraction of correct (inlier) correspondences shrinks, and the number of RANSAC iterations required rises steeply as that fraction falls. This creates a fundamental trade-off between re-initialization speed and accuracy that current systems have not fully resolved.
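The standard RANSAC sample-count formula makes this cost concrete. Suppose a fraction w of the correspondences are inliers, and you want, with probability p, at least one minimal sample of s correspondences that are all inliers. RANSAC then needs about N = ceil(log(1 - p) / log(1 - w^s)) iterations. The sketch assumes s = 3 (three point correspondences fix a 3D rigid transform) and uses illustrative inlier ratios. It does not model the additional cost of scoring each hypothesis against a large map.

```python
import math


def ransac_iterations(confidence: float, inlier_ratio: float, sample_size: int) -> int:
    """Samples needed so that, with probability `confidence`, at least one
    minimal sample contains only inlier correspondences."""
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be in (0, 1)")
    if not 0.0 < inlier_ratio <= 1.0:
        raise ValueError("inlier_ratio must be in (0, 1]")
    p_all_inliers = inlier_ratio ** sample_size
    if p_all_inliers >= 1.0:
        return 1
    # log1p(-x) = log(1 - x), accurate even when x is tiny.
    return math.ceil(math.log1p(-confidence) / math.log1p(-p_all_inliers))


# 3-point samples (enough to fix a 3D rigid transform), 99% confidence.
for w in (0.5, 0.2, 0.1, 0.05):
    print(f"inlier ratio {w:.2f}: {ransac_iterations(0.99, w, 3)} iterations")
```

An inlier ratio of 0.5 needs 35 iterations, while 0.1 needs over 4,600. For small ratios, halving w multiplies N by roughly 2^3 = 8 with 3-point samples.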

Loop closure detection, essential for correcting accumulated errors, becomes unreliable under aggressive motion conditions. The distorted point clouds generated during rapid movements often fail to match with previously stored map data, preventing effective loop closure and map correction.

Feature extraction and matching algorithms show limited robustness on partially occluded or motion-distorted point clouds. Current methods rely heavily on geometric consistency assumptions that break down during aggressive maneuvers, leading to feature association failures and subsequent localization errors.
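The smoothness score from LOAM, a widely used feature extractor, illustrates this dependency. Each point is scored by how far it deviates from its neighbours along the same scan line. High-score points become edge features and low-score points become planar features. Motion distortion and partial occlusion both perturb that neighbourhood, so the same surface can be classified differently from one scan to the next. The sketch below is a simplified 1D variant that uses ranges along one ring instead of 3D coordinates. The window size and thresholds are illustrative assumptions.

```python
from typing import Dict, List, Sequence, Tuple


def ring_smoothness(ranges: Sequence[float], half_window: int = 5) -> Dict[int, float]:
    """Smoothness score per point on one ring (LOAM-style, simplified to ranges).

    Only points with a full window on both sides are scored.
    """
    scores = {}
    for i in range(half_window, len(ranges) - half_window):
        r_i = ranges[i]
        diff = sum(ranges[j] - r_i
                   for j in range(i - half_window, i + half_window + 1) if j != i)
        scores[i] = abs(diff) / (2 * half_window * r_i)
    return scores


def split_features(scores: Dict[int, float], edge_thresh: float = 0.1,
                   plane_thresh: float = 0.01) -> Tuple[List[int], List[int]]:
    """High scores -> edge candidates, low scores -> planar candidates (illustrative thresholds)."""
    edges = sorted(i for i, c in scores.items() if c > edge_thresh)
    planes = sorted(i for i, c in scores.items() if c < plane_thresh)
    return edges, planes


# A flat wall at 10 m with one pole at 5 m in the middle of the ring.
ring = [10.0] * 41
ring[20] = 5.0
edges, planes = split_features(ring_smoothness(ring))
print(edges, len(planes))  # the pole's neighbours are neither edge nor plane
```

The points next to the pole land between the two thresholds because the pole sits inside their window. A single foreground object, such as an occluder, therefore removes usable planar features around it.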

Map management presents additional challenges during re-initialization. Determining which portions of the previously built map remain valid after significant motion or occlusion events requires sophisticated change detection algorithms that can differentiate between temporary occlusions and permanent environmental changes.
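A common building block for this distinction is per-voxel occupancy evidence accumulated in log-odds form. An occluded voxel receives no new evidence because no ray reaches it. A voxel whose object has genuinely disappeared, by contrast, is repeatedly crossed by rays that end behind it, so it accumulates "miss" evidence. The sketch below assumes a ray-casting step (not shown) has already labelled each observed voxel as a hit or a miss. The increments, clamping limits and thresholds are illustrative.

```python
import math
from typing import Dict, Tuple

Voxel = Tuple[int, int, int]

# Illustrative log-odds increments and clamping bounds (tuning assumptions).
L_HIT, L_MISS = 0.85, -0.4
L_MIN, L_MAX = -4.0, 4.0


class VoxelEvidence:
    def __init__(self) -> None:
        self.log_odds: Dict[Voxel, float] = {}

    def observe(self, voxel: Voxel, hit: bool) -> None:
        """Add one hit (ray ended here) or miss (ray passed through) observation."""
        value = self.log_odds.get(voxel, 0.0) + (L_HIT if hit else L_MISS)
        # Clamping keeps long-observed voxels able to change state later.
        self.log_odds[voxel] = max(L_MIN, min(L_MAX, value))

    def state(self, voxel: Voxel, occupied: float = 0.7, free: float = 0.3) -> str:
        p = 1.0 / (1.0 + math.exp(-self.log_odds.get(voxel, 0.0)))
        if p >= occupied:
            return "occupied"
        if p <= free:
            return "free"
        return "unknown"


grid = VoxelEvidence()
parked_car = (12, 3, 0)
for _ in range(10):
    grid.observe(parked_car, hit=True)    # car observed for a while
# Temporarily occluded: no observations arrive, so the evidence is unchanged.
print(grid.state(parked_car))             # occupied
for _ in range(15):
    grid.observe(parked_car, hit=False)   # rays now pass through: the car has left
print(grid.state(parked_car))             # free
```

With these values, the clamped voxel flips to "free" after 13 misses. Without clamping, the ten hits would sum to 8.5 log-odds units, and 24 misses would be needed.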

The absence of standardized benchmarks specifically designed to evaluate SLAM performance under aggressive motion and occlusion scenarios further complicates progress in this area. Without consistent evaluation metrics, comparing different technical approaches becomes difficult, slowing the overall advancement of robust solutions.
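Until such benchmarks exist, a workable stopgap is to report standard trajectory accuracy alongside explicit recovery statistics. The sketch below computes two quantities. The first is absolute trajectory error (ATE) as an RMSE. The second is the length of each tracking-failure episode, derived from a per-frame "tracking OK" flag. The sketch assumes the estimated and ground-truth positions are already time-associated and expressed in the same frame; full ATE evaluation normally applies a rigid or similarity alignment first. The failure-episode metric is an illustrative suggestion, not an established benchmark definition.

```python
import math
from typing import List, Sequence, Tuple

Position = Tuple[float, float, float]


def ate_rmse(estimated: Sequence[Position], ground_truth: Sequence[Position]) -> float:
    """Root-mean-square position error over time-associated, pre-aligned poses."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("need two non-empty trajectories of equal length")
    sq = [math.dist(e, g) ** 2 for e, g in zip(estimated, ground_truth)]
    return math.sqrt(sum(sq) / len(sq))


def failure_episodes(tracking_ok: Sequence[bool]) -> List[int]:
    """Lengths (in frames) of each run of consecutive tracking failures."""
    episodes, run = [], 0
    for ok in tracking_ok:
        if ok:
            if run:
                episodes.append(run)
            run = 0
        else:
            run += 1
    if run:  # sequence ended while tracking was still lost
        episodes.append(run)
    return episodes


print(ate_rmse([(0, 0, 0), (1, 1, 0)], [(0, 0, 0), (1, 0, 0)]))  # about 0.7071
print(failure_episodes([True, False, False, True, False, True]))  # [2, 1]
```

Comparing re-initialization methods becomes easier if results report the number, mean and maximum of these episodes over scripted occlusion or aggressive-motion segments.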

Current Re-Initialization Approaches After Tracking Failures

01 Loop closure detection for SLAM re-initialization

Loop closure detection is a critical technique in LiDAR SLAM systems that enables the system to recognize previously visited locations and correct accumulated errors. When a loop closure is detected, the system can re-initialize its position estimate by matching current scan data with stored map data. This approach improves the accuracy and robustness of SLAM systems, especially when tracking is temporarily lost or when returning to known environments after exploring new areas.

- Loop closure detection for SLAM re-initialization: Beyond correcting accumulated errors, loop closure helps maintain long-term mapping accuracy and provides a reliable mechanism for recovering from tracking failures. Advanced algorithms use feature extraction and matching techniques to identify loop closures even in challenging environments.

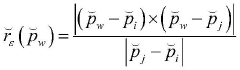

- Feature-based re-localization methods: Feature-based re-localization methods extract distinctive features from LiDAR point clouds to establish correspondences between current scans and a pre-built map. These methods typically involve detecting geometric primitives, keypoints, or descriptors that remain stable across different viewpoints. When SLAM tracking is lost, the system can quickly re-initialize by matching these features to determine the vehicle's position. This approach is particularly effective in environments with unique structural elements and can operate with lower computational requirements than dense matching methods.

- Multi-sensor fusion for robust re-initialization: Multi-sensor fusion approaches combine data from LiDAR with other sensors such as cameras, IMUs, GNSS, or wheel odometry to enhance the robustness of SLAM re-initialization. When LiDAR-only tracking fails, the system can leverage complementary information from other sensors to maintain pose estimation until LiDAR tracking recovers. This redundancy is particularly valuable in challenging environments where a single sensor modality might be compromised. Fusion algorithms typically employ probabilistic frameworks like extended Kalman filters or factor graphs to optimally combine information from different sources.

- Global re-localization using map databases: Global re-localization techniques utilize pre-built map databases to recover the system's position when tracking is lost completely. These methods compare current LiDAR scans against stored map data to identify the most likely location, often using place recognition algorithms. The approach enables SLAM systems to re-initialize even after significant tracking failures or system restarts. Advanced implementations may use hierarchical search strategies or machine learning techniques to efficiently match current observations against large-scale map databases.

- Dynamic environment handling for reliable re-initialization: Methods for handling dynamic environments focus on distinguishing between static and moving objects to ensure reliable SLAM re-initialization. These techniques filter out dynamic elements from the point cloud data before performing localization, preventing moving objects from corrupting the map or causing false re-initialization. Some approaches maintain multiple map layers representing different temporal states of the environment or employ semantic segmentation to identify and exclude dynamic objects. This capability is essential for long-term operation in real-world scenarios where the environment constantly changes.
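Loop closure and global re-localization both rely on a compact place signature that can be compared against a database. The sketch below is loosely modelled on the "ring key" of the Scan Context descriptor. Points are binned into concentric rings and azimuth sectors, and each ring is summarized by the fraction of occupied sectors, which makes the signature insensitive to the sensor's heading. It is a simplified illustration with assumed bin counts and range. It is not the full Scan Context method or any vendor's implementation.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def ring_key(points: Sequence[Point3], num_rings: int = 20,
             num_sectors: int = 60, max_range: float = 80.0) -> List[float]:
    """Per-ring fraction of occupied azimuth sectors (a yaw-invariant place signature)."""
    occupied = [set() for _ in range(num_rings)]
    for x, y, _z in points:
        r = math.hypot(x, y)
        if r >= max_range:
            continue
        ring = min(int(r / max_range * num_rings), num_rings - 1)
        sector = int((math.atan2(y, x) + math.pi) / (2 * math.pi) * num_sectors) % num_sectors
        occupied[ring].add(sector)
    return [len(s) / num_sectors for s in occupied]


def closest_place(query: Sequence[float],
                  database: Dict[str, Sequence[float]]) -> Tuple[str, float]:
    """Nearest stored key by Euclidean distance: a candidate to verify, not a final answer."""
    place, key = min(database.items(), key=lambda item: math.dist(query, item[1]))
    return place, math.dist(query, key)


corridor = [(10.0, 0.0, 0.0), (0.0, 30.0, 1.0)]
rotated = [(-y, x, z) for x, y, z in corridor]   # same place, sensor yawed 90 degrees
db = {"corridor": ring_key(corridor), "hall": ring_key([(50.0, 5.0, 0.0)])}
print(closest_place(ring_key(rotated), db))      # ('corridor', 0.0)
```

A ring key alone is ambiguous in repetitive scenes. The full Scan Context method compares the complete ring-by-sector matrix over column shifts, and any retrieved candidate should still be confirmed by point-cloud registration before the pose is re-initialized.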

02 Feature-based re-localization methods

Feature-based re-localization methods extract distinctive features from LiDAR point clouds and match them against a feature database to re-establish the system's position. These methods typically involve detecting geometric primitives, keypoints, or descriptors that remain stable across different scans. When tracking is lost, the system can quickly recover by identifying matching features in the environment, enabling efficient re-initialization without requiring a complete system restart.

03 Multi-sensor fusion for robust re-initialization

Combining data from multiple sensors such as LiDAR, cameras, IMU, and GPS can significantly improve the re-initialization capabilities of SLAM systems. When one sensor modality fails or provides degraded performance, others can compensate to maintain positioning accuracy. This fusion approach creates redundancy in the system, allowing for more reliable re-initialization in challenging environments where a single sensor might struggle, such as in areas with poor lighting, limited features, or GPS signal blockage.

04 Map-based re-initialization strategies

Map-based re-initialization strategies involve maintaining a persistent map of the environment that can be used as a reference when tracking is lost. These methods typically store pre-built maps or continuously update maps during operation. When re-initialization is needed, the current LiDAR scan is matched against the stored map using techniques such as point cloud registration or scan matching algorithms. This approach is particularly effective for autonomous vehicles or robots operating in semi-structured or known environments.

05 Machine learning approaches for SLAM re-initialization

Machine learning techniques, particularly deep learning models, are increasingly being applied to improve LiDAR SLAM re-initialization. These approaches can learn to recognize places or estimate poses directly from point cloud data, even under varying conditions or viewpoints. Neural networks trained on large datasets can provide robust feature extraction and matching, and in complex environments they may outperform traditional geometric methods. This can enable faster and more reliable system recovery after tracking failures or when entering previously mapped areas.
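Whatever network produces the place descriptors, the re-initialization step usually reduces to nearest-neighbour retrieval in descriptor space, followed by geometric verification. The sketch below shows only the retrieval part. It assumes the descriptors are already computed; here, short hand-written vectors stand in for network outputs. The similarity threshold and `k` are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 for zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def top_k_places(query: Sequence[float], database: Dict[str, Sequence[float]],
                 k: int = 3, min_similarity: float = 0.8) -> List[Tuple[str, float]]:
    """Most similar stored places, best first, dropping weak matches."""
    scored = sorted(((cosine(query, d), pid) for pid, d in database.items()), reverse=True)
    return [(pid, s) for s, pid in scored[:k] if s >= min_similarity]


# Hypothetical descriptors standing in for network outputs.
db = {"a": [1.0, 0.0, 0.0], "b": [0.0, 1.0, 0.0], "c": [0.9, 0.1, 0.0]}
print(top_k_places([1.0, 0.0, 0.0], db))
```

Returning several candidates rather than one lets a registration step reject false matches, which matters when learned descriptors confuse similar-looking places.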

Leading Companies and Research Groups in LiDAR SLAM

The LiDAR SLAM re-initialization market is currently in a growth phase, with an estimated market size of $2-3 billion and expected to reach $5 billion by 2025. The technology is maturing rapidly but still faces challenges in aggressive motion and occlusion scenarios. Academic institutions like Beijing Institute of Technology, Northwestern Polytechnical University, and Southeast University lead fundamental research, while companies such as ZTE Corp., Positec Technology, and Snap Inc. focus on commercial applications. Chinese universities dominate research output, with significant contributions from National University of Defense Technology and Zhejiang University of Technology. The technology maturity varies across applications, with industrial robotics implementations more advanced than consumer applications, which still require significant improvements in reliability and real-time performance under challenging conditions.

Beijing Institute of Technology

Technical Solution: Beijing Institute of Technology has developed a robust LiDAR SLAM re-initialization approach that combines multiple strategies to handle aggressive motion and occlusion scenarios. Their system employs a hierarchical map structure with both local and global representations, allowing for efficient relocalization when tracking is lost. The approach utilizes feature-based matching algorithms that extract distinctive geometric features from point clouds and match them against a pre-built map database. When aggressive motion occurs, their system activates an inertial measurement unit (IMU) integration module that provides motion estimates during the period when LiDAR data becomes unreliable. For occlusion scenarios, they implement a predictive tracking algorithm that estimates the most probable location based on previous trajectory and environmental constraints[1]. The system also incorporates a multi-resolution scan context descriptor that enables place recognition even with partial observations, significantly improving re-initialization success rates in challenging environments[2].

Strengths: The hierarchical mapping approach allows for efficient memory usage while maintaining detailed local information for precise relocalization. The integration with IMU provides robustness during rapid movements when LiDAR data quality deteriorates. Weaknesses: The system may still struggle in environments with highly repetitive structures or extreme occlusion scenarios where insufficient geometric features are visible for matching.

Nanjing University of Science & Technology

Technical Solution: Nanjing University of Science & Technology has developed "RobustSLAM," a comprehensive LiDAR SLAM system with advanced re-initialization capabilities for handling aggressive motion and occlusion scenarios. Their approach centers on a probabilistic framework that maintains multiple hypotheses about the system's state during challenging situations. The core of their system is a segment-based mapping approach that identifies and tracks persistent structural elements in the environment, providing stable references even when significant portions of the scene are occluded[9]. For aggressive motion recovery, they implement a motion prediction model that combines IMU data with environmental constraints to estimate possible sensor trajectories. When tracking is lost, their system activates a two-stage relocalization process. It first matches distinctive geometric features against a database of keyframes, then refines the pose estimate using an iterative closest point (ICP) algorithm optimized for partial overlaps. Their implementation also features a "memory management" system that retains keyframes according to their expected utility for future relocalization, balancing computational efficiency against relocalization robustness[10]. For particularly challenging scenarios, they have developed a "guided exploration" mode that actively suggests sensor movements to maximize information gain for successful relocalization.

Strengths: The segment-based approach provides robust performance in structural environments like buildings and urban areas. The guided exploration feature helps operators recover from otherwise unrecoverable situations. Weaknesses: The system performance may degrade in unstructured natural environments where clear geometric segments are difficult to identify. The probabilistic multi-hypothesis approach increases computational requirements compared to simpler methods.
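The refinement stage of such two-stage pipelines is typically some form of ICP. The sketch below is a textbook 2D point-to-point ICP, not NUST's implementation. It pairs each transformed source point with its nearest map point and discards pairs farther apart than `max_pair_dist`, a simple way to tolerate partial overlap. It then solves for the best rigid transform in closed form. Brute-force nearest-neighbour search keeps the code short; real systems use k-d trees or voxel maps.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (tx, ty, theta): p -> R(theta) p + t


def apply(pose: Pose, p: Point) -> Point:
    tx, ty, th = pose
    c, s = math.cos(th), math.sin(th)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def fit_rigid_2d(src: Sequence[Point], dst: Sequence[Point]) -> Pose:
    """Closed-form least-squares rotation + translation mapping src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    cross = dot = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy   # centre both sets
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    th = math.atan2(cross, dot)
    c, s = math.cos(th), math.sin(th)
    return (dx - (c * sx - s * sy), dy - (s * sx + c * sy), th)


def icp_2d(src: Sequence[Point], dst: Sequence[Point], init: Pose = (0.0, 0.0, 0.0),
           iters: int = 30, max_pair_dist: float = 1.0) -> Pose:
    tx, ty, th = init
    for _ in range(iters):
        moved = [apply((tx, ty, th), p) for p in src]
        pairs = []
        for m in moved:
            q = min(dst, key=lambda d: math.dist(m, d))
            if math.dist(m, q) <= max_pair_dist:   # reject likely non-overlapping points
                pairs.append((m, q))
        if len(pairs) < 3:
            break                                   # too little overlap to trust an update
        dtx, dty, dth = fit_rigid_2d([m for m, _ in pairs], [q for _, q in pairs])
        # Compose the increment with the current estimate: T <- dT * T.
        c, s = math.cos(dth), math.sin(dth)
        tx, ty, th = c * tx - s * ty + dtx, s * tx + c * ty + dty, th + dth
        if abs(dth) < 1e-9 and math.hypot(dtx, dty) < 1e-9:
            break
    return tx, ty, th


# An L-shaped scan and the same scan as seen in the map after a small offset.
scan = [(0.5 * i, 0.0) for i in range(11)] + [(0.0, 0.5 * j) for j in range(1, 7)]
true_pose = (0.05, 0.03, 0.02)
map_pts = [apply(true_pose, p) for p in scan]
print(icp_2d(scan, map_pts))  # approximately (0.05, 0.03, 0.02)
```

ICP only converges from a nearby initial guess, which is why the coarse feature-matching stage comes first.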

Key Algorithms for Motion Recovery in LiDAR SLAM

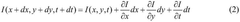

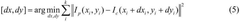

A local real-time relocalization method for SLAM based on sliding window

Patent (Active): CN114821280B

Innovation

- The method extracts and tracks image features, computes image clarity and similarity, and dynamically maintains a sliding window of filtered keyframes. Image similarity is measured with a perceptual hashing operator and grayscale histograms. The keyframe most similar to the current frame is selected as the relocalization candidate, and local real-time relocalization is achieved by matching against it again.
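This method operates on camera images rather than LiDAR scans. The sketch below illustrates its candidate-selection idea, combining a perceptual hash with a grayscale-histogram comparison, under stated assumptions; it is not the patented method. It uses the simple average hash (aHash) as the perceptual hash, assumes images are already grayscale and resized to 8x8, and weights the two similarities equally. Clarity filtering and the final re-matching step are omitted.

```python
from typing import Dict, List

Image = List[List[int]]  # grayscale 0..255, assumed already resized to 8x8


def average_hash(img: Image) -> List[int]:
    """aHash: one bit per pixel, set when the pixel is brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return [1 if p > mean else 0 for p in pixels]


def histogram(img: Image, bins: int = 16) -> List[float]:
    """Normalized grayscale histogram."""
    counts = [0] * bins
    for row in img:
        for p in row:
            counts[min(p * bins // 256, bins - 1)] += 1
    total = sum(counts)
    return [c / total for c in counts]


def similarity(a: Image, b: Image, w_hash: float = 0.5) -> float:
    """Weighted mix of hash agreement and histogram intersection, in [0, 1]."""
    ha, hb = average_hash(a), average_hash(b)
    hash_sim = 1.0 - sum(x != y for x, y in zip(ha, hb)) / len(ha)
    hist_sim = sum(min(x, y) for x, y in zip(histogram(a), histogram(b)))
    return w_hash * hash_sim + (1.0 - w_hash) * hist_sim


def best_candidate(current: Image, window: Dict[str, Image], w_hash: float = 0.5) -> str:
    """Keyframe in the sliding window most similar to the current frame."""
    return max(window, key=lambda kf: similarity(current, window[kf], w_hash))


frame = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
inverted = [[252 - p for p in row] for row in frame]
window = {"kf1": inverted, "kf2": [row[:] for row in frame]}
print(best_candidate(frame, window))   # kf2
print(similarity(frame, inverted))     # 0.5
```

The inverted frame has exactly the same histogram as the current frame but the opposite hash, so it scores only 0.5. This is one reason to combine the two cues instead of relying on histograms alone.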

SLAM (Simultaneous Localization and Mapping) implementation method and system based on solid-state radar

Patent (Active): CN117218350A

Innovation

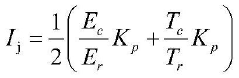

- Introduces keyframes and uses IMU data to optimize radar poses. Keyframes are selected by iteratively optimizing over edge and plane features, combined with a sliding window and adaptive thresholds, to keep consecutive poses well correlated and accurately positioned.
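The patent summary does not give the exact keyframe rule. The sketch below shows a common pattern it resembles, under explicit assumptions. A pose becomes a keyframe when it has moved or rotated enough since the last keyframe. The thresholds tighten when few features are matched, which is a hypothetical adaptive rule. Only the most recent keyframes are kept, in a fixed-size sliding window used for optimization.

```python
import math
from collections import deque
from typing import Deque, Tuple

Pose2 = Tuple[float, float, float]  # (x, y, yaw)


class KeyframeWindow:
    def __init__(self, size: int = 10, base_dist: float = 1.0,
                 base_angle: float = math.radians(10.0), nominal_features: int = 500):
        self.window: Deque[Pose2] = deque(maxlen=size)  # oldest keyframe drops out
        self.base_dist, self.base_angle = base_dist, base_angle
        self.nominal_features = nominal_features

    def thresholds(self, feature_count: int) -> Tuple[float, float]:
        """Hypothetical adaptive rule: fewer features -> denser keyframes."""
        scale = max(0.25, min(1.0, feature_count / self.nominal_features))
        return self.base_dist * scale, self.base_angle * scale

    def maybe_add(self, pose: Pose2, feature_count: int) -> bool:
        """Return True when the pose is accepted as a new keyframe."""
        if not self.window:
            self.window.append(pose)
            return True
        lx, ly, lyaw = self.window[-1]
        x, y, yaw = pose
        dist = math.hypot(x - lx, y - ly)
        dyaw = abs(math.atan2(math.sin(yaw - lyaw), math.cos(yaw - lyaw)))  # wrapped
        d_th, a_th = self.thresholds(feature_count)
        if dist > d_th or dyaw > a_th:
            self.window.append(pose)
            return True
        return False


kw = KeyframeWindow(size=3)
print(kw.maybe_add((0.0, 0.0, 0.0), 500))  # first pose is always a keyframe
print(kw.maybe_add((0.5, 0.0, 0.0), 500))  # 0.5 m < 1.0 m: skipped
print(kw.maybe_add((0.5, 0.0, 0.0), 100))  # feature-poor: threshold drops to 0.25 m
```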

Real-time Performance Optimization Strategies

Optimizing real-time performance in LiDAR SLAM systems during re-initialization after aggressive motion or occlusion events requires sophisticated strategies that balance computational efficiency with accuracy. One critical approach involves implementing adaptive feature extraction algorithms that dynamically adjust the density of point cloud processing based on environmental complexity and motion characteristics. During normal operation, these systems can operate with standard feature density, but when re-initialization is triggered, they temporarily increase processing density to capture more environmental details for robust localization.
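Voxel-grid downsampling is one simple way to implement such a density switch. The voxel size sets how many points survive, so shrinking it during re-initialization keeps more geometric detail at a higher processing cost. The voxel sizes below are illustrative assumptions, and each voxel is replaced by the centroid of its points.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def voxel_downsample(points: Sequence[Point3], voxel_size: float) -> List[Point3]:
    """Keep one point (the centroid) per occupied voxel."""
    buckets: Dict[Tuple[int, int, int], List[Point3]] = {}
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets.setdefault(key, []).append(p)
    return [tuple(sum(axis) / len(ps) for axis in zip(*ps)) for ps in buckets.values()]


def choose_voxel_size(reinitializing: bool, nominal: float = 0.5,
                      reinit: float = 0.2) -> float:
    """Finer voxels while re-initializing, coarser during normal tracking (assumed values)."""
    return reinit if reinitializing else nominal


line = [(0.05 + 0.1 * i, 0.0, 0.0) for i in range(10)]   # 10 points along 1 m
print(len(voxel_downsample(line, choose_voxel_size(False))))  # 2 points kept
print(len(voxel_downsample(line, choose_voxel_size(True))))   # 5 points kept
```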

Parallel computing architectures significantly enhance re-initialization speed by distributing the computational load across multiple cores or GPUs. Modern LiDAR SLAM frameworks leverage this parallelization to simultaneously process multiple hypothesis maps during the re-initialization phase, allowing the system to evaluate several potential poses concurrently rather than sequentially. This approach reduces the critical time window where the system operates with degraded localization accuracy.
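The pattern of scoring candidate poses concurrently can be sketched as follows. Each hypothesis transforms the scan into the map frame and counts scan points that land near a map point. The best-scoring hypothesis seeds refinement. The scoring rule and radius are illustrative assumptions. In CPython, the GIL means threads do not speed up this pure-Python scoring. The structure is what carries over to native threads, a `ProcessPoolExecutor`, or GPU kernels.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (x, y, theta)


def transform(pose: Pose, p: Point) -> Point:
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return (c * p[0] - s * p[1] + x, s * p[0] + c * p[1] + y)


def score_hypothesis(pose: Pose, scan: Sequence[Point], map_points: Sequence[Point],
                     radius: float = 0.3) -> int:
    """Count scan points that land within `radius` of some map point (brute force)."""
    hits = 0
    for p in scan:
        q = transform(pose, p)
        if any(math.dist(q, m) <= radius for m in map_points):
            hits += 1
    return hits


def best_hypothesis(hypotheses: List[Pose], scan: Sequence[Point],
                    map_points: Sequence[Point], workers: int = 4) -> Tuple[Pose, int]:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        scores = list(pool.map(lambda h: score_hypothesis(h, scan, map_points), hypotheses))
    i = max(range(len(hypotheses)), key=scores.__getitem__)
    return hypotheses[i], scores[i]


scan = [(1.0, 0.0), (2.0, 0.0), (0.0, 1.0), (3.0, 2.0)]
true_pose = (1.0, 2.0, 0.0)
map_pts = [transform(true_pose, p) for p in scan]
print(best_hypothesis([(0.0, 0.0, 0.0), true_pose, (5.0, 5.0, 0.0)], scan, map_pts))
```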

Memory management optimization plays a crucial role in maintaining real-time performance during re-initialization events. Implementing efficient caching mechanisms for previously observed scenes enables faster matching when the system encounters familiar environments after occlusion. Strategic pruning of point cloud data that preserves distinctive geometric features while discarding redundant information reduces memory footprint without compromising re-initialization capabilities.
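For the caching part, a least-recently-used (LRU) policy is one straightforward choice. Descriptors for recently matched places stay in memory, and the least recently used entry is evicted when the cache is full. The sketch below uses `collections.OrderedDict`. Keys and values are placeholders for place IDs and scene descriptors, and the capacity is an assumption.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class DescriptorCache:
    """Fixed-capacity LRU cache for scene descriptors keyed by place ID."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)          # mark as most recently used
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)   # evict least recently used

    def __len__(self) -> int:
        return len(self._items)


cache = DescriptorCache(capacity=2)
cache.put("place_a", [0.1, 0.4])
cache.put("place_b", [0.7, 0.2])
cache.get("place_a")                          # touch A, so B is now the oldest
cache.put("place_c", [0.3, 0.3])              # evicts B
print(cache.get("place_b"), len(cache))       # None 2
```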

Motion prediction models based on sensor fusion with IMU data provide valuable continuity during brief occlusions or aggressive movements. These predictive models maintain a probabilistic estimate of position that serves as an initialization seed when LiDAR data becomes reliable again. Advanced implementations incorporate machine learning techniques that adapt to the specific motion patterns of the platform, improving prediction accuracy during challenging scenarios.
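The sketch below shows the seeding idea in its simplest form. A constant-velocity prediction carries the position through a LiDAR outage while the position variance grows. The resulting uncertainty sets how wide the re-initialization search must be when scans return. The linear variance growth (`process_var_per_s`) and the 3-sigma search radius are illustrative assumptions, not a calibrated motion model.

```python
import math
from dataclasses import dataclass


@dataclass
class PosePrior:
    x: float
    y: float
    vx: float          # world-frame velocity in m/s (e.g. from IMU-aided odometry)
    vy: float
    pos_var: float     # isotropic position variance in m^2

    def predict(self, dt: float, process_var_per_s: float = 0.08) -> None:
        """Constant-velocity step; uncertainty grows while no LiDAR update arrives."""
        self.x += self.vx * dt
        self.y += self.vy * dt
        self.pos_var += process_var_per_s * dt

    def search_radius(self, n_sigma: float = 3.0) -> float:
        """Radius around the predicted position to search when LiDAR returns."""
        return n_sigma * math.sqrt(self.pos_var)


prior = PosePrior(x=0.0, y=0.0, vx=2.0, vy=0.0, pos_var=0.01)
for _ in range(5):             # 0.5 s occlusion, predicted in 0.1 s steps
    prior.predict(0.1)
print(round(prior.x, 3), round(prior.search_radius(), 3))
```

The longer the outage, the larger the radius, which directly bounds how many candidate poses the re-initialization step must consider.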

Hardware-software co-optimization strategies further enhance real-time performance. Field-programmable gate arrays (FPGAs) can be employed to accelerate specific components of the re-initialization pipeline, such as initial feature matching or transformation estimation. Additionally, implementing progressive refinement approaches allows the system to quickly generate a coarse localization estimate that is sufficient for continued operation, while refinement continues in background processes to gradually improve accuracy without interrupting the main SLAM loop.
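Progressive refinement can be expressed as a generator that yields a usable estimate after each resolution level. The caller can act on the coarse result immediately and pick up refinements as they arrive. The sketch searches a single parameter (yaw) against a hypothetical scan-match score. The step sizes are illustrative assumptions.

```python
import math
from typing import Callable, Iterator, Sequence


def coarse_to_fine(score: Callable[[float], float], lo: float, hi: float,
                   steps: Sequence[float]) -> Iterator[float]:
    """Grid-search a 1D parameter, yielding the best value after each level."""
    for step in steps:
        n = int(round((hi - lo) / step))
        candidates = [lo + i * step for i in range(n + 1)]
        best = max(candidates, key=score)
        yield best
        lo, hi = best - step, best + step   # zoom in around the current best


def match_score(yaw: float) -> float:
    """Hypothetical scan-match score peaking at a yaw of 0.7 rad."""
    return -(yaw - 0.7) ** 2


levels = [math.radians(d) for d in (10.0, 1.0, 0.1)]
for estimate in coarse_to_fine(match_score, -math.pi, math.pi, levels):
    print(f"{math.degrees(estimate):.2f} deg")
```

The design trades completeness for speed. If the true score has a peak narrower than the coarse step, the first level can lock onto the wrong basin, so the coarse step should be matched to the expected width of the score peak.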

Parallel computing architectures significantly enhance re-initialization speed by distributing the computational load across multiple cores or GPUs. Modern LiDAR SLAM frameworks leverage this parallelization to simultaneously process multiple hypothesis maps during the re-initialization phase, allowing the system to evaluate several potential poses concurrently rather than sequentially. This approach reduces the critical time window where the system operates with degraded localization accuracy.

Sensor Fusion Approaches for Enhanced Re-Initialization

Sensor fusion is a key way to make LiDAR SLAM re-initialization more robust after failures caused by aggressive motion or occlusion. Combining several sensor modalities provides redundant and complementary information streams, so the system is far less likely to lose every useful measurement at once.

Integrating inertial measurement units (IMUs) with LiDAR is the foundation of many fusion approaches. IMUs deliver motion estimates at rates well above the LiDAR scan rate, which lets them bridge temporary LiDAR data gaps during occlusion events. When the IMU is tightly coupled with LiDAR, the system can keep estimating its trajectory even as point cloud quality deteriorates, and that trajectory provides the initialization seed once full LiDAR functionality returns.
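
The gap-bridging role of the IMU can be sketched as planar dead reckoning. This assumes body-frame accelerations with gravity already removed, a single z-axis gyro, and simple per-sample integration; `bridge_with_imu` is a hypothetical helper rather than a library call. Because accelerometer errors are integrated twice, position error grows rapidly with time, which is why this approach only bridges brief gaps.

```python
import math


def bridge_with_imu(pose, velocity, imu_samples):
    """Planar dead reckoning through a LiDAR gap.

    pose: (x, y, yaw); velocity: (vx, vy) in the world frame.
    imu_samples: iterable of (dt, yaw_rate, ax_body, ay_body), gravity removed (assumption).
    Returns the propagated (pose, velocity) to seed LiDAR re-registration."""
    x, y, yaw = pose
    vx, vy = velocity
    for dt, wz, ax_b, ay_b in imu_samples:
        c, s = math.cos(yaw), math.sin(yaw)
        ax, ay = c * ax_b - s * ay_b, s * ax_b + c * ay_b  # body frame -> world frame
        x += vx * dt + 0.5 * ax * dt * dt
        y += vy * dt + 0.5 * ay * dt * dt
        vx += ax * dt
        vy += ay * dt
        yaw += wz * dt
    return (x, y, yaw), (vx, vy)
```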

Camera-LiDAR fusion exploits the complementary nature of the two modalities. LiDAR excels at precise depth measurement, while cameras provide rich texture and appearance information that helps in geometrically degenerate scenes, such as long corridors, where LiDAR registration is poorly constrained. Image features do degrade under strong motion blur, however, so cameras complement inertial sensing during aggressive motion rather than replace it. Visual-inertial odometry can maintain localization while LiDAR data is unreliable, and feature matching between current and previously mapped views then supports a smoother re-initialization.
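
One common way to combine the two modalities is to project LiDAR points into the image so that visual features inherit metric depth. This sketch assumes an ideal pinhole camera without lens distortion, points already transformed into the camera frame (z forward, x right, y down) with calibrated extrinsics, and hypothetical helper names `project_points` and `depth_for_feature`.

```python
def project_points(points_cam, fx, fy, cx, cy, width, height):
    """Pinhole projection of camera-frame LiDAR points.

    Returns (u, v, depth) for points in front of the camera that land inside the image."""
    out = []
    for X, Y, Z in points_cam:
        if Z <= 0.0:
            continue  # behind the camera
        u = fx * X / Z + cx
        v = fy * Y / Z + cy
        if 0.0 <= u < width and 0.0 <= v < height:
            out.append((u, v, Z))
    return out


def depth_for_feature(feature_uv, projected, max_px=3.0):
    """Depth of the nearest projected LiDAR point within max_px pixels, or None."""
    best = None
    for u, v, z in projected:
        d2 = (u - feature_uv[0]) ** 2 + (v - feature_uv[1]) ** 2
        if d2 <= max_px ** 2 and (best is None or d2 < best[0]):
            best = (d2, z)
    return None if best is None else best[1]
```

Features that receive a depth this way can be matched against previously mapped keyframes with metric scale, which is what makes the visual channel useful for re-initializing the LiDAR map.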

Multi-LiDAR configurations are another fusion strategy: sensors with different fields of view or mounting positions provide overlapping coverage. Thanks to this redundancy, enough environmental data usually remains available for localization even when part of the surroundings is occluded. Distributing sensors around the platform makes these setups especially resilient to localized occlusions that could completely blind a single-sensor setup.
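
Merging several LiDARs starts with expressing every scan in a common base frame. The sketch assumes planar extrinsics `(x, y, yaw)` for brevity (real mounts need a full 6-DoF transform) and a hypothetical `min_points` threshold for deciding which sensors still see enough of the scene; `to_base` and `merge_scans` are illustrative names.

```python
import math


def to_base(points, extrinsic):
    """Transform (x, y, z) sensor points into the base frame using a planar extrinsic."""
    tx, ty, yaw = extrinsic
    c, s = math.cos(yaw), math.sin(yaw)
    return [(c * x - s * y + tx, s * x + c * y + ty, z) for x, y, z in points]


def merge_scans(scans, min_points=100):
    """scans: dict sensor_name -> (points, extrinsic).

    Returns the merged base-frame cloud and the names of sensors that still
    returned at least min_points (i.e. are not heavily occluded)."""
    merged, usable = [], []
    for name, (points, extrinsic) in scans.items():
        merged.extend(to_base(points, extrinsic))
        if len(points) >= min_points:
            usable.append(name)
    return merged, usable
```

Tracking which sensors remain usable lets the estimator keep localizing on the unoccluded ones instead of declaring a failure when a single sensor goes dark.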

GNSS integration provides absolute position references that constrain drift during aggressive motion and speed up re-initialization in outdoor environments. Modern tightly coupled approaches fuse raw GNSS measurements, such as pseudoranges, with LiDAR odometry inside the same estimator instead of applying GNSS position fixes as an external correction, which lets the estimator weigh the uncertainty models of both systems consistently.
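
To make the tight-coupling idea concrete, this toy example estimates a 2D position and a receiver clock bias (expressed in meters) directly from pseudoranges, with the LiDAR odometry position entering as a prior in the same Gauss-Newton least-squares problem. Everything here is an assumption for illustration: the 2D geometry, the noise values, and the names `fuse_pseudoranges` and `solve3`. Real receivers work in 3D Earth-centered coordinates and must also model atmospheric and satellite clock errors.

```python
import math


def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    n = 3
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def fuse_pseudoranges(odom_xy, sats, rhos, sigma_rho=1.0, sigma_odom=5.0, iters=10):
    """Toy 2D tight coupling: estimate (x, y, clock_bias_m) from raw pseudoranges
    plus a LiDAR-odometry position prior, via Gauss-Newton on weighted residuals."""
    x, y, b = odom_xy[0], odom_xy[1], 0.0
    w_r, w_o = 1.0 / sigma_rho ** 2, 1.0 / sigma_odom ** 2
    for _ in range(iters):
        terms = []  # (jacobian row, residual, weight)
        for (sx, sy), rho in zip(sats, rhos):
            d = math.hypot(x - sx, y - sy)
            terms.append(([(x - sx) / d, (y - sy) / d, 1.0], d + b - rho, w_r))
        terms.append(([1.0, 0.0, 0.0], x - odom_xy[0], w_o))  # odometry prior on x
        terms.append(([0.0, 1.0, 0.0], y - odom_xy[1], w_o))  # odometry prior on y
        H = [[0.0] * 3 for _ in range(3)]
        g = [0.0] * 3
        for J, r, w in terms:
            for i in range(3):
                g[i] += w * J[i] * r
                for j in range(3):
                    H[i][j] += w * J[i] * J[j]
        dx, dy, db = solve3(H, [-gi for gi in g])  # normal equations: H * delta = -g
        x, y, b = x + dx, y + dy, b + db
    return x, y, b
```

Because the odometry prior already constrains position, the estimator can still use pseudoranges when fewer satellites are visible than a standalone fix would require, which is one practical advantage of tight coupling over fusing finished GNSS positions.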

Radar-LiDAR fusion has emerged as particularly valuable for re-initialization in adverse weather, such as rain, fog, or snow, where LiDAR performance degrades. Radar penetrates precipitation and measures both range and Doppler (radial) velocity, so it supplies complementary information precisely when LiDAR struggles most.
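
Radar's velocity measurement can be used directly: for stationary targets, each detection's radial velocity is the negated projection of the sensor's own velocity onto the line of sight, so a least-squares fit over several azimuths recovers ego-velocity. The sketch assumes 2D detections given as `(azimuth, radial_velocity)`, a sign convention in which positive radial velocity means the target is moving away, and only static targets; `ego_velocity_from_doppler` is a hypothetical name.

```python
import math


def ego_velocity_from_doppler(detections):
    """Least-squares sensor velocity (vx, vy) from radar Doppler returns.

    detections: list of (azimuth_rad, radial_velocity_mps) from stationary objects,
    modeled as v_r = -(vx * cos(az) + vy * sin(az))."""
    sxx = sxy = syy = bx = by = 0.0
    for az, vr in detections:
        c, s = math.cos(az), math.sin(az)
        sxx += c * c
        sxy += c * s
        syy += s * s
        bx -= c * vr
        by -= s * vr
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        raise ValueError("detections do not span enough distinct azimuths")
    # Cramer's rule on the 2x2 normal equations
    return (syy * bx - sxy * by) / det, (sxx * by - sxy * bx) / det
```

In practice, detections from moving objects must be rejected first (for example with RANSAC) before the fit; the resulting velocity can then serve as a weather-robust motion prior while LiDAR is degraded.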

The computational approaches underpinning these fusion systems have evolved significantly, with factor graph optimization and multi-sensor calibration techniques enabling more sophisticated integration. Adaptive weighting mechanisms adjust the influence of each sensor according to its estimated reliability under current conditions, so that the estimator leans on whichever sensors are trustworthy during the critical re-initialization phase.
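
In its simplest form, adaptive weighting reduces to inverse-variance fusion with each sensor's variance inflated when that sensor is judged unreliable. The sketch assumes scalar estimates and a hypothetical reliability score in (0, 1] per sensor (for example, derived from a degeneracy check on the LiDAR scan); `fuse_estimates` is an illustrative name. In a factor graph, the same effect is obtained by scaling each factor's noise covariance.

```python
def fuse_estimates(estimates):
    """Reliability-weighted inverse-variance fusion of scalar estimates.

    estimates: list of (value, variance, reliability), reliability in (0, 1].
    Each variance is inflated to variance / reliability before weighting.
    Returns (fused_value, fused_variance)."""
    num = den = 0.0
    for value, var, rel in estimates:
        w = rel / var  # equals 1 / (var / rel)
        num += w * value
        den += w
    return num / den, 1.0 / den
```

Lowering a sensor's reliability smoothly shifts the fused result toward the others instead of switching sensors abruptly, which avoids jumps in the pose estimate at the moment of re-initialization.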
