LiDAR SLAM Dynamic Object Handling: Segmentation, Tracking and Re-Localization

SEP 19, 2025 · 9 MIN READ

LiDAR SLAM Evolution and Objectives

LiDAR SLAM technology has evolved significantly over the past two decades, transitioning from basic point cloud registration methods to sophisticated real-time mapping systems. The initial development phase (2000-2010) focused primarily on static environment mapping using iterative closest point (ICP) algorithms and their variants. During this period, researchers concentrated on improving computational efficiency and accuracy in controlled environments with minimal dynamic elements.

The second evolution phase (2010-2015) saw the integration of probabilistic methods and optimization techniques, including graph-based approaches that significantly enhanced mapping consistency and loop closure capabilities. However, these systems still struggled with dynamic objects, typically treating them as outliers to be filtered out rather than as entities to be understood and tracked.

The current phase (2015-present) has witnessed a paradigm shift toward semantic understanding and dynamic object handling in SLAM systems. This evolution has been driven by the increasing deployment of autonomous vehicles and robots in complex, human-populated environments where dynamic object interaction is unavoidable.

The fundamental objective of modern LiDAR SLAM systems is to create accurate, consistent, and semantically rich representations of environments while maintaining real-time performance. Within this broader goal, dynamic object handling has emerged as a critical sub-objective, encompassing three interconnected challenges: segmentation, tracking, and re-localization.

Segmentation aims to identify and classify dynamic objects within point cloud data, distinguishing them from static elements. This process must operate with minimal computational overhead while maintaining high accuracy across diverse object types and environmental conditions.

Tracking focuses on maintaining consistent identification and motion estimation of dynamic objects across sequential frames, enabling prediction of future positions and potential interaction paths. This capability is essential for both mapping consistency and downstream navigation tasks.

Re-localization addresses the challenge of maintaining accurate pose estimation when significant portions of the environment change due to dynamic object movement. This includes developing robust methods to recognize previously mapped areas despite temporary occlusions or alterations caused by moving objects.

The technological trajectory indicates a convergence toward unified frameworks that simultaneously address mapping, localization, and dynamic object understanding. Future objectives include achieving human-like perception capabilities in terms of object recognition, motion prediction, and environmental adaptation, while maintaining computational efficiency suitable for deployment on resource-constrained platforms.

Market Analysis for Dynamic SLAM Solutions

The dynamic SLAM solutions market is experiencing significant growth driven by the increasing adoption of autonomous vehicles, robotics, and advanced mapping technologies. The global SLAM market, valued at approximately $200 million in 2020, is projected to reach $1.2 billion by 2027, representing a compound annual growth rate (CAGR) of 25.3% during this forecast period. Within this broader market, solutions specifically addressing dynamic object handling are emerging as a critical high-growth segment.
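The growth figures above can be cross-checked with the standard compound annual growth rate formula. The sketch below assumes the forecast window is the seven years from 2020 to 2027, which the text implies but does not state. Under that assumption the two endpoint values imply a CAGR of about 29%, while compounding $200 million at 25.3% for seven years gives roughly $970 million, so the quoted rate may rest on a different base year or window. The function names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.25 means 25%)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value reached after compounding `rate` once per year for `years` years."""
    return start_value * (1.0 + rate) ** years


if __name__ == "__main__":
    # Market sizes in millions of USD, as quoted in the text.
    # The 7-year window (2020 to 2027) is an assumption.
    print(f"implied CAGR: {cagr(200.0, 1200.0, 7):.1%}")
    print(f"$200M compounded at 25.3% for 7 years: ${project(200.0, 0.253, 7):.0f}M")
```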

Automotive applications currently dominate the market demand for dynamic SLAM solutions, accounting for roughly 40% of the total market share. This is primarily fueled by the autonomous vehicle industry's need for reliable navigation in complex, changing environments. The robotics sector follows closely at 32%, with applications spanning from industrial automation to service robots operating in human-populated spaces.

Demand for dynamic SLAM solutions is being driven by several key factors. First, safety requirements increasingly call for autonomous systems to reliably detect, track, and navigate around moving objects in real time. Second, the push for Level 4 and Level 5 autonomous driving capabilities necessitates more sophisticated environmental perception systems. Third, indoor robotics applications in retail, healthcare, and warehousing require robust performance in dynamic human-occupied spaces.

Regional analysis reveals North America currently leads the market with approximately 38% share, followed by Europe (29%) and Asia-Pacific (26%). However, the Asia-Pacific region is expected to witness the fastest growth rate of 28.7% through 2027, primarily driven by China's aggressive investments in autonomous technologies and smart city infrastructure.

Key customer segments include automotive OEMs and Tier 1 suppliers, robotics manufacturers, mapping service providers, and increasingly, smart city infrastructure developers. These customers are primarily seeking solutions that offer real-time performance, low computational overhead, and high accuracy in challenging dynamic environments.

Market challenges include the high computational requirements for real-time processing, integration complexities with existing systems, and the need for solutions that work reliably across diverse environmental conditions. Additionally, there remains a significant price sensitivity barrier, particularly for mass-market applications, as current high-performance dynamic SLAM solutions often require expensive hardware components.

The market is expected to evolve toward more integrated sensor fusion approaches, with LiDAR-camera hybrid systems gaining traction due to their complementary strengths in handling dynamic scenes. Cloud-based processing models are also emerging to address the computational challenges while maintaining real-time performance requirements.

Current Challenges in Dynamic Object Handling

Dynamic object handling represents one of the most significant challenges in LiDAR SLAM systems today. Unlike static environments where mapping and localization can rely on consistent features, dynamic scenes introduce substantial complexity that current systems struggle to address effectively.

The primary challenge lies in accurate and real-time segmentation of dynamic objects from static background elements. Current segmentation algorithms often fail in complex scenarios where objects exhibit partial occlusion or when multiple dynamic objects interact closely. The computational demands of point cloud segmentation also create latency issues that compromise real-time performance, especially in resource-constrained platforms like autonomous vehicles or mobile robots.
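To make the segmentation step concrete, here is a minimal sketch of one classical, non-learned baseline: remove ground points with a height threshold, then group the remaining points by Euclidean clustering. The flat-ground assumption, the thresholds, and the function names are all illustrative choices, and the brute-force neighbor search is quadratic in the number of points, which is exactly the kind of cost that causes the latency problems described above. The result is a set of object candidates; deciding which of them are actually moving requires a later step.

```python
import math
from collections import deque


def remove_ground(points, ground_z=0.2):
    """Drop points at or below a fixed height (assumes flat ground, sensor-levelled frame)."""
    return [p for p in points if p[2] > ground_z]


def euclidean_clusters(points, tolerance=0.5, min_size=2):
    """Group points whose chain of neighbors lies within `tolerance` metres.

    Returns a list of clusters, each a sorted list of indices into `points`.
    Brute-force O(n^2) search; a spatial index would replace the inner scan.
    """
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        frontier = deque([seed])
        cluster = [seed]
        while frontier:
            i = frontier.popleft()
            near = [j for j in unvisited
                    if math.dist(points[i], points[j]) <= tolerance]
            for j in near:
                unvisited.remove(j)
                frontier.append(j)
                cluster.append(j)
        if len(cluster) >= min_size:  # discard isolated returns as noise
            clusters.append(sorted(cluster))
    return clusters
```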

Object tracking presents another critical challenge, particularly when dealing with unpredictable motion patterns. Existing tracking algorithms frequently lose objects during rapid direction changes, varying speeds, or when objects temporarily disappear from the sensor's field of view. The association problem—correctly matching detected objects across consecutive frames—becomes increasingly difficult in crowded environments with multiple similar objects moving simultaneously.
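The association problem can be illustrated with a simple gated nearest-neighbor matcher. This is a sketch of one common baseline rather than the method of any system discussed here: candidate pairs farther apart than a gate distance are rejected, and the remaining pairs are accepted greedily from closest to farthest. The 2D positions, the gate value, and the function name are assumptions. Greedy matching is not globally optimal, which is one reason crowded scenes with similar objects are hard.

```python
import math


def associate(predicted_tracks, detections, gate=2.0):
    """Match predicted track positions to detections by gated greedy nearest neighbor.

    predicted_tracks, detections: lists of (x, y) positions in the same frame.
    Returns (matches, unmatched_tracks, unmatched_detections), where matches
    is a list of (track_index, detection_index) pairs.
    """
    candidates = []
    for ti, t in enumerate(predicted_tracks):
        for di, d in enumerate(detections):
            dist = math.dist(t, d)
            if dist <= gate:  # gating: ignore physically implausible pairs
                candidates.append((dist, ti, di))
    candidates.sort()  # closest pairs are committed first

    matches, used_tracks, used_dets = [], set(), set()
    for _, ti, di in candidates:
        if ti in used_tracks or di in used_dets:
            continue
        matches.append((ti, di))
        used_tracks.add(ti)
        used_dets.add(di)

    unmatched_tracks = [i for i in range(len(predicted_tracks)) if i not in used_tracks]
    unmatched_dets = [i for i in range(len(detections)) if i not in used_dets]
    return matches, unmatched_tracks, unmatched_dets
```

Unmatched tracks are candidates for coasting or deletion, and unmatched detections are candidates for new tracks.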

Dynamic objects also complicate re-localization and can introduce significant drift in trajectory estimation. When dynamic objects dominate the scene, they can be mistakenly incorporated into the static map, causing severe mapping errors. Current approaches struggle to maintain consistent localization when the environment changes substantially between the mapping and navigation phases because objects have moved.

The computational efficiency of dynamic object handling remains problematic. Processing high-resolution LiDAR data in real-time while simultaneously performing segmentation, tracking, and re-localization taxes even powerful computing platforms. This challenge is particularly acute for edge devices with limited processing capabilities.

Sensor limitations further complicate dynamic object handling. LiDAR sensors have inherent range limitations and resolution degradation with distance, making it difficult to detect and track small or distant objects. Weather conditions like rain, snow, or fog significantly degrade LiDAR performance, affecting the robustness of dynamic object handling in adverse conditions.

Integration of multi-sensor data for improved dynamic object handling remains challenging. While fusing LiDAR with cameras or radar can theoretically enhance performance, achieving proper temporal and spatial alignment between different sensor modalities introduces additional complexity. Current sensor fusion approaches often fail to leverage complementary strengths effectively while mitigating individual weaknesses.
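As a small illustration of the temporal alignment problem, the sketch below pairs each LiDAR sweep with the camera frame closest in time and drops sweeps that have no frame within a tolerance. It assumes both sensors are already stamped against a common clock and that camera timestamps are sorted; the tolerance value and function name are hypothetical. Spatial alignment (extrinsic calibration) and motion compensation within a sweep are separate steps not shown here.

```python
import bisect


def nearest_timestamp_pairs(lidar_ts, camera_ts, max_offset=0.02):
    """Pair each LiDAR timestamp with the nearest camera timestamp.

    lidar_ts: iterable of timestamps in seconds.
    camera_ts: list of timestamps in seconds, sorted ascending.
    Pairs whose time difference exceeds `max_offset` seconds are discarded.
    """
    pairs = []
    for t in lidar_ts:
        i = bisect.bisect_left(camera_ts, t)
        # Only the neighbors on either side of the insertion point can be nearest.
        candidates = camera_ts[max(0, i - 1): i + 1]
        if not candidates:
            continue
        best = min(candidates, key=lambda c: abs(c - t))
        if abs(best - t) <= max_offset:
            pairs.append((t, best))
    return pairs
```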

Existing Dynamic Object Handling Approaches

01 Dynamic object detection and filtering in LiDAR SLAM

Methods for detecting and filtering dynamic objects in LiDAR SLAM systems to improve mapping accuracy. These approaches identify moving objects in the environment and exclude their data points from the SLAM process, preventing them from corrupting the static map. Detection techniques include analyzing point cloud consistency across frames, velocity estimation, and feature-based classification to distinguish between static and dynamic elements.

- Dynamic object detection and filtering in LiDAR SLAM: Methods for detecting and filtering dynamic objects in LiDAR SLAM systems to improve mapping accuracy. These approaches identify moving objects in the environment and exclude their data points from the mapping process, preventing distortions in the generated maps. Advanced algorithms can classify objects as static or dynamic based on their movement patterns and remove dynamic object data from point cloud processing.

- Motion compensation techniques for dynamic environments: Techniques that compensate for the motion of dynamic objects in LiDAR SLAM systems. These methods adjust for the relative movement between the sensor platform and moving objects to maintain accurate localization. Motion compensation algorithms can predict object trajectories and adjust mapping calculations accordingly, enabling robust SLAM performance even in highly dynamic environments.

- Semantic segmentation for dynamic object handling: Integration of semantic segmentation with LiDAR SLAM to identify and classify different types of objects in the environment. By understanding the semantic meaning of objects, the system can apply different handling strategies to various object classes. This approach enables more intelligent processing of dynamic objects based on their type, such as pedestrians, vehicles, or animals.

- Multi-sensor fusion for improved dynamic object tracking: Methods that combine data from multiple sensors (such as cameras, radar, and LiDAR) to enhance dynamic object detection and tracking. Sensor fusion approaches leverage the complementary strengths of different sensor types to improve the robustness of object tracking in challenging conditions. These techniques enable more reliable identification and handling of dynamic objects across varying environmental conditions.

- Temporal consistency methods for dynamic environments: Approaches that maintain temporal consistency in maps despite the presence of dynamic objects. These methods track changes in the environment over time and update the map accordingly, distinguishing between permanent changes and temporary dynamic objects. By analyzing data across multiple time frames, these systems can build more stable and accurate representations of dynamic environments.
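The idea of checking point cloud consistency across frames can be sketched with a voxel occupancy comparison. The example assumes both scans have already been transformed into a common map frame using the current pose estimate, and it flags points that fall into voxels the previous scan did not occupy. The voxel size and function names are illustrative. This is deliberately naive: newly observed areas and previously occluded surfaces are flagged too, so practical systems add visibility or ray-casting checks before treating a point as dynamic.

```python
import math


def voxel_key(point, voxel_size):
    """Integer voxel index containing a 3D point."""
    return tuple(math.floor(c / voxel_size) for c in point)


def flag_dynamic_candidates(prev_scan, curr_scan, voxel_size=0.5):
    """Return one boolean per point in `curr_scan`.

    True means the point lies in a voxel that was empty in `prev_scan`,
    making it a candidate (not a confirmed) dynamic point.
    Both scans must be expressed in the same map frame.
    """
    previously_occupied = {voxel_key(p, voxel_size) for p in prev_scan}
    return [voxel_key(p, voxel_size) not in previously_occupied for p in curr_scan]


def static_points(prev_scan, curr_scan, voxel_size=0.5):
    """Keep only the points of `curr_scan` that are consistent with `prev_scan`."""
    flags = flag_dynamic_candidates(prev_scan, curr_scan, voxel_size)
    return [p for p, is_candidate in zip(curr_scan, flags) if not is_candidate]
```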

02 Motion prediction and tracking of dynamic objects

Techniques for predicting and tracking the movement of dynamic objects in LiDAR SLAM environments. These methods involve estimating object trajectories, velocity vectors, and future positions to maintain awareness of moving entities while performing simultaneous localization and mapping. Advanced algorithms use temporal data to create motion models that help autonomous systems anticipate the behavior of dynamic objects.

03 Semantic segmentation for dynamic object handling

Integration of semantic segmentation techniques in LiDAR SLAM to classify and handle dynamic objects. These approaches use machine learning to categorize point cloud data into semantic classes (vehicles, pedestrians, static structures), enabling the system to apply different processing strategies to each class. Semantic understanding allows for more intelligent filtering and integration of dynamic elements in the mapping process.

04 Multi-sensor fusion for robust dynamic object handling

Methods combining LiDAR with complementary sensors such as cameras, radar, and IMUs to enhance dynamic object handling in SLAM systems. Sensor fusion approaches leverage the strengths of different sensing modalities to improve detection reliability, tracking accuracy, and classification of moving objects. These techniques provide redundancy and enhanced perception capabilities in challenging environments with numerous dynamic elements.

05 Dynamic environment mapping and update strategies

Techniques for maintaining and updating maps in environments with frequent dynamic changes. These methods include probabilistic approaches to distinguish between temporary and permanent changes, efficient map update mechanisms, and strategies for handling semi-dynamic objects that move occasionally. The approaches enable SLAM systems to maintain accurate representations of changing environments while preserving computational efficiency.
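One widely used probabilistic approach to separating temporary from permanent changes is a log-odds occupancy update, sketched below for a single map cell. Each observation nudges the cell toward occupied or free, and clamping the value keeps the cell responsive, so a parked car that later drives away is eventually cleared. The sensor-model probabilities and clamp limits are illustrative assumptions, not values taken from any system described here.

```python
import math

# Inverse sensor model (assumed values): a hit suggests 70% occupied,
# a pass-through suggests 40% occupied.
L_OCC = math.log(0.7 / 0.3)
L_FREE = math.log(0.4 / 0.6)
# Clamping bounds limit how "certain" a cell can become, so it can still change.
L_MIN, L_MAX = -4.0, 4.0


def update_cell(log_odds, hit):
    """Fold one observation into a cell's log-odds occupancy value."""
    log_odds += L_OCC if hit else L_FREE
    return max(L_MIN, min(L_MAX, log_odds))


def probability(log_odds):
    """Convert log-odds back to an occupancy probability in [0, 1]."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))
```

Semi-dynamic objects can then be handled by thresholding: a cell is treated as static structure only after its probability has stayed high over many observations.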

Leading Companies in LiDAR SLAM Technology

The LiDAR SLAM dynamic object handling market is currently in a growth phase, with increasing adoption across robotics, autonomous vehicles, and smart city applications. The market size is projected to expand significantly as autonomous technologies mature, driven by demand for reliable navigation in dynamic environments. Technologically, the field shows varying maturity levels among key players. Companies like Intel, iRobot, and Qualcomm lead with advanced commercial implementations, while automotive manufacturers including Honda, Hyundai, and Kia are rapidly advancing their capabilities. Chinese academic institutions (Xi'an Jiaotong University, CASIA) and specialized firms like Beijing Shuzi Lutu Technology are making significant research contributions, particularly in segmentation algorithms and tracking methodologies. The integration of these technologies with AI and edge computing is accelerating development across the competitive landscape.

iRobot Corp.

Technical Solution: iRobot has pioneered a robust LiDAR SLAM system for their autonomous vacuum cleaners that specifically addresses the challenges of dynamic environments in residential settings. Their vSLAM (Visual Simultaneous Localization and Mapping) technology combines LiDAR data with visual information to create persistent maps while identifying and filtering out dynamic objects like pets, people, and moved furniture. The system employs a probabilistic approach to classify objects as either static or dynamic based on their movement patterns over time. For dynamic object handling, iRobot uses a multi-layered representation that maintains both short-term and long-term environmental models. Their re-localization algorithm incorporates temporal consistency checks that allow robots to recover positioning even after significant environmental changes, such as furniture rearrangement. This technology enables their robots to navigate efficiently around moving obstacles while maintaining accurate positioning.

Strengths: Highly optimized for consumer environments with extensive real-world testing; efficient implementation on resource-constrained hardware.

Weaknesses: Solution is specialized for indoor residential environments and may not scale well to larger outdoor spaces or highly dynamic scenarios.

Intel Corp.

Technical Solution: Intel has developed RealSense LiDAR technology integrated with their proprietary SLAM solutions that specifically addresses dynamic object handling challenges. Their approach combines depth sensing with advanced segmentation algorithms to identify and track moving objects in real-time. Intel's solution employs a multi-stage pipeline that first performs point cloud segmentation using deep learning models optimized for their hardware, then applies temporal consistency checks across frames to maintain object identity. For re-localization, they utilize a feature-based map representation that stores static environmental elements separately from dynamic objects, allowing the system to recognize previously visited locations even when the scene composition has changed significantly. Their implementation leverages Intel's computing architecture to accelerate processing, enabling deployment on various platforms from autonomous vehicles to mobile robots.

Strengths: Hardware-accelerated processing provides real-time performance on mainstream computing platforms; tight integration with Intel's broader AI ecosystem.

Weaknesses: Proprietary nature limits compatibility with third-party systems; higher power consumption compared to specialized SLAM processors.

Key Algorithms for Dynamic Environment Mapping

Object segmentation and feature tracking

Patent · Active · US12347114B2

Innovation

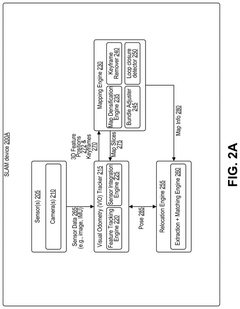

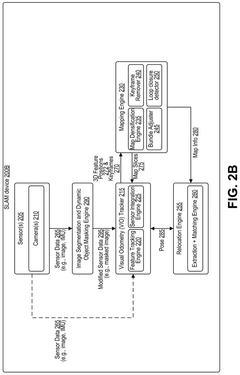

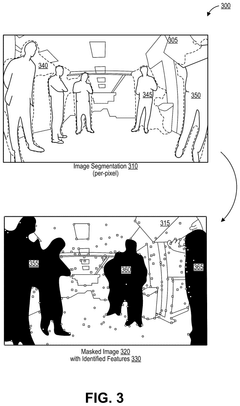

- The system processes images by identifying dynamic objects, generating a masked image by masking these objects, and using features from the masked image for feature tracking, mapping, localization, and relocation.

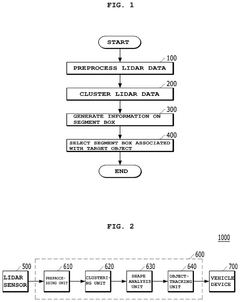

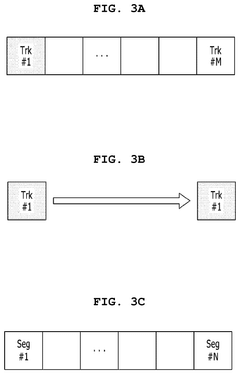

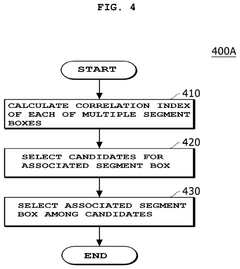

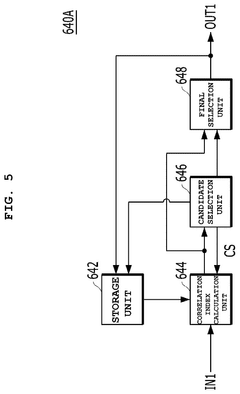
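The masking step summarized in the patent description above can be illustrated with a short sketch: given a binary mask marking pixels that belong to detected dynamic objects, only keypoints outside the mask are passed on to tracking and mapping. This is an illustration of the general idea only, not the patented implementation; the mask layout (rows of booleans), the (column, row) keypoint convention, and the function name are assumptions.

```python
def filter_keypoints(keypoints, dynamic_mask):
    """Keep keypoints that do not fall on a dynamic-object pixel.

    keypoints: list of (u, v) integer pixel coordinates, u = column, v = row.
    dynamic_mask: list of rows; dynamic_mask[v][u] is True where a dynamic
    object was detected. Keypoints outside the image bounds are dropped.
    """
    height = len(dynamic_mask)
    width = len(dynamic_mask[0]) if height else 0
    kept = []
    for u, v in keypoints:
        inside = 0 <= v < height and 0 <= u < width
        if inside and not dynamic_mask[v][u]:
            kept.append((u, v))
    return kept
```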

Method and device for tracking object using LiDAR sensor, vehicle including the device, and recording medium storing program to execute the method

Patent · Active · US12253602B2

Innovation

- A method and device for tracking objects using LiDAR sensors that involve clustering LiDAR data into segment boxes, calculating correlation indices between representative points, and selecting the appropriate segment box for tracking by assigning scores based on distance, reliability, and correlation, ensuring stable tracking performance.
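A score-based selection of this kind might look like the following sketch, which combines distance to the predicted track position, a reliability value, and a correlation value into a weighted sum and picks the highest-scoring segment box. The patent summary does not give the actual formula, so the weights, the normalization, the `SegmentBox` fields, and the function names are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class SegmentBox:
    center: tuple        # (x, y) of the box's representative point
    reliability: float   # assumed to be normalized to [0, 1]
    correlation: float   # assumed to be normalized to [0, 1]


def score(box, predicted, max_dist=3.0, w_dist=0.5, w_rel=0.2, w_corr=0.3):
    """Weighted score in [0, 1]; closer, more reliable, better-correlated boxes score higher."""
    distance = math.dist(box.center, predicted)
    distance_term = max(0.0, 1.0 - distance / max_dist)
    return w_dist * distance_term + w_rel * box.reliability + w_corr * box.correlation


def select_box(boxes, predicted):
    """Return the best-scoring box for a track, or None if there are no candidates."""
    return max(boxes, key=lambda b: score(b, predicted), default=None)
```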

Real-time Performance Optimization Strategies

Real-time performance optimization is critical for LiDAR SLAM systems handling dynamic objects, as these operations demand substantial computational resources while requiring low latency for practical applications. The primary challenge lies in balancing processing speed with accuracy, especially when performing complex tasks like dynamic object segmentation, tracking, and re-localization simultaneously.

One effective optimization strategy is a parallel processing architecture that distributes the computational load across multiple cores. Separating the dynamic object handling pipeline into independent threads (segmentation in one thread, tracking in another, and map updating in a third) lets the stages overlap in time. Reductions in processing time of up to 40% have been reported for this approach, although the gain depends on the platform and workload.
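The three-stage threaded layout can be sketched with bounded queues connecting the stages. The example shows the structure only: the stage functions are placeholders supplied by the caller, the queue depth is an arbitrary choice, and in CPython the global interpreter lock prevents pure-Python stages from running truly in parallel, so a production pipeline would typically use native code or processes for the heavy work.

```python
import queue
import threading


def stage(worker, inbox, outbox):
    """Run `worker` on every item from `inbox`, forwarding results to `outbox`."""
    while True:
        item = inbox.get()
        if item is None:              # sentinel: pass shutdown downstream and stop
            if outbox is not None:
                outbox.put(None)
            break
        result = worker(item)
        if outbox is not None:
            outbox.put(result)


def run_pipeline(frames, segment, track, update_map, depth=4):
    """Push frames through segmentation, tracking, and map-update threads in order."""
    q_seg = queue.Queue(maxsize=depth)   # bounded queues apply back-pressure
    q_trk = queue.Queue(maxsize=depth)
    q_map = queue.Queue(maxsize=depth)
    results = []
    threads = [
        threading.Thread(target=stage, args=(segment, q_seg, q_trk)),
        threading.Thread(target=stage, args=(track, q_trk, q_map)),
        threading.Thread(target=stage,
                         args=(lambda x: results.append(update_map(x)), q_map, None)),
    ]
    for t in threads:
        t.start()
    for frame in frames:
        q_seg.put(frame)
    q_seg.put(None)
    for t in threads:
        t.join()
    return results
```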

GPU acceleration represents another crucial optimization technique, particularly for segmentation algorithms that involve point cloud processing. Modern GPUs can process thousands of points simultaneously, making them ideal for computationally intensive clustering and classification operations. CUDA-based implementations of dynamic object segmentation have shown near real-time performance even with dense point clouds containing millions of points.

Algorithmic simplifications offer pragmatic performance gains when full accuracy isn't essential. For instance, implementing hierarchical processing where distant objects receive less computational attention than nearby ones can significantly reduce processing demands. Similarly, adaptive sampling techniques that adjust point cloud density based on scene complexity help maintain consistent frame rates regardless of environmental conditions.
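A simple form of range-dependent processing is to thin the point cloud more aggressively as distance from the sensor grows. The sketch below keeps every near point, every second mid-range point, and every fourth far point. The range bands and decimation factors are illustrative assumptions, and the points are assumed to be in the sensor frame so that horizontal range can be computed directly.

```python
import math


def range_adaptive_subsample(points, near=15.0, mid=40.0, mid_step=2, far_step=4):
    """Decimate a point cloud by horizontal range from the sensor origin.

    points: iterable of (x, y, z) tuples in the sensor frame.
    Points within `near` metres are all kept; points up to `mid` metres are
    kept one in `mid_step`; points beyond are kept one in `far_step`.
    """
    kept = []
    mid_seen = 0
    far_seen = 0
    for p in points:
        r = math.hypot(p[0], p[1])
        if r <= near:
            kept.append(p)
        elif r <= mid:
            if mid_seen % mid_step == 0:
                kept.append(p)
            mid_seen += 1
        else:
            if far_seen % far_step == 0:
                kept.append(p)
            far_seen += 1
    return kept
```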

Memory management optimizations are equally important, as inefficient data structures can create bottlenecks. Octree or voxel-based representations of point clouds reduce memory requirements and accelerate the neighborhood searches used during segmentation and tracking. Careful implementations of these data structures have been reported to speed up dynamic object processing pipelines by up to 3x.
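A hashed voxel grid is one of the simplest structures of this kind. In the sketch below, points are bucketed by integer voxel index, and a radius search only inspects the voxels that could contain a neighbor instead of scanning the whole cloud. The class and method names are illustrative, and an octree would add hierarchical levels on top of the same idea.

```python
import math
from collections import defaultdict
from itertools import product


class VoxelGrid:
    """Sparse voxel hash map for neighborhood queries on 3D points."""

    def __init__(self, voxel_size):
        self.size = voxel_size
        self.cells = defaultdict(list)

    def _key(self, point):
        return tuple(math.floor(c / self.size) for c in point)

    def insert(self, point):
        self.cells[self._key(point)].append(point)

    def radius_search(self, query, radius):
        """Return all stored points within `radius` of `query`."""
        reach = math.ceil(radius / self.size)  # voxels to scan in each direction
        kx, ky, kz = self._key(query)
        found = []
        for dx, dy, dz in product(range(-reach, reach + 1), repeat=3):
            # .get avoids creating empty buckets in the defaultdict
            for p in self.cells.get((kx + dx, ky + dy, kz + dz), ()):
                if math.dist(p, query) <= radius:
                    found.append(p)
        return found
```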

For embedded systems with limited resources, model compression techniques like pruning and quantization can make neural network-based segmentation approaches viable. These techniques reduce model size and computational requirements while maintaining acceptable accuracy, enabling deployment on autonomous vehicles and robots with constrained computing capabilities.
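Quantization can be illustrated with symmetric per-tensor int8 quantization of a weight list, one of the simplest schemes. Each weight is divided by a shared scale, rounded, and clamped to the int8 range; multiplying back by the scale recovers an approximation whose error is at most half a quantization step. This is a sketch of the arithmetic only, with hypothetical function names; real deployments rely on a framework's quantization tooling and usually calibrate activations as well.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to the range [-127, 127].

    Returns (quantized_values, scale) such that weight is approximately
    quantized_value * scale.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map int8 values back to approximate floating-point weights."""
    return [q * scale for q in quantized]
```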

Finally, predictive processing strategies that leverage temporal coherence between consecutive frames offer substantial performance benefits. By using motion prediction to initialize segmentation and tracking algorithms, systems can converge faster and require fewer iterations, resulting in lower per-frame processing times while maintaining robust dynamic object handling capabilities.
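Motion prediction for initialization can be sketched with an alpha-beta filter, a fixed-gain simplification of a constant-velocity Kalman filter. The predicted position gives the next frame's segmentation or association step a starting point, and each measurement corrects both position and velocity. The gains, the 2D state, and the names below are illustrative assumptions; a full Kalman filter would instead compute the gains from noise covariances.

```python
from dataclasses import dataclass


@dataclass
class Track:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def predict(track, dt):
    """Constant-velocity prediction of the track position `dt` seconds ahead."""
    return (track.x + track.vx * dt, track.y + track.vy * dt)


def update(track, measurement, dt, alpha=0.85, beta=0.3):
    """Correct the track in place with a new (x, y) measurement taken `dt` seconds later."""
    px, py = predict(track, dt)
    rx = measurement[0] - px          # residual between measurement and prediction
    ry = measurement[1] - py
    track.x = px + alpha * rx
    track.y = py + alpha * ry
    track.vx += beta * rx / dt
    track.vy += beta * ry / dt
    return track
```

The output of `predict` can seed the gate center for data association or the search region for segmentation in the next frame.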

Safety Standards for Autonomous Navigation Systems

The integration of autonomous navigation systems into various applications necessitates comprehensive safety standards to ensure reliable operation, particularly when handling dynamic objects through LiDAR SLAM technologies. Several international frameworks already address safety requirements for autonomous systems, including ISO 26262 for automotive functional safety and ISO 21448 (first issued as ISO/PAS 21448) for Safety of the Intended Functionality (SOTIF).

For LiDAR SLAM systems specifically handling dynamic objects, safety standards must address three critical components: segmentation accuracy, tracking reliability, and re-localization robustness. The European Union Agency for Cybersecurity (ENISA) has published guidelines requiring minimum detection rates of 99.9% for critical dynamic objects in autonomous navigation environments, with false positive rates below 0.01%.

Performance metrics defined by the National Highway Traffic Safety Administration (NHTSA) require autonomous navigation systems to demonstrate consistent tracking of dynamic objects across varying environmental conditions, including adverse weather and lighting scenarios. These standards specify a maximum permissible latency of 100 ms for object detection and classification to ensure timely response to potential hazards.

IEC 61508, the functional safety standard published by the International Electrotechnical Commission (IEC), provides a framework that has been adapted for autonomous navigation systems. Work under this framework relies on systematic failure analysis using methods such as Failure Mode and Effects Analysis (FMEA) and Fault Tree Analysis (FTA). These analyses must specifically address potential failures in dynamic object handling algorithms.

Recent updates to safety standards have incorporated specific provisions for re-localization capabilities, requiring systems to maintain positional accuracy within 10 cm even when 30% of the environment contains moving objects. UL 4600, the Standard for Safety for the Evaluation of Autonomous Products, explicitly addresses safety cases for dynamic environments, requiring manufacturers to demonstrate robust performance during simultaneous localization and mapping operations.

Compliance testing protocols established by Underwriters Laboratories (UL) require autonomous navigation systems to undergo rigorous validation in controlled environments with programmed dynamic object interactions. These tests must demonstrate the system's ability to maintain safe operation even when multiple dynamic objects move unpredictably within the sensor field of view.

As the technology evolves, safety standards are increasingly focusing on edge cases involving multiple interacting dynamic objects. The upcoming ISO/AWI 5083 standard specifically addresses safety requirements for autonomous navigation in complex dynamic environments, with proposed requirements for minimum separation distances and predictive collision avoidance capabilities based on trajectory forecasting of detected dynamic objects.

