LiDAR SLAM Feature Extraction: Edge/Plane Selection, Downsampling And Drift

SEP 19, 2025 | 9 MIN READ

LiDAR SLAM Evolution and Objectives

LiDAR SLAM technology has evolved significantly over the past two decades, transforming from experimental research concepts to practical applications in autonomous vehicles, robotics, and mapping systems. The evolution began with early 2D LiDAR SLAM systems in the early 2000s, which were primarily used for indoor robot navigation. These systems relied on simple feature extraction methods and had limited accuracy in complex environments.

The introduction of 3D LiDAR sensors around 2005-2010 marked a pivotal advancement, enabling more detailed environmental perception and more robust localization. During this period, scan registration algorithms such as ICP (Iterative Closest Point, introduced in the early 1990s) and NDT (Normal Distributions Transform) were extended to 3D point clouds and refined, establishing the foundation for modern LiDAR SLAM systems.

A significant breakthrough came with feature-based LiDAR SLAM, most notably LOAM (LiDAR Odometry and Mapping), introduced by Zhang and Singh in 2014. LOAM ranks the points of each scan line by local smoothness (a curvature-like measure), keeps the sharpest points as edge features and the smoothest as planar features, and registers scans using only these features, which made real-time odometry with high accuracy possible.
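LOAM's edge/plane selection step can be illustrated with a minimal NumPy sketch on one ordered scan line. It uses the smoothness value from the LOAM paper, c_i = ||Σ_j (X_i − X_j)|| / (|S| · ||X_i||) over the neighbors S of point i on the same ring. The window size, the thresholds, the counts and the helper names (`loam_smoothness`, `select_features`) are illustrative assumptions. The original system also splits each ring into sub-regions and rejects occluded or near-parallel points; this sketch omits both steps.

```python
import numpy as np


def loam_smoothness(scan_line: np.ndarray, half_window: int = 5) -> np.ndarray:
    """LOAM-style smoothness for each point of one ordered scan line (N x 3).

    c_i = ||sum_j (X_i - X_j)|| / (|S| * ||X_i||), where S holds the
    `half_window` neighbors on each side of point i along the ring.
    Points near either end get NaN because their neighborhood is incomplete.
    """
    n = len(scan_line)
    c = np.full(n, np.nan)
    for i in range(half_window, n - half_window):
        neighbors = np.vstack((scan_line[i - half_window:i],
                               scan_line[i + 1:i + 1 + half_window]))
        diff_sum = (scan_line[i] - neighbors).sum(axis=0)
        c[i] = np.linalg.norm(diff_sum) / (len(neighbors) * np.linalg.norm(scan_line[i]))
    return c


def select_features(c, n_edges=2, n_planes=4, edge_threshold=0.01, plane_threshold=1e-3):
    """Sharpest points become edge features, smoothest become planar features.

    Counts and thresholds are illustrative assumptions, not LOAM's settings.
    """
    valid = np.flatnonzero(~np.isnan(c))
    order = valid[np.argsort(c[valid])]  # ascending smoothness
    edges = [int(i) for i in order[::-1] if c[i] > edge_threshold][:n_edges]
    planes = [int(i) for i in order if c[i] < plane_threshold][:n_planes]
    return edges, planes


# Synthetic ring: a wall along +y meets a wall along +x at the corner (5, 0, 0).
wall_a = np.column_stack([np.full(11, 5.0), np.linspace(-1.0, 0.0, 11), np.zeros(11)])
wall_b = np.column_stack([np.linspace(5.1, 6.0, 10), np.zeros(10), np.zeros(10)])
line = np.vstack([wall_a, wall_b])
edges, planes = select_features(loam_smoothness(line), n_edges=1)
print(edges, sorted(planes))  # [10] [5, 15]: the corner is the edge point
```

The corner gets the largest smoothness value because its neighbors on the two walls pull the difference vector in two directions. Points in the middle of a straight wall segment have symmetric neighbors, so their value collapses to about zero.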

Recent years have witnessed the integration of deep learning techniques with traditional geometric methods, creating hybrid approaches that leverage the strengths of both paradigms. These systems demonstrate improved feature extraction capabilities, especially in challenging environments with limited geometric structure or dynamic elements.

Current LiDAR SLAM research targets several persistent challenges. First, improving feature extraction methods to better identify stable, distinctive features while effectively filtering noise and dynamic objects. Second, developing more efficient downsampling techniques that preserve critical structural information while reducing computational load. Third, minimizing drift accumulation over long trajectories through enhanced loop closure detection and global optimization strategies.

Additional objectives include enhancing robustness in diverse environments (urban, rural, indoor, outdoor), improving real-time performance on resource-constrained platforms, and developing more effective fusion strategies with complementary sensors such as cameras and IMUs. The ultimate goal is to create SLAM systems that maintain centimeter-level accuracy over extended operations while consuming minimal computational resources.

As the technology continues to mature, research is increasingly focused on edge cases and challenging scenarios, such as feature-poor environments, adverse weather conditions, and highly dynamic scenes. These advancements aim to enable reliable deployment of LiDAR SLAM in safety-critical applications like autonomous driving and industrial automation.

Market Analysis for LiDAR SLAM Applications

The LiDAR SLAM market is experiencing significant growth, driven by increasing applications in autonomous vehicles, robotics, and mapping technologies. The global LiDAR market was valued at approximately $1.8 billion in 2021 and is projected to reach $5.7 billion by 2027, with SLAM applications representing a substantial portion of this growth. This expansion is fueled by the rising demand for precise 3D mapping and navigation systems across various industries.

Autonomous vehicles represent the largest market segment for LiDAR SLAM technologies, with major automotive manufacturers and tech companies investing heavily in this field. The need for accurate feature extraction, efficient downsampling, and drift correction is particularly critical in this sector, as these factors directly impact vehicle safety and navigation reliability. Companies such as Waymo, along with traditional automakers, are continuously seeking improved SLAM solutions to enhance their autonomous driving capabilities.

The robotics industry constitutes another significant market for LiDAR SLAM applications, particularly in industrial automation, warehouse logistics, and service robots. The market for mobile robots utilizing SLAM technology is growing at approximately 15% annually, with feature extraction quality being a key differentiator among competing solutions. Robots require robust edge and plane detection algorithms to navigate complex environments effectively while minimizing computational resources.

In the construction and surveying sectors, LiDAR SLAM systems are increasingly replacing traditional surveying methods, offering faster and more accurate 3D mapping capabilities. The construction technology market incorporating these solutions is expanding at around 13% annually, with particular emphasis on solutions that can minimize drift in large-scale mapping operations.

Consumer electronics represents an emerging market for compact LiDAR SLAM systems, with applications in augmented reality, smart home devices, and personal robotics. This segment is expected to see the fastest growth over the next five years as miniaturization and cost reduction make these technologies more accessible to consumer applications.

Geographically, North America leads the market for LiDAR SLAM technologies, followed by Europe and Asia-Pacific. However, the Asia-Pacific region is expected to witness the highest growth rate due to increasing adoption in manufacturing, automotive production, and smart city initiatives, particularly in China, Japan, and South Korea.

Market challenges include the high cost of high-precision LiDAR sensors, computational demands of feature extraction algorithms, and the technical complexity of addressing drift in diverse environments. Companies that can deliver solutions addressing these challenges while maintaining competitive pricing are positioned to capture significant market share in this rapidly evolving landscape.

Current Challenges in Feature Extraction

Feature extraction in LiDAR SLAM systems faces several significant challenges that impact system performance, accuracy, and computational efficiency. One primary challenge is the effective selection of edge and plane features from point cloud data. While these geometric primitives are crucial for accurate mapping and localization, distinguishing meaningful edges and planes from noise remains difficult, especially in environments with complex structures or limited distinctive features.

Environmental factors substantially complicate feature extraction processes. Reflective surfaces, transparent objects, and varying weather conditions can create inconsistent point returns, leading to unstable feature detection. Dynamic objects in the scene further complicate extraction by introducing non-static elements that should ideally be filtered out to maintain mapping consistency.

Computational constraints present another major challenge. LiDAR sensors generate massive point clouds (often millions of points per second), making real-time processing extremely demanding. This necessitates efficient downsampling strategies that preserve critical geometric information while reducing data volume. However, current downsampling methods often struggle to maintain an optimal balance between computational efficiency and feature preservation.
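Most of these downsampling strategies start from a plain voxel grid filter. The minimal NumPy sketch below assumes an N x 3 array of points and replaces every point inside an occupied cube with the cube's centroid. This is the same rule PCL's `pcl::VoxelGrid` and Open3D's `voxel_down_sample` apply. The function name and the 0.2 m voxel size are illustrative choices.

```python
import numpy as np


def voxel_grid_downsample(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Replace all points that fall into the same cubic voxel by their centroid."""
    voxel_idx = np.floor(points / voxel_size).astype(np.int64)
    # Give every occupied voxel a compact id, then average the points per id.
    _, inverse, counts = np.unique(voxel_idx, axis=0, return_inverse=True,
                                   return_counts=True)
    inverse = inverse.reshape(-1)  # guard against a 2-D inverse in some NumPy versions
    sums = np.zeros((len(counts), points.shape[1]))
    np.add.at(sums, inverse, points)  # unbuffered accumulation per voxel id
    return sums / counts[:, None]


cloud = np.array([[0.05, 0.05, 0.0],
                  [0.15, 0.10, 0.0],   # same 0.2 m voxel as the first point
                  [0.90, 0.10, 0.0]])  # a different voxel
print(voxel_grid_downsample(cloud, voxel_size=0.2))  # two centroids remain
```

The centroid makes the output smoother than keeping an arbitrary member point. The trade-off is that a voxel straddling an edge yields a point that lies on neither surface, which is one motivation for feature-preserving variants.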

Sensor limitations also impact feature extraction quality. Issues such as limited range resolution, angular resolution, and measurement noise directly affect the precision of extracted features. These limitations become particularly problematic in long-range scenarios or when attempting to identify small but significant environmental features.

Drift is perhaps the most persistent challenge tied to feature extraction. As SLAM systems operate over time, small errors in feature matching accumulate and cause trajectory drift. Current feature extraction methods often fail to provide features that are distinctive and stable enough to prevent this accumulation, especially during extended operation or when revisiting previously mapped areas.
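A toy one-dimensional pose graph solved by linear least squares shows how a loop closure redistributes accumulated drift. This is a deliberate simplification. Real LiDAR SLAM back ends optimize 6-DoF poses with nonlinear solvers such as g2o, GTSAM or Ceres. The function name, the measurements and the weights here are hypothetical. In the scenario, the robot drives two steps forward and two steps back, every odometry step carries a +0.05 m bias, and a loop closure states that the last pose coincides with the first.

```python
import numpy as np


def optimize_pose_graph_1d(n_poses, edges):
    """Linear least-squares pose graph in 1-D; pose 0 is fixed at 0 (gauge).

    edges: list of (i, j, measured x_j - x_i, weight), weight = 1 / variance.
    """
    A = np.zeros((len(edges), n_poses - 1))
    b = np.zeros(len(edges))
    for row, (i, j, meas, weight) in enumerate(edges):
        w = np.sqrt(weight)  # whitening: scale each residual by sqrt(information)
        if j > 0:
            A[row, j - 1] += w
        if i > 0:
            A[row, i - 1] -= w
        b[row] = w * meas
    x_free, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.concatenate([[0.0], x_free])


# Two steps forward, two steps back; every measured step is biased by +0.05 m.
odometry = [(0, 1, 1.05, 1.0), (1, 2, 1.05, 1.0), (2, 3, -0.95, 1.0), (3, 4, -0.95, 1.0)]
loop_closure = (0, 4, 0.0, 1.0)  # scan matching says pose 4 is back at pose 0
dead_reckoning = np.concatenate([[0.0], np.cumsum([m for _, _, m, _ in odometry])])
optimized = optimize_pose_graph_1d(5, odometry + [loop_closure])
print(dead_reckoning)  # final pose is off by 0.2 m
print(optimized)       # approximately [0, 1.01, 2.02, 1.03, 0.04]
```

With equal weights, each of the five constraints absorbs an equal 0.04 m share of the 0.2 m inconsistency. Giving the loop closure a larger weight (a smaller variance) pulls the last pose closer to the start.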

Registration accuracy between consecutive point clouds heavily depends on the quality and distinctiveness of extracted features. In feature-poor environments like long corridors or open spaces, the scarcity of unique geometric elements makes robust registration particularly challenging, often resulting in increased drift.
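The corridor problem can be checked numerically. In point-to-plane registration, each plane constrains translation only along its normal n. The eigenvalues of Σ n_k n_kᵀ therefore reveal directions that a scan cannot observe. The sketch below is a simplified illustration that ignores rotation. `translation_degeneracy` and the `eps` ratio are hypothetical names and values.

```python
import numpy as np


def translation_degeneracy(normals: np.ndarray, eps: float = 1e-3):
    """Eigen-analysis of the translational information sum_k n_k n_k^T.

    Returns the eigenvalues (ascending) and the eigenvectors whose eigenvalue is
    below `eps` times the largest one, i.e. directions the planes barely constrain.
    """
    n = normals / np.linalg.norm(normals, axis=1, keepdims=True)
    info = n.T @ n  # 3x3, equal to the sum of outer products n_k n_k^T
    eigvals, eigvecs = np.linalg.eigh(info)  # eigh sorts eigenvalues ascending
    weak = eigvecs[:, eigvals / eigvals[-1] < eps]
    return eigvals, weak


# Corridor along x: two walls (normals +-y), floor and ceiling (normals +-z).
corridor = np.array([[0, 1, 0]] * 50 + [[0, -1, 0]] * 50
                    + [[0, 0, 1]] * 50 + [[0, 0, -1]] * 50, dtype=float)
room = np.vstack([corridor, [[1.0, 0.0, 0.0]] * 10])  # add an end wall
print(translation_degeneracy(corridor)[1].ravel())  # +-[1, 0, 0]: x is unobservable
print(translation_degeneracy(room)[1].shape)        # (3, 0): no weak direction
```

In the corridor, no normal has an x component, so motion along the corridor leaves every point-to-plane residual unchanged. A few points on an end wall are enough to make that direction observable again.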

The trade-off between local and global consistency presents additional difficulties. Features that work well for frame-to-frame registration may not be optimal for loop closure detection, requiring sophisticated multi-scale feature extraction approaches that can simultaneously support both local tracking and global mapping functions.

Mainstream Feature Extraction Approaches

01 Edge and plane feature extraction techniques in LiDAR SLAM

Various methods are employed to extract edge and plane features from LiDAR point clouds to improve SLAM accuracy. These techniques involve identifying geometric primitives such as lines, edges, and planar surfaces from raw point cloud data. The extraction process typically includes segmentation algorithms that classify points based on their local geometric properties, curvature analysis to identify edges, and region growing methods to detect planar surfaces. These extracted features serve as reliable landmarks for registration and mapping in SLAM systems.

- Edge and plane feature extraction techniques in LiDAR SLAM: Various methods are employed to extract edge and plane features from LiDAR point clouds to improve SLAM accuracy. These techniques identify distinctive geometric structures such as sharp edges and flat surfaces by analyzing local point distributions and curvature characteristics. Advanced algorithms can classify points as edge or planar features based on eigenvalue analysis of local neighborhoods, enabling more robust feature matching and registration between consecutive scans.

- Point cloud downsampling strategies for efficient processing: Downsampling techniques reduce the density of LiDAR point clouds while preserving critical structural information. Methods include voxel grid filtering, which divides space into cubic volumes and represents points within each voxel by their centroid, and random sampling approaches that maintain feature distribution. Adaptive downsampling strategies adjust sampling density based on local geometric complexity, preserving more points in feature-rich areas while aggressively reducing points in homogeneous regions.

- Drift compensation and loop closure detection: Drift accumulation is addressed through various error correction mechanisms including loop closure detection and global optimization. When revisiting previously mapped areas, the system identifies matching features to correct accumulated errors. Global optimization techniques like pose graph optimization and bundle adjustment redistribute accumulated errors throughout the trajectory. Some approaches incorporate IMU data fusion or visual information to constrain drift, while others employ machine learning to predict and compensate for systematic errors.

- Feature matching and registration algorithms: Advanced algorithms match extracted features between consecutive scans to estimate relative motion. Iterative Closest Point (ICP) variants optimize point-to-point or point-to-plane correspondences, while RANSAC-based methods filter outliers during registration. Feature descriptors capture local geometric information to facilitate robust matching even under partial observations. Some approaches employ probabilistic frameworks to handle uncertainty in feature correspondence and transformation estimation.

- Multi-sensor fusion for enhanced SLAM performance: Integration of LiDAR with complementary sensors improves SLAM robustness and accuracy. IMU data provides short-term motion estimates to bridge gaps between LiDAR scans and helps maintain orientation during rapid movements. Camera fusion enables visual-LiDAR SLAM systems that leverage both geometric and visual features. Some approaches incorporate GPS/GNSS for global positioning constraints or wheel odometry for improved trajectory estimation in ground vehicles, creating a more reliable and drift-resistant navigation solution.
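The eigenvalue analysis of local neighborhoods can be sketched with the common dimensionality features. Let λ1 ≥ λ2 ≥ λ3 be the covariance eigenvalues of a point's neighborhood. Linearity (λ1 − λ2)/λ1 is high on edges, planarity (λ2 − λ3)/λ1 is high on planes, and scattering λ3/λ1 is high on unstructured clutter. Labeling a neighborhood by its largest feature is a simplifying assumption, as are the helper names; practical systems tune thresholds per sensor.

```python
import numpy as np

LABELS = {"linearity": "edge", "planarity": "plane", "scattering": "scatter"}


def eigen_features(neighborhood: np.ndarray) -> dict:
    """Dimensionality features from the neighborhood covariance (l1 >= l2 >= l3)."""
    l3, l2, l1 = np.linalg.eigvalsh(np.cov(neighborhood.T))  # eigvalsh: ascending
    return {
        "linearity": (l1 - l2) / l1,
        "planarity": (l2 - l3) / l1,
        "scattering": l3 / l1,
    }


def classify(neighborhood: np.ndarray) -> str:
    """Label a neighborhood by its dominant feature (a simplifying assumption)."""
    feats = eigen_features(neighborhood)
    return LABELS[max(feats, key=feats.get)]


g = np.linspace(0.0, 1.0, 5)
line = np.column_stack([g, np.zeros(5), np.zeros(5)])
gx, gy = np.meshgrid(g, g)
plane = np.column_stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)])
cube = np.array(np.meshgrid(g, g, g)).reshape(3, -1).T
print(classify(line), classify(plane), classify(cube))  # edge plane scatter
```

The three features always sum to 1, so they can be read as soft memberships rather than independent scores.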

02 Point cloud downsampling strategies for efficient processing

Downsampling techniques reduce the density of LiDAR point clouds while preserving critical structural information. Common methods include voxel grid filtering, which divides the space into 3D cubes and represents points within each voxel by their centroid, random sampling to select a subset of points, and feature-preserving downsampling that prioritizes points on edges and corners. These approaches significantly decrease computational load and memory requirements while maintaining sufficient information for accurate feature extraction and registration in SLAM systems.

03 Drift compensation and loop closure methods

Drift accumulation is addressed through various compensation techniques in LiDAR SLAM systems. These include integration with IMU data for motion prediction, implementation of loop closure detection algorithms that identify previously visited locations, and global optimization methods such as pose graph optimization and bundle adjustment. Advanced systems employ machine learning approaches to recognize revisited areas and correct accumulated errors. These methods collectively minimize drift and ensure consistent mapping over extended operation periods.

04 Multi-sensor fusion for enhanced SLAM performance

Integrating LiDAR with complementary sensors improves SLAM robustness and accuracy. Sensor fusion approaches combine LiDAR data with information from cameras, IMUs, GPS, and radar systems. These methods typically employ probabilistic frameworks like Kalman filters or factor graphs to optimally merge different sensor inputs. The fusion process helps overcome individual sensor limitations, enhances feature extraction reliability, reduces drift, and enables operation across diverse environmental conditions.

05 Real-time optimization techniques for LiDAR SLAM

Real-time performance in LiDAR SLAM is achieved through various optimization strategies. These include parallel processing architectures that distribute computational tasks across multiple cores, GPU acceleration for point cloud processing, incremental mapping approaches that update only changed regions, and adaptive feature selection algorithms that adjust extraction parameters based on environmental complexity. Additionally, efficient data structures like k-d trees and octrees enable fast spatial queries and nearest neighbor searches, significantly improving registration and feature matching speed.

Key Industry Players and Research Groups

The LiDAR SLAM feature extraction market is currently in a growth phase, with increasing adoption across autonomous driving, robotics, and mapping applications. The market is projected to expand significantly as key players like Intel, Qualcomm, and Velodyne Lidar drive technological advancement. Technology maturity varies across applications: edge/plane selection and downsampling techniques are becoming more standardized, while drift correction remains challenging. Leading companies including Hesai Technology and Velodyne are focusing on hardware optimization, while Intel and Qualcomm are developing integrated software solutions. Academic institutions like Southeast University and Harbin Engineering University are contributing fundamental research on drift, creating a competitive ecosystem that balances commercial implementation with ongoing research.

Hesai Technology Co. Ltd.

Technical Solution: Hesai has developed advanced LiDAR SLAM feature extraction techniques focusing on adaptive edge and plane selection algorithms. Their approach implements multi-resolution voxel-based downsampling that dynamically adjusts voxel size based on distance from the sensor, preserving more details in nearby regions while efficiently reducing point density in distant areas. Hesai's proprietary drift compensation system combines IMU data with loop closure detection, employing graph optimization techniques to distribute accumulated errors across the entire trajectory. Their PandarXT series incorporates these technologies with real-time feature classification that distinguishes between stable environmental features and dynamic objects, significantly improving mapping consistency in dynamic environments. The system achieves sub-centimeter accuracy in feature extraction while maintaining processing speeds compatible with 20Hz scan rates on embedded platforms.
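The text does not detail Hesai's implementation, so the following is only a generic sketch of distance-dependent voxel downsampling under assumed parameters. Points are binned into range bands (here 0 to 10 m, 10 to 30 m, and beyond 30 m), and each band is voxelized with its own voxel size. `range_adaptive_downsample`, the band edges and the voxel sizes are all hypothetical.

```python
import numpy as np


def range_adaptive_downsample(points, band_edges=(10.0, 30.0), voxel_sizes=(0.1, 0.3, 0.6)):
    """Voxel-grid downsampling with a voxel size that grows with sensor range.

    Assumed bands: [0, 10) m -> 0.1 m voxels, [10, 30) m -> 0.3 m, >= 30 m -> 0.6 m.
    Each band is voxelized separately and replaced by per-voxel centroids.
    """
    band = np.digitize(np.linalg.norm(points, axis=1), band_edges)  # 0, 1 or 2
    out = []
    for b, size in enumerate(voxel_sizes):
        pts = points[band == b]
        if len(pts) == 0:
            continue
        keys = np.floor(pts / size).astype(np.int64)
        _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
        inverse = inverse.reshape(-1)  # guard against a 2-D inverse in some NumPy versions
        sums = np.zeros((len(counts), points.shape[1]))
        np.add.at(sums, inverse, pts)
        out.append(sums / counts[:, None])
    return np.vstack(out) if out else np.empty((0, points.shape[1]))


pts = np.array([[1.02, 0, 0], [1.07, 0, 0], [40.9, 0, 0], [41.3, 0, 0]], dtype=float)
print(len(range_adaptive_downsample(pts)))                              # 2
print(len(range_adaptive_downsample(pts, voxel_sizes=(0.1, 0.1, 0.1))))  # 3
```

The far pair merges only under the coarse far-range voxel. This mirrors the idea that distant returns are already sparse and noisy, so keeping each one individually buys little accuracy.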

Strengths: Superior feature classification in dynamic environments, efficient multi-resolution downsampling that preserves critical geometric information, and robust drift compensation through sophisticated loop closure. Weaknesses: Higher computational requirements compared to simpler approaches, potential challenges in extremely feature-sparse environments, and dependency on complementary sensors for optimal drift correction.

Velodyne Lidar, Inc.

Technical Solution: Velodyne has pioneered a comprehensive LiDAR SLAM solution centered around their proprietary "Intelligent Feature Extraction" (IFE) technology. Their approach implements adaptive edge and plane feature selection using principal component analysis to evaluate local geometric properties of point clouds. The system employs a multi-stage downsampling strategy that begins with statistical outlier removal followed by curvature-aware voxel grid filtering, preserving sharp features while aggressively downsampling planar regions. To address drift issues, Velodyne integrates tightly-coupled IMU fusion with their "VeloSLAM" algorithm that incorporates both feature-based and direct registration methods. Their HDL-64E-based implementation reportedly includes real-time drift prediction and compensation that analyzes historical trajectory data to anticipate and correct systematic errors before they accumulate. The system is reported to achieve 30% better feature preservation compared to standard voxel grid approaches while reducing computational load by approximately 40%.
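Statistical outlier removal, the first stage of that pipeline, is a standard filter (PCL's `pcl::StatisticalOutlierRemoval`, Open3D's `remove_statistical_outlier`). The brute-force NumPy sketch below follows the same rule: a point is dropped when its mean distance to its k nearest neighbors exceeds the cloud-wide mean of that quantity by more than `std_ratio` standard deviations. It is not Velodyne's implementation. The function name and the defaults k = 8 and `std_ratio` = 1.0 are assumptions.

```python
import numpy as np


def statistical_outlier_removal(points: np.ndarray, k: int = 8, std_ratio: float = 1.0):
    """Drop points whose mean k-NN distance is unusually large (brute force, O(n^2))."""
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)  # a point is not its own neighbor
    mean_knn = np.sort(dist, axis=1)[:, :k].mean(axis=1)
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return points[mean_knn <= threshold]


g = np.linspace(0.0, 0.4, 5)
grid = np.array(np.meshgrid(g, g, g)).reshape(3, -1).T  # 125 points, 0.1 m spacing
cloud = np.vstack([grid, [[10.0, 10.0, 10.0]]])          # plus one stray return
print(len(statistical_outlier_removal(cloud)))           # 125
```

The full distance matrix limits this version to small clouds. Library implementations answer the k-nearest-neighbor queries with a k-d tree instead.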

Strengths: Exceptional feature preservation in complex environments, computationally efficient implementation suitable for real-time applications, and sophisticated drift prediction capabilities. Weaknesses: Requires careful parameter tuning for optimal performance across different environments, higher initial cost compared to some competitors, and occasional challenges with feature matching in repetitive environments.

Critical Patents in Edge/Plane Detection

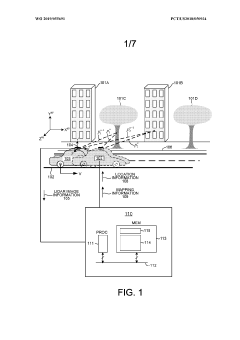

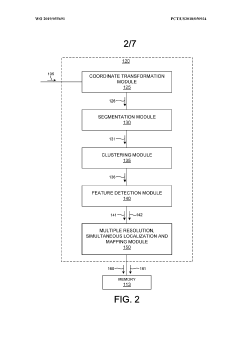

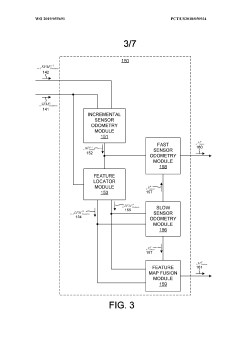

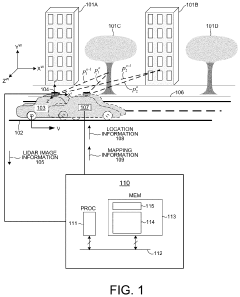

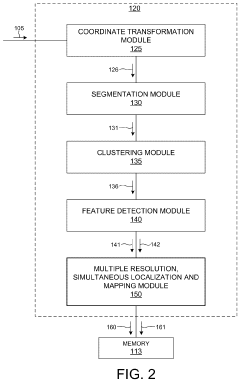

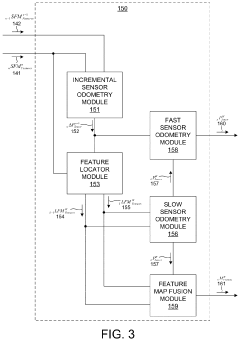

Multiple resolution, simultaneous localization and mapping based on 3-d lidar measurements

Patent WO2019055691A1

Innovation

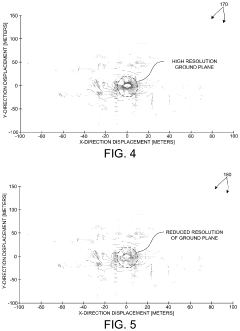

- The method involves segmenting and clustering LIDAR image frames to reduce redundant data, focusing high-resolution data on regions of interest and using low-resolution data for other areas, allowing for efficient feature detection and accurate SLAM analysis, enabling real-time mapping and localization in dynamic environments.

Multiple resolution, simultaneous localization and mapping based on 3-D LIDAR measurements

Patent US11821987B2 (Active)

Innovation

- The method involves segmenting and clustering LIDAR image frames before feature detection, reducing redundant data and computational complexity, and using multiple resolution SLAM to maintain accuracy and efficiency for autonomous vehicle applications.

Computational Efficiency Optimization

Computational efficiency remains a critical challenge in LiDAR SLAM systems, particularly in feature extraction processes involving edge/plane selection and downsampling. Current implementations often struggle with real-time performance constraints, especially on resource-limited platforms such as mobile robots, autonomous vehicles, and UAVs. The computational burden primarily stems from the massive point cloud data generated by modern LiDAR sensors, which can produce millions of points per second.

Traditional feature extraction methods typically process the entire point cloud, resulting in unnecessary computations for regions with minimal information content. Recent optimization approaches have focused on adaptive processing strategies that allocate computational resources based on the information density within different regions of the point cloud. These methods dynamically adjust the sampling density according to the geometric complexity of the environment, applying finer sampling in feature-rich areas while coarsening the sampling in homogeneous regions.
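One simple way to implement this kind of complexity-aware sampling is to score each point by its surface variation λ3/(λ1 + λ2 + λ3) over its k nearest neighbors. Every point scored as curved or edge-like is kept, and the flat points are thinned with a fixed stride. This is a sketch under illustrative assumptions: the threshold, the stride and the function names are hypothetical, and the all-pairs distance matrix limits it to small clouds.

```python
import numpy as np


def surface_variation(points: np.ndarray, k: int = 10) -> np.ndarray:
    """l3 / (l1 + l2 + l3) of each point's k-neighborhood covariance (~0 on flat areas)."""
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    neighbors = np.argsort(dist, axis=1)[:, :k]  # brute force; includes the point itself
    sv = np.empty(len(points))
    for i, nb in enumerate(neighbors):
        eig = np.linalg.eigvalsh(np.cov(points[nb].T))  # ascending
        sv[i] = eig[0] / eig.sum()
    return sv


def adaptive_downsample(points, k=10, flat_threshold=0.01, flat_stride=4):
    """Keep every curved or edge-like point; keep only every `flat_stride`-th flat point."""
    sv = surface_variation(points, k)
    curved = np.flatnonzero(sv >= flat_threshold)
    flat = np.flatnonzero(sv < flat_threshold)[::flat_stride]
    return points[np.sort(np.concatenate([curved, flat]))]


g = 0.1 * np.arange(10)
gx, gy = np.meshgrid(g, g)
plane = np.column_stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)])  # 100 flat points
cube = np.array(np.meshgrid(*[np.arange(4.0)] * 3)).reshape(3, -1).T  # 64 points, 3-D
print(len(adaptive_downsample(plane)), len(adaptive_downsample(cube)))  # 25 64
```

The flat patch keeps a quarter of its points, while the volumetric cluster keeps every point. This is the kind of budget shift toward feature-rich regions that the paragraph describes.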

Parallel computing architectures have demonstrated significant performance improvements in LiDAR SLAM systems. GPU-accelerated implementations of feature extraction algorithms have shown speed improvements of 5-10x compared to CPU-only implementations. FPGA-based solutions offer even lower latency for specific operations, though with less flexibility. The industry trend is moving toward heterogeneous computing models that leverage the strengths of different processing units for various stages of the SLAM pipeline.

Memory access patterns significantly impact computational efficiency in point cloud processing. Cache-friendly data structures, such as octrees and k-d trees, have been optimized to improve spatial locality during feature extraction. These structures reduce memory latency by organizing point cloud data to maximize cache hits during neighborhood searches, a frequent operation in edge and plane feature detection.

Algorithmic improvements have also contributed to efficiency gains. Spatial indexes such as k-d trees, and approximate nearest neighbor libraries built on them such as FLANN, reduce the cost of the neighborhood queries for a whole scan from O(n²) with brute-force search to roughly O(n log n), or less when approximate answers are acceptable. Additionally, incremental processing techniques that reuse previous computation results have shown promise in reducing redundant calculations between consecutive frames.
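The source of the logarithmic query cost can be seen in a minimal exact k-d tree. Each node splits on one coordinate at the median. A query descends toward the query point and visits the other branch only when the splitting plane is closer than the best match found so far. This is an educational sketch with hypothetical names. FLANN, nanoflann, PCL's `pcl::KdTreeFLANN` and SciPy's `scipy.spatial.cKDTree` are production-grade options.

```python
import numpy as np


class KDTree:
    """Minimal exact k-d tree for nearest-neighbor queries (educational sketch)."""

    def __init__(self, points: np.ndarray):
        self.points = points
        self.root = self._build(np.arange(len(points)), depth=0)

    def _build(self, idx, depth):
        if len(idx) == 0:
            return None
        axis = depth % self.points.shape[1]  # cycle through x, y, z
        # Re-sorting at every level makes construction O(n log^2 n); fine for a sketch.
        idx = idx[np.argsort(self.points[idx, axis])]
        mid = len(idx) // 2  # median point becomes the splitting node
        return (idx[mid], axis,
                self._build(idx[:mid], depth + 1),
                self._build(idx[mid + 1:], depth + 1))

    def nearest(self, query: np.ndarray):
        best = [-1, np.inf]  # [point index, squared distance]

        def search(node):
            if node is None:
                return
            i, axis, left, right = node
            d2 = float(np.sum((self.points[i] - query) ** 2))
            if d2 < best[1]:
                best[0], best[1] = int(i), d2
            delta = query[axis] - self.points[i, axis]
            near, far = (left, right) if delta < 0 else (right, left)
            search(near)
            if delta ** 2 < best[1]:  # the far side can only win if the plane is closer
                search(far)

        search(self.root)
        return best[0], float(np.sqrt(best[1]))


rng = np.random.default_rng(0)
cloud = rng.uniform(-10.0, 10.0, size=(2000, 3))
tree = KDTree(cloud)
query = np.array([0.5, -1.0, 2.0])
idx, dist = tree.nearest(query)
print(np.isclose(dist, np.linalg.norm(cloud - query, axis=1).min()))  # True
```

The pruning test is what separates this from brute force. Most subtrees are skipped because their splitting plane lies farther away than the current best match.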

Early termination strategies and multi-resolution approaches provide further optimization opportunities. With progressive processing that stops once enough features have been extracted to meet accuracy requirements, these methods avoid unnecessary computation while maintaining system robustness. Because computation is concentrated on the most informative features, this approach can also help limit drift.

Multi-sensor Fusion Strategies

Multi-sensor fusion represents a critical advancement in LiDAR SLAM systems, addressing the inherent limitations of single-sensor approaches. The integration of LiDAR with complementary sensors such as cameras, IMUs (Inertial Measurement Units), and GNSS (Global Navigation Satellite Systems) creates robust perception systems that significantly mitigate feature extraction challenges and drift issues.

LiDAR-camera fusion strategies leverage the complementary strengths of both modalities. LiDAR provides precise depth measurements crucial for edge and plane feature extraction. As an active sensor, it is also largely unaffected by ambient lighting. Cameras contribute rich texture and color information that LiDAR cannot capture, although image appearance is sensitive to illumination changes. This combination enhances feature tracking reliability, particularly in environments where geometric features alone may be insufficient for accurate localization.
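Before image texture can be attached to LiDAR points, each point must be mapped into the image. The sketch below assumes an already-undistorted pinhole camera with intrinsics (fx, fy, cx, cy) and a known LiDAR-to-camera extrinsic rotation R and translation t. The calibration values in the usage example are made up for illustration.

```python
def project_points(points, R, t, fx, fy, cx, cy, width, height):
    """Project LiDAR points into a pinhole image.

    Returns (point_index, u, v, depth) for every point that lands inside the image.
    """
    out = []
    for i, p in enumerate(points):
        # Transform into the camera frame: p_cam = R * p_lidar + t
        xc, yc, zc = (sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))
        if zc <= 0.0:                 # behind the camera: no valid projection
            continue
        u = fx * xc / zc + cx         # pinhole model, no lens distortion
        v = fy * yc / zc + cy
        if 0.0 <= u < width and 0.0 <= v < height:
            out.append((i, u, v, zc))
    return out
```

Suppose the LiDAR uses x forward, y left, z up and the camera uses x right, y down, z forward. Then R = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]. A point 10 m straight ahead projects to the principal point (cx, cy), and a point 1 m to its left moves toward smaller u.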

The integration of IMU data with LiDAR scans offers substantial benefits for motion estimation between frames. High-frequency IMU measurements help maintain tracking continuity during rapid movements or when passing through feature-sparse regions. They can also be used to correct the motion distortion of points captured at different times within one LiDAR sweep. Tightly coupled LiDAR-IMU fusion has demonstrated significant reductions in drift accumulation over extended trajectories. It is formulated either as factor graph optimization (e.g., LIO-SAM) or as Kalman filter variants (e.g., the iterated extended Kalman filter in FAST-LIO).
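One concrete use of IMU data within a frame is deskewing. Each point is stamped with its capture time and re-expressed in the sensor frame at the start of the sweep. The sketch below makes strong simplifying assumptions: planar motion, a constant yaw rate taken from the gyroscope, and a constant velocity expressed in the start frame. Full LiDAR-inertial systems integrate 3D IMU measurements instead. The function name is hypothetical.

```python
import math


def deskew_planar(points, times, yaw_rate, velocity):
    """Express each point in the sensor frame at sweep start (t = 0).

    points:   (x, y, z) measured in the sensor frame at each point's own capture time
    times:    capture times in seconds relative to the sweep start
    yaw_rate: gyroscope z-axis rate in rad/s, assumed constant over the sweep
    velocity: (vx, vy) in the start frame, assumed constant over the sweep
    """
    out = []
    for (x, y, z), t in zip(points, times):
        yaw = yaw_rate * t                          # heading change since sweep start
        c, s = math.cos(yaw), math.sin(yaw)
        tx, ty = velocity[0] * t, velocity[1] * t   # translation since sweep start
        # p_start = R(yaw) * p_sensor + translation
        out.append((c * x - s * y + tx, s * x + c * y + ty, z))
    return out
```

Consider a static point 10 m ahead that is measured 0.1 s into a sweep while the sensor rotates at 0.5 rad/s. It appears rotated by -0.05 rad in the raw scan, and deskewing moves it back to (10, 0).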

Downsampling strategies benefit considerably from multi-sensor approaches. Adaptive downsampling techniques can prioritize points based on their information content across different sensor modalities. For instance, points corresponding to visually distinctive features may be preserved even in geometrically uniform regions, ensuring balanced feature distribution throughout the environment.
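A minimal sketch of modality-aware downsampling is shown below. It assumes each point already carries a boolean flag from some image-based detector, for example set after projecting the point into the camera image. A plain voxel filter keeps one point per voxel, and flagged points are always retained. The flag source, leaf size and function name are hypothetical.

```python
import math


def downsample_with_priority(points, distinctive, leaf=0.5):
    """Keep one point per voxel, plus every point flagged as visually distinctive.

    distinctive: list of bools, same length as points (hypothetical camera-derived flags).
    Returns the indices of the kept points.
    """
    kept, occupied = [], set()
    for i, p in enumerate(points):
        key = tuple(math.floor(c / leaf) for c in p)
        if distinctive[i]:
            kept.append(i)        # preserved even in a geometrically uniform voxel
            occupied.add(key)     # and it serves as that voxel's representative
        elif key not in occupied:
            occupied.add(key)
            kept.append(i)
    return kept
```

On a flat patch that fits in a single voxel, the geometric filter alone keeps one point. A visually distinctive point in the same patch survives as well.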

Edge and plane selection processes become more discriminative when incorporating multi-sensor data. Feature quality metrics can be enhanced by considering both geometric stability from LiDAR and appearance consistency from visual sensors. This multi-modal validation improves feature persistence across frames and reduces the likelihood of tracking failures.
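As an illustration of combining the two cues, the sketch below uses zero-mean normalized cross-correlation (NCC) of image patches around a feature in consecutive frames as the appearance-consistency term. It then gates and weights a geometric stability score computed elsewhere from LiDAR. The specific combination rule, the 0.8 threshold and the function names are assumptions for illustration, not a published metric.

```python
import math


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-length intensity patches, in [-1, 1]."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den > 0 else 0.0


def feature_quality(geometric_stability, patch_prev, patch_curr, min_ncc=0.8):
    """Hypothetical score: LiDAR stability in [0, 1], gated and weighted by appearance consistency."""
    appearance = ncc(patch_prev, patch_curr)
    if appearance < min_ncc:
        return 0.0    # appearance changed too much between frames: reject the feature
    return geometric_stability * appearance
```

NCC is invariant to affine brightness changes. A uniformly brighter patch such as [11, 12, 13, 14] versus [1, 2, 3, 4] still scores 1.0, which partially addresses the camera's sensitivity to illumination.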

Recent research has explored deep learning approaches for sensor fusion, where neural networks learn optimal feature extraction and fusion strategies directly from multi-sensor data. These methods show promise in automatically identifying the most reliable features across different sensor types and environmental conditions, potentially outperforming hand-crafted feature selection algorithms.

Loop closure detection, critical for drift correction, benefits substantially from multi-sensor fusion. Visual place recognition matches the current camera view against previously seen places by appearance. It can therefore identify a revisited location even when accumulated drift has made the estimated pose too inaccurate for a purely geometric search around it. A confirmed loop closure then triggers an optimization that redistributes the accumulated error along the trajectory and restores global consistency.
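How an optimizer redistributes error can be seen in the simplest possible pose graph. Consider a translation-only chain x0 ... xn with a single loop-closure constraint between x0 and xn. Minimizing Σ rᵢ²/σᵢ² + r_loop²/σ_loop² per axis has a closed form: each odometry edge absorbs a share of the loop discrepancy proportional to its variance. This is only a sketch under strong assumptions (no rotation, one loop, independent axes). Real systems solve nonlinear pose graphs with libraries such as g2o, GTSAM or Ceres Solver.

```python
def close_loop(odometry, loop, odo_var, loop_var):
    """Least-squares positions for a chain x0..xn with one loop constraint x_n - x_0 = loop.

    odometry: list of (dx, dy) displacements between consecutive poses
    loop:     (dx, dy) displacement from the first to the last pose, measured by loop closure
    odo_var:  variance of each odometry edge
    loop_var: variance of the loop-closure measurement
    """
    total_var = loop_var + sum(odo_var)
    # Loop discrepancy per axis: what odometry says minus what the loop closure says
    ex = sum(d[0] for d in odometry) - loop[0]
    ey = sum(d[1] for d in odometry) - loop[1]
    positions = [(0.0, 0.0)]           # x0 is fixed at the origin to remove the gauge freedom
    x, y = 0.0, 0.0
    for (dx, dy), var in zip(odometry, odo_var):
        share = var / total_var        # noisier edges absorb more of the accumulated error
        x += dx - share * ex
        y += dy - share * ey
        positions.append((x, y))
    return positions
```

In the following example, four 1 m odometry steps disagree with a loop measurement of 3.5 m. With equal variances, each of the five edges ends up with the same 0.1 m residual, and the chain becomes 0, 0.9, 1.8, 2.7, 3.6.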

The computational demands of processing multiple sensor streams remain a significant challenge, necessitating efficient fusion architectures. Edge computing solutions and sensor-specific hardware accelerators are emerging as viable approaches to enable real-time multi-sensor SLAM in resource-constrained platforms such as autonomous vehicles and mobile robots.