LiDAR SLAM Sensor Fusion: IMU/GNSS/Camera Alignment And Timing

SEP 19, 2025 | 9 MIN READ

LiDAR SLAM Evolution and Objectives

LiDAR SLAM technology has evolved significantly over the past decade, transforming from experimental laboratory systems to commercially viable solutions deployed across multiple industries. The evolution began with simple 2D LiDAR systems used primarily for indoor robot navigation, progressing to sophisticated 3D systems capable of creating detailed environmental maps in complex outdoor settings. This progression has been driven by advances in sensor technology, computational capabilities, and algorithmic innovations in point cloud processing.

The fundamental objective of LiDAR SLAM (Simultaneous Localization and Mapping) is to enable autonomous systems to simultaneously determine their position within an environment while constructing a map of that environment. This dual functionality forms the cornerstone of autonomous navigation systems across various applications including autonomous vehicles, robotics, and drone technology.

In recent years, the integration of multiple sensor modalities has become increasingly critical to achieving robust performance in diverse operational conditions. The fusion of LiDAR with IMU (Inertial Measurement Unit), GNSS (Global Navigation Satellite System), and camera data represents a significant advancement in this domain, addressing limitations inherent to single-sensor approaches. This multi-modal fusion enhances accuracy, reliability, and operational range across varying environmental conditions.

The technical evolution trajectory shows a clear trend toward more sophisticated sensor fusion algorithms that can effectively synchronize and align data from heterogeneous sensors with different sampling rates, latencies, and reference frames. Early approaches relied on simple filtering techniques, while contemporary solutions employ complex probabilistic frameworks such as factor graphs and advanced Kalman filter variants to achieve optimal estimation.

A critical challenge in this evolution has been the precise temporal and spatial alignment of sensor data. Timing discrepancies as small as milliseconds can lead to significant errors in motion estimation, particularly in high-speed scenarios. Similarly, even minor misalignments in the spatial relationships between sensors can propagate into substantial mapping errors over time.

The current research frontier focuses on developing more robust calibration methodologies, real-time alignment techniques, and adaptive fusion algorithms that can dynamically adjust to changing environmental conditions. These advancements aim to create systems that maintain centimeter-level accuracy across diverse operational scenarios while minimizing computational overhead.

Looking forward, the objectives for next-generation LiDAR SLAM systems include achieving sub-centimeter localization accuracy in GPS-denied environments, reducing power consumption for mobile applications, enhancing resilience to adverse weather conditions, and developing more efficient algorithms capable of running on resource-constrained platforms.

Market Analysis for Multi-Sensor Fusion Systems

The multi-sensor fusion systems market is experiencing robust growth, driven by increasing demand for autonomous vehicles, robotics, and advanced navigation systems. The global market for these systems was valued at approximately 3.2 billion USD in 2022 and is projected to reach 8.7 billion USD by 2028, representing a compound annual growth rate of 18.2% during the forecast period.

The automotive sector currently dominates the market share, accounting for nearly 42% of the total market value. This is primarily due to the rapid development and deployment of advanced driver-assistance systems (ADAS) and autonomous driving technologies, where precise sensor fusion between LiDAR, cameras, IMU, and GNSS is critical for safe operation.

Consumer electronics represents the second-largest market segment at 23%, followed by industrial robotics at 17%, aerospace and defense at 12%, and other applications comprising the remaining 6%. The industrial robotics segment is expected to witness the highest growth rate of 22.4% during the forecast period, driven by Industry 4.0 initiatives and the increasing adoption of autonomous mobile robots in manufacturing and logistics.

Geographically, North America leads the market with a 38% share, followed by Europe (29%), Asia-Pacific (26%), and the rest of the world (7%). However, the Asia-Pacific region is anticipated to grow at the fastest rate due to increasing investments in autonomous technologies and smart city infrastructure, particularly in China, Japan, and South Korea.

Key market drivers include the decreasing cost of sensor technologies, advancements in AI and machine learning algorithms for sensor fusion, and growing regulatory support for autonomous systems. The demand for high-precision timing and alignment solutions in multi-sensor systems is particularly strong, as applications increasingly require sub-millisecond synchronization between LiDAR, cameras, IMU, and GNSS sensors.

Market challenges include the high initial investment required for developing robust sensor fusion systems, technical complexities in achieving precise sensor alignment and timing synchronization, and concerns regarding data privacy and security. Additionally, the lack of standardized testing and validation methodologies for sensor fusion systems poses challenges for market growth.

The market is witnessing a trend toward integrated sensor packages that combine multiple sensing modalities with pre-calibrated alignment and synchronized timing. This trend is expected to accelerate as system integrators seek to reduce development time and complexity while improving overall system reliability and performance.

Technical Challenges in Sensor Synchronization

Sensor synchronization represents one of the most critical challenges in LiDAR SLAM sensor fusion systems. The fundamental issue stems from the inherent differences in sampling rates across various sensors. LiDAR typically operates at 10-20Hz, IMUs at 100-400Hz, cameras at 30-60Hz, and GNSS receivers at 1-10Hz. These disparate frequencies create significant difficulties in establishing a unified temporal framework for data integration.
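
One common way to bridge these rate differences is to resample the faster stream at the timestamps of the slower one. The sketch below is a minimal illustration, assuming both streams already share a clock and that the signal is a scalar (here a gyro yaw rate). The function name `interpolate_at` and all sample values are hypothetical. Orientation states would normally need spherical interpolation rather than this linear form.

```python
from bisect import bisect_left

def interpolate_at(timestamps, values, t_query):
    """Linearly interpolate a scalar signal sampled at sorted `timestamps`."""
    if not timestamps[0] <= t_query <= timestamps[-1]:
        raise ValueError("query time outside the sampled interval")
    i = bisect_left(timestamps, t_query)
    if timestamps[i] == t_query:
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    alpha = (t_query - t0) / (t1 - t0)
    return values[i - 1] + alpha * (values[i] - values[i - 1])

# Hypothetical 200 Hz gyro yaw-rate samples (rad/s) that ramp linearly in time.
imu_t = [k * 0.005 for k in range(41)]  # 0.000 s to 0.200 s
imu_yaw_rate = [0.1 * t for t in imu_t]

# Hypothetical 10 Hz LiDAR scan timestamps that fall between IMU samples.
lidar_t = [0.0125, 0.1125]
yaw_rate_at_scans = [interpolate_at(imu_t, imu_yaw_rate, t) for t in lidar_t]
```

Refusing to extrapolate outside the sampled interval is a deliberate choice here: a fusion pipeline would typically buffer the slower measurement until bracketing IMU samples have arrived.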

Hardware-level synchronization presents substantial technical hurdles. The ideal solution—a common clock signal distributed to all sensors—often faces practical limitations due to proprietary sensor designs and interface restrictions. Many commercial sensors lack external trigger inputs or precise timestamp capabilities, forcing developers to rely on software-based synchronization methods that introduce additional latency and uncertainty.

Timestamp accuracy represents another major challenge. Even minor timestamp errors of a few milliseconds can result in substantial spatial misalignments, particularly in high-speed scenarios. For instance, at 60 km/h, a 10ms timing error translates to a 16.7cm positional error—significant enough to compromise mapping accuracy and object detection reliability.
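
The arithmetic behind that figure is simply speed multiplied by the timing error. The sketch below reproduces it and adds a second, rotational case that is not in the text above: under a small-angle assumption, a timing error during a turn displaces a distant point by roughly range times yaw rate times the timing error. The function names and the 30 deg/s, 50 m example values are hypothetical.

```python
import math

def position_error_m(speed_kmh, timing_error_ms):
    """Translational error: distance travelled during the timing error."""
    speed_mps = speed_kmh / 3.6
    return speed_mps * timing_error_ms / 1000.0

def bearing_error_m(yaw_rate_dps, timing_error_ms, range_m):
    """Approximate lateral displacement of a point at `range_m` while turning
    (small-angle approximation: arc length = range * angle)."""
    angle_rad = math.radians(yaw_rate_dps) * timing_error_ms / 1000.0
    return range_m * angle_rad

translational = position_error_m(60.0, 10.0)    # about 0.167 m
rotational = bearing_error_m(30.0, 10.0, 50.0)  # about 0.26 m at 50 m range
```

The rotational term grows with range, which is why timing errors tend to be most visible on distant structure during turns.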

Motion distortion compounds these challenges, especially for scanning LiDARs that capture points sequentially rather than simultaneously. During a single LiDAR scan (typically 100ms for mechanical systems), vehicle movement creates distortion that must be compensated using IMU data. However, this compensation itself depends on precise sensor synchronization, creating a circular dependency problem.
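
A minimal sketch of this compensation (often called deskewing) is shown below. It assumes planar motion, a forward speed and yaw rate that stay constant over one scan, and a per-point time offset measured from the start of the scan. In a real system the motion would come from integrated IMU or odometry data. The function name `deskew_scan` and all values are hypothetical.

```python
import math

def deskew_scan(points, v, yaw_rate):
    """Re-express each point in the sensor frame at scan start.

    points:   iterable of (x, y, dt), with dt in seconds since scan start
    v:        forward speed in m/s, assumed constant over the scan
    yaw_rate: yaw rate in rad/s, assumed constant over the scan
    """
    corrected = []
    for x, y, dt in points:
        theta = yaw_rate * dt
        if abs(yaw_rate) < 1e-9:
            tx, ty = v * dt, 0.0  # straight-line motion
        else:
            tx = v / yaw_rate * math.sin(theta)  # motion along a circular arc
            ty = v / yaw_rate * (1.0 - math.cos(theta))
        c, s = math.cos(theta), math.sin(theta)
        corrected.append((c * x - s * y + tx, s * x + c * y + ty))
    return corrected

# A static landmark 10 m ahead, observed three times while driving at 10 m/s.
# Without correction it appears to move 1 m closer over the 100 ms scan.
raw = [(10.0, 0.0, 0.0), (9.5, 0.0, 0.05), (9.0, 0.0, 0.1)]
deskewed = deskew_scan(raw, v=10.0, yaw_rate=0.0)
```

After correction all three observations land on the same point, which is the behaviour a deskewing step is meant to restore. Any error in the per-point time offsets feeds directly into `tx`, `ty`, and `theta`, which illustrates the dependency on synchronization described above.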

Environmental factors further complicate synchronization efforts. Temperature variations can affect sensor clock drift rates differently, causing gradual synchronization degradation over time. This is particularly problematic in autonomous vehicles operating in diverse climate conditions, where temperature fluctuations can be extreme.
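
Clock drift of this kind is often modelled as a linear relation between a sensor clock and a reference clock. The sketch below fits that relation by ordinary least squares, assuming the same events have been stamped on both clocks (for example a shared pulse signal, which is an assumption here, not something the text specifies). The names `fit_clock_model` and `to_host_time`, and the 50 ppm and 2 ms figures, are hypothetical.

```python
def fit_clock_model(sensor_t, host_t):
    """Least-squares fit of host_t = skew * sensor_t + offset."""
    n = len(sensor_t)
    mean_s = sum(sensor_t) / n
    mean_h = sum(host_t) / n
    cov = sum((s - mean_s) * (h - mean_h) for s, h in zip(sensor_t, host_t))
    var = sum((s - mean_s) ** 2 for s in sensor_t)
    skew = cov / var
    offset = mean_h - skew * mean_s
    return skew, offset

def to_host_time(t_sensor, skew, offset):
    """Map a sensor timestamp onto the host clock."""
    return skew * t_sensor + offset

# Synthetic data: the host clock gains 50 ppm on the sensor clock,
# with a constant 2 ms offset between the two.
sensor_t = [10.0 * k for k in range(61)]  # events over 10 minutes
host_t = [(1.0 + 50e-6) * s + 0.002 for s in sensor_t]

skew, offset = fit_clock_model(sensor_t, host_t)
drift_ppm = (skew - 1.0) * 1e6
```

Because temperature changes the drift rate, a fit like this would normally be recomputed over a sliding window rather than once at startup.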

Network and processing latencies introduce additional synchronization challenges in distributed systems. When sensors transmit data through different communication channels (Ethernet, CAN bus, USB), varying transmission delays and processing times create temporal inconsistencies that must be addressed through sophisticated buffering and prediction algorithms.
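
A simple form of the buffering mentioned above is to hold recent messages from each stream and pair them by nearest timestamp, rejecting pairs that are too far apart. The sketch below shows only the matching step and assumes the timestamps are already on a common clock and sorted. The function name `match_nearest`, the 5 ms tolerance, and the sample timestamps are hypothetical.

```python
from bisect import bisect_left

def match_nearest(ref_stamps, other_stamps, max_dt):
    """For each reference stamp, return the index of the nearest stamp in
    `other_stamps`, or None if the gap exceeds `max_dt` seconds."""
    matches = []
    for t in ref_stamps:
        i = bisect_left(other_stamps, t)
        # Only the neighbours on either side of the insertion point can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_stamps)]
        best = min(candidates, key=lambda j: abs(other_stamps[j] - t), default=None)
        if best is not None and abs(other_stamps[best] - t) <= max_dt:
            matches.append(best)
        else:
            matches.append(None)
    return matches

lidar_stamps = [0.100, 0.200, 0.300]
camera_stamps = [0.098, 0.131, 0.165, 0.198, 0.290]
pairs = match_nearest(lidar_stamps, camera_stamps, max_dt=0.005)
```

With these values the first two scans pair with camera frames 2 ms away, and the third scan is left unmatched because the closest frame is 10 ms away. Dropping a pair is usually preferable to fusing badly aligned data.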

Calibration drift over time represents a persistent challenge for long-term operation. Initial calibration between sensors tends to degrade: mechanical vibration and thermal expansion shift the spatial relationships between sensors, while oscillator aging and temperature changes alter clock offsets. Systems must incorporate continuous self-monitoring and recalibration capabilities to maintain synchronization accuracy throughout the operational lifespan of the platform.

Current Alignment and Timing Solutions

01 LiDAR-Camera Sensor Fusion Techniques

Integration of LiDAR and camera sensors to improve perception accuracy in SLAM systems. This approach combines the depth information from LiDAR with the rich visual features from cameras to create more robust mapping and localization. The fusion techniques include feature-level fusion, map-level fusion, and decision-level fusion, which help overcome the limitations of individual sensors and enhance the overall performance in various environmental conditions.

- Temporal synchronization of LiDAR and other sensors: Accurate timing synchronization between LiDAR and other sensors (cameras, IMUs, etc.) is critical for effective sensor fusion in SLAM systems. This involves hardware-level timestamp alignment, software compensation for latency, and temporal calibration techniques to ensure measurements from different sensors are properly aligned in time. Proper synchronization reduces motion distortion and improves the accuracy of the fused data for mapping and localization.

- Spatial calibration and alignment of multi-sensor systems: Spatial calibration determines the precise geometric relationships between LiDAR and other sensors in a multi-sensor system. This includes extrinsic parameter estimation to establish coordinate transformations between sensors, automatic calibration methods that can detect and correct misalignments during operation, and optimization techniques to minimize spatial errors. Proper spatial alignment ensures that data from different sensors can be accurately combined in a common reference frame.

- Feature extraction and matching for sensor fusion: Advanced algorithms extract distinctive features from LiDAR point clouds and other sensor data to establish correspondences between different sensing modalities. These techniques include point cloud segmentation, edge and plane extraction from LiDAR data, feature descriptor generation for robust matching, and methods to handle the different characteristics of features from heterogeneous sensors. Effective feature extraction and matching facilitates accurate alignment of data from multiple sensors.

- Optimization frameworks for multi-sensor SLAM: Optimization frameworks integrate data from LiDAR and complementary sensors to improve SLAM performance. These include graph-based optimization that represents sensor measurements and poses as nodes and edges, factor graph methods that incorporate different sensor uncertainties, sliding window approaches that maintain computational efficiency, and loop closure detection to correct accumulated errors. These frameworks enable robust localization and mapping in challenging environments.

- Real-time processing and adaptive fusion strategies: Real-time processing techniques enable efficient sensor fusion for LiDAR SLAM in resource-constrained systems. These include parallel processing architectures that distribute computational load, adaptive fusion strategies that adjust sensor weights based on environmental conditions, dynamic reconfiguration of fusion parameters to optimize performance, and filtering techniques to handle sensor noise and outliers. These approaches ensure reliable operation across diverse operating conditions while maintaining real-time performance.
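
The spatial calibration item in the list above can be made concrete with a small sketch: once the LiDAR-to-camera extrinsics (a rotation and a translation) are known, a LiDAR point can be moved into the camera frame and projected with a pinhole model. Everything numeric here is hypothetical: the axis conventions (LiDAR x forward, y left, z up; camera x right, y down, z forward), the 0.1 m offset, and the intrinsics. Lens distortion is ignored.

```python
# Hypothetical extrinsics mapping LiDAR coordinates into the camera frame.
R_CAM_LIDAR = [
    [0.0, -1.0, 0.0],   # camera x (right)   = -LiDAR y
    [0.0, 0.0, -1.0],   # camera y (down)    = -LiDAR z
    [1.0, 0.0, 0.0],    # camera z (forward) =  LiDAR x
]
T_CAM_LIDAR = (0.0, 0.1, 0.0)  # LiDAR origin expressed in the camera frame, metres

# Hypothetical pinhole intrinsics for a 1280x720 image.
FX, FY, CX, CY = 1000.0, 1000.0, 640.0, 360.0

def transform_point(R, t, p):
    """Apply p_cam = R @ p_lidar + t using plain Python lists."""
    return tuple(sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))

def lidar_to_pixel(p_lidar):
    """Project a LiDAR point to pixel coordinates, or None if behind the camera."""
    x, y, z = transform_point(R_CAM_LIDAR, T_CAM_LIDAR, p_lidar)
    if z <= 0.0:
        return None
    return (FX * x / z + CX, FY * y / z + CY)

pixel = lidar_to_pixel((10.0, -1.0, 0.0))   # 10 m ahead, 1 m to the right
behind = lidar_to_pixel((-5.0, 0.0, 0.0))   # behind the camera, so None
```

An error in the rotation shifts the projected pixel by an amount that grows with range, so checking the overlay of projected points on image edges is a common visual test of extrinsic quality.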

02 Temporal Synchronization Methods for Multi-sensor Systems

Methods for addressing timing issues in multi-sensor SLAM systems. These techniques ensure proper alignment of data streams from different sensors with varying sampling rates and latencies. Approaches include hardware-based synchronization using trigger signals, software-based timestamp alignment, and predictive algorithms that compensate for timing offsets. Effective temporal synchronization is crucial for accurate fusion of sensor data and reliable real-time operation of SLAM systems.

03 Spatial Calibration and Registration Algorithms

Algorithms for spatial alignment of multiple sensors in SLAM systems. These methods determine the precise geometric relationships between different sensors, such as the extrinsic parameters between LiDAR and cameras. Techniques include target-based calibration, motion-based calibration, and continuous online calibration that adapts to environmental changes. Accurate spatial calibration ensures that data from different sensors can be properly integrated into a consistent coordinate system.

04 Real-time Optimization for Mobile Robotics Applications

Optimization techniques for real-time performance in LiDAR SLAM systems deployed on mobile robots. These methods focus on efficient processing of sensor data to meet the computational constraints of mobile platforms. Approaches include parallel processing architectures, feature selection algorithms, and adaptive sampling strategies that balance accuracy and computational load. These optimizations enable reliable navigation and mapping in dynamic environments with limited computing resources.

05 Error Compensation and Uncertainty Management

Techniques for handling uncertainties and errors in LiDAR SLAM sensor fusion systems. These methods address issues such as sensor noise, environmental interference, and motion distortion that can degrade SLAM performance. Approaches include probabilistic error models, outlier rejection algorithms, and adaptive filtering techniques that improve robustness in challenging conditions. Effective error management is essential for maintaining accurate localization and mapping over extended periods of operation.

Industry Leaders in LiDAR SLAM Technology

LiDAR SLAM Sensor Fusion technology is currently in a growth phase, with the market expected to expand significantly due to increasing applications in autonomous vehicles, robotics, and mapping. The global market size for sensor fusion technologies is projected to reach billions by 2025, driven by demand for precise navigation systems. Technologically, the field shows varying maturity levels across companies. Academic institutions like Northwestern Polytechnical University and Wuhan University are advancing theoretical frameworks, while commercial entities such as iRobot, NavVis, and Samsung Electronics are implementing practical applications. Companies like ZongMu Technology and Hyundai Motor are specifically focusing on automotive applications, integrating LiDAR SLAM with IMU/GNSS/Camera systems to enhance positioning accuracy and reliability in challenging environments.

iRobot Corp.

Technical Solution: iRobot has developed a lightweight LiDAR SLAM sensor fusion framework optimized for consumer robotics applications. Their approach focuses on efficient integration of low-cost LiDAR, MEMS IMUs, basic visual odometry, and occasional GNSS fixes when available. The system employs a factor-graph optimization technique that explicitly models sensor timing uncertainties as additional variables to be optimized. iRobot's solution features an innovative "adaptive sampling" mechanism that dynamically adjusts sensor sampling rates based on motion dynamics and environmental complexity, conserving computational resources while maintaining accuracy. For spatial calibration, they utilize a semi-automated procedure that combines factory calibration with in-field refinement through SLAM consistency metrics. The temporal synchronization employs a predictive buffer management system that compensates for variable sensor latencies. This technology powers iRobot's navigation systems, enabling reliable operation in dynamic home environments with computational efficiency suitable for battery-powered devices.

Strengths: Exceptional power efficiency optimized for battery operation; effective performance with lower-cost sensor components; robust operation in typical indoor environments. Weaknesses: Limited performance in highly dynamic or extremely large environments; reduced accuracy compared to higher-end systems; greater reliance on environmental features for consistent performance.

NavVis GmbH

Technical Solution: NavVis has developed a specialized LiDAR SLAM sensor fusion platform focused on high-precision indoor mapping applications. Their system integrates multiple LiDAR sensors with IMU data and camera imagery through a sophisticated calibration framework that achieves sub-millimeter spatial alignment between sensors. The NavVis approach employs a continuous-time trajectory representation using B-splines to address temporal misalignment issues, allowing precise interpolation between sensor measurements regardless of varying capture rates. Their solution features a unique "multi-resolution mapping" technique that combines coarse global positioning with fine-grained local feature matching to maintain consistency across large indoor spaces. The system implements a rigorous calibration procedure that utilizes specialized calibration targets and automated optimization routines to establish and maintain precise inter-sensor relationships. NavVis has deployed this technology in their mobile mapping systems, achieving mapping accuracy of 1-3mm in indoor environments while maintaining robust performance even in challenging conditions such as featureless corridors and repetitive architectural elements.

Strengths: Exceptional accuracy suitable for as-built documentation and digital twin creation; comprehensive calibration procedures ensuring consistent results; robust performance in challenging indoor environments. Weaknesses: Relatively high hardware costs; complex setup and calibration procedures requiring technical expertise; primarily optimized for controlled indoor environments rather than dynamic outdoor scenes.

Key Patents in Sensor Calibration Methods

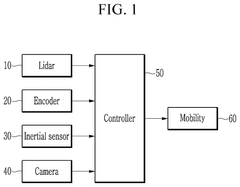

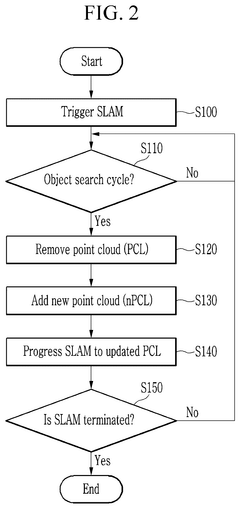

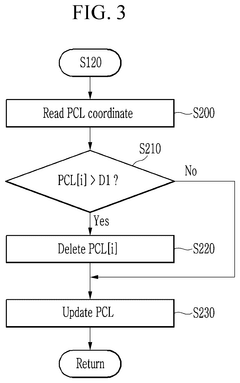

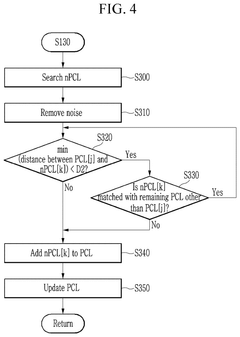

Method and system for simultaneous localization and mapping based on 2d lidar and a camera with different viewing ranges

Patent Pending | US20250209652A1

Innovation

- A method and system that maintains feature points detected by a camera with a narrow viewing angle in conjunction with a Lidar sensor's wide viewing angle, involving a controller to remove feature points exceeding a set distance and add newly searched points, ensuring robustness and reducing memory usage.

GNSS inertial navigation and visual fusion positioning method based on tight coupling

Patent Pending | CN117804446A

Innovation

- A tightly coupled GNSS, inertial navigation, and visual fusion positioning method that preprocesses the measurement data of the inertial measurement unit, GNSS, and camera, uses a factor graph for initialization and optimization, and combines a differential model with base station information to eliminate satellite and receiver clock errors. A receiver autonomous integrity monitoring algorithm is used to strictly eliminate outliers, and sliding window optimization with state marginalization is used to improve positioning accuracy and robustness.

Robustness Testing Methodologies

Robustness testing for LiDAR SLAM sensor fusion systems requires comprehensive methodologies to ensure reliable operation across diverse environments and conditions. These testing approaches must evaluate the system's ability to maintain accurate alignment and timing between LiDAR, IMU, GNSS, and camera sensors under various challenging scenarios.

Environmental stress testing forms the foundation of robustness evaluation, subjecting the fusion system to extreme temperature variations (-40°C to 85°C), humidity fluctuations, and vibration profiles that simulate real-world deployment conditions. These tests reveal potential hardware failures and calibration drift that may compromise sensor alignment over time.

Degraded signal testing deliberately introduces signal quality issues to assess system resilience. This includes GPS signal blockage or multipath effects in urban canyons, IMU drift accumulation during extended GNSS outages, and camera exposure challenges in high-contrast lighting. The system's ability to maintain accurate timing synchronization despite these degradations provides critical insights into its operational boundaries.

Motion profile testing employs robotic platforms to execute precise movement patterns that stress sensor fusion algorithms. Rapid accelerations, sudden stops, and complex multi-axis rotations can reveal timing discrepancies between sensors that might remain hidden during normal operation. Specialized test tracks with known features allow quantitative measurement of positioning accuracy under these dynamic conditions.

Simulation-based testing complements physical testing by generating synthetic sensor data with precisely controlled degradation parameters. Monte Carlo simulations with thousands of randomized scenarios help identify edge cases where timing synchronization or spatial alignment might fail. This approach enables statistical confidence assessment of the system's robustness before physical deployment.
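
A toy version of such a Monte Carlo study is sketched below. It draws random timestamp errors from a zero-mean Gaussian, converts each to a position error at a fixed speed, and reports how often a tolerance is exceeded. The 16.67 m/s speed corresponds to the 60 km/h example used earlier in this article. The 5 ms jitter, the 10 cm tolerance, the Gaussian model, and the function names are assumptions made for illustration.

```python
import random

def simulate_position_errors(speed_mps, jitter_std_s, trials, seed=0):
    """Sample |position error| caused by Gaussian timestamp jitter."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    return [abs(speed_mps * rng.gauss(0.0, jitter_std_s)) for _ in range(trials)]

def fraction_exceeding(errors, threshold_m):
    """Share of trials whose error is above the tolerance."""
    return sum(e > threshold_m for e in errors) / len(errors)

errors = simulate_position_errors(speed_mps=16.67, jitter_std_s=0.005, trials=10000)
exceed = fraction_exceeding(errors, threshold_m=0.10)
mean_error = sum(errors) / len(errors)
```

For this setup the tolerance corresponds to about 1.2 standard deviations of jitter, so roughly 23% of trials should exceed it. A realistic study would replace the single scalar model with a full simulated sensor suite and vary speed, jitter distribution, and scenario.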

Long-duration reliability testing evaluates how sensor calibration and timing synchronization perform over extended operational periods. Continuous operation for 24-72 hours under varying conditions reveals drift patterns and potential timing synchronization failures that might emerge gradually. These tests are particularly important for autonomous vehicle applications where safety-critical operation must be maintained indefinitely.

Cross-validation methodologies compare fusion results against high-precision reference systems to quantify absolute performance. Ground truth positioning data from differential GNSS or motion capture systems provides benchmarks against which fusion accuracy can be measured, establishing confidence intervals for position and orientation estimates under various testing conditions.
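
One widely used summary number for this kind of comparison is the root-mean-square of the per-pose position error. The sketch below computes it, assuming the estimated and reference trajectories have already been associated by timestamp and expressed in the same coordinate frame (no alignment step is performed here). The function name `ate_rmse` and the sample trajectories are hypothetical.

```python
import math

def ate_rmse(estimated, ground_truth):
    """Root-mean-square position error between two associated trajectories."""
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and equally long")
    squared = [
        sum((e - g) ** 2 for e, g in zip(est_pose, gt_pose))
        for est_pose, gt_pose in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared) / len(squared))

# Reference path along the x axis and an estimate that wobbles by 10 cm.
reference = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
estimate = [(0.0, 0.1), (1.0, -0.1), (2.0, 0.1)]
rmse = ate_rmse(estimate, reference)  # 0.1 m
```

Reporting the error per test condition (for example during GNSS outages versus open sky) gives more insight than a single figure over the whole run.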

Standardization Efforts in Sensor Integration

The standardization landscape for sensor integration in LiDAR SLAM systems has evolved significantly in recent years, driven by the increasing complexity of multi-sensor fusion applications. Several industry consortia and standards bodies have emerged to address the critical challenges of sensor alignment and timing synchronization across LiDAR, IMU, GNSS, and camera systems.

The IEEE P2020 working group develops standards for automotive image quality, defining metrics and test methods for the camera systems used in driver-assistance and automated-driving applications. Its scope is image quality rather than temporal alignment between sensor modalities, but consistent camera characterization is a prerequisite for reliable LiDAR-camera fusion in SLAM implementations. This initiative has gained support from major automotive and technology companies seeking to establish common benchmarks for sensor performance.

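Similarly, the Open Source Robotics Foundation (OSRF) has contributed significantly through ROS (Robot Operating System). ROS is not a formal standard, but its conventions for time handling have become common practice: every sensor message carries a timestamp in its header, the tf2 library resolves coordinate transforms at a requested time, and the message_filters package offers exact and approximate time synchronization of message streams. Together these provide a shared approach to timestamp handling across heterogeneous sensor networks, addressing one of the fundamental challenges in multi-sensor SLAM systems.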
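The idea behind approximate timestamp matching can be illustrated without any ROS dependency. The sketch below is not the ROS implementation or its API; it is a simplified nearest-neighbor pairing, with hypothetical function and variable names, that pairs each LiDAR timestamp with the closest camera timestamp and drops pairs that differ by more than a tolerance.

```python
from bisect import bisect_left

def match_nearest(lidar_stamps, camera_stamps, max_diff_s):
    """Pair each LiDAR stamp with the nearest camera stamp within max_diff_s.

    Both lists must be sorted in ascending order (timestamps in seconds).
    """
    pairs = []
    for ts in lidar_stamps:
        k = bisect_left(camera_stamps, ts)
        # The nearest stamp is either just before or just after position k.
        candidates = camera_stamps[max(k - 1, 0):k + 1]
        if not candidates:
            continue
        best = min(candidates, key=lambda c: abs(c - ts))
        if abs(best - ts) <= max_diff_s:
            pairs.append((ts, best))
    return pairs

# Hypothetical streams: 10 Hz LiDAR, roughly 30 Hz camera with a dropout near 0.2 s.
lidar = [0.0, 0.1, 0.2]
camera = [0.004, 0.037, 0.070, 0.104, 0.137, 0.171, 0.260]

pairs = match_nearest(lidar, camera, max_diff_s=0.01)
print(pairs)
```

With these values the scan at 0.2 s is left unpaired because the closest image is 29 ms away, which is the behavior a fusion front end usually wants when a frame is missing.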

In the automotive sector, AUTOSAR (Automotive Open System Architecture) gives specific attention to timing requirements for safety-critical applications. Its Timing Extensions specification provides a formal way to describe timing constraints such as end-to-end latency and synchronization between data paths, which is particularly relevant when LiDAR, camera, and IMU streams with different sampling rates and latencies are integrated.

The OGC (Open Geospatial Consortium) has also made notable contributions through its SensorThings API standard, which provides interoperability specifications for IoT sensors and observation data. Its data model attaches time and location information to observations, giving different hardware platforms a common way to exchange spatially and temporally referenced sensor data. The standard does not perform alignment itself, but consistent referencing of this kind supports more robust SLAM implementations across platforms.

From a hardware perspective, the IEEE 1588 Precision Time Protocol (PTP) has become increasingly important for sensor fusion applications. With hardware timestamping at the network interfaces, it enables sub-microsecond synchronization across distributed sensor networks. Many modern LiDAR and camera systems now support PTP, facilitating more precise temporal alignment in SLAM applications.
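The core of PTP's delay request-response mechanism is a four-timestamp exchange from which the slave computes its clock offset and the path delay. The sketch below shows only that arithmetic, assuming a symmetric network path; the function name and the nanosecond values are illustrative, and a real deployment would rely on a PTP daemon and hardware timestamps rather than code like this.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Compute slave clock offset and one-way path delay.

    t1: master sends Sync (master clock)
    t2: slave receives Sync (slave clock)
    t3: slave sends Delay_Req (slave clock)
    t4: master receives Delay_Req (master clock)
    Assumes the path delay is the same in both directions.
    """
    offset = ((t2 - t1) - (t4 - t3)) / 2
    delay = ((t2 - t1) + (t4 - t3)) / 2
    return offset, delay

# Hypothetical exchange in nanoseconds: the slave clock runs 1500 ns ahead of
# the master and the one-way delay is 800 ns.
t1 = 1_000_000
t2 = t1 + 800 + 1500   # arrival time as read on the slave clock
t3 = 1_010_000         # departure time as read on the slave clock
t4 = t3 - 1500 + 800   # arrival time as read on the master clock

offset_ns, delay_ns = ptp_offset_and_delay(t1, t2, t3, t4)
print(f"offset = {offset_ns} ns, delay = {delay_ns} ns")
```

Any asymmetry between the two directions appears directly as an offset error of half the asymmetry, which is one reason sub-microsecond accuracy depends on PTP-aware network hardware.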

Industry-specific initiatives have also emerged, such as the Autoware Foundation's sensor calibration working group, which focuses on standardizing extrinsic calibration procedures for autonomous vehicle sensor suites. Their open-source tools and methodologies have been widely adopted for aligning LiDAR, camera, and IMU coordinate frames in production environments.
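The output of an extrinsic calibration is typically a rigid transform between sensor frames. As a minimal sketch of how such a result is used, the example below applies a 4x4 homogeneous transform to move a LiDAR point into a camera frame. The matrix values and the axis conventions (LiDAR x forward, y left, z up; camera x right, y down, z forward) are assumptions made for illustration and are not taken from any specific calibration tool.

```python
def apply_extrinsic(T, point):
    """Apply a 4x4 homogeneous transform T to a 3D point (x, y, z)."""
    x, y, z = point
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3]
                 for r in range(3))

# Hypothetical LiDAR-to-camera extrinsic: an axis permutation plus a small
# translation in meters.
T_cam_lidar = [
    [0.0, -1.0,  0.0,  0.05],
    [0.0,  0.0, -1.0, -0.10],
    [1.0,  0.0,  0.0, -0.20],
    [0.0,  0.0,  0.0,  1.00],
]

p_lidar = (10.0, 1.0, 0.5)  # 10 m ahead, 1 m left, 0.5 m up
p_cam = apply_extrinsic(T_cam_lidar, p_lidar)
print(p_cam)
```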

Despite these efforts, significant gaps remain in standardization coverage, particularly regarding performance metrics for evaluating sensor fusion quality and reliability across different environmental conditions. Future standardization work will likely focus on developing more comprehensive evaluation frameworks and certification processes for integrated sensor systems.

