How to Evaluate Quantum Model Scalability in Data Centers

SEP 5, 2025 · 9 MIN READ

Quantum Computing Evolution and Scalability Goals

Quantum computing has evolved significantly since its theoretical conception in the early 1980s. Initially proposed by Richard Feynman and others as a means to simulate quantum systems efficiently, quantum computing has progressed from abstract mathematical concepts to increasingly practical implementations. The evolution trajectory spans from the development of quantum algorithms like Shor's and Grover's in the 1990s to the creation of the first rudimentary quantum bits (qubits) using various physical systems such as superconducting circuits, trapped ions, and photonics.

The past decade has witnessed remarkable acceleration in quantum hardware development, transitioning from laboratory experiments to commercially available quantum processing units (QPUs). Companies like IBM, Google, and Rigetti have built quantum computers with steadily rising qubit counts while working to reduce error rates and improve coherence times. This progression has culminated in demonstrations of quantum advantage, in which quantum systems performed specific, narrowly defined calculations faster than classical supercomputers.

In data center contexts, quantum computing scalability goals are multifaceted and ambitious. The primary objective is to develop quantum systems that can reliably scale to thousands of logical qubits, which may in turn require millions of physical qubits, in order to run error-corrected quantum computations with practical applications. This requires not only increasing physical qubit counts but also dramatically improving qubit quality metrics such as coherence times, gate fidelities, and connectivity.

Another critical scalability goal involves the integration of quantum processing units within existing data center infrastructures. This includes developing efficient interfaces between classical and quantum systems, optimizing resource allocation for hybrid quantum-classical workloads, and creating scalable control systems that can manage increasingly complex quantum hardware configurations.

Energy efficiency represents another crucial scalability objective. Current quantum systems require extensive cooling infrastructure, with many implementations operating at near absolute zero temperatures. Future scalability depends on either improving the energy efficiency of cryogenic systems or developing quantum technologies that can operate at higher temperatures while maintaining acceptable performance characteristics.

The ultimate scalability goal for quantum computing in data centers is achieving fault-tolerant quantum computation at a commercially viable scale. This requires implementing effective quantum error correction codes across large qubit arrays while maintaining logical error rates below critical thresholds. Industry roadmaps typically project that practical, error-corrected quantum computers capable of solving commercially relevant problems may emerge within the next decade, though significant technical challenges remain.

Market Analysis for Quantum Computing in Data Centers

The quantum computing market in data centers is experiencing significant growth, driven by increasing demand for computational power to solve complex problems that classical computers struggle with. Current market estimates value the quantum computing market at approximately $500 million, with projections suggesting growth to $8.6 billion by 2027, representing a compound annual growth rate of over 30%. Data centers are positioned as critical infrastructure for the deployment of quantum computing resources, creating a specialized segment within this emerging market.
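
The growth figure above can be sanity-checked with the standard compound annual growth rate formula. The sketch below is only an arithmetic aid: the source does not state the base year for the $500 million estimate, so the number of years is a hypothetical parameter.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.30 means 30% per year)."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market sizes in billions of dollars, taken from the paragraph above.
# The horizons (5 and 10 years) are assumptions, not figures from the source.
for horizon in (5, 10):
    rate = cagr(0.5, 8.6, horizon)
    print(f"{horizon} years: {rate:.1%} per year")
```

Under these assumptions the implied rate is roughly 77% per year over five years and roughly 33% per year over ten, so the "over 30%" figure is sensitive to the baseline year chosen.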

Enterprise adoption of quantum computing in data centers is currently concentrated in sectors with complex computational challenges, including pharmaceuticals, finance, logistics, and materials science. Financial institutions are exploring quantum algorithms for portfolio optimization and risk assessment, while pharmaceutical companies are leveraging quantum computing for molecular modeling and drug discovery processes. These early adopters are primarily accessing quantum computing capabilities through cloud-based services rather than on-premises installations.

Market research indicates that 67% of Fortune 1000 companies are either exploring or implementing quantum computing initiatives, with 20% having allocated dedicated budgets for quantum technology integration. This represents a significant shift from just three years ago when only 3% of enterprises reported active quantum computing programs. The cloud-based quantum computing services market is growing particularly rapidly, with major providers reporting subscription growth rates exceeding 45% annually.

Regional analysis shows North America leading quantum computing adoption in data centers with approximately 45% market share, followed by Europe at 30% and Asia-Pacific at 20%. China has made quantum computing a strategic priority with substantial government investment, while the European Union's Quantum Flagship program has allocated €1 billion to accelerate quantum technology development and commercialization.

Customer demand patterns reveal a preference for hybrid quantum-classical computing solutions that can be integrated with existing data center infrastructure. This reflects the current technological reality where quantum advantage is limited to specific computational problems rather than general-purpose computing. Market surveys indicate that 78% of potential enterprise customers prioritize seamless integration with existing systems over raw quantum performance metrics.

The competitive landscape features established technology companies like IBM, Google, and Microsoft offering quantum computing services through their cloud platforms, alongside specialized quantum hardware providers such as D-Wave, IonQ, and Rigetti. Cloud service providers are emerging as key distribution channels for quantum computing capabilities, with Amazon Web Services, Microsoft Azure, and Google Cloud Platform all expanding their quantum offerings to enterprise customers through their data center networks.

Current Quantum Model Limitations in Enterprise Environments

Current quantum computing models face significant limitations when deployed in enterprise data center environments. The primary constraint is quantum coherence time, which typically ranges from microseconds to milliseconds in today's quantum processors. This brief window severely restricts the complexity and depth of quantum algorithms that can be executed before decoherence occurs, making it challenging to maintain quantum advantage for enterprise-scale problems.
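
A back-of-the-envelope way to see how coherence time limits algorithm depth is to divide the coherence window by the duration of one gate layer. The sketch below assumes gates run strictly one layer after another and ignores measurement and reset time; the 100 microsecond and 50 nanosecond figures are illustrative values, not measurements from any specific device.

```python
def max_sequential_layers(coherence_time_ns: float,
                          layer_time_ns: float,
                          usable_fraction: float = 1.0) -> int:
    """Rough upper bound on gate layers that fit inside a coherence window.

    usable_fraction lets you budget only part of the window, since fidelity
    degrades well before the full coherence time has elapsed.
    """
    if coherence_time_ns <= 0 or layer_time_ns <= 0:
        raise ValueError("times must be positive")
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    return int((coherence_time_ns * usable_fraction) // layer_time_ns)


# Hypothetical device: 100 microseconds of coherence, 50 ns per gate layer.
print(max_sequential_layers(100_000, 50))        # full window
print(max_sequential_layers(100_000, 50, 0.5))   # budget half the window
```

A circuit whose compiled depth exceeds this bound is unlikely to finish before decoherence dominates, which is one quick screening test when evaluating whether a workload fits current hardware.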

Quantum error rates present another substantial barrier. Current quantum systems exhibit error rates of approximately 10^-3 to 10^-2 per gate operation, many orders of magnitude higher than the roughly 10^-15 error rate of classical computing. Quantum error correction techniques can suppress these errors in principle, but they carry substantial qubit overhead, often demanding on the order of a thousand or more physical qubits to create a single logical qubit, which makes them impractical for most enterprise implementations today.
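
The qubit overhead of error correction can be estimated with a commonly used surface-code heuristic, in which the logical error rate falls as p_L ≈ A · (p / p_th)^((d + 1) / 2) for code distance d, and a rotated surface-code patch uses 2d² − 1 physical qubits. The following is a minimal sketch under those assumptions; the prefactor A = 0.1 and threshold p_th = 10^-2 are illustrative defaults, not values taken from the source or from any particular device.

```python
def logical_error_rate(p_phys: float, distance: int,
                       p_threshold: float = 1e-2,
                       prefactor: float = 0.1) -> float:
    """Heuristic surface-code scaling; prefactor and threshold are assumptions."""
    return prefactor * (p_phys / p_threshold) ** ((distance + 1) / 2)


def min_code_distance(p_phys: float, target_logical_rate: float,
                      p_threshold: float = 1e-2, prefactor: float = 0.1,
                      max_distance: int = 201) -> int:
    """Smallest odd distance whose estimated logical error rate meets the target."""
    if p_phys >= p_threshold:
        raise ValueError("physical error rate must be below the threshold")
    for d in range(3, max_distance + 1, 2):
        if logical_error_rate(p_phys, d, p_threshold, prefactor) <= target_logical_rate:
            return d
    raise ValueError("target not reachable within max_distance")


def physical_qubits_per_logical(distance: int) -> int:
    """Data plus ancilla qubits for one rotated surface-code patch."""
    return 2 * distance * distance - 1


# Physical error rate 1e-3 (from the text); the 3e-15 target is hypothetical.
d = min_code_distance(1e-3, 3e-15)
print(d, physical_qubits_per_logical(d))
```

With these illustrative parameters, reaching a logical error rate comparable to classical hardware takes a code distance in the high twenties and more than a thousand physical qubits per logical qubit, before counting any extra qubits for routing or magic-state preparation.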

Scalability itself remains a formidable challenge. The largest gate-based quantum processors announced to date feature on the order of a thousand physical qubits (IBM's Condor, for example, has 1,121), whereas enterprise applications are expected to require thousands of error-corrected logical qubits, and therefore potentially millions of physical qubits, for meaningful computational advantage. The engineering difficulty of scaling quantum systems while maintaining coherence and connectivity grows steeply with system size.

Connectivity limitations further constrain quantum model deployment. Many quantum algorithms assume all-to-all qubit connectivity, but physical quantum processors typically offer only nearest-neighbor or limited connectivity topologies. This mismatch necessitates additional SWAP operations that consume precious coherence time and increase error rates.
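
To make the SWAP overhead concrete, the sketch below counts how many SWAPs a naive router would need to bring two qubits next to each other on a given coupling graph. It assumes the simplest strategy (move one qubit along a shortest path) and the standard decomposition of one SWAP into three CNOT gates; production compilers use more sophisticated routing, and the helper names here are hypothetical.

```python
from collections import deque


def line_topology(n: int) -> dict[int, list[int]]:
    """Nearest-neighbor chain of n qubits as an adjacency list."""
    return {i: [j for j in (i - 1, i + 1) if 0 <= j < n] for i in range(n)}


def shortest_path_length(coupling: dict[int, list[int]], src: int, dst: int) -> int:
    """Breadth-first search distance between two physical qubits."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in coupling[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    raise ValueError("qubits are not connected")


def swaps_needed(coupling: dict[int, list[int]], a: int, b: int) -> int:
    """SWAPs required to make qubits a and b adjacent (naive routing)."""
    return max(shortest_path_length(coupling, a, b) - 1, 0)


chain = line_topology(5)
swaps = swaps_needed(chain, 0, 4)
print(swaps, "SWAPs, i.e.", 3 * swaps, "extra CNOTs")
```

On a five-qubit chain, a single two-qubit gate between the end qubits costs three SWAPs, or nine additional CNOTs, each of which adds both latency and error.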

Enterprise integration presents practical obstacles as well. Current quantum systems require specialized infrastructure including cryogenic cooling (for superconducting qubits), ultra-high vacuum systems (for trapped ions), or other highly controlled environments that are difficult to integrate with conventional data center infrastructure. These systems typically consume significant power and physical space relative to their computational output.

The software stack for quantum computing remains immature compared to classical computing ecosystems. Enterprise-grade development tools, debugging capabilities, and performance optimization frameworks are still evolving, creating barriers for adoption in production environments. Additionally, the shortage of quantum computing expertise within enterprise IT teams limits effective implementation and operation.

Cost considerations cannot be overlooked. The total cost of ownership for quantum computing resources remains prohibitively high for most enterprise use cases, with limited return on investment in the near term due to the aforementioned technical limitations.

Existing Quantum Model Evaluation Frameworks

01 Quantum Computing Architecture Scalability

Quantum computing architectures are being developed to address scalability challenges in quantum models. These architectures include novel approaches to qubit connectivity, error correction mechanisms, and hardware-software integration that allow quantum systems to scale beyond current limitations. Innovations in quantum circuit design and topology enable more efficient computation as the number of qubits increases, making larger quantum models feasible for practical applications.

- Quantum computing architecture scalability: Scalable quantum computing architectures are designed to overcome limitations in traditional quantum systems. These architectures incorporate modular approaches, distributed quantum processing, and novel interconnection methods to enable the expansion of quantum systems beyond current physical constraints. Scalable designs address challenges related to qubit coherence, error rates, and connectivity as system size increases, allowing quantum models to be applied to increasingly complex problems.

- Error mitigation in scalable quantum models: Error mitigation techniques are essential for scaling quantum models to practical applications. These approaches include error correction codes, noise-resilient quantum gates, and hybrid classical-quantum algorithms that can compensate for quantum decoherence and gate errors. Advanced error mitigation strategies enable quantum models to maintain computational accuracy as they scale to larger problem sizes, making them more viable for real-world applications.

- Resource optimization for quantum model scaling: Resource optimization techniques focus on efficient allocation and utilization of quantum resources to enable model scalability. These methods include quantum circuit compression, qubit reuse strategies, and optimized quantum algorithm design. By minimizing resource requirements while maintaining computational power, these approaches allow quantum models to scale to larger problem sizes within the constraints of available quantum hardware.

- Hybrid quantum-classical computing frameworks: Hybrid quantum-classical frameworks combine the strengths of both computing paradigms to enhance quantum model scalability. These approaches distribute computational tasks between quantum processors and classical computers, allowing quantum resources to be focused on the most suitable portions of an algorithm. Variational quantum algorithms, quantum machine learning with classical pre-processing, and federated quantum learning are examples that enable practical scaling of quantum models for complex applications.

- Hardware-specific quantum model optimization: Hardware-specific optimization techniques adapt quantum models to the unique characteristics and constraints of particular quantum computing platforms. These approaches include custom gate sets, topology-aware circuit mapping, and hardware-native algorithm design. By tailoring quantum models to leverage the strengths and mitigate the weaknesses of specific quantum hardware implementations, these techniques improve scalability and performance across different quantum computing architectures.
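
The hybrid quantum-classical pattern in the list above can be illustrated with a toy variational loop: a classical optimizer proposes a parameter, a quantum routine returns an expectation value, and the optimizer updates the parameter. In this sketch the quantum call is replaced by its closed-form result for one qubit (the expectation of Z after applying Ry(θ) to |0⟩ is cos θ), so it runs without any quantum SDK. The function names, learning rate, and step count are illustrative assumptions.

```python
import math
from typing import Callable


def expectation_z(theta: float) -> float:
    """Stand-in for a QPU call: <Z> after Ry(theta) on |0> equals cos(theta)."""
    return math.cos(theta)


def parameter_shift_gradient(evaluate: Callable[[float], float], theta: float) -> float:
    """Gradient from two extra circuit evaluations (parameter-shift rule)."""
    shift = math.pi / 2
    return 0.5 * (evaluate(theta + shift) - evaluate(theta - shift))


def minimize(evaluate: Callable[[float], float], theta0: float = 0.1,
             learning_rate: float = 0.4, steps: int = 100) -> tuple[float, float]:
    """Classical gradient descent wrapped around the quantum evaluation."""
    theta = theta0
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(evaluate, theta)
    return theta, evaluate(theta)


theta_opt, energy = minimize(expectation_z)
print(f"theta = {theta_opt:.4f}, energy = {energy:.6f}")
```

Each optimizer step costs two quantum evaluations per parameter, which is why the number of circuit executions, rather than qubit count alone, often dominates the scalability picture for variational workloads.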

02 Error Mitigation in Scalable Quantum Models

Error mitigation techniques are essential for scaling quantum models to practical sizes. These approaches include quantum error correction codes, noise-resilient algorithm design, and hybrid classical-quantum methods that compensate for quantum decoherence. Advanced error suppression techniques allow quantum models to maintain computational integrity at scale, enabling more complex quantum simulations and calculations that would otherwise be limited by quantum noise and environmental interference.

03 Distributed Quantum Computing for Model Scaling

Distributed quantum computing approaches enable quantum models to scale beyond the limitations of single quantum processors. These systems utilize quantum networks, entanglement distribution protocols, and modular quantum processing units to create larger effective quantum systems. By connecting multiple smaller quantum processors, these architectures allow quantum models to scale to sizes that would be impractical on monolithic systems, while maintaining the quantum advantages of the underlying computation.

04 Hybrid Classical-Quantum Approaches for Scalability

Hybrid classical-quantum approaches offer practical pathways to scale quantum models by optimally distributing computational tasks between classical and quantum resources. These methods leverage classical preprocessing, quantum subroutines for specific computational bottlenecks, and post-processing techniques to maximize the effective scale of quantum models. By focusing quantum resources on the parts of algorithms where they provide the greatest advantage, these hybrid approaches enable practical scaling of quantum models despite current hardware limitations.

05 Quantum Model Compression and Optimization

Quantum model compression and optimization techniques enable larger quantum models to run on resource-constrained quantum hardware. These approaches include quantum circuit compression algorithms, gate optimization methods, and qubit-efficient encoding schemes that reduce the resource requirements of quantum models. By minimizing the quantum resources needed while preserving computational capabilities, these techniques allow quantum models to scale to larger problem sizes even on intermediate-scale quantum devices.

Key Industry Players in Quantum Data Center Solutions

Quantum model scalability evaluation in data centers is currently in an early growth phase, with the market expected to expand significantly as quantum computing transitions from research to practical applications. The global quantum computing market is projected to reach $1.7 billion by 2026, with scalability solutions being a critical segment. Technologically, the field shows varying maturity levels across players. Industry leaders like Google, Microsoft, and IBM are developing comprehensive quantum cloud infrastructures, while specialized quantum companies such as D-Wave Systems, Rigetti, and Origin Quantum are focusing on hardware-specific scalability solutions. Academic institutions including MIT and University of Chicago are contributing fundamental research. Enterprise technology providers like VMware, Dell, and Huawei are increasingly integrating quantum-classical hybrid approaches for data center implementations, addressing the scalability challenges at the infrastructure level.

D-Wave Systems, Inc.

Technical Solution: D-Wave has developed a comprehensive framework for evaluating quantum model scalability in data centers through their Advantage™ quantum system and Leap™ quantum cloud service. Their approach focuses on hybrid quantum-classical computing models where quantum processing units (QPUs) are integrated with classical computing infrastructure. D-Wave's quantum annealing technology allows for evaluation of scalability through metrics including qubit connectivity, coherence times, and quantum volume. Their D-Wave Ocean SDK provides tools for benchmarking quantum performance against classical alternatives, measuring quantum advantage crossover points for specific workloads. The company has implemented a quantum-as-a-service model that enables data centers to incrementally scale quantum resources without complete infrastructure overhauls, using their quantum cloud service to monitor real-time performance metrics and resource utilization patterns across distributed quantum-classical workloads.

Strengths: Industry-leading expertise in quantum annealing systems with practical implementation experience in commercial environments. Their hybrid quantum-classical approach provides a pragmatic path to quantum integration in existing data centers.

Weaknesses: Their quantum annealing approach is specialized for optimization problems rather than general-purpose quantum computing, potentially limiting applicability across all quantum computing use cases.

Microsoft Technology Licensing LLC

Technical Solution: Microsoft has developed Azure Quantum as a comprehensive platform for evaluating quantum model scalability in data centers. Their approach integrates quantum hardware access from multiple providers (IonQ, Honeywell (now Quantinuum), QCI) with classical high-performance computing resources. Microsoft's Resource Estimation toolkit allows organizations to predict quantum resource requirements for specific algorithms at different scales, helping data centers plan capacity and infrastructure needs. Their Quantum Development Kit (QDK) and Q# programming language provide tools for benchmarking quantum algorithms against classical alternatives and measuring performance metrics like circuit depth, gate fidelity, and qubit requirements. Microsoft has pioneered the concept of "quantum-ready" data centers, which involves establishing baseline infrastructure requirements, cooling systems, and connectivity architectures that can accommodate future quantum hardware integration. Their quantum efficiency metrics track performance-per-watt and space utilization factors critical for data center operations.

Strengths: Comprehensive cloud-based approach that abstracts hardware complexity and provides access to multiple quantum technologies through a unified interface. Strong integration with existing enterprise data center infrastructure and Microsoft's cloud ecosystem.

Weaknesses: Relies on third-party quantum hardware providers rather than developing proprietary quantum processing units, potentially limiting optimization capabilities for their specific platform architecture.

Critical Patents in Quantum Scalability Assessment

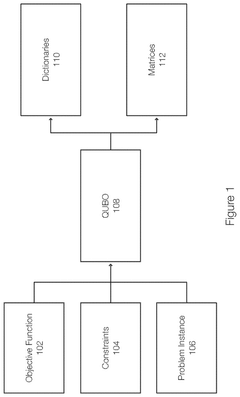

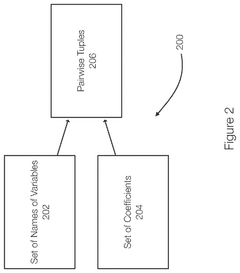

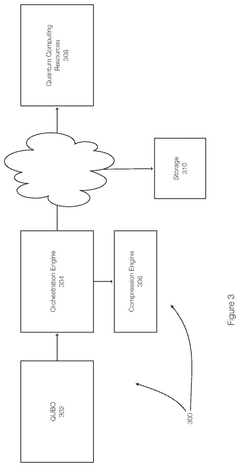

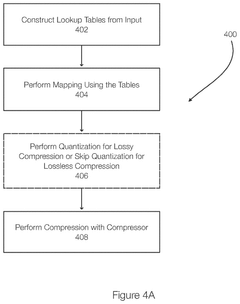

A compression method for QUBOs and Ising models

Patent · Pending · US20250202500A1

Innovation

- The proposed solution involves constructing lookup tables for unique variable names and coefficients in QUBO models, translating these into index-based representations, and applying entropy encoding and quantization to achieve lossy or lossless compression.
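
The lookup-table idea in this summary can be illustrated with a small, lossless index encoding of a QUBO. This is only a sketch of the general technique and should not be read as the patented method: the entropy-encoding and quantization stages mentioned above are omitted, and the function names and data layout are hypothetical.

```python
def encode_qubo(qubo: dict[tuple[str, str], float]):
    """Replace variable names and coefficients with indices into lookup tables."""
    variables = sorted({name for pair in qubo for name in pair})
    coefficients = sorted(set(qubo.values()))
    var_index = {name: i for i, name in enumerate(variables)}
    coef_index = {value: i for i, value in enumerate(coefficients)}
    terms = [(var_index[a], var_index[b], coef_index[c])
             for (a, b), c in qubo.items()]
    return variables, coefficients, terms


def decode_qubo(variables, coefficients, terms) -> dict[tuple[str, str], float]:
    """Invert encode_qubo; the round trip is exact because nothing is quantized."""
    return {(variables[i], variables[j]): coefficients[k] for i, j, k in terms}


# Toy model: long variable names and only two distinct coefficient values.
qubo = {
    ("route_a_vehicle_1", "route_a_vehicle_1"): -1.0,
    ("route_b_vehicle_1", "route_b_vehicle_1"): -1.0,
    ("route_a_vehicle_1", "route_b_vehicle_1"): 2.0,
}
variables, coefficients, terms = encode_qubo(qubo)
print(variables, coefficients, terms)
assert decode_qubo(variables, coefficients, terms) == qubo
```

The saving comes from storing each long name and each repeated coefficient once; the small integer triples that remain are also a natural input for a subsequent entropy coder.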

System and method for analysis of cloud droplet dynamics in high and low vorticity region using direct numeric simulation (DNS) data

Patent · Inactive · IN202321007689A

Innovation

- Integration of quantum machine learning with direct numeric simulation data to identify high and low vorticity regions in cloud droplet dynamics, using quantum computing to process and analyze three-dimensional velocity components, temperature, and humidity features, employing variational quantum circuits and quantum kernel modules for enhanced accuracy and efficiency.

Infrastructure Requirements for Quantum Data Centers

Quantum computing infrastructure in data centers requires specialized environments and systems that differ significantly from classical computing setups. The integration of quantum processing units demands precise environmental controls, with temperature requirements approaching absolute zero (-273.15°C) for superconducting qubits. These extreme cooling needs necessitate sophisticated cryogenic systems, typically utilizing dilution refrigerators that consume substantial space and power while requiring specialized maintenance protocols.

Power infrastructure represents another critical consideration, as quantum systems demand both high-quality, stable power for operation and significant energy for cooling systems. Uninterruptible power supplies with advanced filtering capabilities are essential to prevent quantum decoherence caused by electrical fluctuations. Additionally, data centers must implement quantum-specific power distribution architectures that minimize electromagnetic interference which can disrupt qubit coherence.

Network infrastructure for quantum data centers must support both classical and quantum information exchange. This hybrid architecture requires ultra-low latency connections between quantum processors and classical control systems, often utilizing specialized fiber optic cables with minimal signal degradation. Quantum-classical interfaces demand purpose-built hardware that can translate between quantum states and classical binary information with minimal loss of quantum information.

Physical space considerations extend beyond mere square footage to include vibration isolation, electromagnetic shielding, and specialized flooring systems. Quantum processors are extremely sensitive to environmental disturbances, requiring data centers to implement advanced isolation technologies such as pneumatic vibration dampening platforms and Faraday cage-like structures to shield quantum circuits from external electromagnetic radiation.

Security infrastructure must protect both physical quantum hardware and the quantum information being processed. This includes specialized access control systems, continuous environmental monitoring, and quantum-specific encryption protocols for data in transit between quantum and classical systems. As quantum computers can potentially break certain classical encryption methods, data centers must implement quantum-resistant security measures to protect sensitive information.

Scalability assessment frameworks must evaluate how these infrastructure requirements change as quantum systems grow from tens to thousands of qubits. This includes modeling the non-linear increases in cooling capacity, power requirements, and space needs as quantum systems scale, while considering the technological roadmaps of different quantum computing modalities and their respective infrastructure implications.

Power infrastructure represents another critical consideration, as quantum systems demand both high-quality, stable power for operation and significant energy for cooling systems. Uninterruptible power supplies with advanced filtering capabilities are essential to prevent quantum decoherence caused by electrical fluctuations. Additionally, data centers must implement quantum-specific power distribution architectures that minimize electromagnetic interference which can disrupt qubit coherence.

Network infrastructure for quantum data centers must support both classical and quantum information exchange. This hybrid architecture requires ultra-low latency connections between quantum processors and classical control systems, often utilizing specialized fiber optic cables with minimal signal degradation. Quantum-classical interfaces demand purpose-built hardware that can translate between quantum states and classical binary information with minimal loss of quantum information.

Physical space considerations extend beyond mere square footage to include vibration isolation, electromagnetic shielding, and specialized flooring systems. Quantum processors are extremely sensitive to environmental disturbances, requiring data centers to implement advanced isolation technologies such as pneumatic vibration dampening platforms and Faraday cage-like structures to shield quantum circuits from external electromagnetic radiation.

Security infrastructure must protect both physical quantum hardware and the quantum information being processed. This includes specialized access control systems, continuous environmental monitoring, and quantum-specific encryption protocols for data in transit between quantum and classical systems. As quantum computers can potentially break certain classical encryption methods, data centers must implement quantum-resistant security measures to protect sensitive information.

Energy Efficiency Considerations for Quantum Systems

Energy efficiency is a critical dimension in evaluating quantum model scalability within data center environments. Quantum computing systems demand substantial energy, primarily because of the extreme cooling needed to maintain quantum coherence. Many current quantum processors, notably superconducting ones, operate at temperatures close to absolute zero (on the order of 10 to 20 millikelvin), which requires sophisticated cryogenic infrastructure that consumes significant power.

When assessing quantum model scalability, energy consumption metrics must be analyzed across multiple operational layers. Power usage effectiveness (PUE), the ratio of total facility energy to the energy delivered to computing equipment, can be far higher for quantum systems than for classical computing infrastructure, potentially by orders of magnitude depending on what is counted as the computing load. This disparity stems from the additional energy overhead required for maintaining quantum states, error correction processes, and the specialized control electronics that interface with quantum processors.
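
As a rough illustration of why the measurement boundary matters, the sketch below computes a PUE-style ratio from per-layer power draws. The layer names and kilowatt values are hypothetical placeholders, and the `pue` helper is an assumption made for this example rather than a standardized quantum metric.

```python
def pue(layers_kw: dict[str, float], compute_layers: set[str]) -> float:
    """Total facility power divided by the power attributed to computing."""
    total = sum(layers_kw.values())
    compute = sum(kw for name, kw in layers_kw.items() if name in compute_layers)
    if compute <= 0:
        raise ValueError("compute load must be positive")
    return total / compute


# Hypothetical power draw per operational layer, in kilowatts.
layers_kw = {
    "cryogenics": 25.0,
    "control_electronics": 5.0,
    "classical_host": 3.0,
    "facility_overhead": 4.0,
}

# Broad boundary: control electronics and classical host count as compute.
broad = pue(layers_kw, {"control_electronics", "classical_host"})
# Narrow boundary: only the control electronics count as compute.
narrow = pue(layers_kw, {"control_electronics"})
print(round(broad, 3), round(narrow, 3))
```

The same facility yields different ratios under the two boundaries, so any comparison with classical PUE figures should state which layers were counted as the computing load.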

The energy cost per quantum operation presents another crucial evaluation metric. As quantum models scale, the relationship between computational advantage and energy expenditure becomes increasingly complex. Research indicates that while quantum systems may offer exponential computational advantages for specific problems, their energy efficiency curves do not necessarily follow the same trajectory. This creates a nuanced optimization challenge when determining appropriate scaling parameters.
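
The gap between speed and energy efficiency can be seen by comparing energy to solution rather than runtime alone. The following sketch uses made-up power and runtime figures, and the function names are hypothetical; it only shows how a speedup and an energy penalty can coexist.

```python
def energy_kwh(avg_power_kw: float, runtime_s: float) -> float:
    """Energy consumed by a run, in kilowatt-hours."""
    return avg_power_kw * runtime_s / 3600.0


def compare(classical_kw: float, classical_s: float,
            quantum_kw: float, quantum_s: float) -> tuple[float, float]:
    """Return (speedup, energy_ratio); a value above 1 favors the quantum run."""
    speedup = classical_s / quantum_s
    energy_ratio = (energy_kwh(classical_kw, classical_s)
                    / energy_kwh(quantum_kw, quantum_s))
    return speedup, energy_ratio


# Placeholder scenario: the quantum run is 10x faster but draws 30x the power.
speedup, energy_ratio = compare(classical_kw=1.0, classical_s=100.0,
                                quantum_kw=30.0, quantum_s=10.0)
print(speedup, round(energy_ratio, 3))
```

In this placeholder scenario the quantum run finishes sooner yet uses about three times the energy, which is the kind of divergence a scaling assessment needs to surface.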

Integration of quantum systems within existing data center infrastructures introduces additional energy considerations. Hybrid quantum-classical computing approaches, which are currently the most practical implementation strategy, require careful energy allocation between quantum and classical components. The data transfer interfaces between these systems introduce further energy overhead that must be factored into scalability assessments.
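
A minimal sketch of such an energy budget for an iterative hybrid workload follows. All parameters (power draws, per-iteration durations, bytes exchanged, and the per-byte interface cost) are illustrative assumptions, and `hybrid_energy_j` is a hypothetical helper rather than part of any existing toolkit.

```python
def hybrid_energy_j(iterations: int,
                    quantum_w: float, quantum_s: float,
                    classical_w: float, classical_s: float,
                    bytes_per_iter: float,
                    interface_j_per_byte: float) -> dict[str, float]:
    """Split the energy of a hybrid loop into quantum, classical, and interface parts (joules)."""
    quantum = quantum_w * quantum_s * iterations
    classical = classical_w * classical_s * iterations
    interface = bytes_per_iter * interface_j_per_byte * iterations
    return {
        "quantum": quantum,
        "classical": classical,
        "interface": interface,
        "total": quantum + classical + interface,
    }


# Placeholder figures for a 100-iteration variational-style loop.
budget = hybrid_energy_j(iterations=100,
                         quantum_w=20_000.0, quantum_s=0.5,
                         classical_w=300.0, classical_s=0.2,
                         bytes_per_iter=1_000_000.0,
                         interface_j_per_byte=1e-8)
for part, joules in budget.items():
    print(part, round(joules, 2))
```

Breaking the total into parts shows which component dominates as the iteration count or data volume grows, which helps decide where optimization effort is best spent.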

Future quantum model scalability evaluations must incorporate emerging energy-efficient quantum technologies. Developments in room-temperature quantum computing, though still in early research phases, could dramatically alter energy efficiency profiles. Similarly, advancements in superconducting materials and cryogenic systems may reduce cooling energy requirements, potentially flattening the energy scaling curve as quantum systems grow in complexity.

Establishing standardized energy efficiency benchmarks specifically designed for quantum computing represents an essential step toward meaningful scalability evaluations. These benchmarks should account for both the computational advantages offered by quantum models and their corresponding energy footprints, enabling data center operators to make informed decisions regarding quantum technology adoption and scaling strategies.
