Leading Quantum Models for Advanced Scientific Exploration

SEP 4, 2025 | 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Quantum Computing Evolution and Research Objectives

Quantum computing has evolved significantly since its theoretical conception in the early 1980s by Richard Feynman and others who envisioned leveraging quantum mechanical phenomena to perform computations beyond classical capabilities. The field progressed through several critical phases, beginning with theoretical foundations in the 1980s, followed by the development of quantum algorithms like Shor's and Grover's in the 1990s, and the creation of the first rudimentary quantum bits (qubits) in the late 1990s and early 2000s.

The past decade has witnessed unprecedented acceleration in quantum computing development, marked by significant investments from technology giants including IBM, Google, Microsoft, and Amazon, alongside specialized quantum-focused companies like D-Wave Systems, Rigetti Computing, and IonQ. This period has been characterized by the transition from purely theoretical constructs to increasingly practical implementations, culminating in demonstrations of quantum advantage, such as Google's 2019 announcement of achieving quantum supremacy with its 53-qubit Sycamore processor.

Current quantum computing architectures span multiple approaches, including superconducting circuits, trapped ions, photonic systems, topological qubits (still at an early experimental stage), and neutral atoms. Each architecture presents distinct advantages and challenges in qubit coherence times, error rates, scalability, and operating temperatures. The field is now in what John Preskill termed the Noisy Intermediate-Scale Quantum (NISQ) era, characterized by systems with roughly 50-1000 qubits that lack full error correction but may be able to solve specialized problems.

The research objectives in quantum computing are multifaceted and ambitious. Primary goals include developing more stable qubits with longer coherence times and lower error rates, creating scalable architectures capable of supporting thousands or millions of qubits, and implementing effective quantum error correction protocols. Researchers are also focused on identifying practical quantum advantage use cases in fields such as materials science, drug discovery, optimization problems, and cryptography.

For quantum models specifically, objectives include developing more efficient quantum machine learning algorithms that can operate within the constraints of NISQ devices, creating hybrid classical-quantum computational frameworks, and establishing standardized benchmarks for evaluating quantum model performance. Additionally, researchers aim to bridge the gap between theoretical quantum advantages and practical implementations by designing algorithms tailored to specific hardware architectures and limitations.

The ultimate vision driving quantum computing research is to realize fault-tolerant quantum computers capable of solving previously intractable problems, potentially revolutionizing fields from pharmaceuticals to finance, materials science to logistics, and fundamentally transforming our computational capabilities and scientific understanding.

Market Analysis for Quantum Scientific Applications

The quantum computing market for scientific applications is growing rapidly, driven by increasing demand for computational power in complex scientific domains. Current estimates value the segment serving scientific exploration at approximately $500 million, with projections of $2.5 billion by 2028. This corresponds to a compound annual growth rate of nearly 38%, significantly outpacing traditional high-performance computing markets.
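The quoted growth rate can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The text does not state the base year of the $500 million estimate, so the sketch below assumes a five-year horizon (for example 2023 to 2028); that is the horizon under which the two figures imply roughly 38% per year. The helper names `cagr` and `project` are illustrative only.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> list[float]:
    """Year-by-year values compounding at a constant annual `rate`."""
    return [start_value * (1.0 + rate) ** t for t in range(years + 1)]


# Figures from the text, in millions of USD; the 5-year horizon is an assumption.
start, end, horizon = 500.0, 2500.0, 5
rate = cagr(start, end, horizon)
print(f"CAGR over {horizon} years: {rate:.1%}")  # prints "CAGR over 5 years: 38.0%"

# The same endpoints over a shorter (3-year) horizon imply a much steeper rate.
print(f"CAGR over 3 years: {cagr(start, end, 3):.1%}")

for year, value in enumerate(project(start, rate, horizon)):
    print(f"year {year}: ${value:,.0f}M")
```

If the base year were 2025 instead, the same endpoints would imply roughly 71% per year, so the horizon behind any projected growth rate is worth stating explicitly.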

Scientific research institutions constitute the largest market segment, accounting for roughly 42% of current quantum computing applications. This is followed by pharmaceutical and materials science companies at 28%, energy sector research at 17%, and aerospace/defense applications comprising 13%. The geographical distribution shows North America leading with 45% market share, followed by Europe (30%), Asia-Pacific (20%), and other regions (5%).

Key market drivers include rapidly growing scientific data volumes that require advanced processing and the increasing complexity of simulation models in fields such as climate science, molecular biology, and particle physics. Evidence of quantum advantage in these domains is accumulating, with reported benchmarks showing quantum algorithms outperforming classical approaches by factors of 10-100x on specific scientific problems.

Customer demand analysis reveals three distinct market segments: early adopters seeking competitive advantage through quantum exploration (15% of market), mainstream scientific organizations preparing for quantum integration (65%), and conservative institutions taking a wait-and-see approach (20%). The willingness to invest varies significantly across these segments, with early adopters allocating substantial resources to quantum initiatives despite uncertain returns.

Market barriers include the high cost of quantum hardware acquisition and maintenance, estimated at $10-15 million for research-grade systems, and the significant expertise required for effective implementation. The talent shortage in quantum algorithm development represents another substantial market constraint, with demand for quantum scientists exceeding supply by approximately 3:1.

Emerging market opportunities include quantum-as-a-service models, which are growing at 45% annually and democratizing access to quantum resources. Specialized quantum software platforms for scientific applications represent another high-growth segment, with venture capital investments in this space reaching $850 million in the past year alone. Industry partnerships between quantum hardware providers and scientific research institutions are increasingly common, creating new market dynamics and accelerating adoption cycles.

Current Quantum Models Landscape and Barriers

The quantum computing landscape is currently dominated by several key models, each with distinct advantages and limitations. Superconducting qubits, pioneered by companies such as IBM and Google, are among the most mature quantum technologies, offering fast gate operations and high gate fidelities, although their coherence times are shorter than those of trapped ions. These systems operate at temperatures near absolute zero and have been used to demonstrate quantum supremacy in controlled experiments. However, they face significant scaling challenges due to their physical footprint and the complex cryogenic infrastructure they require.

Trapped-ion quantum computers, developed by companies such as IonQ and Honeywell (whose quantum business is now part of Quantinuum), offer high qubit quality with longer coherence times and higher-fidelity operations. These systems excel in precision but are slower to operate and harder to miniaturize. The physical constraints of ion-trapping mechanisms limit the potential for rapid scaling despite their low error rates.

Photonic quantum systems present a compelling alternative because photonic qubits can operate at room temperature (although high-efficiency single-photon detectors often still require cryogenic cooling), potentially offering advantages in quantum communication networks. However, they currently lag other approaches in computational power and face challenges in reliable single-photon generation and detection.

Topological quantum computing, Microsoft's primary focus, promises fault-tolerance through exotic quasiparticles called anyons, but remains largely theoretical with limited experimental validation. This approach represents a high-risk, high-reward pathway that could potentially overcome decoherence issues plaguing other models.

Across all quantum models, several universal barriers persist. Quantum decoherence—the loss of quantum information through interaction with the environment—remains the fundamental challenge. Current quantum systems maintain coherence for microseconds to milliseconds, severely limiting computation time. Error correction techniques require significant qubit overhead, with estimates suggesting thousands of physical qubits needed for each logical qubit in fault-tolerant systems.
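A back-of-the-envelope estimate shows where the "thousands of physical qubits per logical qubit" figure comes from. The sketch assumes the rotated surface code, which uses 2d² - 1 physical qubits (d² data qubits plus d² - 1 measurement qubits) at code distance d, together with the commonly quoted heuristic p_L ≈ A (p / p_th)^((d+1)/2). The prefactor A = 0.1, the threshold p_th = 1%, and the target logical error rate of 10⁻¹² are illustrative assumptions, not measured values for any particular device.

```python
P_TH = 1e-2  # assumed threshold physical error rate
A = 0.1      # assumed prefactor in the scaling heuristic


def logical_error_rate(p: float, d: int) -> float:
    """Heuristic logical error rate per QEC round: A * (p / P_TH) ** ((d + 1) / 2)."""
    return A * (p / P_TH) ** ((d + 1) / 2)


def physical_qubits(d: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


def required_distance(p: float, target: float, max_d: int = 101) -> int:
    """Smallest odd code distance whose estimated logical error rate meets `target`."""
    if p >= P_TH:
        raise ValueError("physical error rate must be below the assumed threshold")
    for d in range(3, max_d + 1, 2):
        if logical_error_rate(p, d) <= target:
            return d
    raise ValueError("target not reachable within max_d")


# Physical error rates to compare; the 1e-12 logical target is also an assumption.
for p in (1e-4, 2e-3, 5e-3):
    d = required_distance(p, target=1e-12)
    print(f"p={p:.0e}: distance {d}, {physical_qubits(d)} physical qubits per logical qubit")
```

Under these assumptions the overhead climbs from a few hundred to more than ten thousand physical qubits per logical qubit as the physical error rate approaches threshold, which is why lowering physical error rates matters as much as adding qubits.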

Scalability presents another critical barrier, with current systems limited to under 200 qubits with meaningful coherence. The engineering challenges of maintaining quantum properties while scaling up represent a significant hurdle for practical applications. Additionally, the quantum-classical interface requires substantial improvement, as data transfer between quantum processors and classical systems creates bottlenecks in hybrid computing architectures.

Materials science limitations also constrain progress, as researchers are still searching for materials that improve coherence times and reduce error rates. The interdisciplinary nature of these challenges necessitates collaboration across physics, materials science, engineering, and computer science to advance quantum models toward practical scientific applications.

State-of-the-Art Quantum Modeling Solutions

01 Quantum computing models and algorithms

Quantum computing models use quantum mechanical phenomena to perform computational tasks. They rely on quantum bits (qubits), which can occupy superpositions of states; combined with entanglement and interference, this lets suitably designed quantum algorithms address problems in cryptography, optimization, and simulation that are intractable for classical computers. Development of these models focuses on improving qubit coherence, error correction, and scalability to achieve practical quantum advantage.

- Quantum Computing Models and Algorithms: Quantum computing models use quantum mechanical phenomena to perform computational tasks. Their qubits can be placed in superpositions of states, and algorithms exploit superposition, entanglement, and interference together. Quantum algorithms designed for these models can solve certain problems much faster than the best known classical algorithms (superpolynomially faster in the case of Shor's factoring algorithm), particularly in cryptography, optimization, and simulation of quantum systems.

- Quantum Machine Learning Applications: Quantum machine learning combines quantum computing with machine learning techniques to enhance data processing and pattern recognition capabilities. These models can process complex datasets more efficiently by utilizing quantum properties like entanglement and superposition. Applications include improved classification algorithms, quantum neural networks, and enhanced optimization for training models, potentially offering significant advantages over classical machine learning approaches for specific problem domains.

- Quantum Simulation and Modeling Systems: Quantum simulation systems are designed to model complex quantum phenomena that are difficult or impossible to simulate using classical computers. These systems can accurately represent quantum mechanical behaviors of molecules, materials, and physical systems. By leveraging quantum properties, these models provide insights into chemical reactions, material properties, and fundamental physics, enabling advancements in drug discovery, materials science, and understanding of quantum mechanics.

- Quantum Error Correction and Fault Tolerance: Quantum error correction techniques are essential for maintaining the integrity of quantum information in the presence of noise and decoherence. These models implement methods to detect and correct errors in quantum systems without directly measuring the quantum state, which would destroy superposition. Fault-tolerant quantum computing designs incorporate error correction at the architectural level, enabling reliable quantum computation even with imperfect physical qubits and operations.

- Quantum Communication and Cryptography Frameworks: Quantum communication models leverage quantum mechanical principles to establish secure communication channels. These frameworks utilize quantum key distribution protocols that can detect eavesdropping attempts due to the fundamental properties of quantum mechanics. Quantum cryptography systems provide theoretically unbreakable encryption methods based on quantum phenomena, offering security advantages over classical cryptographic approaches in an era of increasing computational power.
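The error-correction item above notes that errors are detected without directly measuring the encoded state. The smallest illustration is the three-qubit bit-flip repetition code, simulated below with a plain NumPy state vector. It corrects only a single bit flip, so it is a teaching sketch, not a fault-tolerant scheme such as the surface code. The syndrome is read from the parities Z₀Z₁ and Z₁Z₂, which take the same value on both branches of the superposition and therefore locate the error without revealing the amplitudes a and b. As a simulation shortcut (an assumption of this sketch), the parities are computed as expectation values rather than measured with ancilla qubits as a device would.

```python
import numpy as np

I2 = np.eye(2)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def on_qubit(op: np.ndarray, k: int, n: int = 3) -> np.ndarray:
    """Embed a single-qubit operator acting on qubit k (qubit 0 is leftmost)."""
    mats = [op if i == k else I2 for i in range(n)]
    out = mats[0]
    for m in mats[1:]:
        out = np.kron(out, m)
    return out


def encode(a: complex, b: complex) -> np.ndarray:
    """Map a|0> + b|1> to the code state a|000> + b|111>."""
    state = np.zeros(8, dtype=complex)
    state[0b000], state[0b111] = a, b
    return state


def syndrome(state: np.ndarray) -> tuple[int, int]:
    """Parities Z0Z1 and Z1Z2; after at most one bit flip each is exactly +1 or -1."""
    z01 = np.vdot(state, on_qubit(Z, 0) @ on_qubit(Z, 1) @ state).real
    z12 = np.vdot(state, on_qubit(Z, 1) @ on_qubit(Z, 2) @ state).real
    return int(round(z01)), int(round(z12))


# Which qubit to flip back for each syndrome (None means no error detected).
CORRECTION = {(1, 1): None, (-1, 1): 0, (-1, -1): 1, (1, -1): 2}


def correct(state: np.ndarray) -> np.ndarray:
    """Apply X to the qubit identified by the syndrome."""
    k = CORRECTION[syndrome(state)]
    return state if k is None else on_qubit(X, k) @ state


a, b = 0.6, 0.8j  # arbitrary normalized amplitudes
logical = encode(a, b)
for flipped in range(3):
    corrupted = on_qubit(X, flipped) @ logical
    recovered = correct(corrupted)
    print(flipped, syndrome(corrupted), np.allclose(recovered, logical))
```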

02 Quantum machine learning frameworks

Quantum machine learning combines quantum computing principles with machine learning techniques to create more powerful predictive models. These frameworks utilize quantum circuits to process data and perform complex pattern recognition tasks with potentially exponential speedups over classical methods. Quantum neural networks, variational quantum algorithms, and quantum support vector machines are being developed to handle high-dimensional data analysis, feature extraction, and classification problems more efficiently than traditional approaches.

03 Quantum simulation for materials and chemical processes

Quantum models enable accurate simulation of quantum mechanical systems that are computationally intensive for classical computers. These models are particularly valuable for simulating molecular interactions, chemical reactions, and material properties at the quantum level. By leveraging quantum superposition and entanglement, these simulation frameworks can predict behavior of complex molecules, catalyst performance, and novel materials with unprecedented accuracy, accelerating discovery in pharmaceuticals, energy storage, and advanced materials.

04 Quantum error correction and fault-tolerant quantum systems

Quantum error correction models address the inherent fragility of quantum states against decoherence and environmental noise. These frameworks employ redundancy and specialized encoding to detect and correct errors without collapsing the quantum information. Fault-tolerant quantum system designs incorporate error correction at the architectural level, enabling reliable quantum computation even with imperfect physical components. Surface codes, topological quantum computing models, and quantum error mitigation techniques are being developed to improve the reliability and scalability of quantum systems.

05 Quantum communication and cryptography models

Quantum communication models leverage quantum mechanical principles to achieve secure information transfer. These models utilize quantum key distribution protocols that can detect eavesdropping attempts due to the no-cloning theorem of quantum mechanics. Quantum cryptography frameworks provide theoretically unbreakable encryption methods based on quantum entanglement and superposition. Quantum network architectures are being developed to enable long-distance quantum communication through quantum repeaters and quantum memory systems, forming the foundation for a future quantum internet.
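The eavesdropping-detection property can be illustrated with a classical Monte Carlo simulation of BB84, the prepare-and-measure QKD protocol of Bennett and Brassard (the text does not say which QKD protocols it has in mind, so BB84 is chosen here as a representative example). The simulated attacker uses an intercept-resend strategy: measuring each photon in a randomly chosen basis disturbs about a quarter of the sifted bits, which Alice and Bob can detect by comparing a sample of their key. The sketch assumes ideal channels and detectors and omits the error-correction and privacy-amplification steps of a real deployment; `bb84_qber` and `measure` are illustrative names.

```python
import random


def measure(bit: int, prep_basis: int, meas_basis: int, rng: random.Random) -> int:
    """Ideal BB84 measurement: matching basis returns the bit, otherwise a coin flip."""
    return bit if prep_basis == meas_basis else rng.randint(0, 1)


def bb84_qber(n: int, eavesdrop: bool, seed: int = 0) -> tuple[int, float]:
    """Return (sifted key length, quantum bit error rate) for n transmitted qubits."""
    rng = random.Random(seed)
    sifted, errors = 0, 0
    for _ in range(n):
        bit, alice_basis = rng.randint(0, 1), rng.randint(0, 1)  # basis 0 = Z, 1 = X
        send_bit, send_basis = bit, alice_basis
        if eavesdrop:
            # Intercept-resend: Eve measures in a random basis and re-prepares her result.
            eve_basis = rng.randint(0, 1)
            send_bit = measure(send_bit, send_basis, eve_basis, rng)
            send_basis = eve_basis
        bob_basis = rng.randint(0, 1)
        bob_bit = measure(send_bit, send_basis, bob_basis, rng)
        if bob_basis == alice_basis:  # sifting: keep only rounds with matching bases
            sifted += 1
            errors += int(bob_bit != bit)
    return sifted, errors / sifted


for eve in (False, True):
    length, qber = bb84_qber(100_000, eavesdrop=eve)
    print(f"eavesdropper={eve}: sifted={length}, QBER={qber:.3f}")
```

Without an eavesdropper the ideal-channel error rate is zero; with intercept-resend it settles near 25%, far above what honest noise alone would explain in this idealized model.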

Major Quantum Computing Industry Players

The quantum computing landscape for "Leading Quantum Models for Advanced Scientific Exploration" is currently in an early growth phase, with the market expected to reach $1.3 billion by 2025. The competitive environment features established players such as Google, D-Wave Systems, and Rigetti Computing alongside emerging contenders such as Origin Quantum and Infleqtion (formerly ColdQuanta). Academic institutions including MIT, Harvard, and Caltech are driving fundamental research advances. The technology remains at an early stage of maturity, with quantum hardware achieving 50-100 qubit capabilities while facing stability challenges. Companies are pursuing different technical approaches, including superconducting circuits (Google, Rigetti), quantum annealing (D-Wave), trapped ions (IonQ), and neutral atoms (Infleqtion), creating a fragmented but rapidly evolving ecosystem focused on developing practical quantum advantage for scientific applications.

Origin Quantum Computing Technology (Hefei) Co., Ltd.

Technical Solution: Origin Quantum has developed a comprehensive quantum computing ecosystem centered on their in-house superconducting quantum processors with up to 72 qubits. Their quantum models for scientific exploration include specialized quantum simulation frameworks for materials science and quantum chemistry applications. Origin Quantum's proprietary quantum operating system, QuBox, provides a complete software stack for implementing quantum algorithms on their hardware. Their quantum cloud platform offers researchers access to both quantum simulators and actual quantum processors for developing scientific applications. Origin Quantum has focused on developing practical quantum advantage in specific scientific domains, particularly in simulating quantum many-body systems and molecular structures. Their recent innovations include quantum error correction techniques and quantum machine learning models optimized for scientific data analysis and prediction tasks in chemistry and materials science.

Strengths: Full-stack quantum computing capabilities from hardware to applications; strong focus on practical scientific applications; significant government backing for research and development. Weaknesses: International accessibility and integration challenges; quantum hardware specifications still behind global leaders; limited published benchmarks on quantum advantage demonstrations.

Beijing Baidu Netcom Science & Technology Co., Ltd.

Technical Solution: Baidu has developed Quantum Leaf, a comprehensive quantum computing platform that includes quantum machine learning frameworks designed for scientific exploration. The platform integrates with Paddle Quantum, an open-source quantum machine learning toolkit that enables researchers to build and train quantum neural networks for scientific applications. Baidu's quantum models focus on variational quantum algorithms and quantum-enhanced machine learning for applications in chemistry simulation, materials science, and computational biology. Their quantum infrastructure supports both superconducting and trapped-ion quantum computing paradigms through cloud access. Baidu has invested heavily in quantum-classical hybrid algorithms that leverage its extensive classical AI capabilities alongside quantum processing to address complex scientific modeling challenges. Their recent research includes quantum natural language processing models that could potentially transform scientific literature analysis and knowledge extraction.

Strengths: Strong integration with classical AI infrastructure; comprehensive quantum software development kit; significant research investment in quantum-classical hybrid algorithms. Weaknesses: Limited proprietary quantum hardware compared to some competitors; relatively newer entrant to quantum computing field; quantum advantage demonstrations still primarily theoretical.

Key Quantum Algorithms and Theoretical Frameworks

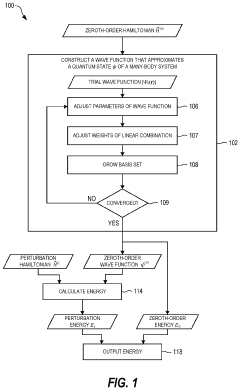

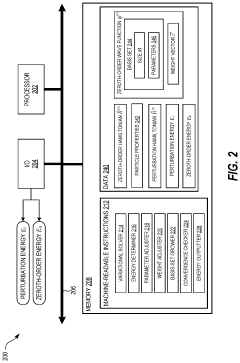

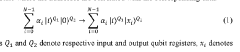

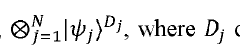

System and method for determining a perturbation energy of a quantum state of a many-body system

Patent | Active | US11922275B2

Innovation

- Implementing a 'finite-nuclear-mass' approach that eliminates the infinite-nuclear-mass assumption, allowing for exact incorporation of nuclear mass effects, thereby simplifying calculations and expanding the validity to a wider range of atomic and molecular states without relying on the Born-Oppenheimer approximation.
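The patent's own method is not described here in enough detail to reproduce. As a much simpler illustration of why the infinite-nuclear-mass assumption matters, the textbook energy levels of a one-electron atom scale with the reduced mass μ = m_e M / (m_e + M) rather than the bare electron mass, giving E_n = -R∞hc · Z² (μ / m_e) / n². The sketch compares both assumptions for hydrogen and deuterium using rounded CODATA values; it is a worked formula, not the patented approach.

```python
from typing import Optional

RYDBERG_EV = 13.605693              # R_inf * h * c in eV (rounded CODATA value)
PROTON_TO_ELECTRON = 1836.152673    # m_p / m_e
DEUTERON_TO_ELECTRON = 3670.482968  # m_d / m_e


def level_energy(n: int, z: int = 1, nucleus_to_electron: Optional[float] = None) -> float:
    """Energy of level n in eV for a one-electron atom with nuclear charge z.

    nucleus_to_electron=None gives the infinite-nuclear-mass limit; otherwise the
    electron mass is replaced by the reduced mass mu = m_e * M / (m_e + M).
    """
    if nucleus_to_electron is None:
        mass_factor = 1.0
    else:
        mass_factor = nucleus_to_electron / (1.0 + nucleus_to_electron)  # mu / m_e
    return -RYDBERG_EV * z**2 * mass_factor / n**2


inf = level_energy(1)
h = level_energy(1, nucleus_to_electron=PROTON_TO_ELECTRON)
d = level_energy(1, nucleus_to_electron=DEUTERON_TO_ELECTRON)
print(f"infinite mass: {inf:.6f} eV")
print(f"hydrogen:      {h:.6f} eV  (shift {1000 * (h - inf):.3f} meV)")
print(f"deuterium:     {d:.6f} eV  (H-D isotope shift {1000 * (d - h):.3f} meV)")
```

The few-meV shifts are small but well above spectroscopic resolution, which is why treating nuclear mass exactly broadens the range of states a method can describe accurately.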

Quantum data center

Patent | WO2023069181A2

Innovation

- A quantum data center (QDC) architecture that combines quantum random access memory (QRAM) and quantum networks, enabling efficient storage, processing, and transmission of quantum data, with applications in quantum computation, communication, and sensing, including multi-party private quantum communication and distributed sensing through data compression.

Quantum-Classical Integration Strategies

The integration of quantum and classical computing systems represents a critical frontier in advancing quantum technologies for scientific exploration. Current quantum systems, while powerful for specific computational tasks, face significant limitations in terms of qubit coherence time, error rates, and scalability. Quantum-classical integration strategies address these challenges by leveraging the complementary strengths of both paradigms, creating hybrid systems that maximize computational efficiency and practical applicability.

One prominent approach involves the development of quantum co-processors that work alongside classical systems. This architecture allows quantum processors to handle specialized computational tasks where they demonstrate advantage, such as simulating quantum systems or solving optimization problems, while classical computers manage overall workflow control, data preparation, and result interpretation. The interface between these systems requires sophisticated translation protocols to ensure seamless data exchange and processing coordination.
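The co-processor division of labor can be sketched as a classical host that chooses circuit parameters, offloads sampling to a quantum device, and post-processes the returned measurement counts. The `QuantumBackend` protocol, `ToySimulator` class, and `host_workflow` function below are hypothetical stand-ins (a tiny NumPy simulator of R_y(θ) followed by a CNOT), not any vendor's API; on real hardware the `run` call would go to a device or cloud service.

```python
from collections import Counter
from typing import Protocol

import numpy as np


class QuantumBackend(Protocol):
    """Hypothetical co-processor interface: execute a parameterized circuit, return counts."""
    def run(self, theta: float, shots: int) -> Counter: ...


class ToySimulator:
    """Stand-in for hardware: simulates Ry(theta) on qubit 0 followed by CNOT(0 -> 1)."""
    def __init__(self, seed: int = 0) -> None:
        self.rng = np.random.default_rng(seed)

    def run(self, theta: float, shots: int) -> Counter:
        # Resulting state: cos(theta/2)|00> + sin(theta/2)|11>
        amplitudes = np.array([np.cos(theta / 2), 0.0, 0.0, np.sin(theta / 2)])
        probs = np.abs(amplitudes) ** 2
        outcomes = self.rng.choice(4, size=shots, p=probs / probs.sum())
        return Counter(format(int(i), "02b") for i in outcomes)


def z0_expectation(counts: Counter) -> float:
    """Classical post-processing: estimate <Z> on the first qubit from raw counts."""
    shots = sum(counts.values())
    return sum((1 if bits[0] == "0" else -1) * c for bits, c in counts.items()) / shots


def host_workflow(backend: QuantumBackend, thetas: list[float], shots: int = 4000) -> list[float]:
    """Classical host: choose parameters, offload sampling, interpret the results."""
    return [z0_expectation(backend.run(theta, shots)) for theta in thetas]


thetas = [0.0, np.pi / 3, np.pi / 2]
for theta, estimate in zip(thetas, host_workflow(ToySimulator(seed=7), thetas)):
    print(f"theta={theta:.3f}  <Z0> estimate={estimate:+.3f}  exact={np.cos(theta):+.3f}")
```

Keeping the backend behind a small interface lets the same host code run against a simulator during development and a real device later, which is the practical appeal of the co-processor pattern.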

Variational quantum algorithms exemplify successful integration strategies, utilizing classical optimization routines to guide quantum circuit parameters. These algorithms, including Variational Quantum Eigensolver (VQE) and Quantum Approximate Optimization Algorithm (QAOA), demonstrate how classical feedback loops can enhance quantum processing capabilities while mitigating the effects of quantum noise and decoherence.
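A minimal sketch of the VQE feedback loop, assuming a toy single-qubit Hamiltonian H = Z + X and the one-parameter ansatz R_y(θ)|0⟩, both chosen purely for illustration. The energy is evaluated by exact state-vector simulation (a device would estimate it from sampled measurements), and the classical optimizer is plain gradient descent driven by the parameter-shift rule, which obtains exact gradients for Pauli-generated rotations from two extra circuit evaluations. The exact ground-state energy of this H is -√2 ≈ -1.414, so convergence is easy to check.

```python
import numpy as np

X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])
H = Z + X  # toy Hamiltonian; exact ground-state energy is -sqrt(2)


def ansatz(theta: float) -> np.ndarray:
    """R_y(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>."""
    return np.array([np.cos(theta / 2), np.sin(theta / 2)])


def energy(theta: float) -> float:
    """'Quantum' step: expectation value <psi(theta)|H|psi(theta)>."""
    psi = ansatz(theta)
    return float(psi @ H @ psi)


def parameter_shift_grad(theta: float) -> float:
    """Exact gradient from two shifted evaluations (valid for Pauli-generated rotations)."""
    return 0.5 * (energy(theta + np.pi / 2) - energy(theta - np.pi / 2))


def vqe(theta0: float = 0.1, lr: float = 0.2, steps: int = 200) -> tuple[float, float]:
    """Classical outer loop: gradient descent on the circuit parameter."""
    theta = theta0
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(theta)
    return theta, energy(theta)


theta_opt, e_opt = vqe()
print(f"theta={theta_opt:.4f}, E={e_opt:.6f}, exact={-np.sqrt(2):.6f}")
```

Real VQE runs use multi-qubit ansätze, shot-noise-aware optimizers, and many more parameters, but the structure is the same: a quantum expectation value inside a classical optimization loop.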

Cloud-based quantum computing platforms have emerged as practical implementation channels for quantum-classical integration. Services provided by IBM, Google, and Amazon allow researchers to execute quantum algorithms remotely while handling pre-processing and post-processing tasks on classical infrastructure. This approach democratizes access to quantum resources while maintaining the benefits of classical computing's maturity.

Hardware-level integration presents another frontier, with efforts focused on developing unified architectures that facilitate direct communication between quantum and classical components. Innovations in cryogenic control electronics and quantum-classical interfaces aim to reduce latency in hybrid operations and minimize signal degradation during translation processes.

The development of specialized programming frameworks and software stacks represents a crucial element in quantum-classical integration. Tools like Qiskit, Cirq, and PennyLane provide abstraction layers that enable developers to design hybrid algorithms without requiring deep expertise in both quantum and classical domains, accelerating adoption and innovation cycles.

Looking forward, the evolution of quantum-classical integration will likely follow a trajectory of increasing seamlessness and efficiency, potentially leading to fully integrated systems where the boundaries between quantum and classical processing become transparent to end users, enabling truly transformative scientific applications.

One prominent approach involves the development of quantum co-processors that work alongside classical systems. This architecture allows quantum processors to handle specialized computational tasks where they demonstrate advantage, such as simulating quantum systems or solving optimization problems, while classical computers manage overall workflow control, data preparation, and result interpretation. The interface between these systems requires sophisticated translation protocols to ensure seamless data exchange and processing coordination.

Variational quantum algorithms exemplify successful integration strategies, utilizing classical optimization routines to guide quantum circuit parameters. These algorithms, including Variational Quantum Eigensolver (VQE) and Quantum Approximate Optimization Algorithm (QAOA), demonstrate how classical feedback loops can enhance quantum processing capabilities while mitigating the effects of quantum noise and decoherence.

Cloud-based quantum computing platforms have emerged as practical implementation channels for quantum-classical integration. Services provided by IBM, Google, and Amazon allow researchers to execute quantum algorithms remotely while handling pre-processing and post-processing tasks on classical infrastructure. This approach democratizes access to quantum resources while maintaining the benefits of classical computing's maturity.

Hardware-level integration presents another frontier, with efforts focused on developing unified architectures that facilitate direct communication between quantum and classical components. Innovations in cryogenic control electronics and quantum-classical interfaces aim to reduce latency in hybrid operations and minimize signal degradation during translation processes.

The development of specialized programming frameworks and software stacks represents a crucial element in quantum-classical integration. Tools like Qiskit, Cirq, and PennyLane provide abstraction layers that enable developers to design hybrid algorithms without requiring deep expertise in both quantum and classical domains, accelerating adoption and innovation cycles.
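The value of such an abstraction layer is that an algorithm is written once as a circuit description and handed to whichever backend executes it. The sketch below illustrates that separation in plain Python; it does not reproduce the API of Qiskit, Cirq, or PennyLane, and the names `Circuit`, `Backend`, `StatevectorBackend`, and `execute` are hypothetical. A small two-qubit statevector simulator stands in for a hardware backend.

```python
import math
from typing import Protocol


class Circuit:
    """Backend-agnostic description of a circuit as a list of gate instructions."""

    def __init__(self, num_qubits: int) -> None:
        self.num_qubits = num_qubits
        self.ops: list[tuple[str, tuple[int, ...]]] = []

    def h(self, q: int) -> "Circuit":
        self.ops.append(("h", (q,)))
        return self

    def cx(self, control: int, target: int) -> "Circuit":
        self.ops.append(("cx", (control, target)))
        return self


class Backend(Protocol):
    """Anything that can turn a Circuit into outcome probabilities."""

    def run(self, circuit: Circuit) -> dict[str, float]: ...


class StatevectorBackend:
    """Tiny exact simulator; qubit 0 is the leftmost bit of each basis label."""

    def run(self, circuit: Circuit) -> dict[str, float]:
        n = circuit.num_qubits
        state = [0j] * (2 ** n)
        state[0] = 1.0 + 0j
        for name, qubits in circuit.ops:
            if name == "h":
                state = self._apply_h(state, n, qubits[0])
            elif name == "cx":
                state = self._apply_cx(state, n, *qubits)
            else:
                raise ValueError(f"unsupported gate: {name}")
        return {format(i, f"0{n}b"): abs(a) ** 2
                for i, a in enumerate(state) if abs(a) ** 2 > 1e-12}

    @staticmethod
    def _mask(n: int, q: int) -> int:
        return 1 << (n - 1 - q)  # bit position of qubit q in the basis index

    def _apply_h(self, state, n, q):
        m, s = self._mask(n, q), 1 / math.sqrt(2)
        new = state[:]
        for i in range(len(state)):
            if not i & m:  # visit each (|..0..>, |..1..>) amplitude pair once
                a0, a1 = state[i], state[i | m]
                new[i], new[i | m] = s * (a0 + a1), s * (a0 - a1)
        return new

    def _apply_cx(self, state, n, control, target):
        mc, mt = self._mask(n, control), self._mask(n, target)
        new = state[:]
        for i in range(len(state)):
            if i & mc:  # flip the target bit only where the control bit is 1
                new[i ^ mt] = state[i]
        return new


def execute(circuit: Circuit, backend: Backend) -> dict[str, float]:
    """Algorithm code depends only on the Backend interface, not on the simulator."""
    return backend.run(circuit)


if __name__ == "__main__":
    bell = Circuit(2).h(0).cx(0, 1)
    print(execute(bell, StatevectorBackend()))
```

Swapping `StatevectorBackend` for another class with a compatible `run` method would leave the circuit-building code unchanged, which is the property real frameworks provide at much larger scale.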

International Quantum Research Collaboration Networks

The quantum research landscape has evolved into a complex global network of collaborations, with international partnerships becoming increasingly vital for advancing quantum technologies. Major quantum research hubs have emerged across North America, Europe, and Asia, creating a distributed ecosystem of expertise. The United States maintains strong collaborative networks through initiatives like the National Quantum Initiative, connecting academic institutions with national laboratories and industry partners. The European Quantum Flagship program has established robust cross-border research frameworks, enabling seamless collaboration between member states.

China has developed significant quantum research capabilities, particularly in quantum communications, while forming strategic partnerships with research institutions in Europe and elsewhere. Notable trilateral collaborations between North American, European, and Asian research centers have accelerated breakthroughs in quantum computing architectures and quantum simulation models.

International quantum research networks typically operate through multi-institutional grant programs, joint laboratory arrangements, and researcher exchange programs. The Quantum Technology International Research Consortium represents one of the largest collaborative frameworks, connecting over 200 research institutions across 35 countries. These networks have proven particularly effective in addressing the multidisciplinary challenges inherent in quantum model development.

Cloud-based quantum computing platforms have democratized access to quantum resources, enabling researchers from developing nations to participate in cutting-edge quantum exploration. This has significantly expanded the diversity of approaches to quantum modeling challenges. Virtual collaboration tools specifically designed for quantum research have emerged, facilitating real-time collaboration on complex quantum algorithms and simulations across geographical boundaries.

Funding mechanisms have evolved to support these international networks, with multinational grant programs specifically targeting cross-border quantum research initiatives. The International Quantum Research Fund, established in 2021, provides dedicated support for collaborative projects involving at least three countries, with particular emphasis on quantum models for scientific applications.

Challenges remain in harmonizing intellectual property frameworks across different jurisdictions, though progress has been made through specialized quantum technology transfer agreements. Data sharing protocols have been standardized through the Quantum Research Data Exchange Framework, enabling secure sharing of experimental results and quantum model parameters while protecting sensitive information.