How to Compare Quantum Algorithms for Performance in Real-Time

SEP 4, 2025 | 9 MIN READ

Quantum Algorithm Performance Benchmarking Background

Quantum algorithm performance benchmarking has evolved significantly since the early days of quantum computing. Initially, theoretical comparisons dominated the field, focusing on asymptotic complexity measures like Big O notation to demonstrate quantum advantage. The landmark example remains Shor's algorithm (1994), which theoretically offers exponential speedup for integer factorization compared to classical algorithms, fundamentally changing our understanding of quantum computational potential.

As quantum hardware advanced from the early 2000s, benchmarking approaches shifted toward more practical considerations. The emergence of Noisy Intermediate-Scale Quantum (NISQ) devices around 2018 created an urgent need for realistic performance metrics that account for hardware limitations, error rates, and decoherence effects. This transition marked a pivotal moment when theoretical advantages needed validation through implementable benchmarks.

The quantum volume metric, introduced by IBM in 2019, represented a significant advancement by quantifying the maximum size of square quantum circuits that could be successfully implemented on a given quantum processor. This was followed by other hardware-aware metrics like circuit layer operations per second (CLOPS) and quantum left-behind (QLB), each addressing different aspects of quantum system performance.
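As a rough illustration of how a quantum volume figure is derived, the sketch below takes measured heavy-output probabilities for square circuits of increasing width and reports 2^n for the largest passing width n. The 2/3 pass threshold comes from the quantum volume protocol; the function name, the input data, and the simplification of stopping at the first failing width are assumptions made for this example, and the statistical confidence test that the full protocol requires is omitted.

```python
HEAVY_OUTPUT_THRESHOLD = 2 / 3  # pass threshold used by the quantum volume protocol


def quantum_volume(heavy_output_probs: dict[int, float]) -> int:
    """Return 2**n for the largest width n that passes, scanning widths upward.

    heavy_output_probs maps a circuit width n (with depth n) to the measured
    heavy-output probability. Confidence-interval checks are left out.
    """
    largest_passing = 0
    for width in sorted(heavy_output_probs):
        if heavy_output_probs[width] > HEAVY_OUTPUT_THRESHOLD:
            largest_passing = width
        else:
            break  # assumption: stop at the first width that fails
    return 2 ** largest_passing


# Hypothetical measurements, for illustration only.
measured = {2: 0.78, 3: 0.74, 4: 0.70, 5: 0.64}
print(quantum_volume(measured))
```

With these made-up numbers, widths 2 through 4 pass and width 5 fails, so the reported quantum volume is 16.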

Recent developments have focused on application-specific benchmarks that evaluate quantum algorithms in the context of particular problem domains. Examples include the Quantum Approximate Optimization Algorithm (QAOA) for combinatorial optimization problems and Variational Quantum Eigensolver (VQE) for quantum chemistry applications, where performance is measured against the best classical alternatives for specific problem instances.
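To make the idea of measuring a variational algorithm against a classical reference concrete, here is a toy VQE-style loop. It is a sketch under strong assumptions: the Hamiltonian is a single Pauli Z, the ansatz is RY(theta) applied to |0>, and the energy is computed analytically as cos(theta) instead of being estimated from shots on hardware. All function names are hypothetical.

```python
import math


def energy(theta: float) -> float:
    """Expectation value of Z for the state RY(theta)|0>, which equals cos(theta)."""
    return math.cos(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient obtained from two energy evaluations shifted by +/- pi/2."""
    return 0.5 * (energy(theta + math.pi / 2) - energy(theta - math.pi / 2))


def run_vqe(theta: float = 0.5, learning_rate: float = 0.4, steps: int = 100) -> float:
    """Classical gradient descent over the circuit parameter; returns the final energy."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return energy(theta)


EXACT_GROUND_ENERGY = -1.0  # smallest eigenvalue of Z, known classically
vqe_energy = run_vqe()
absolute_error = abs(vqe_energy - EXACT_GROUND_ENERGY)
print(f"VQE energy {vqe_energy:.6f}, error vs exact {absolute_error:.2e}")
```

On real hardware the `energy` call would be replaced by a noisy, shot-based estimate, and the error against the classical reference becomes the benchmark figure of interest.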

The current challenge in quantum algorithm benchmarking lies in developing real-time comparative frameworks that can dynamically assess performance across different quantum hardware architectures, compiler optimizations, and error mitigation techniques. Traditional benchmarking approaches often require extensive post-processing and analysis, making real-time comparisons difficult.

International standardization efforts have begun to emerge, with organizations like IEEE and ISO working to establish common benchmarking protocols. The Quantum Economic Development Consortium (QED-C) has also proposed standardized test suites for evaluating quantum computational capabilities across different platforms.

As quantum hardware continues to scale and error rates improve, benchmarking methodologies must evolve to provide meaningful comparisons that account for both the theoretical promise and practical limitations of quantum algorithms. The field is now moving toward holistic benchmarking frameworks that consider execution time, resource requirements, solution quality, and scalability simultaneously.
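One way to consider several metrics at once without collapsing them into a single score is a Pareto comparison. The sketch below is an assumption about how such a framework might be organized, not a description of any existing tool; the record fields and the sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkResult:
    name: str
    execution_time_s: float  # lower is better
    qubits: int              # lower is better
    circuit_depth: int       # lower is better
    solution_quality: float  # higher is better, e.g. an approximation ratio

    def costs(self) -> tuple[float, ...]:
        # Negate quality so that every entry reads as "lower is better".
        return (self.execution_time_s, self.qubits, self.circuit_depth,
                -self.solution_quality)


def dominates(a: BenchmarkResult, b: BenchmarkResult) -> bool:
    """True if a is no worse than b on every metric and strictly better on one."""
    pairs = list(zip(a.costs(), b.costs()))
    return all(x <= y for x, y in pairs) and any(x < y for x, y in pairs)


def pareto_front(results: list[BenchmarkResult]) -> list[BenchmarkResult]:
    """Keep only the results that no other result dominates."""
    return [r for r in results if not any(dominates(o, r) for o in results)]


# Hypothetical measurements, for illustration only.
results = [
    BenchmarkResult("qaoa_p1", 1.2, 8, 40, 0.71),
    BenchmarkResult("qaoa_p3", 3.9, 8, 120, 0.88),
    BenchmarkResult("vqe_deep", 4.5, 8, 150, 0.85),
]
front_names = [r.name for r in pareto_front(results)]
print(front_names)
```

Here `vqe_deep` is dropped because `qaoa_p3` is at least as good on every metric, while the two QAOA variants both survive because they trade execution time against solution quality.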

Market Demand for Quantum Algorithm Comparison Tools

The global quantum computing market is projected to reach $1.7 billion by 2026, growing at a CAGR of 30.2% from 2021. Within this expanding ecosystem, there is growing demand for specialized tools that can compare and evaluate quantum algorithms under real-time performance conditions.

Organizations investing in quantum computing capabilities are increasingly seeking robust benchmarking tools to optimize their quantum algorithm implementations. This demand is particularly acute among research institutions, financial services companies, pharmaceutical corporations, and technology giants that are early adopters of quantum computing technologies. These entities require sophisticated comparison tools to make informed decisions about which quantum algorithms best suit their specific computational challenges.

The market demand is driven by several key factors. First, as quantum hardware continues to evolve rapidly, organizations need to continuously reassess algorithm performance across different quantum processing units (QPUs). Real-time comparison tools enable them to identify the most efficient algorithms for their specific hardware configurations, maximizing return on substantial quantum computing investments.

Second, the quantum talent shortage has created demand for automated tools that can reduce the expertise barrier. Current estimates suggest there are fewer than 10,000 individuals worldwide with deep quantum algorithm expertise. Comparison tools that provide intuitive performance metrics allow organizations with limited quantum expertise to make informed algorithm selection decisions.

Third, the competitive quantum advantage race is accelerating demand for performance optimization tools. Organizations are seeking even marginal improvements in algorithm efficiency that could translate to significant competitive advantages in areas like cryptography, materials science, and financial modeling.

Market research indicates that 78% of organizations currently engaged in quantum computing initiatives report difficulty in objectively comparing algorithm performance across different implementations. This challenge is particularly pronounced when attempting to evaluate performance in real-time scenarios that reflect actual production environments rather than idealized laboratory conditions.

The financial services sector represents the largest vertical market for quantum algorithm comparison tools, accounting for approximately 32% of current demand. This is followed by pharmaceutical research (24%), materials science (18%), and logistics optimization (14%), with various other sectors comprising the remaining market share.

Geographically, North America leads demand with 45% of the global market, followed by Europe (30%), Asia-Pacific (20%), and rest of world (5%). However, the Asia-Pacific region is expected to show the highest growth rate over the next five years as quantum computing adoption accelerates in countries like China, Japan, and Singapore.

Current Challenges in Real-Time Quantum Performance Analysis

Real-time performance analysis of quantum algorithms faces significant challenges that impede effective comparison and evaluation. The current quantum computing landscape is characterized by hardware limitations, with quantum processors still in early development stages. These systems suffer from high error rates, limited qubit coherence times, and connectivity constraints that make direct performance comparisons difficult. The absence of standardized benchmarking frameworks further complicates matters, as different quantum platforms use varying metrics and methodologies to report performance results.

Quantum noise and decoherence present particularly formidable obstacles for real-time analysis. Unlike classical systems, quantum states are extremely fragile and susceptible to environmental interference. This inherent sensitivity means that performance characteristics can vary significantly between runs, making consistent measurement and comparison challenging. The quantum noise profiles differ across hardware implementations, creating additional complexity when attempting to establish fair comparison baselines.
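Because results vary from run to run, a comparison is only meaningful when the difference between two algorithms exceeds the measurement scatter. The following sketch uses a simple standard-error rule to express that idea. The two-standard-error cutoff and the sample success probabilities are assumptions chosen for illustration, and a production framework would likely use a proper statistical test.

```python
import statistics


def summarize(samples: list[float]) -> tuple[float, float]:
    """Return (mean, standard error of the mean) over repeated runs."""
    mean = statistics.fmean(samples)
    sem = statistics.stdev(samples) / len(samples) ** 0.5
    return mean, sem


def clearly_better(a: list[float], b: list[float], z: float = 2.0) -> bool:
    """True if a's mean exceeds b's by more than z combined standard errors."""
    mean_a, sem_a = summarize(a)
    mean_b, sem_b = summarize(b)
    return mean_a - mean_b > z * (sem_a ** 2 + sem_b ** 2) ** 0.5


# Hypothetical success probabilities from five repeated runs each.
runs_a = [0.81, 0.79, 0.83, 0.80, 0.82]
runs_b = [0.70, 0.76, 0.66, 0.74, 0.69]
runs_c = [0.80, 0.74, 0.86, 0.78, 0.83]

print(clearly_better(runs_a, runs_b))  # large gap relative to the scatter
print(clearly_better(runs_a, runs_c))  # gap is within the noise
```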

The computational overhead of simulation poses another critical challenge. Classical simulation of quantum algorithms becomes exponentially more resource-intensive as the number of qubits increases. This limitation restricts our ability to predict and validate quantum algorithm performance before actual hardware execution, especially for algorithms requiring more than 50-60 qubits. Real-time analysis therefore often relies on partial simulations or approximations that may not accurately reflect true performance.
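The exponential cost is easy to see for full state-vector simulation, where an n-qubit state needs 2^n complex amplitudes. The short calculation below assumes 16 bytes per amplitude (double-precision complex); other simulation methods, such as tensor networks, can do better on some circuits, so this should be read as the cost of one common approach and not a universal bound.

```python
BYTES_PER_AMPLITUDE = 16  # one double-precision complex number (two 64-bit floats)


def statevector_bytes(num_qubits: int) -> int:
    """Memory needed to hold all 2**n amplitudes of an n-qubit state vector."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(num_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024
    return f"{value:.0f} {units[-1]}"  # not reached; keeps the return type explicit


for n in (30, 40, 50, 60):
    print(n, human_readable(statevector_bytes(n)))
```

Each additional qubit doubles the requirement: 30 qubits need 16 GiB, while 50 qubits need 16 PiB, which is why the 50-60 qubit range is where full simulation stops being practical.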

Measurement and observability issues further complicate performance analysis. The quantum measurement process is destructive, meaning that obtaining information about a quantum state fundamentally alters it. This characteristic makes it difficult to monitor algorithm execution without interfering with the computation itself. Additionally, quantum algorithms often leverage quantum superposition and entanglement, properties that have no classical analogues and therefore require specialized measurement techniques.

The rapidly evolving nature of quantum hardware and software stacks introduces additional complexity. Performance characteristics can change dramatically with hardware upgrades or compiler optimizations, making longitudinal comparisons difficult. Different quantum computing paradigms (gate-based, annealing, etc.) also employ fundamentally different execution models, further complicating cross-platform performance analysis.

Lastly, the lack of established performance prediction models hinders real-time analysis. While classical computing benefits from decades of performance modeling research, quantum computing lacks mature theoretical frameworks that can accurately predict how algorithm performance will scale with problem size or hardware improvements. This gap makes it difficult to extrapolate performance trends or make informed decisions about algorithm selection for specific problem instances.

Existing Real-Time Quantum Performance Comparison Solutions

01 Quantum algorithm optimization techniques

Various techniques are employed to optimize quantum algorithms for better performance. These include circuit optimization, error mitigation strategies, and algorithmic improvements that reduce quantum resource requirements. Optimization approaches focus on minimizing gate count, circuit depth, and qubit requirements while maintaining computational accuracy. These techniques are essential for achieving practical quantum advantage on near-term quantum hardware with limited coherence times.

- Quantum algorithm optimization techniques: Various techniques are employed to optimize quantum algorithms for better performance, including circuit depth reduction, gate optimization, and error mitigation strategies. These optimizations help to maximize algorithm efficiency while working within the constraints of current quantum hardware. By carefully designing quantum circuits and implementing specialized optimization methods, researchers can achieve significant performance improvements for quantum computational tasks.

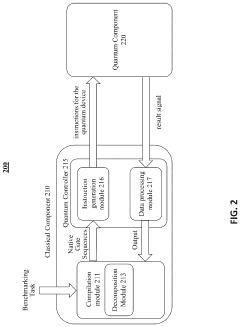

- Quantum-classical hybrid computing approaches: Hybrid quantum-classical computing approaches combine the strengths of quantum processors with classical computing resources to enhance overall algorithm performance. These methods distribute computational tasks between quantum and classical systems based on their respective advantages, allowing for more efficient execution of complex algorithms. Hybrid approaches are particularly valuable during the NISQ (Noisy Intermediate-Scale Quantum) era, where quantum resources are limited and error-prone.

- Quantum error correction and fault tolerance: Quantum error correction and fault tolerance techniques are essential for improving quantum algorithm performance by mitigating the effects of noise and decoherence. These methods involve encoding quantum information redundantly and implementing error detection and correction protocols to preserve computational integrity. Advanced error correction codes and fault-tolerant gate implementations enable quantum algorithms to maintain high performance even in the presence of hardware imperfections.

- Specialized quantum algorithms for specific applications: Specialized quantum algorithms are designed to address specific computational problems with enhanced performance compared to classical approaches. These include algorithms for optimization, machine learning, cryptography, and simulation of quantum systems. By tailoring quantum computational approaches to particular application domains, researchers can achieve significant speedups and performance advantages over classical methods for certain classes of problems.

- Quantum hardware-algorithm co-design: Quantum hardware-algorithm co-design involves developing quantum algorithms that are specifically tailored to the capabilities and limitations of available quantum hardware. This approach considers the physical implementation constraints during algorithm design, resulting in better overall performance. By optimizing algorithms for specific quantum architectures and accounting for hardware-specific noise characteristics, researchers can achieve more reliable and efficient quantum computation on near-term devices.

02 Quantum-classical hybrid computing approaches

Hybrid quantum-classical computing architectures combine the strengths of both paradigms to enhance algorithm performance. These approaches delegate specific computational tasks to either quantum or classical processors based on their respective advantages. Variational quantum algorithms exemplify this hybrid approach, where parameter optimization occurs classically while quantum circuits handle the core computation. This methodology helps overcome current quantum hardware limitations while still leveraging quantum computational advantages.

03 Quantum algorithm benchmarking and performance metrics

Standardized methods for evaluating quantum algorithm performance are crucial for comparing different implementations. These benchmarking approaches measure execution time, resource utilization, solution quality, and scalability across various problem sizes. Performance metrics include circuit depth, gate count, qubit requirements, and solution fidelity. Such benchmarking frameworks enable objective assessment of quantum algorithms and guide further optimization efforts.

04 Error correction and mitigation for quantum algorithms

Error correction and mitigation techniques are essential for improving quantum algorithm performance on noisy hardware. These approaches include quantum error correction codes, error suppression methods, and noise-aware circuit compilation. By addressing hardware noise and decoherence effects, these techniques enhance algorithm reliability and accuracy. Advanced error mitigation strategies can significantly extend the computational capabilities of current quantum processors.

05 Application-specific quantum algorithm enhancements

Specialized quantum algorithms are developed for specific application domains to maximize performance advantages. These domain-specific optimizations target areas such as cryptography, machine learning, simulation, and optimization problems. By tailoring quantum approaches to particular problem structures, significant performance improvements can be achieved. These specialized algorithms often exploit unique quantum properties like superposition and entanglement in ways specifically beneficial to the target application.

Leading Organizations in Quantum Computing Benchmarking

The quantum algorithm performance comparison landscape is in an early growth phase and evolving rapidly, with the market expected to expand significantly as quantum computing transitions from research to practical applications. The competitive field features established technology giants like IBM, Google, and Microsoft developing comprehensive quantum stacks alongside specialized quantum-native companies such as Rigetti, Zapata Computing, and Origin Quantum. Technical maturity varies considerably across platforms, with IBM leading in quantum volume metrics and accessible cloud services, while Google has demonstrated quantum supremacy capabilities. Chinese companies including Baidu, Alibaba, and Huawei are making significant investments to close the gap with Western counterparts. The industry is moving toward standardized benchmarking frameworks for real-time algorithm comparison, though universal performance metrics remain hard to define because of hardware diversity.

Google LLC

Technical Solution: Google's approach to real-time quantum algorithm performance comparison is built around their Quantum AI platform and Cirq framework. They pioneered the Quantum Supremacy experiment demonstrating computational advantage over classical systems[1]. For performance benchmarking, Google employs cross-entropy benchmarking (XEB) to measure the fidelity of quantum operations and quantify how well a quantum processor implements a specific circuit. Their methodology includes cycle time analysis that measures the time required to execute quantum gates and readout operations, crucial for real-time performance evaluation. Google has developed quantum circuit simulators that can predict the behavior of small to medium-sized quantum circuits, allowing for performance comparison before hardware execution. Their Quantum Virtual Machine (QVM) enables developers to simulate quantum algorithm performance with customizable noise models that mimic real hardware characteristics[2]. Additionally, Google implements parallel XEB techniques to characterize multiple qubits simultaneously, reducing the time required for performance assessment of complex algorithms.

Strengths: Google's quantum hardware demonstrates high clock speeds and gate fidelities, allowing for more accurate real-time performance measurements. Their focus on quantum supremacy has driven development of sophisticated benchmarking tools. Weaknesses: Their proprietary approach to quantum computing may limit accessibility for external researchers wanting to conduct independent performance comparisons across multiple platforms.
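The cross-entropy benchmarking mentioned above is commonly summarized by the linear XEB estimator, F = 2^n * mean(P_ideal(x_i)) - 1, where the x_i are measured bitstrings and P_ideal is the simulated ideal output distribution. The sketch below is a minimal version of that estimator; the function name and the tiny two-qubit distribution are hypothetical, and this is not taken from Google's tooling.

```python
def linear_xeb_fidelity(ideal_probs: dict[str, float], samples: list[str]) -> float:
    """Estimate F = 2**n * mean(P_ideal(x_i)) - 1 over measured bitstrings x_i."""
    num_qubits = len(samples[0])
    mean_prob = sum(ideal_probs[s] for s in samples) / len(samples)
    return (2 ** num_qubits) * mean_prob - 1


# Hypothetical ideal output distribution of a two-qubit circuit.
ideal = {"00": 0.50, "01": 0.30, "10": 0.15, "11": 0.05}

# A fully depolarized device returns every bitstring equally often,
# so the estimator comes out at (approximately) zero.
uniform_samples = ["00", "01", "10", "11"]
print(linear_xeb_fidelity(ideal, uniform_samples))
```

The estimator approaches 1 for a noiseless device only when the ideal distribution has the Porter-Thomas shape typical of deep random circuits, which is why XEB is run on random circuits and not on arbitrary algorithms.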

International Business Machines Corp.

Technical Solution: IBM's approach to comparing quantum algorithms for real-time performance centers around their Qiskit framework, which provides comprehensive benchmarking tools. Their methodology includes circuit layer operations per second (CLOPS) as a hardware-agnostic metric that measures how many quantum circuit layers a quantum processor can execute per unit time[1]. IBM has developed the Quantum Volume benchmark that evaluates both the size and quality of a quantum computer by determining the largest random circuit it can successfully implement. For real-time performance comparison, IBM utilizes their Quantum Circuit Transpiler that optimizes circuits before execution, reducing gate count and execution time[2]. Their Performance Emulator allows developers to simulate expected runtime behavior on different quantum hardware configurations without actual quantum resources. IBM also employs error mitigation techniques like Zero Noise Extrapolation and Probabilistic Error Cancellation to improve algorithm reliability in noisy environments, enabling more accurate performance comparisons[3].

Strengths: IBM offers comprehensive end-to-end quantum computing infrastructure with well-documented benchmarking tools and metrics. Their cloud access model allows researchers to test algorithms across multiple quantum processors with different architectures. Weaknesses: Their hardware-specific optimizations may not translate well across different quantum computing platforms, potentially limiting the generalizability of performance comparisons.
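Zero Noise Extrapolation, mentioned above, runs the same circuit at deliberately amplified noise levels and extrapolates the measured expectation value back to the zero-noise limit. The sketch below uses a plain least-squares line, which is only one of several possible extrapolation models; the function name and the sample data are assumptions for illustration and do not reflect IBM's implementation.

```python
def zero_noise_extrapolate(scale_factors: list[float], expectations: list[float]) -> float:
    """Fit a least-squares line through (scale, expectation) and evaluate it at scale 0."""
    n = len(scale_factors)
    mean_x = sum(scale_factors) / n
    mean_y = sum(expectations) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(scale_factors, expectations))
    sxx = sum((x - mean_x) ** 2 for x in scale_factors)
    slope = sxy / sxx
    return mean_y - slope * mean_x  # the intercept is the zero-noise estimate


# Hypothetical expectation values measured at 1x, 2x, and 3x noise.
scales = [1.0, 2.0, 3.0]
measured = [0.80, 0.62, 0.44]
mitigated = zero_noise_extrapolate(scales, measured)
print(f"mitigated estimate: {mitigated:.2f}")
```

When comparing algorithms, it matters whether reported results are raw or mitigated, since the extra circuit executions needed for mitigation also add to the total runtime.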

Key Technical Innovations in Quantum Metrics and Measurements

Universal randomized benchmarking

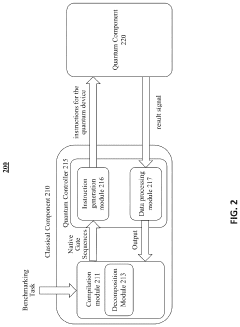

Patent pending: US20240169233A1

Innovation

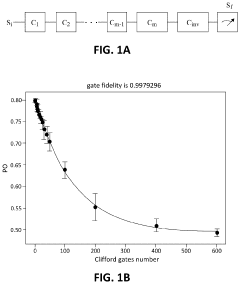

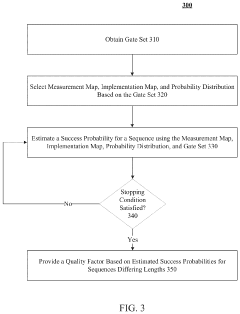

- The proposed universal randomized benchmarking (URB) framework allows benchmarking of quantum gates without the need for a group structure by using a measurement map, implementation map, and probability distribution that form an (epsilon, delta, gamma)-good URB scheme, enabling the estimation of gate fidelity through exponential decay curves without assuming any underlying group structure.
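For context, the exponential decay curve referred to here has the same shape as in conventional randomized benchmarking, where the survival probability after m gates follows A * p^m + B. The sketch below fits that conventional model, not the patent's URB scheme, and assumes a known asymptote B = 1/2 for a single qubit. The data are synthetic, and the function names are hypothetical.

```python
import math


def fit_rb_decay(lengths: list[int], survival: list[float],
                 asymptote: float = 0.5) -> tuple[float, float]:
    """Fit survival = A * p**m + asymptote via linear regression on log(survival - asymptote).

    Every survival value must be strictly greater than the asymptote.
    """
    ys = [math.log(s - asymptote) for s in survival]
    n = len(lengths)
    mean_x = sum(lengths) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(lengths, ys))
    sxx = sum((x - mean_x) ** 2 for x in lengths)
    slope = sxy / sxx
    amplitude = math.exp(mean_y - slope * mean_x)
    decay = math.exp(slope)
    return amplitude, decay


def error_per_gate(decay: float, dim: int = 2) -> float:
    """Average error rate r = (1 - p) * (d - 1) / d for Hilbert-space dimension d."""
    return (1 - decay) * (dim - 1) / dim


# Synthetic, noise-free data generated with A = 0.5 and p = 0.99.
lengths = [1, 10, 50, 100, 200]
survival = [0.5 * 0.99 ** m + 0.5 for m in lengths]
amplitude, decay = fit_rb_decay(lengths, survival)
print(f"p = {decay:.4f}, error per gate = {error_per_gate(decay):.4f}")
```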

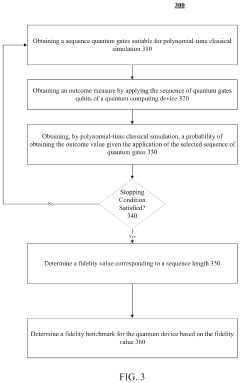

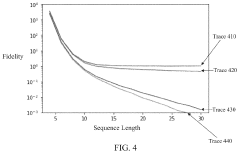

Polynomial-time linear cross-entropy benchmarking

Patent pending: US20230385679A1

Innovation

- Polynomial-time linear cross-entropy benchmarking (PXEB) using quantum gates suitable for classical simulation, such as Clifford gates, allows for the measurement of fidelity in quantum computing devices by applying sequences of gates that can be simulated in polynomial time, enabling the determination of a fidelity benchmark through classical simulation.

Quantum Hardware-Software Co-optimization Strategies

Quantum Hardware-Software Co-optimization Strategies represent a critical frontier in maximizing quantum algorithm performance in real-time environments. These strategies focus on the simultaneous optimization of both hardware architectures and software implementations to achieve superior computational outcomes. The fundamental challenge lies in bridging the gap between theoretical algorithm design and practical implementation constraints imposed by current quantum hardware limitations.

Co-optimization begins with hardware-aware algorithm design, where quantum circuits are specifically tailored to the underlying quantum processor's topology, gate set, and error characteristics. This approach requires detailed knowledge of the target quantum processing unit's (QPU) native operations and connectivity patterns to minimize resource requirements and error accumulation during execution.

Noise-adaptive compilation techniques form another essential component of co-optimization strategies. These techniques analyze the error profiles of specific quantum hardware and dynamically adjust compilation decisions to route quantum operations through the most reliable pathways. Advanced compilers can perform error-aware scheduling, gate fusion, and qubit mapping to maximize fidelity while maintaining algorithmic integrity.
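Routing through "the most reliable pathways" can be framed as a shortest-path problem: taking the negative logarithm of each two-qubit gate fidelity turns a product of fidelities into a sum of costs, so Dijkstra's algorithm finds the path with the highest overall fidelity. The sketch below illustrates that idea only. The coupling map, the fidelity values, and the function name are hypothetical, and real compilers weigh many more factors.

```python
import heapq
import math


def most_reliable_path(edge_fidelity: dict[tuple[str, str], float],
                       source: str, target: str) -> tuple[list[str], float]:
    """Return (path, fidelity) maximizing the product of edge fidelities.

    Assumes an undirected, connected coupling graph with fidelities in (0, 1].
    """
    graph: dict[str, list[tuple[str, float]]] = {}
    for (u, v), fidelity in edge_fidelity.items():
        cost = -math.log(fidelity)  # products of fidelities become sums of costs
        graph.setdefault(u, []).append((v, cost))
        graph.setdefault(v, []).append((u, cost))

    best = {source: 0.0}
    previous: dict[str, str] = {}
    queue = [(0.0, source)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == target:
            break
        if cost > best.get(node, math.inf):
            continue  # stale queue entry
        for neighbor, edge_cost in graph.get(node, []):
            new_cost = cost + edge_cost
            if new_cost < best.get(neighbor, math.inf):
                best[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))

    path = [target]
    while path[-1] != source:
        path.append(previous[path[-1]])
    return path[::-1], math.exp(-best[target])


# Hypothetical calibration data for a four-qubit device.
calibration = {
    ("q0", "q1"): 0.99, ("q1", "q3"): 0.90,
    ("q0", "q2"): 0.97, ("q2", "q3"): 0.98,
}
path, fidelity = most_reliable_path(calibration, "q0", "q3")
print(path, round(fidelity, 4))
```

In this example the route through q2 wins even though its first hop is slightly worse, because the q1 to q3 link is the weakest on the device.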

Pulse-level optimization represents a deeper layer of co-optimization, where quantum operations are fine-tuned at the analog control signal level. By customizing the shape, amplitude, and timing of microwave pulses that drive qubit operations, researchers can achieve significant improvements in gate fidelity and execution speed. This approach requires close collaboration between algorithm designers and hardware engineers to develop optimized control sequences.

Dynamic circuit execution frameworks enable real-time feedback between classical and quantum processors, allowing for mid-circuit measurements and conditional operations based on intermediate results. These frameworks can adaptively respond to detected errors or unexpected measurement outcomes, potentially salvaging computations that would otherwise fail due to decoherence or operational errors.

Benchmarking frameworks specifically designed for hardware-software co-optimization provide standardized metrics for evaluating the effectiveness of different approaches. These frameworks measure not only raw algorithm performance but also resource efficiency, error resilience, and scalability across different hardware platforms, enabling meaningful comparisons between alternative co-optimization strategies.

The future of quantum hardware-software co-optimization lies in automated tools that can perform this complex optimization process with minimal human intervention. Machine learning approaches are increasingly being deployed to discover optimal mappings between algorithms and hardware configurations, potentially uncovering non-intuitive optimization strategies that human designers might overlook.

Standardization Efforts in Quantum Algorithm Benchmarking

The quantum computing industry has recognized the critical need for standardized benchmarking frameworks to effectively compare quantum algorithms across different hardware platforms. Several prominent organizations are leading these standardization efforts, including the Quantum Economic Development Consortium (QED-C), IEEE Quantum Initiative, and the National Institute of Standards and Technology (NIST). These organizations are working to establish common metrics, testing methodologies, and reporting formats that enable objective performance comparisons.

QED-C's Standards and Performance Metrics Technical Advisory Committee has developed a suite of application-oriented performance benchmarks, which aims to support industry-wide standards for evaluating quantum algorithm performance. This work focuses on metrics that account for execution time, resource requirements, error rates, and solution quality across different quantum computing architectures.
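
Solution quality is often summarized by comparing the measured output distribution of a circuit with the ideal one. A minimal sketch of one common choice, the Hellinger fidelity, is given below. The source does not say which fidelity measure any particular standard adopts, so treat the choice of measure and the function name `hellinger_fidelity` as assumptions made for illustration.

```python
import math


def hellinger_fidelity(counts_a, counts_b):
    """Hellinger fidelity between two measurement-count dictionaries.

    Each dictionary maps a bitstring to the number of times it was observed.
    Returns a value from 0.0 (disjoint outcomes) to 1.0 (identical distributions).
    """
    total_a = sum(counts_a.values())
    total_b = sum(counts_b.values())
    if total_a == 0 or total_b == 0:
        raise ValueError("both count dictionaries must contain at least one shot")

    # Sum of sqrt(p_i * q_i) over every outcome seen in either distribution.
    overlap = sum(
        math.sqrt((counts_a.get(k, 0) / total_a) * (counts_b.get(k, 0) / total_b))
        for k in set(counts_a) | set(counts_b)
    )
    return overlap ** 2


if __name__ == "__main__":
    ideal = {"00": 500, "11": 500}                          # ideal Bell-state statistics
    measured = {"00": 460, "11": 470, "01": 40, "10": 30}   # hypothetical noisy run
    print(hellinger_fidelity(ideal, measured))
```

A single number like this is convenient for tracking results across devices, but it should be reported alongside execution time and resource counts rather than in place of them.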

IEEE's P7131 working group is developing a standard for quantum computing performance metrics and performance benchmarking. The framework addresses both hardware-level benchmarks and algorithm-level performance indicators, providing a comprehensive approach to standardized evaluation. The working group includes representatives from academia, industry, and government agencies to ensure broad applicability.

The Quantum Algorithm Zoo, a catalog originally hosted by NIST, complements these efforts by listing quantum algorithms together with their theoretical performance characteristics. Because it records asymptotic results rather than measured performance, it is best paired with practical benchmarking guidelines that consider real-world implementation constraints and hardware limitations.

The Quantum Open Source Foundation (QOSF) has also contributed significantly through their Quantum Benchmarks initiative, which provides open-source benchmarking tools and datasets. These resources enable researchers and developers to conduct standardized performance evaluations using consistent methodologies and reference problems.

International collaboration is evident in the formation of the Quantum Benchmarking Consortium (QBC), which brings together stakeholders from North America, Europe, and Asia. The QBC is working to harmonize regional benchmarking approaches and develop globally accepted standards for real-time quantum algorithm comparison.

These standardization efforts are crucial for the maturation of quantum computing because they provide an objective means to track progress, compare solutions, and guide investment decisions. As the standards evolve, they will enable more meaningful comparisons of quantum algorithms in real-time scenarios and accelerate the development of practical quantum computing applications.
