Quantum Models in Integrated Circuit Design: Performance Metrics

SEP 5, 2025 | 10 MIN READ

Quantum IC Design Evolution and Objectives

The evolution of integrated circuit design has witnessed a remarkable transformation over the past decades, from simple transistor-based circuits to complex systems-on-chip. The emergence of quantum computing represents the next frontier in this evolution, promising unprecedented computational capabilities. Quantum models in IC design have evolved from theoretical concepts to practical implementations, with significant advancements in quantum gate operations, error correction mechanisms, and quantum algorithm optimizations.

The initial phase of quantum IC design focused primarily on proof-of-concept demonstrations, utilizing superconducting circuits and trapped ions to demonstrate quantum behaviors. These early designs were limited by severe coherence time constraints and high error rates, making them impractical for commercial applications. The second phase, which began around 2010, saw improvements in qubit stability and the development of more sophisticated quantum gates, enabling more complex quantum operations.

Current quantum IC design approaches incorporate hybrid classical-quantum architectures, recognizing the complementary strengths of both paradigms. This hybrid approach allows for quantum acceleration of specific computational tasks while leveraging classical systems for control and interface functions. The integration of quantum elements into traditional IC design workflows represents a significant technical challenge that requires novel performance metrics and evaluation methodologies.

The primary objective of quantum models in IC design is to establish reliable performance metrics that accurately capture the unique characteristics of quantum computing systems. These metrics must address quantum-specific phenomena such as coherence time, gate fidelity, and entanglement quality, while also considering traditional IC performance indicators like power consumption, thermal management, and scalability.

Another critical objective is the development of standardized benchmarking methodologies for quantum ICs, enabling meaningful comparisons between different quantum technologies and architectures. This standardization effort aims to provide clear guidance for industry adoption and investment decisions, accelerating the commercialization of quantum computing technologies.

The long-term goal of quantum IC design evolution is to achieve quantum advantage in practical applications, demonstrating computational capabilities that surpass classical systems for specific problem domains. This requires significant improvements in qubit count, coherence times, and error rates, as well as the development of efficient quantum algorithms tailored to the constraints of physical quantum systems.

As quantum IC design continues to mature, the focus is shifting toward scalability and manufacturability, addressing challenges related to cryogenic control electronics, interconnect technologies, and packaging solutions. The integration of quantum components with conventional CMOS technology represents a particularly promising direction, potentially enabling more accessible and practical quantum computing systems.

Market Analysis for Quantum-Enhanced IC Solutions

The quantum computing market is experiencing unprecedented growth, with the quantum-enhanced integrated circuit (IC) segment emerging as a particularly promising area. Current market projections indicate that the global quantum computing market will reach approximately $1.3 billion by 2025, with quantum-enhanced IC solutions potentially capturing 15-20% of this expanding market. This growth is driven primarily by increasing demands for computational power that exceeds classical limitations in industries such as pharmaceuticals, materials science, and financial modeling.

The market for quantum-enhanced IC solutions can be segmented into three primary categories: quantum annealing processors, gate-based quantum processors, and quantum-classical hybrid systems. Among these, quantum-classical hybrid systems currently dominate the market share at roughly 65%, as they offer practical advantages by combining quantum acceleration with classical computing infrastructure, making them more accessible to early enterprise adopters.

Industry analysis reveals significant regional variations in market adoption. North America leads with approximately 45% market share, followed by Europe at 30%, Asia-Pacific at 20%, and other regions comprising the remaining 5%. This distribution largely correlates with regional investments in quantum research and development infrastructure. The United States and China are engaged in particularly aggressive investment strategies, with government funding exceeding $1 billion and $10 billion respectively over the next five years.

Key market drivers include the exponential increase in data processing requirements across industries, growing complexity of simulation needs in materials science and drug discovery, and the pressing demand for more energy-efficient computing solutions. The potential for quantum advantage in specific computational problems represents a compelling value proposition, with early adopters reporting 100-1000x performance improvements in targeted applications compared to classical approaches.

Market barriers remain significant, however. High implementation costs, with quantum systems typically requiring investments of $10-15 million, create substantial entry barriers. Technical challenges including qubit coherence limitations, error rates, and the need for specialized operating environments further constrain market growth. Additionally, the shortage of quantum engineering talent represents a critical bottleneck, with industry surveys indicating that over 80% of organizations interested in quantum technologies cite talent acquisition as a primary challenge.

The competitive landscape is characterized by three distinct player categories: established technology giants (IBM, Google, Microsoft), specialized quantum startups (D-Wave, Rigetti, IonQ), and research-focused academic spinoffs. Market consolidation through strategic acquisitions has accelerated, with over 25 quantum computing startups acquired in the past three years.

Current Quantum IC Integration Challenges

The integration of quantum models into conventional integrated circuit design faces significant technical barriers despite promising theoretical advantages. Current quantum IC technologies struggle with maintaining quantum coherence in practical environments, with decoherence times typically limited to microseconds or milliseconds even in highly controlled laboratory settings. When attempting integration with traditional CMOS technology, these coherence times degrade further due to electromagnetic interference and thermal noise from adjacent classical components.

Scalability presents another formidable challenge. While classical ICs have benefited from decades of miniaturization following Moore's Law, quantum components require substantial physical space for isolation and control systems. The current quantum bit (qubit) densities remain orders of magnitude lower than transistor densities in modern classical chips, creating a fundamental integration bottleneck.

Temperature requirements create a particularly difficult engineering problem. Most quantum systems require near-absolute zero operating temperatures (typically below 100 millikelvin), while conventional ICs operate at room temperature. This thermal incompatibility necessitates complex cooling systems that significantly increase system size, power consumption, and cost, making widespread commercial deployment impractical with current technologies.

Error rates in quantum operations exceed those of classical computing by several orders of magnitude. While classical error correction techniques have evolved to provide reliable operation despite physical imperfections, quantum error correction requires substantial overhead, with some estimates suggesting 1,000-10,000 physical qubits needed for each logical qubit. This requirement dramatically increases system complexity and resource demands.
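The 1,000-10,000 range can be reproduced with a back-of-envelope sketch. It assumes a rotated surface code, which uses 2d^2 - 1 physical qubits per logical qubit at code distance d, and the commonly quoted heuristic p_L ≈ A·(p/p_th)^((d+1)/2). The prefactor A = 0.1, the threshold p_th = 1%, and the 10^-12 target are illustrative assumptions, not measured values.

```python
def physical_qubits_per_logical(d: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


def logical_error_rate(p: float, d: int, p_th: float = 1e-2, A: float = 0.1) -> float:
    """Heuristic scaling p_L ~ A * (p / p_th) ** ((d + 1) / 2); A and p_th are assumptions."""
    return A * (p / p_th) ** ((d + 1) / 2)


def min_distance(p: float, target: float, p_th: float = 1e-2, A: float = 0.1) -> int:
    """Smallest odd code distance whose heuristic logical error rate meets the target."""
    if p >= p_th:
        raise ValueError("physical error rate must be below threshold")
    d = 3
    while logical_error_rate(p, d, p_th, A) > target:
        d += 2  # surface-code distances are odd
    return d


if __name__ == "__main__":
    for p in (1e-3, 5e-3):
        d = min_distance(p, target=1e-12)
        print(f"p={p:g}: d={d}, physical qubits per logical = {physical_qubits_per_logical(d)}")
```

Under these assumptions, physical error rates of 0.1% and 0.5% call for roughly 900 to 1,100 and about 10,700 physical qubits per logical qubit, respectively. Overhead grows quickly as p approaches the threshold.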

Manufacturing integration poses additional challenges. Quantum components often require exotic materials and fabrication techniques incompatible with standard semiconductor manufacturing processes. The precision required for quantum component fabrication exceeds current industrial capabilities in many cases, resulting in low yields and high variability.

Interface technologies between quantum and classical domains remain underdeveloped. The translation of information between these paradigms introduces latency and potential information loss, compromising the theoretical advantages of quantum processing. Current solutions typically involve complex control electronics that must operate at cryogenic temperatures, further complicating system design.

Power efficiency represents another significant hurdle. The cooling systems required for quantum operation consume substantial energy, often negating the theoretical computational efficiency advantages of quantum processing for many applications. As quantum systems scale, this power consumption grows dramatically, creating sustainability concerns for large-scale deployment.
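A thermodynamic bound shows why cooling dominates the power budget. The sketch below computes the ideal (Carnot) work needed to pump one watt of heat from a cold stage up to room temperature, W/Q = (T_hot - T_cold)/T_cold. Real dilution refrigerators fall well short of this ideal, so the results are lower bounds. The stage temperatures are illustrative choices.

```python
def carnot_work_per_watt(t_cold_k: float, t_hot_k: float = 300.0) -> float:
    """Minimum work (W) to remove 1 W of heat at t_cold_k and reject it at t_hot_k."""
    if not 0 < t_cold_k < t_hot_k:
        raise ValueError("require 0 < t_cold < t_hot")
    return (t_hot_k - t_cold_k) / t_cold_k


if __name__ == "__main__":
    # Illustrative stages: 4 K (cryogenic control electronics) and 10 mK (qubit stage)
    for t in (4.0, 0.01):
        print(f"{t:>6} K: >= {carnot_work_per_watt(t):,.0f} W of work per W of heat removed")
```

Even at this ideal limit, every watt dissipated at 10 mK costs about 30,000 W at room temperature, and every watt at 4 K costs about 74 W. This is one reason the text above treats cryogenic power as a scaling concern.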

Contemporary Quantum Performance Metrics Frameworks

01 Quantum computing performance evaluation metrics

Various metrics are used to evaluate quantum computing performance, including quantum volume, circuit depth, qubit count, and coherence time. These metrics help assess the computational capabilities of quantum systems and their ability to solve complex problems. Performance evaluation frameworks can compare different quantum computing architectures and algorithms based on these standardized metrics.

- Quantum computing performance evaluation metrics: Various metrics are used to evaluate the performance of quantum computing models, including fidelity, coherence time, gate error rates, and quantum volume. These metrics help assess the quality and reliability of quantum computations by measuring how well quantum states are preserved and manipulated. Performance evaluation frameworks enable researchers and developers to benchmark different quantum computing implementations against standardized criteria.

- Quantum machine learning model assessment: Specialized metrics have been developed to assess quantum machine learning models, focusing on accuracy, training efficiency, generalization capability, and quantum advantage. These metrics compare quantum algorithms against classical counterparts to quantify speedup and performance improvements. Assessment frameworks incorporate both quantum-specific measures and traditional machine learning evaluation criteria to provide comprehensive performance analysis.

- Quantum simulation validation techniques: Validation techniques for quantum simulations include comparison with analytical solutions, convergence testing, and experimental verification. These techniques assess how accurately quantum models represent physical systems and predict their behavior. Metrics focus on numerical stability, physical consistency, and computational efficiency, enabling researchers to evaluate the reliability of quantum simulations across different application domains.

- Quantum network and communication performance metrics: Performance metrics for quantum networks and communication systems measure entanglement fidelity, quantum bit error rate, secret key generation rate, and network throughput. These metrics evaluate the efficiency and security of quantum information transfer across distributed systems. Monitoring frameworks track performance in real-time, allowing for dynamic optimization of quantum communication protocols based on changing network conditions.

- Quantum error correction and fault tolerance assessment: Metrics for quantum error correction and fault tolerance include logical error rates, code distance, threshold values, and resource overhead. These metrics evaluate how effectively quantum systems can detect and correct errors while maintaining computational integrity. Assessment frameworks analyze the trade-offs between error correction capability and the additional qubits and operations required, helping optimize quantum computing reliability for practical applications.
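Gate error rates like those in the list above are commonly extracted by randomized benchmarking (RB). The sketch below assumes single-qubit RB under an ideal depolarizing model. In that model the survival probability is F(m) = A·p^m + B with A = B = 1/2, so a log-linear least-squares fit recovers p. The average error per Clifford is then r = (1 - p)(d - 1)/d with d = 2^n. Real RB data needs a nonlinear fit with A and B free, and the data here is synthetic.

```python
import math


def fit_rb_decay(lengths, survival, a=0.5, b=0.5):
    """Fit log((F - b) / a) = m * log(p) through the origin and return p.

    Assumes the amplitude a and asymptote b are known (ideal single-qubit depolarizing model).
    """
    xs = list(lengths)
    ys = [math.log((f - b) / a) for f in survival]  # each equals m * log(p)
    slope = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
    return math.exp(slope)


def error_per_clifford(p, n_qubits=1):
    """Average error per Clifford, r = (1 - p) * (d - 1) / d with d = 2 ** n_qubits."""
    d = 2 ** n_qubits
    return (1 - p) * (d - 1) / d


if __name__ == "__main__":
    true_p = 0.995
    lengths = [1, 10, 50, 100, 200, 400]
    survival = [0.5 * true_p ** m + 0.5 for m in lengths]  # synthetic, noise-free data
    p = fit_rb_decay(lengths, survival)
    print(f"p = {p:.4f}, error per Clifford = {error_per_clifford(p):.2e}")
```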

02 Quantum machine learning model assessment

Quantum machine learning models require specific performance metrics to evaluate their effectiveness. These include quantum accuracy, quantum loss functions, and quantum prediction error rates. The assessment frameworks consider both classical and quantum aspects of the models, enabling researchers to benchmark quantum machine learning algorithms against classical counterparts and optimize their performance for specific applications.

03 Quantum system monitoring and diagnostics

Monitoring quantum systems requires specialized metrics for real-time performance assessment and diagnostics. These metrics track quantum state fidelity, error rates, and system stability. Diagnostic tools can identify performance bottlenecks, detect anomalies in quantum operations, and provide insights for system optimization. These capabilities are essential for maintaining quantum system reliability and performance in practical applications.

04 Quantum network and communication performance

Quantum networks and communication systems require specific metrics to evaluate their performance, including entanglement fidelity, quantum bit error rate, and quantum channel capacity. These metrics help assess the reliability and efficiency of quantum information transfer across distributed quantum systems. Performance evaluation frameworks for quantum networks consider both the quantum and classical components of the communication infrastructure.

05 Quantum simulation accuracy and efficiency metrics

Quantum simulation systems require specialized metrics to evaluate their accuracy and efficiency. These metrics include simulation fidelity, resource utilization efficiency, and error propagation rates. Performance assessment frameworks for quantum simulators compare results against theoretical predictions or experimental data to validate simulation accuracy. These metrics help optimize quantum simulation algorithms and hardware for specific scientific and engineering applications.
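Simulation fidelity and monitored state fidelity are often computed against a pure target state |ψ⟩ for a possibly noisy density matrix ρ as F = ⟨ψ|ρ|ψ⟩. The sketch below uses plain Python lists of complex numbers to stay dependency-free. The depolarizing noise model and the Bell-state target are illustrative assumptions.

```python
import math


def fidelity_pure_vs_mixed(psi, rho):
    """F = <psi| rho |psi> for a normalized state vector psi and density matrix rho."""
    n = len(psi)
    total = 0j
    for i in range(n):
        for j in range(n):
            total += psi[i].conjugate() * rho[i][j] * psi[j]
    return total.real


def depolarize(psi, p):
    """Illustrative noise model: rho = (1 - p) |psi><psi| + p * I / n."""
    n = len(psi)
    return [[(1 - p) * psi[i] * psi[j].conjugate() + (p / n if i == j else 0)
             for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    # Two-qubit Bell state (|00> + |11>) / sqrt(2), basis order 00, 01, 10, 11
    s = 1 / math.sqrt(2)
    bell = [complex(s), 0j, 0j, complex(s)]
    for p in (0.0, 0.05, 0.2):
        print(f"p={p}: F = {fidelity_pure_vs_mixed(bell, depolarize(bell, p)):.4f}")
```

For this noise model F = 1 - 3p/4 on two qubits, so p = 0.2 gives F = 0.85. A measured fidelity can be traced back to an effective noise strength in the same way.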

Leading Quantum IC Design Companies

Quantum modeling for integrated circuit design is emerging as a transformative field. It is still in an early development phase, with a growing market projected to reach significant scale by 2030. The competitive landscape features established EDA leaders such as Synopsys and Cadence developing quantum-aware design tools, alongside quantum computing specialists such as IBM, Zapata Computing, and Rigetti. Major semiconductor companies, including TSMC, Intel, and NVIDIA, are investing in quantum-compatible chip architectures. Academic institutions such as MIT and research organizations such as CNRS are driving fundamental research, while Google and Baidu are exploring quantum algorithms for circuit optimization. Collaboration between traditional IC design companies and quantum computing pioneers is increasing, aimed at the open challenge of defining performance metrics.

Synopsys, Inc.

Technical Solution: Synopsys has developed a quantum-inspired approach to IC design optimization through their PrimeTime Quantum initiative. Their technology leverages quantum annealing principles within classical computing frameworks to address complex timing closure and power optimization challenges. The company has integrated quantum algorithms into their Design Compiler and IC Compiler II platforms, enabling designers to explore exponentially larger solution spaces for placement and routing problems. Their approach uses tensor network methods to simulate quantum effects and apply them to traditional EDA workflows. Synopsys has reported 30-40% improvements in power-performance-area (PPA) metrics when applying these quantum-inspired techniques to complex SoC designs with millions of gates.

Strengths: Seamless integration with industry-standard EDA tools; practical implementation that doesn't require actual quantum hardware; demonstrated improvements in real-world IC designs. Weaknesses: Not utilizing true quantum advantage; limited to specific optimization problems; requires significant computational resources even with classical implementation.

Cadence Design Systems, Inc.

Technical Solution: Cadence has developed the Quantum-Accelerated Verification (QAV) framework, which incorporates quantum computing models into its verification workflows. The approach uses quantum amplitude estimation algorithms to accelerate Monte Carlo simulations for statistical timing analysis and variation-aware design. Cadence's methodology represents critical paths and timing constraints as quantum circuits. Because amplitude estimation reaches a given precision with quadratically fewer samples than classical Monte Carlo, this allows faster evaluation of corner cases and statistical outliers. Its Virtuoso Quantum platform integrates with quantum simulators to provide designers with quantum-enhanced optimization capabilities for analog and mixed-signal circuits. Cadence has demonstrated a 10x reduction in verification time for complex mixed-signal designs when using its quantum-accelerated approach compared to traditional methods.
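Amplitude estimation offers a quadratic advantage over classical Monte Carlo for estimating a probability, such as a timing-violation rate, to a given precision. It is not an exponential advantage. The sketch compares leading-order sample counts for a probability a and additive error ε. Classical Monte Carlo needs about a(1 - a)/ε² samples, while canonical amplitude estimation needs on the order of π/ε oracle calls. The constants are illustrative, and the scaling is the point.

```python
import math


def classical_mc_samples(a: float, eps: float) -> int:
    """Samples N so that the standard error sqrt(a(1-a)/N) is at most eps."""
    return math.ceil(a * (1 - a) / eps ** 2)


def qae_oracle_calls(eps: float) -> int:
    """Leading-order oracle calls for canonical amplitude estimation, M ~ pi / eps.

    Constant factors are illustrative; the 1/eps versus 1/eps**2 scaling is what matters.
    """
    return math.ceil(math.pi / eps)


if __name__ == "__main__":
    a = 0.5  # worst-case variance for a Bernoulli probability
    for eps in (1e-2, 1e-3, 1e-4):
        print(f"eps={eps:g}: MC ~ {classical_mc_samples(a, eps):,}, QAE ~ {qae_oracle_calls(eps):,}")
```

The gap widens as the precision target tightens: about 250,000 classical samples versus about 3,100 oracle calls at ε = 10^-3. Each oracle call is a coherent circuit execution, so real-world gains depend on circuit depth and noise.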

Strengths: Specialized focus on verification and analog/mixed-signal applications; practical integration with existing design flows; strong partnerships with quantum hardware providers. Weaknesses: Limited quantum resources available for large-scale designs; requires significant expertise in both quantum computing and IC design; technology still in early adoption phase.

Key Patents in Quantum IC Performance Measurement

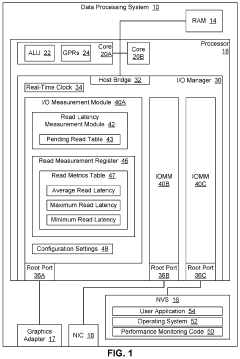

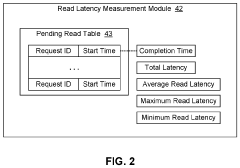

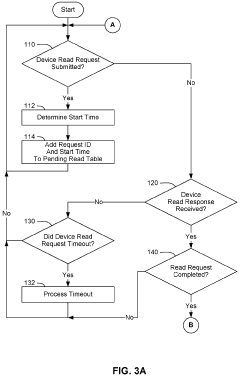

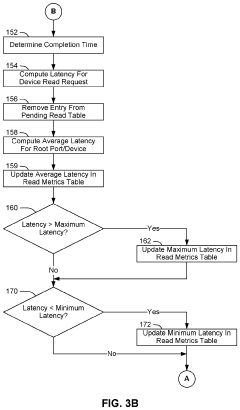

Integrated circuits for generating input/output latency performance metrics using real-time clock (RTC) read measurement module

Patent | Active | US20200401538A1

Innovation

- A data processing system with an integrated I/O manager that includes read latency measurement modules and registers to calculate and store average, minimum, and maximum read latency metrics in real-time, using a real-time clock to track request initiation and completion times, enabling accurate and efficient generation of I/O latency metrics without extensive circuitry additions.
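As an illustration of what such registers hold, here is a hypothetical software behavioral model. It is not the patented circuit, and the class and method names are invented. Each read records a start and completion timestamp from a clock counter. On completion, the model updates running minimum, maximum, count, and sum registers, and the average follows from the last two.

```python
class ReadLatencyRegisters:
    """Hypothetical software model of min/max/average read-latency registers."""

    def __init__(self):
        self.count = 0
        self.total = 0
        self.minimum = None
        self.maximum = None
        self._pending = {}  # request id -> start timestamp (clock ticks)

    def start(self, request_id, timestamp):
        """Record the clock value when a read request is issued."""
        self._pending[request_id] = timestamp

    def complete(self, request_id, timestamp):
        """Compute this read's latency and fold it into the running registers."""
        latency = timestamp - self._pending.pop(request_id)
        self.count += 1
        self.total += latency
        self.minimum = latency if self.minimum is None else min(self.minimum, latency)
        self.maximum = latency if self.maximum is None else max(self.maximum, latency)

    @property
    def average(self):
        return self.total / self.count if self.count else 0.0


if __name__ == "__main__":
    regs = ReadLatencyRegisters()
    for rid, (t0, t1) in enumerate([(0, 12), (5, 9), (20, 41)]):
        regs.start(rid, t0)
        regs.complete(rid, t1)
    print(regs.minimum, regs.maximum, round(regs.average, 3))
```

Storing a sum and a count, rather than a running average, keeps the per-request update to additions and comparisons. This is a plausible reason a hardware design might favor that layout.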

Parametric perturbations of performance metrics for integrated circuits

Patent | Active | US20090228250A1

Innovation

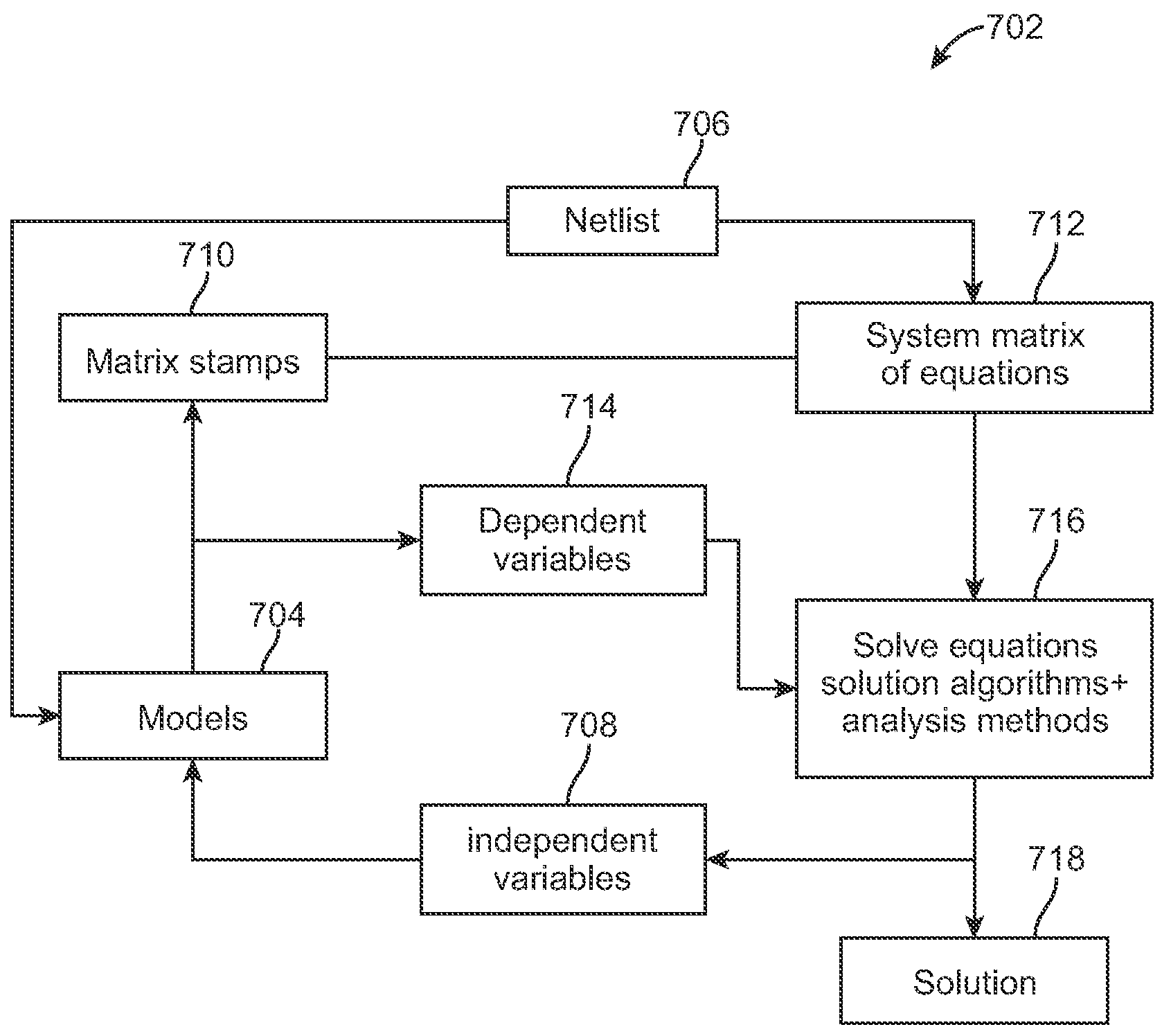

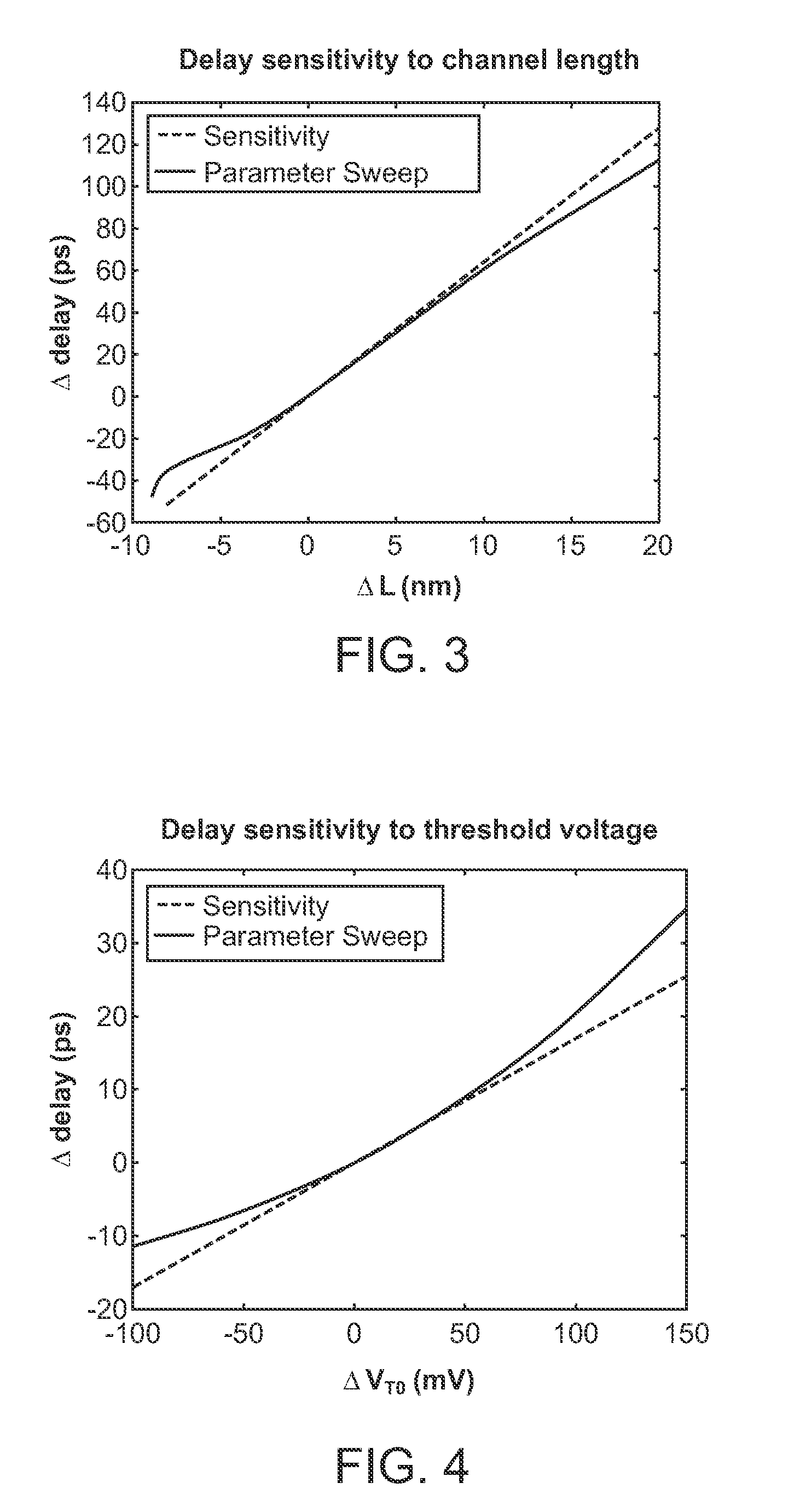

- A method for simulating parametric variations in integrated circuits by specifying an IC model, calculating parametric perturbations, and determining performance metrics, including voltage-sensitivity values, using linear time-varying matrices and adjoint systems to efficiently analyze delays and sensitivities.
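The adjoint idea behind this kind of sensitivity analysis can be shown on a static toy problem. Take a linear system A(p)x = b with output y = cᵀx. A single adjoint solve Aᵀλ = c gives dy/dp = -λᵀ(∂A/∂p)x for every parameter, instead of one extra solve per parameter. The sketch applies this to a hypothetical two-node resistive network and checks the result against a finite difference. It is not the patent's linear time-varying formulation.

```python
def solve2(a, rhs):
    """Solve a 2x2 linear system with Cramer's rule."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [(rhs[0] * a[1][1] - a[0][1] * rhs[1]) / det,
            (a[0][0] * rhs[1] - rhs[0] * a[1][0]) / det]


def nodal_matrix(g1, g2, g3):
    """Node 1: g1 to ground and g2 to node 2; node 2: g3 to ground (hypothetical circuit)."""
    return [[g1 + g2, -g2], [-g2, g2 + g3]]


def output_and_adjoint_sensitivity(g1, g2, g3, i_src=1.0):
    """Return (v2, dv2/dg2) using one forward solve and one adjoint solve."""
    a = nodal_matrix(g1, g2, g3)
    x = solve2(a, [i_src, 0.0])                    # forward solve: node voltages
    c = [0.0, 1.0]                                 # output selector: y = v2
    a_t = [[a[0][0], a[1][0]], [a[0][1], a[1][1]]]
    lam = solve2(a_t, c)                           # adjoint solve: A^T lam = c
    da_dg2 = [[1.0, -1.0], [-1.0, 1.0]]            # derivative of A with respect to g2
    da_x = [da_dg2[0][0] * x[0] + da_dg2[0][1] * x[1],
            da_dg2[1][0] * x[0] + da_dg2[1][1] * x[1]]
    dy = -(lam[0] * da_x[0] + lam[1] * da_x[1])
    return x[1], dy


if __name__ == "__main__":
    y, dy = output_and_adjoint_sensitivity(1.0, 1.0, 1.0)
    h = 1e-6
    fd = (output_and_adjoint_sensitivity(1.0, 1.0 + h, 1.0)[0]
          - output_and_adjoint_sensitivity(1.0, 1.0 - h, 1.0)[0]) / (2 * h)
    print(f"v2 = {y:.6f}, adjoint dv2/dg2 = {dy:.6f}, finite difference = {fd:.6f}")
```

With unit conductances and a unit current source, this gives v2 = 1/3 and dv2/dg2 = 1/9, and the finite difference agrees.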

Quantum-Classical Hybrid Design Approaches

Quantum-Classical Hybrid Design Approaches represent a pragmatic pathway toward leveraging quantum advantages while acknowledging current technological limitations. These approaches strategically combine classical computing methodologies with quantum algorithms to optimize integrated circuit design processes. The fundamental principle involves identifying specific computational bottlenecks in traditional IC design workflows that quantum algorithms can effectively address.

One prominent hybrid approach involves quantum-accelerated optimization for placement and routing problems. Classical algorithms handle the initial design stages and constraint definition, while quantum optimization algorithms tackle the NP-hard combinatorial optimization challenges. This symbiosis allows designers to maintain familiar workflows while gaining performance improvements in computationally intensive tasks.
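To make the mapping concrete, here is a toy placement formulated as a QUBO under a quadratic-assignment model. The binary variable x[c][s] = 1 places cell c in slot s. Wirelength between connected cells is weighted by slot distance, and one-hot constraints become quadratic penalties with weight P. This is the problem form that annealers and QAOA accept. The netlist, slot positions, and P are hypothetical, and the tiny instance is minimized by brute force only to check the encoding.

```python
import itertools

CELLS, SLOTS = 3, 3
POS = [0, 1, 2]                      # hypothetical slot x-coordinates
NETS = {(0, 1): 5.0, (1, 2): 1.0}    # hypothetical netlist: (cell_a, cell_b) -> weight
P = 20.0                             # penalty weight, larger than any wirelength gain


def var(c, s):
    """Flatten (cell, slot) into a single binary-variable index."""
    return c * SLOTS + s


def build_qubo():
    """Return (Q, offset) with energy = offset + sum Q[i, j] * x_i * x_j over i <= j."""
    q = {}

    def add(i, j, v):
        key = (min(i, j), max(i, j))
        q[key] = q.get(key, 0.0) + v

    # Wirelength: weight * |pos_s - pos_t| when cell a sits in slot s and cell b in slot t
    for (a, b), w in NETS.items():
        for s in range(SLOTS):
            for t in range(SLOTS):
                if s != t:
                    add(var(a, s), var(b, t), w * abs(POS[s] - POS[t]))
    # One-hot penalties P * (1 - sum x)^2 = P * (1 - sum x + 2 * sum_{i<j} x_i x_j) for binary x
    groups = [[var(c, s) for s in range(SLOTS)] for c in range(CELLS)]   # each cell once
    groups += [[var(c, s) for c in range(CELLS)] for s in range(SLOTS)]  # each slot once
    offset = 0.0
    for g in groups:
        offset += P
        for i in g:
            add(i, i, -P)
        for i, j in itertools.combinations(g, 2):
            add(i, j, 2 * P)
    return q, offset


def energy(q, offset, x):
    return offset + sum(v * x[i] * x[j] for (i, j), v in q.items())


if __name__ == "__main__":
    q, offset = build_qubo()
    best = min(itertools.product((0, 1), repeat=CELLS * SLOTS), key=lambda x: energy(q, offset, x))
    placement = {c: POS[s] for c in range(CELLS) for s in range(SLOTS) if best[var(c, s)]}
    print("energy", energy(q, offset, best), "placement", placement)
```

The minimum energy equals the wirelength of a valid placement, here 6, with cell 1 in the middle slot. Every constraint violation adds at least P, so a solver is pushed toward legal placements.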

Quantum-inspired algorithms represent another significant hybrid approach, where classical systems implement algorithms that mimic quantum behaviors. These algorithms, such as quantum-inspired annealing and quantum-inspired evolutionary algorithms, provide intermediate performance improvements without requiring actual quantum hardware. Companies like Fujitsu and Toshiba have demonstrated promising results using these techniques for specific IC design optimization problems.
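Commercial quantum-inspired annealers are proprietary, so the stand-in here is plain classical simulated annealing on a QUBO, the same problem form those solvers accept. It is not Fujitsu's or Toshiba's algorithm. The geometric cooling schedule, sweep count, and small max-cut instance are assumptions chosen for illustration.

```python
import math
import random


def qubo_energy(q, x):
    return sum(v * x[i] * x[j] for (i, j), v in q.items())


def simulated_annealing(q, n, sweeps=2000, t_start=2.0, t_end=0.01, seed=0):
    """Single-bit-flip Metropolis annealing with a geometric temperature schedule."""
    rng = random.Random(seed)
    x = [rng.randint(0, 1) for _ in range(n)]
    e = qubo_energy(q, x)
    best_x, best_e = x[:], e
    for k in range(sweeps):
        t = t_start * (t_end / t_start) ** (k / max(1, sweeps - 1))
        for i in range(n):
            x[i] ^= 1                      # propose flipping bit i
            e_new = qubo_energy(q, x)      # full recompute: simple but O(|Q|) per flip
            if e_new <= e or rng.random() < math.exp(-(e_new - e) / t):
                e = e_new                  # accept the move
                if e < best_e:
                    best_x, best_e = x[:], e
            else:
                x[i] ^= 1                  # reject: undo the flip
    return best_x, best_e


def maxcut_qubo(edges):
    """Minimizing this QUBO maximizes the cut: energy = -(number of cut edges)."""
    q = {}
    for i, j in edges:
        q[(i, i)] = q.get((i, i), 0) - 1
        q[(j, j)] = q.get((j, j), 0) - 1
        key = (min(i, j), max(i, j))
        q[key] = q.get(key, 0) + 2
    return q


if __name__ == "__main__":
    edges = [(0, 1), (1, 2), (2, 3), (3, 0)]   # 4-cycle; its maximum cut is 4
    x, e = simulated_annealing(maxcut_qubo(edges), n=4)
    print("assignment", x, "energy", e)
```

A production solver would update energies incrementally instead of recomputing them. The acceptance rule and cooling schedule are where quantum-inspired variants typically differ.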

Hardware-software co-design strategies are emerging as essential components of hybrid approaches. These methodologies simultaneously consider the constraints of available quantum processors alongside classical computing resources to create optimized workflows. This approach has proven particularly valuable for noise mitigation and error correction in quantum simulations of electronic properties relevant to semiconductor design.

Parameterized quantum circuits (PQCs) integrated with classical optimization loops offer another promising hybrid paradigm. In this approach, classical optimizers adjust quantum circuit parameters to improve simulation accuracy for material properties and device physics modeling. This technique has shown particular promise for modeling quantum effects in nanoscale transistors where traditional SPICE models become inadequate.
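Here is a minimal sketch of such a hybrid loop. It assumes a one-qubit ansatz R_y(θ)|0⟩ and the toy Hamiltonian H = Z, so the "quantum" expectation ⟨Z⟩ = cos θ can be simulated exactly in plain Python. The classical optimizer is gradient descent using the parameter-shift rule, dE/dθ = [E(θ + π/2) - E(θ - π/2)]/2, which is exact for rotation gates of this form. On hardware, the expectation would be estimated from repeated shots. The learning rate and step count are arbitrary choices.

```python
import math


def ry_state(theta):
    """Amplitudes of R_y(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>."""
    return math.cos(theta / 2), math.sin(theta / 2)


def expectation_z(theta):
    """<psi|Z|psi> = |a0|^2 - |a1|^2, evaluated on the simulated state."""
    a0, a1 = ry_state(theta)
    return a0 * a0 - a1 * a1


def parameter_shift_grad(f, theta):
    """Exact gradient for gates generated by a Pauli operator, from two shifted evaluations."""
    return (f(theta + math.pi / 2) - f(theta - math.pi / 2)) / 2


def optimize(theta=0.3, lr=0.4, steps=100):
    """Classical outer loop: gradient descent on the measured energy."""
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(expectation_z, theta)
    return theta, expectation_z(theta)


if __name__ == "__main__":
    theta, e = optimize()
    print(f"theta = {theta:.6f} (pi = {math.pi:.6f}), <Z> = {e:.6f}")
```

The loop drives θ toward π, where ⟨Z⟩ reaches its minimum of -1. The same structure carries over when the energy comes from a device-physics Hamiltonian and a larger circuit.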

The implementation of hybrid quantum-classical frameworks requires standardized interfaces and middleware solutions. Companies like IBM, Google, and Microsoft have developed software stacks that facilitate seamless integration between classical design tools and quantum processing units. These frameworks provide abstraction layers that shield IC designers from the complexities of quantum programming while enabling them to benefit from quantum acceleration.

Performance metrics for hybrid approaches must balance quantum advantage against implementation overhead. Current benchmarks indicate that hybrid approaches can deliver 10-100x speedups for specific IC design subtasks while maintaining acceptable accuracy levels. However, these advantages remain highly problem-specific and require careful mapping of computational tasks to appropriate quantum or classical resources.
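The way implementation overhead erodes a subtask speedup follows from Amdahl's law with an added overhead term. The sketch assumes a fraction f of the original runtime is offloaded and accelerated by a factor s. Data movement, compilation, and queueing add an overhead o, expressed as a fraction of the original runtime. All three inputs are illustrative, not benchmark data.

```python
def end_to_end_speedup(f: float, s: float, overhead: float = 0.0) -> float:
    """Amdahl's law with overhead: 1 / ((1 - f) + f / s + overhead)."""
    if not (0.0 <= f <= 1.0 and s > 0 and overhead >= 0):
        raise ValueError("invalid inputs")
    return 1.0 / ((1.0 - f) + f / s + overhead)


if __name__ == "__main__":
    for f, s, o in [(0.3, 100.0, 0.0), (0.9, 100.0, 0.0), (0.9, 100.0, 0.05)]:
        print(f"f={f}, s={s:g}, overhead={o}: {end_to_end_speedup(f, s, o):.2f}x overall")
```

Offloading 30% of the runtime yields only about 1.42x overall, even with a 100x subtask speedup. At 90% offload, the overall gain is about 9.2x, which falls to about 6.3x once a 5% overhead is added. This is why task mapping matters as much as raw quantum speedup.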

One prominent hybrid approach involves quantum-accelerated optimization for placement and routing problems. Classical algorithms handle the initial design stages and constraint definition, while quantum optimization algorithms tackle the NP-hard combinatorial optimization challenges. This symbiosis allows designers to maintain familiar workflows while gaining performance improvements in computationally intensive tasks.

Quantum-inspired algorithms represent another significant hybrid approach, where classical systems implement algorithms that mimic quantum behaviors. These algorithms, such as quantum-inspired annealing and quantum-inspired evolutionary algorithms, provide intermediate performance improvements without requiring actual quantum hardware. Companies like Fujitsu and Toshiba have demonstrated promising results using these techniques for specific IC design optimization problems.

Hardware-software co-design strategies are emerging as essential components of hybrid approaches. These methodologies simultaneously consider the constraints of available quantum processors alongside classical computing resources to create optimized workflows. This approach has proven particularly valuable for noise mitigation and error correction in quantum simulations of electronic properties relevant to semiconductor design.

Parameterized quantum circuits (PQCs) integrated with classical optimization loops offer another promising hybrid paradigm. In this approach, classical optimizers adjust quantum circuit parameters to improve simulation accuracy for material properties and device physics modeling. This technique has shown particular promise for modeling quantum effects in nanoscale transistors where traditional SPICE models become inadequate.

The implementation of hybrid quantum-classical frameworks requires standardized interfaces and middleware solutions. Companies like IBM, Google, and Microsoft have developed software stacks that facilitate seamless integration between classical design tools and quantum processing units. These frameworks provide abstraction layers that shield IC designers from the complexities of quantum programming while enabling them to benefit from quantum acceleration.

Performance metrics for hybrid approaches must weigh quantum advantage against implementation overhead. Some reported benchmarks suggest that hybrid approaches can deliver 10-100x speedups for specific IC design subtasks while maintaining acceptable accuracy. These gains, however, remain highly problem-specific and depend on careful mapping of computational tasks to quantum or classical resources.
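A subtask speedup does not carry over one-to-one to the whole design flow. A standard way to see this is Amdahl's law extended with an overhead term. The sketch below assumes three things: only a fraction of the baseline runtime is offloaded, that fraction runs some factor faster, and the hybrid path adds overhead (data transfer, circuit compilation, queueing) expressed as a share of baseline runtime. The function names and numbers are hypothetical and are not taken from any reported benchmark.

```python
def end_to_end_speedup(accelerated_fraction, kernel_speedup, overhead_fraction=0.0):
    """Amdahl's-law estimate of whole-flow speedup with an additive overhead term.

    accelerated_fraction: share of baseline runtime offloaded to the quantum/hybrid solver.
    kernel_speedup: speedup of that share alone (e.g. a 10-100x subtask figure).
    overhead_fraction: extra cost of the hybrid path, as a share of baseline runtime.
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must lie in [0, 1]")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    new_runtime = ((1.0 - accelerated_fraction)
                   + accelerated_fraction / kernel_speedup
                   + overhead_fraction)
    return 1.0 / new_runtime

def break_even_overhead(accelerated_fraction, kernel_speedup):
    """Overhead share at which the hybrid flow is exactly as fast as the baseline."""
    return accelerated_fraction * (1.0 - 1.0 / kernel_speedup)

# Hypothetical case: 30% of a routing run is offloaded and runs 100x faster.
print(end_to_end_speedup(0.3, 100.0))       # about 1.42x overall
print(end_to_end_speedup(0.3, 100.0, 0.1))  # about 1.25x once overhead is counted
print(break_even_overhead(0.3, 100.0))      # about 0.297 of baseline runtime
```

Reporting the offloaded fraction and the overhead alongside the kernel speedup makes hybrid benchmark claims comparable across problems.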

Standardization Efforts for Quantum IC Metrics

The standardization of quantum integrated circuit metrics represents a critical frontier in the evolution of quantum computing technologies. Currently, several international bodies are actively working to establish unified performance benchmarks for quantum ICs. The IEEE Quantum Computing Standards Working Group has initiated the P7131 project specifically focused on quantum computing performance metrics, aiming to create a framework that enables consistent evaluation across different quantum computing architectures and implementations.

In parallel, the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) have taken up quantum computing standardization through joint ISO/IEC committee work. This work includes terminology standards (ISO/IEC 4879) that establish common definitions for quantum computing concepts and metrics, which are essential for cross-platform comparisons and industry-wide communication.

The Quantum Economic Development Consortium (QED-C) has emerged as another significant player, bringing together industry stakeholders to develop practical benchmarking tools for quantum integrated circuits. Their Technical Advisory Committee on Standards and Performance Metrics has published several white papers proposing standardized test suites for evaluating quantum gate fidelities, coherence times, and error rates.
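Test suites for gate fidelities and error rates commonly rely on randomized benchmarking (RB). In RB, the survival probability after m random Clifford gates is fitted to F(m) = A·p^m + B. The fitted p is then converted into an average error per Clifford, r = (d - 1)(1 - p)/d, with d = 2^n for n qubits. The sketch below assumes the asymptote B is known and fixes it, which allows a simple log-linear fit; practical RB analyses usually fit A, B, and p jointly. The data are synthetic, generated from the model itself, and the function names are hypothetical.

```python
import numpy as np

def fit_rb_decay(lengths, survival, asymptote):
    """Fit F(m) = A * p**m + B with B fixed, using log(F - B) = log A + m log p."""
    m = np.asarray(lengths, dtype=float)
    y = np.log(np.asarray(survival, dtype=float) - asymptote)
    slope, intercept = np.polyfit(m, y, 1)  # coefficients, highest degree first
    return np.exp(slope), np.exp(intercept)  # (p, A)

def error_per_clifford(p, n_qubits):
    """Average error per Clifford r = (d - 1)(1 - p) / d with d = 2**n_qubits."""
    d = 2 ** n_qubits
    return (d - 1) * (1 - p) / d

# Synthetic, noise-free single-qubit data generated from the model (illustrative only).
lengths = [1, 10, 50, 100, 200, 400]
A_true, B_true, p_true = 0.5, 0.5, 0.99
survival = [A_true * p_true ** m + B_true for m in lengths]
p_fit, A_fit = fit_rb_decay(lengths, survival, asymptote=B_true)
print(p_fit, A_fit, error_per_clifford(p_fit, n_qubits=1))
```

Because RB averages over random gate sequences, it separates gate errors from state-preparation and measurement errors, which are absorbed into A and B. This is one reason it appears in many benchmarking proposals.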

Academic and government research institutions have contributed substantially to these standardization efforts. The Quantum Performance Laboratory at Sandia National Laboratories has developed the Quantum Performance Assessment framework, which offers rigorous methodologies for characterizing quantum processors. Similarly, the University of California Quantum Computing Consortium has proposed the Quantum Circuit Benchmark Suite, which includes standardized test circuits designed to stress-test specific aspects of quantum IC performance.

Industry leaders including IBM, Google, and Intel have also participated actively in these standardization initiatives, contributing their expertise while simultaneously developing proprietary benchmarking tools. IBM's Quantum Volume metric has gained significant traction as a holistic measure of quantum processor capability, while Google's Quantum Supremacy experiments have established important reference points for performance evaluation.
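IBM's Quantum Volume protocol follows four steps, as published. It runs random "square" model circuits of width and depth n. It identifies the heavy outputs: the bitstrings whose ideal probability exceeds the median of the ideal distribution. It counts width n as achieved when the measured heavy-output probability exceeds 2/3 with sufficient statistical confidence. Quantum Volume is then 2^n for the largest achieved width. The sketch below assumes the ideal probabilities come from classical simulation and cover every bitstring. It uses a simplified two-sigma pass criterion and is not Qiskit's implementation; the function names and sample numbers are hypothetical.

```python
import math
import statistics

def heavy_outputs(ideal_probs):
    """Bitstrings whose ideal probability is strictly above the median ideal probability."""
    median = statistics.median(ideal_probs.values())
    return {bits for bits, p in ideal_probs.items() if p > median}

def heavy_output_probability(ideal_probs, counts):
    """Fraction of measured shots that landed on heavy outputs for one model circuit."""
    heavy = heavy_outputs(ideal_probs)
    shots = sum(counts.values())
    return sum(c for bits, c in counts.items() if bits in heavy) / shots

def width_passes(hops):
    """Simplified test: mean HOP minus two standard errors must exceed 2/3."""
    mean = statistics.mean(hops)
    sigma = math.sqrt(mean * (1.0 - mean) / len(hops))
    return mean - 2.0 * sigma > 2.0 / 3.0

def quantum_volume(passed_by_width):
    """QV = 2**n for the largest square width n that passed (1 if none did)."""
    passing = [n for n, ok in passed_by_width.items() if ok]
    return 2 ** max(passing) if passing else 1

# Hypothetical 2-qubit model circuit: ideal distribution plus measured counts.
ideal = {"00": 0.4, "01": 0.3, "10": 0.2, "11": 0.1}
counts = {"00": 40, "01": 35, "10": 15, "11": 10}
print(heavy_output_probability(ideal, counts))
```

For an ideal random circuit, the expected heavy-output probability is noticeably above 1/2, while a fully depolarized device would score about 1/2. The 2/3 threshold therefore separates useful coherent behavior from noise.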

The convergence toward standardized metrics faces several challenges, including the rapid evolution of quantum technologies, architectural diversity, and the complex interplay between hardware and software layers. Nevertheless, emerging consensus points to several key metrics gaining widespread acceptance: gate fidelities, coherence times, connectivity graphs, and system-level benchmarks that evaluate performance on practical algorithms.
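Connectivity graphs matter because a two-qubit gate between non-adjacent qubits must first be routed, usually by inserting SWAP gates. As a crude proxy for that cost, this sketch uses the shortest-path distance minus one, averaged over all qubit pairs; this proxy is an assumption made for illustration. Real routing passes, such as the SABRE heuristic, typically do better than this per-gate estimate. The function names and example topologies are hypothetical.

```python
from collections import deque

def build_adjacency(edges, n_qubits):
    """Undirected coupling map as an adjacency dict."""
    adj = {q: set() for q in range(n_qubits)}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    return adj

def bfs_distances(adj, source):
    """Hop distance from source to every reachable qubit (breadth-first search)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        q = queue.popleft()
        for nb in adj[q]:
            if nb not in dist:
                dist[nb] = dist[q] + 1
                queue.append(nb)
    return dist

def mean_swap_overhead(edges, n_qubits):
    """Average naive SWAP count (distance - 1) to make a qubit pair adjacent."""
    adj = build_adjacency(edges, n_qubits)
    total = 0
    for a in range(n_qubits):
        dist = bfs_distances(adj, a)
        for b in range(a + 1, n_qubits):
            if b not in dist:
                raise ValueError("coupling graph is disconnected")
            total += dist[b] - 1
    return total / (n_qubits * (n_qubits - 1) // 2)

line = [(0, 1), (1, 2), (2, 3)]
ring = line + [(3, 0)]
print(mean_swap_overhead(line, 4), mean_swap_overhead(ring, 4))
```

Closing the 4-qubit line into a ring halves this proxy, which illustrates why connectivity is reported alongside gate fidelities and coherence times.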

These standardization efforts are expected to accelerate in the coming years. The first comprehensive international standards for quantum IC metrics could be ratified within the next 24-36 months, giving both technology developers and potential adopters of quantum computing solutions a common basis for evaluation.
