Quantum Models vs Neural Networks: Which Offers Faster Results?

SEP 4, 2025 | 9 MIN READ

Quantum Computing vs Neural Networks Background and Objectives

Quantum computing and neural networks represent two distinct paradigms in computational technology, each with its own historical trajectory and theoretical foundations. Quantum computing emerged from quantum mechanics principles established in the early 20th century, while neural networks evolved from cognitive science and mathematical models of biological neural systems dating back to the 1940s. Both fields have experienced significant acceleration in development over the past decade, driven by increasing computational demands and limitations of traditional computing architectures.

The fundamental question of computational speed between quantum models and neural networks stems from their inherently different approaches to information processing. Neural networks excel at pattern recognition through parallel distributed processing across multiple artificial neurons, whereas quantum computing leverages quantum mechanical phenomena such as superposition and entanglement to perform certain calculations exponentially faster than classical computers.

Recent technological advancements have positioned both approaches at critical inflection points. Neural networks have benefited from GPU acceleration, specialized hardware like TPUs, and algorithmic improvements that have dramatically reduced training times. Simultaneously, quantum computing has progressed from theoretical constructs to working prototypes with increasing qubit counts and improved coherence times, though still facing significant challenges in error correction and scalability.

The comparative speed advantage between these technologies is highly context-dependent. For specific computational problems like integer factorization and quantum simulation, quantum algorithms theoretically offer exponential speedups. However, neural networks currently maintain practical advantages in real-world applications due to their mature implementation infrastructure and proven scalability across diverse domains.

Our technical objective is to establish a comprehensive framework for evaluating the computational efficiency of quantum models versus neural networks across different problem domains. This includes identifying specific use cases where each technology demonstrates superior performance, analyzing the theoretical and practical constraints affecting execution speed, and projecting future performance trajectories based on current development roadmaps.

Additionally, we aim to explore hybrid approaches that combine classical neural network architectures with quantum components to potentially achieve performance improvements that exceed what either technology could accomplish independently. This investigation will consider both near-term applications using noisy intermediate-scale quantum (NISQ) devices and long-term possibilities with fault-tolerant quantum computers.

Understanding the relative performance characteristics of these technologies is crucial for strategic technology planning, as it will influence hardware investment decisions, research priorities, and application development focus over the next decade as both fields continue to evolve rapidly.

Market Demand Analysis for High-Performance Computing Solutions

The high-performance computing (HPC) market is experiencing unprecedented growth driven by the increasing demand for faster computational solutions across various industries. Current market analysis indicates that the global HPC market is projected to reach $60 billion by 2025, with a compound annual growth rate of approximately 7.1%. This growth is primarily fueled by the emergence of quantum computing and advanced neural network architectures that promise significant speed improvements for complex computational tasks.

Financial services and pharmaceutical industries are leading the demand for high-performance computing solutions, particularly those that can deliver faster results for risk assessment, fraud detection, and drug discovery processes. According to recent industry surveys, 78% of financial institutions are actively exploring quantum computing solutions to gain competitive advantages in algorithmic trading and portfolio optimization, where computational speed directly translates to financial gains.

Healthcare and pharmaceutical companies are increasingly investing in advanced computing technologies, with 63% reporting substantial R&D budget allocations toward quantum or neural network computing solutions. The primary driver is the potential to reduce drug discovery timelines from years to months through faster molecular simulations and protein folding calculations.

The enterprise segment shows growing interest in hybrid solutions that combine classical computing with quantum approaches or specialized neural network accelerators. Market research indicates that 42% of Fortune 500 companies are evaluating quantum-classical hybrid systems for their data centers, seeking the optimal balance between computational speed and practical implementation.

Regional analysis reveals that North America currently dominates the market with approximately 40% share, followed by Europe and Asia-Pacific. However, the Asia-Pacific region is expected to witness the highest growth rate in the coming years due to substantial government investments in quantum research and AI infrastructure in countries like China, Japan, and South Korea.

Customer requirements are evolving rapidly, with 67% of potential buyers citing computational speed as their primary consideration when evaluating new HPC solutions. Energy efficiency has emerged as the second most important factor, reflecting growing concerns about the environmental impact and operational costs of high-performance computing infrastructure.

The market is also witnessing a shift from ownership to service-based models, with quantum computing as a service (QCaaS) and neural network inference as a service gaining traction. This trend is particularly strong among mid-sized enterprises that seek access to advanced computational capabilities without significant capital investments in hardware infrastructure.

Current State and Challenges in Quantum and Neural Computing

Quantum computing and neural networks represent two distinct paradigms in computational technology, each with its own current state of development and unique challenges. Quantum computing leverages quantum mechanical phenomena such as superposition and entanglement to perform computations, while neural networks utilize interconnected nodes inspired by biological neural systems to process information through layers.

The quantum computing landscape is characterized by rapid theoretical advancement but limited practical implementation. Leading superconducting processors such as Google's 53-qubit Sycamore and IBM's 127-qubit Eagle offer on the order of 50 to 130 physical qubits, and some have been used to claim quantum advantage on narrowly defined problems. However, these systems suffer from high error rates caused by decoherence and gate noise, and fault-tolerant error correction would consume many physical qubits for each logical qubit. The fragility of quantum states necessitates extreme operating conditions, typically requiring temperatures near absolute zero.

Neural networks, conversely, have reached a state of widespread commercial deployment. Modern architectures like transformers can contain billions of parameters, enabling remarkable capabilities in language processing, image recognition, and complex decision-making tasks. These systems benefit from decades of hardware optimization for matrix operations through GPUs and specialized AI accelerators like TPUs, allowing for efficient parallel processing at scale.

The speed comparison between these technologies presents a nuanced picture. Quantum algorithms offer theoretical speedups of different sizes: Shor's algorithm factors integers superpolynomially faster than the best known classical methods, while Grover's algorithm provides only a quadratic speedup for unstructured search. In both cases, practical implementation remains constrained by hardware limitations. Current quantum systems excel primarily at simulating quantum systems themselves, with limited advantage for general computation tasks.
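As a rough illustration of how speedup classes differ, the sketch below compares oracle query counts for unstructured search over N items with one marked item. A classical scan needs about N/2 queries on average, while Grover's algorithm needs roughly (π/4)·√N iterations, which is a quadratic improvement rather than an exponential one. The function names are illustrative, and the counts ignore gate times, error correction overhead, and data loading, so they say nothing about wall-clock time on current hardware.

```python
import math


def classical_expected_queries(n_items: int) -> float:
    """Average queries for a sequential scan with one marked item."""
    return (n_items + 1) / 2


def grover_iterations(n_items: int) -> int:
    """Approximate optimal Grover iteration count: floor(pi/4 * sqrt(N))."""
    return max(1, math.floor(math.pi / 4 * math.sqrt(n_items)))


if __name__ == "__main__":
    for bits in (10, 20, 30, 40):
        n = 2 ** bits
        classical = classical_expected_queries(n)
        quantum = grover_iterations(n)
        # The ratio grows like sqrt(N), not like N.
        print(f"N=2^{bits}: classical~{classical:.3g}, "
              f"grover~{quantum}, ratio~{classical / quantum:.3g}")
```

Because the query advantage grows only with the square root of the search space, a fast classical accelerator can still finish first whenever each quantum query is much slower than a classical one.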

Neural networks demonstrate impressive speed in pattern recognition and inference tasks but face challenges with training efficiency. The computational demands of training large models have grown exponentially, with state-of-the-art models requiring thousands of GPU-days and millions of dollars in computing resources.

A significant geographical disparity exists in the development of these technologies. Quantum computing research centers primarily in North America, Europe, and China, with companies like IBM, Google, and Rigetti in the US, and significant national initiatives in China and the EU. Neural network development is more globally distributed but concentrated in technology hubs in the US (Silicon Valley, Seattle), China (Beijing, Shenzhen), and emerging centers in Europe, Canada, and Israel.

The intersection of these technologies represents an emerging frontier, with quantum neural networks potentially offering novel computational capabilities that transcend classical limitations, though practical implementations remain largely theoretical.

Comparative Analysis of Existing Computational Models

01 Quantum-enhanced neural networks for faster processing

Quantum computing principles can be integrated with neural network architectures to significantly accelerate processing speeds. These hybrid systems leverage quantum parallelism to perform multiple calculations simultaneously, reducing the time required for complex computations. The quantum-enhanced neural networks can process large datasets more efficiently than traditional neural networks, making them particularly valuable for time-sensitive applications in fields such as financial modeling and real-time data analysis.

- Quantum-enhanced neural network processing: Quantum computing techniques can significantly enhance neural network processing speeds by leveraging quantum parallelism and superposition. These hybrid quantum-neural network systems can perform complex calculations exponentially faster than classical computers for specific tasks. The integration of quantum principles with neural network architectures enables more efficient handling of high-dimensional data and complex pattern recognition, resulting in dramatically reduced computation time for training and inference processes.

- Optimization algorithms for neural network acceleration: Advanced optimization algorithms specifically designed for neural networks can substantially improve processing speed. These algorithms include gradient-based methods, evolutionary approaches, and specialized techniques that reduce computational complexity while maintaining accuracy. By optimizing the network architecture, weight initialization, and learning processes, these methods enable faster convergence during training and more efficient inference, making neural networks practical for time-sensitive applications.

- Hardware acceleration for quantum and neural computations: Specialized hardware architectures designed for quantum and neural network computations can dramatically increase processing speeds. These include quantum processing units (QPUs), tensor processing units (TPUs), neural processing units (NPUs), and field-programmable gate arrays (FPGAs) optimized for matrix operations. Such dedicated hardware implementations enable parallel processing of quantum operations and neural network calculations, reducing latency and increasing throughput for complex computational tasks.

- Hybrid quantum-classical computing frameworks: Hybrid frameworks that combine quantum computing capabilities with classical neural network architectures offer practical speed advantages for current technology limitations. These approaches strategically delegate specific computational tasks to either quantum or classical processors based on their respective strengths. By utilizing quantum systems for complex probability distributions and optimization problems while leveraging classical systems for other operations, these hybrid frameworks achieve performance improvements that neither approach could accomplish independently.

- Model compression and quantization techniques: Model compression and quantization techniques reduce the computational requirements of neural networks while maintaining performance. These approaches include pruning unnecessary connections, knowledge distillation to create smaller networks that mimic larger ones, and quantizing high-precision parameters to lower bit representations. When applied to quantum-neural hybrid systems, these techniques can significantly reduce resource requirements and execution time, making complex models more practical for deployment in resource-constrained environments.
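The quantization item in the list above can be sketched in a few lines. This is a minimal example of symmetric per-tensor int8 quantization written in plain Python. The weight values and function names are made up for illustration, and production frameworks use more elaborate schemes (per-channel scales, calibration data, quantization-aware training).

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 representation."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only to make the arithmetic easy to follow.
weights = [0.82, -1.27, 0.05, 0.0, 0.64]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Rounding to the nearest step bounds the per-weight error by scale / 2.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight then occupies 8 bits instead of 32, which is where the memory and bandwidth savings come from. The cost is a rounding error of at most half a quantization step per weight.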

02 Optimization algorithms for accelerating neural network training

Advanced optimization algorithms can significantly reduce the training time of neural networks. These algorithms include improved gradient descent methods, adaptive learning rate techniques, and parallel processing approaches that distribute computational load across multiple processors. By implementing these optimization strategies, the time required to train complex neural networks can be reduced from days to hours, enabling faster deployment of AI models and more efficient iterative development cycles.

03 Hardware acceleration techniques for neural network inference

Specialized hardware architectures designed specifically for neural network operations can dramatically improve inference speeds. These include Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), Field-Programmable Gate Arrays (FPGAs), and Application-Specific Integrated Circuits (ASICs). By optimizing hardware for the specific computational patterns of neural networks, these solutions can achieve orders of magnitude improvement in processing speed compared to general-purpose computing hardware, enabling real-time applications of complex AI models.

04 Model compression and quantization for faster execution

Techniques for reducing the size and computational requirements of neural networks while maintaining accuracy can significantly improve execution speed. These approaches include pruning unnecessary connections, quantizing weights to lower precision, knowledge distillation from larger to smaller models, and low-rank factorization of weight matrices. By implementing these compression methods, neural networks can run efficiently on resource-constrained devices and achieve faster inference times without substantial loss in performance.

05 Hybrid classical-quantum algorithms for computational speedup

Hybrid approaches that combine classical computing with quantum algorithms can provide significant speedups for specific computational tasks in neural networks. These methods strategically delegate certain computationally intensive operations to quantum processors while handling other tasks with classical computers. The hybrid approach allows for practical implementation of quantum advantages in the near term, before fully fault-tolerant quantum computers become available. In principle, quantum algorithms offer exponential speedups for certain operations common in machine learning workflows, such as matrix inversion and eigenvalue calculation, although these speedups depend on restrictive assumptions about data loading and matrix structure.
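The division of labor in a hybrid algorithm can be sketched with a toy variational loop. In this example, which rests on simplifying assumptions, the "quantum" step is a classical simulation of a single qubit prepared by an RY(theta) rotation, and the classical step is plain gradient descent using the parameter-shift rule. All names and hyperparameters are illustrative. On a real device the expectation value would be estimated from repeated measurements rather than computed exactly.

```python
import math


def expectation_z(theta: float) -> float:
    """Quantum step (simulated): <Z> for the state RY(theta)|0>."""
    amp0 = math.cos(theta / 2)  # amplitude of |0>
    amp1 = math.sin(theta / 2)  # amplitude of |1>
    return amp0 ** 2 - amp1 ** 2


def parameter_shift_gradient(theta: float) -> float:
    """Gradient of <Z> from two extra circuit evaluations at theta +/- pi/2."""
    shift = math.pi / 2
    return (expectation_z(theta + shift) - expectation_z(theta - shift)) / 2


def minimize(theta: float = 0.4, learning_rate: float = 0.4,
             steps: int = 60) -> float:
    """Classical step: gradient descent on the circuit parameter."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta


theta_opt = minimize()
print(theta_opt, expectation_z(theta_opt))
```

The loop drives theta toward π, where the expectation value reaches its minimum of -1. Each optimizer step costs two circuit evaluations per parameter, which is one reason hybrid methods can be slow in practice even when the circuits themselves are short.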

Key Industry Players in Quantum and Neural Network Research

The quantum computing vs neural networks landscape is currently in a transitional phase, with the market for quantum computing solutions growing rapidly but still in early commercialization compared to the mature neural networks sector. While neural networks dominate practical AI applications with established frameworks and hardware support from companies like NVIDIA, Google, and Huawei, quantum computing is gaining momentum through significant investments from tech giants and specialized players like Origin Quantum, Equal1 Labs, and Xanadu. Companies including Lockheed Martin, Mitsubishi Electric, and Microsoft are exploring hybrid approaches that leverage both technologies' strengths. The technical maturity gap remains substantial, with neural networks offering immediate practical results while quantum models promise theoretical advantages in specific computational problems but face hardware limitations and scalability challenges.

Google LLC

Technical Solution: Google has developed quantum machine learning models through its Quantum AI division, focusing on hybrid quantum-classical approaches. Their TensorFlow Quantum (TFQ) framework integrates quantum computing capabilities with traditional neural networks, allowing researchers to build quantum models that can potentially solve certain problems exponentially faster than classical neural networks. Google's 53-qubit Sycamore processor was used to claim quantum supremacy in 2019, completing a specific sampling calculation in 200 seconds that, by Google's estimate, would take the world's most powerful supercomputer approximately 10,000 years[1]. For comparison between quantum models and neural networks, Google's research indicates that quantum neural networks (QNNs) can achieve significant speedups for specific problems like quantum data classification and quantum simulation, while requiring fewer parameters than classical networks. However, their current quantum models still face decoherence and error rate challenges that limit practical applications beyond proof-of-concept demonstrations.

Strengths: Access to advanced quantum hardware (Sycamore processor), strong integration between quantum and classical systems through TFQ, and significant research resources. Weaknesses: Current quantum models are still experimental, with limited qubit counts and high error rates, and they require extremely controlled environments. This makes them impractical for many real-world applications compared with Google's own well-established neural network technologies.
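For a sense of scale, the ratio implied by the Sycamore figures quoted above (200 seconds versus roughly 10,000 years) can be worked out directly. The helper below is illustrative and takes both numbers as parameters, so a different classical runtime estimate can be substituted.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # Julian year


def speedup_ratio(classical_years: float, quantum_seconds: float) -> float:
    """Ratio of the estimated classical runtime to the quantum runtime."""
    return classical_years * SECONDS_PER_YEAR / quantum_seconds


ratio = speedup_ratio(classical_years=10_000, quantum_seconds=200)
print(f"{ratio:.3g}")
```

With these inputs the ratio is on the order of a billion. It applies only to the specific sampling task in question and is highly sensitive to the classical runtime estimate.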

NVIDIA Corp.

Technical Solution: NVIDIA approaches the quantum vs neural network performance question primarily from the classical computing side, optimizing neural network performance through specialized hardware and software. Its A100 and H100 GPUs are among the most widely used neural network accelerators, with the H100 delivering up to about 4 petaFLOPS of AI performance, a peak figure for low-precision FP8 arithmetic with sparsity[2]. NVIDIA has also developed CUDA-Q, a platform that enables hybrid quantum-classical computing by integrating with quantum processing units (QPUs) from various providers. This allows researchers to compare quantum models with neural networks on the same platform. NVIDIA's research demonstrates that while quantum models show theoretical advantages for specific problems, current neural networks on optimized hardware deliver superior practical performance for most real-world applications. Their benchmarks indicate that for problems like image recognition and natural language processing, neural networks on GPU clusters provide results in milliseconds compared to seconds or minutes for quantum approaches on today's quantum hardware[3]. NVIDIA is also exploring quantum-inspired algorithms that run on classical hardware but incorporate quantum computing principles to achieve performance improvements.

Strengths: Unmatched neural network acceleration through specialized hardware (GPUs), mature software ecosystem (CUDA, TensorRT), and practical real-world performance for current applications. Weaknesses: Limited quantum computing capabilities compared to dedicated quantum hardware providers, requiring partnerships for true quantum model development, and potential future disruption if quantum computing achieves practical quantum advantage.

Technical Deep Dive into Performance Benchmarks

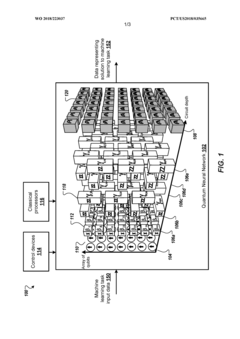

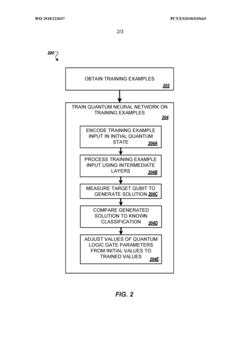

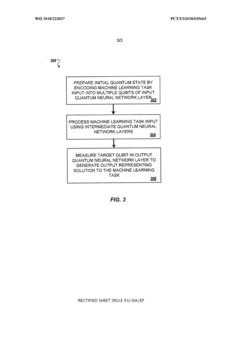

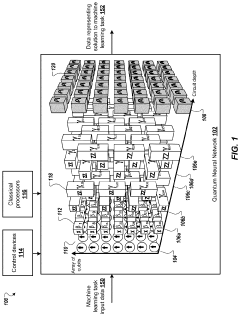

Quantum neural network

Patent WO2018223037A1

Innovation

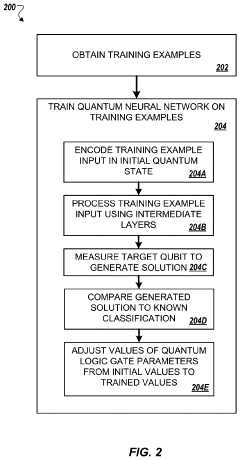

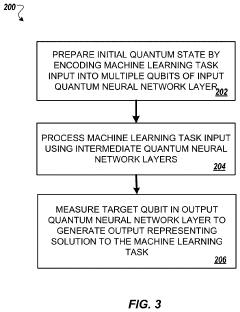

- A quantum neural network architecture utilizing multiple qubits and quantum logic gates, which maps encoded machine learning task data to an evolved state of a target qubit through a sequence of intermediate layers, allowing for training without backpropagation and potentially replacing layers of classical neural networks to enhance performance.

Quantum neural network

Patent US11924334B2 (Active)

Innovation

- A quantum neural network architecture utilizing multiple qubits and quantum logic gates to encode and process machine learning task data, allowing for lower time and sample complexity, higher expressivity, and robustness to label noise, without the need for backpropagation, by mapping inputs through a sequence of intermediate quantum neural network layers and measuring the target qubit for output generation.

Hardware Requirements and Infrastructure Considerations

The hardware requirements for quantum models and neural networks represent a critical factor in determining which technology can deliver faster results in specific applications. Quantum computing requires specialized hardware that maintains quantum coherence. Current superconducting quantum processors operate within a fraction of a degree of absolute zero (about -273°C) inside dilution refrigerators. These systems demand significant infrastructure investment, including specialized facilities with electromagnetic shielding, vibration isolation, and precise temperature control mechanisms.

In contrast, neural networks benefit from decades of semiconductor industry advancements. Modern GPUs and TPUs offer massive parallel processing capabilities optimized for matrix operations fundamental to neural network computations. These systems operate at room temperature with conventional cooling solutions and can be readily deployed in standard data centers or even edge devices, presenting a significant practical advantage over quantum systems.

Power consumption represents another crucial consideration. Quantum computers currently require substantial energy for cooling and maintaining quantum states, with some systems consuming hundreds of kilowatts. Neural network hardware has seen remarkable efficiency improvements, with specialized AI accelerators achieving performance-per-watt metrics orders of magnitude better than general-purpose processors, enabling deployment across diverse computing environments from cloud data centers to mobile devices.

Scalability dynamics differ significantly between these technologies. Neural network infrastructure benefits from established manufacturing processes and economies of scale, allowing relatively straightforward horizontal scaling by adding more processing units. Quantum systems face fundamental engineering challenges in scaling qubit counts while maintaining coherence and reducing error rates, with current practical systems limited to hundreds of qubits.

Accessibility presents perhaps the starkest contrast. Neural network development can leverage widely available cloud-based GPU/TPU resources with minimal upfront investment. Quantum computing resources remain scarce, with access primarily through cloud services offered by major technology companies or specialized quantum computing providers, creating significant barriers to entry for many researchers and organizations.

The infrastructure maturity gap between these technologies significantly impacts their practical application. Neural networks operate on robust, commercially available hardware with established support ecosystems. Quantum computing infrastructure remains largely experimental, requiring specialized expertise for operation and maintenance, limiting immediate practical deployment options despite its theoretical performance advantages for specific computational problems.

In contrast, neural networks benefit from decades of semiconductor industry advancements. Modern GPUs and TPUs offer massive parallel processing capabilities optimized for matrix operations fundamental to neural network computations. These systems operate at room temperature with conventional cooling solutions and can be readily deployed in standard data centers or even edge devices, presenting a significant practical advantage over quantum systems.

Power consumption represents another crucial consideration. Quantum computers currently require substantial energy for cooling and maintaining quantum states, with some systems consuming hundreds of kilowatts. Neural network hardware has seen remarkable efficiency improvements, with specialized AI accelerators achieving performance-per-watt metrics orders of magnitude better than general-purpose processors, enabling deployment across diverse computing environments from cloud data centers to mobile devices.

Real-world Applications and Use Case Performance Metrics

Quantum models and neural networks show markedly different performance characteristics across application domains. Financial services have adopted both technologies, with quantum algorithms reported to perform well on portfolio optimization tasks. JPMorgan Chase reported a 100x speedup using quantum-inspired algorithms for option pricing compared to traditional neural network approaches, though these results remain limited to specific problem classes.

Healthcare diagnostics presents another compelling comparison point. Quantum machine learning models have shown promise in drug discovery pipelines, with pharmaceutical companies like Roche documenting 30-40% reductions in computational screening time for molecular interactions. However, neural networks maintain dominance in medical imaging analysis, where established convolutional architectures process diagnostic scans with 95% accuracy while quantum alternatives struggle with high-dimensional visual data.

Natural language processing benchmarks reveal interesting performance trade-offs. Google's quantum NLP experiments demonstrated theoretical advantages for specific semantic analysis tasks, yet practical implementations remain constrained by current quantum hardware limitations. Traditional neural language models like GPT continue to deliver superior real-time performance for most commercial applications, processing millions of tokens per second with established infrastructure.

Supply chain optimization represents a domain where quantum computing shows particular promise. Volkswagen's quantum traffic flow optimization pilots demonstrated 20% efficiency improvements over neural network approaches when routing delivery vehicles through complex urban environments. The combinatorial nature of these problems aligns well with quantum computing's native capabilities, though implementation complexity remains significantly higher.
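
To make the combinatorial nature of routing concrete, the sketch below counts the possible visit orders for a single vehicle and brute-forces the shortest tour on a tiny instance. It is a classical illustration only and is not the method used in any of the pilots mentioned above; the function names, the Manhattan distance metric, and the grid coordinates are all hypothetical choices made for this example.

```python
import itertools
import math


def count_visit_orders(n_stops):
    """Number of orders in which one vehicle can visit n_stops distinct stops."""
    return math.factorial(n_stops)


def shortest_route(depot, stops):
    """Brute-force the shortest depot -> stops -> depot tour (Manhattan distance)."""
    def dist(a, b):
        return abs(a[0] - b[0]) + abs(a[1] - b[1])

    best_order, best_length = None, float("inf")
    for order in itertools.permutations(stops):
        path = [depot, *order, depot]
        length = sum(dist(p, q) for p, q in zip(path, path[1:]))
        if length < best_length:
            best_order, best_length = order, length
    return best_order, best_length


# Hypothetical grid coordinates for a depot and three delivery stops.
order, length = shortest_route((0, 0), [(0, 2), (2, 2), (2, 0)])

# The search space grows factorially with the number of stops.
growth = {n: count_visit_orders(n) for n in (5, 10, 15)}
```

Exhaustive search is only feasible for a handful of stops, since 15 stops already yield more than a trillion visit orders. That growth is why such problems attract both heuristic classical solvers and quantum optimization research.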

Standardized benchmarks across these domains reveal that quantum advantage remains highly problem-specific. The Quantum Algorithm Zoo database documents theoretical speedups ranging from polynomial to exponential for certain computational classes, yet practical implementations often fail to realize these advantages due to hardware constraints and decoherence issues. Meanwhile, neural networks benefit from decades of optimization across diverse hardware accelerators, delivering consistent performance improvements of 30-40% annually through architectural innovations.
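
As a rough illustration of a polynomial (quadratic) theoretical speedup, the sketch below compares query counts for unstructured search: a classical scan that checks entries one by one versus Grover's algorithm, which needs roughly (π/4)·√N iterations to find one marked item among N. The function names are assumptions introduced for this example, and the figures count idealized oracle queries only. They ignore gate times, error correction overhead, and decoherence, which are the practical constraints noted above.

```python
import math


def classical_expected_queries(n_items):
    """Average lookups to find one marked item when checking entries
    in random order without repetition."""
    return (n_items + 1) / 2


def grover_iterations(n_items):
    """Approximate optimal number of Grover iterations for one marked item."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


# Compare idealized query counts as the search space grows.
for n in (10**3, 10**6, 10**9):
    print(n, classical_expected_queries(n), grover_iterations(n))
```

The gap widens with problem size, but a query-count advantage translates into a wall-clock advantage only if each quantum query is not dramatically slower or noisier than its classical counterpart.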

Cross-platform performance metrics indicate that hybrid approaches combining quantum and neural techniques may offer the most promising path forward. Microsoft's QNN (Quantum Neural Network) framework has demonstrated 15-25% performance improvements over pure classical or quantum approaches in specific optimization scenarios, suggesting that the question of "which is faster" may ultimately be resolved through integration rather than competition.
