Exploring brain-inspired algorithms with neuromorphic materials

SEP 19, 2025 · 9 MIN READ

Neuromorphic Computing Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This field emerged in the late 1980s when Carver Mead introduced the concept of using electronic circuits to mimic neurobiological architectures. Over the past three decades, neuromorphic computing has evolved from theoretical frameworks to practical implementations, driven by the limitations of traditional von Neumann architectures in handling complex cognitive tasks and the increasing demand for energy-efficient computing solutions.

The evolution of neuromorphic computing has been characterized by several key developments, including the creation of specialized hardware like IBM's TrueNorth and Intel's Loihi chips, which implement spiking neural networks (SNNs) in silicon. More recently, the integration of novel neuromorphic materials has opened new frontiers in this field, enabling more accurate emulation of neural dynamics and synaptic plasticity.

The fundamental objective of neuromorphic computing is to develop computational systems that process information in a manner analogous to biological neural networks, leveraging principles such as parallelism, event-driven processing, and local learning. This approach aims to overcome the energy and performance bottlenecks associated with conventional computing architectures, particularly for applications involving pattern recognition, sensory processing, and adaptive learning.

In the context of neuromorphic materials, the technical goals extend beyond silicon-based implementations to explore materials with inherent properties that naturally emulate neural functions. These include phase-change materials, memristive devices, spintronic elements, and organic electronic materials. The integration of these materials into neuromorphic systems aims to achieve higher energy efficiency, enhanced computational density, and more biologically realistic neural dynamics.

The current technical trajectory is moving toward the development of hybrid systems that combine traditional CMOS technology with novel neuromorphic materials to create more efficient and capable brain-inspired computing platforms. This approach seeks to balance the maturity and reliability of established semiconductor technologies with the unique capabilities offered by emerging neuromorphic materials.

Looking forward, the field is progressing toward more sophisticated brain-inspired algorithms that can leverage the unique properties of neuromorphic materials to implement complex cognitive functions such as unsupervised learning, temporal sequence processing, and context-dependent adaptation. The ultimate vision is to create computing systems that not only mimic the brain's architecture but also capture its remarkable efficiency and adaptability in solving complex real-world problems.

Market Analysis for Brain-Inspired Computing Solutions

The brain-inspired computing market is experiencing unprecedented growth, driven by the convergence of neuromorphic materials research and algorithmic innovations. Current market valuations place this sector at approximately $2.5 billion, with projections indicating a compound annual growth rate of 24% through 2030, potentially reaching $14.3 billion by decade's end. This growth trajectory significantly outpaces traditional computing segments, reflecting the transformative potential of neuromorphic technologies.
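As a quick sanity check on the growth figures above, the compounding arithmetic can be sketched as follows. The text does not state the base year for the $2.5 billion valuation, so the sketch solves for the number of years the quoted numbers imply rather than assuming one; the function names are illustrative only.

```python
import math

def project_value(start_value, annual_rate, years):
    """Compound a starting value at a fixed annual growth rate."""
    return start_value * (1.0 + annual_rate) ** years

def years_to_reach(start_value, target_value, annual_rate):
    """Years of compounding needed to grow start_value into target_value."""
    return math.log(target_value / start_value) / math.log(1.0 + annual_rate)

# Figures quoted in the text, in billions of US dollars.
START, TARGET, CAGR = 2.5, 14.3, 0.24

implied_years = years_to_reach(START, TARGET, CAGR)
value_after_5 = project_value(START, CAGR, 5)

print(f"Years of 24% growth implied by the quoted figures: {implied_years:.1f}")
print(f"Value after 5 years of 24% growth: ${value_after_5:.1f}B")
```

Under these figures, growing from $2.5 billion to $14.3 billion at 24% per year takes roughly eight years of compounding, so the projection is consistent only if the base valuation predates the article by a few years.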

Demand is primarily concentrated in four key sectors: advanced AI applications, edge computing devices, autonomous systems, and scientific research infrastructure. Financial services and healthcare organizations have emerged as early adopters, implementing neuromorphic solutions for pattern recognition and anomaly detection with reported efficiency improvements of 30-40% compared to conventional computing architectures.

The market landscape reveals distinct regional dynamics, with North America currently commanding 42% of market share due to substantial research investments and strong venture capital presence. Asia-Pacific represents the fastest-growing region at 29% annual growth, driven by aggressive government initiatives in China, Japan, and South Korea specifically targeting neuromorphic computing development.

Customer requirements are evolving rapidly, with enterprise surveys indicating three primary demand drivers: energy efficiency (cited by 78% of potential adopters), real-time processing capabilities (65%), and reduced computational complexity for specific AI workloads (61%). The energy efficiency advantage is particularly compelling, with neuromorphic systems demonstrating power consumption reductions of 90-95% compared to GPU-based solutions for certain neural network operations.

Market barriers remain significant despite this promising outlook. High implementation costs, limited standardization across neuromorphic platforms, and the specialized expertise required for effective deployment represent substantial adoption challenges. Additionally, the ecosystem remains fragmented, with hardware and software solutions often developing independently rather than as integrated systems.

Investment patterns reveal growing confidence in the sector's commercial viability. Venture capital funding for neuromorphic computing startups reached $1.2 billion in 2022, a 35% increase from the previous year. Strategic acquisitions by technology conglomerates have accelerated, with 14 significant transactions completed in the past 24 months as established players seek to secure intellectual property and specialized talent in this emerging field.

Current Neuromorphic Materials Technology Landscape

The neuromorphic materials landscape has evolved significantly over the past decade, with several key materials emerging as frontrunners in brain-inspired computing implementations. Silicon-based complementary metal-oxide-semiconductor (CMOS) technologies currently dominate commercial neuromorphic hardware, exemplified by IBM's TrueNorth and Intel's Loihi chips. These platforms leverage traditional semiconductor fabrication processes while implementing novel architectures that mimic neural networks.

Beyond silicon, memristive materials represent a revolutionary approach to neuromorphic computing. These include metal oxides (HfO₂, TiO₂), phase-change materials (Ge₂Sb₂Te₅), and conductive-bridge systems that exhibit non-volatile resistance changes analogous to synaptic plasticity. These materials enable analog computation and memory functions within the same physical structure, addressing the von Neumann bottleneck that plagues conventional computing architectures.
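The idea of computing and storing in the same physical structure can be illustrated with an idealized memristive crossbar. In this minimal sketch, each device conductance stores a weight, input voltages are applied to the rows, and each column current is the weighted sum given by Ohm's law and Kirchhoff's current law. The conductance and voltage values are hypothetical, and non-idealities such as wire resistance, device variability, and sneak paths are ignored.

```python
def crossbar_output_currents(conductances, input_voltages):
    """Ideal crossbar read: column current I_j = sum_i V_i * G[i][j].

    conductances   -- list of rows, each a list of device conductances (siemens)
    input_voltages -- one read voltage per row (volts)
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(input_voltages) != n_rows:
        raise ValueError("one input voltage per crossbar row is required")

    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law per device; Kirchhoff's current law sums along the column.
            currents[j] += input_voltages[i] * conductances[i][j]
    return currents

# Hypothetical 3x2 array of stored conductances (siemens) and read voltages (volts).
G = [[1e-6, 5e-6],
     [2e-6, 1e-6],
     [4e-6, 3e-6]]
V = [0.2, 0.0, 0.1]

I = crossbar_output_currents(G, V)
print(I)  # one current (amperes) per column
```

The whole vector-matrix product is read out in a single step, with no weight fetched from a separate memory, which is the sense in which such arrays sidestep the von Neumann bottleneck.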

Organic and polymer-based neuromorphic materials have gained traction for their flexibility, biocompatibility, and potential for low-cost manufacturing. Conductive polymers like PEDOT:PSS and organic semiconductors demonstrate synaptic-like behaviors while offering advantages for soft, flexible electronics and biomedical interfaces. These materials show particular promise for edge computing applications where power efficiency and form factor are critical considerations.

Two-dimensional materials, including graphene and transition metal dichalcogenides (TMDs), represent another frontier in neuromorphic materials. Their atomic-scale thickness enables unprecedented device density while their unique electronic properties facilitate novel computational paradigms. Graphene-based synaptic transistors have demonstrated exceptional switching speeds and energy efficiency compared to conventional technologies.

Spintronic materials leverage electron spin rather than charge for information processing, offering potential advantages in energy efficiency. Magnetic tunnel junctions and skyrmion-based devices have demonstrated neuron-like threshold behaviors and synaptic plasticity, though challenges in fabrication consistency and operational stability remain significant barriers to widespread adoption.

Ferroelectric materials such as hafnium zirconium oxide (HZO) have emerged as promising candidates for neuromorphic applications due to their non-volatile polarization states and CMOS compatibility. These materials enable efficient implementation of synaptic weight storage with relatively simple device structures and manufacturing processes.

The integration of these diverse material platforms with conventional CMOS technology represents a significant challenge but also an opportunity for hybrid systems that leverage the strengths of each approach. Current research focuses on addressing issues of scalability, reliability, and energy efficiency while developing programming algorithms that can effectively utilize the unique properties of neuromorphic materials.

Current Neuromorphic Implementation Approaches

01 Neuromorphic computing architectures

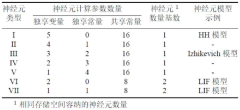

Neuromorphic computing architectures mimic the structure and function of the human brain to enable more efficient processing of complex data. These architectures incorporate brain-inspired algorithms that can learn from experience and adapt to new situations. By using specialized hardware designs that replicate neural networks, these systems can achieve significant improvements in energy efficiency and processing speed compared to traditional computing approaches.

- Neuromorphic computing architectures: Neuromorphic computing architectures mimic the structure and function of the human brain to enable more efficient processing of complex data. These architectures incorporate brain-inspired algorithms that can learn from experience and adapt to new situations, similar to biological neural networks. By implementing these architectures with specialized neuromorphic materials, systems can achieve higher energy efficiency and computational power compared to traditional computing paradigms.

- Memristive devices for neural networks: Memristive devices are key components in neuromorphic computing systems that can mimic synaptic behavior. These devices can change their resistance based on the history of applied voltage or current, similar to how biological synapses change their strength. By incorporating these devices into neural network implementations, systems can achieve more efficient learning and memory capabilities. Memristive materials enable the development of hardware that can directly implement brain-inspired algorithms with lower power consumption.

- Spiking neural networks implementation: Spiking neural networks (SNNs) more closely resemble biological neural networks by using discrete spikes for information transmission rather than continuous values. These networks process information in a temporal manner, similar to the brain, allowing for more efficient processing of time-series data. Implementing SNNs with neuromorphic materials enables systems that can perform complex pattern recognition tasks with significantly reduced power consumption compared to traditional artificial neural networks.

- Phase-change materials for neuromorphic computing: Phase-change materials offer unique properties that make them suitable for implementing brain-inspired algorithms in hardware. These materials can rapidly switch between amorphous and crystalline states, providing a mechanism for storing and processing information similar to biological synapses. By utilizing phase-change materials in neuromorphic systems, researchers have developed devices that can perform both memory and computational functions, enabling more efficient implementation of neural network architectures.

- Self-learning and adaptive neuromorphic systems: Self-learning neuromorphic systems can adapt and evolve their functionality based on input data without explicit programming. These systems incorporate brain-inspired algorithms that enable continuous learning and adaptation to changing environments. By implementing these algorithms with specialized neuromorphic materials, systems can achieve on-chip learning capabilities that mimic the brain's plasticity. This approach enables the development of autonomous systems that can improve their performance over time through experience.
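The spike-based, event-driven behavior described in the spiking neural network item above can be sketched with a leaky integrate-and-fire (LIF) neuron, one of the simplest spiking models. This is a minimal illustration, not the model used by any particular chip; the time constant, threshold, and input values are arbitrary assumptions in dimensionless units.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron.

    Returns (spike_steps, trace): the time steps at which the neuron fired
    and the membrane potential recorded after each step.
    """
    v = v_rest
    spike_steps, trace = [], []
    for step, i_in in enumerate(input_current):
        # Forward-Euler step of dv/dt = (-(v - v_rest) + i_in) / tau
        v += dt * (-(v - v_rest) + i_in) / tau
        if v >= v_thresh:
            # Emit a discrete spike and reset the membrane potential.
            spike_steps.append(step)
            v = v_reset
        trace.append(v)
    return spike_steps, trace

# A weak input never reaches threshold; a strong input fires periodically.
weak_spikes, _ = simulate_lif([0.5] * 50)
strong_spikes, _ = simulate_lif([2.0] * 50)
print("weak input spikes:  ", weak_spikes)
print("strong input spikes:", strong_spikes)
```

The neuron produces output only when its accumulated input crosses the threshold, so a quiet input generates no events at all. That sparsity is what spike-based hardware exploits to save power.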

02 Materials for neuromorphic devices

Advanced materials play a crucial role in developing neuromorphic devices that can effectively implement brain-inspired algorithms. These materials include phase-change materials, memristive compounds, and other novel substances that can mimic synaptic behavior. The unique electrical, magnetic, or optical properties of these materials allow for the creation of artificial neurons and synapses that can change their properties based on past inputs, enabling learning and memory functions similar to biological neural systems.

03 Spiking neural networks implementation

Spiking neural networks (SNNs) represent a more biologically realistic approach to neural network implementation, where information is transmitted through discrete spikes rather than continuous values. These networks can be implemented using neuromorphic materials that naturally support spike-based computation. SNNs offer advantages in terms of energy efficiency and temporal information processing, making them suitable for real-time applications such as sensor processing, pattern recognition, and autonomous systems.

04 Learning algorithms for neuromorphic systems

Specialized learning algorithms have been developed for neuromorphic systems that take advantage of the unique properties of neuromorphic materials. These include spike-timing-dependent plasticity (STDP), reinforcement learning adapted for spiking networks, and other bio-inspired learning mechanisms. These algorithms enable neuromorphic systems to learn from data streams in an online, adaptive manner, similar to how biological brains learn from experience, and can operate with minimal power consumption compared to traditional deep learning approaches.

05 Applications of neuromorphic computing

Neuromorphic computing systems find applications across various domains including edge computing, autonomous vehicles, robotics, and medical devices. These applications leverage the energy efficiency, real-time processing capabilities, and adaptive learning features of neuromorphic systems. By implementing brain-inspired algorithms on specialized neuromorphic hardware, these applications can perform complex tasks such as pattern recognition, anomaly detection, and decision-making with significantly lower power requirements than conventional computing approaches.
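Of the learning mechanisms listed above, spike-timing-dependent plasticity (STDP) is the easiest to show in a few lines. The sketch below uses the common pair-based form with exponential timing windows: a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike and weakened otherwise. The amplitudes, time constants, and weight bounds are illustrative assumptions, and real devices implement the update through their own conductance dynamics rather than this exact formula.

```python
import math

def stdp_weight_change(delta_t, a_plus=0.01, a_minus=0.012,
                       tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP update for delta_t = t_post - t_pre (milliseconds)."""
    if delta_t > 0:
        # Pre fires before post: potentiation, decaying with the time gap.
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        # Post fires before pre: depression, decaying with the time gap.
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0

def apply_stdp(weight, pre_spike_times, post_spike_times,
               w_min=0.0, w_max=1.0):
    """Accumulate STDP updates over all spike pairs, then clip the weight."""
    for t_pre in pre_spike_times:
        for t_post in post_spike_times:
            weight += stdp_weight_change(t_post - t_pre)
    return min(w_max, max(w_min, weight))

# Causal pairing (pre at 10 ms, post at 15 ms) strengthens the synapse;
# the reverse order weakens it.
print(apply_stdp(0.5, [10.0], [15.0]))
print(apply_stdp(0.5, [15.0], [10.0]))
```

The rule is local, needing only the spike times of the two neurons a synapse connects, which is why it maps naturally onto memristive synapses that update in place.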

Leading Organizations in Neuromorphic Computing Research

The neuromorphic materials and brain-inspired algorithms field is currently in an early growth phase, characterized by significant research momentum but limited commercial deployment. The global market is projected to reach approximately $2-3 billion by 2025, with annual growth rates exceeding 20%. IBM leads the technological landscape with its TrueNorth and subsequent neuromorphic architectures, while Samsung, Intel, and SK hynix are advancing hardware implementations. Academic institutions like KAIST, Peking University, and the University of California system are driving fundamental research breakthroughs. Chinese entities including Tianjin University and Beijing Lingxi Technology are rapidly closing the innovation gap. The technology remains at technology readiness level (TRL) 4-6, with IBM, Intel, and Syntiant closest to commercial-scale applications, though significant challenges in scalability and energy efficiency persist.

International Business Machines Corp.

Technical Solution: IBM has pioneered neuromorphic computing through its TrueNorth and subsequent Brain-inspired Neural Networks (BNNs) architectures. Their approach integrates brain-inspired algorithms with specialized hardware to create energy-efficient cognitive systems. IBM's TrueNorth chip contains 5.4 billion transistors organized into 4,096 neurosynaptic cores, creating a network of 1 million digital neurons and 256 million synapses[1]. The company has further advanced this technology with their analog AI hardware that leverages phase-change memory (PCM) materials to perform neural network computations directly in memory, dramatically reducing energy consumption. Their neuromorphic systems implement spike-timing-dependent plasticity (STDP) learning algorithms and utilize memristive materials that can mimic biological synaptic behavior[3]. IBM's recent developments include three-dimensional integration of memory and processing elements to further enhance neural density and computational efficiency for brain-inspired computing paradigms.

Strengths: Industry-leading integration of hardware and algorithms specifically designed for neuromorphic computing; exceptional energy efficiency (TrueNorth operates at ~70mW, orders of magnitude more efficient than conventional architectures); mature ecosystem with programming tools and applications. Weaknesses: Specialized programming requirements create adoption barriers; limited compatibility with existing AI frameworks; higher initial implementation costs compared to conventional computing solutions.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed advanced neuromorphic computing solutions centered around their proprietary resistive random-access memory (RRAM) and magnetoresistive random-access memory (MRAM) technologies. Their approach integrates these memory technologies directly into neuromorphic processing architectures to enable efficient brain-inspired computing. Samsung's neuromorphic chips utilize a crossbar array structure where memristive devices act as artificial synapses, allowing for massively parallel processing similar to biological neural networks[5]. The company has demonstrated systems capable of implementing spike-timing-dependent plasticity (STDP) learning rules and spiking neural networks (SNNs) directly in hardware. Their neuromorphic materials research focuses on developing oxide-based memristors with multi-level resistance states that can more accurately mimic biological synaptic behavior. Samsung has achieved significant breakthroughs in reducing the energy consumption of neuromorphic operations to picojoule levels per synaptic event, approaching the efficiency of biological systems[7].

Strengths: Vertical integration of memory manufacturing expertise with neuromorphic architecture design; advanced materials science capabilities for developing specialized memristive devices; strong commercialization pathway through existing semiconductor business. Weaknesses: Less mature software ecosystem compared to some competitors; current implementations still face challenges with device variability and reliability in large-scale deployments.

Key Innovations in Neuromorphic Materials Science

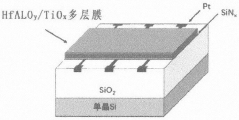

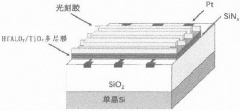

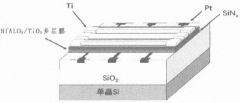

High-consistency crosstalk-free HfAlOy/TiOx multilayer film neuron array and preparation method thereof

Patent (pending): CN116490057A

Innovation

- A highly consistent HfAlOy/TiOx multilayer neuron device array is built by forming a Pt lower electrode, aluminum contacts embedded in silicon nitride, and a HfAlOy/TiOx multilayer resistive layer on a silicon substrate. Oxygen vacancy channels in the multilayer structure control the resistance change and avoid crosstalk.

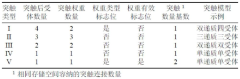

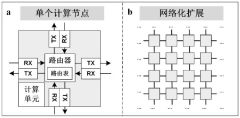

Multi-granularity circuit reconstruction and mapping method for large-scale brain-like calculation

Patent (pending): CN117195981A

Innovation

- A multi-granularity circuit reconstruction and mapping method is used. Coarse-grained model reconfiguration changes the configuration and storage format at the data-flow level to reconfigure the neuron, synapse, and network connection models. Fine-grained hardware reconfiguration at the logic-gate level allows the calculation accuracy and performance indicators to be reconfigured and the system scale to be expanded.

Energy Efficiency Considerations in Neuromorphic Systems

Energy efficiency represents a critical consideration in the development and implementation of neuromorphic systems. Traditional von Neumann computing architectures face significant energy constraints when processing complex neural network operations, consuming substantial power due to the physical separation between memory and processing units. In contrast, neuromorphic systems inspired by the brain's architecture offer promising pathways toward dramatically improved energy efficiency, potentially achieving orders of magnitude reduction in power consumption for comparable computational tasks.

The human brain serves as the gold standard for energy-efficient computing, operating on approximately 20 watts while performing complex cognitive functions. This remarkable efficiency stems from several biological mechanisms: co-location of memory and processing, sparse temporal activation patterns, and event-driven computation. Neuromorphic materials and architectures attempt to replicate these principles through specialized hardware designs that minimize energy consumption while maintaining computational capabilities.

Current neuromorphic implementations utilize various approaches to achieve energy efficiency. Memristive devices, including phase-change memory (PCM), resistive RAM (RRAM), and magnetic RAM (MRAM), enable in-memory computing by storing synaptic weights directly in the physical properties of materials. These devices significantly reduce energy consumption by eliminating the need for constant data movement between separate memory and processing units. Spike-based processing further enhances efficiency by transmitting information only when necessary, similar to biological neurons that fire only when input stimuli reach certain thresholds.

Power analysis of existing neuromorphic systems reveals impressive efficiency metrics. IBM's TrueNorth architecture achieves approximately 26 pJ per synaptic operation, while Intel's Loihi demonstrates 23 pJ per synaptic event. These figures represent substantial improvements over GPU implementations of neural networks, which typically require hundreds of picojoules per operation. However, challenges remain in scaling these technologies while maintaining their energy advantages.
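To put the per-event figures above in context, average power is simply energy per event multiplied by the event rate. The sketch below applies that relation to the quoted TrueNorth and Loihi numbers. The GPU entry of 300 pJ is a hypothetical stand-in for "hundreds of picojoules", and the event rate is an arbitrary assumption; a GPU operation and a synaptic event are also not strictly the same unit of work, so the ratio is indicative only.

```python
PICOJOULE = 1e-12  # joules

# Energy per synaptic event. The first two values are quoted in the text;
# the GPU value is an assumed placeholder for "hundreds of picojoules".
ENERGY_PER_EVENT_J = {
    "TrueNorth": 26 * PICOJOULE,
    "Loihi": 23 * PICOJOULE,
    "GPU (assumed)": 300 * PICOJOULE,
}

def average_power_watts(energy_per_event_j, events_per_second):
    """Average power drawn by synaptic events alone (excludes static power)."""
    return energy_per_event_j * events_per_second

def workload_energy_joules(energy_per_event_j, n_events):
    """Total energy for a workload consisting of n_events synaptic events."""
    return energy_per_event_j * n_events

EVENT_RATE = 1e9  # assumed: one billion synaptic events per second

for name, energy in ENERGY_PER_EVENT_J.items():
    power_mw = average_power_watts(energy, EVENT_RATE) * 1e3
    print(f"{name:14s} {power_mw:7.1f} mW at {EVENT_RATE:.0e} events/s")
```

Because spiking hardware only spends this energy when an event occurs, sparse activity lowers the event rate and the dynamic power falls with it, which is the practical payoff of event-driven operation.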

Material innovations continue to drive improvements in neuromorphic energy efficiency. Emerging two-dimensional materials like graphene and transition metal dichalcogenides (TMDs) show promise for ultra-low power synaptic devices. Additionally, photonic neuromorphic systems leverage light for computation, potentially offering even greater energy savings through reduced heat generation and faster signal propagation.

The path toward commercially viable neuromorphic systems requires addressing several energy-related challenges. These include optimizing peripheral circuitry that often dominates power consumption, developing efficient programming paradigms that leverage the unique characteristics of neuromorphic hardware, and creating standardized benchmarking methodologies that accurately reflect real-world energy efficiency under various computational workloads.

The human brain serves as the gold standard for energy-efficient computing, operating on approximately 20 watts while performing complex cognitive functions. This remarkable efficiency stems from several biological mechanisms: co-location of memory and processing, sparse temporal activation patterns, and event-driven computation. Neuromorphic materials and architectures attempt to replicate these principles through specialized hardware designs that minimize energy consumption while maintaining computational capabilities.

Current neuromorphic implementations utilize various approaches to achieve energy efficiency. Memristive devices, including phase-change memory (PCM), resistive RAM (RRAM), and magnetic RAM (MRAM), enable in-memory computing by storing synaptic weights directly in the physical properties of materials. These devices significantly reduce energy consumption by eliminating the need for constant data movement between separate memory and processing units. Spike-based processing further enhances efficiency by transmitting information only when necessary, similar to biological neurons that fire only when input stimuli reach certain thresholds.

Power analysis of existing neuromorphic systems reveals impressive efficiency metrics. IBM's TrueNorth architecture achieves approximately 26 pJ per synaptic operation, while Intel's Loihi demonstrates 23 pJ per synaptic event. These figures represent substantial improvements over GPU implementations of neural networks, which typically require hundreds of picojoules per operation. However, challenges remain in scaling these technologies while maintaining their energy advantages.

Material innovations continue to drive improvements in neuromorphic energy efficiency. Emerging two-dimensional materials like graphene and transition metal dichalcogenides (TMDs) show promise for ultra-low power synaptic devices. Additionally, photonic neuromorphic systems leverage light for computation, potentially offering even greater energy savings through reduced heat generation and faster signal propagation.

Interdisciplinary Collaboration Opportunities

The convergence of neuroscience, materials science, computer science, and engineering creates unprecedented opportunities for interdisciplinary collaboration in neuromorphic computing. Research institutions worldwide are establishing dedicated centers that bring together experts from diverse fields to tackle the complex challenges of brain-inspired computing. The Neuromorphic Computing Research Initiative at Stanford University exemplifies this approach, uniting neuroscientists, materials engineers, and computer architects to develop novel neuromorphic systems that more accurately mimic brain functions.

Industry-academia partnerships represent another crucial collaboration model. Companies like IBM, Intel, and Samsung are actively collaborating with universities to bridge the gap between fundamental research and commercial applications. These partnerships facilitate knowledge transfer and accelerate the development of practical neuromorphic solutions. For instance, IBM's TrueNorth project involves collaboration with multiple universities to advance neuromorphic chip design and implementation.

Cross-disciplinary funding initiatives have acted as catalysts for collaboration. The European Union's Human Brain Project and DARPA's SyNAPSE program allocated substantial resources to support collaborative research in neuromorphic computing. These programs encouraged scientists from different disciplines to work together on shared objectives, fostering innovation through diverse perspectives and complementary expertise.

International research networks are forming to address global challenges in neuromorphic computing. The International Neuromorphic Engineering Workshop brings together researchers from across continents to share insights and establish collaborative projects. These networks enable the pooling of resources, knowledge sharing, and coordinated research efforts that transcend geographical boundaries.

Educational institutions are developing interdisciplinary curricula that prepare students for careers at the intersection of neuroscience and computing. Programs that combine neurobiology, materials science, and computer engineering are training the next generation of researchers equipped to advance neuromorphic technologies. These educational initiatives create a pipeline of talent capable of working across traditional disciplinary boundaries.

Open-source communities are emerging as platforms for collaborative development of neuromorphic algorithms and hardware designs. Projects like Nengo and PyNN provide frameworks for researchers to share code, models, and results, fostering a collaborative ecosystem that accelerates innovation. These communities enable researchers from different backgrounds to contribute their expertise to shared challenges in neuromorphic computing.
