How neuromorphic materials enhance neural network processing

SEP 19, 2025 | 9 MIN READ

Neuromorphic Materials Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. The evolution of this field began in the late 1980s with Carver Mead's pioneering work, which proposed using analog circuits to mimic neurobiological architectures. Since then, neuromorphic engineering has expanded significantly, particularly in the last decade, as traditional von Neumann computing architectures face increasing limitations in processing efficiency and power consumption when handling complex neural network operations.

The fundamental premise of neuromorphic materials lies in their ability to emulate synaptic plasticity and neural dynamics at the hardware level. Unlike conventional computing systems that separate memory and processing units, neuromorphic materials integrate these functions, enabling in-memory computing that significantly reduces energy consumption and latency issues associated with the memory bottleneck in traditional architectures.

Current technological trends indicate a convergence of material science, electrical engineering, and neuroscience in developing novel neuromorphic materials. These include memristive devices, phase-change materials, spintronic elements, and organic electronic materials that can mimic neural functions such as spike-timing-dependent plasticity (STDP) and long-term potentiation/depression (LTP/LTD).
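As an illustration of the plasticity rule named above, here is a minimal sketch of a pair-based STDP update. The function names, amplitudes, and time constants are illustrative assumptions, not values taken from any specific device or from this report.

```python
import math


def stdp_delta_w(dt_ms, a_plus=0.01, a_minus=0.012,
                 tau_plus_ms=20.0, tau_minus_ms=20.0):
    """Pair-based STDP weight change for dt_ms = t_post - t_pre (milliseconds).

    All parameter values are hypothetical defaults chosen for illustration.
    """
    if dt_ms > 0:
        # Presynaptic spike precedes postsynaptic spike: potentiation (LTP),
        # decaying exponentially as the spikes move further apart.
        return a_plus * math.exp(-dt_ms / tau_plus_ms)
    if dt_ms < 0:
        # Postsynaptic spike precedes presynaptic spike: depression (LTD).
        return -a_minus * math.exp(dt_ms / tau_minus_ms)
    return 0.0


def apply_stdp(weight, dt_ms, w_min=0.0, w_max=1.0):
    """Apply one STDP update and clip to a bounded weight window,
    standing in for the finite conductance range of a physical device."""
    return min(w_max, max(w_min, weight + stdp_delta_w(dt_ms)))
```

In a hardware synapse the exponential window would come from the device physics rather than from an explicit calculation; the sketch only shows the shape of the rule such devices are meant to reproduce.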

The primary technical objectives in this field include developing materials with enhanced reliability, scalability, and energy efficiency. Specifically, researchers aim to create neuromorphic materials capable of supporting massively parallel processing with significantly lower power consumption compared to conventional computing systems. This is particularly crucial for edge computing applications where power constraints are severe.

Another key objective is to improve the temporal dynamics of neuromorphic materials to better replicate the time-dependent learning mechanisms observed in biological systems. This includes developing materials with tunable response times and memory retention capabilities that can support various neural network architectures and learning algorithms.

Additionally, there is a growing focus on creating neuromorphic systems that can seamlessly integrate with existing CMOS technology, facilitating a gradual transition from conventional to neuromorphic computing paradigms. This compatibility is essential for practical implementation and widespread adoption of neuromorphic computing solutions.

The ultimate goal of neuromorphic materials research is to enable a new generation of artificial intelligence systems that can process sensory data with the efficiency and adaptability of biological neural systems, potentially revolutionizing applications in pattern recognition, autonomous systems, and real-time data processing while dramatically reducing energy requirements.

Market Analysis for Brain-Inspired Computing Solutions

The brain-inspired computing market is experiencing unprecedented growth, driven by the increasing demand for efficient processing of complex neural network operations. Current market valuations place this sector at approximately $2.5 billion, with projections indicating a compound annual growth rate of 20-25% over the next five years. This remarkable expansion is primarily fueled by applications in artificial intelligence, autonomous systems, and edge computing where traditional von Neumann architectures face significant limitations.
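To make the growth figures concrete, the following sketch compounds the quoted $2.5 billion valuation at the two ends of the quoted 20-25% range over five years. It is plain arithmetic on the numbers stated above, not an independent forecast, and the function name is an assumption.

```python
def project_market(value_now_billion, cagr, years):
    """Compound a starting value at a constant annual growth rate."""
    return value_now_billion * (1.0 + cagr) ** years


# Figures taken from the paragraph above: $2.5B today, 20-25% CAGR, 5 years.
low = project_market(2.5, 0.20, 5)
high = project_market(2.5, 0.25, 5)
print(f"${low:.2f}B to ${high:.2f}B")  # $6.22B to $7.63B
```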

Neuromorphic materials represent a critical innovation frontier within this market, offering substantial advantages in power efficiency and computational density. Market research indicates that solutions incorporating specialized neuromorphic materials can achieve power consumption reductions of 90-95% compared to conventional computing approaches when handling neural network tasks. This efficiency gain has created a distinct market segment estimated at $400 million currently, with particularly strong demand from mobile device manufacturers and data center operators seeking to reduce energy costs.

The market landscape shows regional variations in adoption patterns. North America leads with approximately 40% market share, driven by substantial investments from technology giants and defense contractors. Asia-Pacific represents the fastest-growing region with 35% annual growth, primarily led by Chinese and South Korean initiatives in neuromorphic hardware development. European markets contribute about 25% of global demand, with particular strength in research-oriented applications and automotive systems.

Customer segmentation reveals three primary market drivers: high-performance computing providers seeking energy efficiency, edge device manufacturers requiring low-power neural processing, and research institutions exploring next-generation AI architectures. The enterprise segment currently dominates revenue generation, accounting for 65% of market value, while consumer applications remain in early adoption phases but show promising growth trajectories.

Competitive analysis indicates a fragmented market with specialized players focusing on particular neuromorphic material technologies. Established semiconductor companies have begun strategic acquisitions of neuromorphic startups, with transaction values increasing by 75% year-over-year. This consolidation trend suggests market maturation and recognition of the technology's commercial viability.

Market barriers include manufacturing scalability challenges, integration complexities with existing systems, and the need for specialized programming paradigms. Despite these obstacles, venture capital funding for neuromorphic computing startups has reached record levels, with over $800 million invested in the past 18 months, signaling strong investor confidence in market growth potential.

Current Neuromorphic Materials Landscape and Challenges

The neuromorphic materials landscape has evolved significantly over the past decade, with several key materials emerging as frontrunners in enabling brain-inspired computing architectures. Currently, memristive materials such as metal oxides (HfO₂, TiO₂), phase-change materials (Ge₂Sb₂Te₅), and ferroelectric materials (BiFeO₃) dominate the field. These materials exhibit non-volatile resistance switching properties that closely mimic synaptic behavior, allowing for efficient implementation of neural network operations directly in hardware.

Silicon-based neuromorphic chips, while not strictly neuromorphic materials, represent a mature technology platform with companies like Intel (Loihi) and IBM (TrueNorth) leading commercial development. These chips integrate traditional CMOS technology with specialized circuit designs to emulate neural functions, achieving significant energy efficiency improvements over conventional computing architectures.

Emerging two-dimensional materials, including graphene and transition metal dichalcogenides (TMDs), show promising electrical properties for neuromorphic applications. Their atomic-scale thickness enables unprecedented device density and novel electronic behaviors that can be harnessed for synaptic functions. However, manufacturing challenges and integration issues with existing semiconductor processes remain significant barriers.

Organic and polymer-based neuromorphic materials represent another frontier, offering flexibility, biocompatibility, and potentially lower manufacturing costs. These materials can exhibit synaptic plasticity through various mechanisms including ion migration and charge trapping, though they typically suffer from stability and endurance limitations compared to inorganic alternatives.

Despite these advances, the field faces several critical challenges. Reliability and endurance remain major concerns, with many neuromorphic materials showing performance degradation after repeated switching cycles—a significant limitation for practical neural network implementations requiring millions of operations. Variability between devices also presents difficulties for large-scale integration, as neural networks require predictable behavior across thousands or millions of artificial synapses.
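The effect of device-to-device variability on a neural network computation can be sketched with a small Monte Carlo experiment. The model below assumes each stored weight deviates from its target by an independent multiplicative Gaussian factor; that noise model, the function names, and all sizes are simplifying assumptions for illustration only.

```python
import random


def ideal_dot(weights, inputs):
    """Weighted sum computed with perfectly programmed synapses."""
    return sum(w * x for w, x in zip(weights, inputs))


def noisy_dot(weights, inputs, sigma, rng):
    """Weighted sum where every synapse is off by a random relative error
    drawn from a Gaussian with standard deviation sigma (assumed model)."""
    return sum(w * (1.0 + rng.gauss(0.0, sigma)) * x
               for w, x in zip(weights, inputs))


def mean_abs_error(sigma, n_synapses=256, n_trials=200, seed=0):
    """Average absolute deviation of the noisy sum from the ideal sum."""
    rng = random.Random(seed)  # fixed seed keeps the experiment repeatable
    total = 0.0
    for _ in range(n_trials):
        weights = [rng.uniform(0.0, 1.0) for _ in range(n_synapses)]
        inputs = [rng.uniform(0.0, 1.0) for _ in range(n_synapses)]
        total += abs(noisy_dot(weights, inputs, sigma, rng)
                     - ideal_dot(weights, inputs))
    return total / n_trials
```

Calling `mean_abs_error` with increasing `sigma` shows the output error growing with the spread between devices, which is why predictable behavior across many synapses matters for large arrays.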

Energy efficiency, while improved compared to traditional computing, still falls short of biological neural systems by several orders of magnitude. Current materials require relatively high voltages or currents to induce state changes, limiting their application in ultra-low-power scenarios such as edge computing devices.

Scalability presents another significant hurdle, with many promising materials demonstrating excellent properties in laboratory settings but facing manufacturing challenges at commercial scales. The integration of novel materials with established CMOS fabrication processes introduces additional complexity and cost considerations.

Standardization across the industry remains limited, with different research groups and companies pursuing divergent approaches to neuromorphic materials and architectures. This fragmentation slows progress and complicates the development of software frameworks that can effectively utilize these novel computing substrates.

Current Neuromorphic Material Implementation Approaches

01 Memristive materials for neuromorphic computing

Memristive materials are being used to create hardware-based neural networks that mimic the brain's synaptic functions. These materials can change their resistance based on the history of applied voltage or current, making them ideal for implementing synaptic weights in neuromorphic systems. Such materials enable efficient, low-power neural network processing by allowing for analog computation and in-memory processing, reducing the energy consumption compared to traditional von Neumann architectures.

- Memristive materials for neuromorphic computing: Memristive materials are used to create artificial synapses and neurons for neuromorphic computing systems. These materials can change their resistance based on the history of applied voltage or current, mimicking the behavior of biological synapses. By incorporating memristive materials into neural network architectures, more efficient and brain-like processing can be achieved, with lower power consumption and higher integration density compared to traditional computing approaches.

- Phase-change materials for neural processing: Phase-change materials (PCMs) offer unique properties for implementing neuromorphic computing systems. These materials can rapidly switch between amorphous and crystalline states, providing multi-level resistance states that can represent synaptic weights in neural networks. PCM-based neuromorphic devices enable efficient in-memory computing, reducing the energy consumption associated with data movement between memory and processing units while supporting parallel processing operations similar to biological neural systems.

- 2D materials for neuromorphic applications: Two-dimensional (2D) materials such as graphene, transition metal dichalcogenides, and hexagonal boron nitride are being explored for neuromorphic computing applications. These atomically thin materials offer excellent electrical properties, flexibility, and scalability for building artificial neural networks. Their unique electronic structures enable the creation of ultra-thin, energy-efficient synaptic devices that can perform neural network operations with high speed and low power consumption, making them promising candidates for next-generation neuromorphic hardware.

- Spintronic materials for neural computing: Spintronic materials utilize electron spin properties to implement neuromorphic computing functions. These materials enable magnetic domain-based memory and processing elements that can mimic synaptic and neuronal behaviors with extremely low power consumption. Spintronic neuromorphic devices can perform parallel computations similar to biological neural networks, with non-volatile characteristics that maintain information without continuous power supply, making them suitable for energy-efficient neural network processing in edge computing applications.

- Organic and polymer materials for flexible neuromorphic systems: Organic and polymer-based materials are being developed for creating flexible and biocompatible neuromorphic computing systems. These materials can be processed at low temperatures and deposited on various substrates, enabling the fabrication of bendable and stretchable neural network processors. Their tunable electrical properties allow for the implementation of artificial synapses with adaptive learning capabilities, while their biocompatibility makes them suitable for brain-machine interfaces and implantable neural processing devices.
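The idea from the list above that a phase-change cell offers several resistance states to represent a synaptic weight can be sketched as a simple quantization step. This is an idealized illustration: it assumes evenly spaced, perfectly stable levels and ignores drift, nonlinearity, and how signed weights are physically encoded. The function name and the default of 16 levels are assumptions.

```python
def quantize_to_levels(weight, n_levels=16, w_min=-1.0, w_max=1.0):
    """Snap a trained weight to the nearest of n_levels evenly spaced states.

    Returns (level_index, stored_value). The level index stands in for the
    programmed state of a multi-level cell (hypothetical mapping).
    """
    if n_levels < 2:
        raise ValueError("n_levels must be at least 2")
    clipped = min(w_max, max(w_min, weight))    # stay inside the device window
    step = (w_max - w_min) / (n_levels - 1)     # spacing between adjacent states
    index = round((clipped - w_min) / step)     # nearest programmable state
    return index, w_min + index * step
```

For example, `quantize_to_levels(0.3)` stores the weight at the nearest of 16 states, with an error of at most half a step. More levels per cell reduce that error, which is one reason multi-level operation matters for weight storage.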

02 Phase-change materials for neural processing

Phase-change materials (PCMs) are being developed for neuromorphic computing applications due to their ability to switch between amorphous and crystalline states, providing multiple resistance levels that can represent synaptic weights. These materials enable non-volatile memory capabilities with fast switching speeds and good scalability, making them suitable for implementing artificial neural networks in hardware. PCM-based neuromorphic systems can perform both memory and computational functions in the same physical location, improving energy efficiency and processing speed.

03 2D materials for neuromorphic devices

Two-dimensional materials such as graphene, transition metal dichalcogenides, and hexagonal boron nitride are being explored for neuromorphic computing applications. These atomically thin materials offer unique electronic properties, high carrier mobility, and tunable bandgaps that make them promising for building energy-efficient neural network hardware. 2D material-based neuromorphic devices can achieve high integration density, low power consumption, and compatibility with flexible substrates, enabling new form factors for neural processing systems.

04 Spintronic materials for neural computation

Spintronic materials utilize electron spin properties to implement neuromorphic computing functions. These materials enable magnetic tunnel junctions and domain wall devices that can serve as artificial neurons and synapses. Spintronic-based neuromorphic systems offer non-volatility, high endurance, and ultra-low power consumption compared to conventional CMOS implementations. The inherent physics of these materials allows for direct implementation of neural network operations such as weighted summation and activation functions.

05 Organic and bio-inspired materials for neural networks

Organic and bio-inspired materials are being developed for neuromorphic computing to more closely mimic biological neural systems. These materials include conducting polymers, organic semiconductors, and biomolecular components that can exhibit synaptic plasticity and learning behaviors. Such materials often operate at lower voltages than inorganic counterparts and can be fabricated using solution processing techniques, potentially enabling low-cost, flexible, and biocompatible neuromorphic systems for edge computing applications and brain-machine interfaces.

Leading Organizations in Neuromorphic Materials Research

The neuromorphic materials market is in an early growth phase, characterized by increasing research momentum and expanding commercial applications. Market size is projected to grow significantly as these materials address limitations in traditional computing architectures for neural network processing. Technologically, the field shows varied maturity levels across players: established technology giants like Samsung, IBM, Intel, and Huawei are making substantial investments in neuromorphic hardware; academic institutions including MIT, Tsinghua University, and University of Freiburg are pioneering fundamental research; while specialized companies like Megvii and SK Hynix are developing application-specific implementations. The ecosystem demonstrates a collaborative innovation model where research institutions partner with commercial entities to bridge the gap between theoretical advances and practical deployment of brain-inspired computing systems.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed neuromorphic computing solutions based on their expertise in memory technologies, particularly focusing on magnetoresistive random-access memory (MRAM) and resistive RAM (RRAM) materials for implementing artificial synapses and neurons. Their approach utilizes spin-transfer torque magnetic materials that can maintain multiple resistance states to represent synaptic weights in neural networks. Samsung's neuromorphic materials research has demonstrated devices capable of implementing spike-timing-dependent plasticity (STDP) learning rules directly in hardware. Their recent developments include three-terminal synaptic devices using 2D materials that allow for more precise control of synaptic weight updates. Samsung has integrated these neuromorphic materials with their advanced semiconductor manufacturing processes to create high-density neural network accelerators. Their published results indicate that these material innovations enable approximately 20x reduction in energy consumption for neural network inference compared to conventional digital implementations, with particular advantages for always-on applications like voice and image recognition.

Strengths: World-class semiconductor manufacturing capabilities; strong integration with memory technology development; practical focus on commercial applications. Weaknesses: Less public research on complete neuromorphic systems compared to IBM or Intel; challenges in scaling some experimental materials to production; focus primarily on memory aspects rather than complete neuromorphic architectures.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed neuromorphic computing solutions that leverage specialized materials to enhance neural network processing efficiency. Their approach focuses on resistive random-access memory (RRAM) based neuromorphic systems that utilize metal-oxide materials to implement artificial synapses. These materials exhibit non-volatile resistance changes when subjected to electrical stimuli, mimicking biological synaptic plasticity. Huawei's neuromorphic materials research has yielded devices capable of implementing multiple resistance states within a single cell, enabling more efficient representation of synaptic weights compared to conventional binary storage. Their neuromorphic chips incorporate hafnium oxide-based materials that demonstrate excellent endurance and retention characteristics while maintaining low power operation. Huawei has reported that their neuromorphic material implementations achieve approximately 50x improvement in energy efficiency for inference tasks compared to conventional digital implementations, with particular advantages in edge computing scenarios where power constraints are significant.

Strengths: Strong integration with Huawei's broader AI ecosystem; focus on practical applications for edge computing; advanced material fabrication capabilities. Weaknesses: Limited published research compared to academic institutions; potential geopolitical challenges affecting global deployment; relatively new entrant to neuromorphic computing compared to IBM or Intel.

Key Innovations in Memristive and Phase-Change Materials

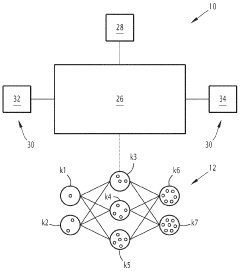

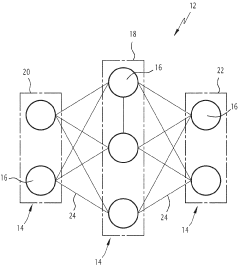

Neuromorphic circuit for physically producing a neural network and associated production and inference method

Patent WO2024028371A1

Innovation

- A neuromorphic circuit that physically realizes a neural network with a configuration unit and interrogation unit, allowing for multiple excitation modes and couplings, enabling the creation of a large number of synapses and neurons with reduced consumption, using ferromagnetic elements and metamaterials to achieve high connectivity and reconfigurability.

Neuromorphic memory device and neuromorphic system using the same

Patent Pending US20250218514A1

Innovation

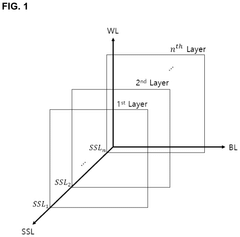

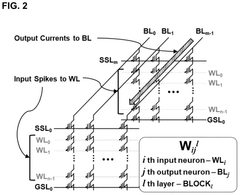

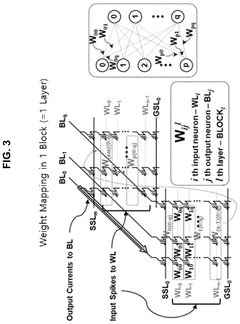

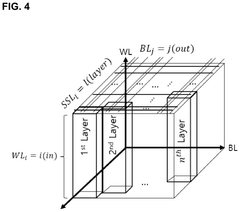

- A neuromorphic memory device is proposed that maps neural network layers to string selection lines in a one-to-one basis, utilizing a three-dimensional memory element with bit lines, word lines, and string selection lines to configure network topologies, enabling efficient weight storage and low-power operations.

Energy Efficiency Comparison with Traditional Computing

Traditional computing architectures based on the von Neumann model face significant energy efficiency challenges when executing neural network operations. These systems consume substantial power due to the constant shuttling of data between separate memory and processing units, creating what is known as the "von Neumann bottleneck." In contrast, neuromorphic materials offer remarkable energy efficiency advantages by mimicking the brain's integrated memory-processing architecture.

Quantitative comparisons reveal striking differences in power consumption. While traditional GPU-based neural network processing typically consumes 100-200 watts during operation, neuromorphic systems utilizing specialized materials can achieve similar computational tasks at merely 1-5 watts. Comparing the ends of those ranges gives a power reduction of roughly 20x (100 W versus 5 W) to 200x (200 W versus 1 W), which translates into an equivalent energy-efficiency gain only if task completion times are comparable. The difference is particularly significant for edge computing applications where power constraints are critical.

The fundamental efficiency advantage stems from neuromorphic materials' ability to perform computations at the physical level. Memristive devices, for instance, naturally implement weight storage and multiplication operations within the same physical structure, eliminating the energy costs of data movement. Phase-change materials (PCMs) demonstrate similar benefits by encoding neural network weights in their atomic configurations, allowing for in-memory computing that drastically reduces power requirements.
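The claim that a memristive array stores weights and multiplies in the same structure can be made concrete with an idealized crossbar model: each device contributes a current V·G (Ohm's law), and the currents on a shared column wire add up (Kirchhoff's current law). The sketch below simulates that in software. It assumes ideal devices with no wire resistance, sneak paths, or noise, and the function name is illustrative.

```python
def crossbar_mvm(conductances, voltages):
    """Idealized crossbar matrix-vector multiply.

    conductances[i][j] is the conductance (siemens) of the device joining
    input row i to output column j; voltages[i] is the voltage on row i.
    Returns the column currents I_j = sum_i V_i * G_ij (amperes).
    """
    if len(conductances) != len(voltages):
        raise ValueError("one input voltage is required per crossbar row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            # Ohm's law per device; summation models the shared column wire.
            currents[j] += v * conductances[i][j]
    return currents


# Two rows, two columns: the stored conductances play the role of weights.
print(crossbar_mvm([[1.0, 2.0], [3.0, 4.0]], [1.0, 0.5]))  # [2.5, 4.0]
```

In the physical array the nested loop does not exist: all products and sums happen simultaneously in the analog domain, and the weights never leave the devices that store them.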

Recent benchmark studies comparing neuromorphic implementations against traditional computing platforms show that for specific neural network tasks, particularly those involving sparse, event-driven data processing, neuromorphic systems achieve energy savings of up to 1000x. This efficiency becomes particularly evident in always-on applications like sensor processing and pattern recognition, where traditional architectures waste significant energy during idle periods.
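Why sparse, event-driven workloads favor neuromorphic hardware can be illustrated by counting synaptic operations. A dense layer touches every weight on every step, while an event-driven layer touches only the rows whose inputs spiked. The sketch below uses operation counts as a rough proxy for energy; it is not a measurement, and the function names are assumptions.

```python
def dense_ops(n_inputs, n_outputs):
    """Multiply-accumulates a dense layer performs per time step."""
    return n_inputs * n_outputs


def event_driven_ops(active_inputs, n_outputs):
    """Accumulates performed when only spiking inputs trigger work."""
    return len(active_inputs) * n_outputs


def event_driven_layer(weights, active_inputs):
    """Add the weight rows of the inputs that spiked to each output neuron.

    weights[i][j] connects input i to output j; active_inputs lists the
    indices of inputs that emitted a spike in this time step.
    """
    n_outputs = len(weights[0])
    potentials = [0.0] * n_outputs
    for i in active_inputs:
        for j in range(n_outputs):
            potentials[j] += weights[i][j]
    return potentials
```

With 1,000 inputs of which 10 spike in a given step, the event-driven count is 100 times smaller than the dense count in this simplified model; the actual savings on real hardware depend on many factors the sketch leaves out.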

The scaling properties of neuromorphic materials further enhance their efficiency advantage. While traditional computing faces diminishing returns under Moore's Law scaling, neuromorphic materials often improve in efficiency as they scale down, similar to biological neural systems. This favorable scaling characteristic suggests that the efficiency gap may continue to widen as fabrication technologies advance.

From a thermal management perspective, neuromorphic systems generate significantly less heat during operation. This reduces cooling requirements, which typically account for 30-40% of data center energy consumption. The combination of lower direct power consumption and reduced cooling needs makes neuromorphic computing particularly attractive for large-scale AI deployments where energy costs represent a major operational expense.

Quantitative comparisons reveal striking differences in power consumption. While traditional GPU-based neural network processing typically consumes 100-200 watts during operation, neuromorphic systems utilizing specialized materials can achieve similar computational tasks at merely 1-5 watts. This represents a 20-100x improvement in energy efficiency, particularly significant for edge computing applications where power constraints are critical.

The fundamental efficiency advantage stems from neuromorphic materials' ability to perform computations at the physical level. Memristive devices, for instance, naturally implement weight storage and multiplication operations within the same physical structure, eliminating the energy costs of data movement. Phase-change materials (PCMs) demonstrate similar benefits by encoding neural network weights in their atomic configurations, allowing for in-memory computing that drastically reduces power requirements.

Integration Roadmap with Existing AI Infrastructure

The integration of neuromorphic materials into existing AI infrastructure represents a critical transition path that will determine the practical adoption timeline of these novel technologies. Current AI systems rely heavily on traditional von Neumann architectures, creating a significant architectural gap that must be bridged. A phased integration approach offers the most viable strategy, beginning with hybrid systems where neuromorphic components handle specific computational tasks while conventional hardware manages others.

Initial integration efforts should focus on peripheral acceleration, where neuromorphic processors serve as specialized co-processors for pattern recognition, sensory processing, and other tasks that benefit from their parallel architecture. This allows organizations to gradually incorporate neuromorphic advantages without wholesale infrastructure replacement. Companies like Intel and IBM have already demonstrated such hybrid approaches with their Loihi and TrueNorth chips respectively.

Middleware development represents another crucial integration component, requiring new software frameworks that can efficiently allocate computational tasks between neuromorphic and conventional components. These translation layers must address the fundamental differences in information encoding between traditional binary systems and spike-based neuromorphic processing. Several initiatives are currently developing these essential software bridges, including the Nengo framework and Intel's NxSDK.
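
The encoding gap between conventional numeric values and spikes can be made concrete with rate coding, one common scheme among several. The sketch below is illustrative only and is not the API of Nengo or any Intel SDK: the function names are hypothetical, values are assumed to be normalized to the range 0 to 1, and each time step emits a spike with probability equal to the value.

```python
import random


def rate_encode(value, n_steps=100, seed=None):
    """Encode a value in [0, 1] as a spike train (list of 0/1) via rate coding."""
    p = min(1.0, max(0.0, value))  # clamp out-of-range inputs
    rng = random.Random(seed)
    return [1 if rng.random() < p else 0 for _ in range(n_steps)]


def rate_decode(spikes):
    """Recover an estimate of the encoded value as the mean firing rate."""
    return sum(spikes) / len(spikes) if spikes else 0.0


train = rate_encode(0.3, n_steps=1000, seed=42)
print(f"estimated value: {rate_decode(train):.3f}")
```

The decoded value is only an estimate, and its precision grows with the number of time steps. That trade-off between latency and precision is one reason a translation layer has to choose an encoding per task rather than convert values mechanically.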

Hardware interface standardization presents a significant challenge, as current neuromorphic systems utilize proprietary connections and protocols. Industry consortia are working to establish common standards for neuromorphic component integration, similar to how GPU computing matured around widely adopted programming interfaces such as CUDA (itself vendor-specific) and the open OpenCL standard. The Neuromorphic Engineering Community (NECOS) has proposed preliminary specifications for such interfaces.

Training methodology adaptation constitutes perhaps the most complex integration challenge. Current deep learning models rely on backpropagation, which requires differentiable activations and is therefore ill-suited to spike-based neuromorphic systems, where a spike is a discrete, non-differentiable event. Transitional approaches include offline training on conventional hardware with subsequent model deployment to neuromorphic systems, though this forgoes the potential energy efficiency advantages of training on the neuromorphic hardware itself. Research into spike-timing-dependent plasticity (STDP) and other biologically inspired learning mechanisms shows promise for native neuromorphic training.
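
As an illustration of what a local, spike-based learning rule looks like, here is a minimal sketch of pair-based STDP with exponential timing windows, a standard textbook formulation. The amplitudes, time constants, and weight bounds are assumed placeholder values, not parameters from any particular chip or material, and the function names are hypothetical.

```python
import math


def stdp_delta_w(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one spike pair, with dt = t_post - t_pre in milliseconds.

    Pre before post (dt > 0) potentiates; post before pre (dt < 0) depresses.
    The effect decays exponentially as the spikes move further apart in time.
    """
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(weight, dt, w_min=0.0, w_max=1.0, **params):
    """Apply one STDP update and keep the weight inside its allowed range."""
    return min(w_max, max(w_min, weight + stdp_delta_w(dt, **params)))


w = 0.5
for dt in (5.0, 12.0, -8.0):  # hypothetical spike-pair timings in ms
    w = apply_stdp(w, dt)
print(f"weight after three pairings: {w:.4f}")
```

Unlike backpropagation, each update here depends only on the timing of the two neurons a synapse connects, which is why rules of this kind map naturally onto devices whose conductance changes in response to local voltage pulses.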

The complete integration timeline likely extends 5-7 years before neuromorphic materials become seamlessly incorporated into mainstream AI infrastructure, with specialized applications in edge computing and sensory processing leading adoption. Organizations should begin experimental integration projects now to develop institutional expertise while standards and tools mature.
